1. Migrating Ceph RGW pools across clusters

This walkthrough migrates the pools that back RGW from one cluster to another.

Old environment

[root@ceph-1 data]# yum install s3cmd -y

[root@ceph-1 ~]# ceph config dump

WHO MASK LEVEL OPTION VALUE RO

mon advanced auth_allow_insecure_global_id_reclaim false

mgr advanced mgr/dashboard/ALERTMANAGER_API_HOST http://20.3.10.91:9093 *

mgr advanced mgr/dashboard/GRAFANA_API_PASSWORD admin *

mgr advanced mgr/dashboard/GRAFANA_API_SSL_VERIFY false *

mgr advanced mgr/dashboard/GRAFANA_API_URL https://20.3.10.93:3000 *

mgr advanced mgr/dashboard/GRAFANA_API_USERNAME admin *

mgr advanced mgr/dashboard/PROMETHEUS_API_HOST http://20.3.10.91:9092 *

mgr advanced mgr/dashboard/RGW_API_ACCESS_KEY 9UYWS54KEGHPTXIZK61J *

mgr advanced mgr/dashboard/RGW_API_HOST 20.3.10.91 *

mgr advanced mgr/dashboard/RGW_API_PORT 8080 *

mgr advanced mgr/dashboard/RGW_API_SCHEME http *

mgr advanced mgr/dashboard/RGW_API_SECRET_KEY MGaia4UnZhKO6DRRtRu89iKwUJjZ0KVS8IgjA2p8 *

mgr advanced mgr/dashboard/RGW_API_USER_ID ceph-dashboard *

mgr advanced mgr/dashboard/ceph-1/server_addr 20.3.10.91 *

mgr advanced mgr/dashboard/ceph-2/server_addr 20.3.10.92 *

mgr advanced mgr/dashboard/ceph-3/server_addr 20.3.10.93 *

mgr advanced mgr/dashboard/server_port 8443 *

mgr advanced mgr/dashboard/ssl true *

mgr advanced mgr/dashboard/ssl_server_port 8443 *

[root@ceph-1 ~]# cat /root/.s3cfg

[default]

access_key = 9UYWS54KEGHPTXIZK61J

access_token =

add_encoding_exts =

add_headers =

bucket_location = US

ca_certs_file =

cache_file =

check_ssl_certificate = True

check_ssl_hostname = True

cloudfront_host = cloudfront.amazonaws.com

connection_max_age = 5

connection_pooling = True

content_disposition =

content_type =

default_mime_type = binary/octet-stream

delay_updates = False

delete_after = False

delete_after_fetch = False

delete_removed = False

dry_run = False

enable_multipart = True

encrypt = False

expiry_date =

expiry_days =

expiry_prefix =

follow_symlinks = False

force = False

get_continue = False

gpg_command = /usr/bin/gpg

gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s

gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s

gpg_passphrase =

guess_mime_type = True

host_base = 20.3.10.91:8080

host_bucket = 20.3.10.91:8080%(bucket)

human_readable_sizes = False

invalidate_default_index_on_cf = False

invalidate_default_index_root_on_cf = True

invalidate_on_cf = False

kms_key =

limit = -1

limitrate = 0

list_allow_unordered = False

list_md5 = False

log_target_prefix =

long_listing = False

max_delete = -1

mime_type =

multipart_chunk_size_mb = 15

multipart_copy_chunk_size_mb = 1024

multipart_max_chunks = 10000

preserve_attrs = True

progress_meter = True

proxy_host =

proxy_port = 0

public_url_use_https = False

put_continue = False

recursive = False

recv_chunk = 65536

reduced_redundancy = False

requester_pays = False

restore_days = 1

restore_priority = Standard

secret_key = MGaia4UnZhKO6DRRtRu89iKwUJjZ0KVS8IgjA2p8

send_chunk = 65536

server_side_encryption = False

signature_v2 = False

signurl_use_https = False

simpledb_host = sdb.amazonaws.com

skip_existing = False

socket_timeout = 300

ssl_client_cert_file =

ssl_client_key_file =

stats = False

stop_on_error = False

storage_class =

throttle_max = 100

upload_id =

urlencoding_mode = normal

use_http_expect = False

use_https = False

use_mime_magic = True

verbosity = WARNING

website_endpoint = http://%(bucket)s.s3-website-%(location)s.amazonaws.com/

website_error =

website_index = index.html

[root@ceph-1 ~]# s3cmd ls

2024-01-24 10:52 s3://000002

2024-02-01 08:20 s3://000010

2024-01-24 10:40 s3://cloudengine

2024-02-07 02:58 s3://component-000010

2024-01-24 10:52 s3://component-pub

2024-02-27 10:55 s3://deploy-2

2024-01-26 10:53 s3://digital-000002

2024-01-26 11:14 s3://digital-000010

2024-01-29 02:04 s3://docker-000010

2024-01-26 11:46 s3://docker-pub

2024-03-06 11:42 s3://warp-benchmark-bucket

[root@ceph-1 data]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 900 GiB 154 GiB 740 GiB 746 GiB 82.86

TOTAL 900 GiB 154 GiB 740 GiB 746 GiB 82.86

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

cephfs_data 1 16 0 B 0 0 B 0 0 B

cephfs_metadata 2 16 1.0 MiB 23 4.7 MiB 100.00 0 B

.rgw.root 3 16 3.5 KiB 8 1.5 MiB 100.00 0 B

default.rgw.control 4 16 0 B 8 0 B 0 0 B

default.rgw.meta 5 16 8.5 KiB 31 5.4 MiB 100.00 0 B

default.rgw.log 6 16 64 KiB 207 64 KiB 100.00 0 B

default.rgw.buckets.index 7 16 3.5 MiB 192 3.5 MiB 100.00 0 B

default.rgw.buckets.data 8 16 146 GiB 51.55k 440 GiB 100.00 0 B

default.rgw.buckets.non-ec 9 16 123 KiB 10 2.0 MiB 100.00 0 B

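Migrating only default.rgw.buckets.data left the target without any bucket information, so the following pools all have to be migrated:

default.rgw.buckets.data: the object data

default.rgw.meta: the user and bucket information

default.rgw.buckets.index: the bucket index, which maps buckets to their objects

1. Export each pool with rados -p pool_name export --all <file>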

[root@ceph-1 data]# rados -p default.rgw.buckets.data export --all rgwdata

[root@ceph-1 data]# rados -p default.rgw.buckets.index export --all rgwindex

[root@ceph-1 data]# rados -p default.rgw.meta export --all rgwmeta

[root@ceph-1 data]# ls

rgwdata rgwindex rgwmeta
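The three export commands could also be wrapped in a small loop. The following is only a sketch: the function name `export_rgw_pools`, the `RADOS` override variable and the `<pool>.export` file naming are assumptions made for illustration, while the `rados -p <pool> export --all <file>` invocation is the one used in the transcript.

```shell
#!/usr/bin/env bash
# Export every pool given as an argument to a file named <pool>.export.
# RADOS is a hypothetical override: set RADOS=echo to preview the commands.
export_rgw_pools() {
    local rados_bin="${RADOS:-rados}"
    local pool
    for pool in "$@"; do
        # Same invocation as in the transcript: rados -p <pool> export --all <file>
        "$rados_bin" -p "$pool" export --all "${pool}.export" || return 1
    done
}

# Usage on the old cluster:
# export_rgw_pools default.rgw.buckets.data default.rgw.buckets.index default.rgw.meta
```

Stopping at the first failed export avoids carrying an incomplete set of files to the new cluster.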

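2. Get the user information from the original cluster and note the access_key and secret_key of ceph-dashboard. The ceph-dashboard user is stored in default.rgw.meta, so it is carried over by the import.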

[root@ceph-1 data]# radosgw-admin user list

[

"registry",

"ceph-dashboard"

]

[root@ceph-1 data]# radosgw-admin user info --uid=ceph-dashboard

{

"user_id": "ceph-dashboard",

"display_name": "Ceph dashboard",

"email": "",

"suspended": 0,

"max_buckets": 1000,

"subusers": [],

"keys": [

{

"user": "ceph-dashboard",

"access_key": "9UYWS54KEGHPTXIZK61J",

"secret_key": "MGaia4UnZhKO6DRRtRu89iKwUJjZ0KVS8IgjA2p8"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"system": "true",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": true,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": 1638400

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}

New environment

Switch to the newly built cluster.

[root@ceph-1 data]# ceph -s

cluster:

id: d073f5d6-6b4a-4c87-901b-a0f4694ee878

health: HEALTH_WARN

mon is allowing insecure global_id reclaim

services:

mon: 1 daemons, quorum ceph-1 (age 46h)

mgr: ceph-1(active, since 46h)

mds: cephfs:1 {0=ceph-1=up:active}

osd: 2 osds: 2 up (since 46h), 2 in (since 8d)

rgw: 1 daemon active (ceph-1.rgw0)

task status:

data:

pools: 9 pools, 144 pgs

objects: 61.83k objects, 184 GiB

usage: 1.9 TiB used, 3.3 TiB / 5.2 TiB avail

pgs: 144 active+clean

io:

client: 58 KiB/s rd, 7 op/s rd, 0 op/s wr

Test whether RGW is usable

[root@ceph-1 data]# yum install s3cmd -y

[root@ceph-1 data]# ceph config dump

WHO MASK LEVEL OPTION VALUE RO

global advanced mon_warn_on_pool_no_redundancy false

mgr advanced mgr/dashboard/ALERTMANAGER_API_HOST http://20.3.14.124:9093 *

mgr advanced mgr/dashboard/GRAFANA_API_PASSWORD admin *

mgr advanced mgr/dashboard/GRAFANA_API_SSL_VERIFY false *

mgr advanced mgr/dashboard/GRAFANA_API_URL https://20.3.14.124:3000 *

mgr advanced mgr/dashboard/GRAFANA_API_USERNAME admin *

mgr advanced mgr/dashboard/PROMETHEUS_API_HOST http://20.3.14.124:9092 *

mgr advanced mgr/dashboard/RGW_API_ACCESS_KEY 9UYWS54KEGHPTXIZK61J *

mgr advanced mgr/dashboard/RGW_API_HOST 20.3.14.124 *

mgr advanced mgr/dashboard/RGW_API_PORT 8090 *

mgr advanced mgr/dashboard/RGW_API_SCHEME http *

mgr advanced mgr/dashboard/RGW_API_SECRET_KEY MGaia4UnZhKO6DRRtRu89iKwUJjZ0KVS8IgjA2p8 *

mgr advanced mgr/dashboard/RGW_API_USER_ID ceph-dashboard *

mgr advanced mgr/dashboard/ceph-1/server_addr 20.3.14.124 *

mgr advanced mgr/dashboard/server_port 8443 *

mgr advanced mgr/dashboard/ssl true *

mgr advanced mgr/dashboard/ssl_server_port 8443 *

[root@ceph-1 data]# s3cmd ls

# Create a bucket

[root@ceph-1 data]# s3cmd mb s3://test

Bucket 's3://test/' created

# Upload test

[root@ceph-1 data]# s3cmd put test.txt s3://test -r

upload: 'test.txt' -> 's3://test/1234' [1 of 1]

29498 of 29498 100% in 0s 634.42 KB/s done

# Delete the objects in the bucket

[root@ceph-1 data]# s3cmd del s3://test --recursive --force

delete: 's3://test/1234'

# Delete the bucket

[root@ceph-1 data]# s3cmd rb s3://test --recursive --force

Bucket 's3://test/' removed

[root@ceph-1 data]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

ssd 5.2 TiB 3.3 TiB 1.9 TiB 1.9 TiB 36.80

TOTAL 5.2 TiB 3.3 TiB 1.9 TiB 1.9 TiB 36.80

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

cephfs_data 1 16 36 GiB 9.33k 36 GiB 1.16 3.0 TiB

cephfs_metadata 2 16 137 KiB 23 169 KiB 0 3.0 TiB

.rgw.root 3 16 1.2 KiB 4 16 KiB 0 3.0 TiB

default.rgw.control 4 16 0 B 8 0 B 0 3.0 TiB

default.rgw.meta 5 16 6.5 KiB 32 120 KiB 0 3.0 TiB

default.rgw.log 6 16 1.6 MiB 207 1.6 MiB 0 3.0 TiB

default.rgw.buckets.index 7 16 0 B 192 0 B 0 3.0 TiB

default.rgw.buckets.data 8 16 0 B 52.04k 0 B 4.52 3.0 TiB

default.rgw.buckets.non-ec 9 16 0 B 0 0 B 0 3.0 TiB

1. Transfer the exported files to the current cluster and import them

[root@ceph-1 data]# ls

rgwdata rgwindex rgwmeta

[root@ceph-1 data]# rados -p default.rgw.buckets.data import rgwdata

Importing pool

***Overwrite*** #-9223372036854775808:00000000:::obj_delete_at_hint.0000000078:head#

***Overwrite*** #-9223372036854775808:00000000:gc::gc.30:head#

***Overwrite*** #-9223372036854775808:00000000:::obj_delete_at_hint.0000000070:head#

..............

[root@ceph-1 data]# rados -p default.rgw.buckets.index import rgwindex

Importing pool

***Overwrite*** #-9223372036854775808:00000000:::obj_delete_at_hint.0000000078:head#

***Overwrite*** #-9223372036854775808:00000000:gc::gc.30:head#

***Overwrite*** #-9223372036854775808:00000000:::obj_delete_at_hint.0000000070:head#

..............

[root@ceph-1 data]# rados -p default.rgw.meta import rgwmeta

Importing pool

***Overwrite*** #-9223372036854775808:00000000:::obj_delete_at_hint.0000000078:head#

***Overwrite*** #-9223372036854775808:00000000:gc::gc.30:head#

***Overwrite*** #-9223372036854775808:00000000:::obj_delete_at_hint.0000000070:head#

..............

[root@ceph-1 data]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

ssd 5.2 TiB 3.3 TiB 1.9 TiB 1.9 TiB 36.80

TOTAL 5.2 TiB 3.3 TiB 1.9 TiB 1.9 TiB 36.80

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

cephfs_data 1 16 36 GiB 9.33k 36 GiB 1.16 3.0 TiB

cephfs_metadata 2 16 137 KiB 23 169 KiB 0 3.0 TiB

.rgw.root 3 16 1.2 KiB 4 16 KiB 0 3.0 TiB

default.rgw.control 4 16 0 B 8 0 B 0 3.0 TiB

default.rgw.meta 5 16 6.5 KiB 32 120 KiB 0 3.0 TiB

default.rgw.log 6 16 1.6 MiB 207 1.6 MiB 0 3.0 TiB

default.rgw.buckets.index 7 16 0 B 192 0 B 0 3.0 TiB

default.rgw.buckets.data 8 16 147 GiB 52.04k 147 GiB 4.52 3.0 TiB

default.rgw.buckets.non-ec 9 16 0 B 0 0 B 0 3.0 TiB

2. After the import, the dashboard web page reported errors and s3cmd stopped working

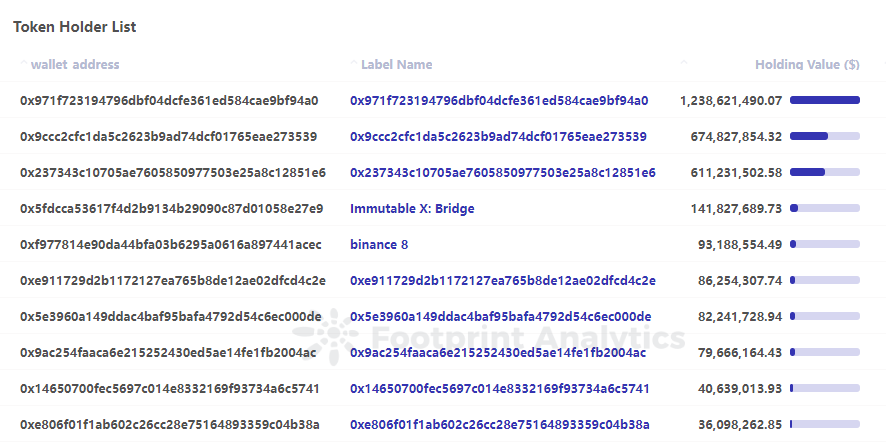

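1. The RGW_API_ACCESS_KEY and RGW_API_SECRET_KEY shown by ceph config dump differ from the output of radosgw-admin user info --uid=ceph-dashboard.

radosgw-admin user info --uid=ceph-dashboard now returns the old cluster's access_key and secret_key, because the user was imported along with default.rgw.meta.

Set the dashboard to the access_key and secret_key reported by radosgw-admin user info --uid=ceph-dashboard: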

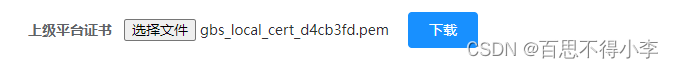

[root@ceph-1 data]# radosgw-admin user info --uid=ceph-dashboard

[root@ceph-1 data]# echo 9UYWS54KEGHPTXIZK61J > access_key

[root@ceph-1 data]# echo MGaia4UnZhKO6DRRtRu89iKwUJjZ0KVS8IgjA2p8 > secret_key

[root@ceph-1 data]# ceph dashboard set-rgw-api-access-key -i access_key

[root@ceph-1 data]# ceph dashboard set-rgw-api-secret-key -i secret_key
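Instead of copying the keys by hand, they could be read from the user info output. This is a rough sketch under the assumption that radosgw-admin prints one JSON field per line, as in the output shown earlier; `json_field` and `sync_dashboard_keys` are hypothetical helper names, and the sed-based extraction is a shortcut rather than a real JSON parser.

```shell
#!/usr/bin/env bash
# Print the value of the first  "<field>": "<value>"  pair found on stdin.
# Assumes pretty-printed JSON with one field per line.
json_field() {
    sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p" | head -n 1
}

# Read the keys of an RGW user and hand them to the dashboard,
# using the same ceph dashboard commands as the transcript.
sync_dashboard_keys() {
    local uid="$1" info tmp
    info="$(radosgw-admin user info --uid="$uid")" || return 1
    tmp="$(mktemp -d)" || return 1
    printf '%s\n' "$info" | json_field access_key > "$tmp/access_key"
    printf '%s\n' "$info" | json_field secret_key > "$tmp/secret_key"
    ceph dashboard set-rgw-api-access-key -i "$tmp/access_key"
    ceph dashboard set-rgw-api-secret-key -i "$tmp/secret_key"
    rm -rf "$tmp"
}

# Usage on the new cluster:
# sync_dashboard_keys ceph-dashboard
```

Writing the keys to a temporary directory and removing it afterwards keeps the secret out of the working directory.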

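Now the two outputs match.

The web page is back to normal as well.

Configure s3cmd with the new access_key and secret_key (the ones just set above).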
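A minimal s3cmd configuration for the new cluster might be generated as follows. Treat it as a sketch: `write_s3cfg` is a hypothetical helper, the endpoint 20.3.14.124:8090 is taken from the RGW_API_HOST and RGW_API_PORT values in the new cluster's config dump, and setting host_bucket to the same value as host_base (path-style access) is an assumption that differs from the full .s3cfg shown for the old cluster.

```shell
#!/usr/bin/env bash
# Write a minimal s3cmd config file.
# Arguments: <path> <access_key> <secret_key> <host:port of the RGW endpoint>
write_s3cfg() {
    local path="$1" access_key="$2" secret_key="$3" endpoint="$4"
    cat > "$path" <<EOF
[default]
access_key = ${access_key}
secret_key = ${secret_key}
host_base = ${endpoint}
host_bucket = ${endpoint}
use_https = False
signature_v2 = False
EOF
    # The file contains a secret, so restrict it to the owner.
    chmod 600 "$path"
}

# Usage:
# write_s3cfg /root/.s3cfg <access_key> <secret_key> 20.3.14.124:8090
# s3cmd ls
```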

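After the migration the registry was unusable: failed to retrieve info about container registry (HTTP Error: 301: 301 Moved Permanently). This is the 301/404 error caused by a mismatch between the zonegroup of the Ceph RGW cluster and the zonegroup recorded on the bucket. The fix below uses the radosgw-admin metadata command to set the bucket metadata.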

[root@ceph-1 data]# vi /var/log/ceph/ceph-rgw-ceph-1.rgw0.log

Checking the zonegroup shows a mismatch.

radosgw-admin zonegroup list reports default_info as

79ee051e-ac44-4677-b011-c7f3ad0d1d75

but the zonegroup in radosgw-admin metadata get bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1 is

3ea718b5-ddfe-4641-8f80-53152066e03e

[root@ceph-1 data]# radosgw-admin zonegroup list

{

"default_info": "79ee051e-ac44-4677-b011-c7f3ad0d1d75",

"zonegroups": [

"default"

]

}

[root@ceph-1 data]# radosgw-admin metadata list bucket.instance

[

"docker-000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.4",

"docker-pub:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.3",

"digital-000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.1",

"digital-000002:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.2",

"000002:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.3",

"component-pub:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.1",

"cloudengine:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.2",

"deploy-2:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.5",

"warp-benchmark-bucket:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.6",

"registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.2",

"component-000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.4"

]

[root@ceph-1 data]# radosgw-admin metadata get bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1

{

"key": "bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"ver": {

"tag": "_CMGeYR69ptByuWSkghrYCln",

"ver": 1

},

"mtime": "2024-03-08 07:42:50.397826Z",

"data": {

"bucket_info": {

"bucket": {

"name": "registry",

"marker": "204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"bucket_id": "204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"tenant": "",

"explicit_placement": {

"data_pool": "",

"data_extra_pool": "",

"index_pool": ""

}

},

"creation_time": "2024-01-24 10:36:55.798976Z",

"owner": "registry",

"flags": 0,

"zonegroup": "3ea718b5-ddfe-4641-8f80-53152066e03e",

"placement_rule": "default-placement",

"has_instance_obj": "true",

"quota": {

"enabled": false,

"check_on_raw": true,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"num_shards": 16,

"bi_shard_hash_type": 0,

"requester_pays": "false",

"has_website": "false",

"swift_versioning": "false",

"swift_ver_location": "",

"index_type": 0,

"mdsearch_config": [],

"reshard_status": 0,

"new_bucket_instance_id": ""

},

"attrs": [

{

"key": "user.rgw.acl",

"val": "AgKTAAAAAwIYAAAACAAAAHJlZ2lzdHJ5CAAAAHJlZ2lzdHJ5BANvAAAAAQEAAAAIAAAAcmVnaXN0cnkPAAAAAQAAAAgAAAByZWdpc3RyeQUDPAAAAAICBAAAAAAAAAAIAAAAcmVnaXN0cnkAAAAAAAAAAAICBAAAAA8AAAAIAAAAcmVnaXN0cnkAAAAAAAAAAAAAAAAAAAAA"

}

]

}

}

Fix

1. Dump the registry bucket instance metadata to a file

[root@ceph-1 data]# radosgw-admin metadata get bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1 > conf.json

2. Get the zonegroup of the current cluster

[root@ceph-1 data]# radosgw-admin zonegroup list

{

"default_info": "79ee051e-ac44-4677-b011-c7f3ad0d1d75",

"zonegroups": [

"default"

]

}

3. Edit the zonegroup in conf.json

The result looks like this:

[root@ceph-1 data]# cat conf.json

{

"key": "bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"ver": {

"tag": "_CMGeYR69ptByuWSkghrYCln",

"ver": 1

},

"mtime": "2024-03-08 07:42:50.397826Z",

"data": {

"bucket_info": {

"bucket": {

"name": "registry",

"marker": "204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"bucket_id": "204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"tenant": "",

"explicit_placement": {

"data_pool": "",

"data_extra_pool": "",

"index_pool": ""

}

},

"creation_time": "2024-01-24 10:36:55.798976Z",

"owner": "registry",

"flags": 0,

"zonegroup": "79ee051e-ac44-4677-b011-c7f3ad0d1d75", #replaced with default_info from radosgw-admin zonegroup list (annotation only, not part of the file)

"placement_rule": "default-placement",

"has_instance_obj": "true",

"quota": {

"enabled": false,

"check_on_raw": true,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"num_shards": 16,

"bi_shard_hash_type": 0,

"requester_pays": "false",

"has_website": "false",

"swift_versioning": "false",

"swift_ver_location": "",

"index_type": 0,

"mdsearch_config": [],

"reshard_status": 0,

"new_bucket_instance_id": ""

},

"attrs": [

{

"key": "user.rgw.acl",

"val": "AgKTAAAAAwIYAAAACAAAAHJlZ2lzdHJ5CAAAAHJlZ2lzdHJ5BANvAAAAAQEAAAAIAAAAcmVnaXN0cnkPAAAAAQAAAAgAAAByZWdpc3RyeQUDPAAAAAICBAAAAAAAAAAIAAAAcmVnaXN0cnkAAAAAAAAAAAICBAAAAA8AAAAIAAAAcmVnaXN0cnkAAAAAAAAAAAAAAAAAAAAA"

}

]

}

}
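The manual edit in step 3 could also be scripted. The sketch below rewrites the "zonegroup" value with sed; `set_zonegroup` and the output file name conf.new.json are hypothetical, and the pattern assumes the `"zonegroup": "<id>"` layout shown in the output above.

```shell
#!/usr/bin/env bash
# Replace the value of the "zonegroup" field in bucket instance metadata.
# Reads JSON on stdin, writes the edited JSON to stdout.
set_zonegroup() {
    local new_id="$1"
    sed -E "s/(\"zonegroup\": *\")[^\"]*(\")/\1${new_id}\2/"
}

# Usage, with the default_info value from radosgw-admin zonegroup list:
# set_zonegroup 79ee051e-ac44-4677-b011-c7f3ad0d1d75 < conf.json > conf.new.json
```

Writing to a second file keeps the original conf.json as a backup in case the metadata has to be restored.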

4. Write the metadata back

[root@ceph-1 data]# radosgw-admin metadata put bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1 < conf.json

5. Verify that "zonegroup": "79ee051e-ac44-4677-b011-c7f3ad0d1d75" now matches the current cluster

[root@ceph-1 data]# radosgw-admin metadata list bucket.instance

[

"docker-000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.4",

"docker-pub:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.3",

"digital-000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.1",

"digital-000002:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.2",

"000002:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.3",

"component-pub:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.1",

"cloudengine:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.2",

"deploy-2:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.5",

"warp-benchmark-bucket:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.6",

"registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4801.2",

"component-000010:204e1689-81f3-41e6-a487-8a0cfe918e2e.4743.4"

]

[root@ceph-1 data]# radosgw-admin metadata get bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1

{

"key": "bucket.instance:registry:204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"ver": {

"tag": "_CMGeYR69ptByuWSkghrYCln",

"ver": 1

},

"mtime": "2024-03-08 07:42:50.397826Z",

"data": {

"bucket_info": {

"bucket": {

"name": "registry",

"marker": "204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"bucket_id": "204e1689-81f3-41e6-a487-8a0cfe918e2e.4772.1",

"tenant": "",

"explicit_placement": {

"data_pool": "",

"data_extra_pool": "",

"index_pool": ""

}

},

"creation_time": "2024-01-24 10:36:55.798976Z",

"owner": "registry",

"flags": 0,

"zonegroup": "79ee051e-ac44-4677-b011-c7f3ad0d1d75",

"placement_rule": "default-placement",

"has_instance_obj": "true",

"quota": {

"enabled": false,

"check_on_raw": true,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"num_shards": 16,

"bi_shard_hash_type": 0,

"requester_pays": "false",

"has_website": "false",

"swift_versioning": "false",

"swift_ver_location": "",

"index_type": 0,

"mdsearch_config": [],

"reshard_status": 0,

"new_bucket_instance_id": ""

},

"attrs": [

{

"key": "user.rgw.acl",

"val": "AgKTAAAAAwIYAAAACAAAAHJlZ2lzdHJ5CAAAAHJlZ2lzdHJ5BANvAAAAAQEAAAAIAAAAcmVnaXN0cnkPAAAAAQAAAAgAAAByZWdpc3RyeQUDPAAAAAICBAAAAAAAAAAIAAAAcmVnaXN0cnkAAAAAAAAAAAICBAAAAA8AAAAIAAAAcmVnaXN0cnkAAAAAAAAAAAAAAAAAAAAA"

}

]

}

}

6. Restart the registry. The problem is resolved.
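Only the registry bucket was repaired above. If the other imported buckets carry the old zonegroup ID too (an assumption, since the original only checks registry), the same get, edit and put cycle could be looped over every bucket instance. In this sketch `fix_all_bucket_zonegroups` and the `RGW_ADMIN` override are hypothetical names, and the list output is assumed to be a JSON array of quoted strings as shown earlier.

```shell
#!/usr/bin/env bash
# Set the zonegroup of every bucket instance to the given ID.
# RGW_ADMIN is a hypothetical override for the radosgw-admin binary.
fix_all_bucket_zonegroups() {
    local new_id="$1" admin="${RGW_ADMIN:-radosgw-admin}"
    local instances inst meta
    # Turn the JSON array of "bucket:instance-id" strings into plain words.
    instances="$("$admin" metadata list bucket.instance |
        grep -o '"[^"]*"' | tr -d '"')" || return 1
    for inst in $instances; do
        # Fetch the metadata first so a failed get never reaches put.
        meta="$("$admin" metadata get "bucket.instance:${inst}")" || return 1
        printf '%s\n' "$meta" |
            sed -E "s/(\"zonegroup\": *\")[^\"]*(\")/\1${new_id}\2/" |
            "$admin" metadata put "bucket.instance:${inst}" || return 1
    done
}

# Usage on the new cluster:
# fix_all_bucket_zonegroups 79ee051e-ac44-4677-b011-c7f3ad0d1d75
```

It is worth saving each bucket instance's metadata to a file before running a bulk change like this.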