Memory in LangChain, i.e. conversation history (message history)

1.

Add message history (memory) | 🦜️🔗 Langchain

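RunnableWithMessageHistory wraps another runnable and manages its conversation history. It can add history to certain kinds of chains, namely those that take one of the following as input:

(1) a sequence of BaseMessages

(2) a dict with one key whose value is a sequence of BaseMessages

(3) a dict with one key holding the latest message(s) and a separate key holding the earlier conversation history

and return one of the following as output:

(1) a string that can be used as the content of an AIMessage

(2) a sequence of BaseMessages

(3) a dict with one key whose value is a sequence of BaseMessages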

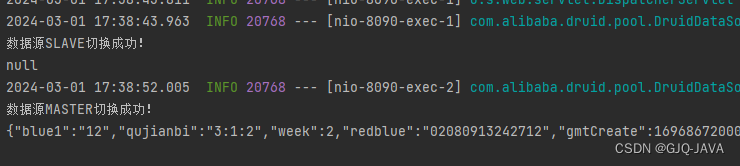
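To make the input shapes concrete, here is a minimal plain-Python sketch that reduces each of them to one flat list of messages. It is a toy model, not LangChain code: messages are assumed to be `(role, content)` tuples instead of `BaseMessage` objects, and `normalize_input` is a hypothetical helper. The one behaviour it deliberately shares with `RunnableWithMessageHistory` is that a bare string counts as a single human message.

```python
from typing import List, Optional, Tuple, Union

Msg = Tuple[str, str]  # (role, content): hypothetical stand-in for a BaseMessage

def normalize_input(value: Union[str, Msg, List[Msg], dict],
                    input_key: Optional[str] = None) -> List[Msg]:
    """Toy model: reduce the accepted input shapes to one flat list of messages."""
    if isinstance(value, dict):
        # Dict input: the message(s) sit under the key named by input_key.
        if input_key is None:
            raise ValueError("dict input needs input_key")
        value = value[input_key]
    if isinstance(value, str):
        # A bare string is treated as a single human message.
        return [("human", value)]
    if isinstance(value, tuple):
        return [value]          # a single message
    if isinstance(value, list):
        return list(value)      # already a sequence of messages
    raise TypeError(f"unsupported input type: {type(value).__name__}")

print(normalize_input("Hi"))
print(normalize_input([("human", "Hi"), ("ai", "Hello")]))
print(normalize_input({"ability": "math", "input": "Hi"}, input_key="input"))
```

Whatever shape comes in, the wrapper ends up working with a list of messages, which is what it can store and replay per session.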

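First we need a callable that returns a BaseChatMessageHistory instance for a given session ID. Here the history is kept in memory; for more durable storage, LangChain also supports keeping it in Redis (RedisChatMessageHistory):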

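from langchain_community.chat_message_histories import ChatMessageHistory
from langchain_core.chat_history import BaseChatMessageHistory

store = {}  # session_id -> ChatMessageHistory; lives only as long as the process

def get_session_history(session_id: str) -> BaseChatMessageHistory:
    # each conversation (session) keeps its whole history under its own session_id
    if session_id not in store:
        store[session_id] = ChatMessageHistory()
    return store[session_id]

(1) Example: the input is a sequence of BaseMessages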

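from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI

with_message_history = RunnableWithMessageHistory(
    ChatOpenAI(),
    get_session_history,
)

print(with_message_history.invoke(
    input=[HumanMessage("Tell me about Wang Yangming")],
    config={"configurable": {"session_id": "id123"}},
))

(2) Example with dict input: the input key holds the latest message, and the wrapper injects the stored history under the history key (input form (3) above)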

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai.chat_models import ChatOpenAI
from langchain_core.messages import HumanMessage
from langchain_core.runnables.history import RunnableWithMessageHistory

model = ChatOpenAI()
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are an assistant who is good at {ability}. Answer in 20 words or fewer.",
        ),
        MessagesPlaceholder(variable_name="history"),
        ("human", "{input}"),
    ]
)
runnable = prompt | model

with_message_history = RunnableWithMessageHistory(
    runnable,
    get_session_history,
    input_messages_key="input",        # key holding the latest user message
    history_messages_key="history",    # key the stored history is injected into (matches MessagesPlaceholder)
)

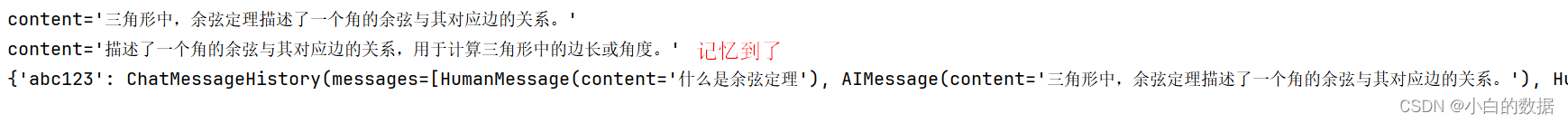

i1 = with_message_history.invoke(
    {"ability": "math", "input": "What is the law of cosines?"},
    config={"configurable": {"session_id": "abc123"}},  # history is stored under this session_id
)
print(i1)

i2 = with_message_history.invoke(
    {"ability": "math", "input": "Answer that again"},
    config={"configurable": {"session_id": "abc123"}},  # same session_id, so the previous turn is loaded
)
print(i2)  # the model remembers the previous question

print(store)

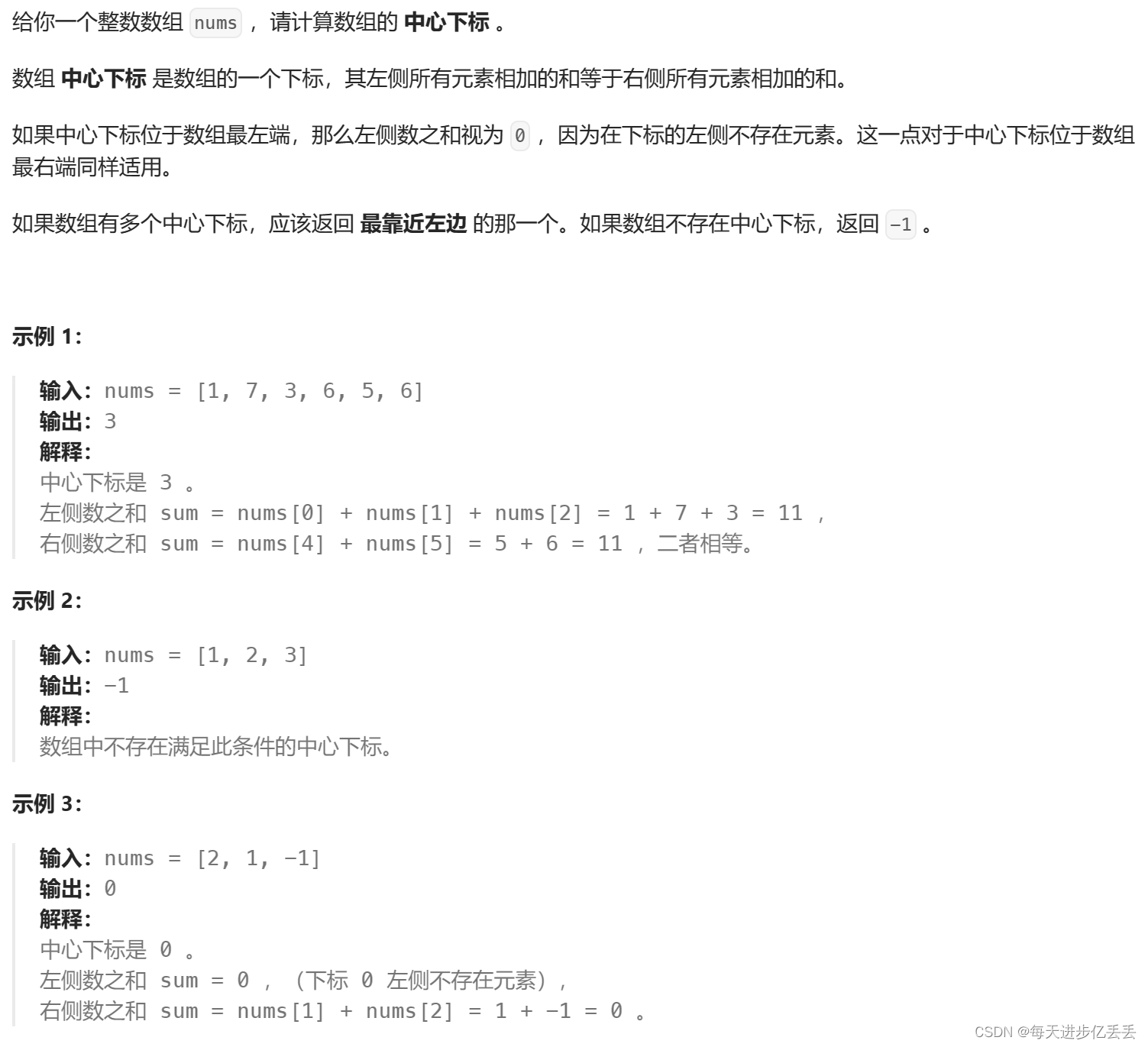

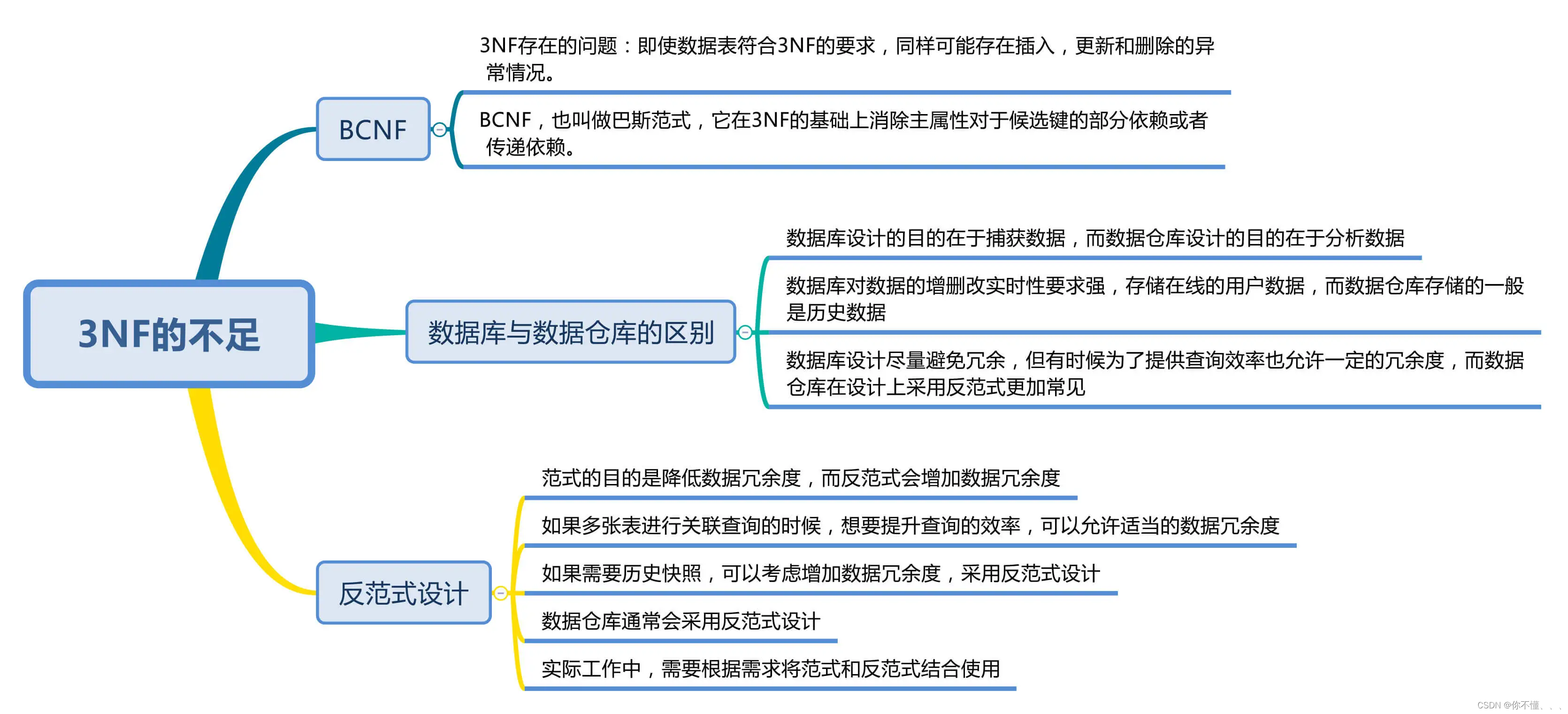
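Why does the second call remember the first? Below is a simplified toy model of one call through the wrapper: look up the history for the session_id, run the chain with that history injected under the history key, then append the new human turn and the reply. All names here (`invoke_with_history`, `fake_chain`, tuple messages, plain lists instead of `ChatMessageHistory`) are hypothetical; the sketch only mirrors the observable behaviour of the example above, not LangChain's actual code.

```python
from typing import Callable, Dict, List, Tuple

Msg = Tuple[str, str]  # (role, content): hypothetical stand-in for a message object
store: Dict[str, List[Msg]] = {}

def get_session_history(session_id: str) -> List[Msg]:
    # Same pattern as the real helper: one history per session_id, created on first use.
    if session_id not in store:
        store[session_id] = []
    return store[session_id]

def invoke_with_history(chain: Callable[[dict], Msg], inputs: dict, session_id: str,
                        input_key: str = "input", history_key: str = "history") -> Msg:
    """Toy model of one call through a history wrapper (simplified, not LangChain's code)."""
    history = get_session_history(session_id)
    # 1. Run the chain with a copy of the stored messages injected under history_key.
    reply = chain({**inputs, history_key: list(history)})
    # 2. Afterwards, save the new human turn and the reply for the next call.
    history.append(("human", inputs[input_key]))
    history.append(reply)
    return reply

def fake_chain(prompt_inputs: dict) -> Msg:
    # Hypothetical stand-in for prompt | model: reports how many earlier messages it saw.
    return ("ai", f"saw {len(prompt_inputs['history'])} earlier messages")

print(invoke_with_history(fake_chain, {"ability": "math", "input": "What is the law of cosines?"}, "abc123"))
print(invoke_with_history(fake_chain, {"ability": "math", "input": "Answer that again"}, "abc123"))
print(invoke_with_history(fake_chain, {"ability": "math", "input": "Answer that again"}, "xyz789"))
```

The third call uses a new session ID and therefore sees no earlier messages, which is also why printing the store shows one entry per session.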

(3) The example above used dict input and message output; other input/output combinations follow.

Message input, dict output


from langchain_core.runnables import RunnableParallel

chain = RunnableParallel({"output_message": ChatOpenAI()})

with_message_history = RunnableWithMessageHistory(
    chain,
    get_session_history,
    output_messages_key="output_message",  # the output key whose value is saved to history
)
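The output_messages_key argument tells the wrapper which entry of the chain's dict output holds the reply to store. Here is a toy sketch of that selection step, with messages assumed to be `(role, content)` tuples and `messages_to_save` a hypothetical helper; LangChain's own logic may handle more cases than this.

```python
from typing import Any, List, Optional, Tuple

Msg = Tuple[str, str]  # (role, content): hypothetical stand-in for a message object

def messages_to_save(output: Any, output_key: Optional[str] = None) -> List[Msg]:
    """Toy model: choose which message(s) from a chain's output get written to history."""
    if isinstance(output, dict):
        # Dict output: output_key names the entry that holds the reply.
        if output_key is None:
            raise ValueError("dict output needs output_key")
        output = output[output_key]
    if isinstance(output, str):
        return [("ai", output)]   # a plain string becomes the content of an AI message
    if isinstance(output, tuple):
        return [output]           # a single message
    if isinstance(output, list):
        return list(output)       # a sequence of messages
    raise TypeError(f"unsupported output type: {type(output).__name__}")

chain_output = {"output_message": ("ai", "(model reply)")}
print(messages_to_save(chain_output, output_key="output_message"))
```

The string branch corresponds to output form (1) in the list at the top, the tuple and list branches to form (2), and the dict branch to form (3).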

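i1 = with_message_history.invoke(
    [HumanMessage(content="Where does the character Snow White come from?")],
    config={"configurable": {"session_id": "baz"}},
)
print(i1)  # a dict with the reply (an AIMessage) under the "output_message" key

Dict input with a single key holding all messages, message output: a simple chat system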

from operator import itemgetter

with_message_history = RunnableWithMessageHistory(
    itemgetter("input_messages") | ChatOpenAI(),
    get_session_history,
    input_messages_key="input_messages",
)
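Here only input_messages_key is set, with no history_messages_key, so the wrapper puts the stored history and the new message together under the same input_messages key; itemgetter("input_messages") then hands that whole list to the model. A toy sketch of the data flow, assuming tuple messages and a hypothetical `merge_history` helper in place of the wrapper's internals:

```python
from operator import itemgetter
from typing import List, Tuple

Msg = Tuple[str, str]  # (role, content): hypothetical stand-in for a message object

def merge_history(inputs: dict, history: List[Msg], key: str = "input_messages") -> dict:
    """Toy model: put the stored history plus the new input back under the same key."""
    new = inputs[key]
    # A plain string counts as one human message; a list is taken as-is.
    new_msgs = [("human", new)] if isinstance(new, str) else list(new)
    return {**inputs, key: history + new_msgs}

history = [("human", "hi"), ("ai", "Hello! How can I help?")]
merged = merge_history({"input_messages": "What did I just say?"}, history)

# itemgetter pulls out the bare list, which is what the model receives in the chain.
messages = itemgetter("input_messages")(merged)
print(messages)
```

This is why the loop can pass a plain string as the query: it is turned into a human message and appended after the stored turns.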

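while True:
    query = input("user: ")
    if query.strip().lower() in ("quit", "exit"):  # type quit or exit to end the session
        break
    answer = with_message_history.invoke(
        input={"input_messages": query},
        config={"configurable": {"session_id": "id123"}},
    )
    print("ai:", answer.content)  # answer is an AIMessage; print only its text
    # print(store)  # uncomment to inspect the stored history

To be continued.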