🍨 本文为[🔗365天深度学习训练营学习记录博客

🍦 参考文章:365天深度学习训练营

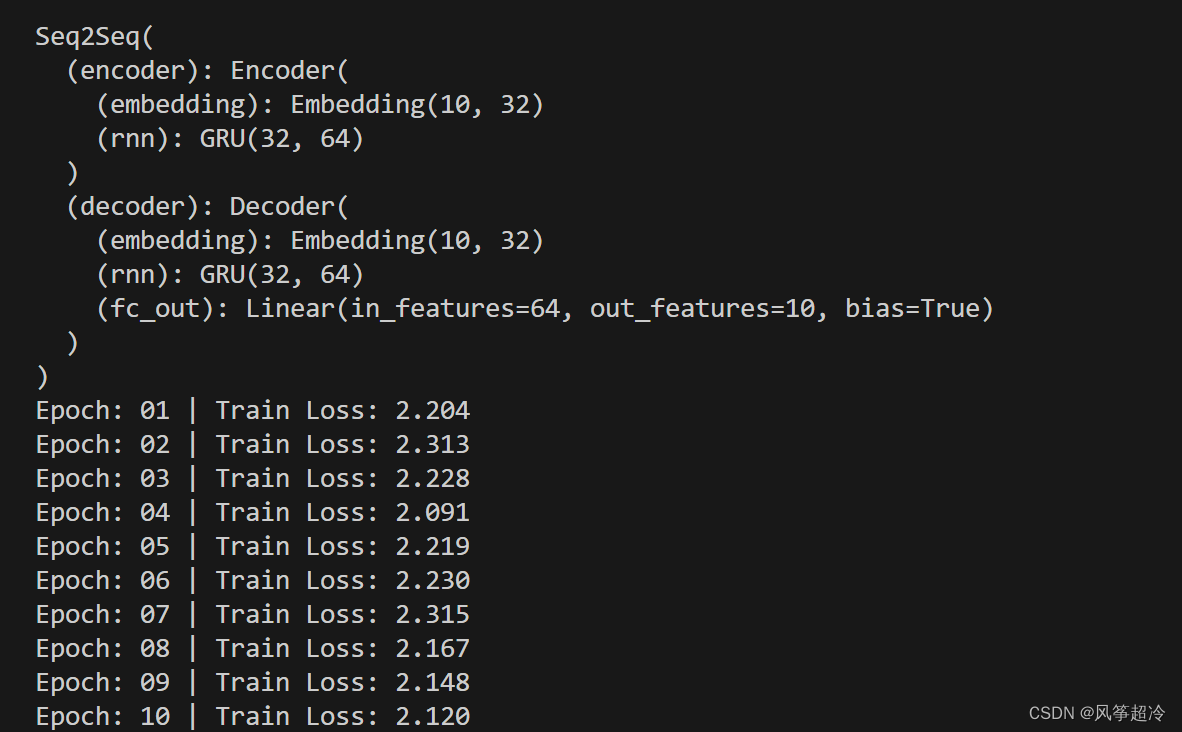

🍖 原作者:[K同学啊 | 接辅导、项目定制]\n🚀 文章来源:[K同学的学习圈子](https://www.yuque.com/mingtian-fkmxf/zxwb45)Seq2Seq模型是一种深度学习模型,用于处理序列到序列的任务,它由两个主要部分组成:编码器(Encoder)和解码器(Decoder)。

-

编码器(Encoder): 编码器负责将输入序列(例如源语言句子)编码成一个固定长度的向量,通常称为上下文向量或编码器的隐藏状态。编码器可以是循环神经网络(RNN)、长短期记忆网络(LSTM)或者变种如门控循环单元(GRU)等。编码器的目标是捕捉输入序列中的语义信息,并将其编码成一个固定维度的向量表示。

-

解码器(Decoder): 解码器接收编码器生成的上下文向量,并根据它来生成输出序列(例如目标语言句子)。解码器也可以是RNN、LSTM、GRU等。在训练阶段,解码器一次生成一个词或一个标记,并且其隐藏状态从一个时间步传递到下一个时间步。解码器的目标是根据上下文向量生成与输入序列对应的输出序列。

在训练阶段,Seq2Seq模型的目标是最大化目标序列的条件概率给定输入序列。为了实现这一点,通常使用了一种称为教师强制(Teacher Forcing)的技术,即将目标序列中的真实标记作为解码器的输入。但是,在推理阶段(即模型用于生成新的序列时),解码器则根据先前生成的标记生成下一个标记,直到生成一个特殊的终止标记或达到最大长度为止。

下面演示了如何使用PyTorch实现一个简单的Seq2Seq模型,用于将一个序列翻译成另一个序列。这里我们将使用一个虚构的数据集来进行简单的法语到英语翻译。

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optim

from torch.nn.utils.rnn import pad_sequence

from torch.utils.data import DataLoader, Dataset

# 定义数据集

class SimpleDataset(Dataset):

def __init__(self, data):

self.data = data

def __len__(self):

return len(self.data)

def __getitem__(self, idx):

return self.data[idx]

# 定义Encoder

class Encoder(nn.Module):

def __init__(self, input_dim, emb_dim, hidden_dim):

super(Encoder, self).__init__()

self.embedding = nn.Embedding(input_dim, emb_dim)

self.rnn = nn.GRU(emb_dim, hidden_dim)

def forward(self, src):

embedded = self.embedding(src)

outputs, hidden = self.rnn(embedded)

return outputs, hidden

# 定义Decoder

class Decoder(nn.Module):

def __init__(self, output_dim, emb_dim, hidden_dim):

super(Decoder, self).__init__()

self.embedding = nn.Embedding(output_dim, emb_dim)

self.rnn = nn.GRU(emb_dim, hidden_dim)

self.fc_out = nn.Linear(hidden_dim, output_dim)

def forward(self, input, hidden):

input = input.unsqueeze(0)

embedded = self.embedding(input)

output, hidden = self.rnn(embedded, hidden)

prediction = self.fc_out(output.squeeze(0))

return prediction, hidden

# 定义Seq2Seq模型

class Seq2Seq(nn.Module):

def __init__(self, encoder, decoder, device):

super(Seq2Seq, self).__init__()

self.encoder = encoder

self.decoder = decoder

self.device = device

def forward(self, src, trg, teacher_forcing_ratio=0.5):

batch_size = trg.shape[1]

trg_len = trg.shape[0]

trg_vocab_size = self.decoder.fc_out.out_features

outputs = torch.zeros(trg_len, batch_size, trg_vocab_size).to(self.device)

encoder_outputs, hidden = self.encoder(src)

input = trg[0,:]

for t in range(1, trg_len):

output, hidden = self.decoder(input, hidden)

outputs[t] = output

teacher_force = np.random.rand() < teacher_forcing_ratio

top1 = output.argmax(1)

input = trg[t] if teacher_force else top1

return outputs

# 设置参数

INPUT_DIM = 10

OUTPUT_DIM = 10

ENC_EMB_DIM = 32

DEC_EMB_DIM = 32

HID_DIM = 64

N_LAYERS = 1

ENC_DROPOUT = 0.5

DEC_DROPOUT = 0.5

# 实例化模型

enc = Encoder(INPUT_DIM, ENC_EMB_DIM, HID_DIM)

dec = Decoder(OUTPUT_DIM, DEC_EMB_DIM, HID_DIM)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = Seq2Seq(enc, dec, device).to(device)

# 打印模型结构

print(model)

# 定义训练函数

def train(model, iterator, optimizer, criterion, clip):

model.train()

epoch_loss = 0

for i, batch in enumerate(iterator):

src, trg = batch

src = src.to(device)

trg = trg.to(device)

optimizer.zero_grad()

output = model(src, trg)

output_dim = output.shape[-1]

output = output[1:].view(-1, output_dim)

trg = trg[1:].view(-1)

loss = criterion(output, trg)

loss.backward()

torch.nn.utils.clip_grad_norm_(model.parameters(), clip)

optimizer.step()

epoch_loss += loss.item()

return epoch_loss / len(iterator)

# 定义测试函数

def evaluate(model, iterator, criterion):

model.eval()

epoch_loss = 0

with torch.no_grad():

for i, batch in enumerate(iterator):

src, trg = batch

src = src.to(device)

trg = trg.to(device)

output = model(src, trg, 0) # 关闭teacher forcing

output_dim = output.shape[-1]

output = output[1:].view(-1, output_dim)

trg = trg[1:].view(-1)

loss = criterion(output, trg)

epoch_loss += loss.item()

return epoch_loss / len(iterator)

# 示例数据

train_data = [(torch.tensor([1, 2, 3]), torch.tensor([3, 2, 1])),

(torch.tensor([4, 5, 6]), torch.tensor([6, 5, 4])),

(torch.tensor([7, 8, 9]), torch.tensor([9, 8, 7]))]

# 超参数

BATCH_SIZE = 3

N_EPOCHS = 10

LEARNING_RATE = 0.001

CLIP = 1

# 数据集与迭代器

train_dataset = SimpleDataset(train_data)

train_loader = DataLoader(train_dataset, batch_size=BATCH_SIZE, shuffle=True)

# 定义损失函数与优化器

optimizer = optim.Adam(model.parameters(), lr=LEARNING_RATE)

criterion = nn.CrossEntropyLoss()

# 训练模型

for epoch in range(N_EPOCHS):

train_loss = train(model, train_loader, optimizer, criterion, CLIP)

print(f'Epoch: {epoch+1:02} | Train Loss: {train_loss:.3f}')