✅作者简介:人工智能专业本科在读,喜欢计算机与编程,写博客记录自己的学习历程。

🍎个人主页:小嗷犬的个人主页

🍊个人网站:小嗷犬的技术小站

🥭个人信条:为天地立心,为生民立命,为往圣继绝学,为万世开太平。

本文目录

- Scheduler

- Warm-up + CosineAnnealingLR

- Warm-up + ExponentialLR

- Warm-up + StepLR

- 可视化对比

- Pytorch 模板

Scheduler

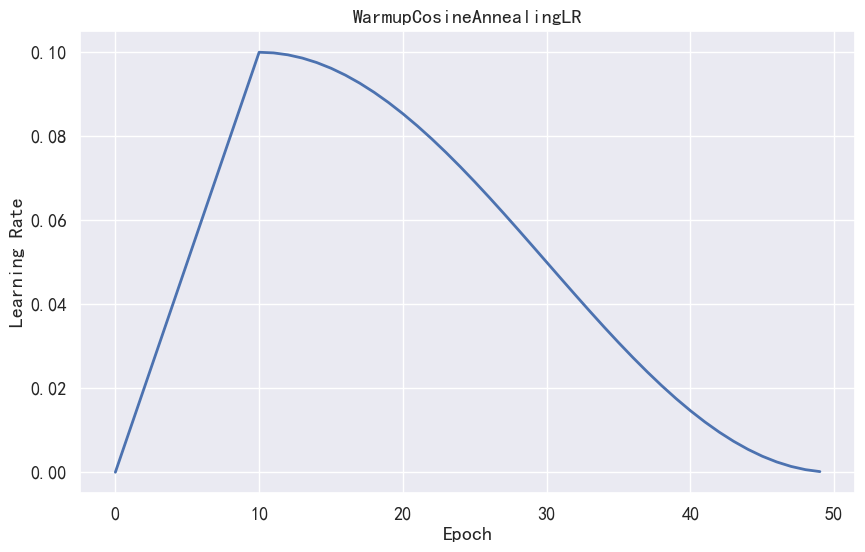

Warm-up + CosineAnnealingLR

import math

warm_up_iter = 10

T_max = 50

lr_max = 0.1

lr_min = 1e-5

def WarmupCosineAnnealingLR(cur_iter):

if cur_iter < warm_up_iter:

return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min

else:

return lr_min + 0.5 * (lr_max - lr_min) * (

1 + math.cos((cur_iter - warm_up_iter) / (T_max - warm_up_iter) * math.pi)

)

前 warm_up_iter 步,学习率从 lr_min 线性增加到 lr_max;后 T_max - warm_up_iter 步,学习率按照余弦退火从 lr_max 降到 lr_min。

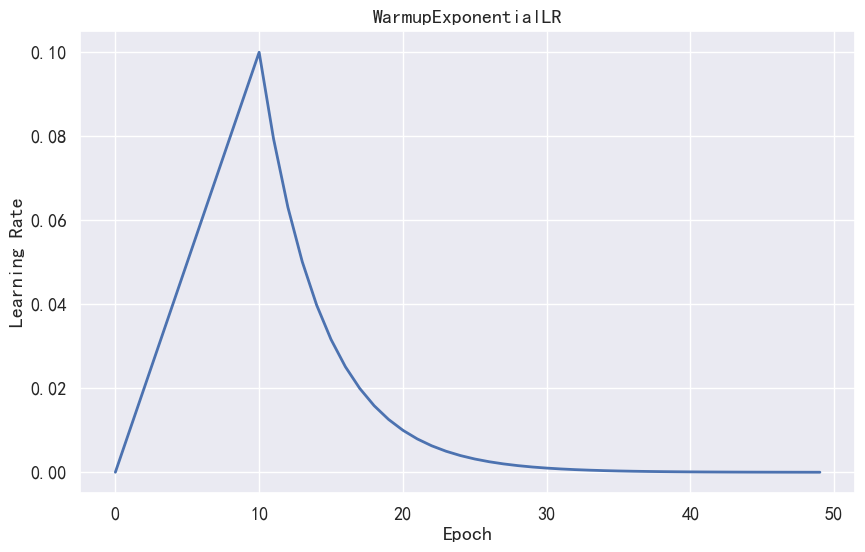

Warm-up + ExponentialLR

import math

warm_up_iter = 10

T_max = 50

lr_max = 0.1

lr_min = 1e-5

def WarmupExponentialLR(cur_iter):

gamma = math.exp(math.log(lr_min / lr_max) / (T_max - warm_up_iter))

if cur_iter < warm_up_iter:

return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min

else:

return lr_max * gamma ** (cur_iter - warm_up_iter)

前 warm_up_iter 步,学习率从 lr_min 线性增加到 lr_max;后 T_max - warm_up_iter 步,学习率按照指数衰减从 lr_max 降到 lr_min。

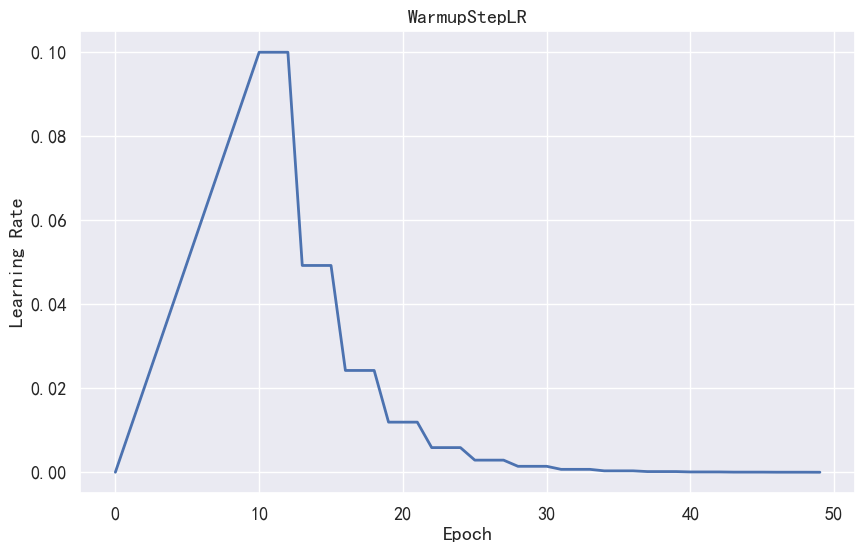

Warm-up + StepLR

import math

warm_up_iter = 10

T_max = 50

lr_max = 0.1

lr_min = 1e-5

step_size = 3

def WarmupStepLR(cur_iter):

gamma = math.exp(math.log(lr_min / lr_max) / ((T_max - warm_up_iter) // step_size))

if cur_iter < warm_up_iter:

return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min

else:

return lr_max * gamma ** ((cur_iter - warm_up_iter) // step_size)

前 warm_up_iter 步,学习率从 lr_min 线性增加到 lr_max;后 T_max - warm_up_iter 步,学习率按照指数衰减从 lr_max 降到 lr_min,每 step_size 步衰减一次。

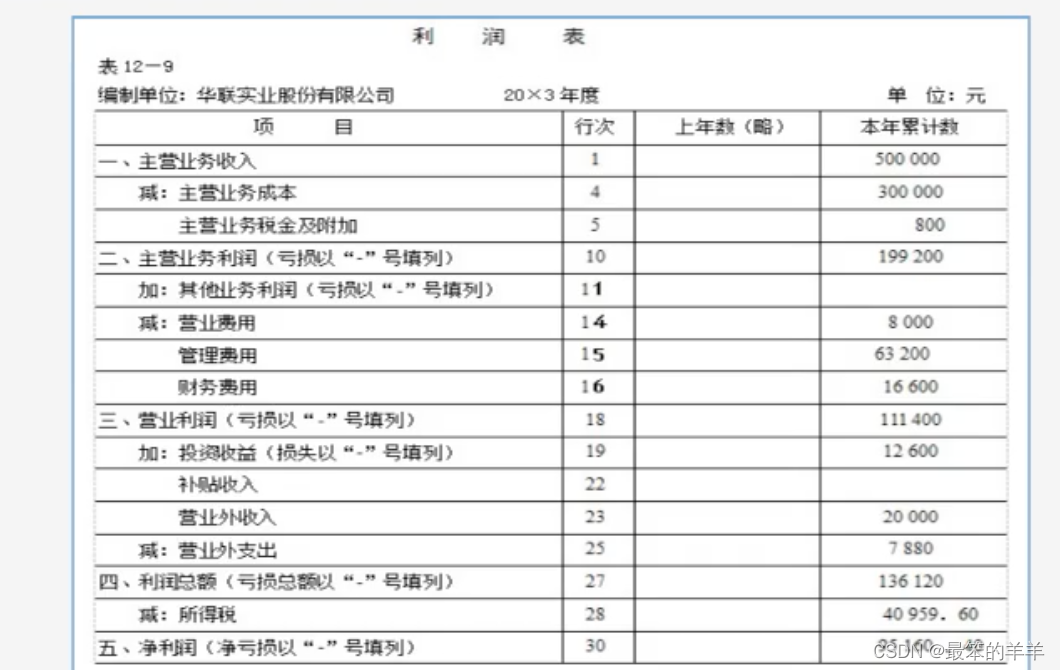

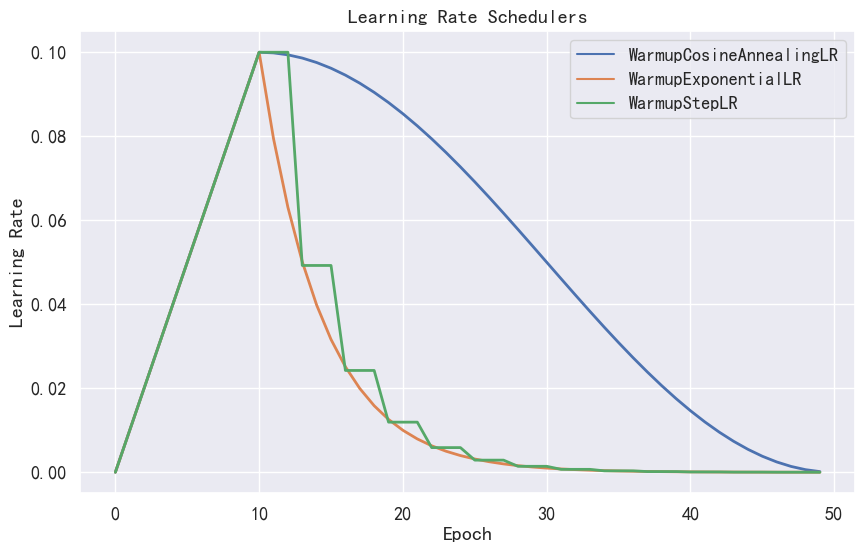

可视化对比

Pytorch 模板

import math

import torch

from torch import nn, optim

from torch.optim.lr_scheduler import LambdaLR

warm_up_iter = 10

T_max = 50

lr_max = 0.1

lr_min = 1e-5

def WarmupCosineAnnealingLR(cur_iter):

if cur_iter < warm_up_iter:

return (lr_max - lr_min) * (cur_iter / warm_up_iter) + lr_min

else:

return lr_min + 0.5 * (lr_max - lr_min) * (

1 + math.cos((cur_iter - warm_up_iter) / (T_max - warm_up_iter) * math.pi)

)

model = nn.Linear(1, 1)

optimizer = optim.AdamW(model.parameters(), lr=0.1)

scheduler = LambdaLR(optimizer, lr_lambda=WarmupCosineAnnealingLR)

for epoch in range(T_max):

train(...)

valid(...)

scheduler.step()