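I. Project Requirements

The current architecture is nfs+keepalived+rsync+sersync. With this setup, data on the primary and standby NFS nodes falls out of sync: synchronization lags by roughly 120 s. An optimized, automated solution is needed.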

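II. Drawbacks of the Current Setup

1. A failover cannot guarantee that the primary and standby nodes hold the same data.

2. Clients must umount and then mount again before they can read and write normally.

3. During a sersync+nfs failover, if the primary's data has not been fully synchronized when the standby takes over, data is lost the next time sersync pushes.

4. The service can currently run only as a single point.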

III. Goals

Ensure service stability and data integrity.

IV. Resource Selection

- Three CentOS 7.6 machines, each with 4 CPU cores, 8 GB RAM, and a 200 GB disk

- Planned resource roles

| Type | Primary node | Standby node | VIP address | Client |
| --- | --- | --- | --- | --- |
| IP | 10.241.212.3 | 10.241.212.4 | 10.241.212.249 | 10.241.212.1 |
| Role | keepalived | keepalived | | |
| | nfs-server | nfs-server | | nfs-client |
| | drbd-Primary | drbd-Secondary | | |

- DRBD version 9.0 (the drbd90 packages)

V. Preparation

- Configure host names (run on both the primary and standby nodes)

vim /etc/hosts

10.241.212.3 nfs-master

10.241.212.4 nfs-backup
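Because this step is repeated on both nodes, it may help to make the edit idempotent. The following is a minimal sketch, not part of the original procedure: the function name add_host_entry and the HOSTS_FILE override are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch only: append "IP NAME" to a hosts file unless NAME is already mapped.
# HOSTS_FILE is an assumed override so the function can be tried on a scratch file.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"
    name="$2"
    # Look for NAME as a whole field on any non-comment line.
    if awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$HOSTS_FILE" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}
```

Calling add_host_entry 10.241.212.3 nfs-master and add_host_entry 10.241.212.4 nfs-backup on each node should leave exactly one line per host name, however often the commands are rerun.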

- Set up SSH trust (run on the primary node)

ssh-keygen -f ~/.ssh/id_rsa -P '' -q

ssh-copy-id nfs-backup

ssh-copy-id nfs-master

- Disable the firewall (run on both the primary and standby nodes)

systemctl stop firewalld && systemctl stop iptables

systemctl disable firewalld && systemctl disable iptables-

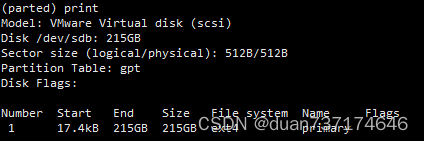

配置磁盘gpt分区(主备节点两台机器执行)

parted /dev/sdb

mklabel gpt

mkpart primary 0 -1

- Configure the yum repositories (run on both the primary and standby nodes)

rpm -ivh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

yum install -y glib2-devel libxml2-devel bzip2-devel flex-devel bison-devel OpenIPMI-devel net-snmp-devel ipmitool ipmiutil ipmiutil-devel asciidoc kernel-devel

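- Install the drbd, keepalived, and nfs packages (run on both the primary and standby nodes)

yum -y install drbd90-utils kmod-drbd90 keepalived rpcbind nfs-utils

- Load the drbd kernel module (run on both the primary and standby nodes)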

modprobe drbd

lsmod |grep drbd

VI. DRBD Configuration (run on both the primary and standby nodes)

- Edit the global configuration file

cat /etc/drbd.d/global_common.conf |egrep -v "^.*#|^$"

global {

usage-count yes;

}

common {

protocol C; # fully synchronous replication

handlers {

}

startup {

wfc-timeout 240;

degr-wfc-timeout 240;

outdated-wfc-timeout 240;

}

options {

}

disk {

on-io-error detach;

}

net {

cram-hmac-alg md5;

shared-secret "testdrbd";

}

}

- Edit the resource configuration file

cat > /etc/drbd.d/data.res << EOF

resource data { # resource name

protocol C; # replication protocol

meta-disk internal;

device /dev/drbd0; # DRBD device name

on nfs-master {

disk /dev/sdb1;

address 10.241.212.3:7789; # DRBD listen address and port

}

on nfs-backup {

disk /dev/sdb1;

address 10.241.212.4:7789;

}

}

EOF

Copy the configuration files to the standby node

cd /etc/drbd.d

scp data.res global_common.conf root@nfs-backup:/etc/drbd.d/

- Create the DRBD device and activate the data resource

mknod /dev/drbd0 b 147 0

[root@nfs-master drbd.d]# ll /dev/drbd0

brw-rw---- 1 root disk 147, 0 Feb 20 14:37 /dev/drbd0

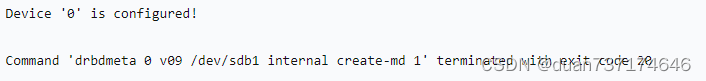

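- Create the metadata for the DRBD device

drbdadm create-md data

# Note: if this command reports an error, overwrite the first 100 MB of the backing partition with

dd if=/dev/zero of=/dev/sdb1 bs=1M count=100

and then run drbdadm create-md data again.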

- Start the drbd service and enable it at boot

systemctl start drbd

systemctl enable drbd

- Initial device synchronization

# Check the DRBD status on the primary and standby nodes

[root@nfs-master drbd.d]# drbdadm status

data role:Secondary

disk:Inconsistent

Secondary role:Secondary

peer-disk:Inconsistent

# Run this step on one node only (the primary node)

drbdadm primary --force data

# Check synchronization progress

drbdadm status

data role:Primary

disk:UpToDate

Secondary role:Secondary

replication:SyncSource peer-disk:Inconsistent done:36.82 # the initial full synchronization is in progress

# Status once synchronization has finished

drbdadm status

data role:Primary

disk:UpToDate

Secondary role:Secondary

peer-disk:UpToDate # UpToDate for both disk and peer-disk means the primary/standby setup succeeded
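Checking the status by eye is easy to get wrong during a long initial sync. As a hedged sketch (the function names drbd_all_uptodate and wait_for_sync are illustrative, not DRBD tooling), the status text shown above could be checked like this:

```shell
#!/bin/sh
# Sketch only: read `drbdadm status <resource>` output on stdin and succeed
# only if every disk: and peer-disk: field reports UpToDate.
drbd_all_uptodate() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^(disk|peer-disk):/) {
                    seen = 1
                    if ($i !~ /:UpToDate$/) bad = 1
                }
            }
        }
        END { if (seen && !bad) exit 0; exit 1 }
    '
}

# Hypothetical wrapper: poll every 5 seconds until the resource is in sync.
wait_for_sync() {
    resource="$1"
    until drbdadm status "$resource" | drbd_all_uptodate; do
        sleep 5
    done
}
```

Running wait_for_sync data on the primary node would block until both sides report UpToDate, which is a reasonable point at which to continue with the file system steps.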

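VII. Mount the DRBD File System (on the Primary Node)

# Format /dev/drbd0 (primary node only; the Secondary cannot access the device)
mkfs.ext4 /dev/drbd0

# Create the mount point (run on both the primary and standby nodes)
mkdir /data

# Mount the DRBD device (primary node only)
mount /dev/drbd0 /data

# Check the mount
df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 3.8G 0 3.8G 0% /dev
tmpfs 3.9G 0 3.9G 0% /dev/shm
tmpfs 3.9G 28M 3.8G 1% /run
tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup
/dev/sda3 15G 2.6G 12G 19% /
/dev/sda1 1008M 145M 813M 16% /boot
tmpfs 781M 0 781M 0% /run/user/0
/dev/drbd0 197G 61M 187G 1% /data

Important: no operation of any kind, not even a read, is allowed on the DRBD device on the Secondary node; all reads and writes must go through the Primary node. The Secondary node can be promoted to Primary only after the Primary has gone down or been demoted.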

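VIII. DRBD Promotion and Demotion Test (Manual Failover)

# DRBD resource promotion and demotion test

Use one of the following commands to manually switch a resource's role from secondary to primary (promotion) or from primary to secondary (demotion):

# Create test files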

cd /data/

touch file{1..5}

ls

file1 file2 file3 file4 file5

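# Switchover test (on the primary node; leave /data first so that umount does not fail)

cd /

umount /data

drbdadm secondary data # demote the primary node so the resource can move to the standby

# On the standby node

drbdadm primary data # promote the standby node

# Mount the drbd0 device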

mount /dev/drbd0 /data/

cd /data/

ls

file1 file2 file3 file4 file5 # the data has been replicated

# Test whether the standby node can write data

touch slave-{1..3}

ls

file1 file2 file3 file4 file5 slave-1 slave-2 slave-3 # the write succeeded, so the switchover works
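The manual switchover above depends on ordering: unmount before demoting, and promote before mounting. A minimal sketch that wraps those steps is given below. The names run, demote_node, promote_node and the DRY_RUN variable are assumptions for illustration; the resource, device, and mount point are the ones used in this guide.

```shell
#!/bin/sh
# Sketch only: defaults mirror the values used in this guide.
RESOURCE="${RESOURCE:-data}"
DEVICE="${DEVICE:-/dev/drbd0}"
MOUNTPOINT="${MOUNTPOINT:-/data}"

# With DRY_RUN=1 the command is only printed, which allows a rehearsal.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# On the current Primary: release the device, then demote.
demote_node() {
    run umount "$MOUNTPOINT" && run drbdadm secondary "$RESOURCE"
}

# On the current Secondary: promote first, then mount.
promote_node() {
    run drbdadm primary "$RESOURCE" && run mount "$DEVICE" "$MOUNTPOINT"
}
```

Setting DRY_RUN=1 before calling demote_node or promote_node prints the commands without touching the system, so the order can be reviewed before a real switchover.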

IX. NFS Configuration

# Edit the NFS exports file

vim /etc/exports

/data 10.241.212.249/16(rw,sync,no_root_squash)

# Start the NFS services and enable them at boot

systemctl start rpcbind.service && systemctl enable rpcbind.service

systemctl start nfs-server.service && systemctl enable nfs-server.service

X. keepalived Configuration (Primary Node)

# Edit the keepalived configuration file

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id nfs-master

}

vrrp_script chk_nfs {

script "/etc/keepalived/check_nfs.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 60

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nfs

}

notify_stop /etc/keepalived/notify_stop.sh # script run when the service stops

notify_master /etc/keepalived/notify_master.sh # script run on transition to MASTER

virtual_ipaddress {

10.241.212.249

}

}

## Start the keepalived service

systemctl start keepalived.service

systemctl enable keepalived.service

# Standby node configuration:

! Configuration File for keepalived

global_defs {

router_id nfs-backup

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 60

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

notify_master /etc/keepalived/notify_master.sh # script run on transition to MASTER

notify_backup /etc/keepalived/notify_backup.sh # script run on transition to BACKUP

virtual_ipaddress {

10.241.212.249

}

}
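The two configurations differ in router_id, state, priority, and the scripts they reference, while virtual_router_id, advert_int, and the authentication settings need to match for the nodes to form one VRRP group. The sketch below compares those shared fields between two copies of the file. The function names vrrp_value and same_vrrp_settings are hypothetical, and the parsing assumes one keyword and value per line, as in the files above.

```shell
#!/bin/sh
# Sketch only: print the value of a single-valued keyword from a keepalived
# configuration file, e.g. vrrp_value keepalived.conf virtual_router_id
vrrp_value() {
    awk -v k="$2" '$1 == k { print $2; exit }' "$1"
}

# Succeed only if both files agree on the fields that must match.
same_vrrp_settings() {
    for key in virtual_router_id advert_int auth_type auth_pass; do
        [ "$(vrrp_value "$1" "$key")" = "$(vrrp_value "$2" "$key")" ] || {
            echo "mismatch: $key" >&2
            return 1
        }
    done
}
```

One way to use it is to copy the standby node's file to the primary under a temporary name and run same_vrrp_settings on the two paths before starting keepalived.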

## Start the keepalived service

systemctl start keepalived.service

systemctl enable keepalived.service

# On the primary node:

vim /etc/keepalived/check_nfs.sh

#!/bin/bash

## Check that the NFS service is available

systemctl status nfs-server &> /dev/null

if [ $? -ne 0 ];then # -ne is true when the two integers differ

## If the service is unhealthy, restart it

systemctl restart nfs-server

systemctl status nfs-server &>/dev/null

if [ $? -ne 0 ];then

## Still unhealthy after the restart: unmount drbd0 and demote this node to Secondary

umount /dev/drbd0

drbdadm secondary data

## Stop the keepalived service

systemctl stop keepalived

fi

fi
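The check-restart-recheck logic in this script is hard to exercise without actually breaking NFS. A hedged sketch of the same decision is shown below, with the commands held in variables so that stubs can stand in for systemctl during a trial run. STATUS_CMD, RESTART_CMD, and the function name are assumptions made for this example.

```shell
#!/bin/sh
# Sketch only: the commands are overridable so the logic can be tried with stubs.
STATUS_CMD="${STATUS_CMD:-systemctl is-active --quiet nfs-server}"
RESTART_CMD="${RESTART_CMD:-systemctl restart nfs-server}"

# Returns 0 if NFS is healthy, possibly after one restart; non-zero otherwise.
nfs_healthy_or_restarted() {
    $STATUS_CMD && return 0
    $RESTART_CMD
    $STATUS_CMD
}
```

A caller would run the unmount, demote, and keepalived stop steps from the script above only when nfs_healthy_or_restarted returns non-zero.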

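# Make the script executable

chmod 755 /etc/keepalived/check_nfs.sh

The notify_stop.sh script below is configured on the primary node only

# Create the log directory

mkdir /etc/keepalived/logs

# Create the stop script

cat /etc/keepalived/notify_stop.sh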

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_stop------\n" >> /etc/keepalived/logs/notify_stop.log

systemctl stop nfs-server &>> /etc/keepalived/logs/notify_stop.log

umount /data &>> /etc/keepalived/logs/notify_stop.log

drbdadm secondary data &>> /etc/keepalived/logs/notify_stop.log

echo -e "\n" >> /etc/keepalived/logs/notify_stop.log

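# Make the stop script executable

chmod 755 /etc/keepalived/notify_stop.sh

The notify_master.sh script below must be configured on both the primary and standby nodes

# Create the master script

vim /etc/keepalived/notify_master.sh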

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_master------\n" >> /etc/keepalived/logs/notify_master.log

drbdadm primary data &>> /etc/keepalived/logs/notify_master.log

mount /dev/drbd0 /data &>> /etc/keepalived/logs/notify_master.log

systemctl restart nfs-server &>> /etc/keepalived/logs/notify_master.log

echo -e "\n" >> /etc/keepalived/logs/notify_master.log

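# Make the master script executable

chmod 755 /etc/keepalived/notify_master.sh

The notify_backup.sh script below is configured on the standby node only

# Create the logs directory

mkdir /etc/keepalived/logs

# Create the backup script

vim /etc/keepalived/notify_backup.sh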

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_backup------\n" >> /etc/keepalived/logs/notify_backup.log

systemctl stop nfs-server &>> /etc/keepalived/logs/notify_backup.log

umount /data &>> /etc/keepalived/logs/notify_backup.log

drbdadm secondary data &>> /etc/keepalived/logs/notify_backup.log

echo -e "\n" >> /etc/keepalived/logs/notify_backup.log

# Make the backup script executable

chmod 755 /etc/keepalived/notify_backup.sh
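The three notify scripts repeat the same timestamp-and-append pattern, which is where copy-and-paste slips tend to creep in. One possible way to factor it out is sketched below. The helper name log_run and the LOG_DIR override are assumptions for illustration and are not part of keepalived.

```shell
#!/bin/sh
# Sketch only: LOG_DIR defaults to the log directory created in this guide.
LOG_DIR="${LOG_DIR:-/etc/keepalived/logs}"

# log_run TAG CMD...: write a timestamped header, then run CMD with its
# stdout and stderr appended to LOG_DIR/TAG.log. Returns CMD's exit status.
log_run() {
    tag="$1"
    shift
    log="$LOG_DIR/$tag.log"
    printf '%s ------%s------ %s\n' "$(date '+%F %H:%M:%S')" "$tag" "$*" >> "$log"
    "$@" >> "$log" 2>&1
}
```

With this helper, a notify script body could shrink to lines such as log_run notify_backup systemctl stop nfs-server, log_run notify_backup umount /data, and log_run notify_backup drbdadm secondary data.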

XI. NFS Client Mount Test

# The client only needs rpcbind installed and running

# Install the NFS dependencies and packages

[root@nfsclient ~]# yum -y install rpcbind nfs-utils

# Start the services and enable them at boot

[root@nfsclient ~]# systemctl start rpcbind && systemctl enable rpcbind

[root@nfsclient ~]# systemctl start nfs-server && systemctl enable nfs-server

# Mount the NFS export

mount -t nfs 10.241.212.249:/data /data

[root@localhost data]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda3 15G 2.4G 12G 18% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 196M 1.7G 11% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda1 1008M 103M 854M 11% /boot

/dev/sdb1 40G 48M 38G 1% /mnt

tmpfs 378M 0 378M 0% /run/user/0

10.241.212.249:/data 197G 60M 187G 1% /data

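XII. Automatic Failover Test in the HA Environment

First stop the keepalived service on the primary node. The VIP then moves to the standby node, the primary node's NFS service shuts itself down, and the standby node is promoted to DRBD Primary.

## Stop keepalived on the primary node

systemctl stop keepalived.service

# On the standby node, check whether it has taken over the VIP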

[root@nfs-backup ~]# ip a |grep 10.241

inet 10.241.212.4/16 brd 10.241.255.255 scope global eth1

inet 10.241.212.249/16 scope global secondary eth1
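For repeated failover tests, the VIP check can be scripted instead of read off the output. A minimal sketch follows, assuming the `ip` output format shown above; the function name has_vip is made up for this example.

```shell
#!/bin/sh
# Sketch only: read `ip a` output on stdin and succeed if the given IPv4
# address is assigned. Matching "inet ADDR/" as a fixed string avoids
# partial matches such as 10.241.212.24 against 10.241.212.249.
has_vip() {
    grep -qF "inet $1/"
}
```

On either node, ip a | has_vip 10.241.212.249 && echo "VIP is on this node" reports where the address currently lives.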

# The same transition can be seen in /var/log/messages

# Check the DRBD resource state

[root@nfs-backup ~]# drbdadm status

data role:Primary

disk:UpToDate

nfs-master role:Secondary

peer-disk:UpToDate

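# Check the data and confirm it is consistent

cd /data

# A failover has just happened; delete data on the current Primary node and see whether the client observes the change

rm -rf test-*

# Log in to the client and check the data directory

cd /data

ls

# Once keepalived is started again on the primary node, it forcibly takes the VIP back (see the system log /var/log/messages)

# and the primary node becomes the DRBD Primary again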

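FAQ summary:

This setup does not solve the problem of NFS client mounts breaking when the VIP moves between nodes; the client still has to run umount and then mount again manually to recover.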
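Until that limitation is addressed, the manual recovery on the client could be scripted. The sketch below is one possible approach, not something this setup provides: the function names are hypothetical, and it assumes the GNU coreutils `timeout` command and the util-linux `umount -f -l` options are available on the client.

```shell
#!/bin/sh
# Sketch only: succeed if PATH answers a stat within TIMEOUT seconds
# (default 5). A stale or hung NFS mount typically blocks or errors instead.
mount_responds() {
    timeout "${2:-5}" stat "$1" >/dev/null 2>&1
}

# Mirror the manual recovery: force a lazy unmount, then mount again.
remount_nfs() {
    umount -f -l "$2" 2>/dev/null
    mount -t nfs "$1" "$2"
}

# Remount only when the mount point stops responding.
check_and_remount() {
    mount_responds "$2" || remount_nfs "$1" "$2"
}
```

Run periodically on the client, for example from cron, check_and_remount 10.241.212.249:/data /data would repeat the umount and mount steps only when the mount point has stopped responding.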