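Introduction

Library name: AudioChannel

Version: 1.0

A project of mine needed to record audio and store it on a server as a Base64 string, so I took the opportunity to rework someone else's wrapper class.

Original wrapper class: Android AudioRecord音频录制wav文件输出 - 简书 (jianshu.com)

Description: this wrapper class records WAV audio on top of AudioRecord. Compared with the original, it makes the following changes:

1. Fixes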

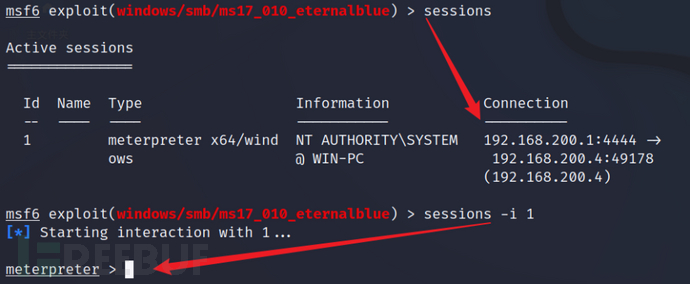

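(1) As the screenshot above shows, the original wrapper class extends Thread. Its logic is clear, so modifying it was fairly easy, and a single recording worked fine. On the second run, however, it failed with:

D/CompatibilityChangeReporter: Compat change id reported: 147798919; UID 10428; state: ENABLED

W/System.err: java.lang.IllegalThreadStateException

W/System.err: at java.lang.Thread.start(Thread.java:960)

at com.yy.audiochannaldemo.AudioChannel.startLive(AudioChannel.java:84)

Tracing this showed that on the second run the thread's state was already TERMINATED, meaning it had finished executing and exited. A terminated thread cannot be started again, so the new wrapper class no longer extends Thread. Instead, an internal helper method, initThread, builds a fresh thread before each recording.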
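The restart problem can be reproduced without Android at all. The following is a minimal sketch in plain Java; the class name RestartableWorker and its methods are hypothetical and only mirror the idea behind initThread, they are not part of the library.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class RestartableWorker {
    private final AtomicInteger runs = new AtomicInteger();
    private Thread worker;

    // Build a new Thread object for every run; a TERMINATED Thread cannot be started again.
    private void initThread() {
        worker = new Thread(runs::incrementAndGet);
    }

    // Starts a fresh worker and waits for it to finish.
    public void startAndWait() {
        initThread();
        worker.start();
        join(worker);
    }

    public int runCount() {
        return runs.get();
    }

    // Reproduces the failure: calling start() a second time on the same Thread object.
    public static boolean secondStartFails() {
        Thread t = new Thread(() -> { });
        t.start();
        join(t);
        try {
            t.start();
            return false;
        } catch (IllegalThreadStateException expected) {
            return true;
        }
    }

    private static void join(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("second start fails: " + secondStartFails());
        RestartableWorker w = new RestartableWorker();
        w.startAndWait();
        w.startAndWait();
        System.out.println("runs: " + w.runCount());
    }
}
```

Creating the Thread inside the start path keeps the public API unchanged while making repeated recordings possible.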

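(2) AudioRecord cannot save a recording as WAV directly, so the audio must first be written to a PCM file and then converted to WAV by writing a header in front of the data. The original wrapper never deleted the intermediate PCM file, which could eventually take up too much storage; this version deletes it when recording stops.

(3) The constructor now takes a Context. This removes the need to request the external-storage write permission at runtime, and it is convenient for later operations that need a Context.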

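2. Permission handling

The original library did not request permissions at all. This version adds the request, because Android 6.0 and above enforce runtime permissions. It also stops using external storage, which would need an additional runtime grant, and writes directly to the cache directory instead.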

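3. Features

On top of the original, this version computes pitch and sound level (decibels) and reports three values through the onResult callback interface: elapsed seconds, dB, and Hz. Both the dB and Hz values are somewhat inaccurate.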
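To make the dB and Hz values above more concrete, here is a small plain-Java sketch of both calculations. It assumes 16-bit little-endian mono PCM, reports level relative to digital full scale (dBFS) rather than calibrated sound pressure, and uses a naive DFT instead of JTransforms purely to show how a frequency bin maps to Hz. The class and method names are hypothetical.

```java
public class LevelAndPitchSketch {
    // Decodes 16-bit little-endian PCM bytes into samples scaled to [-1, 1).
    static double[] decode(byte[] pcm) {
        double[] out = new double[pcm.length / 2];
        for (int i = 0; i < out.length; i++) {
            int lo = pcm[2 * i] & 0xFF;
            int hi = pcm[2 * i + 1]; // sign-extended high byte
            out[i] = ((hi << 8) | lo) / 32768.0;
        }
        return out;
    }

    // RMS level in dB relative to full scale; silence is clamped to avoid log10(0).
    static double dbfs(double[] x) {
        double sum = 0;
        for (double v : x) {
            sum += v * v;
        }
        double rms = Math.sqrt(sum / x.length);
        return 20 * Math.log10(Math.max(rms, 1e-9));
    }

    // Naive O(n^2) DFT peak search: bin k corresponds to k * sampleRate / n Hz.
    static double dominantHz(double[] x, int sampleRate) {
        int n = x.length;
        int best = 1;
        double bestPower = -1;
        for (int k = 1; k < n / 2; k++) {
            double re = 0, im = 0;
            for (int t = 0; t < n; t++) {
                double a = 2 * Math.PI * k * t / n;
                re += x[t] * Math.cos(a);
                im -= x[t] * Math.sin(a);
            }
            double power = re * re + im * im;
            if (power > bestPower) {
                bestPower = power;
                best = k;
            }
        }
        return (double) best * sampleRate / n;
    }

    // Generates a test tone as 16-bit little-endian PCM bytes.
    static byte[] sinePcm(double hz, int sampleRate, int n, double amplitude) {
        byte[] pcm = new byte[2 * n];
        for (int t = 0; t < n; t++) {
            short s = (short) Math.round(amplitude * 32767 * Math.sin(2 * Math.PI * hz * t / sampleRate));
            pcm[2 * t] = (byte) s;
            pcm[2 * t + 1] = (byte) (s >> 8);
        }
        return pcm;
    }

    public static void main(String[] args) {
        double[] x = decode(sinePcm(500, 8000, 512, 0.5));
        System.out.println("level (dBFS): " + dbfs(x));
        System.out.println("peak (Hz): " + dominantHz(x, 8000));
    }
}
```

The frequency resolution is sampleRate / n, so with short buffers the reported pitch can only land on coarse steps, which is one likely source of the deviation.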

I. Configuration

First add these two entries to the manifest:

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

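<uses-permission android:name="android.permission.RECORD_AUDIO" />

That is all the manifest configuration this wrapper needs. (The code below writes only to the cache directory, so WRITE_EXTERNAL_STORAGE matters only if you change the output location.)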

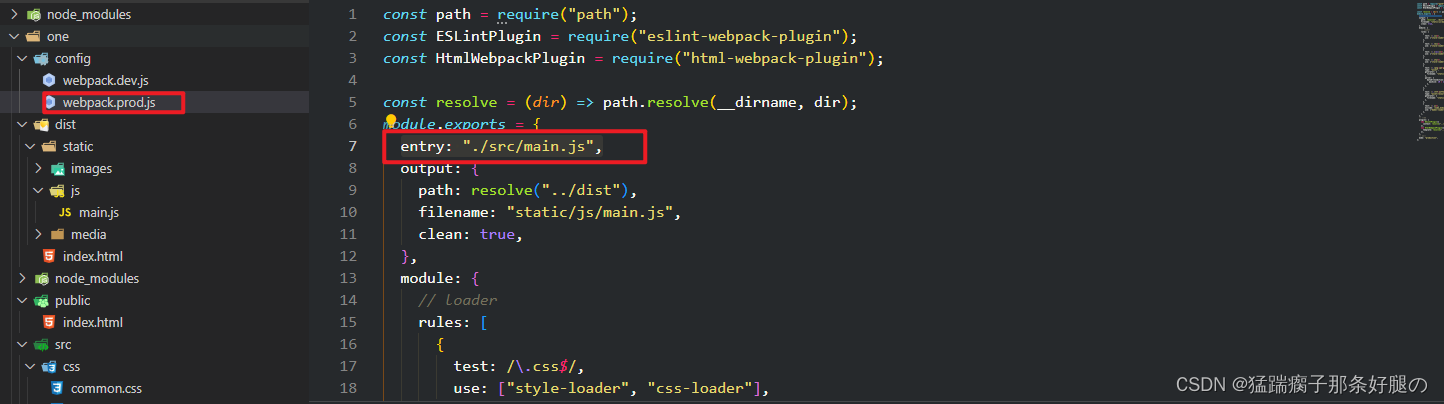

Add the following dependency to build.gradle:

implementation 'com.github.wendykierp:JTransforms:3.1'

II. Code

1. PcmToWavUtil.java: PCM-to-WAV utility class

import android.media.AudioFormat;
import android.media.AudioRecord;

import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;

public class PcmToWavUtil {
    private int mBufferSize; // size of the copy buffer, in bytes
    private int mSampleRate = 8000; // e.g. 8000 or 16000
    private int mChannelConfig = AudioFormat.CHANNEL_IN_STEREO; // stereo
    private int mChannelCount = 2;
    private int mEncoding = AudioFormat.ENCODING_PCM_16BIT;

    public PcmToWavUtil() {
        this.mBufferSize = AudioRecord.getMinBufferSize(mSampleRate, mChannelConfig, mEncoding);
    }

    public PcmToWavUtil(int sampleRate, int channelConfig, int channelCount, int encoding) {
        this.mSampleRate = sampleRate;
        this.mChannelConfig = channelConfig;
        this.mChannelCount = channelCount;
        this.mEncoding = encoding;
        this.mBufferSize = AudioRecord.getMinBufferSize(mSampleRate, mChannelConfig, mEncoding);
    }

    public void pcmToWav(String inFilename, String outFilename) {
        long longSampleRate = mSampleRate;
        int channels = mChannelCount;
        long byteRate = 16L * mSampleRate * channels / 8;
        byte[] data = new byte[mBufferSize];
        // try-with-resources closes both streams even if copying fails
        try (FileInputStream in = new FileInputStream(inFilename);
             FileOutputStream out = new FileOutputStream(outFilename)) {
            long totalAudioLen = in.getChannel().size();
            // 44-byte header minus 8 bytes ("RIFF" tag + 4-byte size field)
            long totalDataLen = totalAudioLen + 36;
            writeWaveFileHeader(out, totalAudioLen, totalDataLen,
                    longSampleRate, channels, byteRate);
            int read;
            while ((read = in.read(data)) != -1) {
                out.write(data, 0, read); // write only the bytes actually read
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Writes the 44-byte WAV file header (16-bit PCM).
     */
    private void writeWaveFileHeader(FileOutputStream out, long totalAudioLen,
            long totalDataLen, long longSampleRate, int channels, long byteRate)
            throws IOException {
        byte[] header = new byte[44];
        header[0] = 'R'; // RIFF/WAVE header
        header[1] = 'I';
        header[2] = 'F';
        header[3] = 'F';
        header[4] = (byte) (totalDataLen & 0xff);
        header[5] = (byte) ((totalDataLen >> 8) & 0xff);
        header[6] = (byte) ((totalDataLen >> 16) & 0xff);
        header[7] = (byte) ((totalDataLen >> 24) & 0xff);
        header[8] = 'W'; // WAVE
        header[9] = 'A';
        header[10] = 'V';
        header[11] = 'E';
        header[12] = 'f'; // 'fmt ' chunk
        header[13] = 'm';
        header[14] = 't';
        header[15] = ' ';
        header[16] = 16; // 4 bytes: size of 'fmt ' chunk
        header[17] = 0;
        header[18] = 0;
        header[19] = 0;
        header[20] = 1; // format = 1 (PCM)
        header[21] = 0;
        header[22] = (byte) channels;
        header[23] = 0;
        header[24] = (byte) (longSampleRate & 0xff);
        header[25] = (byte) ((longSampleRate >> 8) & 0xff);
        header[26] = (byte) ((longSampleRate >> 16) & 0xff);
        header[27] = (byte) ((longSampleRate >> 24) & 0xff);
        header[28] = (byte) (byteRate & 0xff);
        header[29] = (byte) ((byteRate >> 8) & 0xff);
        header[30] = (byte) ((byteRate >> 16) & 0xff);
        header[31] = (byte) ((byteRate >> 24) & 0xff);
        header[32] = (byte) (channels * 16 / 8); // block align: bytes per frame
        header[33] = 0;
        header[34] = 16; // bits per sample
        header[35] = 0;
        header[36] = 'd'; // data chunk
        header[37] = 'a';
        header[38] = 't';
        header[39] = 'a';
        header[40] = (byte) (totalAudioLen & 0xff);
        header[41] = (byte) ((totalAudioLen >> 8) & 0xff);
        header[42] = (byte) ((totalAudioLen >> 16) & 0xff);
        header[43] = (byte) ((totalAudioLen >> 24) & 0xff);
        out.write(header, 0, 44);
    }
}
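As a cross-check of the header layout used by PcmToWavUtil, the same 44 bytes can be produced with a little-endian ByteBuffer, which makes the field order easier to read. This is only a sketch for 16-bit PCM; the class WavHeaderSketch and its build method are hypothetical names, not part of the library.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

public class WavHeaderSketch {
    // Builds the canonical 44-byte header for 16-bit PCM WAV data.
    public static byte[] build(long pcmBytes, int sampleRate, int channels) {
        int bitsPerSample = 16;
        int blockAlign = channels * bitsPerSample / 8; // bytes per frame
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        b.putInt((int) (pcmBytes + 36));      // file size minus the first 8 bytes
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        b.putInt(16);                         // size of the fmt chunk
        b.putShort((short) 1);                // audio format 1 = PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(sampleRate * blockAlign);    // byte rate
        b.putShort((short) blockAlign);
        b.putShort((short) bitsPerSample);
        b.put("data".getBytes(StandardCharsets.US_ASCII));
        b.putInt((int) pcmBytes);             // size of the audio data
        return b.array();
    }

    public static void main(String[] args) {
        byte[] h = build(1000, 44100, 2);
        System.out.println("header length: " + h.length);
    }
}
```

Note that the block-align field depends on the channel count, so a mono recording needs 2 there rather than 4.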

2. AudioChannel.java: the main recording wrapper class

package com.yy.audiochannaldemo;

import android.Manifest;
import android.app.Activity;
import android.content.Context;
import android.content.pm.PackageManager;
import android.media.AudioFormat;
import android.media.AudioRecord;
import android.media.MediaRecorder;
import android.util.Log;
import android.widget.Toast;

import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;

import org.jtransforms.fft.DoubleFFT_1D;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.text.SimpleDateFormat;
import java.util.Date;

public class AudioChannel {
    private int sampleRate;
    private int channelCount;
    private int channelConfig;
    private int minBufferSize;
    private byte[] buffer;
    private Thread recordThread;
    private AudioRecord audioRecord;
    private volatile boolean isRecoding; // written by the caller, read by the recording thread
    private SimpleDateFormat sdf;
    String filename;
    Context context;
    long startTime;
    private onResult onResult;
    private DoubleFFT_1D fft;

    public AudioChannel(int sampleRate, int channels, Context context) {
        this.sampleRate = sampleRate;
        this.context = context;
        channelCount = channels == 2 ? 2 : 1;
        channelConfig = channels == 2 ? AudioFormat.CHANNEL_IN_STEREO : AudioFormat.CHANNEL_IN_MONO;
        minBufferSize = AudioRecord.getMinBufferSize(sampleRate, channelConfig, AudioFormat.ENCODING_PCM_16BIT);
        Log.i("AudioChannel", "minBufferSize: " + minBufferSize);
        buffer = new byte[minBufferSize];
        sdf = new SimpleDateFormat("yyyy-MM-dd-HH:mm:ss");
        fft = new DoubleFFT_1D(minBufferSize / 2);
    }

    // RMS over 16-bit little-endian PCM samples (two bytes per sample)
    private double calculateRMS(byte[] audioBuffer) {
        double sum = 0.0;
        int samples = audioBuffer.length / 2;
        for (int i = 0; i + 1 < audioBuffer.length; i += 2) {
            short sample = (short) ((audioBuffer[i] & 0xFF) | (audioBuffer[i + 1] << 8));
            sum += (double) sample * sample;
        }
        return Math.sqrt(sum / samples);
    }

    // Converts an RMS value to decibels
    private double rmsToDB(double rms) {
        // Reference of 1.0 (one quantization step), so this is a relative level, not calibrated SPL
        double reference = 1.0;
        return 20 * Math.log10(rms / reference);
    }

    private double calculateHZ(byte[] buffer) {
        // Convert 16-bit little-endian PCM bytes to a double array for the FFT
        int n = buffer.length / 2;
        double[] fftBuffer = new double[n];
        for (int i = 0; i + 1 < buffer.length; i += 2) {
            short sample = (short) ((buffer[i] & 0xFF) | (buffer[i + 1] << 8));
            fftBuffer[i / 2] = sample;
        }
        // Run the FFT in place; for k >= 1, Re[k] is stored at index 2k and Im[k] at index 2k + 1
        fft.realForward(fftBuffer);
        double maxAmplitude = 0.0;
        int pitchIndex = 0;
        // Only search bins up to sampleRate / 2 of the interleaved stream
        for (int k = 1; k < n / (2 * channelCount); k++) {
            double re = fftBuffer[2 * k];
            double im = fftBuffer[2 * k + 1];
            double amplitude = re * re + im * im;
            if (amplitude > maxAmplitude) {
                maxAmplitude = amplitude;
                pitchIndex = k;
            }
        }
        // Bin k maps to k * (rate of the interleaved stream) / n; still only a rough estimate
        return (double) pitchIndex * sampleRate * channelCount / n;
    }

    void initPremission() {
        ActivityCompat.requestPermissions((Activity) context, new String[]{Manifest.permission.RECORD_AUDIO}, 169);
    }

    void initThread() {
        this.recordThread = new Thread() { // a fresh thread per recording; a terminated thread cannot be restarted
            @Override
            public void run() {
                audioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, sampleRate, channelConfig, AudioFormat.ENCODING_PCM_16BIT, minBufferSize);
                audioRecord.startRecording();
                FileOutputStream writer = null;
                Date current = new Date();
                String time = sdf.format(current);
                byte[] audioBuffer = new byte[minBufferSize]; // read buffer
                try {
                    filename = context.getCacheDir() + "/" + time + ".pcm"; // the cache directory needs no storage permission
                    writer = new FileOutputStream(filename, true);
                    // keep going while the thread is not interrupted and isRecoding is still true
                    while (!Thread.currentThread().isInterrupted() && isRecoding) {
                        if (audioRecord.getRecordingState() == AudioRecord.RECORDSTATE_RECORDING) {
                            int read = audioRecord.read(audioBuffer, 0, minBufferSize); // read audio data into the buffer
                            if (read <= 0) continue; // skip error codes and empty reads
                            double rms = calculateRMS(audioBuffer);
                            double db = rmsToDB(rms); // level in dB
                            double hz = calculateHZ(audioBuffer);
                            int seaconds = (int) ((System.currentTimeMillis() - startTime) / 1000);
                            if (isRecoding && onResult != null) {
                                ((Activity) context).runOnUiThread(new Runnable() {
                                    @Override
                                    public void run() {
                                        onResult.update(seaconds, db, hz); // report progress while still recording
                                    }
                                });
                            }
                            writer.write(audioBuffer, 0, read);
                        }
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                } finally {
                    audioRecord.stop();
                    audioRecord.release();
                    audioRecord = null;
                    if (writer != null) {
                        try {
                            writer.close();
                        } catch (IOException e) {
                            e.printStackTrace();
                        }
                    }
                }
                // convert with the same parameters that were used for recording
                new PcmToWavUtil(sampleRate, channelConfig, channelCount, AudioFormat.ENCODING_PCM_16BIT).pcmToWav(filename, filename.replace("pcm", "wav"));
            }
        };
    }

    public void startLive() { // start recording
        if (isRecoding) return;
        if (ContextCompat.checkSelfPermission(context, Manifest.permission.RECORD_AUDIO) ==
                PackageManager.PERMISSION_GRANTED) {
            initThread();
            isRecoding = true;
            startTime = System.currentTimeMillis(); // set before the thread starts so the first callback sees it
            recordThread.start();
        } else {
            initPremission(); // the request is asynchronous; call startLive() again once it is granted
            Toast.makeText(context, "No recording permission", Toast.LENGTH_LONG).show();
        }
    }

    public void stopLive(int mode) { // mode 0: finish normally; mode -1: cancel
        if (!isRecoding) return;
        isRecoding = false;
        try {
            recordThread.join(); // wait until the PCM file has been converted to WAV
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        new File(filename).delete(); // remove the intermediate PCM file
        switch (mode) {
            case 0:
                onResult.finish(filename.replace("pcm", "wav")); // after a normal stop the PCM has been converted to WAV
                break;
            case -1:
                new File(filename.replace("pcm", "wav")).delete();
                onResult.cancel(); // cancel callback
                break;
        }
    }

    public interface onResult { // three callbacks
        void update(int seaconds, double db, double hz);
        void finish(String filename);
        void cancel();
    }

    public void onResult(onResult onResult) { // register the callbacks
        this.onResult = onResult;
    }
}

III. Demo

Demo download:

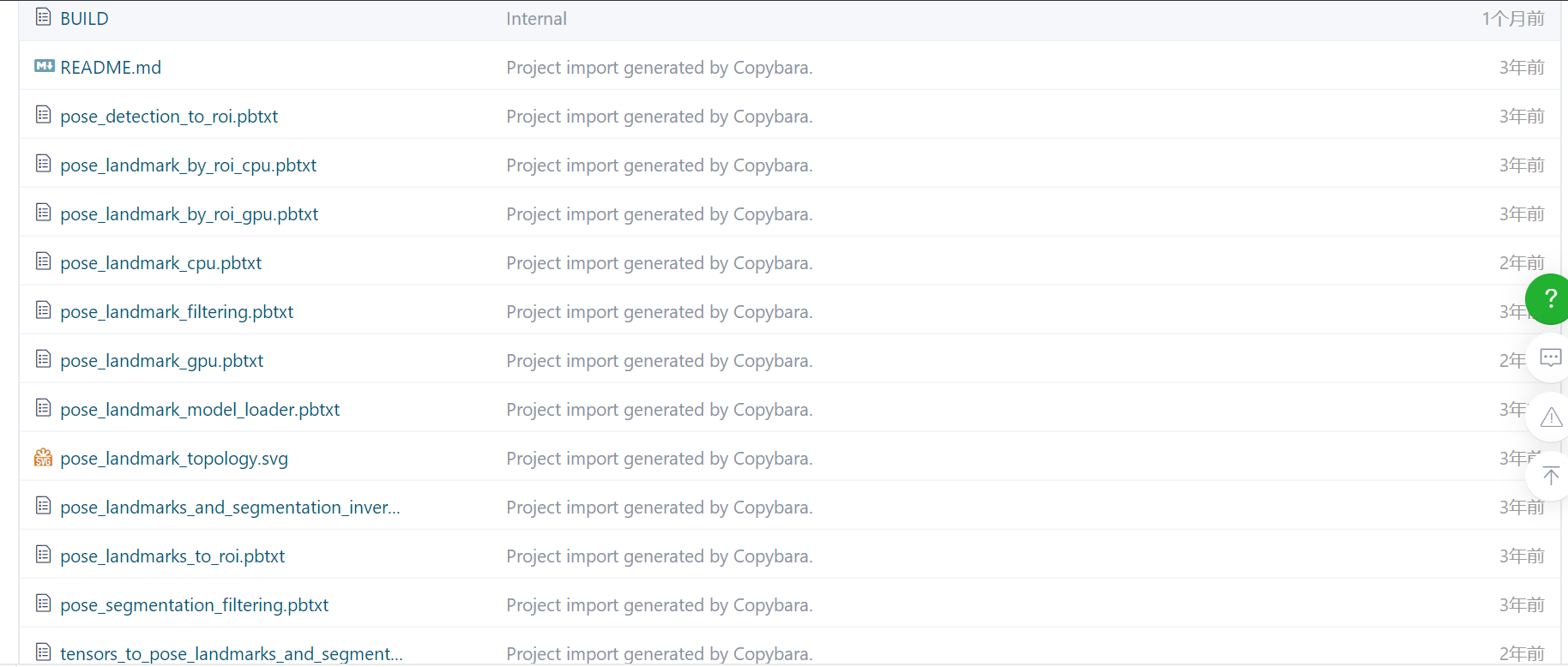

Gitee:

AudioChannel/demo · keyxh/AndroidUtils - 码云 - 开源中国 (gitee.com)

CSDN: 【免费】安卓开发:挑战每天发布一个封装类02-Wav录音封装类AudioChannel1.0资源资源-CSDN文库

The demo contains two Activities.

1. MainActivity: a simple demo that shows how to call the class

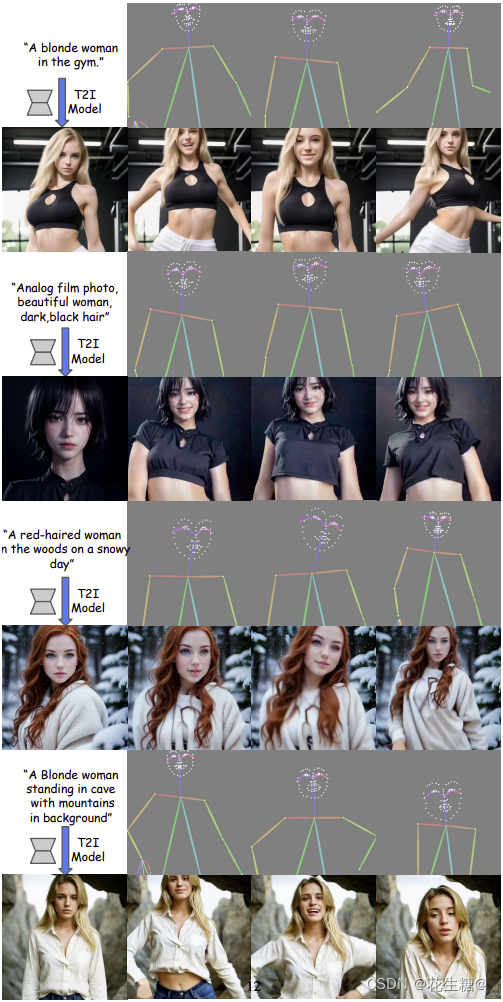

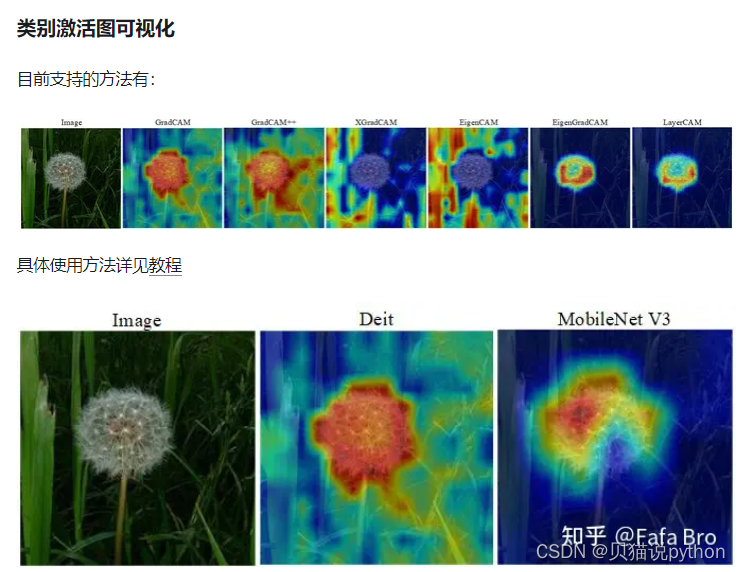

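MainActivity shows plain usage: it prints the callback values while recording and converts the audio to Base64 when recording ends. The screen and the logcat output are shown in the screenshot below. This demo has no user interaction.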
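The Base64 step itself is short. The sketch below uses java.util.Base64 and java.nio.file.Files, which on Android require API level 26 or higher; on older devices android.util.Base64 would be the alternative. The class WavBase64Sketch and its methods are hypothetical and are not taken from the demo project.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Base64;

public class WavBase64Sketch {
    // Encodes raw bytes (for example the contents of a WAV file) as a Base64 string.
    public static String encodeBytes(byte[] data) {
        return Base64.getEncoder().encodeToString(data);
    }

    // Reads the whole file into memory and encodes it; fine for short recordings.
    public static String encodeFile(String path) {
        try {
            return encodeBytes(Files.readAllBytes(Paths.get(path)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Reverses encodeBytes, e.g. on the server side.
    public static byte[] decode(String base64) {
        return Base64.getDecoder().decode(base64);
    }

    public static void main(String[] args) {
        byte[] riff = {'R', 'I', 'F', 'F'};
        System.out.println(encodeBytes(riff));
    }
}
```

In the finish callback, the filename argument would be the natural input for encodeFile.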

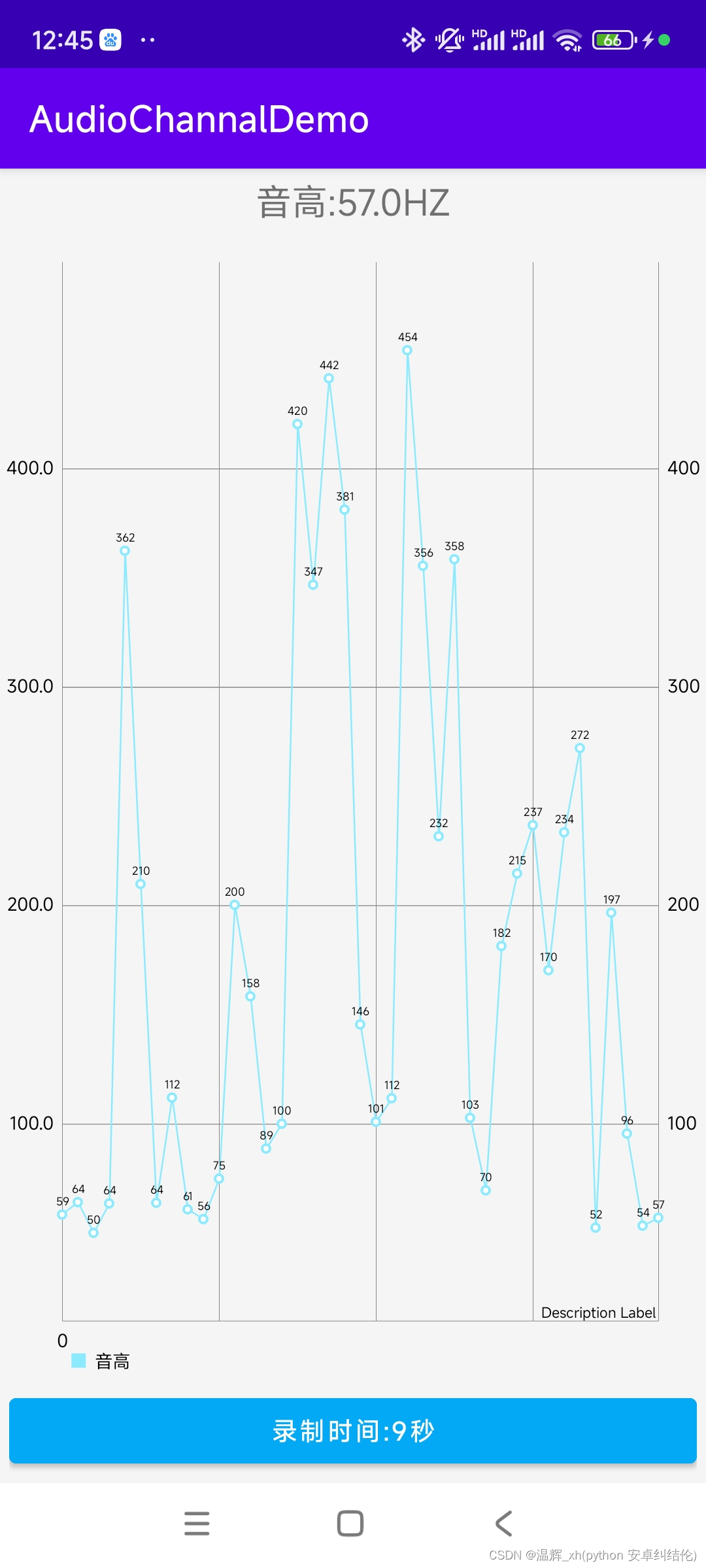

Second demo: PPActivity (pitch tester)


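I originally meant this to be a pitch tester, but I have not worked out the frequency-to-note conversion yet (for example, 440 Hz to A4). For now the demo stops short of that step; I will revise it and push the update to Gitee when I have time.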
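One common way to do the missing frequency-to-note conversion is the equal-temperament formula with A4 = 440 Hz, where the MIDI note number is 69 + 12 * log2(f / 440). The sketch below is an assumption about how that step could look, not code from the demo; NoteNameSketch and noteName are hypothetical names.

```java
public class NoteNameSketch {
    private static final String[] NAMES =
            {"C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"};

    // Maps a frequency to the nearest equal-tempered note, with A4 = 440 Hz as MIDI note 69.
    public static String noteName(double hz) {
        if (hz <= 0) {
            throw new IllegalArgumentException("frequency must be positive");
        }
        double semitonesFromA4 = 12 * (Math.log(hz / 440.0) / Math.log(2));
        int midi = (int) Math.round(69 + semitonesFromA4);
        int octave = Math.floorDiv(midi, 12) - 1; // MIDI note 60 is C4
        return NAMES[Math.floorMod(midi, 12)] + octave;
    }

    public static void main(String[] args) {
        System.out.println(noteName(440.0));
        System.out.println(noteName(261.63));
    }
}
```

Because the result is rounded to the nearest semitone, a slightly inaccurate Hz estimate still maps to the right note as long as the error stays below half a semitone.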

Written by 温辉 (Wen Hui) of 福州职业技术学校. Feel free to repost this to help more people, but please keep this attribution line.
