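Oracle Kubernetes Engine (OKE) provides a convenient and powerful Kubernetes service. One of its key features is the Cluster Autoscaler, which automatically adjusts the size of a cluster to match workload demand. This keeps resource utilization efficient and applications highly available.

This article explains how to configure and use the Cluster Autoscaler with OKE on Oracle Cloud. The result is a dynamic scaling strategy for your Kubernetes workloads that adapts to changing demand and uses resources efficiently.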

Happy New Year! Wishing everyone success, good health, happiness, and achievement in life and work in the coming Year of the Dragon.

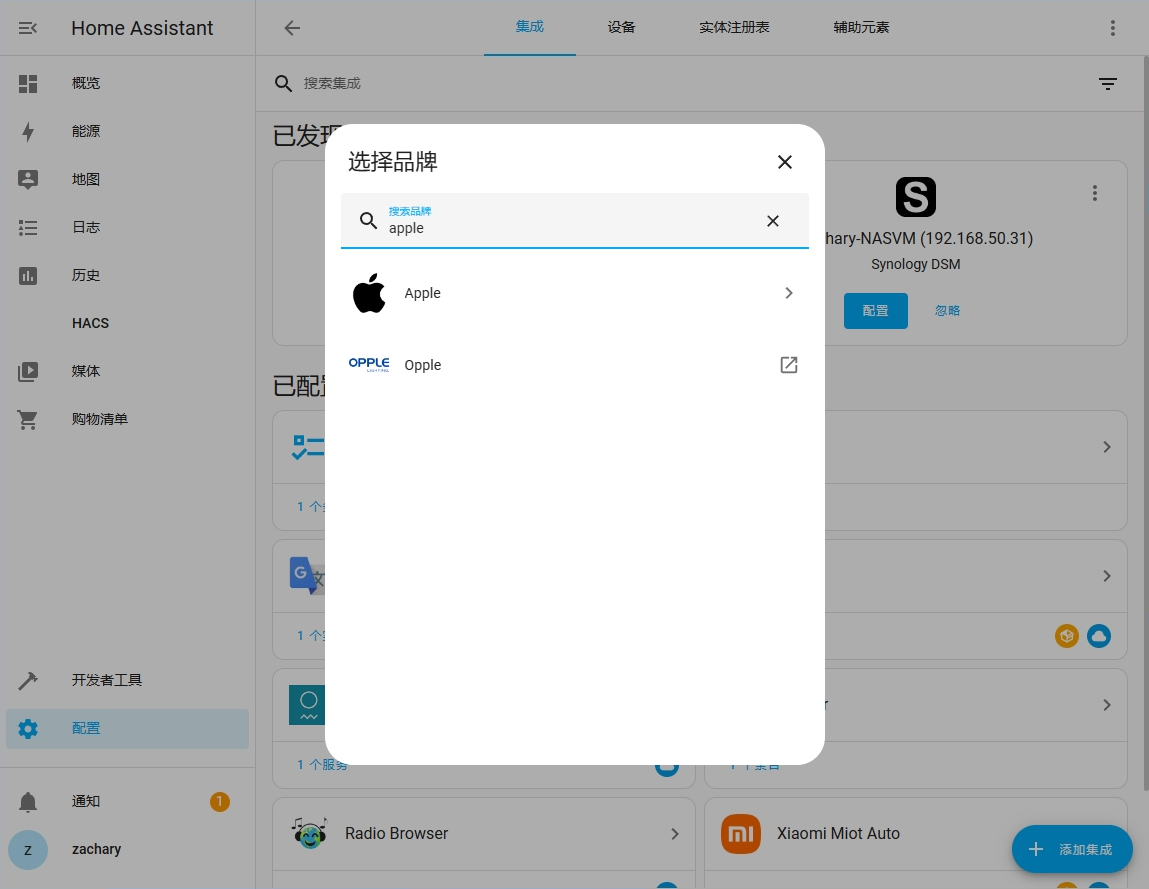

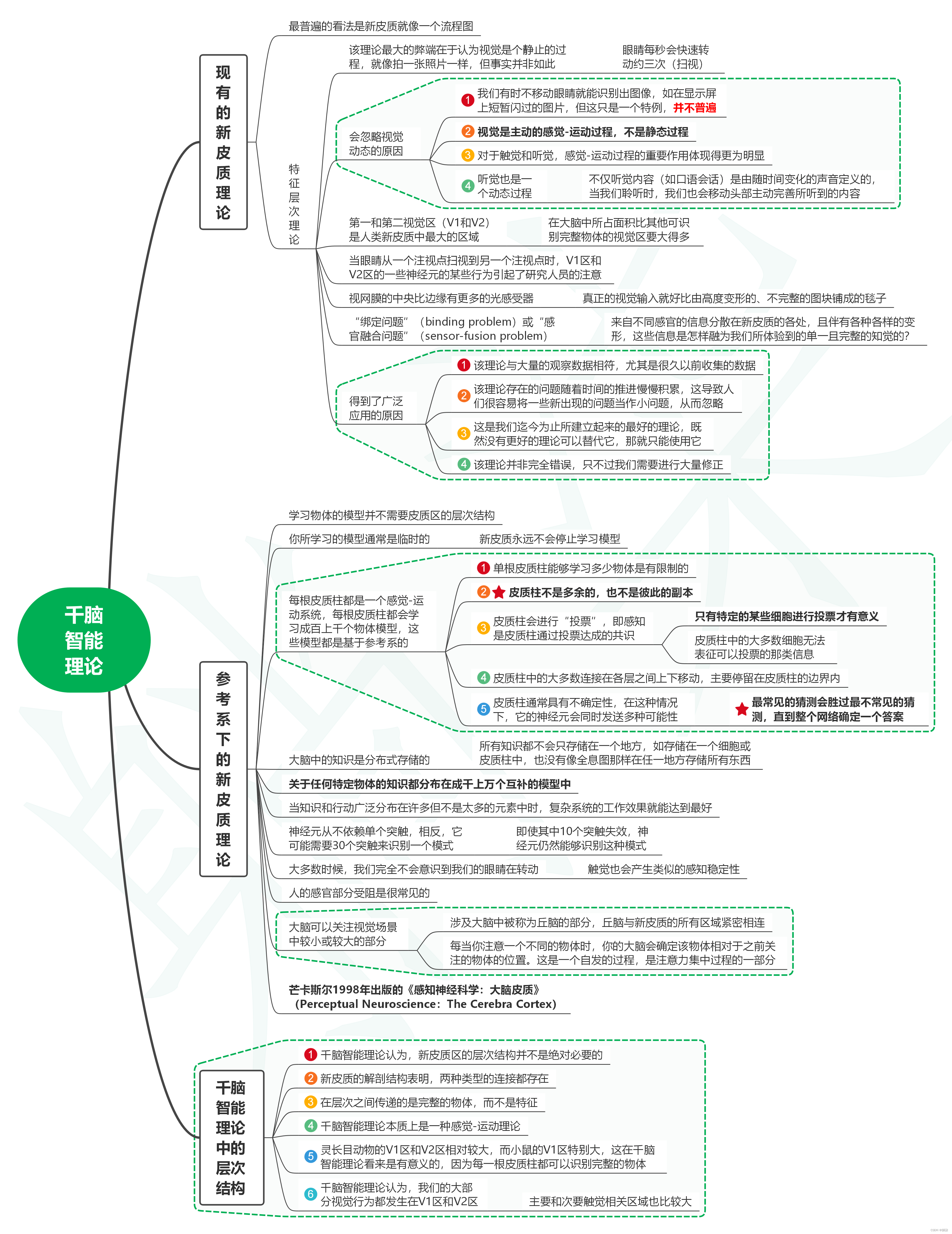

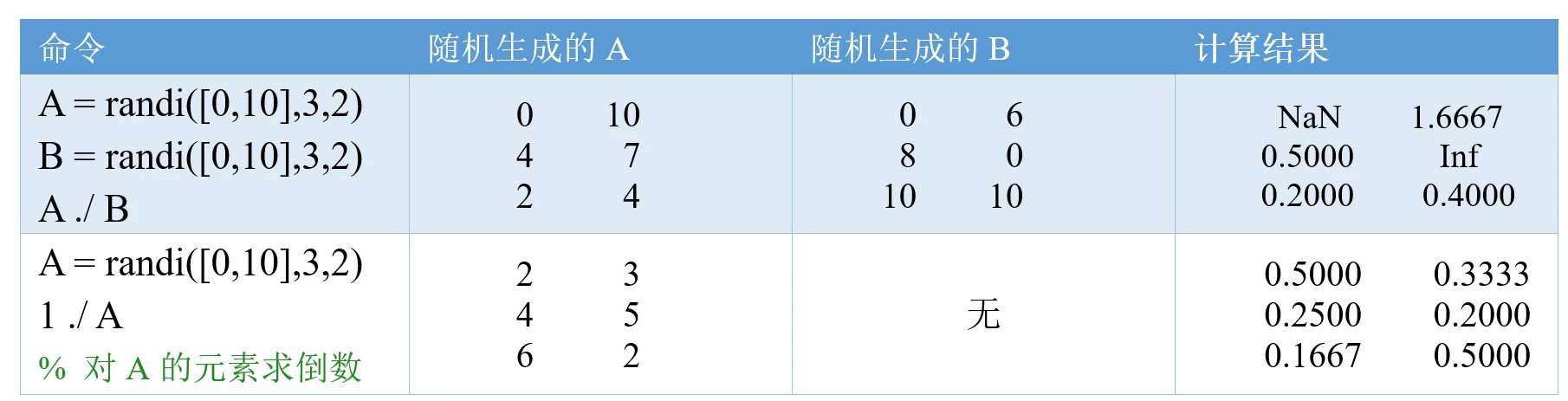

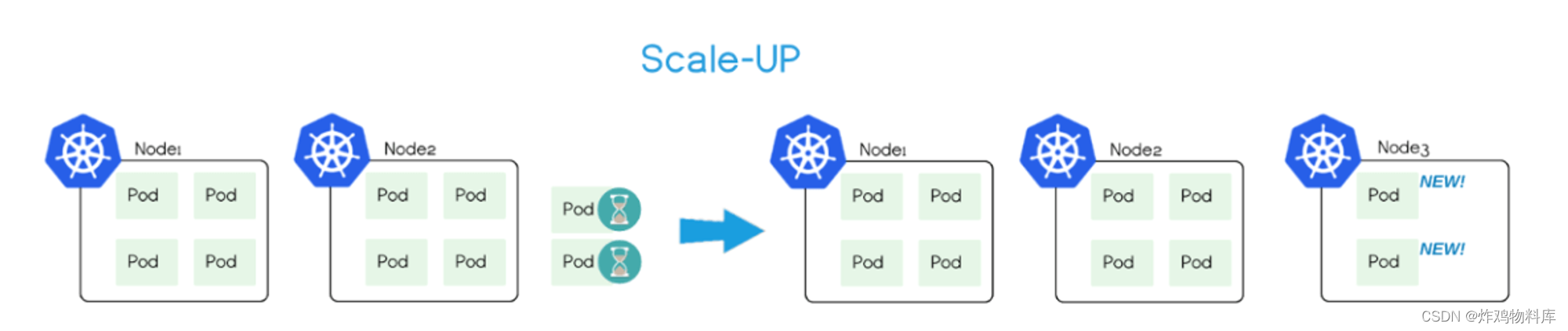

1 Cluster AutoScaler architecture diagrams

1.1 Scale-up architecture

1.2 Scale-down architecture

1.3 Best-practice architecture


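The current best practice for autoscaling OKE Kubernetes clusters is to combine the Cluster Autoscaler with the Horizontal Pod Autoscaler (HPA). The two work together as follows:

- HPA adjusts the number of Pod replicas according to load.
- When new Pods cannot be scheduled for lack of resources, the Cluster Autoscaler adds nodes.
- When nodes stay underutilized, the Cluster Autoscaler removes them.

Event notifications and alerts can also be integrated along the way to close the loop.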
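The HPA half of this pattern can be sketched with a manifest like the one below. It makes the following assumptions:

- metrics-server (or another resource-metrics provider) is installed in the cluster.
- The target Deployment, here a hypothetical `my-app`, sets CPU requests.
- The replica bounds and the 70% CPU target are illustrative values only, not OKE recommendations.

```yaml
# Hypothetical example: HPA for a Deployment named "my-app".
# HPA changes the replica count; when the new replicas cannot be scheduled,
# the Cluster Autoscaler adds nodes to the node pools it manages.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app                 # hypothetical Deployment name
  minReplicas: 2
  maxReplicas: 20
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # illustrative: 70% of the requested CPU
```

Keep `maxReplicas` consistent with the node pool maximums in the Autoscaler's `--nodes` flags. Otherwise HPA may create Pods that stay Pending once the pools reach their limit.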

2 OKE + Cluster AutoScaler: recommendations and caveats

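- Keep at least one node pool that is not managed by the Autoscaler, and use it to run the cluster's core containers.

- Run the Autoscaler with multiple replicas. If it runs as a single Pod, no scaling can take place while that Pod is unavailable.

- Make sure the maximum node count you specify does not exceed your tenancy's resource limits (for example CPU, memory, and number of nodes).

- We recommend using multiple node pools, each controlled independently by the Autoscaler.

- Design applications to tolerate, as far as possible, the disruption caused when the Cluster Autoscaler removes nodes.

- Do not manually manage node pools that are managed by the Cluster Autoscaler.

- After upgrading the OKE cluster's Kubernetes version, upgrade the Cluster Autoscaler manually. It is not upgraded automatically.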
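A common way to make an application tolerate node removal is a PodDisruptionBudget (PDB). When the Cluster Autoscaler drains a node during scale-down, it evicts Pods through the eviction API, which honors PDBs. It also will not remove a node if doing so would violate a PDB.

The sketch below assumes a hypothetical application whose Pods carry the label `app: my-app`. The value `minAvailable: 2` is illustrative.

```yaml
# Hypothetical example: keep at least 2 "my-app" Pods running while
# the Cluster Autoscaler drains nodes during scale-down.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: my-app-pdb
  namespace: default
spec:
  minAvailable: 2
  selector:
    matchLabels:
      app: my-app   # must match the labels on the application's Pods
```

Make sure the application runs more replicas than `minAvailable`. Otherwise none of its Pods can be evicted, and the nodes hosting them can never be scaled down.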

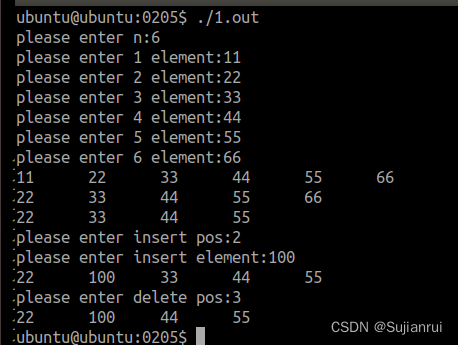

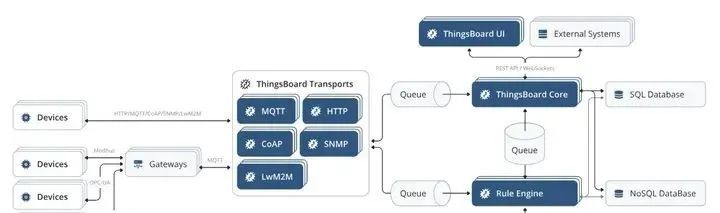
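The first recommendation in section 2 is to keep core components on a node pool the Autoscaler does not manage. A `nodeSelector` can pin Pods such as the Cluster Autoscaler itself to that pool.

This sketch makes two assumptions:

- The unmanaged pool was created with a hypothetical Kubernetes node label `pool: system`. OKE node pools can be given Kubernetes labels.
- The fragment is meant to be merged into the Autoscaler Deployment's manifest; it is not a complete manifest.

```yaml
# Hypothetical fragment to merge into the cluster-autoscaler Deployment:
# schedule its Pods only on nodes labeled pool=system, i.e. a node pool
# that is not listed in any --nodes flag.
spec:
  template:
    spec:
      nodeSelector:
        pool: system   # hypothetical label on the unmanaged node pool
```

Because these Pods run on nodes the Autoscaler never removes, a scale-down cannot evict the Autoscaler itself.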

3 Using the Cluster Autoscaler on OCI

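- Create a compartment-level dynamic group that allows the Cluster Autoscaler to manage node pool resources.

- Create a policy that allows the dynamic group to manage node pools.

- Copy or customize the Cluster Autoscaler configuration file.

- Deploy the Kubernetes Cluster Autoscaler manifest.

- Observe how scaling is managed.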

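3.1 Create a tenancy-level dynamic group

The dynamic group in this example is named `oracle-oke-cluster-autoscaler-dyn-grp`. Its matching rule is shown below; replace `<compartment id>` with the OCID of the compartment that holds the worker nodes.

```
ALL {instance.compartment.id = '<compartment id>'}
```

3.2 Create a policy to manage node pools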

Policy template:

```
Allow dynamic-group '<dynamic group name>' to manage cluster-node-pools in compartment '<compartment name>'
Allow dynamic-group '<dynamic group name>' to manage instance-family in compartment '<compartment name>'
Allow dynamic-group '<dynamic group name>' to use subnets in compartment '<compartment name>'
Allow dynamic-group '<dynamic group name>' to read virtual-network-family in compartment '<compartment name>'
Allow dynamic-group '<dynamic group name>' to use vnics in compartment '<compartment name>'
Allow dynamic-group '<dynamic group name>' to inspect compartments in compartment '<compartment name>'
```

The example below applies the template to the dynamic group above in the `oraclePOC` compartment. It additionally grants `manage instance-pools` and `manage instance-configurations`.

```
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to manage cluster-node-pools in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to manage instance-family in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to use subnets in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to read virtual-network-family in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to use vnics in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to inspect compartments in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to manage instance-pools in compartment oraclePOC
Allow dynamic-group oracle-oke-cluster-autoscaler-dyn-grp to manage instance-configurations in compartment oraclePOC
```

3.3 Build the Cluster Autoscaler manifest (official example)

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
  name: cluster-autoscaler
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
  - apiGroups: [""]
    resources: ["events", "endpoints"]
    verbs: ["create", "patch"]
  - apiGroups: [""]
    resources: ["pods/eviction"]
    verbs: ["create"]
  - apiGroups: [""]
    resources: ["pods/status"]
    verbs: ["update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    resourceNames: ["cluster-autoscaler"]
    verbs: ["get", "update"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["watch", "list", "get", "patch", "update"]
  - apiGroups: [""]
    resources:
      - "pods"
      - "services"
      - "replicationcontrollers"
      - "persistentvolumeclaims"
      - "persistentvolumes"
    verbs: ["watch", "list", "get"]
  - apiGroups: ["extensions"]
    resources: ["replicasets", "daemonsets"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["policy"]
    resources: ["poddisruptionbudgets"]
    verbs: ["watch", "list"]
  - apiGroups: ["apps"]
    resources: ["statefulsets", "replicasets", "daemonsets"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses", "csinodes"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["batch", "extensions"]
    resources: ["jobs"]
    verbs: ["get", "list", "watch", "patch"]
  - apiGroups: ["coordination.k8s.io"]
    resources: ["leases"]
    verbs: ["create"]
  - apiGroups: ["coordination.k8s.io"]
    resourceNames: ["cluster-autoscaler"]
    resources: ["leases"]
    verbs: ["get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["create","list","watch"]
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["cluster-autoscaler-status", "cluster-autoscaler-priority-expander"]
    verbs: ["delete", "get", "update", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-autoscaler
subjects:
  - kind: ServiceAccount
    name: cluster-autoscaler
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cluster-autoscaler
subjects:
  - kind: ServiceAccount
    name: cluster-autoscaler
    namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    app: cluster-autoscaler
spec:
  # Several replicas for availability; the Autoscaler uses leader election
  # (see the "leases" rules above), so only one replica is active at a time.
  replicas: 3
  selector:
    matchLabels:
      app: cluster-autoscaler
  template:
    metadata:
      labels:
        app: cluster-autoscaler
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '8085'
    spec:
      serviceAccountName: cluster-autoscaler
      containers:
        # Replace {{ image tag }} with the tag for your cluster's Kubernetes version (see 3.3.1)
        - image: iad.ocir.io/oracle/oci-cluster-autoscaler:{{ image tag }}
          name: cluster-autoscaler
          resources:
            limits:
              cpu: 100m
              memory: 300Mi
            requests:
              cpu: 100m
              memory: 300Mi
          command:
            - ./cluster-autoscaler
            - --v=4
            - --stderrthreshold=info
            - --cloud-provider=oci-oke
            - --max-node-provision-time=25m
            # One --nodes flag per managed node pool: <min>:<max>:<node pool OCID> (see 3.3.2)
            - --nodes=1:5:{{ node pool ocid 1 }}
            - --nodes=1:5:{{ node pool ocid 2 }}
            - --scale-down-delay-after-add=10m
            - --scale-down-unneeded-time=10m
            - --unremovable-node-recheck-timeout=5m
            - --balance-similar-node-groups
            - --balancing-ignore-label=displayName
            - --balancing-ignore-label=hostname
            - --balancing-ignore-label=internal_addr
            - --balancing-ignore-label=oci.oraclecloud.com/fault-domain
          imagePullPolicy: "Always"
          env:
            # Authenticate to OCI with instance principals (relies on the dynamic group and policies from 3.1 and 3.2)
            - name: OKE_USE_INSTANCE_PRINCIPAL
              value: "true"
            - name: OCI_SDK_APPEND_USER_AGENT
              value: "oci-oke-cluster-autoscaler"
```

3.3.1 Setting the image tag

Pick the image from the region closest to you, with a tag that matches your cluster's Kubernetes version, using the table below:

```yaml
- image: iad.ocir.io/oracle/oci-cluster-autoscaler:{{ image tag }}
# Example
- image: lhr.ocir.io/oracle/oci-cluster-autoscaler:1.24.0-5
```

| Image region | Kubernetes version | Image path |
|---|---|---|
| Germany Central (Frankfurt) | Kubernetes 1.25 | fra.ocir.io/oracle/oci-cluster-autoscaler:1.25.0-6 |
| Germany Central (Frankfurt) | Kubernetes 1.26 | fra.ocir.io/oracle/oci-cluster-autoscaler:1.26.2-11 |
| Germany Central (Frankfurt) | Kubernetes 1.27 | fra.ocir.io/oracle/oci-cluster-autoscaler:1.27.2-9 |
| Germany Central (Frankfurt) | Kubernetes 1.28 | fra.ocir.io/oracle/oci-cluster-autoscaler:1.28.0-5 |
| UK South (London) | Kubernetes 1.25 | lhr.ocir.io/oracle/oci-cluster-autoscaler:1.25.0-6 |
| UK South (London) | Kubernetes 1.26 | lhr.ocir.io/oracle/oci-cluster-autoscaler:1.26.2-11 |
| UK South (London) | Kubernetes 1.27 | lhr.ocir.io/oracle/oci-cluster-autoscaler:1.27.2-9 |
| UK South (London) | Kubernetes 1.28 | lhr.ocir.io/oracle/oci-cluster-autoscaler:1.28.0-5 |
| US East (Ashburn) | Kubernetes 1.25 | iad.ocir.io/oracle/oci-cluster-autoscaler:1.25.0-6 |
| US East (Ashburn) | Kubernetes 1.26 | iad.ocir.io/oracle/oci-cluster-autoscaler:1.26.2-11 |
| US East (Ashburn) | Kubernetes 1.27 | iad.ocir.io/oracle/oci-cluster-autoscaler:1.27.2-9 |
| US East (Ashburn) | Kubernetes 1.28 | iad.ocir.io/oracle/oci-cluster-autoscaler:1.28.0-5 |
| US West (Phoenix) | Kubernetes 1.25 | phx.ocir.io/oracle/oci-cluster-autoscaler:1.25.0-6 |
| US West (Phoenix) | Kubernetes 1.26 | phx.ocir.io/oracle/oci-cluster-autoscaler:1.26.2-11 |
| US West (Phoenix) | Kubernetes 1.27 | phx.ocir.io/oracle/oci-cluster-autoscaler:1.27.2-9 |
| US West (Phoenix) | Kubernetes 1.28 | phx.ocir.io/oracle/oci-cluster-autoscaler:1.28.0-5 |
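For example, take a cluster running Kubernetes 1.28 in Frankfurt. The container entry of the Deployment in 3.3 would reference the matching image path from the table. Only the `image` line changes; the other container fields stay as in the manifest, so this is a fragment rather than a complete manifest.

```yaml
# Fragment of the cluster-autoscaler container for Kubernetes 1.28 in Frankfurt
containers:
  - image: fra.ocir.io/oracle/oci-cluster-autoscaler:1.28.0-5
    name: cluster-autoscaler
    imagePullPolicy: "Always"
    # resources, command and env unchanged from the manifest in 3.3
```

When the cluster is upgraded to a new Kubernetes version, update this tag as well. As noted in section 2, the Autoscaler is not upgraded automatically.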

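3.3.2 Node settings

Each `--nodes` flag enables scaling for one node pool, identified by its OCID, within the given minimum and maximum node counts. The format is `--nodes=<min-nodes>:<max-nodes>:<nodepool-ocid>`, for example:

```yaml
- --nodes=1:5:{{ node pool ocid 1 }}
```

4 Verify scale-out and scale-in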

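4.1 Create an nginx Deployment for verification

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          resources:
            # The memory request is what makes extra replicas unschedulable
            # once existing nodes are full, which triggers a scale-up
            requests:
              memory: "500Mi"
```

4.2 Scale up the Deployment's Pod count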

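```bash
kubectl scale deployment nginx-deployment --replicas=100
```

With 100 replicas each requesting 500Mi, the Deployment requests 50,000 MiB (roughly 49 GiB) of memory in total. Pods that no longer fit on the existing nodes stay `Pending`. This prompts the Cluster Autoscaler to add nodes, up to the maximum set in `--nodes`.

4.3 Watch the Deployment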

```bash
kubectl get deployment nginx-deployment --watch
```

5 References

- Cluster AutoScaler architecture
- Cluster Autoscaler - Kubernetes Guide (Kubernetes指南)
- OCI OKE Cluster AutoScaler deployment
- Using the Kubernetes Cluster Autoscaler

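🚀 炸鸡物料库 🚀

🔍 Focus: IT technology, including news, hands-on practice, and experience in cloud computing, cloud native, DevOps, and AI.

🎯 Audience: developers, system administrators, and cloud computing practitioners looking for practical technical content.

🗓️ Update frequency: weekly, with valuable technical content in every post.

🤝 Interaction and feedback: readers are welcome to leave comments and ask questions at any time; we will respond and help.

💌 How to subscribe: scan the QR code or search for "炸鸡物料库" and follow so you don't miss a post.

🌐 Collaboration and sharing: if you have a technical talk or a collaboration in mind, feel free to contact us and let's grow together!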