Mobile

Image Segmentation DeepLabV3 on iOS

Original: pytorch.org/tutorials/beginner/deeplabv3_on_ios.html

Translator: 飞龙

License: CC BY-NC-SA 4.0

Author: Jeff Tang

Reviewer: Jeremiah Chung

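Introduction

Semantic image segmentation is a computer vision task that marks specific regions of an input image with semantic labels. The PyTorch semantic image segmentation DeepLabV3 model can label image regions with 20 semantic classes, including bicycle, bus, car, dog, and person (21 output classes once background is counted). Image segmentation models are useful in applications such as autonomous driving and scene understanding.

In this tutorial, we provide a step-by-step guide to preparing and running the PyTorch DeepLabV3 model on iOS, starting from a model you may want to use on iOS and ending with a complete iOS app that uses it. We also cover practical, general tips on how to check whether your next favorite pre-trained PyTorch model can run on iOS, and how to avoid pitfalls.

Note

Before going through this tutorial, you should check out PyTorch Mobile for iOS and give the PyTorch iOS HelloWorld example app a quick try. This tutorial goes beyond the image classification model, which is usually the first kind of model deployed on mobile. The complete code for this tutorial is available here.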

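Learning Objectives

In this tutorial, you will learn how to:

- Convert the DeepLabV3 model for iOS deployment.

- Get the output of the model for the example input image in Python and compare it to the output from the iOS app.

- Build a new iOS app, or reuse an iOS example app, to load the converted model.

- Prepare the input into the format the model expects and process the model output.

- Complete the UI, refactor, build, and run the app to see image segmentation in action.

Prerequisites

- PyTorch 1.6 or 1.7

- torchvision 0.7 or 0.8

- Xcode 11 or 12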

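Steps

1. Convert the DeepLabV3 model for iOS deployment

The first step in deploying a model on iOS is to convert it into the TorchScript format.

Note

Not all PyTorch models can be converted to TorchScript at this time, because a model definition may use language features that are not in TorchScript, which is a subset of Python. See the Script and Optimize Recipe for more details.

Simply run the script below to generate the scripted model deeplabv3_scripted.pt: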

import torch

# use deeplabv3_resnet50 instead of deeplabv3_resnet101 to reduce the model size

model = torch.hub.load('pytorch/vision:v0.8.0', 'deeplabv3_resnet50', pretrained=True)

model.eval()

scriptedm = torch.jit.script(model)

torch.jit.save(scriptedm, "deeplabv3_scripted.pt")

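The generated deeplabv3_scripted.pt model file should be about 168MB. Ideally, a model should also be quantized before being deployed in an iOS app, which significantly reduces its size and speeds up inference. For a general understanding of quantization, see the Quantization Recipe and the resource links there. A future tutorial or recipe will cover in detail how to correctly apply a quantization workflow called Post Training Static Quantization to the DeepLabV3 model.

2. Get example input and output of the model in Python

Now that we have a scripted PyTorch model, let's test it with some example inputs to make sure the model works correctly on iOS. First, let's write a Python script that uses the model to make inferences and examine the inputs and outputs. For this DeepLabV3 example, we can reuse the code from Step 1 and from the DeepLabV3 model hub site. Add the following code snippet to the code above: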

from PIL import Image

from torchvision import transforms

input_image = Image.open("deeplab.jpg")

preprocess = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

])

input_tensor = preprocess(input_image)

input_batch = input_tensor.unsqueeze(0)

with torch.no_grad():

output = model(input_batch)['out'][0]

print(input_batch.shape)

print(output.shape)

Download deeplab.jpg from here and run the script above to see the shapes of the input and output of the model:

torch.Size([1, 3, 400, 400])

torch.Size([21, 400, 400])

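So if you provide the same image input deeplab.jpg, of size 400x400, to the model running on iOS, the output of the model should have the size [21, 400, 400]. You should also print out at least the beginning of the actual input and output data, to compare in Step 4 below with the actual input and output of the model when it runs in the iOS app.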
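When comparing input values between Python and the app, it can help to know what a given pixel should turn into. The following is a minimal pure-Python sketch of the arithmetic performed by ToTensor followed by Normalize in the script above; the helper name normalize_pixel is hypothetical and is not part of torchvision.

```python
# Per-channel statistics passed to transforms.Normalize in the script above.
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Map one 8-bit RGB pixel to the three floats the model receives.

    Hypothetical helper for illustration only.
    """
    # ToTensor scales 0..255 to 0.0..1.0; Normalize applies (x - mean) / std.
    return [((value / 255.0) - m) / s for value, m, s in zip(rgb, MEAN, STD)]


white = normalize_pixel((255, 255, 255))
black = normalize_pixel((0, 0, 0))
print([round(v, 4) for v in white])
print([round(v, 4) for v in black])
```

If the first few input floats seen in the debugger do not fall in the range spanned by these two extremes, the preprocessing on the device side probably differs from the Python script.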

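3. Build a new iOS app or reuse an example app and load the model

First, follow Step 3 of the Model Preparation for iOS recipe to use our model in an Xcode project with PyTorch Mobile enabled. Because both the DeepLabV3 model used in this tutorial and the MobileNet v2 model used in the PyTorch Hello World iOS example are computer vision models, you may choose to start with the HelloWorld example repo as a template, reusing the code that loads the model and processes the input and output.

Now let's add deeplabv3_scripted.pt and deeplab.jpg used in Step 2 to the Xcode project and modify ViewController.swift to resemble: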

class ViewController: UIViewController {

var image = UIImage(named: "deeplab.jpg")!

override func viewDidLoad() {

super.viewDidLoad()

}

private lazy var module: TorchModule = {

if let filePath = Bundle.main.path(forResource: "deeplabv3_scripted",

ofType: "pt"),

let module = TorchModule(fileAtPath: filePath) {

return module

} else {

fatalError("Can't load the model file!")

}

}()

}

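Then set a breakpoint at the line return module, and build and run the app. The app should stop at the breakpoint, meaning that the scripted model has been loaded successfully on iOS.

4. Process the model input and output for model inference

After the model loads in the previous step, let's verify that it works with the expected input and can generate the expected output. Because the model input for DeepLabV3 is an image, the same as for MobileNet v2 in the Hello World example, we will reuse some of the code in the TorchModule.mm file from Hello World for input processing. Replace the predictImage method implementation in TorchModule.mm with the following code: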

- (unsigned char*)predictImage:(void*)imageBuffer {

// 1. the example deeplab.jpg size is 400x400 and there are 21 semantic classes

const int WIDTH = 400;

const int HEIGHT = 400;

const int CLASSNUM = 21;

at::Tensor tensor = torch::from_blob(imageBuffer, {1, 3, WIDTH, HEIGHT}, at::kFloat);

torch::autograd::AutoGradMode guard(false);

at::AutoNonVariableTypeMode non_var_type_mode(true);

// 2. convert the input tensor to an NSMutableArray for debugging

float* floatInput = tensor.data_ptr<float>();

if (!floatInput) {

return nil;

}

NSMutableArray* inputs = [[NSMutableArray alloc] init];

for (int i = 0; i < 3 * WIDTH * HEIGHT; i++) {

[inputs addObject:@(floatInput[i])];

}

// 3. the output of the model is a dictionary of string and tensor, as

// specified at https://pytorch.org/hub/pytorch_vision_deeplabv3_resnet101

auto outputDict = _impl.forward({tensor}).toGenericDict();

// 4. convert the output to another NSMutableArray for easy debugging

auto outputTensor = outputDict.at("out").toTensor();

float* floatBuffer = outputTensor.data_ptr<float>();

if (!floatBuffer) {

return nil;

}

NSMutableArray* results = [[NSMutableArray alloc] init];

for (int i = 0; i < CLASSNUM * WIDTH * HEIGHT; i++) {

[results addObject:@(floatBuffer[i])];

}

return nil;

}

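Note

The model output of DeepLabV3 is a dictionary, so we use toGenericDict to extract the result correctly. For other models, the output may also be a single tensor or a tuple of tensors, among other things.

With the code changes shown above, you can set breakpoints after the two for loops that populate inputs and results, and compare them with the model input and output data you saw in Step 2 to check that they match. For the same input, the model should produce the same output on iOS and in Python.

All we have done so far is confirm that the model of interest can be scripted and run correctly in our iOS app as in Python. The steps taken so far for using a model in an iOS app consume the majority of app development time, much as data preprocessing is the heaviest lift in a typical machine learning project.

5. Complete the UI, refactor, build and run the app

Now we are ready to complete the app and the UI to actually see the processed result as a new image. The output processing code should look like this, added to the end of the Step 4 code snippet in TorchModule.mm. Remember to first remove the line return nil; that was temporarily put there to make the code build and run: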

// see the 20 semantic classes link in Introduction

const int DOG = 12;

const int PERSON = 15;

const int SHEEP = 17;

NSMutableData* data = [NSMutableData dataWithLength:

sizeof(unsigned char) * 3 * WIDTH * HEIGHT];

unsigned char* buffer = (unsigned char*)[data mutableBytes];

// go through each element in the output of size [WIDTH, HEIGHT] and

// set different color for different classnum

for (int j = 0; j < WIDTH; j++) {

for (int k = 0; k < HEIGHT; k++) {

// maxi: the index of the 21 CLASSNUM with the max probability

int maxi = 0, maxj = 0, maxk = 0;

float maxnum = -100000.0;

for (int i = 0; i < CLASSNUM; i++) {

if ([results[i * (WIDTH * HEIGHT) + j * WIDTH + k] floatValue] > maxnum) {

maxnum = [results[i * (WIDTH * HEIGHT) + j * WIDTH + k] floatValue];

maxi = i; maxj = j; maxk = k;

}

}

int n = 3 * (maxj * WIDTH + maxk);

// color coding for person (red), dog (green), sheep (blue)

// black color for background and other classes

buffer[n] = 0; buffer[n+1] = 0; buffer[n+2] = 0;

if (maxi == PERSON) buffer[n] = 255;

else if (maxi == DOG) buffer[n+1] = 255;

else if (maxi == SHEEP) buffer[n+2] = 255;

}

}

return buffer;

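The implementation here is based on the understanding that the DeepLabV3 model outputs a tensor of size [21, width, height] for an input image of width*height. Each element in the width*height output array is a value between 0 and 20 (for a total of 21 semantic labels, as described in the Introduction), and that value is used to set a specific color. Color coding of the segmentation here is based on the class with the highest probability, and you can extend the color coding to all classes in your own dataset.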
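The index arithmetic in the loop above is easier to check on a toy example. The following is a minimal pure-Python sketch of the same per-pixel argmax over a flat score list laid out as [class][row][col]; the function name argmax_classes and the toy scores are made up for illustration.

```python
def argmax_classes(scores, num_classes, height, width):
    """Return a height x width grid holding the winning class index per pixel.

    `scores` is a flat list laid out as [class][row][col], like the
    flattened output tensor in the tutorial code. Hypothetical helper.
    """
    plane = height * width  # number of values per class
    grid = []
    for row in range(height):
        line = []
        for col in range(width):
            # Pick the class whose score at this pixel is largest.
            best = max(
                range(num_classes),
                key=lambda c: scores[c * plane + row * width + col],
            )
            line.append(best)
        grid.append(line)
    return grid


# Toy data: 3 classes on a 2x2 image (values are made up).
toy = [
    0.9, 0.1, 0.2, 0.1,  # class 0
    0.0, 0.8, 0.1, 0.2,  # class 1
    0.1, 0.1, 0.7, 0.9,  # class 2
]
labels = argmax_classes(toy, num_classes=3, height=2, width=2)
print(labels)
```

Each entry of labels plays the role of maxi in the tutorial code, which is then mapped to a color.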

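After the output processing, you will also need to call a helper function to convert the RGB buffer to a UIImage instance to be shown on UIImageView. You can refer to the example code convertRGBBufferToUIImage defined in UIImageHelper.mm in the code repo.

The UI for this app is also similar to that for Hello World, except that you do not need the UITextView to show the image classification result. You can also add two buttons, Segment and Restart, as shown in the code repo, to run the model inference and to show the original image again after the segmentation result is shown.

The last step before we can run the app is to connect all the pieces together. Modify the ViewController.swift file to use predictImage, which is refactored and renamed to segmentImage in the repo, along with the helper function you built, as shown in the example code in ViewController.swift in the repo. Connect the buttons to the actions and you should be good to go.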

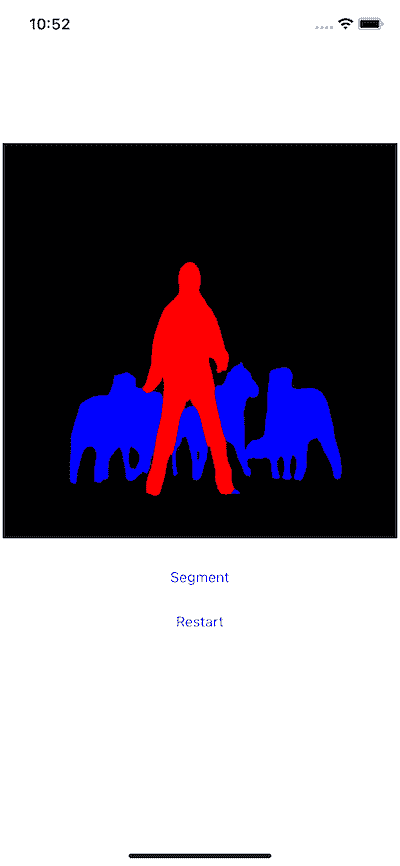

现在当您在 iOS 模拟器或实际 iOS 设备上运行应用程序时,您将看到以下屏幕:

总结

在本教程中,我们描述了将预训练的 PyTorch DeepLabV3 模型转换为 iOS 所需的步骤,以及如何确保模型可以成功在 iOS 上运行。我们的重点是帮助您了解确认模型确实可以在 iOS 上运行的过程。完整的代码存储库可在此处找到。

更高级的主题,如量化和在 iOS 上使用迁移学习模型或自己的模型,将很快在未来的演示应用程序和教程中介绍。

Learn More

- PyTorch Mobile site

- DeepLabV3 model

- DeepLabV3 paper

Image Segmentation DeepLabV3 on Android

Original: pytorch.org/tutorials/beginner/deeplabv3_on_android.html

Translator: 飞龙

License: CC BY-NC-SA 4.0

Author: Jeff Tang

Reviewer: Jeremiah Chung

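Introduction

Semantic image segmentation is a computer vision task that marks specific regions of an input image with semantic labels. The PyTorch semantic image segmentation DeepLabV3 model can label image regions with 20 semantic classes, for example bicycle, bus, car, dog, and person (21 output classes once background is counted). Image segmentation models are useful in applications such as autonomous driving and scene understanding.

In this tutorial, we provide a step-by-step guide to preparing and running the PyTorch DeepLabV3 model on Android, starting from a model you may want to use on Android and ending with a complete Android app that uses it. We also cover practical, general tips on how to check whether your next favorite pre-trained PyTorch model can run on Android, and how to avoid pitfalls.

Note

Before going through this tutorial, you should check out PyTorch Mobile for Android and give the PyTorch Android Hello World example app a quick try. This tutorial goes beyond the image classification model, which is usually the first kind of model deployed on mobile. The complete code for this tutorial is available here.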

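Learning Objectives

In this tutorial, you will learn how to:

- Convert the DeepLabV3 model for Android deployment.

- Get the output of the model for the example input image in Python and compare it to the output from the Android app.

- Build a new Android app, or reuse an Android example app, to load the converted model.

- Prepare the input into the format the model expects and process the model output.

- Complete the UI, refactor, build, and run the app to see image segmentation in action.

Prerequisites

- PyTorch 1.6 or 1.7

- torchvision 0.7 or 0.8

- Android Studio 3.5.1 or above with NDK installed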

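Steps

1. Convert the DeepLabV3 model for Android deployment

The first step in deploying a model on Android is to convert it into the TorchScript format.

Note

Not all PyTorch models can be converted to TorchScript at this time, because a model definition may use language features that are not in TorchScript, which is a subset of Python. See the Script and Optimize Recipe for more details.

Simply run the script below to generate the scripted model deeplabv3_scripted.pt: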

import torch

# use deeplabv3_resnet50 instead of resnet101 to reduce the model size

model = torch.hub.load('pytorch/vision:v0.7.0', 'deeplabv3_resnet50', pretrained=True)

model.eval()

scriptedm = torch.jit.script(model)

torch.jit.save(scriptedm, "deeplabv3_scripted.pt")

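The generated deeplabv3_scripted.pt model file should be about 168MB. Ideally, a model should also be quantized before being deployed in an Android app, which significantly reduces its size and speeds up inference. For a general understanding of quantization, see the Quantization Recipe and the resource links there. A future tutorial or recipe will cover in detail how to correctly apply a quantization workflow called Post Training Static Quantization to the DeepLabV3 model.

2. Get example input and output of the model in Python

Now that we have a scripted PyTorch model, let's test it with some example inputs to make sure the model works correctly on Android. First, let's write a Python script that uses the model to make inferences and examine the inputs and outputs. For this DeepLabV3 example, we can reuse the code from Step 1 and from the DeepLabV3 model hub site. Add the following code snippet to the code above: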

from PIL import Image

from torchvision import transforms

input_image = Image.open("deeplab.jpg")

preprocess = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

])

input_tensor = preprocess(input_image)

input_batch = input_tensor.unsqueeze(0)

with torch.no_grad():

output = model(input_batch)['out'][0]

print(input_batch.shape)

print(output.shape)

Download deeplab.jpg from here, then run the script above to see the shapes of the input and output of the model:

torch.Size([1, 3, 400, 400])

torch.Size([21, 400, 400])

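So if you provide the same image input deeplab.jpg, of size 400x400, to the model on Android, the output of the model should have the size [21, 400, 400]. You should also print out at least the beginning of the actual input and output data, to compare in Step 4 below with the actual input and output of the model when it runs in the Android app.

3. Build a new Android app or reuse an example app and load the model

First, follow Step 3 of the Model Preparation for Android recipe to use our model in an Android Studio project with PyTorch Mobile enabled. Because both DeepLabV3 used in this tutorial and MobileNet v2 used in the PyTorch Hello World Android example are computer vision models, you can also get the Hello World example repo to make it easier to modify the code that loads the model and processes the input and output. The main goal in this step and in Step 4 is to make sure the model deeplabv3_scripted.pt generated in Step 1 can indeed work correctly on Android.

Now let's add deeplabv3_scripted.pt and deeplab.jpg used in Step 2 to the Android Studio project and modify the onCreate method in MainActivity to resemble: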

Module module = null;

try {

module = Module.load(assetFilePath(this, "deeplabv3_scripted.pt"));

} catch (IOException e) {

Log.e("ImageSegmentation", "Error loading model!", e);

finish();

}

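Then set a breakpoint at the line finish(), and build and run the app. If the app does not stop at the breakpoint, the scripted model from Step 1 has been loaded successfully on Android.

4. Process the model input and output for model inference

After the model loads in the previous step, let's verify that it works with the expected input and can generate the expected output. Because the model input for DeepLabV3 is an image, the same as for MobileNet v2 in the Hello World example, we will reuse some of the code in the MainActivity.java file from Hello World for input processing. Replace the code snippet between line 50 and line 73 in MainActivity.java with the following code: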

final Tensor inputTensor = TensorImageUtils.bitmapToFloat32Tensor(bitmap,

TensorImageUtils.TORCHVISION_NORM_MEAN_RGB,

TensorImageUtils.TORCHVISION_NORM_STD_RGB);

final float[] inputs = inputTensor.getDataAsFloatArray();

Map<String, IValue> outTensors =

module.forward(IValue.from(inputTensor)).toDictStringKey();

// the key "out" of the output tensor contains the semantic masks

// see https://pytorch.org/hub/pytorch_vision_deeplabv3_resnet101

final Tensor outputTensor = outTensors.get("out").toTensor();

final float[] outputs = outputTensor.getDataAsFloatArray();

int width = bitmap.getWidth();

int height = bitmap.getHeight();

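Note

The model output of DeepLabV3 is a dictionary, so we use toDictStringKey to extract the result correctly. For other models, the output may also be a single tensor or a tuple of tensors, among other things.

With the code changes shown above, you can set breakpoints after final float[] inputs and final float[] outputs, which populate the input tensor and output tensor data into float arrays for easy debugging. Run the app and, when it stops at the breakpoints, compare the numbers in inputs and outputs with the model input and output data you saw in Step 2 to check that they match. For the same input to the model running on Android and in Python, you should get the same output.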
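Comparing floats by eye in a debugger is error-prone, so one option is to paste the first few values from both sides into a small script. The following is a minimal pure-Python sketch; the function name first_mismatch, the tolerances, and the sample numbers are all assumptions for illustration, not values from the tutorial.

```python
import math


def first_mismatch(expected, actual, rel_tol=1e-4, abs_tol=1e-5):
    """Return the index of the first value that differs, or None if all match.

    Hypothetical helper; the tolerances are arbitrary defaults.
    """
    if len(expected) != len(actual):
        raise ValueError("sequences must have the same length")
    for i, (e, a) in enumerate(zip(expected, actual)):
        if not math.isclose(e, a, rel_tol=rel_tol, abs_tol=abs_tol):
            return i
    return None


python_values = [2.2489, 2.2489, 2.2318]    # made-up numbers
device_values = [2.24890, 2.24891, 2.2318]  # made-up numbers
print(first_mismatch(python_values, device_values))
```

Exact equality is usually too strict here, because floating-point results can differ slightly between platforms; a small tolerance keeps the comparison meaningful.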

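Warning

Due to floating-point implementation issues in some Android emulators, you may see a different model output for the same image input when running on an emulator. It is therefore best to test the app on a real Android device.

All we have done so far is confirm that the model of interest can be scripted and run correctly in our Android app as in Python. The steps taken so far for using a model in an Android app consume the majority of app development time, much as data preprocessing is the heaviest lift in a typical machine learning project.

5. Complete the UI, refactor, build and run the app

Now we are ready to complete the app and the UI to actually see the processed result as a new image. The output processing code should look like this, added to the end of the Step 4 code snippet: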

int[] intValues = new int[width * height];

// go through each element in the output of size [WIDTH, HEIGHT] and

// set different color for different classnum

for (int j = 0; j < width; j++) {

for (int k = 0; k < height; k++) {

// maxi: the index of the 21 CLASSNUM with the max probability

int maxi = 0, maxj = 0, maxk = 0;

double maxnum = -100000.0;

for (int i=0; i < CLASSNUM; i++) {

if (outputs[i*(width*height) + j*width + k] > maxnum) {

maxnum = outputs[i*(width*height) + j*width + k];

maxi = i; maxj = j; maxk= k;

}

}

// color coding for person (red), dog (green), sheep (blue)

// black color for background and other classes

if (maxi == PERSON)

intValues[maxj*width + maxk] = 0xFFFF0000; // red

else if (maxi == DOG)

intValues[maxj*width + maxk] = 0xFF00FF00; // green

else if (maxi == SHEEP)

intValues[maxj*width + maxk] = 0xFF0000FF; // blue

else

intValues[maxj*width + maxk] = 0xFF000000; // black

}

}

The constants used in the code above are defined at the beginning of the MainActivity class:

private static final int CLASSNUM = 21;

private static final int DOG = 12;

private static final int PERSON = 15;

private static final int SHEEP = 17;

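The implementation here is based on the understanding that the DeepLabV3 model outputs a tensor of size [21, width, height] for an input image of width*height. Each element in the width*height output array is a value between 0 and 20 (for a total of 21 semantic labels, as described in the Introduction), and that value is used to set a specific color. Color coding of the segmentation here is based on the class with the highest probability, and you can extend the color coding to all classes in your own dataset.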
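To extend the color coding to more classes, a lookup table can replace the if/else chain. The following is a minimal pure-Python sketch of packing channels into the 0xAARRGGBB layout used by the constants in the Java code above; the names argb, PALETTE, and color_for are hypothetical. Note that Python integers are unbounded, whereas the same values stored in a Java int appear as negative numbers.

```python
def argb(red, green, blue, alpha=255):
    """Pack four 8-bit channels into one 0xAARRGGBB integer."""
    return (alpha << 24) | (red << 16) | (green << 8) | blue


# Class indices taken from the constants defined in MainActivity above.
DOG, PERSON, SHEEP = 12, 15, 17

# Hypothetical palette; add one entry per class you want to highlight.
PALETTE = {
    PERSON: argb(255, 0, 0),  # red
    DOG: argb(0, 255, 0),     # green
    SHEEP: argb(0, 0, 255),   # blue
}
BLACK = argb(0, 0, 0)


def color_for(class_index):
    """Return the packed color for a class, black when it has no entry."""
    return PALETTE.get(class_index, BLACK)


print(hex(color_for(PERSON)), hex(color_for(0)))
```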

After the output processing, you will also need to call the code below to render the RGB intValues array to a bitmap instance outputBitmap before displaying it on an ImageView:

Bitmap bmpSegmentation = Bitmap.createScaledBitmap(bitmap, width, height, true);

Bitmap outputBitmap = bmpSegmentation.copy(bmpSegmentation.getConfig(), true);

outputBitmap.setPixels(intValues, 0, outputBitmap.getWidth(), 0, 0,

outputBitmap.getWidth(), outputBitmap.getHeight());

imageView.setImageBitmap(outputBitmap);

这个应用程序的用户界面也类似于 Hello World,只是您不需要 TextView 来显示图像分类结果。您还可以添加两个按钮 Segment 和 Restart,如代码存储库中所示,以运行模型推断并在显示分割结果后显示原始图像。

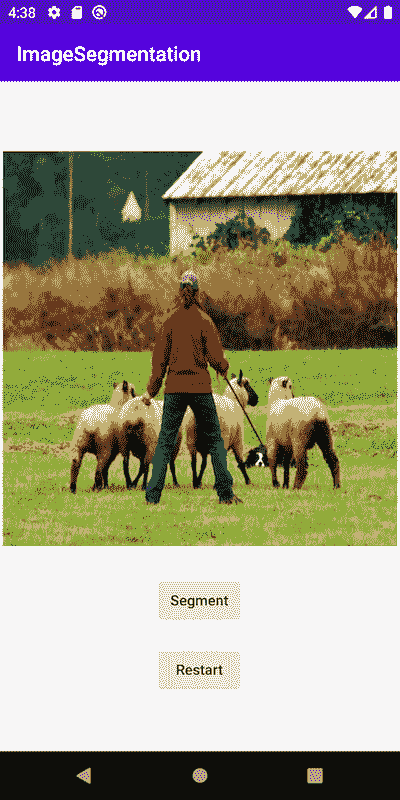

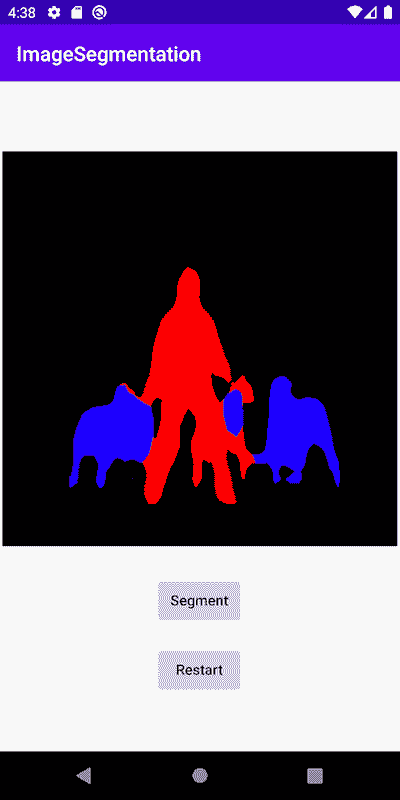

现在,当您在 Android 模拟器上运行应用程序,或者最好是在实际设备上运行时,您将看到以下屏幕:

总结

在本教程中,我们描述了将预训练的 PyTorch DeepLabV3 模型转换为 Android 所需的步骤,以及如何确保模型可以在 Android 上成功运行。我们的重点是帮助您了解确认模型确实可以在 Android 上运行的过程。完整的代码存储库可在此处找到。

更高级的主题,如量化和通过迁移学习或在 Android 上使用自己的模型,将很快在未来的演示应用程序和教程中进行介绍。

Learn More

- PyTorch Mobile site

- DeepLabV3 model

- DeepLabV3 paper

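Recommendation Systems

Introduction to TorchRec

Original: pytorch.org/tutorials/intermediate/torchrec_tutorial.html

Translator: 飞龙

License: CC BY-NC-SA 4.0

Tip

To get the most out of this tutorial, we suggest using this Colab version, which lets you experiment with the information presented below.

Follow along with the video below or on YouTube.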

www.youtube.com/embed/cjgj41dvSeQ

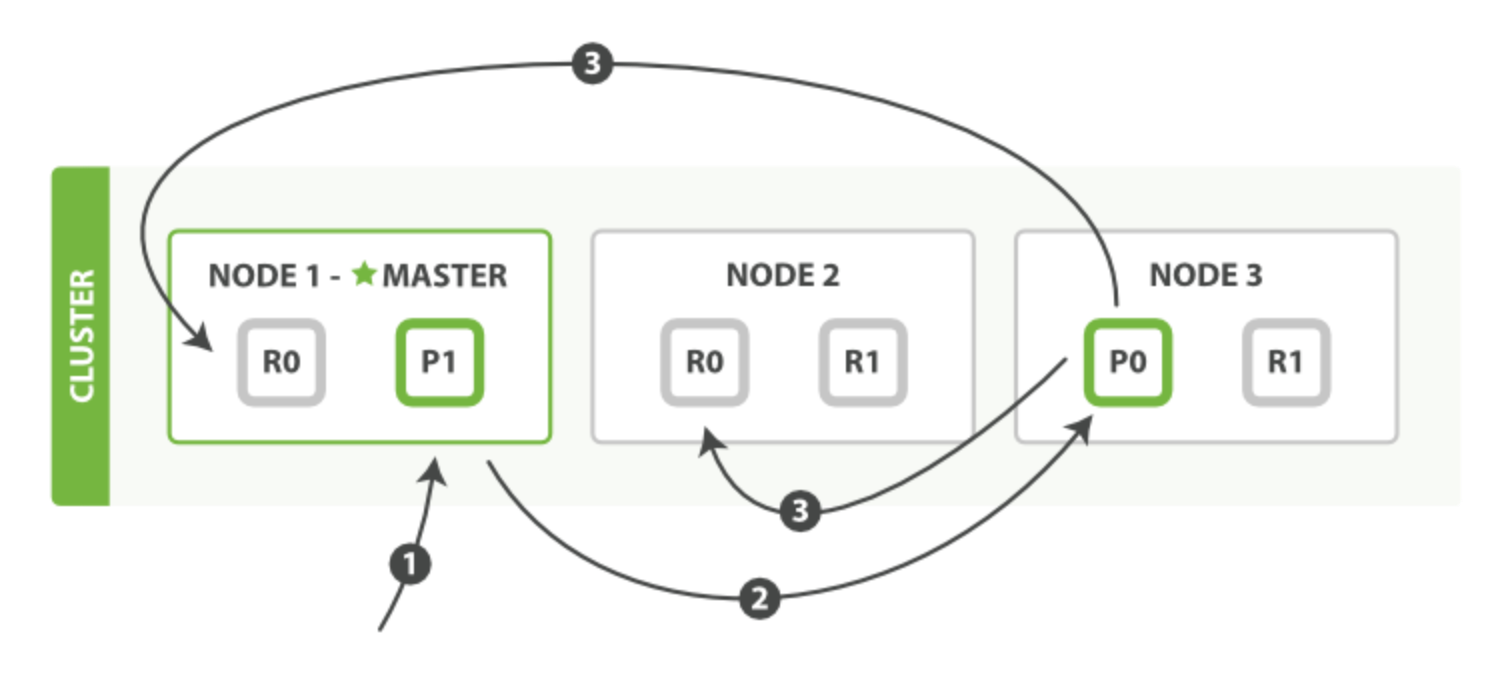

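When building recommendation systems, we frequently want to represent entities like products or pages with embeddings. For example, see Meta AI's Deep Learning Recommendation Model, or DLRM. As the number of entities grows, the size of the embedding tables can exceed a single GPU's memory. A common practice is to shard the embedding table across devices, a type of model parallelism. To that end, TorchRec introduces its primary API called DistributedModelParallel, or DMP. Like PyTorch's DistributedDataParallel, DMP wraps a model to enable distributed training.

Installation

Requirements: python >= 3.7

We highly recommend CUDA when using TorchRec (if using CUDA: cuda >= 11.0).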

# install pytorch with cudatoolkit 11.3

conda install pytorch cudatoolkit=11.3 -c pytorch-nightly -y

# install TorchRec

pip3 install torchrec-nightly

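Overview

This tutorial covers three pieces of TorchRec: the nn.module EmbeddingBagCollection, the DistributedModelParallel API, and the data structure KeyedJaggedTensor.

Distributed Setup

We set up our environment with torch.distributed. For more information on distributed, see this tutorial.

Here, we use one rank (the colab process) corresponding to our one colab GPU.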

import os

import torch

import torchrec

import torch.distributed as dist

os.environ["RANK"] = "0"

os.environ["WORLD_SIZE"] = "1"

os.environ["MASTER_ADDR"] = "localhost"

os.environ["MASTER_PORT"] = "29500"

# Note - you will need a V100 or A100 to run tutorial as is!

# If using an older GPU (such as colab free K80),

# you will need to compile fbgemm with the appropriate CUDA architecture

# or run with "gloo" on CPUs

dist.init_process_group(backend="nccl")

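From EmbeddingBag to EmbeddingBagCollection

PyTorch represents embeddings through torch.nn.Embedding and torch.nn.EmbeddingBag. EmbeddingBag is a pooled version of Embedding.

TorchRec extends these modules by creating collections of embeddings. We will use EmbeddingBagCollection to represent a group of EmbeddingBags.

Here, we create an EmbeddingBagCollection (EBC) with two embedding bags. Each table, product_table and user_table, is represented by a 64-dimension embedding of size 4096. Note how we initially allocate the EBC on device "meta". This tells EBC not to allocate memory yet.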

ebc = torchrec.EmbeddingBagCollection(

device="meta",

tables=[

torchrec.EmbeddingBagConfig(

name="product_table",

embedding_dim=64,

num_embeddings=4096,

feature_names=["product"],

pooling=torchrec.PoolingType.SUM,

),

torchrec.EmbeddingBagConfig(

name="user_table",

embedding_dim=64,

num_embeddings=4096,

feature_names=["user"],

pooling=torchrec.PoolingType.SUM,

)

]

)

DistributedModelParallel

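Now, we are ready to wrap our model with DistributedModelParallel (DMP). Instantiating DMP will:

- Decide how to shard the model. DMP collects the available "sharders" and comes up with a "plan" of the optimal way to shard the embedding table(s) (i.e., the EmbeddingBagCollection).

- Actually shard the model. This includes allocating memory for each embedding table on the appropriate device(s).

In this toy example, since we have two EmbeddingTables and one GPU, TorchRec places both on the single GPU.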

model = torchrec.distributed.DistributedModelParallel(ebc, device=torch.device("cuda"))

print(model)

print(model.plan)

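Query vanilla nn.EmbeddingBag with input and offsets

We query nn.Embedding and nn.EmbeddingBag with input and offsets. Input is a 1-D tensor containing the lookup values. Offsets is a 1-D tensor in which each entry is the starting index in input of one example's bag, that is, the cumulative count of values pooled by the examples before it.

Let's look at an example, recreating the product EmbeddingBag above: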

|------------|

| product ID |

|------------|

| [101, 202] |

| [] |

| [303] |

|------------|

product_eb = torch.nn.EmbeddingBag(4096, 64)

product_eb(input=torch.tensor([101, 202, 303]), offsets=torch.tensor([0, 2, 2]))
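To see how the offsets [0, 2, 2] recover the three bags in the table above, here is a minimal pure-Python sketch of the slicing that offsets describe. The helper split_into_bags is hypothetical and only mirrors the bookkeeping; it performs no embedding lookup or pooling.

```python
def split_into_bags(values, offsets):
    """Slice a flat ID list into one bag per example using start offsets.

    Hypothetical helper for illustration only.
    """
    # Each bag ends where the next one starts; the last bag runs to the end.
    ends = list(offsets[1:]) + [len(values)]
    return [values[start:end] for start, end in zip(offsets, ends)]


bags = split_into_bags([101, 202, 303], [0, 2, 2])
print(bags)  # [[101, 202], [], [303]]
```

The second bag is empty because its start offset equals the start offset of the third bag.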

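Representing minibatches with KeyedJaggedTensor

We need an efficient representation of multiple examples with an arbitrary number of entity IDs per feature per example. To enable this "jagged" representation, we use the TorchRec data structure KeyedJaggedTensor (KJT).

Let's look at how to look up a collection of two embedding bags, "product" and "user". Assume the minibatch is made up of three examples for three users. The first example has two product IDs, the second has none, and the third has one product ID.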

|------------|------------|

| product ID | user ID |

|------------|------------|

| [101, 202] | [404] |

| [] | [505] |

| [303] | [606] |

|------------|------------|

The query should be:

mb = torchrec.KeyedJaggedTensor(

keys = ["product", "user"],

values = torch.tensor([101, 202, 303, 404, 505, 606]).cuda(),

lengths = torch.tensor([2, 0, 1, 1, 1, 1], dtype=torch.int64).cuda(),

)

print(mb.to(torch.device("cpu")))

Note that the KJT batch size is batch_size = len(lengths)//len(keys). In the above example, batch_size is 3.
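The layout of values and lengths can be checked without TorchRec. The following is a minimal pure-Python sketch that unpacks the same keys, values, and lengths back into per-key, per-example lists; the helper unpack_jagged is hypothetical and is not a TorchRec API.

```python
def unpack_jagged(keys, values, lengths):
    """Rebuild {key: [ids per example]} from flat values and lengths.

    Hypothetical helper; lengths are ordered key by key, example by example.
    """
    batch_size = len(lengths) // len(keys)
    result = {}
    cursor = 0  # position of the next unread value
    for k, key in enumerate(keys):
        rows = []
        for n in lengths[k * batch_size:(k + 1) * batch_size]:
            rows.append(values[cursor:cursor + n])
            cursor += n
        result[key] = rows
    return result


unpacked = unpack_jagged(
    keys=["product", "user"],
    values=[101, 202, 303, 404, 505, 606],
    lengths=[2, 0, 1, 1, 1, 1],
)
print(unpacked)
```

The result reproduces the product ID and user ID columns of the table above, one list per example.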

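Putting it all together, querying our distributed model with a KJT minibatch

Finally, we can query our model using our minibatch of products and users.

The resulting lookup contains a KeyedTensor, where each key (or feature) holds a 2D tensor of size 3x64 (batch_size x embedding_dim).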

pooled_embeddings = model(mb)

print(pooled_embeddings)

More resources

For more information, see our dlrm example, which includes multinode training on the criteo terabyte dataset, using Meta's DLRM.

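Exploring TorchRec sharding

Original: pytorch.org/tutorials/advanced/sharding.html

Translator: 飞龙

License: CC BY-NC-SA 4.0

This tutorial mainly covers the sharding schemes of embedding tables via the EmbeddingPlanner and DistributedModelParallel API, and explores the benefits of different sharding schemes by explicitly configuring them for the embedding tables.

Installation

Requirements: python >= 3.7

We highly recommend CUDA when using torchRec. If using CUDA: cuda >= 11.0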

# install conda to make installing pytorch with cudatoolkit 11.3 easier.

!sudo rm Miniconda3-py37_4.9.2-Linux-x86_64.sh Miniconda3-py37_4.9.2-Linux-x86_64.sh.*

!sudo wget https://repo.anaconda.com/miniconda/Miniconda3-py37_4.9.2-Linux-x86_64.sh

!sudo chmod +x Miniconda3-py37_4.9.2-Linux-x86_64.sh

!sudo bash ./Miniconda3-py37_4.9.2-Linux-x86_64.sh -b -f -p /usr/local

# install pytorch with cudatoolkit 11.3

!sudo conda install pytorch cudatoolkit=11.3 -c pytorch-nightly -y

Installing torchRec will also install FBGEMM, a collection of CUDA kernels and GPU-enabled operations.

# install torchrec

!pip3 install torchrec-nightly

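Install multiprocess, which works with ipython for multi-processing programming within colab

!pip3 install multiprocess

The following steps are needed for the Colab runtime to detect the added shared libraries. The runtime searches for shared libraries in /usr/lib, so we copy over the libraries that were installed in /usr/local/lib/. This step is required only in the colab runtime.

!sudo cp /usr/local/lib/lib* /usr/lib/

**Restart your runtime at this point so that the newly installed packages are seen.** Run the step below immediately after restarting so that python knows where to look for packages. Always run this step after restarting the runtime.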

import sys

sys.path = ['', '/env/python', '/usr/local/lib/python37.zip', '/usr/local/lib/python3.7', '/usr/local/lib/python3.7/lib-dynload', '/usr/local/lib/python3.7/site-packages', './.local/lib/python3.7/site-packages']

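Distributed Setup

Due to the notebook environment, we cannot run an SPMD program here, but we can do multiprocessing inside the notebook to mimic the setup. Users are responsible for setting up their own SPMD launcher when using Torchrec. We set up our environment so that the torch.distributed communication backend can work.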

import os

import torch

import torchrec

os.environ["MASTER_ADDR"] = "localhost"

os.environ["MASTER_PORT"] = "29500"

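Constructing our embedding model

Here we use the TorchRec offering of EmbeddingBagCollection to construct our embedding bag model with embedding tables.

We create an EmbeddingBagCollection (EBC) with four embedding bags. We have two types of tables, large and small, differentiated by their row size: 4096 vs 1024. Each table is still represented by a 64-dimension embedding.

We configure the ParameterConstraints data structure for the tables, which provides hints for the model parallel API to help decide the sharding and placement strategy for the tables. TorchRec supports:

- table-wise: place the entire table on one device;

- row-wise: shard the table evenly by row dimension and place one shard on each device of the communication world;

- column-wise: shard the table evenly by embedding dimension and place one shard on each device of the communication world;

- table-row-wise: special sharding optimized for intra-host communication where fast intra-machine device interconnect is available, e.g. NVLink;

- data_parallel: replicate the tables for every device.

Allocating the EBC on device "meta" tells it not to allocate memory yet. Note that the snippet below, as written, passes device="cuda".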

from torchrec.distributed.planner.types import ParameterConstraints

from torchrec.distributed.embedding_types import EmbeddingComputeKernel

from torchrec.distributed.types import ShardingType

from typing import Dict

large_table_cnt = 2

small_table_cnt = 2

large_tables=[

torchrec.EmbeddingBagConfig(

name="large_table_" + str(i),

embedding_dim=64,

num_embeddings=4096,

feature_names=["large_table_feature_" + str(i)],

pooling=torchrec.PoolingType.SUM,

) for i in range(large_table_cnt)

]

small_tables=[

torchrec.EmbeddingBagConfig(

name="small_table_" + str(i),

embedding_dim=64,

num_embeddings=1024,

feature_names=["small_table_feature_" + str(i)],

pooling=torchrec.PoolingType.SUM,

) for i in range(small_table_cnt)

]

def gen_constraints(sharding_type: ShardingType = ShardingType.TABLE_WISE) -> Dict[str, ParameterConstraints]:

large_table_constraints = {

"large_table_" + str(i): ParameterConstraints(

sharding_types=[sharding_type.value],

) for i in range(large_table_cnt)

}

small_table_constraints = {

"small_table_" + str(i): ParameterConstraints(

sharding_types=[sharding_type.value],

) for i in range(small_table_cnt)

}

constraints = {**large_table_constraints, **small_table_constraints}

return constraints

ebc = torchrec.EmbeddingBagCollection(

device="cuda",

tables=large_tables + small_tables

)

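DistributedModelParallel in multiprocessing

Now, we have a single-process execution function that mimics one rank's work during SPMD execution.

This code shards the model collectively with the other processes and allocates memory accordingly. It first sets up the process group, performs embedding table placement using the planner, and generates the sharded model using DistributedModelParallel.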

def single_rank_execution(

rank: int,

world_size: int,

constraints: Dict[str, ParameterConstraints],

module: torch.nn.Module,

backend: str,

) -> None:

import os

import torch

import torch.distributed as dist

from torchrec.distributed.embeddingbag import EmbeddingBagCollectionSharder

from torchrec.distributed.model_parallel import DistributedModelParallel

from torchrec.distributed.planner import EmbeddingShardingPlanner, Topology

from torchrec.distributed.types import ModuleSharder, ShardingEnv, ShardingPlan

from typing import cast

def init_distributed_single_host(

rank: int,

world_size: int,

backend: str,

# pyre-fixme[11]: Annotation `ProcessGroup` is not defined as a type.

) -> dist.ProcessGroup:

os.environ["RANK"] = f"{rank}"

os.environ["WORLD_SIZE"] = f"{world_size}"

dist.init_process_group(rank=rank, world_size=world_size, backend=backend)

return dist.group.WORLD

if backend == "nccl":

device = torch.device(f"cuda:{rank}")

torch.cuda.set_device(device)

else:

device = torch.device("cpu")

topology = Topology(world_size=world_size, compute_device="cuda")

pg = init_distributed_single_host(rank, world_size, backend)

planner = EmbeddingShardingPlanner(

topology=topology,

constraints=constraints,

)

sharders = [cast(ModuleSharder[torch.nn.Module], EmbeddingBagCollectionSharder())]

plan: ShardingPlan = planner.collective_plan(module, sharders, pg)

sharded_model = DistributedModelParallel(

module,

env=ShardingEnv.from_process_group(pg),

plan=plan,

sharders=sharders,

device=device,

)

print(f"rank:{rank},sharding plan: {plan}")

return sharded_model

Multiprocessing Execution

Now let's execute the code in multiple processes, each representing one GPU rank.

import multiprocess

def spmd_sharing_simulation(

sharding_type: ShardingType = ShardingType.TABLE_WISE,

world_size = 2,

):

ctx = multiprocess.get_context("spawn")

processes = []

for rank in range(world_size):

p = ctx.Process(

target=single_rank_execution,

args=(

rank,

world_size,

gen_constraints(sharding_type),

ebc,

"nccl"

),

)

p.start()

processes.append(p)

for p in processes:

p.join()

assert 0 == p.exitcode

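Table-wise sharding

Now let's execute the code in two processes for 2 GPUs. We can see in the printed plan how our tables are sharded across GPUs. Each rank holds one large table and one small table, which shows that our planner tries to load-balance the embedding tables. Table-wise is the de facto go-to sharding scheme for load balancing many small and medium-sized tables.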

spmd_sharing_simulation(ShardingType.TABLE_WISE)

rank:1,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[0], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 64], placement=rank:0/cuda:0)])), 'large_table_1': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 64], placement=rank:1/cuda:1)])), 'small_table_0': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[0], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 64], placement=rank:0/cuda:0)])), 'small_table_1': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 64], placement=rank:1/cuda:1)]))}}

rank:0,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[0], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 64], placement=rank:0/cuda:0)])), 'large_table_1': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 64], placement=rank:1/cuda:1)])), 'small_table_0': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[0], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 64], placement=rank:0/cuda:0)])), 'small_table_1': ParameterSharding(sharding_type='table_wise', compute_kernel='batched_fused', ranks=[1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 64], placement=rank:1/cuda:1)]))}}
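The balanced placement in the plan above can be reproduced with a simple heuristic. The following is a minimal pure-Python sketch of greedy load balancing by row count; it is an illustrative assumption and not how EmbeddingShardingPlanner actually decides placements. The function name greedy_table_wise is hypothetical.

```python
def greedy_table_wise(table_rows, world_size):
    """Assign each table to the least-loaded rank, largest tables first.

    Hypothetical heuristic for illustration; not the TorchRec planner.
    """
    load = [0] * world_size  # total rows placed on each rank so far
    placement = {}
    for name, rows in sorted(table_rows.items(), key=lambda kv: -kv[1]):
        rank = load.index(min(load))  # ties go to the lowest rank
        placement[name] = rank
        load[rank] += rows
    return placement, load


tables = {
    "large_table_0": 4096,
    "large_table_1": 4096,
    "small_table_0": 1024,
    "small_table_1": 1024,
}
placement, load = greedy_table_wise(tables, world_size=2)
print(placement)
print(load)
```

For these four tables the sketch gives each rank one large and one small table, matching the ranks fields printed in the plan.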

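Explore other sharding modes

We have looked at what table-wise sharding looks like and how it balances table placement. Now we explore sharding modes with a finer focus on load balance, starting with row-wise. Row-wise specifically addresses large tables that a single device cannot hold because the large number of embedding rows drives up memory use. It can address the placement of super-large tables in your models. In the shard_sizes section of the printed plan log, the tables are halved by row dimension and distributed onto two GPUs.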

spmd_sharing_simulation(ShardingType.ROW_WISE)

rank:1,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[2048, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[2048, 0], shard_sizes=[2048, 64], placement=rank:1/cuda:1)])), 'large_table_1': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[2048, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[2048, 0], shard_sizes=[2048, 64], placement=rank:1/cuda:1)])), 'small_table_0': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[512, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[512, 0], shard_sizes=[512, 64], placement=rank:1/cuda:1)])), 'small_table_1': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[512, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[512, 0], shard_sizes=[512, 64], placement=rank:1/cuda:1)]))}}

rank:0,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[2048, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[2048, 0], shard_sizes=[2048, 64], placement=rank:1/cuda:1)])), 'large_table_1': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[2048, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[2048, 0], shard_sizes=[2048, 64], placement=rank:1/cuda:1)])), 'small_table_0': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[512, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[512, 0], shard_sizes=[512, 64], placement=rank:1/cuda:1)])), 'small_table_1': ParameterSharding(sharding_type='row_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[512, 64], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[512, 0], shard_sizes=[512, 64], placement=rank:1/cuda:1)]))}}

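Column-wise, on the other hand, addresses load imbalance for tables with large embedding dimensions. We split the table vertically. In the shard_sizes section of the printed plan log, the tables are halved by embedding dimension and distributed onto two GPUs.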

spmd_sharing_simulation(ShardingType.COLUMN_WISE)

rank:0,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[4096, 32], placement=rank:1/cuda:1)])), 'large_table_1': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[4096, 32], placement=rank:1/cuda:1)])), 'small_table_0': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[1024, 32], placement=rank:1/cuda:1)])), 'small_table_1': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[1024, 32], placement=rank:1/cuda:1)]))}}

rank:1,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[4096, 32], placement=rank:1/cuda:1)])), 'large_table_1': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[4096, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[4096, 32], placement=rank:1/cuda:1)])), 'small_table_0': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[1024, 32], placement=rank:1/cuda:1)])), 'small_table_1': ParameterSharding(sharding_type='column_wise', compute_kernel='batched_fused', ranks=[0, 1], sharding_spec=EnumerableShardingSpec(shards=[ShardMetadata(shard_offsets=[0, 0], shard_sizes=[1024, 32], placement=rank:0/cuda:0), ShardMetadata(shard_offsets=[0, 32], shard_sizes=[1024, 32], placement=rank:1/cuda:1)]))}}
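The shard_offsets and shard_sizes values in the row-wise and column-wise logs follow from simple arithmetic. The following is a minimal pure-Python sketch that recomputes them for an even split; the helper even_shards is hypothetical and assumes the split dimension divides evenly by the world size.

```python
def even_shards(rows, cols, world_size, by):
    """Return [(offsets, sizes)] per rank for an even row or column split.

    Hypothetical helper; assumes the split dimension divides evenly.
    """
    total = rows if by == "row" else cols
    step = total // world_size
    shards = []
    for rank in range(world_size):
        if by == "row":
            # Each rank keeps all columns of a contiguous block of rows.
            shards.append(([rank * step, 0], [step, cols]))
        else:
            # Each rank keeps all rows of a contiguous block of columns.
            shards.append(([0, rank * step], [rows, step]))
    return shards


row_wise = even_shards(4096, 64, world_size=2, by="row")
column_wise = even_shards(4096, 64, world_size=2, by="column")
print(row_wise)
print(column_wise)
```

For a large table of 4096 rows and 64 columns, the results match the ShardMetadata entries printed for large_table_0 in both logs.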

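Unfortunately, we cannot simulate table-row-wise here because it operates under a multi-host setup. We will present a Python SPMD example in the future to train models with table-row-wise.

With data parallel, the tables are replicated on all devices.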

spmd_sharing_simulation(ShardingType.DATA_PARALLEL)

rank:0,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None), 'large_table_1': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None), 'small_table_0': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None), 'small_table_1': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None)}}

rank:1,sharding plan: {'': {'large_table_0': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None), 'large_table_1': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None), 'small_table_0': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None), 'small_table_1': ParameterSharding(sharding_type='data_parallel', compute_kernel='batched_dense', ranks=[0, 1], sharding_spec=None)}}
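The shard layouts printed above can be reproduced with plain Python arithmetic. The following is a minimal sketch, not TorchRec's planner: the helper names `column_wise_shards` and `data_parallel_shards` are hypothetical, and the embedding dimension of 64 is an assumption inferred from the two 32-column shards in the printed plan.

```python
from typing import List, Tuple

# (shard_offsets, shard_sizes), mirroring the fields shown in ShardMetadata
Shard = Tuple[List[int], List[int]]


def column_wise_shards(num_rows: int, num_cols: int, world_size: int) -> List[Shard]:
    """Split a [num_rows, num_cols] table evenly along the column axis."""
    if num_cols % world_size != 0:
        raise ValueError("this sketch assumes the columns divide evenly across ranks")
    width = num_cols // world_size
    return [([0, rank * width], [num_rows, width]) for rank in range(world_size)]


def data_parallel_shards(num_rows: int, num_cols: int, world_size: int) -> List[Shard]:
    """Every rank holds a full replica of the table, so nothing is split."""
    return [([0, 0], [num_rows, num_cols]) for _ in range(world_size)]


large_cw = column_wise_shards(4096, 64, 2)
small_cw = column_wise_shards(1024, 64, 2)
large_dp = data_parallel_shards(4096, 64, 2)

for rank, (offsets, sizes) in enumerate(large_cw):
    print(f"column-wise rank {rank}: offsets={offsets}, sizes={sizes}")
for rank, (offsets, sizes) in enumerate(large_dp):
    print(f"data-parallel rank {rank}: offsets={offsets}, sizes={sizes}")
```

Under column-wise sharding each rank stores only its slice of every row, whereas under data parallel each rank stores the whole table, which is why the data-parallel plan carries no sharding_spec.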

Multimodality

TorchMultimodal Tutorial: Finetuning FLAVA

Original:

pytorch.org/tutorials/beginner/flava_finetuning_tutorial.html

Translator: 飞龙

License: CC BY-NC-SA 4.0


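Multimodal AI has recently become very popular because it applies so widely, from use cases such as image captioning and visual search to more recent applications such as generating images from text. TorchMultimodal is a library powered by PyTorch that provides building blocks and end-to-end examples, with the aim of enabling and accelerating multimodal research.

In this tutorial, we demonstrate how to take FLAVA, a pretrained SoTA model from the TorchMultimodal library, and finetune it on a multimodal task, namely visual question answering (VQA). The model consists of two transformer-based unimodal encoders, one for text and one for images, plus a multimodal encoder that combines the two embeddings. It is pretrained with contrastive, image-text matching, and text, image, and multimodal masking losses.

Installation

We will use the TextVQA dataset and the bert tokenizer from Hugging Face in this tutorial. So, in addition to TorchMultimodal, you need to install datasets and transformers.

Note

When running this tutorial in Google Colab, install the required packages by creating a new cell and running the following commands: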

!pip install torchmultimodal-nightly

!pip install datasets

!pip install transformers

Steps

-

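Download the Hugging Face dataset to a directory on your computer by running the following commands:

wget http://dl.fbaipublicfiles.com/pythia/data/vocab.tar.gz

tar xf vocab.tar.gz

Note

If you are running this tutorial in Google Colab, run these commands in a new cell and prefix each of them with an exclamation mark (!).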

-

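In this tutorial, we treat VQA as a classification task in which the inputs are an image and a question (text) and the output is an answer class. We therefore need to download the vocab file that contains the answer classes and create the answer-to-label mapping.

We also load the textvqa dataset from Hugging Face, which contains 34602 training samples (images, questions, and answers).

We see that there are 3997 answer classes, including one class that represents unknown answers.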

with open("data/vocabs/answers_textvqa_more_than_1.txt") as f:

    vocab = f.readlines()

answer_to_idx = {}

for idx, entry in enumerate(vocab):

    answer_to_idx[entry.strip("\n")] = idx

print(len(vocab))

print(vocab[:5])

from datasets import load_dataset

dataset = load_dataset("textvqa")

3997

['<unk>\n', 'nokia\n', 'ec\n', 'virgin\n', '2011\n']
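To make the answer-to-label mapping concrete, here is a minimal, self-contained sketch. It uses the five vocab entries printed above in place of the real file, and the helper names `build_answer_to_idx` and `answer_to_label` are hypothetical. Falling back to index 0 for unseen answers is an assumption based on `<unk>` being the first vocab entry.

```python
UNK_INDEX = 0  # assumption: "<unk>" is the first vocab entry, as in the printed output


def build_answer_to_idx(vocab_lines):
    """Map each answer string (newline stripped) to its line index."""
    return {entry.strip("\n"): idx for idx, entry in enumerate(vocab_lines)}


def answer_to_label(answer, answer_to_idx):
    """Return the class label for an answer, or the unknown class if unseen."""
    return answer_to_idx.get(answer, UNK_INDEX)


# Stand-in for f.readlines() on the real vocab file
sample_vocab = ["<unk>\n", "nokia\n", "ec\n", "virgin\n", "2011\n"]
mapping = build_answer_to_idx(sample_vocab)

print(answer_to_label("nokia", mapping))         # 1
print(answer_to_label("not in vocab", mapping))  # 0
```

Treating every out-of-vocabulary answer as one class keeps the classifier's output size fixed at the vocab length.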


Downloading data: 63%|######2 | 4.45G/7.07G [00:48<00:27, 94.9MB/s]

Downloading data: 63%|######3 | 4.46G/7.07G [00:48<00:27, 94.7MB/s]

Downloading data: 63%|######3 | 4.47G/7.07G [00:48<00:27, 94.8MB/s]

Downloading data: 63%|######3 | 4.48G/7.07G [00:48<00:27, 95.1MB/s]

Downloading data: 64%|######3 | 4.49G/7.07G [00:49<00:27, 95.3MB/s]

Downloading data: 64%|######3 | 4.50G/7.07G [00:49<00:26, 95.4MB/s]

Downloading data: 64%|######3 | 4.51G/7.07G [00:49<00:26, 95.3MB/s]

Downloading data: 64%|######3 | 4.52G/7.07G [00:49<00:26, 95.3MB/s]

Downloading data: 64%|######4 | 4.53G/7.07G [00:49<00:26, 95.4MB/s]

Downloading data: 64%|######4 | 4.54G/7.07G [00:49<00:26, 95.3MB/s]

Downloading data: 64%|######4 | 4.55G/7.07G [00:49<00:26, 95.4MB/s]

Downloading data: 64%|######4 | 4.56G/7.07G [00:49<00:26, 95.2MB/s]

Downloading data: 65%|######4 | 4.57G/7.07G [00:49<00:26, 95.3MB/s]

Downloading data: 65%|######4 | 4.58G/7.07G [00:49<00:26, 95.3MB/s]

Downloading data: 65%|######4 | 4.59G/7.07G [00:50<00:26, 95.4MB/s]

Downloading data: 65%|######5 | 4.60G/7.07G [00:50<00:25, 95.4MB/s]

Downloading data: 65%|######5 | 4.61G/7.07G [00:50<00:25, 95.2MB/s]

Downloading data: 65%|######5 | 4.62G/7.07G [00:50<00:25, 95.2MB/s]

Downloading data: 65%|######5 | 4.63G/7.07G [00:50<00:25, 95.3MB/s]

Downloading data: 66%|######5 | 4.64G/7.07G [00:50<00:25, 95.1MB/s]

Downloading data: 66%|######5 | 4.65G/7.07G [00:50<00:25, 95.3MB/s]

Downloading data: 66%|######5 | 4.65G/7.07G [00:50<00:25, 95.3MB/s]

Downloading data: 66%|######5 | 4.66G/7.07G [00:50<00:25, 95.3MB/s]

Downloading data: 66%|######6 | 4.67G/7.07G [00:50<00:25, 95.3MB/s]

Downloading data: 66%|######6 | 4.68G/7.07G [00:51<00:25, 95.1MB/s]

Downloading data: 66%|######6 | 4.69G/7.07G [00:51<00:25, 94.9MB/s]

Downloading data: 66%|######6 | 4.70G/7.07G [00:51<00:24, 95.0MB/s]

Downloading data: 67%|######6 | 4.71G/7.07G [00:51<00:24, 95.1MB/s]

Downloading data: 67%|######6 | 4.72G/7.07G [00:51<00:24, 95.1MB/s]

Downloading data: 67%|######6 | 4.73G/7.07G [00:51<00:24, 95.3MB/s]

Downloading data: 67%|######7 | 4.74G/7.07G [00:51<00:24, 95.5MB/s]

Downloading data: 67%|######7 | 4.75G/7.07G [00:51<00:24, 95.4MB/s]

Downloading data: 67%|######7 | 4.76G/7.07G [00:51<00:24, 95.2MB/s]

Downloading data: 67%|######7 | 4.77G/7.07G [00:51<00:24, 95.2MB/s]

Downloading data: 68%|######7 | 4.78G/7.07G [00:52<00:24, 95.2MB/s]

Downloading data: 68%|######7 | 4.79G/7.07G [00:52<00:24, 95.1MB/s]

Downloading data: 68%|######7 | 4.80G/7.07G [00:52<00:23, 95.2MB/s]

Downloading data: 68%|######7 | 4.81G/7.07G [00:52<00:23, 95.4MB/s]

Downloading data: 68%|######8 | 4.82G/7.07G [00:52<00:23, 95.3MB/s]

Downloading data: 68%|######8 | 4.83G/7.07G [00:52<00:23, 95.2MB/s]

Downloading data: 68%|######8 | 4.84G/7.07G [00:52<00:23, 95.0MB/s]

Downloading data: 69%|######8 | 4.85G/7.07G [00:52<00:23, 95.0MB/s]

Downloading data: 69%|######8 | 4.85G/7.07G [00:52<00:23, 95.1MB/s]

Downloading data: 69%|######8 | 4.86G/7.07G [00:52<00:23, 95.2MB/s]

Downloading data: 69%|######8 | 4.87G/7.07G [00:53<00:23, 95.3MB/s]

Downloading data: 69%|######9 | 4.88G/7.07G [00:53<00:22, 95.2MB/s]

Downloading data: 69%|######9 | 4.89G/7.07G [00:53<00:22, 95.2MB/s]

Downloading data: 69%|######9 | 4.90G/7.07G [00:53<00:22, 95.3MB/s]

Downloading data: 69%|######9 | 4.91G/7.07G [00:53<00:22, 95.4MB/s]

Downloading data: 70%|######9 | 4.92G/7.07G [00:53<00:22, 95.5MB/s]

Downloading data: 70%|######9 | 4.93G/7.07G [00:53<00:22, 95.9MB/s]

Downloading data: 70%|######9 | 4.94G/7.07G [00:53<00:22, 96.5MB/s]

Downloading data: 70%|####### | 4.95G/7.07G [00:53<00:21, 96.9MB/s]

Downloading data: 70%|####### | 4.96G/7.07G [00:53<00:21, 97.2MB/s]

Downloading data: 70%|####### | 4.97G/7.07G [00:54<00:21, 97.2MB/s]

Downloading data: 70%|####### | 4.98G/7.07G [00:54<00:21, 97.3MB/s]

Downloading data: 71%|####### | 4.99G/7.07G [00:54<00:21, 97.6MB/s]

Downloading data: 71%|####### | 5.00G/7.07G [00:54<00:21, 97.4MB/s]

Downloading data: 71%|####### | 5.01G/7.07G [00:54<00:21, 97.6MB/s]

Downloading data: 71%|####### | 5.02G/7.07G [00:54<00:20, 97.8MB/s]

Downloading data: 71%|#######1 | 5.03G/7.07G [00:54<00:20, 97.8MB/s]

Downloading data: 71%|#######1 | 5.04G/7.07G [00:54<00:20, 97.9MB/s]

Downloading data: 71%|#######1 | 5.05G/7.07G [00:54<00:20, 97.8MB/s]

Downloading data: 72%|#######1 | 5.06G/7.07G [00:54<00:20, 97.8MB/s]

Downloading data: 72%|#######1 | 5.07G/7.07G [00:55<00:20, 97.9MB/s]

Downloading data: 72%|#######1 | 5.08G/7.07G [00:55<00:20, 98.0MB/s]

Downloading data: 72%|#######1 | 5.09G/7.07G [00:55<00:20, 97.9MB/s]

Downloading data: 72%|#######2 | 5.10G/7.07G [00:55<00:20, 97.8MB/s]

Downloading data: 72%|#######2 | 5.11G/7.07G [00:55<00:20, 97.9MB/s]

Downloading data: 72%|#######2 | 5.12G/7.07G [00:55<00:19, 98.0MB/s]

Downloading data: 73%|#######2 | 5.13G/7.07G [00:55<00:19, 98.0MB/s]

Downloading data: 73%|#######2 | 5.14G/7.07G [00:55<00:19, 98.1MB/s]

Downloading data: 73%|#######2 | 5.15G/7.07G [00:55<00:19, 97.8MB/s]

Downloading data: 73%|#######2 | 5.16G/7.07G [00:55<00:19, 97.9MB/s]

Downloading data: 73%|#######3 | 5.17G/7.07G [00:56<00:19, 97.6MB/s]

Downloading data: 73%|#######3 | 5.18G/7.07G [00:56<00:19, 97.5MB/s]

Downloading data: 73%|#######3 | 5.19G/7.07G [00:56<00:19, 97.4MB/s]

Downloading data: 73%|#######3 | 5.20G/7.07G [00:56<00:19, 97.0MB/s]

Downloading data: 74%|#######3 | 5.21G/7.07G [00:56<00:19, 97.1MB/s]

Downloading data: 74%|#######3 | 5.22G/7.07G [00:56<00:19, 97.2MB/s]

Downloading data: 74%|#######3 | 5.23G/7.07G [00:56<00:18, 97.2MB/s]

Downloading data: 74%|#######4 | 5.23G/7.07G [00:56<00:18, 97.0MB/s]

Downloading data: 74%|#######4 | 5.24G/7.07G [00:56<00:18, 97.1MB/s]

Downloading data: 74%|#######4 | 5.25G/7.07G [00:56<00:18, 97.4MB/s]

Downloading data: 74%|#######4 | 5.26G/7.07G [00:57<00:18, 97.3MB/s]

Downloading data: 75%|#######4 | 5.27G/7.07G [00:57<00:18, 97.2MB/s]

Downloading data: 75%|#######4 | 5.28G/7.07G [00:57<00:18, 97.3MB/s]

Downloading data: 75%|#######4 | 5.29G/7.07G [00:57<00:18, 97.2MB/s]

Downloading data: 75%|#######4 | 5.30G/7.07G [00:57<00:18, 97.3MB/s]

Downloading data: 75%|#######5 | 5.31G/7.07G [00:57<00:18, 97.3MB/s]

Downloading data: 75%|#######5 | 5.32G/7.07G [00:57<00:17, 97.4MB/s]

Downloading data: 75%|#######5 | 5.33G/7.07G [00:57<00:17, 97.3MB/s]

Downloading data: 76%|#######5 | 5.34G/7.07G [00:57<00:17, 97.2MB/s]

Downloading data: 76%|#######5 | 5.35G/7.07G [00:57<00:17, 97.1MB/s]

Downloading data: 76%|#######5 | 5.36G/7.07G [00:58<00:17, 97.0MB/s]

Downloading data: 76%|#######5 | 5.37G/7.07G [00:58<00:17, 97.3MB/s]

Downloading data: 76%|#######6 | 5.38G/7.07G [00:58<00:17, 97.5MB/s]

Downloading data: 76%|#######6 | 5.39G/7.07G [00:58<00:17, 97.6MB/s]

Downloading data: 76%|#######6 | 5.40G/7.07G [00:58<00:17, 97.7MB/s]

Downloading data: 77%|#######6 | 5.41G/7.07G [00:58<00:17, 97.5MB/s]

Downloading data: 77%|#######6 | 5.42G/7.07G [00:58<00:16, 97.7MB/s]

Downloading data: 77%|#######6 | 5.43G/7.07G [00:58<00:16, 97.6MB/s]

Downloading data: 77%|#######6 | 5.44G/7.07G [00:58<00:16, 97.7MB/s]

Downloading data: 77%|#######7 | 5.45G/7.07G [00:58<00:16, 97.5MB/s]

Downloading data: 77%|#######7 | 5.46G/7.07G [00:59<00:16, 97.5MB/s]

Downloading data: 77%|#######7 | 5.47G/7.07G [00:59<00:16, 97.6MB/s]