Overview

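ClickHouse already has a mature ecosystem for containerized deployment, and several large internet companies run it this way at scale.

Because ClickHouse is a database, the main difficulty in containerizing it is that it is a stateful service, so we need to configure PVCs (PersistentVolumeClaims) for it.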

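Two deployment approaches are currently popular in the industry:

- Native kubectl deployment
  - The deployment process is complex and involves many resources, so mistakes are easy to make
  - Maintenance is tedious: operations such as scaling the cluster or rebalancing are complicated
  - This approach is strongly discouraged
- kubectl + operator deployment
  - Resources are managed centrally and deployment is simple
  - Maintenance is easy
  - Mature solutions already exist, such as clickhouse-operator and RadonDB ClickHouse

This article uses clickhouse-operator as the example to walk through the steps and caveats of containerizing ClickHouse.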

Containerized ClickHouse deployment

Deploying clickhouse-operator

We can download the clickhouse-operator YAML bundle and deploy it directly.

The file is available at:

https://raw.githubusercontent.com/Altinity/clickhouse-operator/master/deploy/operator/clickhouse-operator-install-bundle.yaml

Apply the YAML file with kubectl:

su01:~/chenyc/ck # kubectl apply -f clickhouse-operator-install-bundle.yaml


customresourcedefinition.apiextensions.k8s.io/clickhouseinstallations.clickhouse.altinity.com created

customresourcedefinition.apiextensions.k8s.io/clickhouseinstallationtemplates.clickhouse.altinity.com created

customresourcedefinition.apiextensions.k8s.io/clickhouseoperatorconfigurations.clickhouse.altinity.com created

serviceaccount/clickhouse-operator created

clusterrole.rbac.authorization.k8s.io/clickhouse-operator-kube-system created

clusterrolebinding.rbac.authorization.k8s.io/clickhouse-operator-kube-system created

configmap/etc-clickhouse-operator-files created

configmap/etc-clickhouse-operator-confd-files created

configmap/etc-clickhouse-operator-configd-files created

configmap/etc-clickhouse-operator-templatesd-files created

configmap/etc-clickhouse-operator-usersd-files created

secret/clickhouse-operator created

deployment.apps/clickhouse-operator created

service/clickhouse-operator-metrics created

Once the command has run successfully, the following resources are visible:

su01:~/chenyc/ck # kubectl get crd |grep clickhouse.altinity.com

clickhouseinstallations.clickhouse.altinity.com 2023-11-16T09:41:08Z

clickhouseinstallationtemplates.clickhouse.altinity.com 2023-11-16T09:41:08Z

clickhouseoperatorconfigurations.clickhouse.altinity.com 2023-11-16T09:41:08Z

su01:~/chenyc/ck # kubectl get pod -n kube-system |grep clickhouse

clickhouse-operator-7ff755d4df-9bcbd 2/2 Running 0 10d

This output means the operator has been deployed successfully.

Quick verification

We take the simplest single-node example from the official repo:

apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
  name: "simple-01"
spec:
  configuration:
    clusters:
      - name: "simple"

Apply it:

kubectl apply -f ck-sample.yaml

Check the status:

chenyc@su01:~/chenyc/ch> kubectl get pod

NAME READY STATUS RESTARTS AGE

chi-simple-01-simple-0-0-0 1/1 Running 0 63s

Try logging in to the node:

chenyc@su01:~/chenyc/ch> kubectl exec -it chi-simple-01-simple-0-0-0 -- clickhouse-client

ClickHouse client version 23.10.5.20 (official build).

Connecting to localhost:9000 as user default.

Connected to ClickHouse server version 23.10.5 revision 54466.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Delay accounting is not enabled, OSIOWaitMicroseconds will not be gathered. Check /proc/sys/kernel/task_delayacct

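chi-simple-01-simple-0-0-0.chi-simple-01-simple-0-0.default.svc.cluster.local :)

The login succeeds, so the deployment itself works.

However, this deployment has one problem: it cannot be reached from outside the cluster.

Exposing an external IP and port through a Service

Let's look at its Service information: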

chenyc@su01:~/chenyc/ch> kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

chi-simple-01-simple-0-0 ClusterIP None <none> 9000/TCP,8123/TCP,9009/TCP 16m

clickhouse-simple-01 LoadBalancer 10.103.187.96 <pending> 8123:30260/TCP,9000:32685/TCP 16m

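kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

As shown, the operator creates a LoadBalancer Service by default, and its EXTERNAL-IP is stuck in pending. clickhouse-operator assumes a cloud environment. In an on-premises cluster there is no load balancer to hand out an address, so no external IP is assigned and the Service cannot be reached.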

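There are two ways to solve this:

- Install a load balancer such as MetalLB
- Switch the Service to NodePort

Let's start with the first option, installing MetalLB. The installation steps are easy to find online. After a successful installation the pods look like this: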

su01:~/chenyc/ck # kubectl get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-595f88d88f-mmmfq 1/1 Running 2 146d

speaker-5n4qh 1/1 Running 5 (14d ago) 146d

speaker-f4pgr 1/1 Running 9 (14d ago) 146d

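speaker-qcfl2 1/1 Running 5 (14d ago) 146d

After redeploying, the Service is assigned the IP 192.168.110.198. Note that this is a real address on the network: the load balancer must have allocatable IPs available, otherwise the assignment cannot succeed.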

su01:~/chenyc/ck # kubectl get pod

NAME READY STATUS RESTARTS AGE

chi-simple-01-simple-0-0-0 1/1 Running 0 71s

su01:~/chenyc/ck/ch # kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

chi-simple-01-simple-0-0 ClusterIP None <none> 9000/TCP,8123/TCP,9009/TCP 11s

ckman NodePort 10.105.69.159 <none> 38808:38808/TCP 4d23h

clickhouse-simple-01 LoadBalancer 10.100.12.57 192.168.110.198 8123:61626/TCP,9000:20461/TCP 8s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

Verify that the IP is usable:

su01:~/chenyc/ck # curl http://192.168.110.198:8123

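Ok.

The HTTP port answers, but we still cannot log in to this ClickHouse node directly from outside. We will come back to the reason later.

First, let's look at the other option: exposing the ports through NodePort. The chi (ClickHouseInstallation) resource provides a template for customizing the Service, which we modify as follows: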

apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
  name: "svc"
spec:
  defaults:
    templates:
      serviceTemplate: service-template
  templates:
    serviceTemplates:
      - name: service-template
        generateName: chendpoint-{chi}
        spec:
          ports:
            - name: http
              port: 8123
              nodePort: 38123
              targetPort: 8123
            - name: tcp
              port: 9000
              nodePort: 39000
              targetPort: 9000
          type: NodePort

Listing the Services now gives the following result:

su01:~/chenyc/ck/ch # kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

chendpoint-svc NodePort 10.111.132.229 <none> 8123:38123/TCP,9000:39000/TCP 21s

chi-svc-cluster-0-0 ClusterIP None <none> 9000/TCP,8123/TCP,9009/TCP 23s

ckman NodePort 10.105.69.159 <none> 38808:38808/TCP 5d3h

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

Again, let's verify:

su01:~/chenyc/ck/ch # curl http://10.111.132.229:8123

Ok.

su01:~/chenyc/ck/ch # curl http://192.168.110.186:38123

Ok.

su01:~/chenyc/ck/ch # curl http://192.168.110.187:38123

Ok.

su01:~/chenyc/ck/ch # curl http://192.168.110.188:38123

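Ok.

In this example, 10.111.132.229 is the cluster IP assigned to the Service and is reached on the in-cluster port (8123), while 192.168.110.186 through 192.168.110.188 are the IPs of the Kubernetes nodes. Any of the nodes can be used together with the NodePort (38123).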
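To make the two access paths concrete, here is a minimal Python sketch that builds the NodePort URLs for the three node IPs above and can probe ClickHouse's HTTP interface. It is not part of the original setup, and the helper names `in_node_port_range`, `node_port_urls`, and `ping` are hypothetical. It also checks a port against the NodePort range: the Kubernetes default is 30000-32767, so the 38123 and 39000 used here are only accepted if the API server's `--service-node-port-range` has been widened, which seems to be the case in this cluster.

```python
import urllib.request

# Kubernetes default NodePort range when --service-node-port-range is not set.
DEFAULT_NODE_PORT_RANGE = (30000, 32767)


def in_node_port_range(port, port_range=DEFAULT_NODE_PORT_RANGE):
    """Return True if the port falls inside the (inclusive) NodePort range."""
    low, high = port_range
    return low <= port <= high


def node_port_urls(node_ips, node_port):
    """Every node exposes the same NodePort, so build one URL per node."""
    return [f"http://{ip}:{node_port}" for ip in node_ips]


def ping(url, timeout=3.0):
    """Return True if a GET on the URL answers with ClickHouse's 'Ok.' body."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode().strip() == "Ok."
    except OSError:  # connection refused, timeout, HTTP errors, ...
        return False


NODE_IPS = ["192.168.110.186", "192.168.110.187", "192.168.110.188"]
urls = node_port_urls(NODE_IPS, 38123)
print(urls)
print("38123 inside the default range:", in_node_port_range(38123))
```

Calling `ping(url)` for each entry in `urls` from a machine on the node network is the scripted equivalent of the `curl` checks above.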

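Logging in from outside

The ports are now exposed, but we still cannot log in over the native TCP protocol. Besides the default user, clickhouse-operator also creates a clickhouse_operator user whose default password is clickhouse_operator_password, yet at this point neither user can connect from outside.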

su01:~/chenyc/ck/ch # clickhouse-client -m -h 192.168.110.186 --port 39000

ClickHouse client version 23.3.1.2823 (official build).

Connecting to 192.168.110.186:39000 as user default.

Password for user (default):

Connecting to 192.168.110.186:39000 as user default.

Code: 516. DB::Exception: Received from 192.168.110.186:39000. DB::Exception: default: Authentication failed: password is incorrect, or there is no user with such name.

If you have installed ClickHouse and forgot password you can reset it in the configuration file.

The password for default user is typically located at /etc/clickhouse-server/users.d/default-password.xml

and deleting this file will reset the password.

See also /etc/clickhouse-server/users.xml on the server where ClickHouse is installed.

. (AUTHENTICATION_FAILED)

su01:~/chenyc/ck/ch # clickhouse-client -m -h 192.168.110.186 --port 39000 -u clickhouse_operator --password clickhouse_operator_password

ClickHouse client version 23.3.1.2823 (official build).

Connecting to 192.168.110.186:39000 as user clickhouse_operator.

Code: 516. DB::Exception: Received from 192.168.110.186:39000. DB::Exception: clickhouse_operator: Authentication failed: password is incorrect, or there is no user with such name.. (AUTHENTICATION_FAILED)

Both users are certainly valid, because we can log in from inside the pod:

su01:~/chenyc/ck/ch # kubectl exec -it chi-svc-cluster-0-0-0 -- /bin/bash

root@chi-svc-cluster-0-0-0:/# clickhouse-client -m

ClickHouse client version 23.10.5.20 (official build).

Connecting to localhost:9000 as user default.

Connected to ClickHouse server version 23.10.5 revision 54466.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Delay accounting is not enabled, OSIOWaitMicroseconds will not be gathered. Check /proc/sys/kernel/task_delayacct

chi-svc-cluster-0-0-0.chi-svc-cluster-0-0.default.svc.cluster.local :)

To see why, open the generated user configuration file:

root@chi-svc-cluster-0-0-0:/# cat /etc/clickhouse-server/users.d/chop-generated-users.xml

<yandex>

<users>

<clickhouse_operator>

<networks>

<ip>10.0.2.45</ip>

</networks>

<password_sha256_hex>716b36073a90c6fe1d445ac1af85f4777c5b7a155cea359961826a030513e448</password_sha256_hex>

<profile>clickhouse_operator</profile>

</clickhouse_operator>

<default>

<networks>

<host_regexp>(chi-svc-[^.]+\d+-\d+|clickhouse\-svc)\.default\.svc\.cluster\.local$</host_regexp>

<ip>::1</ip>

<ip>127.0.0.1</ip>

</networks>

<profile>default</profile>

<quota>default</quota>

</default>

</users>

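</yandex>

As the file shows, the clickhouse_operator user is only open to a single internal IP, and the default user is only open to the loopback addresses and to hosts whose names match the internal regular expression. Connections from outside are therefore rejected, so the cluster is still unusable for external clients.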
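The effect of such a `<networks>` section can be modelled in a few lines of Python. This is a simplified sketch, not ClickHouse's actual implementation: it assumes a client is admitted when any `<ip>` entry or the `<host_regexp>` matches, treats IPv4 addresses as IPv4-mapped IPv6 so that `::/0` covers them, and takes the client's hostname as a given argument instead of resolving it. The function name `is_allowed` is hypothetical.

```python
import ipaddress
import re


def _as_v6_network(spec):
    """Parse an <ip> entry; IPv4 entries become IPv4-mapped IPv6 networks."""
    net = ipaddress.ip_network(spec, strict=False)
    if net.version == 4:
        return ipaddress.IPv6Network(f"::ffff:{net.network_address}/{96 + net.prefixlen}")
    return net


def _as_v6_address(addr):
    """Parse a client address; IPv4 becomes an IPv4-mapped IPv6 address."""
    ip = ipaddress.ip_address(addr)
    if ip.version == 4:
        return ipaddress.IPv6Address(f"::ffff:{ip}")
    return ip


def is_allowed(client_ip, client_host, ips, host_regexp=None):
    """Admit the client if ANY <ip> entry or the host_regexp matches."""
    addr = _as_v6_address(client_ip)
    if any(addr in _as_v6_network(spec) for spec in ips):
        return True
    return bool(host_regexp and client_host and re.search(host_regexp, client_host))


# Restrictions of the default user, copied from the file above.
DEFAULT_IPS = ["::1", "127.0.0.1"]
DEFAULT_REGEXP = r"(chi-svc-[^.]+\d+-\d+|clickhouse\-svc)\.default\.svc\.cluster\.local$"

print(is_allowed("127.0.0.1", None, DEFAULT_IPS, DEFAULT_REGEXP))       # inside the pod
print(is_allowed("192.168.110.10", None, DEFAULT_IPS, DEFAULT_REGEXP))  # external client
print(is_allowed("192.168.110.10", None, ["::/0"]))                     # after opening up
```

Under these assumptions only the loopback client and pods whose hostnames match the regular expression get through, which is consistent with the failed logins above.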

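Again there are two possible solutions:

- Add a regular user that is allowed to connect from outside
- Remove the access restrictions on the default and clickhouse_operator users

Let's try the first one.

Users can be added under configuration in the CHI. For example, we add a user named chenyc: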

apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
  name: "user"
spec:
  configuration:
    users:
      chenyc/network/ip: "::/0"
      chenyc/password: qwerty
      chenyc/profile: default
  defaults:
    templates:
      serviceTemplate: service-template
  templates:
    serviceTemplates:
      - name: service-template
        generateName: chendpoint-{chi}
        spec:
          ports:
            - name: http
              port: 8123
              nodePort: 38123
              targetPort: 8123
            - name: tcp
              port: 9000
              nodePort: 39000
              targetPort: 9000
          type: NodePort

Check the generated configuration file:

su01:~/chenyc/ck/ch # kubectl exec -it chi-user-cluster-0-0-0 -- cat /etc/clickhouse-server/users.d/chop-generated-users.xml

<yandex>

<users>

<chenyc>

<network>

<ip>::/0</ip>

</network>

<networks>

<host_regexp>(chi-user-[^.]+\d+-\d+|clickhouse\-user)\.default\.svc\.cluster\.local$</host_regexp>

<ip>::1</ip>

<ip>127.0.0.1</ip>

</networks>

<password_sha256_hex>65e84be33532fb784c48129675f9eff3a682b27168c0ea744b2cf58ee02337c5</password_sha256_hex>

<profile>default</profile>

<quota>default</quota>

</chenyc>

<clickhouse_operator>

<networks>

<ip>10.0.2.45</ip>

</networks>

<password_sha256_hex>716b36073a90c6fe1d445ac1af85f4777c5b7a155cea359961826a030513e448</password_sha256_hex>

<profile>clickhouse_operator</profile>

</clickhouse_operator>

<default>

<networks>

<host_regexp>(chi-user-[^.]+\d+-\d+|clickhouse\-user)\.default\.svc\.cluster\.local$</host_regexp>

<ip>::1</ip>

<ip>127.0.0.1</ip>

</networks>

<profile>default</profile>

<quota>default</quota>

</default>

</users>

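</yandex>

The login still fails, however. Our ::/0 entry was written under the key chenyc/network/ip and therefore ended up in a separate <network> (singular) element, while the <networks> section that ClickHouse evaluates still contains only the operator's default restrictions: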

<networks>

<host_regexp>(chi-user-[^.]+\d+-\d+|clickhouse\-user)\.default\.svc\.cluster\.local$</host_regexp>

<ip>::1</ip>

<ip>127.0.0.1</ip>

</networks>

The first approach seems to have hit a dead end, so let's try the second one.

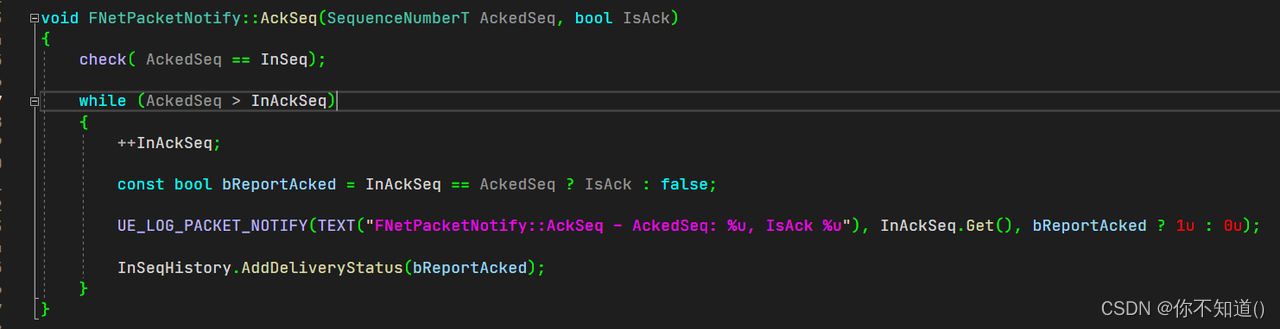

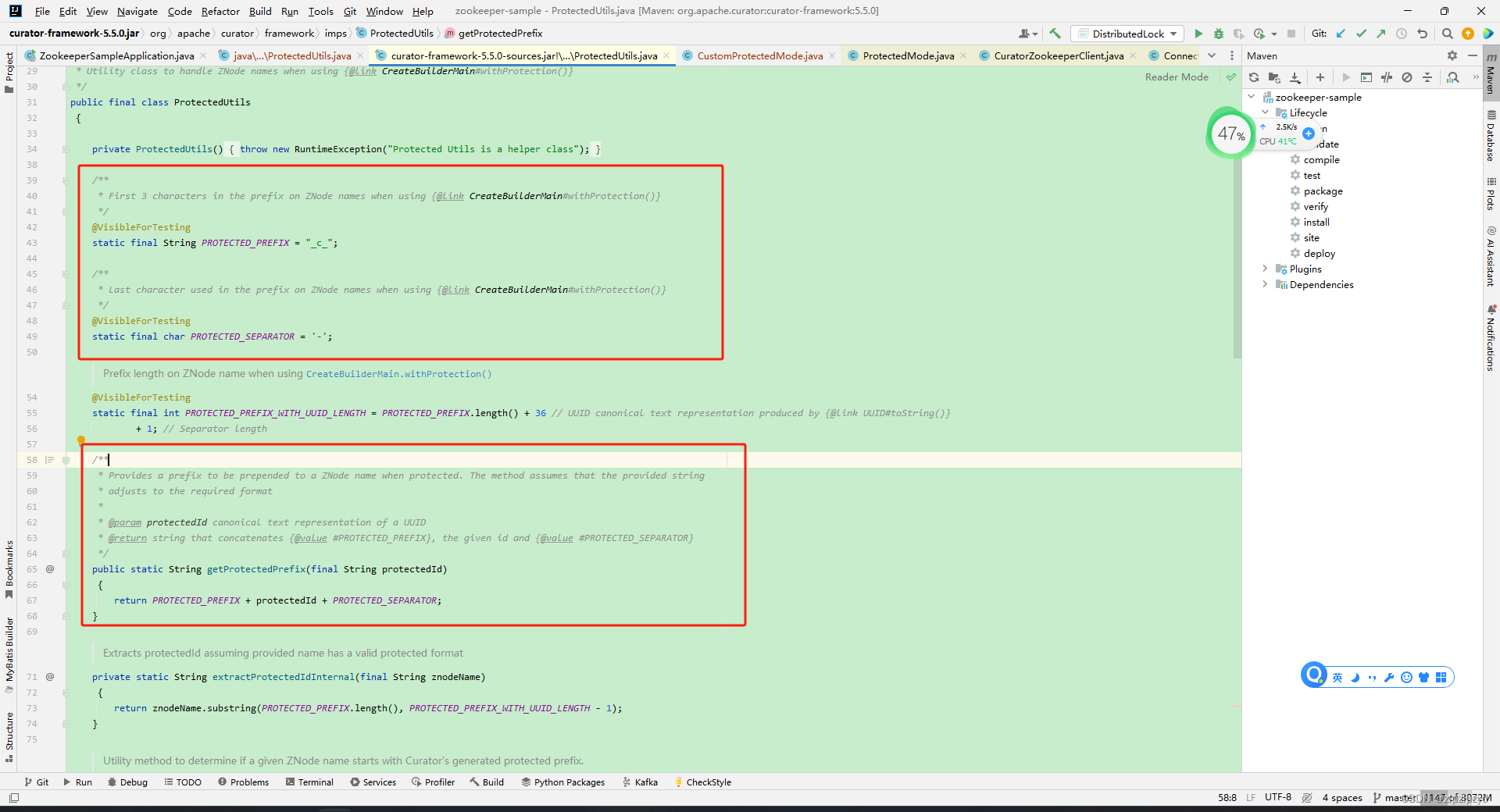

First, let's look at how the clickhouse-operator source code handles user access restrictions.

func (n *Normalizer) normalizeConfigurationUserEnsureMandatorySections(users *chiV1.Settings, username string) {
    chopUsername := chop.Config().ClickHouse.Access.Username
    //
    // Ensure each user has mandatory sections:
    //
    // 1. user/profile
    // 2. user/quota
    // 3. user/networks/ip
    // 4. user/networks/host_regexp
    profile := chop.Config().ClickHouse.Config.User.Default.Profile
    quota := chop.Config().ClickHouse.Config.User.Default.Quota
    ips := append([]string{}, chop.Config().ClickHouse.Config.User.Default.NetworksIP...)
    regexp := CreatePodHostnameRegexp(n.ctx.chi, chop.Config().ClickHouse.Config.Network.HostRegexpTemplate)

    // Some users may have special options
    switch username {
    case defaultUsername:
        ips = append(ips, n.ctx.options.DefaultUserAdditionalIPs...)
        if !n.ctx.options.DefaultUserInsertHostRegex {
            regexp = ""
        }
    case chopUsername:
        ip, _ := chop.Get().ConfigManager.GetRuntimeParam(chiV1.OPERATOR_POD_IP)
        profile = chopProfile
        quota = ""
        ips = []string{ip}
        regexp = ""
    }

    // Ensure required values are in place and apply non-empty values in case no own value(s) provided
    if profile != "" {
        users.SetIfNotExists(username+"/profile", chiV1.NewSettingScalar(profile))
    }
    if quota != "" {
        users.SetIfNotExists(username+"/quota", chiV1.NewSettingScalar(quota))
    }
    if len(ips) > 0 {
        users.Set(username+"/networks/ip", chiV1.NewSettingVector(ips).MergeFrom(users.Get(username+"/networks/ip")))
    }
    if regexp != "" {
        users.SetIfNotExists(username+"/networks/host_regexp", chiV1.NewSettingScalar(regexp))
    }
}

- The default user

Whether host_regexp is configured depends on the DefaultUserInsertHostRegex option, which defaults to true:

// NewNormalizerOptions creates new NormalizerOptions
func NewNormalizerOptions() *NormalizerOptions {
    return &NormalizerOptions{
        DefaultUserInsertHostRegex: true,
    }
}

The IP restriction comes from the configured IP lists: the default networksIP plus any additional IPs.

So we only need to remove those IPs and the host_regexp rule from the clickhouse-operator YAML.

- The chop user

chop is the clickhouse_operator user. Its IP restriction depends on the OPERATOR_POD_IP environment variable, which is passed in through the clickhouse-operator YAML and defaults to the operator pod's IP. Setting it to ::/0 lets anyone connect.

ip, _ := chop.Get().ConfigManager.GetRuntimeParam(chiV1.OPERATOR_POD_IP)

- Other regular users

ips := append([]string{}, chop.Config().ClickHouse.Config.User.Default.NetworksIP...)
regexp := CreatePodHostnameRegexp(n.ctx.chi, chop.Config().ClickHouse.Config.Network.HostRegexpTemplate)

These are likewise controlled through the YAML.
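The decision logic of the Go function can be re-expressed as a small Python model to see what it produces for each kind of user. This is a simplified sketch rather than the operator's code. Two points are assumptions: `MergeFrom` is taken to keep the defaults and append the user's own `networks/ip` values, and an empty template is taken to yield no regexp. The names `OPERATOR_CFG`, `pod_hostname_regexp`, and `ensure_mandatory_sections` are hypothetical, and the configuration values are copied from the operator defaults shown in this article.

```python
# Defaults copied from the operator configuration shown in this article.
OPERATOR_CFG = {
    "profile": "default",
    "quota": "default",
    "networksIP": ["::1", "127.0.0.1"],
    "hostRegexpTemplate": r"(chi-{chi}-[^.]+\d+-\d+|clickhouse\-{chi})\.{namespace}\.svc\.cluster\.local$",
}


def pod_hostname_regexp(template, chi, namespace):
    """Fill the {chi} and {namespace} placeholders (ASSUMPTION: empty template -> no regexp)."""
    if not template:
        return ""
    return template.replace("{chi}", chi).replace("{namespace}", namespace)


def ensure_mandatory_sections(users, username, cfg, chi, namespace,
                              operator_pod_ip="", chop_username="clickhouse_operator",
                              insert_host_regex=True, additional_ips=()):
    """Simplified Python rendering of the Go function quoted above."""
    profile, quota = cfg.get("profile", ""), cfg.get("quota", "")
    ips = list(cfg.get("networksIP", []))
    regexp = pod_hostname_regexp(cfg.get("hostRegexpTemplate", ""), chi, namespace)

    # Some users get special treatment.
    if username == "default":
        ips += list(additional_ips)
        if not insert_host_regex:
            regexp = ""
    elif username == chop_username:
        profile, quota, ips, regexp = "clickhouse_operator", "", [operator_pod_ip], ""

    # Fill in whatever the user did not provide.
    if profile:
        users.setdefault(f"{username}/profile", profile)
    if quota:
        users.setdefault(f"{username}/quota", quota)
    if ips:
        own = users.get(f"{username}/networks/ip", [])
        own = [own] if isinstance(own, str) else list(own)
        # ASSUMPTION: MergeFrom keeps the defaults and appends the user's own values.
        users[f"{username}/networks/ip"] = list(dict.fromkeys(ips + own))
    if regexp:
        users.setdefault(f"{username}/networks/host_regexp", regexp)
    return users


users = {"chenyc/network/ip": "::/0", "chenyc/password": "qwerty", "chenyc/profile": "default"}
ensure_mandatory_sections(users, "chenyc", OPERATOR_CFG, chi="user", namespace="default")
for key in sorted(users):
    print(key, "=", users[key])
```

In this model the `::/0` written under `chenyc/network/ip` never reaches `chenyc/networks/ip`, which is consistent with the generated XML shown earlier, where `<networks>` holds only the defaults.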

With this background, we can modify the operator YAML directly.

Original YAML:

user:
  # Default settings for user accounts, created by the operator.
  # IMPORTANT. These are not access credentials or settings for 'default' user account,
  # it is a template for filling out missing fields for all user accounts to be created by the operator,
  # with the following EXCEPTIONS:
  # 1. 'default' user account DOES NOT use provided password, but uses all the rest of the fields.
  #    Password for 'default' user account has to be provided explicitly, if to be used.
  # 2. CHOP user account DOES NOT use:
  #    - profile setting. It uses predefined profile called 'clickhouse_operator'
  #    - quota setting. It uses empty quota name.
  #    - networks IP setting. Operator specifies 'networks/ip' user setting to match operators' pod IP only.
  #    - password setting. Password for CHOP account is used from 'clickhouse.access.*' section
  default:
    # Default values for ClickHouse user account(s) created by the operator
    #   1. user/profile - string
    #   2. user/quota - string
    #   3. user/networks/ip - multiple strings
    #   4. user/password - string
    # These values can be overwritten on per-user basis.
    profile: "default"
    quota: "default"
    networksIP:
      - "::1"
      - "127.0.0.1"
    password: "default"

################################################
##
## Configuration Network Section
##
################################################
network:
  # Default host_regexp to limit network connectivity from outside
  hostRegexpTemplate: "(chi-{chi}-[^.]+\\d+-\\d+|clickhouse\\-{chi})\\.{namespace}\\.svc\\.cluster\\.local$"

Modified YAML:

user:
  # Default settings for user accounts, created by the operator.
  # IMPORTANT. These are not access credentials or settings for 'default' user account,
  # it is a template for filling out missing fields for all user accounts to be created by the operator,
  # with the following EXCEPTIONS:
  # 1. 'default' user account DOES NOT use provided password, but uses all the rest of the fields.
  #    Password for 'default' user account has to be provided explicitly, if to be used.
  # 2. CHOP user account DOES NOT use:
  #    - profile setting. It uses predefined profile called 'clickhouse_operator'
  #    - quota setting. It uses empty quota name.
  #    - networks IP setting. Operator specifies 'networks/ip' user setting to match operators' pod IP only.
  #    - password setting. Password for CHOP account is used from 'clickhouse.access.*' section
  default:
    # Default values for ClickHouse user account(s) created by the operator
    #   1. user/profile - string
    #   2. user/quota - string
    #   3. user/networks/ip - multiple strings
    #   4. user/password - string
    # These values can be overwritten on per-user basis.
    profile: "default"
    quota: "default"
    password: "default"

################################################
##
## Configuration Network Section
##
################################################

The operator Deployment's env section before the change:

env:
  # Pod-specific
  # spec.nodeName: ip-172-20-52-62.ec2.internal
  - name: OPERATOR_POD_NODE_NAME
    valueFrom:
      fieldRef:
        fieldPath: spec.nodeName
  # metadata.name: clickhouse-operator-6f87589dbb-ftcsf
  - name: OPERATOR_POD_NAME
    valueFrom:
      fieldRef:
        fieldPath: metadata.name
  # metadata.namespace: kube-system
  - name: OPERATOR_POD_NAMESPACE
    valueFrom:
      fieldRef:
        fieldPath: metadata.namespace
  # status.podIP: 100.96.3.2
  - name: OPERATOR_POD_IP
    valueFrom:
      fieldRef:
        fieldPath: status.podIP
  # spec.serviceAccount: clickhouse-operator
  # spec.serviceAccountName: clickhouse-operator
  - name: OPERATOR_POD_SERVICE_ACCOUNT
    valueFrom:
      fieldRef:
        fieldPath: spec.serviceAccountName

And after the change:

env:
  # Pod-specific
  # spec.nodeName: ip-172-20-52-62.ec2.internal
  - name: OPERATOR_POD_NODE_NAME
    valueFrom:
      fieldRef:
        fieldPath: spec.nodeName
  # metadata.name: clickhouse-operator-6f87589dbb-ftcsf
  - name: OPERATOR_POD_NAME
    valueFrom:
      fieldRef:
        fieldPath: metadata.name
  # metadata.namespace: kube-system
  - name: OPERATOR_POD_NAMESPACE
    valueFrom:
      fieldRef:
        fieldPath: metadata.namespace
  # status.podIP: 100.96.3.2
  - name: OPERATOR_POD_IP
    value: "::/0"
    #valueFrom:
    #  fieldRef:
    #    fieldPath: status.podIP
  # spec.serviceAccount: clickhouse-operator
  # spec.serviceAccountName: clickhouse-operator
  - name: OPERATOR_POD_SERVICE_ACCOUNT
    valueFrom:
      fieldRef:
        fieldPath: spec.serviceAccountName

After making these changes, re-apply clickhouse-operator. The chop user itself can also be changed through the YAML. For example, we change the username to eoi and the password to 123456:

apiVersion: v1
kind: Secret
metadata:
  name: clickhouse-operator
  namespace: kube-system
  labels:
    clickhouse.altinity.com/chop: 0.21.3
    app: clickhouse-operator
type: Opaque
stringData:
  username: eoi
  password: "123456"

Deploy ClickHouse again and check the generated configuration file:

<yandex>

<users>

<chenyc>

<networks>

<ip>::/0</ip>

</networks>

<password_sha256_hex>65e84be33532fb784c48129675f9eff3a682b27168c0ea744b2cf58ee02337c5</password_sha256_hex>

<profile>default</profile>

<quota>default</quota>

</chenyc>

<default>

<networks>

<ip>::/0</ip>

</networks>

<profile>default</profile>

<quota>default</quota>

</default>

<eoi>

<networks>

<ip>::/0</ip>

</networks>

<password_sha256_hex>8d969eef6ecad3c29a3a629280e686cf0c3f5d5a86aff3ca12020c923adc6c92</password_sha256_hex>

<profile>clickhouse_operator</profile>

</eoi>

</users>

</yandex>

The configuration file now matches expectations, so let's try logging in.

The default user logs in normally:

su01:~/chenyc/ck/ch # clickhouse-client -h 192.168.110.186 --port 39000

ClickHouse client version 23.3.1.2823 (official build).

Connecting to 192.168.110.186:39000 as user default.

Connected to ClickHouse server version 23.10.5 revision 54466.

ClickHouse client version is older than ClickHouse server. It may lack support for new features.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Delay accounting is not enabled, OSIOWaitMicroseconds will not be gathered. Check /proc/sys/kernel/task_delayacct

chi-user-cluster-0-0-0.chi-user-cluster-0-0.default.svc.cluster.local :) exit

Bye.

The chop user:

su01:~/chenyc/ck/ch # clickhouse-client -h 192.168.110.186 --port 39000 -u eoi --password 123456

ClickHouse client version 23.3.1.2823 (official build).

Connecting to 192.168.110.186:39000 as user eoi.

Connected to ClickHouse server version 23.10.5 revision 54466.

ClickHouse client version is older than ClickHouse server. It may lack support for new features.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Delay accounting is not enabled, OSIOWaitMicroseconds will not be gathered. Check /proc/sys/kernel/task_delayacct

chi-user-cluster-0-0-0.chi-user-cluster-0-0.default.svc.cluster.local :) exit

Bye.

The chenyc user (a custom regular user):

su01:~/chenyc/ck/ch # clickhouse-client -h 192.168.110.186 --port 39000 -u chenyc --password qwerty

ClickHouse client version 23.3.1.2823 (official build).

Connecting to 192.168.110.186:39000 as user chenyc.

Connected to ClickHouse server version 23.10.5 revision 54466.

ClickHouse client version is older than ClickHouse server. It may lack support for new features.

Warnings:

* Linux transparent hugepages are set to "always". Check /sys/kernel/mm/transparent_hugepage/enabled

* Delay accounting is not enabled, OSIOWaitMicroseconds will not be gathered. Check /proc/sys/kernel/task_delayacct

chi-user-cluster-0-0-0.chi-user-cluster-0-0.default.svc.cluster.local :) exit

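Bye.

That is how external login can be enabled by modifying the operator YAML. That said, users with a special meaning, such as default and the chop user, should not be exposed to the outside in real deployments. It is better to create a regular user for external access.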
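The `password_sha256_hex` values in the generated configuration are SHA-256 digests of the plain-text passwords. A short Python check, using only the standard library, reproduces the two digests shown above for qwerty and 123456. The function name `password_sha256_hex` is chosen here for illustration.

```python
import hashlib


def password_sha256_hex(password: str) -> str:
    """Hex-encoded SHA-256 digest of a password, as stored in password_sha256_hex."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


print(password_sha256_hex("qwerty"))   # digest generated for the chenyc user
print(password_sha256_hex("123456"))   # digest generated for the eoi user
```

Computing the digest yourself is handy when comparing a generated file against the password you intended to set.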

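StorageClass deployment

For a database like ClickHouse, data persistence is the most critical concern, which means we must use PVCs to define where data is persisted. Here is an example that specifies persistent directories: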

apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
  name: "aimeter"
spec:
  defaults:
    templates:
      serviceTemplate: service-template
      podTemplate: pod-template
      dataVolumeClaimTemplate: volume-claim
      logVolumeClaimTemplate: volume-claim
  templates:
    serviceTemplates:
      - name: service-template
        generateName: chendpoint-{chi}
        spec:
          ports:
            - name: http
              port: 8123
              nodePort: 38123
              targetPort: 8123
            - name: tcp
              port: 9000
              nodePort: 39000
              targetPort: 9000
          type: NodePort
    podTemplates:
      - name: pod-template
        spec:
          containers:
            - name: clickhouse
              imagePullPolicy: Always
              image: yandex/clickhouse-server:latest
              volumeMounts:
                - name: volume-claim
                  mountPath: /var/lib/clickhouse
                - name: volume-claim
                  mountPath: /var/log/clickhouse-server
    volumeClaimTemplates:
      - name: volume-claim
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 100Gi

As mentioned earlier, clickhouse-operator assumes a cloud environment by default. The YAML above runs as-is in a cloud environment, but fails in a private Kubernetes cluster, where the pod stays in Pending:

su01:~/chenyc/ck/ch # kubectl get pod

NAME READY STATUS RESTARTS AGE

chi-aimeter-cluster-0-0-0 0/2 Pending 0 6s

ckman-6d8cd8fbdc-mlsmb 1/1 Running 0 5d4h

Running kubectl describe on the pod shows the following error:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 68s (x2 over 70s) default-scheduler 0/3 nodes are available: pod has unbound immediate PersistentVolumeClaims. preemption: 0/3 nodes are available: 3 No preemption victims found for incoming pod..

This error means that no persistent volume is available to bind. We can provide PVs by running our own NFS server.

First, set up an NFS service on one server.

# Install the NFS and RPC services
yum install nfs-utils rpcbind -y

# Add the following line to /etc/exports
/data01/nfs 192.168.110.0/24(insecure,rw,sync,no_subtree_check,no_root_squash)

# Reload the export table so the change takes effect
exportfs -r

# Restart the services
service rpcbind restart; service nfs restart

Create the provisioner and the StorageClass:

#### RBAC permissions
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-common-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-common-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-common-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-common-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-common-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-common-provisioner
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-common-provisioner
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-common-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-common-provisioner
  apiGroup: rbac.authorization.k8s.io

#### StorageClass
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-common # referenced by PVCs
# Provisioner name; it must match the PROVISIONER_NAME env of the Deployment below
# (another name may be chosen as long as both stay in sync)
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "false" # whether to archive the PV's contents when the PV is deleted

#### Deployment
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-common-provisioner
  labels:
    app: nfs-common-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-common-provisioner
  template:
    metadata:
      labels:
        app: nfs-common-provisioner
    spec:
      serviceAccountName: nfs-common-provisioner
      containers:
        - name: nfs-common-provisioner
          image: ccr.ccs.tencentyun.com/gcr-containers/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-common-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.110.10 # address of the NFS server
            - name: NFS_PATH
              value: /data01/nfs # exported directory on the NFS server
      volumes:
        - name: nfs-common-root
          nfs:
            server: 192.168.110.10
            path: /data01/nfs

Apply it:

su01:~/chenyc/ck/ch # kubectl apply -f storageclass.yaml

serviceaccount/nfs-common-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-common-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-common-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-common-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-common-provisioner created

storageclass.storage.k8s.io/nfs-common created

deployment.apps/nfs-common-provisioner created

On success, the following resources are visible:

su01:~/chenyc/ck/ch # kubectl get pod

NAME READY STATUS RESTARTS AGE

chi-aimeter-cluster-0-0-0 0/2 Pending 0 16m

ckman-6d8cd8fbdc-mlsmb 1/1 Running 0 5d4h

nfs-common-provisioner-594bc9d55d-clhvl 1/1 Running 0 5m51s

su01:~/chenyc/ck/ch # kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-common k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 5m57s

Now point the PVC template in the earlier YAML at this StorageClass:

volumeClaimTemplates:
  - name: volume-claim
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: "nfs-common"
      resources:
        requests:
          storage: 100Gi

Check again. The deployment now succeeds:

su01:~/chenyc/ck/ch # kubectl get pod

NAME READY STATUS RESTARTS AGE

chi-aimeter-cluster-0-0-0 2/2 Running 0 117s

ckman-6d8cd8fbdc-mlsmb 1/1 Running 0 5d4h

nfs-common-provisioner-594bc9d55d-clhvl 1/1 Running 0 10m

su01:~/chenyc/ck/ch # kubectl get sts

NAME READY AGE

chi-aimeter-cluster-0-0 1/1 2m1s

su01:~/chenyc/ck/ch # kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-e23ae072-5e95-415c-9628-3ea7fb1ed4d6 100Gi RWO Delete Bound default/volume-claim-chi-aimeter-cluster-0-0-0 nfs-common 2m4s

su01:~/chenyc/ck/ch # kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

volume-claim-chi-aimeter-cluster-0-0-0 Bound pvc-e23ae072-5e95-415c-9628-3ea7fb1ed4d6 100Gi RWO nfs-common 2m8s

On the NFS server, the export directory now contains a subdirectory that backs this volume.
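As a rough guide to what to look for on the NFS server, the sketch below computes the expected directory for the PVC above. It assumes the provisioner's default naming of `${namespace}-${pvcName}-${pvName}`, which may differ if the StorageClass customizes the path. The function name `provisioned_dir` is hypothetical.

```python
def provisioned_dir(nfs_root, namespace, pvc_name, pv_name):
    """Expected backing directory, ASSUMING the default <namespace>-<pvc>-<pv> naming."""
    return f"{nfs_root.rstrip('/')}/{namespace}-{pvc_name}-{pv_name}"


path = provisioned_dir(
    "/data01/nfs",
    "default",
    "volume-claim-chi-aimeter-cluster-0-0-0",
    "pvc-e23ae072-5e95-415c-9628-3ea7fb1ed4d6",
)
print(path)
```

Listing `/data01/nfs` on the NFS server and comparing it with this path is a quick way to confirm that the volume was provisioned where expected.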

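ZooKeeper deployment

A ClickHouse cluster depends on ZooKeeper. The clickhouse-operator project helpfully provides ZooKeeper manifests for both PV-backed and emptyDir-backed deployments, each in a single-node and a three-node variant.

Here we use the single-node persistent variant: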

# Setup Service to provide access to Zookeeper for clients

apiVersion: v1

kind: Service

metadata:

# DNS would be like zookeeper.zoons

name: zookeeper

labels:

app: zookeeper

spec:

type: NodePort

ports:

- port: 2181

name: client

- port: 7000

name: prometheus

selector:

app: zookeeper

what: node

---

# Setup Headless Service for StatefulSet

apiVersion: v1

kind: Service

metadata:

# DNS would be like zookeeper-0.zookeepers.etc

name: zookeepers

labels:

app: zookeeper

spec:

ports:

- port: 2888

name: server

- port: 3888

name: leader-election

clusterIP: None

selector:

app: zookeeper

what: node

---

# Setup max number of unavailable pods in StatefulSet

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:

name: zookeeper-pod-disruption-budget

spec:

selector:

matchLabels:

app: zookeeper

maxUnavailable: 1

---

# Setup Zookeeper StatefulSet

# Possible params:

# 1. replicas

# 2. memory

# 3. cpu

# 4. storage

# 5. storageClassName

# 6. user to run app

apiVersion: apps/v1

kind: StatefulSet

metadata:

# nodes would be named as zookeeper-0, zookeeper-1, zookeeper-2

name: zookeeper

labels:

app: zookeeper

spec:

selector:

matchLabels:

app: zookeeper

serviceName: zookeepers

replicas: 1

updateStrategy:

type: RollingUpdate

podManagementPolicy: OrderedReady

template:

metadata:

labels:

app: zookeeper

what: node

annotations:

prometheus.io/port: '7000'

prometheus.io/scrape: 'true'

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: "app"

operator: In

values:

- zookeeper

# TODO think about multi-AZ EKS

# topologyKey: topology.kubernetes.io/zone

topologyKey: "kubernetes.io/hostname"

containers:

- name: kubernetes-zookeeper

imagePullPolicy: IfNotPresent

image: "docker.io/zookeeper:3.8.1"

resources:

requests:

memory: "512M"

cpu: "1"

limits:

memory: "4Gi"

cpu: "2"

ports:

- containerPort: 2181

name: client

- containerPort: 2888

name: server

- containerPort: 3888

name: leader-election

- containerPort: 7000

name: prometheus

env:

- name: SERVERS

value: "1"

# See those links for proper startup settings:

# https://github.com/kow3ns/kubernetes-zookeeper/blob/master/docker/scripts/start-zookeeper

# https://clickhouse.yandex/docs/en/operations/tips/#zookeeper

# https://github.com/ClickHouse/ClickHouse/issues/11781

command:

- bash

- -x

- -c

- |

HOST=`hostname -s` &&

DOMAIN=`hostname -d` &&

CLIENT_PORT=2181 &&

SERVER_PORT=2888 &&

ELECTION_PORT=3888 &&

PROMETHEUS_PORT=7000 &&

ZOO_DATA_DIR=/var/lib/zookeeper/data &&

ZOO_DATA_LOG_DIR=/var/lib/zookeeper/datalog &&

{

echo "clientPort=${CLIENT_PORT}"

echo 'tickTime=2000'

echo 'initLimit=300'

echo 'syncLimit=10'

echo 'maxClientCnxns=2000'

echo 'maxTimeToWaitForEpoch=2000'

echo 'maxSessionTimeout=60000000'

echo "dataDir=${ZOO_DATA_DIR}"

echo "dataLogDir=${ZOO_DATA_LOG_DIR}"

echo 'autopurge.snapRetainCount=10'

echo 'autopurge.purgeInterval=1'

echo 'preAllocSize=131072'

echo 'snapCount=3000000'

echo 'leaderServes=yes'

echo 'standaloneEnabled=false'

echo '4lw.commands.whitelist=*'

echo 'metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider'

echo "metricsProvider.httpPort=${PROMETHEUS_PORT}"

echo "skipACL=true"

echo "fastleader.maxNotificationInterval=10000"

} > /conf/zoo.cfg &&

{

echo "zookeeper.root.logger=CONSOLE"

echo "zookeeper.console.threshold=INFO"

echo "log4j.rootLogger=\${zookeeper.root.logger}"

echo "log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender"

echo "log4j.appender.CONSOLE.Threshold=\${zookeeper.console.threshold}"

echo "log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout"

echo "log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} - %-5p [%t:%C{1}@%L] - %m%n"

} > /conf/log4j.properties &&

echo 'JVMFLAGS="-Xms128M -Xmx4G -XX:ActiveProcessorCount=8 -XX:+AlwaysPreTouch -Djute.maxbuffer=8388608 -XX:MaxGCPauseMillis=50"' > /conf/java.env &&

if [[ $HOST =~ (.*)-([0-9]+)$ ]]; then

NAME=${BASH_REMATCH[1]} &&

ORD=${BASH_REMATCH[2]};

else

echo "Failed to parse name and ordinal of Pod" &&

exit 1;

fi &&

mkdir -pv ${ZOO_DATA_DIR} &&

mkdir -pv ${ZOO_DATA_LOG_DIR} &&

whoami &&

chown -Rv zookeeper "$ZOO_DATA_DIR" "$ZOO_DATA_LOG_DIR" &&

export MY_ID=$((ORD+1)) &&

echo $MY_ID > $ZOO_DATA_DIR/myid &&

for (( i=1; i<=$SERVERS; i++ )); do

echo "server.$i=$NAME-$((i-1)).$DOMAIN:$SERVER_PORT:$ELECTION_PORT" >> /conf/zoo.cfg;

done &&

if [[ $SERVERS -eq 1 ]]; then

echo "group.1=1" >> /conf/zoo.cfg;

else

echo "group.1=1:2:3" >> /conf/zoo.cfg;

fi &&

for (( i=1; i<=$SERVERS; i++ )); do

WEIGHT=1

if [[ $i == 1 ]]; then

WEIGHT=10

fi

echo "weight.$i=$WEIGHT" >> /conf/zoo.cfg;

done &&

zkServer.sh start-foreground

readinessProbe:

exec:

command:

- bash

- -c

- '

IFS=;

MNTR=$(exec 3<>/dev/tcp/127.0.0.1/2181 ; printf "mntr" >&3 ; tee <&3; exec 3<&- ;);

while [[ "$MNTR" == "This ZooKeeper instance is not currently serving requests" ]];

do

echo "wait mntr works";

sleep 1;

MNTR=$(exec 3<>/dev/tcp/127.0.0.1/2181 ; printf "mntr" >&3 ; tee <&3; exec 3<&- ;);

done;

STATE=$(echo -e $MNTR | grep zk_server_state | cut -d " " -f 2);

if [[ "$STATE" =~ "leader" ]]; then

echo "check leader state";

SYNCED_FOLLOWERS=$(echo -e $MNTR | grep zk_synced_followers | awk -F"[[:space:]]+" "{print \$2}" | cut -d "." -f 1);

if [[ "$SYNCED_FOLLOWERS" != "0" ]]; then

./bin/zkCli.sh ls /;

exit $?;

else

exit 0;

fi;

elif [[ "$STATE" =~ "follower" ]]; then

echo "check follower state";

PEER_STATE=$(echo -e $MNTR | grep zk_peer_state);

if [[ "$PEER_STATE" =~ "following - broadcast" ]]; then

./bin/zkCli.sh ls /;

exit $?;

else

exit 1;

fi;

else

exit 1;

fi

'

initialDelaySeconds: 10

periodSeconds: 60

timeoutSeconds: 60

livenessProbe:

exec:

command:

- bash

- -xc

- 'date && OK=$(exec 3<>/dev/tcp/127.0.0.1/2181 ; printf "ruok" >&3 ; IFS=; tee <&3; exec 3<&- ;); if [[ "$OK" == "imok" ]]; then exit 0; else exit 1; fi'

initialDelaySeconds: 10

periodSeconds: 30

timeoutSeconds: 5

volumeMounts:

- name: datadir-volume

mountPath: /var/lib/zookeeper

# Run as a non-privileged user

securityContext:

runAsUser: 1000

fsGroup: 1000

volumeClaimTemplates:

- metadata:

name: datadir-volume

spec:

accessModes:

- ReadWriteOnce

storageClassName: "nfs-common"

resources:

requests:

storage: 25Gi

Once the pod status is Running, the deployment has succeeded.

su01:~/chenyc/ck/ch # kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
chi-aimeter-cluster-0-0-0                 2/2     Running   0          8m9s
ckman-6d8cd8fbdc-mlsmb                    1/1     Running   0          5d5h
nfs-common-provisioner-594bc9d55d-clhvl   1/1     Running   0          16m
zookeeper-0                               1/1     Running   0          69s

ClickHouse cluster deployment

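With ZooKeeper deployed, we can start deploying the ClickHouse cluster.

The cluster configuration is largely the same as the single-node configuration, with two things to watch:

- ZooKeeper configuration

- Cluster layout (shards and replicas)

Next, we create a cluster with 2 shards and 2 replicas per shard (4 nodes in total):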

apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
  name: "ck-cluster"
spec:
  defaults:
    templates:
      serviceTemplate: service-template
      podTemplate: pod-template
      dataVolumeClaimTemplate: volume-claim
      logVolumeClaimTemplate: volume-claim
  configuration:
    zookeeper:
      nodes:
        - host: zookeeper-0.zookeepers.default.svc.cluster.local
          port: 2181
    clusters:
      - name: "cktest"
        layout:
          shardsCount: 2
          replicasCount: 2
  templates:
    serviceTemplates:
      - name: service-template
        generateName: chendpoint-{chi}
        spec:
          ports:
            # Note: 38123 and 39000 are outside the default NodePort range
            # (30000-32767), so this assumes the range was extended on the API server.
            - name: http
              port: 8123
              nodePort: 38123
              targetPort: 8123
            - name: tcp
              port: 9000
              nodePort: 39000
              targetPort: 9000
          type: NodePort
    podTemplates:
      - name: pod-template
        spec:
          containers:
            - name: clickhouse
              imagePullPolicy: Always
              image: yandex/clickhouse-server:latest
              volumeMounts:
                - name: volume-claim
                  mountPath: /var/lib/clickhouse
                - name: volume-claim
                  mountPath: /var/log/clickhouse-server
              resources:
                limits:
                  memory: "1Gi"
                  cpu: "1"
                requests:
                  memory: "1Gi"
                  cpu: "1"
    volumeClaimTemplates:
      - name: volume-claim
        spec:
          accessModes:
            - ReadWriteOnce
          storageClassName: "nfs-common"
          resources:
            requests:
              storage: 100Gi

Creating the cluster is fairly slow, so we wait until all nodes have been created.

su01:~/chenyc/ck/ch # kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
chi-ck-cluster-cktest-0-0-0               2/2     Running   0          2m29s
chi-ck-cluster-cktest-0-1-0               2/2     Running   0          109s
chi-ck-cluster-cktest-1-0-0               2/2     Running   0          68s
chi-ck-cluster-cktest-1-1-0               2/2     Running   0          27s
ckman-6d8cd8fbdc-mlsmb                    1/1     Running   0          5d5h
nfs-common-provisioner-594bc9d55d-clhvl   1/1     Running   0          25m
zookeeper-0                               1/1     Running   0          10m

View the generated configuration file:

su01:~/chenyc/ck/ch # kubectl exec -it chi-ck-cluster-cktest-0-0-0 -- cat /etc/clickhouse-server/config.d/chop-generated-remote_servers.xml

Defaulted container "clickhouse" out of: clickhouse, clickhouse-log
<yandex>
  <remote_servers>
    <!-- User-specified clusters -->
    <cktest>
      <shard>
        <internal_replication>True</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-0-0</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
        <replica>
          <host>chi-ck-cluster-cktest-0-1</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
      <shard>
        <internal_replication>True</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-1-0</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
        <replica>
          <host>chi-ck-cluster-cktest-1-1</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
    </cktest>
    <!-- Autogenerated clusters -->
    <all-replicated>
      <shard>
        <internal_replication>true</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-0-0</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
        <replica>
          <host>chi-ck-cluster-cktest-0-1</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
        <replica>
          <host>chi-ck-cluster-cktest-1-0</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
        <replica>
          <host>chi-ck-cluster-cktest-1-1</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
    </all-replicated>
    <all-sharded>
      <shard>
        <internal_replication>false</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-0-0</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
      <shard>
        <internal_replication>false</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-0-1</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
      <shard>
        <internal_replication>false</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-1-0</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
      <shard>
        <internal_replication>false</internal_replication>
        <replica>
          <host>chi-ck-cluster-cktest-1-1</host>
          <port>9000</port>
          <secure>0</secure>
        </replica>
      </shard>
    </all-sharded>
  </remote_servers>

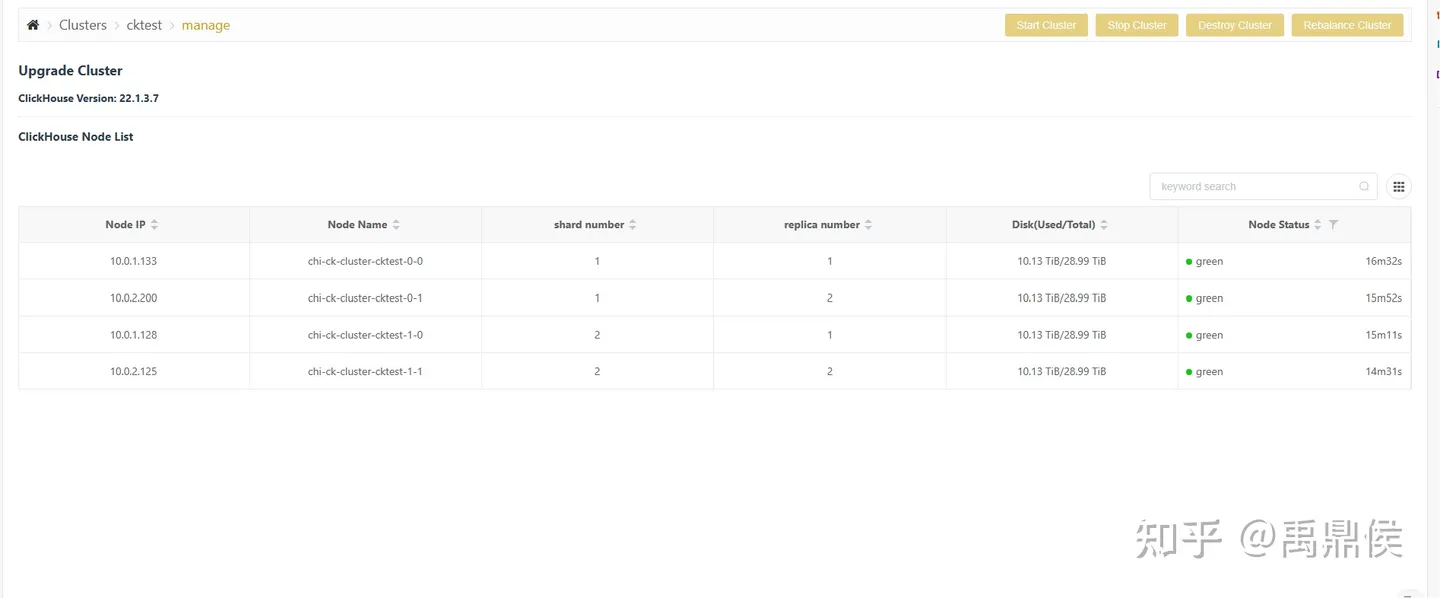

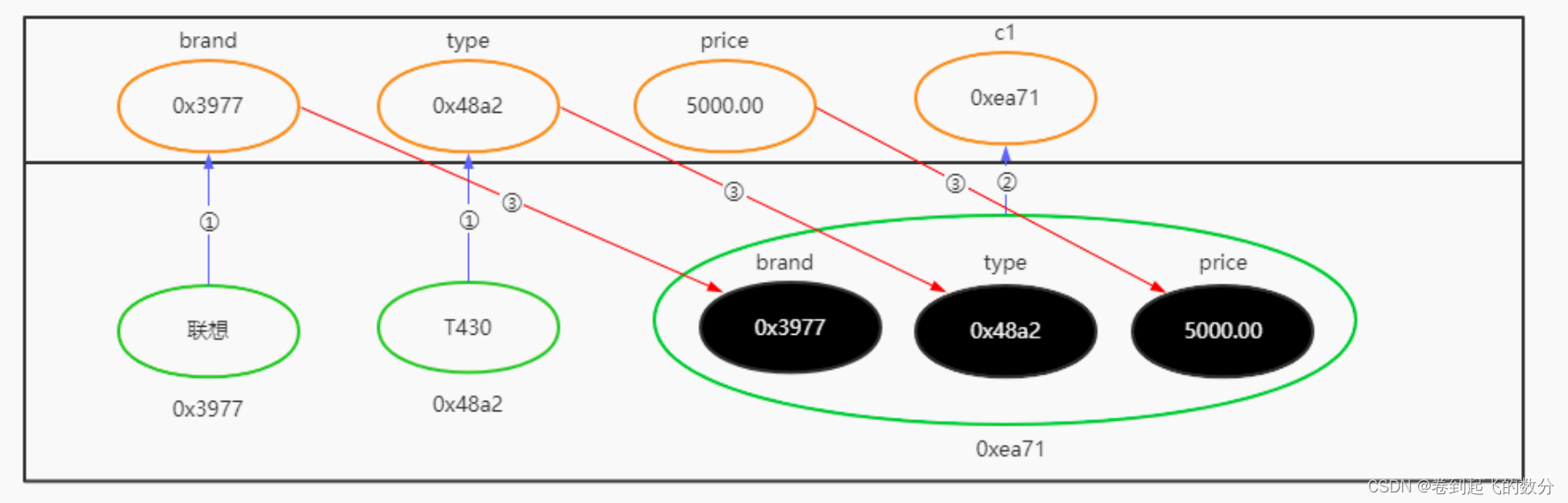

</yandex>查看集群信息:

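Let's create tables to verify the cluster:

Create the distributed table:

Insert data:

Query the local table:

Query the distributed table: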
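The statements behind these verification steps are not reproduced in the text, so what follows is only a minimal sketch of what they might look like. The table names `t_local` and `t_dist` and the column layout are hypothetical. The cluster name `cktest` comes from the manifest, and the `{cluster}`, `{shard}` and `{replica}` macros are assumed to be defined on every node (clickhouse-operator normally generates them, but it is worth checking `system.macros` first).

```shell
# Sketch only: prints SQL that exercises a sharded, replicated cluster.
# Table names and columns are hypothetical; "cktest" is the cluster name from the manifest.
verification_sql() {
  cluster="${1:-cktest}"
  cat <<EOF
-- Local replicated table, created on every node of the cluster.
CREATE TABLE IF NOT EXISTS default.t_local ON CLUSTER ${cluster}
(
    id  UInt64,
    msg String
)
ENGINE = ReplicatedMergeTree('/clickhouse/tables/{cluster}/{shard}/default/t_local', '{replica}')
ORDER BY id;

-- Distributed table that fans reads and writes out to t_local on each shard.
CREATE TABLE IF NOT EXISTS default.t_dist ON CLUSTER ${cluster}
AS default.t_local
ENGINE = Distributed(${cluster}, default, t_local, rand());

-- Insert through the distributed table, then compare local and global counts.
INSERT INTO default.t_dist SELECT number, toString(number) FROM numbers(1000);
SELECT count() FROM default.t_local;
SELECT count() FROM default.t_dist;
EOF
}

# Assumed usage (needs a running cluster, so it is left commented out):
# verification_sql | kubectl exec -i chi-ck-cluster-cktest-0-0-0 -- clickhouse-client --multiquery
```

With two shards and `rand()` as the sharding key, the local count on any one node should come out at roughly half of the distributed count once the asynchronous distributed inserts have been flushed.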

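Caveats

When clickhouse-operator deploys a ClickHouse cluster, it effectively pins pods via nodeName. If the target node does not have enough resources, pod creation fails outright instead of the pod being scheduled onto another node.

Integration with ckman

Thoughts on containerizing ClickHouse

Supporting hostname access

One purpose of managing the cluster with ckman is that ckman can export the cluster configuration directly to components such as clickhouse_sinker. For that, we need the exact IP of each node rather than the 127.0.0.1 returned by system.clusters.

The problem with IPs is that they drift when a node restarts. In the worst case the whole cluster restarts, every IP changes, and the cluster becomes unreachable, which is clearly not what we want.

It is therefore better to access nodes by hostname. The issue with hostnames is DNS resolution: inside the k8s cluster this is not much of a problem, but from outside the cluster the names cannot be resolved.

Of course, it is also reasonable for services in an in-cloud environment to be accessible only from within the cloud. (ckman needs to support this first.)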
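As a rough illustration of hostname-based addressing, the sketch below derives a stable in-cluster DNS name for every replica. It assumes the per-replica Service names follow the `chi-<installation>-<cluster>-<shard>-<replica>` pattern seen in the generated `remote_servers` file, that everything lives in the `default` namespace, and that the cluster domain is `cluster.local`. The function name `replica_hosts` is made up for this example.

```shell
# Sketch only: list the in-cluster DNS names of all replicas.
# Arguments: installation name, cluster name, shard count, replicas per shard,
# then optionally namespace and cluster domain (both are assumptions by default).
replica_hosts() {
  chi="$1"; cluster="$2"; shards="$3"; replicas="$4"
  namespace="${5:-default}"; domain="${6:-cluster.local}"
  s=0
  while [ "$s" -lt "$shards" ]; do
    r=0
    while [ "$r" -lt "$replicas" ]; do
      # One Service per replica, addressed by its fully qualified name.
      echo "chi-${chi}-${cluster}-${s}-${r}.${namespace}.svc.${domain}"
      r=$((r + 1))
    done
    s=$((s + 1))
  done
}

replica_hosts ck-cluster cktest 2 2
```

Names built this way stay valid across pod restarts, which is exactly what IP-based addressing cannot guarantee, but they still resolve only from inside the k8s cluster.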

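Exposing the cluster service externally

In keeping with the principle that in-cloud services are accessed only from within the cloud, the ClickHouse service exposes just one NodePort to the outside, which routes to a random cluster node. ckman is the entry point for all operations.

In-cloud cluster operations

This covers scaling the cluster out and in, upgrades, and so on. In theory, all of these only require modifying the YAML.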
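For instance, scaling out could come down to changing `shardsCount` in the manifest and re-applying it. The sketch below builds an equivalent JSON patch instead. It assumes the installation is named `ck-cluster`, that the cluster to resize is the first entry under `spec.configuration.clusters`, and that the `chi` short name for ClickHouseInstallation is registered. The helper name `shards_patch` is hypothetical.

```shell
# Sketch only: emit a JSON patch that sets shardsCount on the first cluster.
shards_patch() {
  shards="$1"
  # Reject anything that is not a plain non-negative integer.
  case "$shards" in
    ''|*[!0-9]*) echo "shard count must be a non-negative integer" >&2; return 1 ;;
  esac
  printf '[{"op":"replace","path":"/spec/configuration/clusters/0/layout/shardsCount","value":%s}]\n' "$shards"
}

shards_patch 3

# Assumed usage against a live cluster (left commented out):
# kubectl patch chi ck-cluster --type=json -p "$(shards_patch 3)"
```

Adding a shard this way does not move existing data onto it, so rebalancing remains a separate step.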

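Integrating ClickHouse containerization into ckman

ckman is positioned as an operations tool for managing and monitoring ClickHouse clusters. Its support for environments outside the cloud is already fairly complete, so supporting in-cloud deployment is a natural next responsibility.

Accordingly, integrating containerized ClickHouse deployment into ckman is a top priority on the ckman roadmap.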
