1.EndType

package com.example.utils.wordfilter;

/**

* End-type definition

*/

public enum EndType {

/**

* More characters follow / end of a word

*/

HAS_NEXT, IS_END

}

2.WordType

package com.example.utils.wordfilter;

/**

* Word type

*/

public enum WordType {

/**

* Blacklist / whitelist

*/

BLACK, WHITE

}

3.FlagIndex

package com.example.utils.wordfilter;

import java.util.List;

/**

* Sensitive-word match marker

*/

public class FlagIndex {

/**

* Whether a word was matched

*/

private boolean flag;

/**

* Whether the matched word is a whitelist word

*/

private boolean isWhiteWord;

/**

* Indices of the matched characters

*/

private List<Integer> index;

public boolean isFlag() {

return flag;

}

public void setFlag(boolean flag) {

this.flag = flag;

}

public List<Integer> getIndex() {

return index;

}

public void setIndex(List<Integer> index) {

this.index = index;

}

public boolean isWhiteWord() {

return isWhiteWord;

}

public void setWhiteWord(boolean whiteWord) {

isWhiteWord = whiteWord;

}

}
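The two enums and `FlagIndex` above describe a DFA stored as a trie: each node either has further characters or ends a word, and an end node is marked as black or white. As a rough illustration only (the implementation in the next section uses raw nested `HashMap`s with string flags instead), the same structure could be sketched with a typed node. The names `TrieSketch`, `Node`, `find`, and `longestMatch` are hypothetical and are not part of the original code.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class TrieSketch {

    /** Hypothetical typed node; the article keeps these fields as map entries. */
    public static final class Node {
        final Map<Character, Node> children = new HashMap<>();
        boolean end;   // plays the role of EndType.IS_END
        boolean white; // plays the role of WordType.WHITE
    }

    private final Node root = new Node();

    /** Adds every word, marking the node of its last character as an end node. */
    public void addWords(List<String> words, boolean white) {
        for (String word : words) {
            if (word.isEmpty()) {
                continue;
            }
            Node node = root;
            for (char c : word.toCharArray()) {
                node = node.children.computeIfAbsent(c, k -> new Node());
            }
            node.end = true;
            node.white = white;
        }
    }

    /** Follows the characters of word from the root; returns null if the path is missing. */
    public Node find(String word) {
        Node node = root;
        for (int i = 0; i < word.length() && node != null; i++) {
            node = node.children.get(word.charAt(i));
        }
        return node;
    }

    /** Length of the longest dictionary word that starts at begin, or 0 if none does. */
    public int longestMatch(String text, int begin) {
        Node node = root;
        int longest = 0;
        for (int i = begin; i < text.length(); i++) {
            node = node.children.get(text.charAt(i));
            if (node == null) {
                break;
            }
            if (node.end) {
                longest = i - begin + 1;
            }
        }
        return longest;
    }

    public static void main(String[] args) {
        TrieSketch trie = new TrieSketch();
        trie.addWords(Arrays.asList("游行"), false);    // blacklist word
        trie.addWords(Arrays.asList("上游行业"), true);  // whitelist word
        System.out.println(trie.longestMatch("上游行业发展", 0)); // 4
        System.out.println(trie.longestMatch("上游行业发展", 1)); // 2
        System.out.println(trie.find("上游行业").white);          // true
        System.out.println(trie.find("上游").end);                // false
    }
}
```

A typed node avoids the casts and string comparisons that raw maps require, at the cost of a small extra class; the lookup logic is otherwise the same idea.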

4.WordContext

package com.example.utils.wordfilter;

import java.io.BufferedReader;

import java.io.InputStreamReader;

import java.util.*;

/**

* Dictionary context

* Initializes the sensitive-word dictionary: adds the words to a HashMap and builds the DFA model

*/

@SuppressWarnings({"rawtypes", "unchecked"})

public class WordContext {

/**

* Sensitive-word dictionary

*/

private final Map wordMap = new HashMap();

/**

* Whether the dictionary has been initialized

*/

private boolean init;

/**

* Blacklist file (classpath resource)

*/

private final String blackList;

/**

* Whitelist file (classpath resource)

*/

private final String whiteList;

public WordContext() {

this.blackList = "/blacklist.txt";

this.whiteList = "/whitelist.txt";

initKeyWord();

}

public WordContext(String blackList, String whiteList) {

this.blackList = blackList;

this.whiteList = whiteList;

initKeyWord();

}

/**

* Returns the initialized sensitive-word dictionary

*

* @return the sensitive-word dictionary

*/

public Map getWordMap() {

return wordMap;

}

/**

* Initialization

*/

private synchronized void initKeyWord() {

try {

if (!init) {

// Add the blacklist words to the HashMap

addWord(readWordFile(blackList), WordType.BLACK);

// Add the whitelist (non-sensitive) words to the same HashMap

addWord(readWordFile(whiteList), WordType.WHITE);

}

init = true;

} catch (Exception e) {

throw new RuntimeException(e);

}

}

/**

* Adds the given words to the dictionary, building the DFA model as nested maps:<br>

* 中 = { isEnd = 0, 国 = {<br>

* isEnd = 1, 人 = { isEnd = 0, 民 = { isEnd = 1 } }, 男 = { isEnd = 0, 人 = { isEnd = 1 }

* } } }, 五 = { isEnd = 0, 星 = { isEnd = 0, 红 = { isEnd = 0, 旗 = { isEnd = 1 } } } }

*/

public void addWord(Iterable<String> wordList, WordType wordType) {

Map nowMap;

Map<String, String> newWorMap;

// Iterate over the word list

for (String key : wordList) {

nowMap = wordMap;

for (int i = 0; i < key.length(); i++) {

// Current character of the word

char keyChar = key.charAt(i);

// Look up the child node for this character

Object wordMap = nowMap.get(keyChar);

// The node already exists: descend into it

if (wordMap != null) {

nowMap = (Map) wordMap;

} else {

// Otherwise create a new map with isEnd set to 0, because this is not the last character

newWorMap = new HashMap<>(4);

// Not the last character

newWorMap.put("isEnd", String.valueOf(EndType.HAS_NEXT.ordinal()));

nowMap.put(keyChar, newWorMap);

nowMap = newWorMap;

}

if (i == key.length() - 1) {

// Last character: mark the end of the word and record its type

nowMap.put("isEnd", String.valueOf(EndType.IS_END.ordinal()));

nowMap.put("isWhiteWord", String.valueOf(wordType.ordinal()));

}

}

}

}

/**

* Removes words from the dictionary at runtime

*

* @param wordList words to remove

* @param wordType BLACK for the blacklist, WHITE for the whitelist

*/

public void removeWord(Iterable<String> wordList, WordType wordType) {

Map nowMap;

for (String key : wordList) {

List<Map> cacheList = new ArrayList<>();

nowMap = wordMap;

for (int i = 0; i < key.length(); i++) {

char keyChar = key.charAt(i);

Object map = nowMap.get(keyChar);

if (map != null) {

nowMap = (Map) map;

cacheList.add(nowMap);

} else {

break; // this word is not in the dictionary: move on to the next word

}

if (i == key.length() - 1) {

char[] keys = key.toCharArray();

boolean cleanable = false;

char lastChar = 0;

for (int j = cacheList.size() - 1; j >= 0; j--) {

Map cacheMap = cacheList.get(j);

if (j == cacheList.size() - 1) {

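if (String.valueOf(WordType.BLACK.ordinal()).equals(cacheMap.get("isWhiteWord"))) {

if (wordType == WordType.WHITE) {

break; // stored as a blacklist word: leave it and move on to the next word

}

}

if (String.valueOf(WordType.WHITE.ordinal()).equals(cacheMap.get("isWhiteWord"))) {

if (wordType == WordType.BLACK) {

break; // stored as a whitelist word: leave it and move on to the next word

}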

}

cacheMap.remove("isWhiteWord");

cacheMap.remove("isEnd");

if (cacheMap.size() == 0) {

cleanable = true;

continue;

}

}

if (cleanable) {

Object isEnd = cacheMap.get("isEnd");

if (String.valueOf(EndType.IS_END.ordinal()).equals(isEnd)) {

cleanable = false;

}

cacheMap.remove(lastChar);

}

lastChar = keys[j];

}

if (cleanable) {

wordMap.remove(lastChar);

}

}

}

}

}

/**

* Reads the word file and returns its lines as a set

*/

private Set<String> readWordFile(String file) throws Exception {

Set<String> set;

// Character encoding

String encoding = "UTF-8";

try (InputStreamReader read = new InputStreamReader(

this.getClass().getResourceAsStream(file), encoding)) {

set = new HashSet<>();

BufferedReader bufferedReader = new BufferedReader(read);

String txt;

// Read the file line by line into the set

while ((txt = bufferedReader.readLine()) != null) {

set.add(txt);

}

}

// The reader is closed automatically by try-with-resources

return set;

}

}

5.WordFilter

package com.example.utils.wordfilter;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

import java.util.Objects;

/**

* Sensitive-word filter

*/

@SuppressWarnings("rawtypes")

public class WordFilter {

/**

* Sensitive-word dictionary

*/

private final Map wordMap;

/**

* Constructor

*/

public WordFilter(WordContext context) {

this.wordMap = context.getWordMap();

}

/**

* Replaces sensitive words

*

* @param text input text

*/

public String replace(final String text) {

return replace(text, 0, '*');

}

/**

* Replaces sensitive words

*

* @param text input text

* @param symbol replacement character

*/

public String replace(final String text, final char symbol) {

return replace(text, 0, symbol);

}

/**

* Replaces sensitive words

*

* @param text input text

* @param skip skip distance

* @param symbol replacement character

*/

public String replace(final String text, final int skip, final char symbol) {

char[] charset = text.toCharArray();

for (int i = 0; i < charset.length; i++) {

FlagIndex fi = getFlagIndex(charset, i, skip);

if (fi.isFlag()) {

if (!fi.isWhiteWord()) {

for (int j : fi.getIndex()) {

charset[j] = symbol;

}

} else {

i += fi.getIndex().size() - 1;

}

}

}

return new String(charset);

}

/**

* Checks whether the text contains a sensitive word

*

* @param text input text

*/

public boolean include(final String text) {

return include(text, 0);

}

/**

* Checks whether the text contains a sensitive word

*

* @param text input text

* @param skip skip distance

*/

public boolean include(final String text, final int skip) {

boolean include = false;

char[] charset = text.toCharArray();

for (int i = 0; i < charset.length; i++) {

FlagIndex fi = getFlagIndex(charset, i, skip);

if(fi.isFlag()) {

if (fi.isWhiteWord()) {

i += fi.getIndex().size() - 1;

} else {

include = true;

break;

}

}

}

return include;

}

/**

* Counts the sensitive words in the text

*

* @param text input text

*/

public int wordCount(final String text) {

return wordCount(text, 0);

}

/**

* Counts the sensitive words in the text

*

* @param text input text

* @param skip skip distance

*/

public int wordCount(final String text, final int skip) {

int count = 0;

char[] charset = text.toCharArray();

for (int i = 0; i < charset.length; i++) {

FlagIndex fi = getFlagIndex(charset, i, skip);

if (fi.isFlag()) {

if(fi.isWhiteWord()) {

i += fi.getIndex().size() - 1;

} else {

count++;

}

}

}

return count;

}

/**

* Lists the sensitive words found in the text

*

* @param text input text

*/

public List<String> wordList(final String text) {

return wordList(text, 0);

}

/**

* Lists the sensitive words found in the text

*

* @param text input text

* @param skip skip distance

*/

public List<String> wordList(final String text, final int skip) {

List<String> wordList = new ArrayList<>();

char[] charset = text.toCharArray();

for (int i = 0; i < charset.length; i++) {

FlagIndex fi = getFlagIndex(charset, i, skip);

if (fi.isFlag()) {

if(fi.isWhiteWord()) {

i += fi.getIndex().size() - 1;

} else {

StringBuilder builder = new StringBuilder();

for (int j : fi.getIndex()) {

char word = text.charAt(j);

builder.append(word);

}

wordList.add(builder.toString());

}

}

}

return wordList;

}

/**

* Finds the match that starts at the given position

*

* @param charset input text

* @param begin index at which detection starts

* @param skip skip distance

*/

private FlagIndex getFlagIndex(final char[] charset, final int begin, final int skip) {

FlagIndex fi = new FlagIndex();

Map current = wordMap;

boolean flag = false;

int count = 0;

List<Integer> index = new ArrayList<>();

for (int i = begin; i < charset.length; i++) {

char word = charset[i];

Map mapTree = (Map) current.get(word);

if (count > skip || (i == begin && Objects.isNull(mapTree))) {

break;

}

if (Objects.nonNull(mapTree)) {

current = mapTree;

count = 0;

index.add(i);

} else {

count++;

if (flag && count > skip) {

break;

}

}

if ("1".equals(current.get("isEnd"))) {

flag = true;

}

if ("1".equals(current.get("isWhiteWord"))) {

fi.setWhiteWord(true);

break;

}

}

fi.setFlag(flag);

fi.setIndex(index);

return fi;

}

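}

Note: skip distance

The filter can skip up to a given number of unrelated characters between the characters of a sensitive word. The larger the distance, the stricter the filtering and the lower the efficiency, so set it according to your needs. Taking "紧急" as the sensitive word, the distances work as follows, and so on for larger values:

0 matches 紧急

1 matches 紧急 and 紧x急 (for example 不紧不急)

2 matches 紧急, 紧x急, and 紧xx急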
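To make the skip distance concrete, here is a minimal sketch that checks a single fixed word against a text, allowing at most `skip` unrelated characters between two consecutive characters of the word. It is a simplification for illustration, not the `getFlagIndex` method above (it uses no trie and no whitelist), and the names `SkipDistanceSketch`, `matchesAt`, and `contains` are hypothetical.

```java
public class SkipDistanceSketch {

    /**
     * Returns true if word occurs in text starting exactly at begin, with at most
     * skip unrelated characters between two consecutive characters of the word.
     */
    public static boolean matchesAt(String text, int begin, String word, int skip) {
        if (word.isEmpty() || begin >= text.length()
                || text.charAt(begin) != word.charAt(0)) {
            return false;
        }
        int matched = 1; // characters of word matched so far
        int gap = 0;     // unrelated characters seen since the last matched one
        for (int i = begin + 1; i < text.length() && matched < word.length(); i++) {
            if (text.charAt(i) == word.charAt(matched)) {
                matched++;
                gap = 0;
            } else if (++gap > skip) {
                return false; // too many unrelated characters in a row
            }
        }
        return matched == word.length();
    }

    /** Returns true if word matches at any start position in text. */
    public static boolean contains(String text, String word, int skip) {
        for (int i = 0; i < text.length(); i++) {
            if (matchesAt(text, i, word, skip)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String word = "紧急";
        System.out.println(contains("紧急", word, 0));     // true
        System.out.println(contains("不紧不急", word, 0)); // false
        System.out.println(contains("不紧不急", word, 1)); // true
        System.out.println(contains("紧xx急", word, 1));   // false
        System.out.println(contains("紧xx急", word, 2));   // true
    }
}
```

The gap counter resets after every matched character, so the limit applies between neighbouring characters of the word rather than to the match as a whole.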

6.Functional test

package com.example.wordfilter;

import com.example.utils.wordfilter.WordContext;

import com.example.utils.wordfilter.WordFilter;

import com.example.utils.wordfilter.WordType;

import org.junit.Test;

import org.springframework.boot.test.context.SpringBootTest;

import java.util.Collections;

/**

* Unit tests for the DFA sensitive-word filter

*/

@SpringBootTest

public class DFATest {

/**

* Dictionary context

*/

private final WordContext context = new WordContext();

private final WordFilter filter = new WordFilter(context);

/**

* Tests replacing sensitive words

*/

@Test

public void replace() {

String text = "利于上游行业发展的政策逐渐发布";

System.out.println(filter.replace(text)); //利于上游行业发展的政策逐渐发布

context.removeWord(Collections.singletonList("上游行业"), WordType.WHITE);

System.out.println(filter.replace(text)); //利于上**业发展的政策逐渐发布

}

/**

* Tests whether the text contains a sensitive word

*/

@Test

public void include() {

String text = "利于上游行业发展的政策逐渐发布";

System.out.println(filter.include(text)); //false

context.removeWord(Collections.singletonList("上游行业"), WordType.WHITE);

System.out.println(filter.include(text)); //true

}

/**

* Counts the sensitive words

*/

@Test

public void wordCount() {

String text = "利于上游行业发展的政策逐渐发布";

System.out.println(filter.wordCount(text)); //0

context.removeWord(Collections.singletonList("上游行业"), WordType.WHITE);

System.out.println(filter.wordCount(text)); //1

}

/**

* Lists the sensitive words

*/

@Test

public void wordList() {

String text = "利于上游行业发展的政策逐渐发布";

System.out.println(filter.wordList(text)); //[]

context.removeWord(Collections.singletonList("上游行业"), WordType.WHITE);

System.out.println(filter.wordList(text)); //[游行]

}

/**

* Tests replacing sensitive words

*/

@Test

public void replace2() {

String text = "你是个笨蛋吧";

System.out.println(filter.replace(text));

}

/**

* Tests replacing sensitive words

*/

@Test

public void replace3() {

String text = "你是个日本鬼子吧";

System.out.println(filter.replace(text));

}

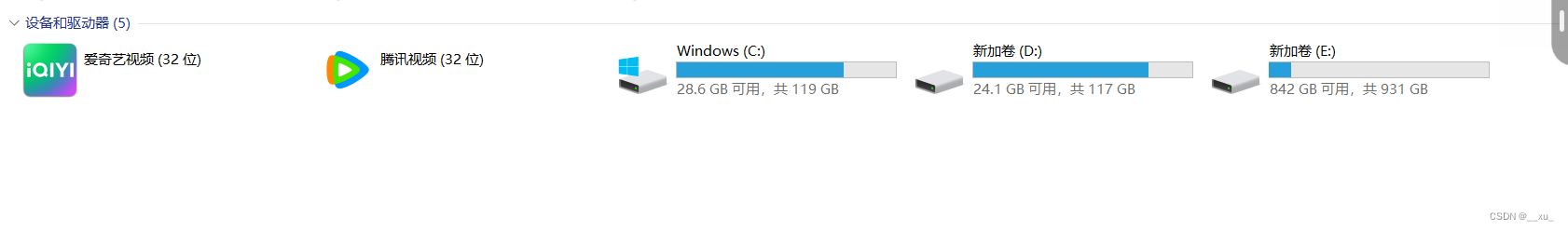

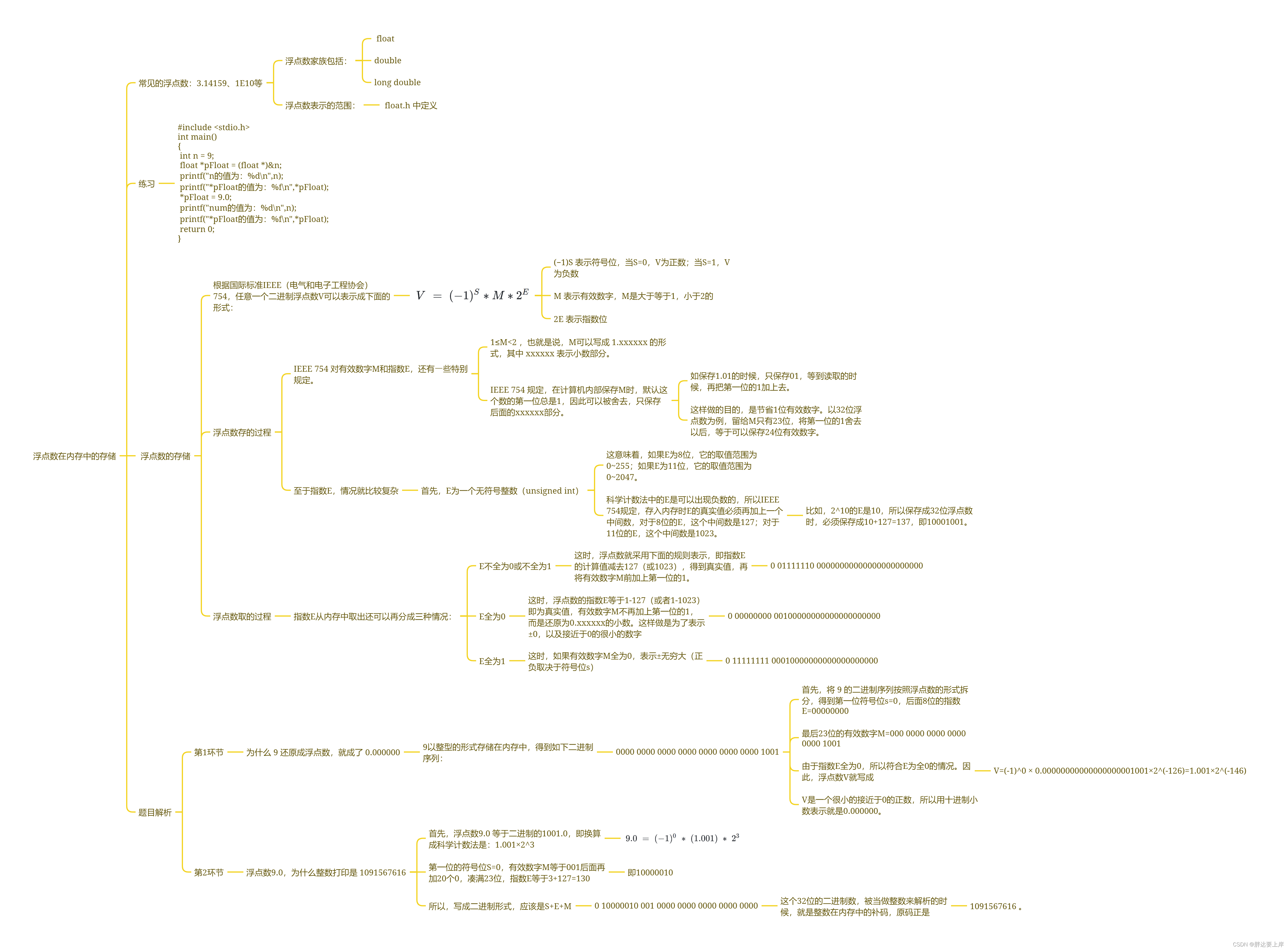

}7.工程目录
