Contents

1. Single-layer structure

2. Two-layer structure

3. Results

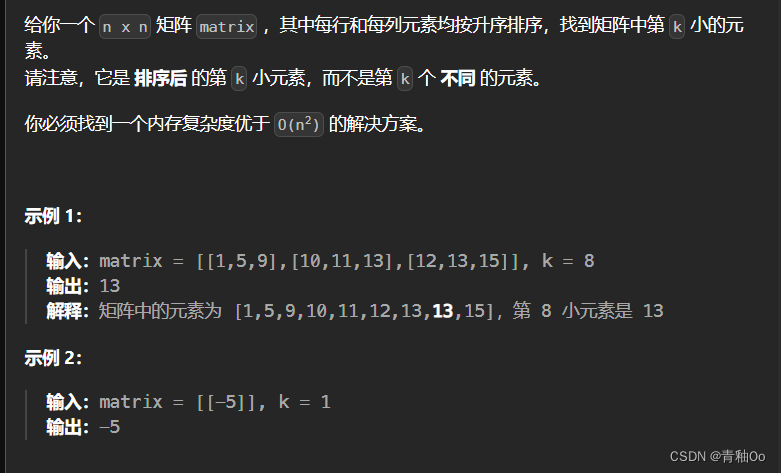

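1. Single-layer stacking structure. The candidate base learners are naive Bayes, stochastic gradient descent (SGD), random forest, decision tree, AdaBoost, GBDT, and XGBoost. Every combination of 3 of these 7 is used as the set of base learners, and the meta-learner is fixed to an MLP.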
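As a rough illustration of the setup described above, the sketch below enumerates the 3-of-7 combinations and fits a single stacking model. The synthetic dataset from `make_classification`, the reduced `n_estimators`, and the `max_iter` value are assumptions made only to keep the example small and fast; they are not part of the original experiment.

```python
import itertools

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier

# The seven candidate names used in the post; choosing 3 of 7 gives C(7, 3) triples.
candidate_names = ['nb', 'sgd', 'rf', 'dt', 'ada', 'gbdt', 'xgb']
triples = list(itertools.combinations(candidate_names, 3))
print(len(triples))

# Synthetic stand-in data (assumption: the real data come from Excel files).
X, y = make_classification(n_samples=300, n_features=10, random_state=514)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=514)

# One example triple: naive Bayes, random forest, decision tree.
estimators = [
    ('nb', GaussianNB()),
    ('rf', RandomForestClassifier(n_estimators=20, random_state=514)),
    ('dt', DecisionTreeClassifier(random_state=514)),
]

# The MLP meta-learner is trained on cross-validated predictions of the base learners.
stack = StackingClassifier(
    estimators=estimators,
    final_estimator=MLPClassifier(max_iter=500, random_state=514),
)
stack.fit(X_tr, y_tr)
test_accuracy = stack.score(X_te, y_te)
print(test_accuracy)
```

The number of triples equals the number of rows one would expect in the results table, since each triple is fitted and evaluated once.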

import itertools
import pandas as pd
from sklearn.neural_network import MLPClassifier
from sklearn.linear_model import SGDClassifier
from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier, AdaBoostClassifier
from sklearn.tree import DecisionTreeClassifier
from xgboost import XGBClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.metrics import accuracy_score, roc_auc_score, f1_score, confusion_matrix
from sklearn.ensemble import StackingClassifier

# Load the data (the file paths are left blank in the original post)
data_train_lasso = pd.read_excel()
data_validation_lasso = pd.read_excel()
data_test_lasso = pd.read_excel()

# Split each set into features (all columns but the last) and label (last column)
X_train = data_train_lasso.iloc[:, 0:-1]
y_train = data_train_lasso.iloc[:, -1]
# The validation set is loaded here but is not used in the rest of the script
X_validation = data_validation_lasso.iloc[:, 0:-1]
y_validation = data_validation_lasso.iloc[:, -1]
X_test = data_test_lasso.iloc[:, 0:-1]
y_test = data_test_lasso.iloc[:, -1]

# Define the candidate base classifiers
base_classifiers = {
    'nb': GaussianNB(),
    'sgd': SGDClassifier(random_state=514),
    'rf': RandomForestClassifier(random_state=514),
    'dt': DecisionTreeClassifier(random_state=514),
    'ada': AdaBoostClassifier(random_state=514),
    'gbdt': GradientBoostingClassifier(random_state=514),
    'xgb': XGBClassifier(random_state=514)
}

# Define the meta-classifier
meta_classifier = MLPClassifier(random_state=514)

# Generate every combination of 3 base learners (C(7, 3) = 35)
base_combinations = list(itertools.combinations(base_classifiers.keys(), 3))

# List that collects the results
results = []

# Iterate over each combination
for base_combination in base_combinations:
    # Select the base learners of the current combination
    current_classifiers = [(name, base_classifiers[name]) for name in base_combination]

    # Create the StackingClassifier (it clones the estimators before fitting)
    stacking_classifier = StackingClassifier(estimators=current_classifiers, final_estimator=meta_classifier)

    # Train the stacking classifier
    stacking_classifier.fit(X_train, y_train)

    # Predict on the test set
    y_pred = stacking_classifier.predict(X_test)

    # Evaluate performance
    accuracy_stacking = accuracy_score(y_test, y_pred)
    # Note: AUC is computed from hard labels here, so for binary labels it equals
    # (TPR + TNR) / 2; pass scores from predict_proba for a ranking-based AUC
    auc_stacking = roc_auc_score(y_test, y_pred)
    f1_stacking = f1_score(y_test, y_pred)
    tn, fp, fn, tp = confusion_matrix(y_test, y_pred).ravel()
    fpr_stacking = fp / (fp + tn)

    # Store the metrics of the current combination
    result = {
        'Classifiers': base_combination,
        'Accuracy': accuracy_stacking,
        'AUC': auc_stacking,
        'F1 Score': f1_stacking,
        'FPR': fpr_stacking
    }
    results.append(result)

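# Convert the results to a DataFrame
results_df = pd.DataFrame(results)

# Print the results
print(results_df)

# Save the results to an Excel file (the output path is left blank in the original post)
results_df.to_excel()

2. Two-layer stacking structure. The candidate base learners are again naive Bayes, stochastic gradient descent (SGD), random forest, decision tree, AdaBoost, GBDT, and XGBoost. Each layer uses a combination of 2 of these 7 as its base learners, and the meta-learner is fixed to an MLP.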
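One way to obtain two stacked layers in scikit-learn is to pass a `StackingClassifier` as the `final_estimator` of another `StackingClassifier`. The sketch below shows this nesting for a single pair of layer combinations. The synthetic data, the particular learners chosen for each layer, and the reduced `n_estimators` and `max_iter` values are assumptions for illustration only.

```python
from itertools import combinations

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier

# Choosing 2 of 7 learners per layer gives C(7, 2) options for each layer.
pairs_per_layer = len(list(combinations(range(7), 2)))
print(pairs_per_layer, pairs_per_layer ** 2)

# Synthetic stand-in data (assumption: the real data come from Excel files).
X, y = make_classification(n_samples=300, n_features=10, random_state=514)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=514)

# Second layer: two learners whose outputs feed the MLP meta-learner.
second_layer = StackingClassifier(
    estimators=[
        ('dt', DecisionTreeClassifier(random_state=514)),
        ('nb', GaussianNB()),
    ],
    final_estimator=MLPClassifier(max_iter=500, random_state=514),
)

# First layer: two learners whose cross-validated outputs feed the second layer.
two_layer = StackingClassifier(
    estimators=[
        ('rf', RandomForestClassifier(n_estimators=20, random_state=514)),
        ('nb', GaussianNB()),
    ],
    final_estimator=second_layer,
)

two_layer.fit(X_tr, y_tr)
y_pred = two_layer.predict(X_te)
print(two_layer.score(X_te, y_te))
```

With 21 pairs available per layer, an exhaustive search over both layers means 441 fits, each of which runs nested cross-validation, so the full loop is considerably slower than the single-layer one.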

from itertools import combinations
from sklearn.ensemble import RandomForestClassifier, AdaBoostClassifier, GradientBoostingClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.linear_model import SGDClassifier
from sklearn.naive_bayes import GaussianNB
from xgboost import XGBClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.ensemble import StackingClassifier
from sklearn.metrics import accuracy_score, roc_auc_score, f1_score, confusion_matrix
import pandas as pd

# Load the data (the file paths are left blank in the original post)
data_train_lasso = pd.read_excel()
data_validation_lasso = pd.read_excel()
data_test_lasso = pd.read_excel()

# Split each set into features (all columns but the last) and label (last column)
X_train = data_train_lasso.iloc[:, 0:-1]
y_train = data_train_lasso.iloc[:, -1]
# The validation set is loaded here but is not used in the rest of the script
X_validation = data_validation_lasso.iloc[:, 0:-1]
y_validation = data_validation_lasso.iloc[:, -1]
X_test = data_test_lasso.iloc[:, 0:-1]
y_test = data_test_lasso.iloc[:, -1]

# Define the candidate base learners as (name, estimator) pairs
base_classifiers = [
    ('naive_bayes', GaussianNB()),
    ('sgd', SGDClassifier(random_state=514)),
    ('random_forest', RandomForestClassifier(random_state=514)),
    ('decision_tree', DecisionTreeClassifier(random_state=514)),
    ('adaboost', AdaBoostClassifier(random_state=514)),
    ('gbdt', GradientBoostingClassifier(random_state=514)),
    ('xgboost', XGBClassifier(random_state=514))
]

# Define the meta-learner
meta_classifier = MLPClassifier(random_state=514)

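# Build a two-layer StackingClassifier for every pair of layer combinations.
# Each layer picks 2 of the 7 learners, so this runs 21 x 21 = 441 fits.
results = {'combo': [], 'accuracy': [], 'auc': [], 'f1': [], 'fpr': []}

for combo_first_layer in combinations(base_classifiers, 2):
    # Draw the second layer from all 7 learners; combinations(combo_first_layer, 2)
    # would only return the first-layer pair itself and give a single layer
    for combo_second_layer in combinations(base_classifiers, 2):
        # Second layer: two learners whose outputs feed the MLP meta-learner
        second_layer = StackingClassifier(estimators=list(combo_second_layer), final_estimator=meta_classifier)

        # First layer: two learners whose outputs feed the second layer
        stacking_classifier = StackingClassifier(estimators=list(combo_first_layer), final_estimator=second_layer)

        # Train the stacking classifier
        stacking_classifier.fit(X_train, y_train)

        # Predict on the test set
        y_pred = stacking_classifier.predict(X_test)

        # Evaluate performance
        accuracy_stacking = accuracy_score(y_test, y_pred)
        # Note: AUC is computed from hard labels here, so for binary labels it equals
        # (TPR + TNR) / 2; pass scores from predict_proba for a ranking-based AUC
        auc_stacking = roc_auc_score(y_test, y_pred)
        f1_stacking = f1_score(y_test, y_pred)
        tn, fp, fn, tp = confusion_matrix(y_test, y_pred).ravel()
        fpr_stacking = fp / (fp + tn)

        # Store the results; the key lists the first-layer names, then the second-layer names
        combo_key = (combo_first_layer[0][0], combo_first_layer[1][0], combo_second_layer[0][0], combo_second_layer[1][0])
        results['combo'].append(combo_key)
        results['accuracy'].append(accuracy_stacking)
        results['auc'].append(auc_stacking)
        results['f1'].append(f1_stacking)
        results['fpr'].append(fpr_stacking)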

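# Collect the results in a DataFrame
results_df = pd.DataFrame(results)

# Write the DataFrame to an Excel file (the output path is left blank in the original post)
results_df.to_excel()

3. The results are as follows. The column names and the three-learner combinations match the output of the single-layer script in section 1: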

| Classifiers | Accuracy | AUC | F1 Score | FPR |
| --- | --- | --- | --- | --- |
| ('nb', 'sgd', 'rf') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('nb', 'sgd', 'dt') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('nb', 'sgd', 'ada') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('nb', 'sgd', 'gbdt') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('nb', 'sgd', 'xgb') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('nb', 'rf', 'dt') | 0.991138711 | 0.991302724 | 0.990570941 | 0.011286089 |
| ('nb', 'rf', 'ada') | 0.990859615 | 0.990969244 | 0.990265289 | 0.010761155 |
| ('nb', 'rf', 'gbdt') | 0.991138711 | 0.991293848 | 0.99056954 | 0.011154856 |
| ('nb', 'rf', 'xgb') | 0.991208485 | 0.991368341 | 0.990644491 | 0.011154856 |
| ('nb', 'dt', 'ada') | 0.989813006 | 0.989905102 | 0.989148209 | 0.011548556 |
| ('nb', 'dt', 'gbdt') | 0.990859615 | 0.990969244 | 0.990265289 | 0.010761155 |
| ('nb', 'dt', 'xgb') | 0.991557354 | 0.991660918 | 0.991007061 | 0.009973753 |
| ('nb', 'ada', 'gbdt') | 0.985556796 | 0.985627301 | 0.984613097 | 0.015485564 |
| ('nb', 'ada', 'xgb') | 0.990510745 | 0.99072105 | 0.989910979 | 0.012598425 |
| ('nb', 'gbdt', 'xgb') | 0.990580519 | 0.990795543 | 0.989985906 | 0.012598425 |
| ('sgd', 'rf', 'dt') | 0.531677365 | 0.5 | 0 | 0 |
| ('sgd', 'rf', 'ada') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('sgd', 'rf', 'gbdt') | 0.531677365 | 0.5 | 0 | 0 |
| ('sgd', 'rf', 'xgb') | 0.531677365 | 0.5 | 0 | 0 |
| ('sgd', 'dt', 'ada') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('sgd', 'dt', 'gbdt') | 0.531677365 | 0.5 | 0 | 0 |
| ('sgd', 'dt', 'xgb') | 0.531677365 | 0.5 | 0 | 0 |
| ('sgd', 'ada', 'gbdt') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('sgd', 'ada', 'xgb') | 0.897990511 | 0.899683178 | 0.894805008 | 0.127034121 |
| ('sgd', 'gbdt', 'xgb') | 0.531677365 | 0.5 | 0 | 0 |
| ('rf', 'dt', 'ada') | 0.991138711 | 0.991302724 | 0.990570941 | 0.011286089 |
| ('rf', 'dt', 'gbdt') | 0.990510745 | 0.99064116 | 0.989897489 | 0.011417323 |
| ('rf', 'dt', 'xgb') | 0.991348032 | 0.991481821 | 0.990788887 | 0.010629921 |
| ('rf', 'ada', 'gbdt') | 0.991138711 | 0.991276094 | 0.990566738 | 0.010892388 |
| ('rf', 'ada', 'xgb') | 0.991138711 | 0.991240588 | 0.99056113 | 0.010367454 |
| ('rf', 'gbdt', 'xgb') | 0.991208485 | 0.991368341 | 0.990644491 | 0.011154856 |
| ('dt', 'ada', 'gbdt') | 0.990231649 | 0.990378693 | 0.989603446 | 0.011942257 |
| ('dt', 'ada', 'xgb') | 0.990510745 | 0.99069442 | 0.989906487 | 0.012204724 |
| ('dt', 'gbdt', 'xgb') | 0.990789841 | 0.990939134 | 0.990197535 | 0.011417323 |
| ('ada', 'gbdt', 'xgb') | 0.990650293 | 0.99086116 | 0.990059347 | 0.012467192 |
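When reading the AUC and FPR columns, it may help to recall that the scripts pass hard class labels from `predict` to `roc_auc_score`. For binary labels this makes the AUC equal to the balanced accuracy, and a model that predicts the negative class for every sample yields AUC 0.5, F1 0, and FPR 0, which is consistent with the degenerate rows in the table. The toy labels and scores below are made up purely for illustration.

```python
import numpy as np
from sklearn.metrics import (
    balanced_accuracy_score,
    confusion_matrix,
    f1_score,
    roc_auc_score,
)

# Made-up ground truth and scores (not taken from the experiment).
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 1])
y_score = np.array([0.1, 0.4, 0.35, 0.8, 0.45, 0.7, 0.9, 0.6])
y_label = (y_score >= 0.5).astype(int)  # hard labels at a 0.5 threshold

# AUC from hard labels collapses to (TPR + TNR) / 2, i.e. balanced accuracy.
auc_from_labels = roc_auc_score(y_true, y_label)
bal_acc = balanced_accuracy_score(y_true, y_label)

# AUC from continuous scores uses the full ranking of the samples.
auc_from_scores = roc_auc_score(y_true, y_score)

# A classifier that always predicts the negative class.
all_negative = np.zeros_like(y_true)
auc_degenerate = roc_auc_score(y_true, all_negative)
f1_degenerate = f1_score(y_true, all_negative, zero_division=0)
tn, fp, fn, tp = confusion_matrix(y_true, all_negative).ravel()
fpr_degenerate = fp / (fp + tn)

print(auc_from_labels, bal_acc, auc_from_scores)
print(auc_degenerate, f1_degenerate, fpr_degenerate)
```

If a ranking-based AUC is wanted, the scores from `predict_proba(X_test)[:, 1]` of the fitted stacking model can be passed instead of the predicted labels; the values would then differ from those in the table.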