文章目录

- 6、Ingress ★

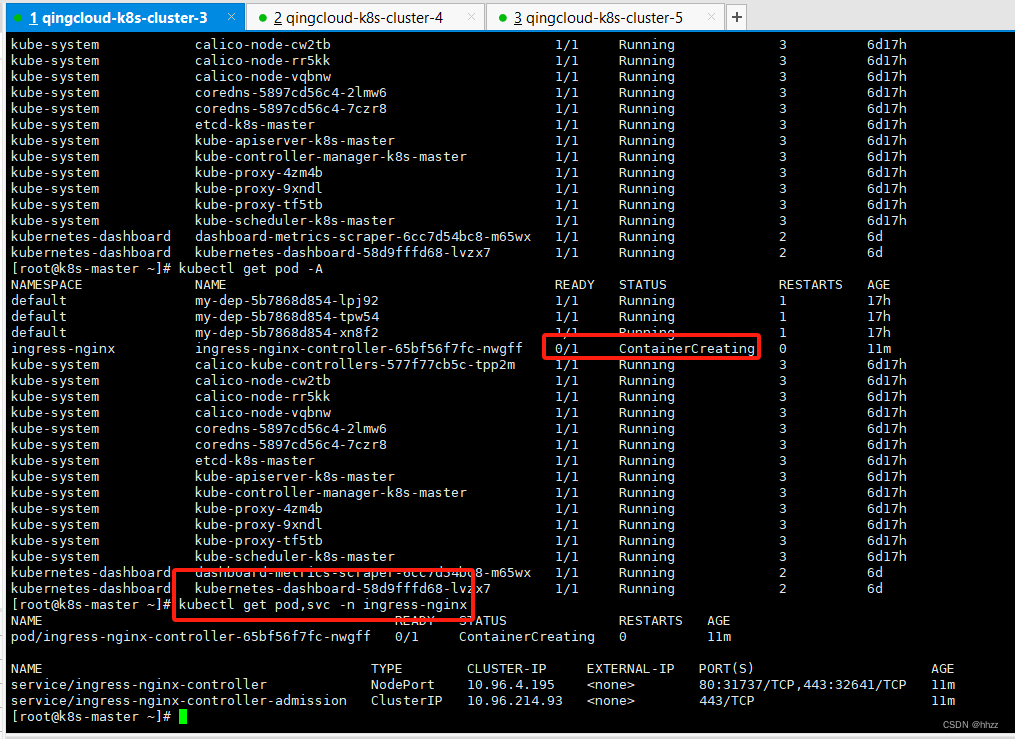

- 6.1 安装 Ingress

- 6.2 访问

- 6.3 安装不成功的bug解决

- 6.4 测试使用

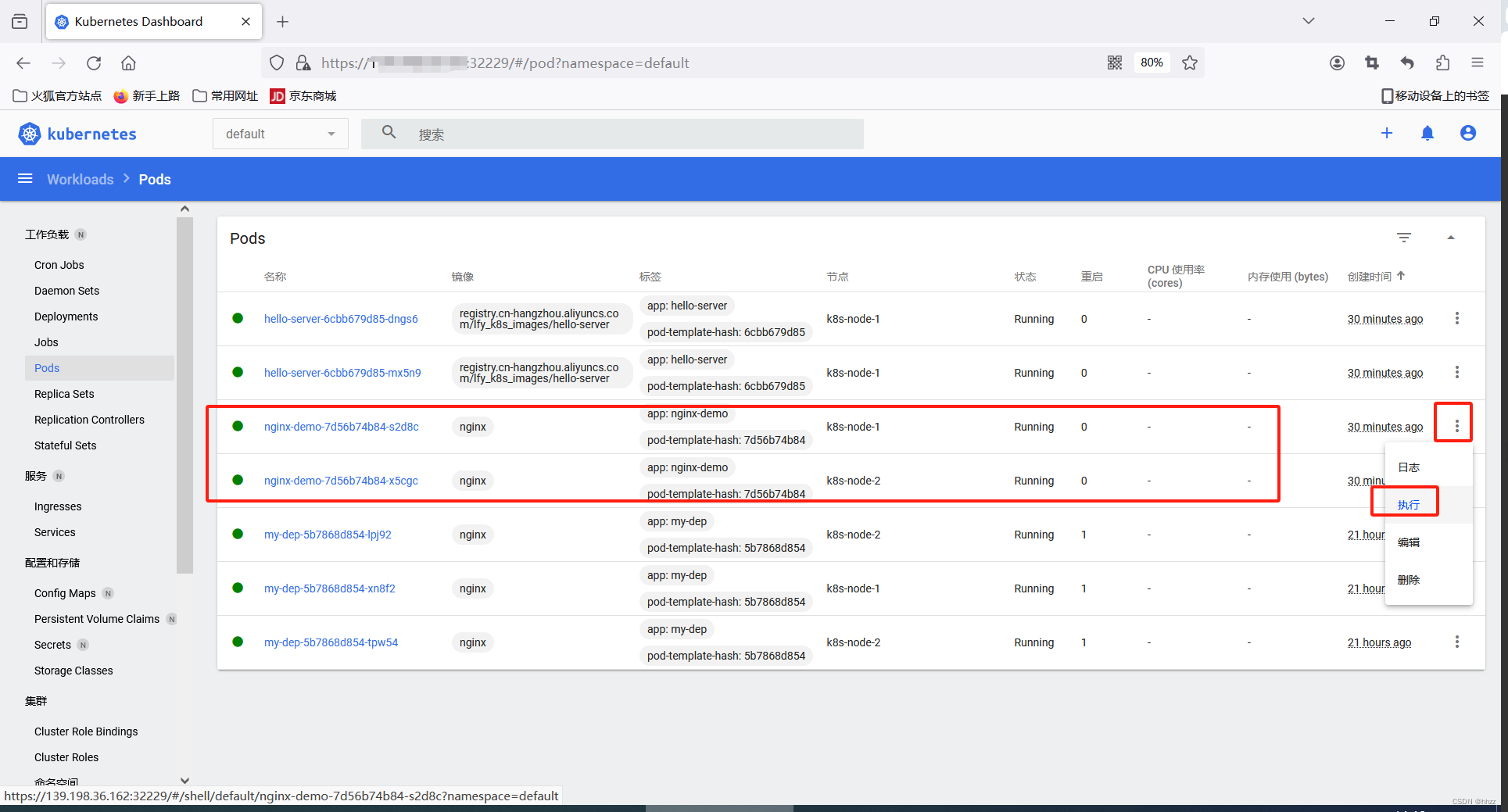

- 6.4.1 搭建测试环境

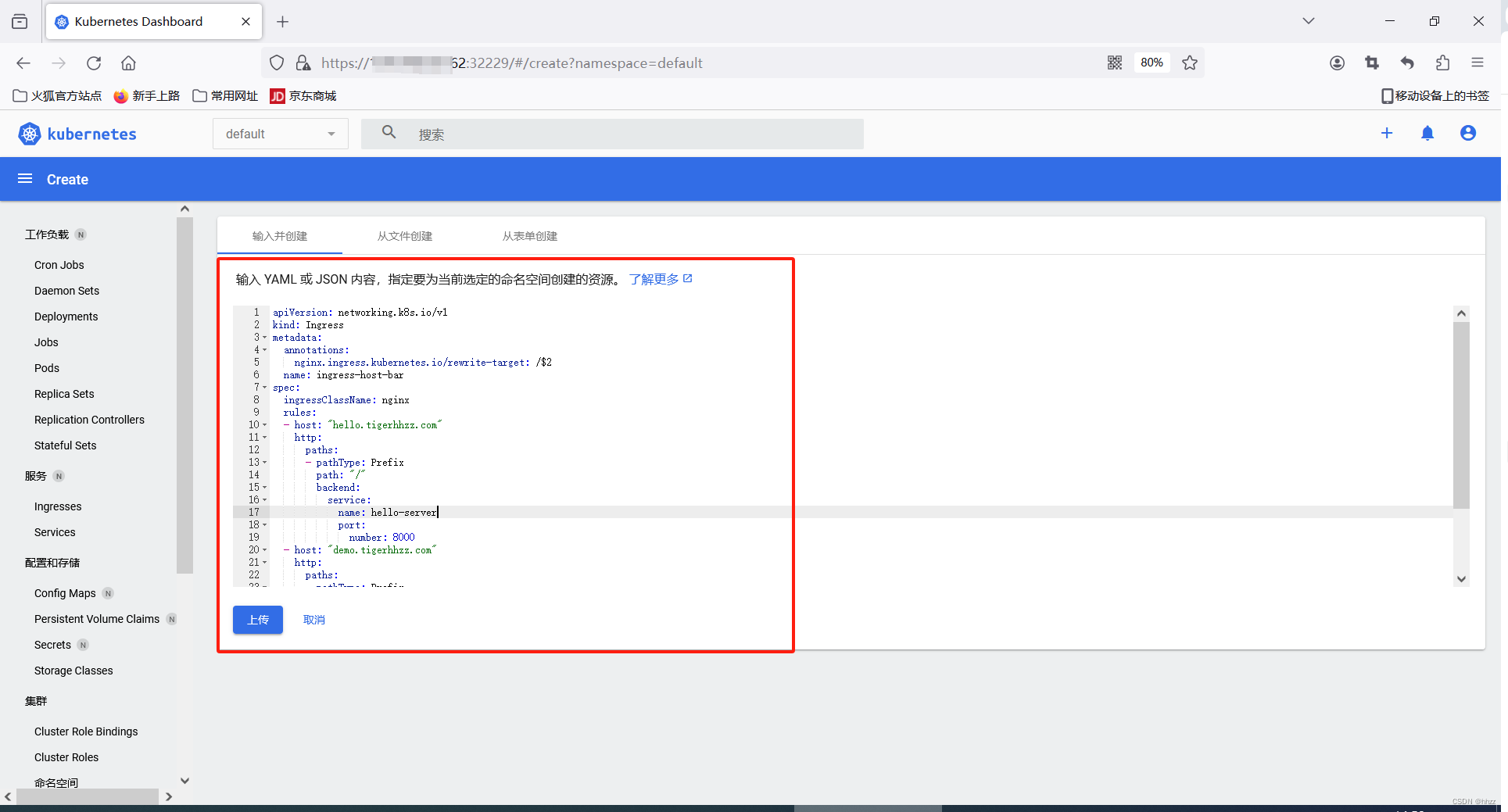

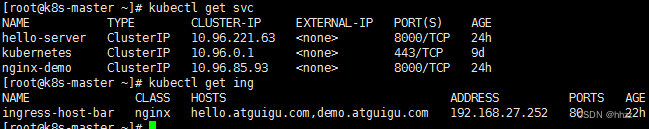

- 6.4.2 配置 Ingress的规则

- 6.4.3 测试I

- 6.4.4 测试II

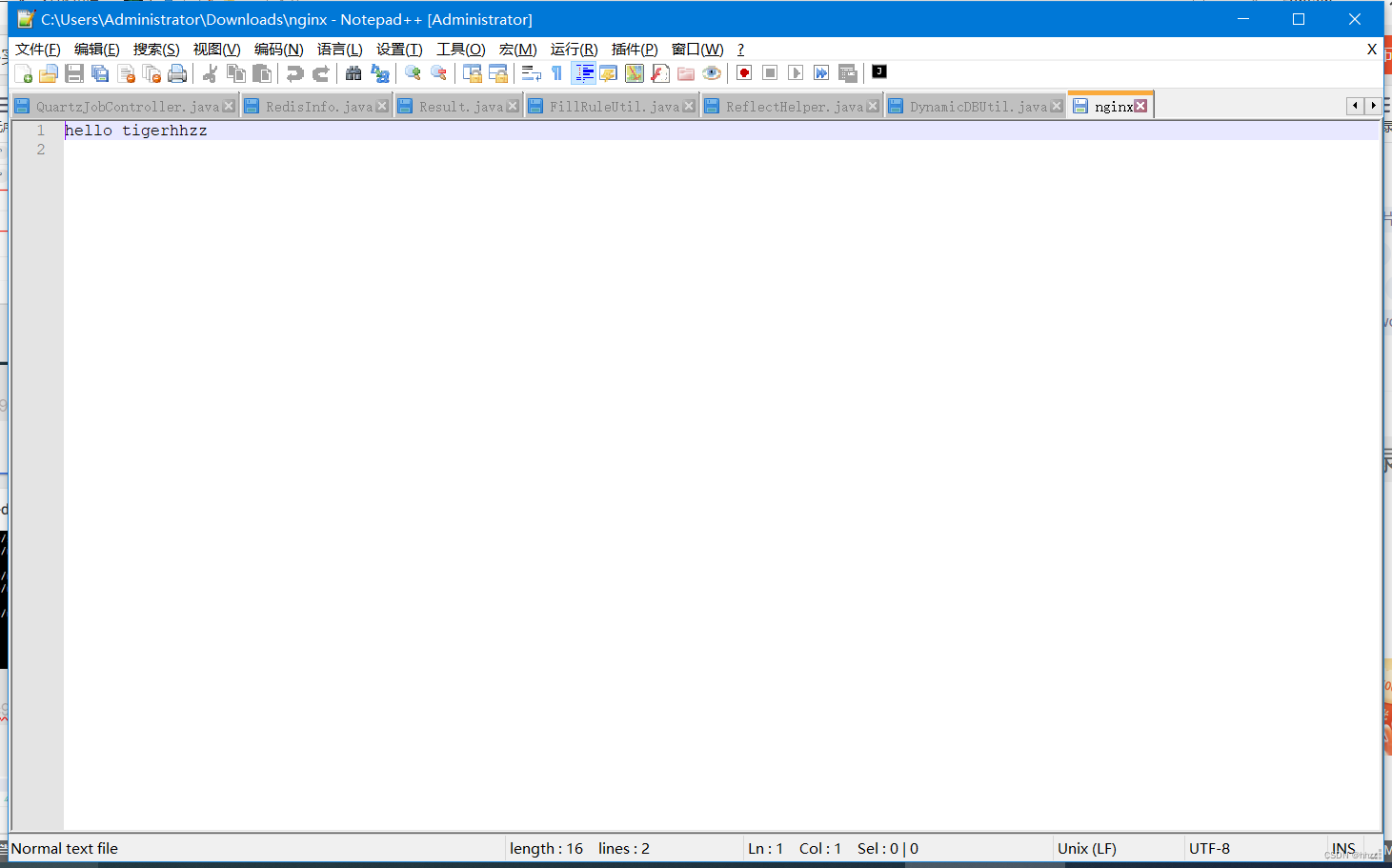

- 6.4.5 路径重写

- 6.4.6 限流

- 7. Kubernetes 存储抽象

- 7.1 NFS 搭建

- 7.2 原生方式 数据挂载

- 7.3 PV 和 PVC ★

- 7.3.1 创建 PV 池

- 7.3.2 创建、绑定 PCV

- 7.3.3 创建 Pod 绑定 PVC

- 7.4 ConfigMap ★

- 7.4.1 redis示例

- 7.5 Secret

- 7.5.1 拉取失败

- 7.5.2 创建 Secret

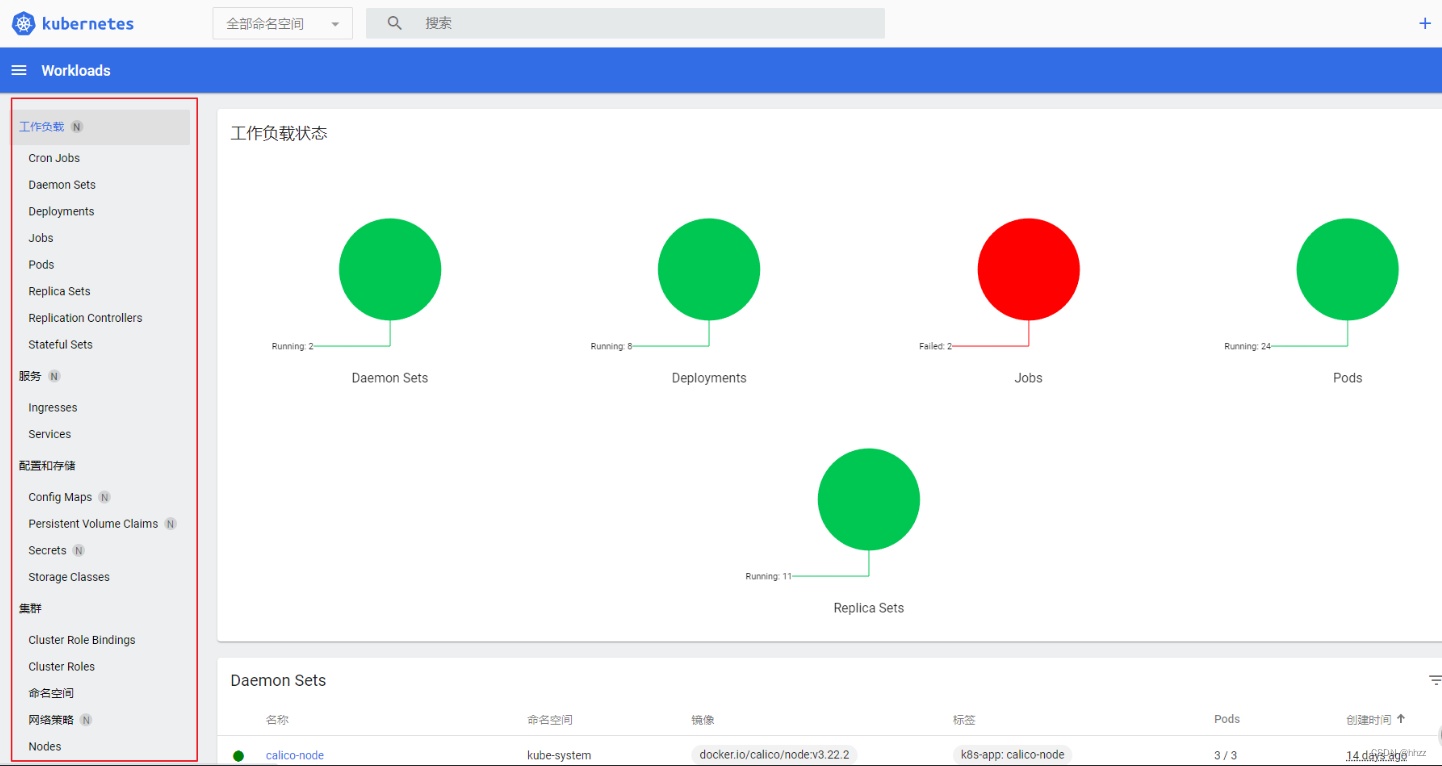

6. Ingress ★

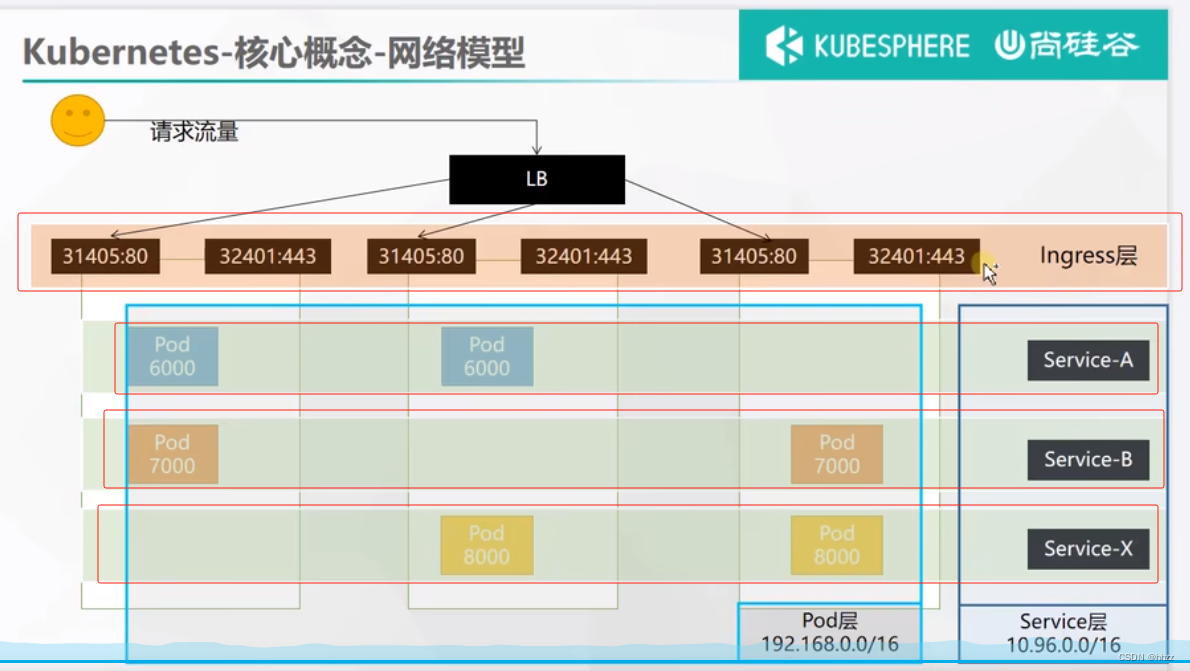

Ingress: the unified gateway entry point for Services; under the hood it is nginx.

Official site: https://kubernetes.github.io/ingress-nginx/ (everything below is based on it)

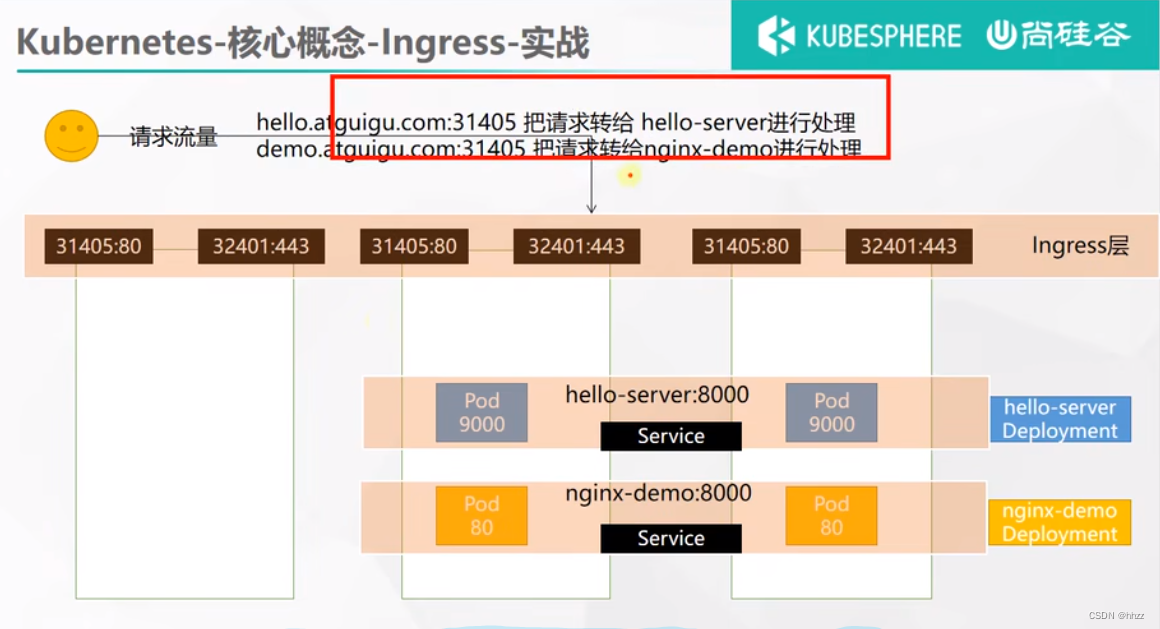

Every request goes through the Ingress first, and the Ingress routes it onward, much like the gateway in a microservice architecture.

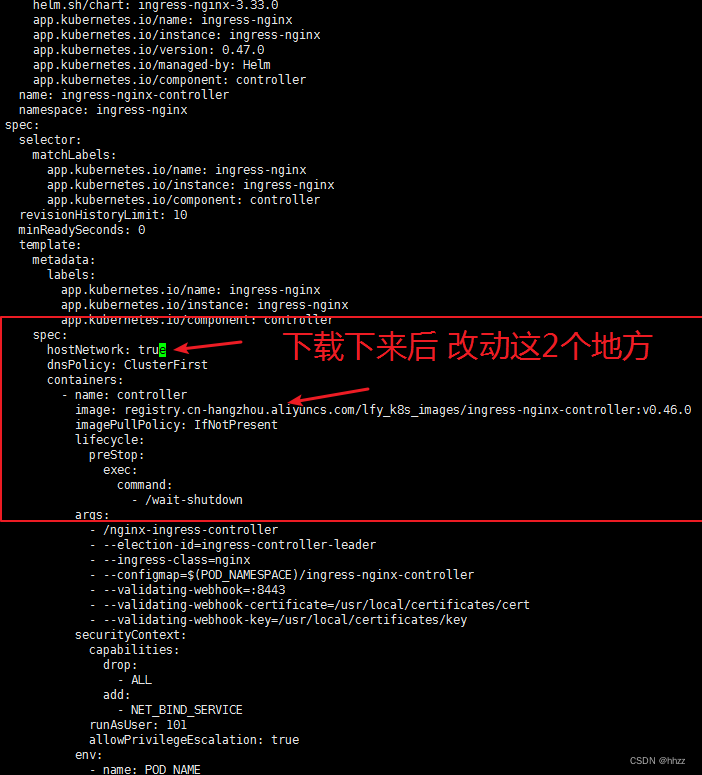

6.1 Installing Ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

## 这里我喜欢把depoly.yaml名字修改为ingress.yaml

# 修改镜像

vi ingress.yaml

# 将 image 的值改为如下值

registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

# 安装资源

kubectl apply -f ingress.yaml

# 检查安装的结果

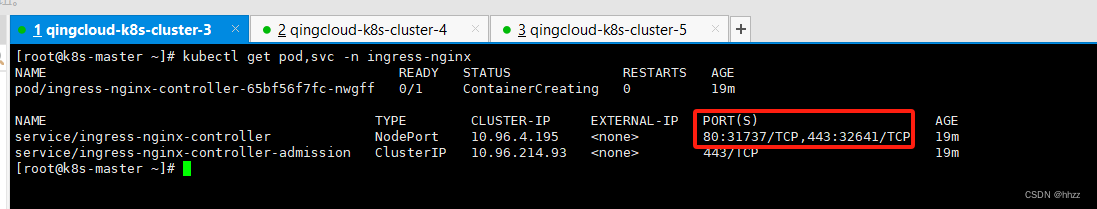

kubectl get pod,svc -n ingress-nginx

# 最后别忘记把 svc 暴露的端口 在安全组放行

Full contents of ingress.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx

---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
```

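Wait patiently until all Pods have been created: the controller Pod should reach Running (the two admission Jobs end up Completed).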

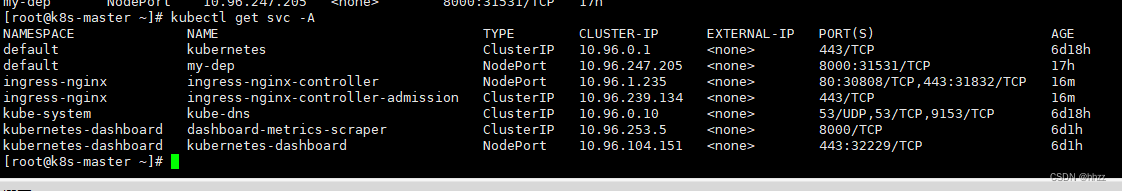

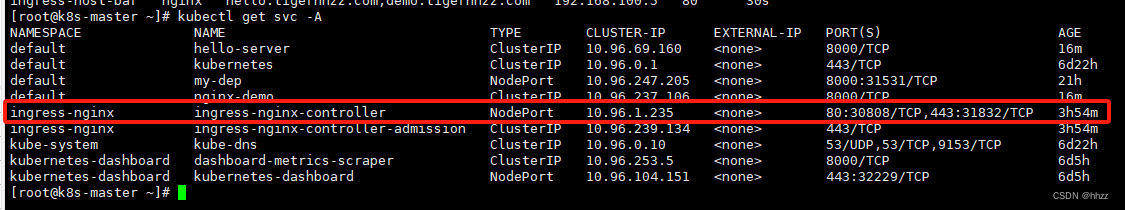

Open ports 30000-32767 (the default NodePort range) on the QingCloud servers.

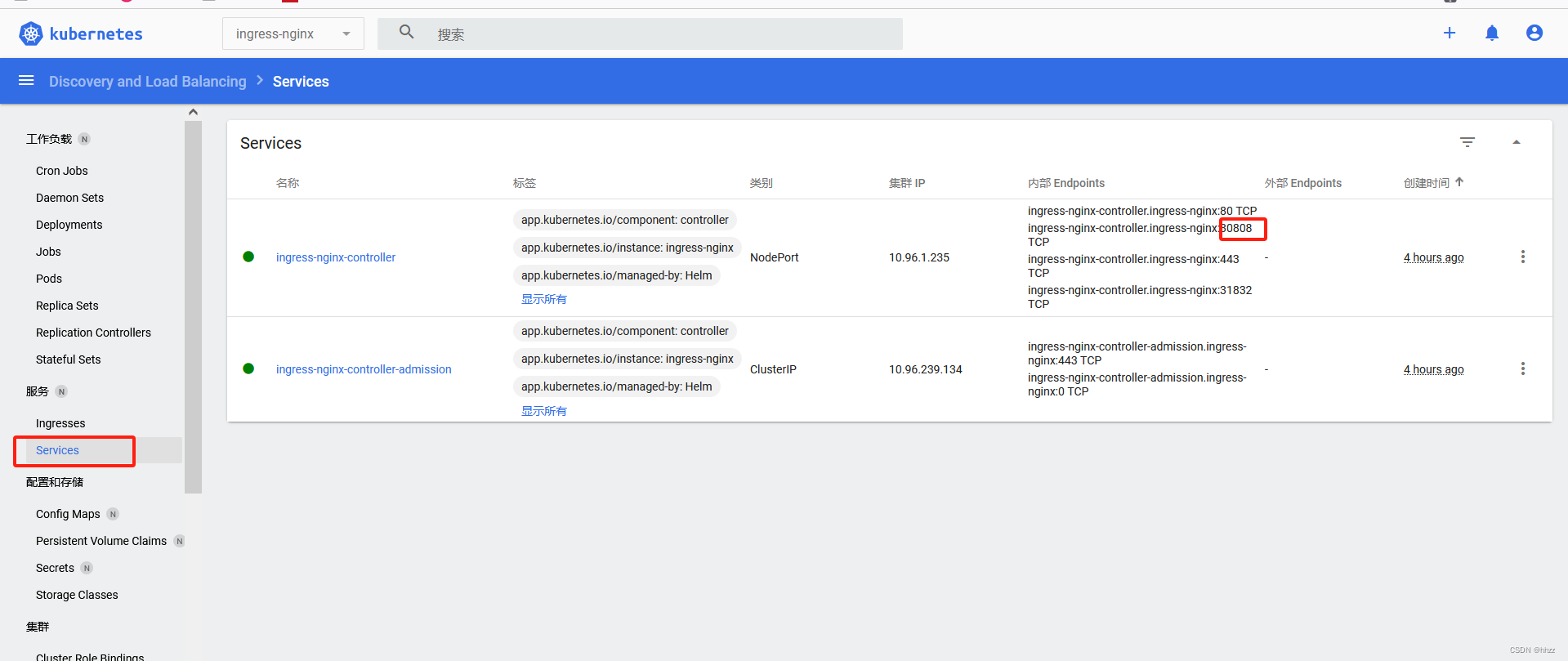

Check the ports the Service has been mapped to:

```bash
kubectl get pod,svc -n ingress-nginx
```

6.2 Access

Open the mapped ports on every server:

31737, 32641 (the NodePorts in this cluster; yours will differ)

https://xxxxxxxx:32641

http://xxxxxxxxx:31737

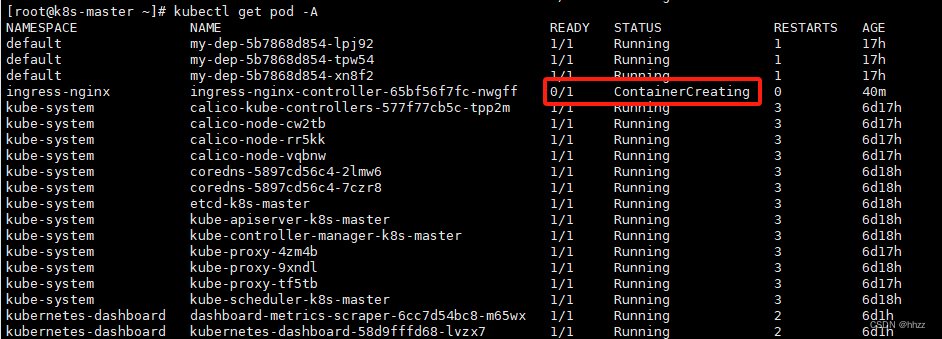

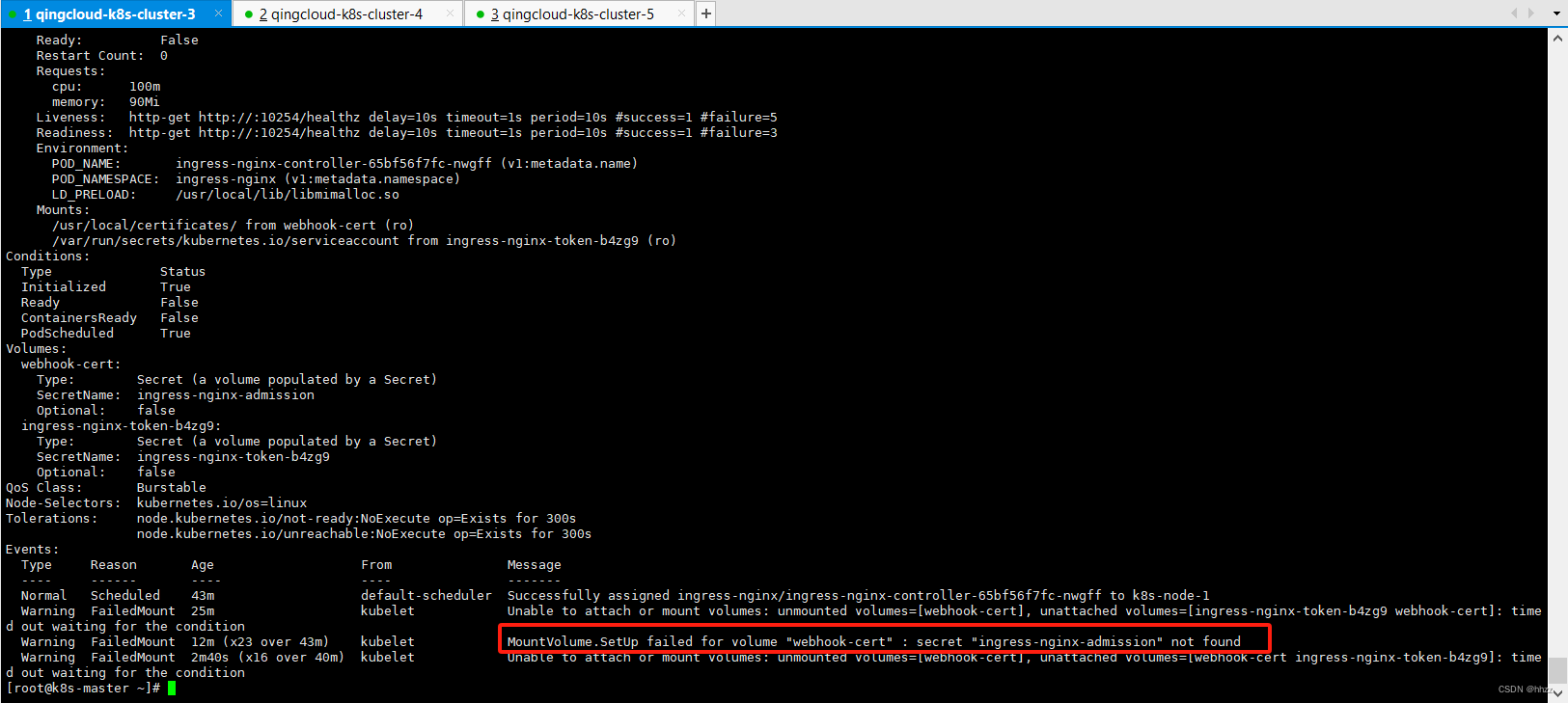

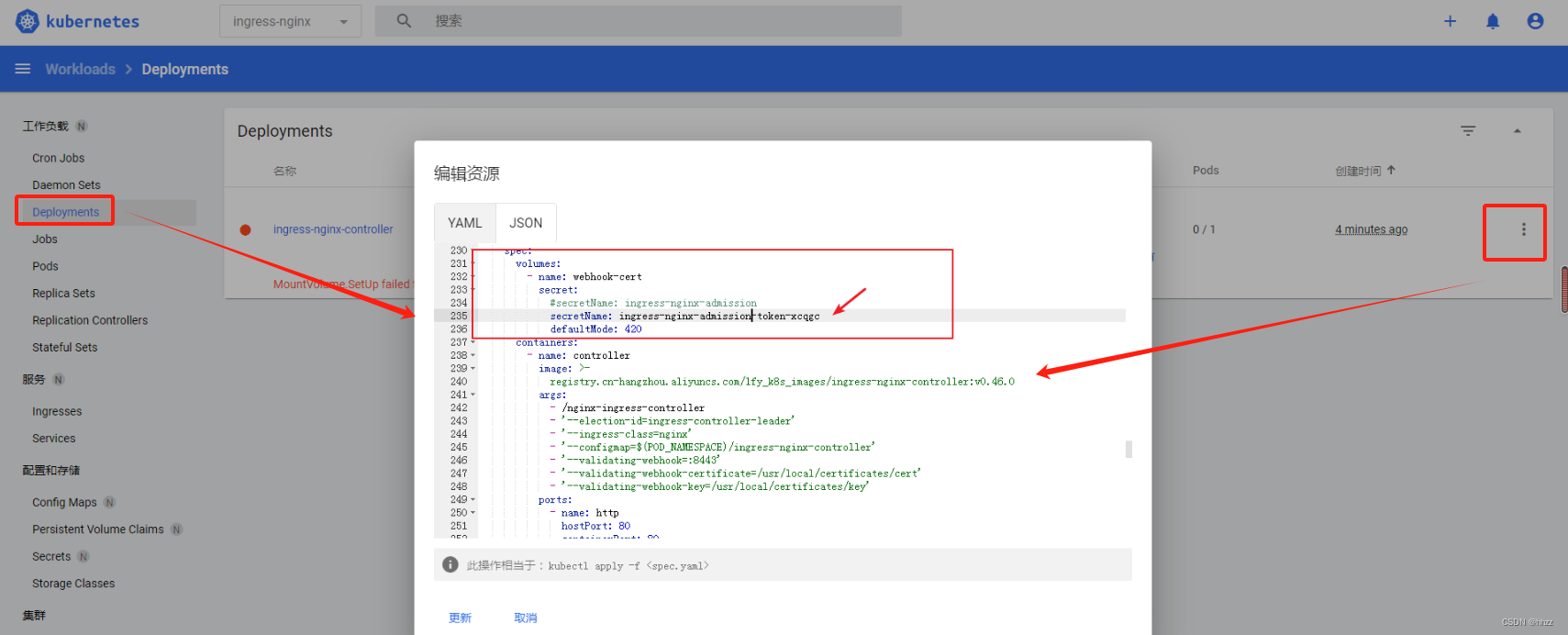

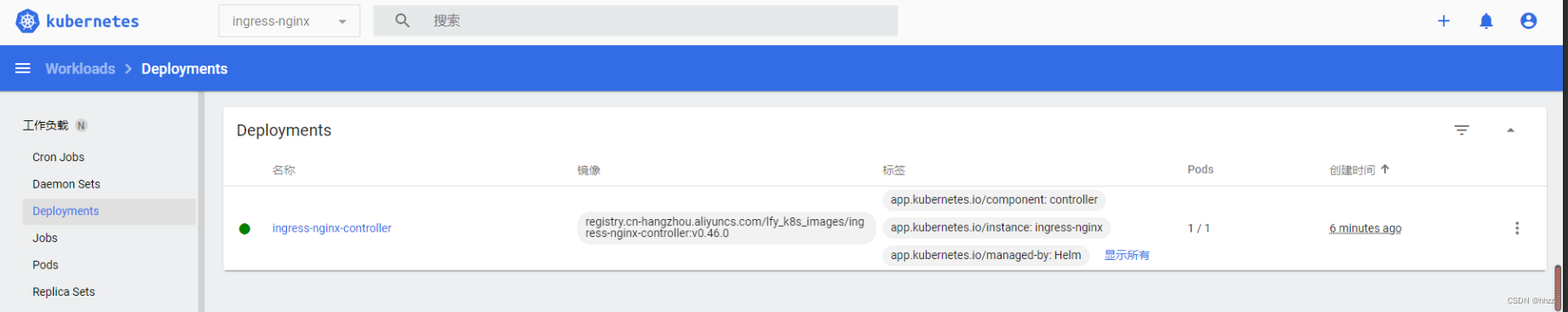

6.3 Troubleshooting a failed installation

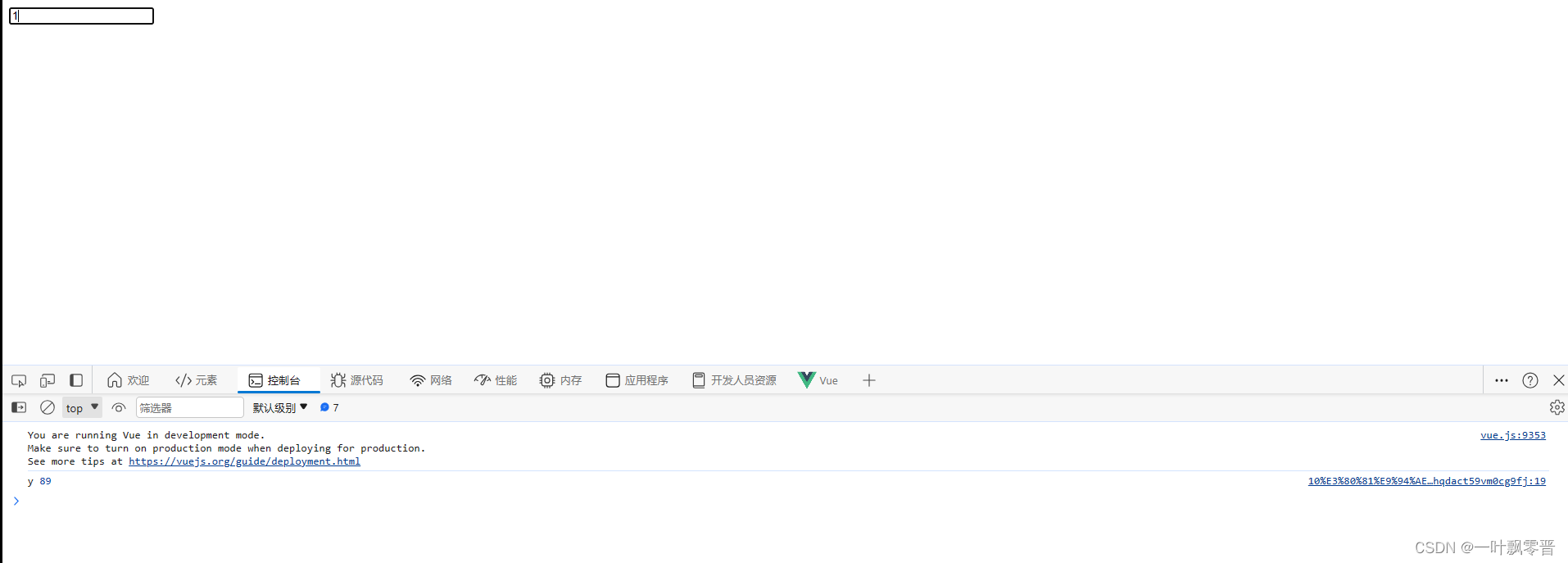

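Checking the Pods shows the controller Pod stuck in the creating state.

Use `describe` to view the detailed events:

```bash
kubectl describe pod ingress-nginx-controller-65bf56f7fc-nwgff -n ingress-nginx
```

Fix:

Started successfully:

Check the ports again and access the service.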

6.4 Testing

Official site: https://kubernetes.github.io/ingress-nginx/

Under the hood, Ingress is simply nginx.

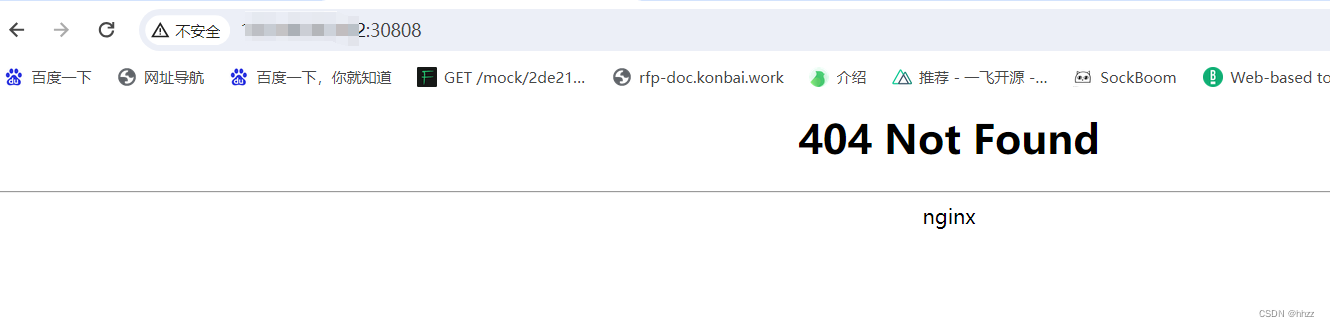

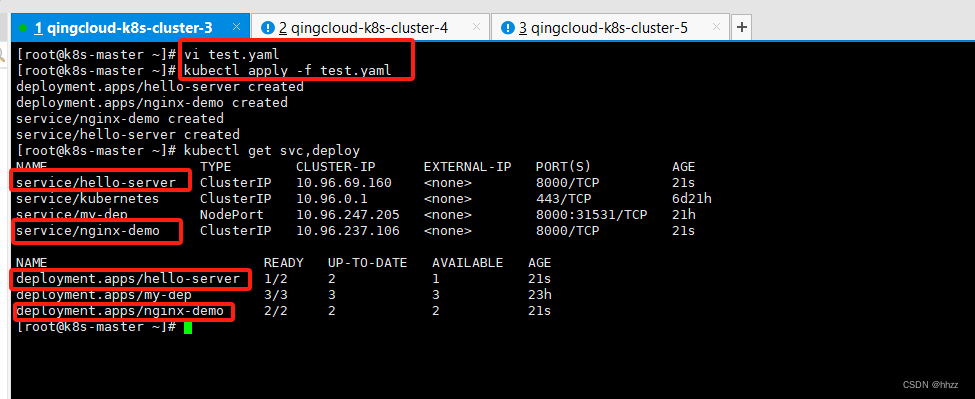

6.4.1 Setting up the test environment

Create two Deployments and their Services:

```bash
vi test.yaml
# paste the content below
kubectl apply -f test.yaml
```

Full contents of test.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
      - name: hello-server
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
        ports:
        - containerPort: 9000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
      - image: nginx
        name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 9000
```

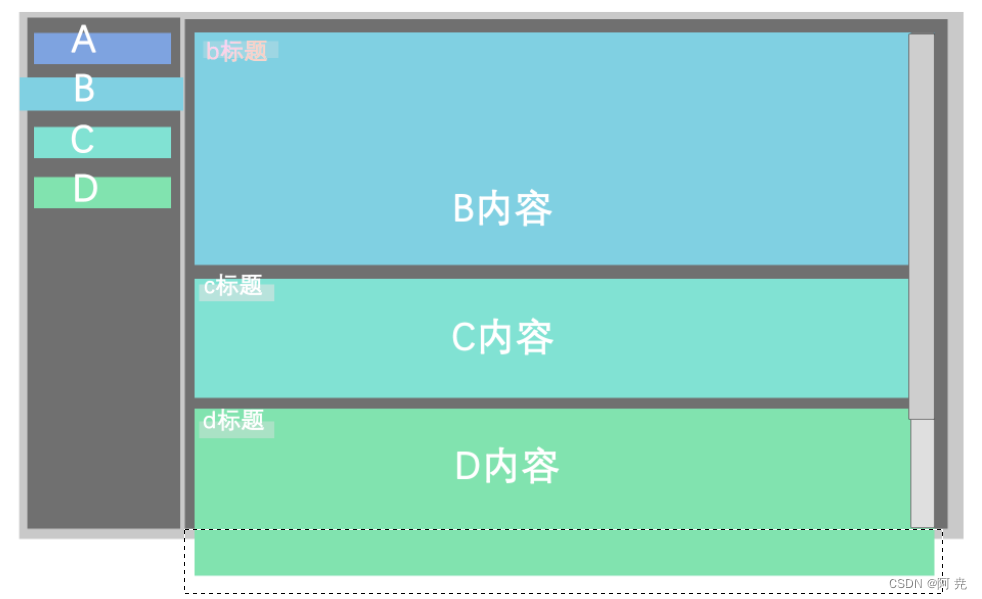
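How a request to a Service port reaches a container can be sketched in a few lines of Python. This is a simplified model, not kube-proxy's code: the function name `resolve_target_port` and the dict layout are hypothetical, mirroring the `ports` fields of test.yaml and of the controller Service in ingress.yaml. It illustrates two rules of the Service API: `targetPort` defaults to `port`, and a string `targetPort` (such as `http` in ingress.yaml) is looked up by name among the container's ports.

```python
from typing import Dict, List, Optional, Union


def resolve_target_port(
    service_ports: List[Dict[str, Union[int, str]]],
    container_ports: List[Dict[str, Union[int, str]]],
    port: int,
) -> Optional[int]:
    """Return the container port that traffic sent to Service `port` ends up on."""
    for sp in service_ports:
        if sp["port"] != port:
            continue
        target = sp.get("targetPort", sp["port"])  # targetPort defaults to port
        if isinstance(target, int):
            return target
        # A string targetPort names a containerPort (e.g. "http" in ingress.yaml).
        for cp in container_ports:
            if cp.get("name") == target:
                return int(cp["containerPort"])
        return None  # the named port does not exist on the container
    return None  # the Service does not expose this port


if __name__ == "__main__":
    hello_svc = [{"port": 8000, "protocol": "TCP", "targetPort": 9000}]
    print(resolve_target_port(hello_svc, [{"containerPort": 9000}], 8000))  # 9000
```

So a client calls hello-server on 8000 and the Pod answers on 9000, while the Ingress controller Service forwards 80/443 to whichever container ports are named `http`/`https`.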

6.4.2 Configuring Ingress rules

```bash
vi ingress-rule.yaml
# paste the configuration below
kubectl apply -f ingress-rule.yaml
# list the Ingresses in the cluster
kubectl get ingress
```

Full contents of ingress-rule.yaml:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.tigerhhzz.com"
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000  # the hello-server Service listens on port 8000
  - host: "demo.tigerhhzz.com"
    http:
      paths:
      - pathType: Prefix
        path: "/"  # requests are forwarded to the Service below, which must be able to handle this path; otherwise it returns 404
        backend:
          service:
            name: nginx-demo  # for a Java service, for example, use path rewriting to strip the /nginx prefix
            port:
              number: 8000
```


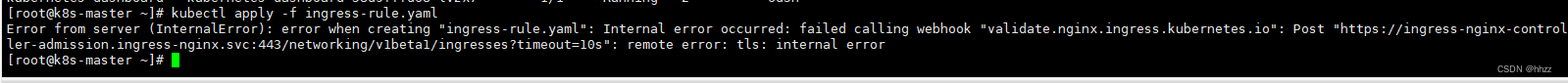

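How to fix the error above:

```bash
kubectl get ValidatingWebhookConfiguration
# Delete the pesky admission webhook (this turns off ingress-nginx's validation of Ingress objects).
# ValidatingWebhookConfiguration is cluster-scoped, so no namespace flag is needed.
kubectl delete ValidatingWebhookConfiguration ingress-nginx-admission
```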

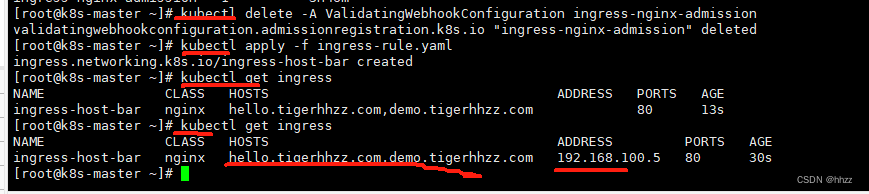

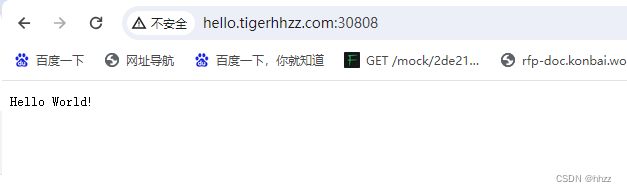

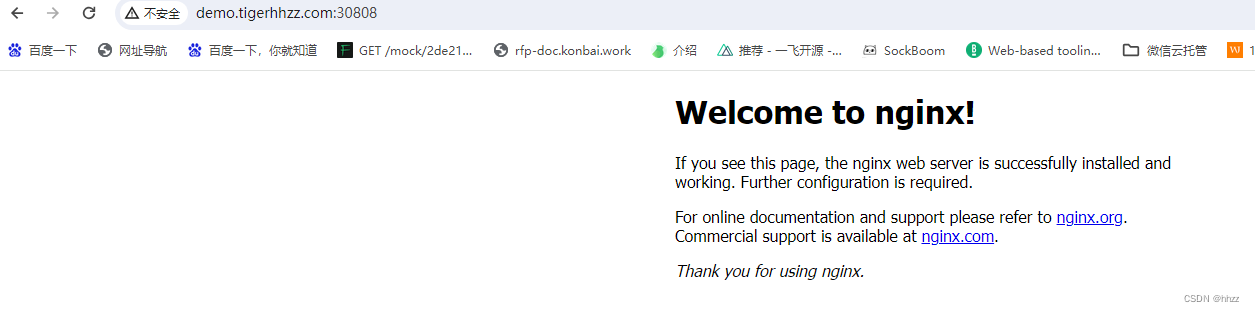

6.4.3 Test I

On your own computer (not the VM), add these mappings to the hosts file:

```
<master public IP> hello.tigerhhzz.com
<master public IP> demo.tigerhhzz.com
```

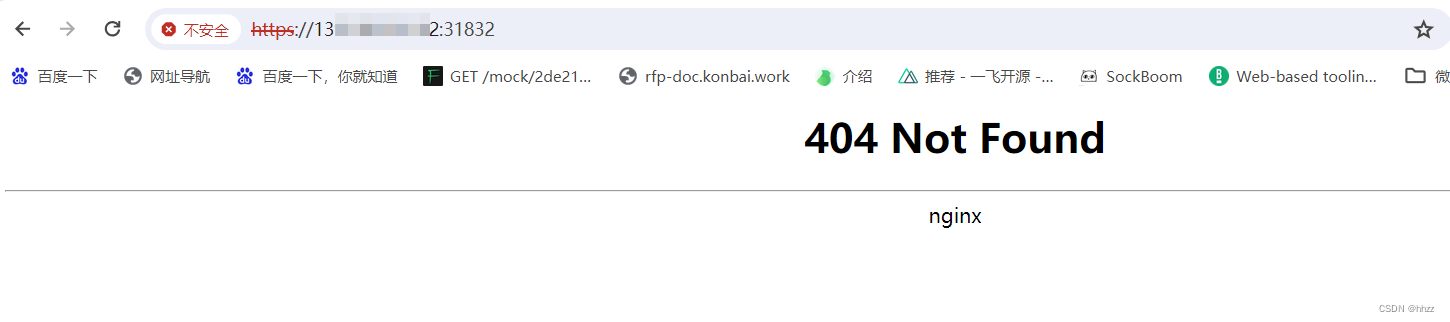

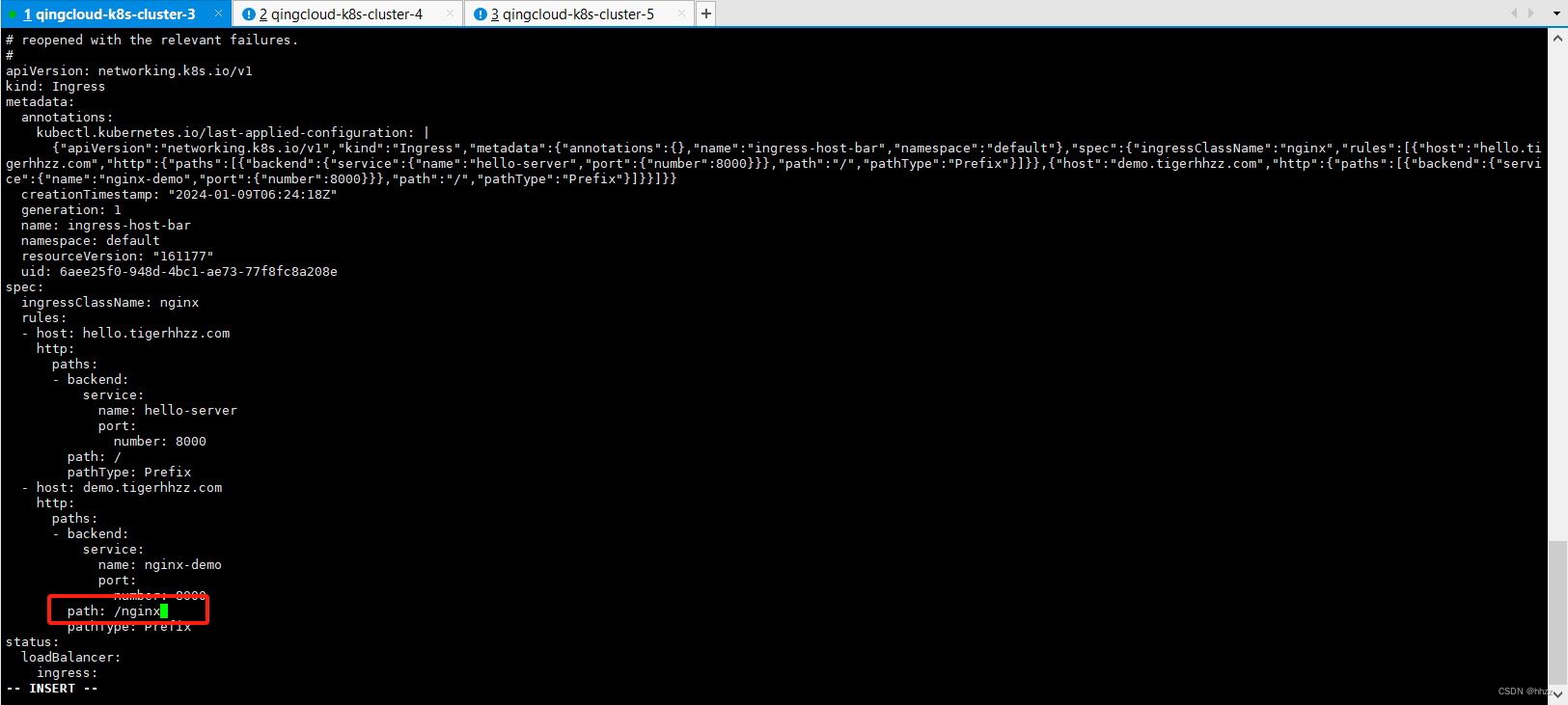

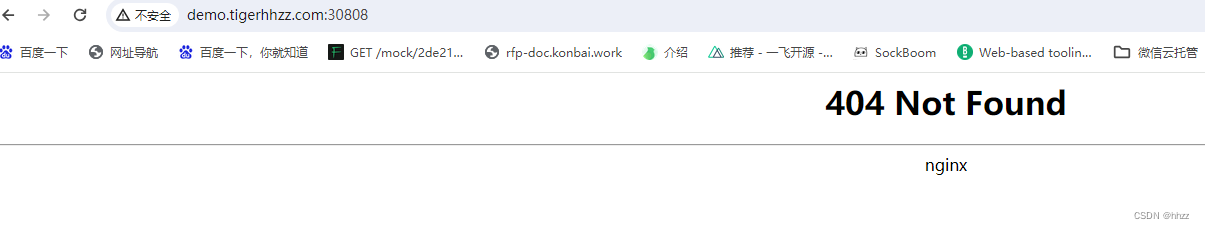

6.4.4 测试II

# kubectl get ingress

kubectl get ing

kubectl edit ing ingress的NAME -n

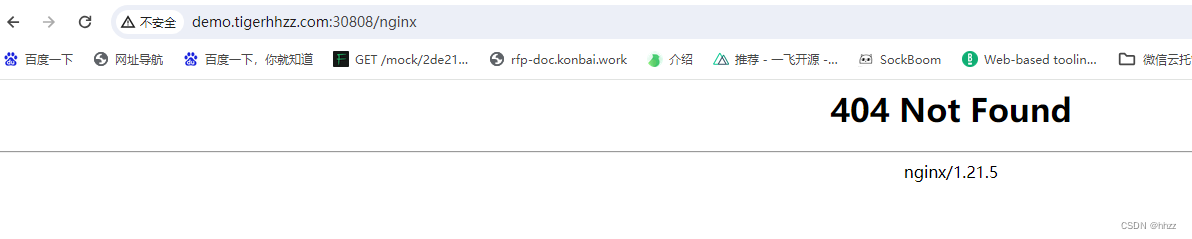

# 改变匹配的 path

- host: "demo.tigerhhzz.com"

http:

paths:

- pathType: Prefix

path: "/nginx" # 匹配请求 /nginx 的,并且查找 nginx 文件.

backend:

service:

name: nginx-demo

port:

number: 8000

随便写 /xxx 不匹配 nginx的,都返回 Ingress的 404的nginx

下面这个是 通过了 Ingress,Service 里的 Pod 没匹配到,才返回的 404(下面打印的 nginx 版本不一样的)

页面 进入 Pod 的那个nginx

cd /usr/share/nginx/html

ls

echo "hello tigerhhzz" > nginx
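The host and path matching that decides between the Ingress's own 404 and a backend can be approximated as below. This is a hypothetical sketch of the Ingress API's `Prefix` semantics (paths are compared element by element after splitting on `/`, and the longest matching rule wins), not ingress-nginx's implementation, which compiles the rules into nginx `location` blocks. The `RULES` table and the `route` function are illustrative names; the table mirrors ingress-rule.yaml after the edit above. Because the matched path is forwarded unchanged, nginx-demo receives `/nginx` and serves the file named `nginx`.

```python
from typing import List, Optional, Tuple

# (host, path, service, port): mirrors ingress-rule.yaml after the Test II edit.
RULES: List[Tuple[str, str, str, int]] = [
    ("hello.tigerhhzz.com", "/", "hello-server", 8000),
    ("demo.tigerhhzz.com", "/nginx", "nginx-demo", 8000),
]


def _elements(path: str) -> List[str]:
    """Split a path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def route(host: str, path: str) -> Optional[Tuple[str, int]]:
    """Return (service, port) for the longest matching Prefix rule, or None (Ingress 404)."""
    best: Optional[Tuple[int, str, int]] = None  # (matched element count, service, port)
    for rule_host, rule_path, service, port in RULES:
        if rule_host != host:
            continue
        rule_elems = _elements(rule_path)
        # Whole elements are compared: /nginx matches /nginx/a but not /nginxabc.
        if _elements(path)[: len(rule_elems)] == rule_elems:
            if best is None or len(rule_elems) > best[0]:
                best = (len(rule_elems), service, port)
    return None if best is None else (best[1], best[2])


if __name__ == "__main__":
    for host, path in [("demo.tigerhhzz.com", "/nginx"), ("demo.tigerhhzz.com", "/xxx")]:
        print(host, path, "->", route(host, path) or "404 from the Ingress")
```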

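6.4.5 Path rewriting

Modify the Ingress rules as below. This gives the same forwarding effect as a Spring Cloud Gateway route: a request for /nginx/xxx reaches nginx-demo as /xxx.

Full contents of ingress-rule.yaml: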

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.tigerhhzz.com"
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000
  - host: "demo.tigerhhzz.com"
    http:
      paths:
      - pathType: Prefix
        path: "/nginx(/|$)(.*)"
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
```

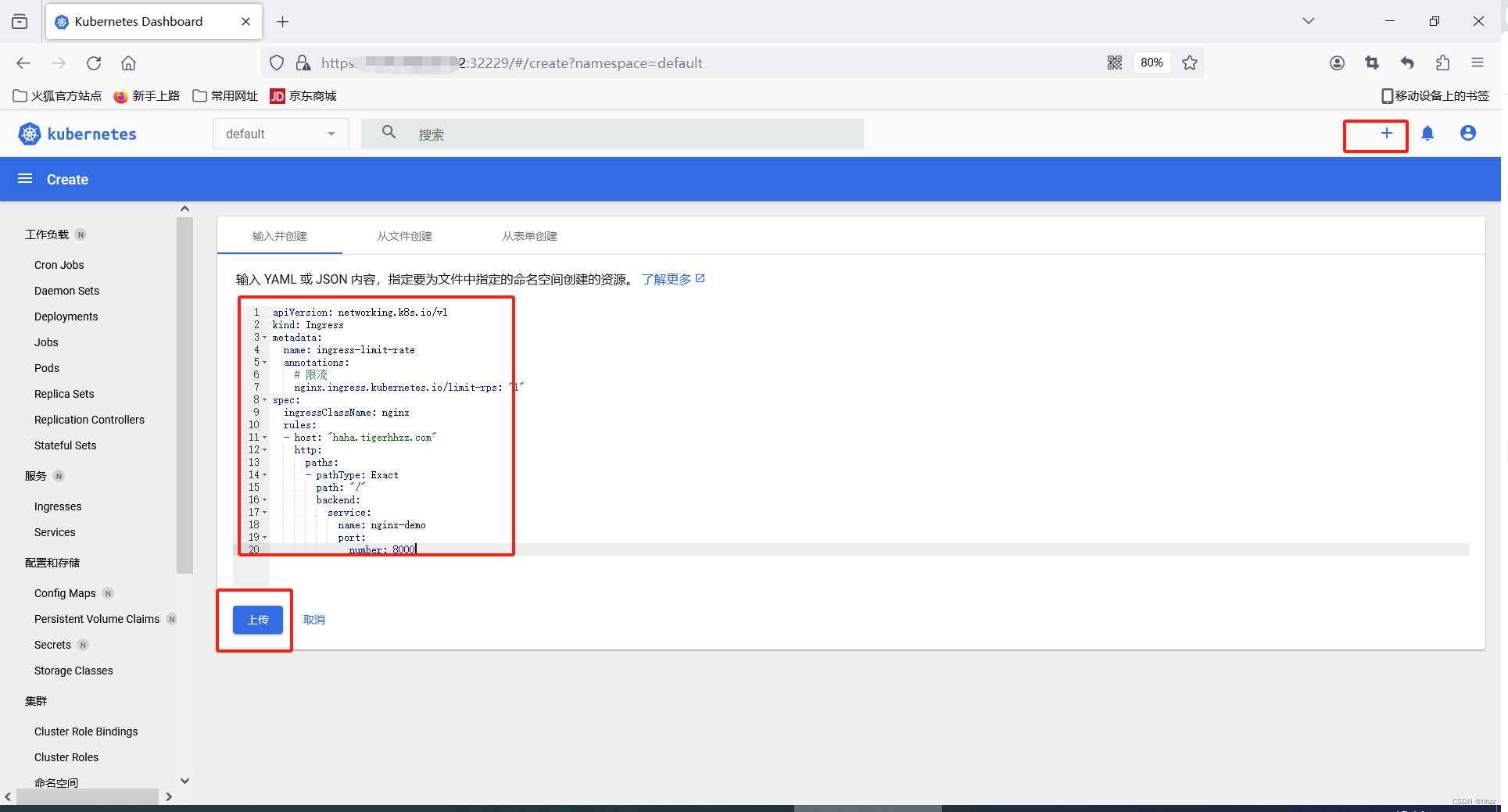
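To see what `rewrite-target: /$2` does with the path regex, the substitution can be reproduced with Python's `re` module. This is a sketch, not ingress-nginx code: it assumes the path regex is matched from the start of the request URI, and the function name `rewrite` is hypothetical. `$2` is the second capture group `(.*)`, i.e. everything after `/nginx/`; note that a bare `/nginx` is now rewritten to `/`, so nginx-demo serves its index page rather than the `nginx` file from Test II.

```python
import re
from typing import Optional

# Path regex and rewrite target copied from ingress-rule.yaml above.
PATH_REGEX = re.compile(r"/nginx(/|$)(.*)")
REWRITE_TARGET = "/$2"


def rewrite(request_path: str) -> Optional[str]:
    """Return the path nginx-demo receives, or None if the rule does not match."""
    m = PATH_REGEX.match(request_path)  # re.match anchors at the start of the path
    if m is None:
        return None
    # Replace each $N in the target with capture group N (empty if it did not participate).
    return re.sub(r"\$(\d)", lambda ref: m.group(int(ref.group(1))) or "", REWRITE_TARGET)


if __name__ == "__main__":
    for p in ["/nginx", "/nginx/", "/nginx/index.html", "/nginxabc"]:
        print(p, "->", rewrite(p))
```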

Or create it in the web UI:

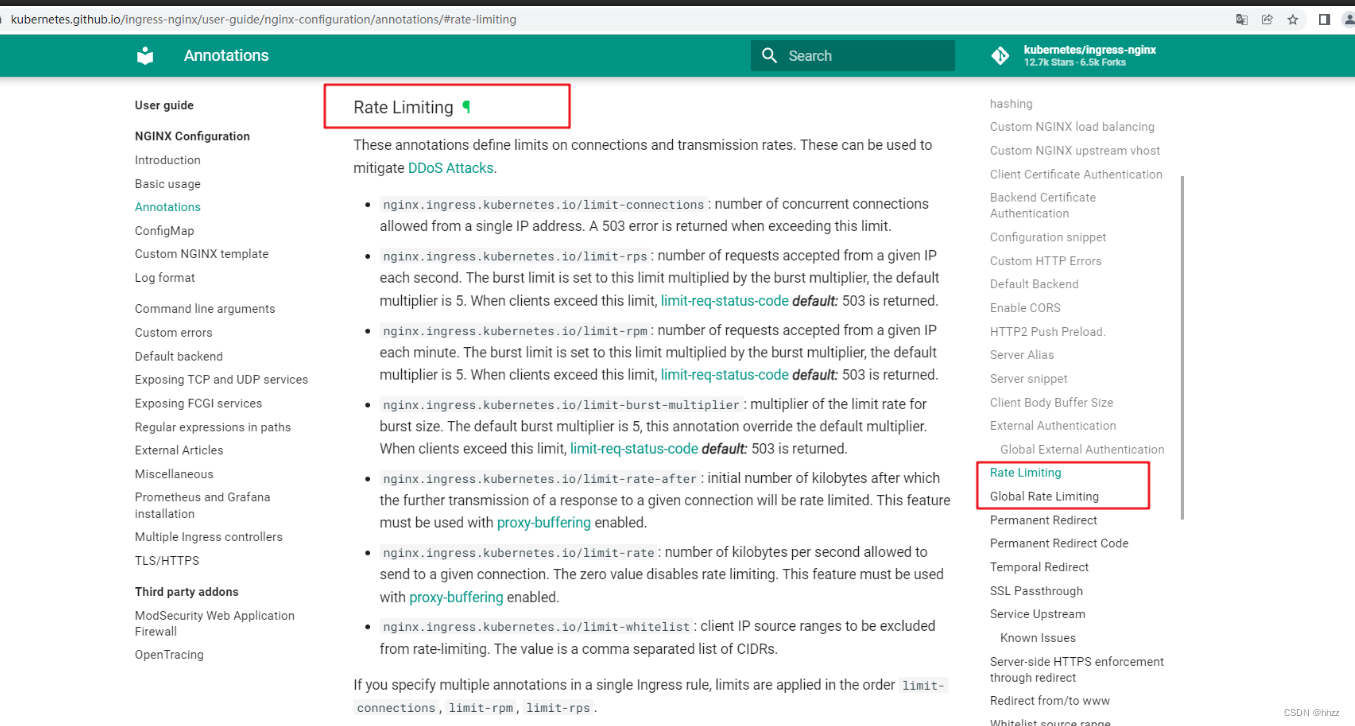

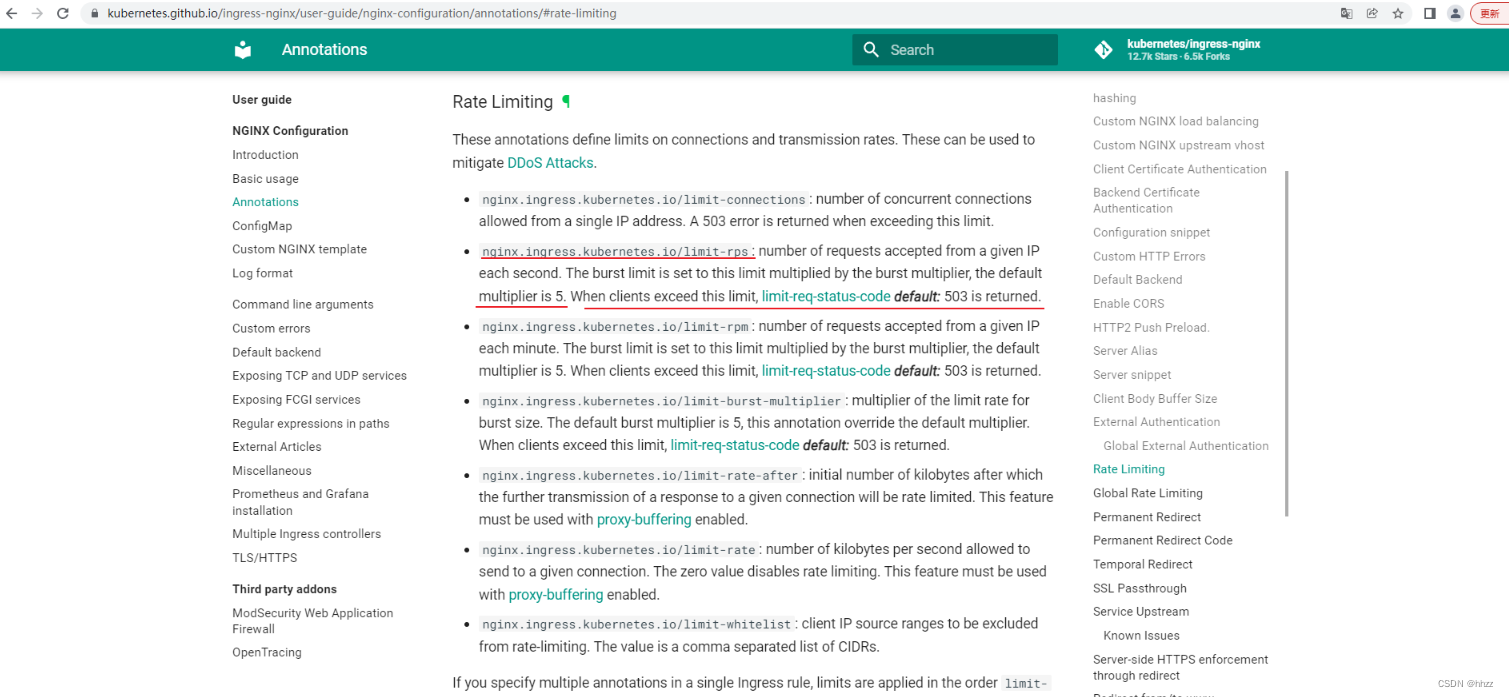

6.4.6 Rate limiting

Official docs: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-limit-rate
  annotations:
    # rate limiting
    nginx.ingress.kubernetes.io/limit-rps: "1"
spec:
  ingressClassName: nginx
  rules:
  - host: "haha.tigerhhzz.com"
    http:
      paths:
      - pathType: Exact
        path: "/"
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
```

```bash
vim ingress-rule-2.yaml
# paste the configuration above
kubectl apply -f ingress-rule-2.yaml
kubectl get ing
```

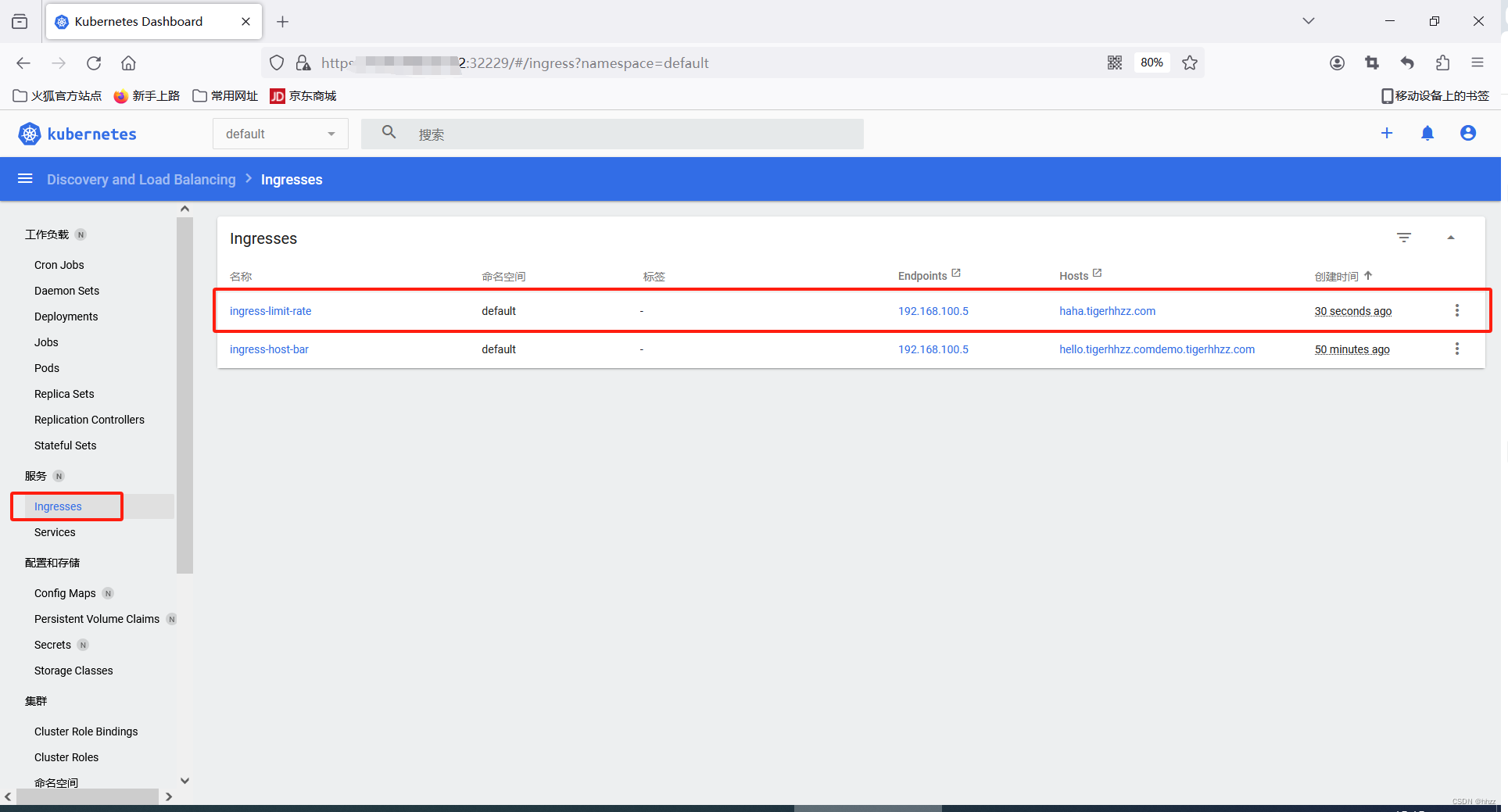

Create the Ingress in the web UI.

On your own computer (not the VM), add this mapping to the hosts file:

```
<public IP> haha.tigerhhzz.com
```

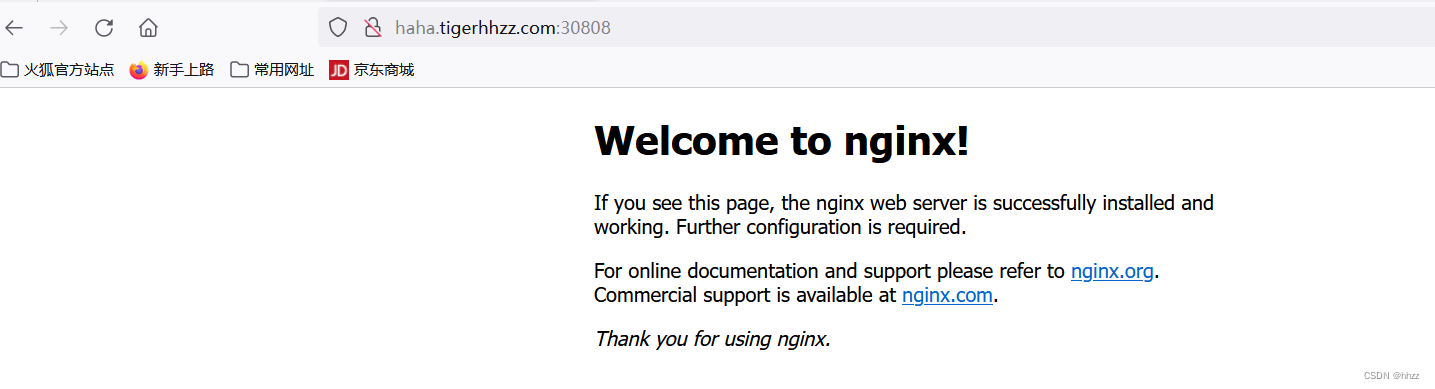

Access test:

http://haha.tigerhhzz.com:30808/

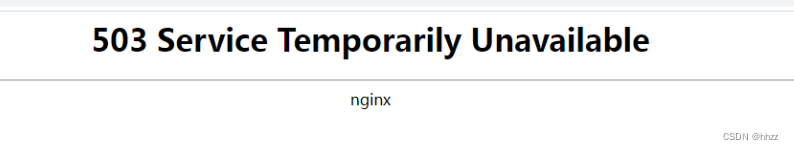

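Refreshing too fast returns 503, as the official documentation also states:

Rate Limiting

These annotations define limits on connections and transmission rates. They can be used to mitigate DDoS attacks.

- nginx.ingress.kubernetes.io/limit-connections: number of concurrent connections allowed from a single IP address. A 503 error is returned when this limit is exceeded.
- nginx.ingress.kubernetes.io/limit-rps: number of requests accepted from a given IP each second. The burst limit is set to this limit multiplied by the burst multiplier; the default multiplier is 5. When clients exceed this limit, limit-req-status-code (default: 503) is returned.
- nginx.ingress.kubernetes.io/limit-rpm: number of requests accepted from a given IP each minute. The burst limit is set to this limit multiplied by the burst multiplier; the default multiplier is 5. When clients exceed this limit, limit-req-status-code (default: 503) is returned.
- nginx.ingress.kubernetes.io/limit-burst-multiplier: multiplier of the limit rate for the burst size. The default burst multiplier is 5; this annotation overrides the default multiplier. When clients exceed this limit, limit-req-status-code (default: 503) is returned.
- nginx.ingress.kubernetes.io/limit-rate-after: initial number of kilobytes after which further transmission of a response to a given connection is rate limited. This feature must be used with proxy buffering enabled.
- nginx.ingress.kubernetes.io/limit-rate: number of kilobytes per second allowed to be sent to a given connection. A zero value disables rate limiting. This feature must be used with proxy buffering enabled.
- nginx.ingress.kubernetes.io/limit-whitelist: client IP source ranges to be excluded from rate limiting. The value is a comma-separated list of CIDRs.

If you specify multiple annotations in a single Ingress rule, limits are applied in the order limit-connections, limit-rpm, limit-rps.

To configure settings globally for all Ingress rules, the limit-rate-after and limit-rate values can be set in the NGINX ConfigMap. A value set in an Ingress annotation overrides the global setting.

The client IP address is determined from the PROXY protocol, or from the X-Forwarded-For header value when use-forwarded-headers is enabled.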
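The `limit-rps` behaviour quoted above can be modeled with a small leaky bucket. This is a hypothetical Python sketch that only loosely follows nginx's `limit_req` idea, not ingress-nginx's code: it assumes one bucket per client IP, a burst of `rps * burst_multiplier`, and that requests inside the burst are served immediately instead of being delayed. In this model, with `limit-rps: "1"` and the default multiplier of 5, six back-to-back requests are accepted and the seventh would get a 503, which is why rapid refreshing soon fails.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LimitRps:
    """Leaky-bucket sketch of limit-rps for a single client IP."""
    rps: float
    burst_multiplier: int = 5
    excess: float = 0.0           # requests currently above the steady rate
    last: Optional[float] = None  # time (seconds) of the last accepted request

    def allow(self, now: float) -> bool:
        if self.last is None:  # first request from this client
            self.last = now
            return True
        # Drain the bucket at `rps` per second since the last accepted request, then add this one.
        excess = max(0.0, self.excess - self.rps * (now - self.last)) + 1
        if excess > self.rps * self.burst_multiplier:
            return False  # ingress-nginx would answer with limit-req-status-code (503 by default)
        self.excess, self.last = excess, now
        return True


if __name__ == "__main__":
    bucket = LimitRps(rps=1)
    burst = [bucket.allow(0.0) for _ in range(10)]
    print(burst.count(True), "accepted,", burst.count(False), "rejected")
```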

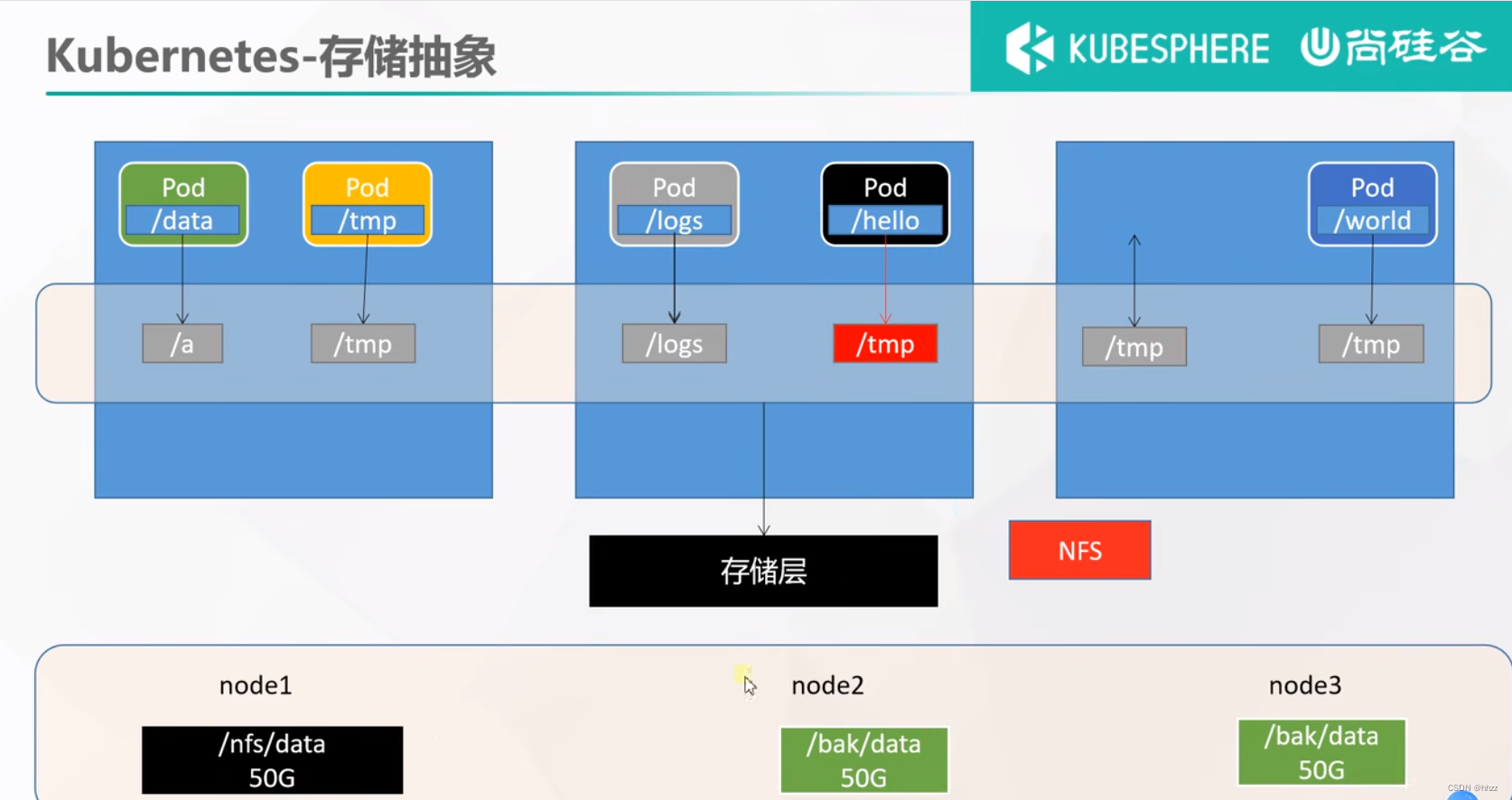

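7. Kubernetes storage abstraction

Similar to mounts in Docker, but self-healing and failover have to be taken into account: a Pod that is rescheduled onto another node must still see its data.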

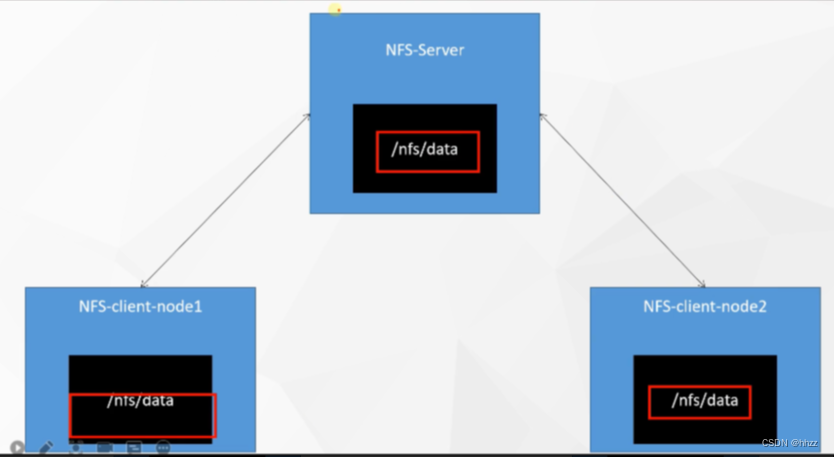

7.1 NFS 搭建

网络文件系统

1、所有节点

安装nfs-utils

# 所有机器执行

yum install -y nfs-utils

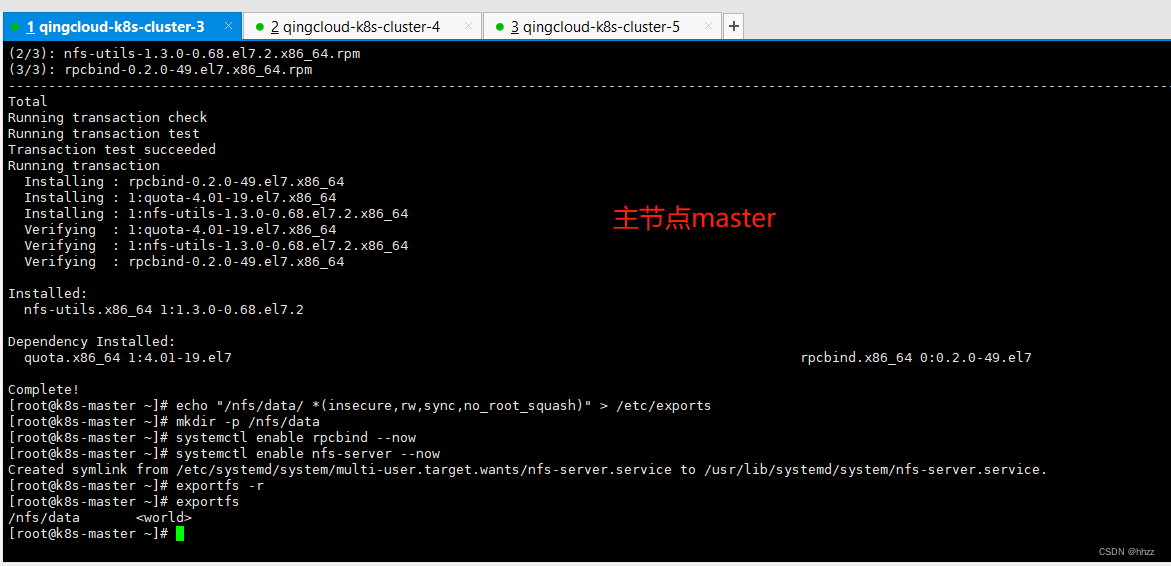

2、主节点

# 只在 mster 机器执行:nfs主节点,rw 读写

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

# 配置生效

exportfs -r

3、从节点

# 检查,下面的 IP 是master IP

showmount -e xxx.xxx.xxx.xxx

# 在 2 个从服务器 执行,执行以下命令挂载 nfs 服务器上的共享目录到本机路径 /root/nfsmount

mkdir -p /nfs/data

# 在 2 个从服务器执行,将远程 和本地的 文件夹 挂载

mount -t nfs 139.198.36.162:/nfs/data /nfs/data

# 在 master 服务器,写入一个测试文件

echo "hello nfs server" > /nfs/data/test.txt

# 在 2 个从服务器查看

cd /nfs/data

ls

# 在 从服务器 修改,然后去 其他 服务器 查看,也能 同步

7.2 Mounting data the native way

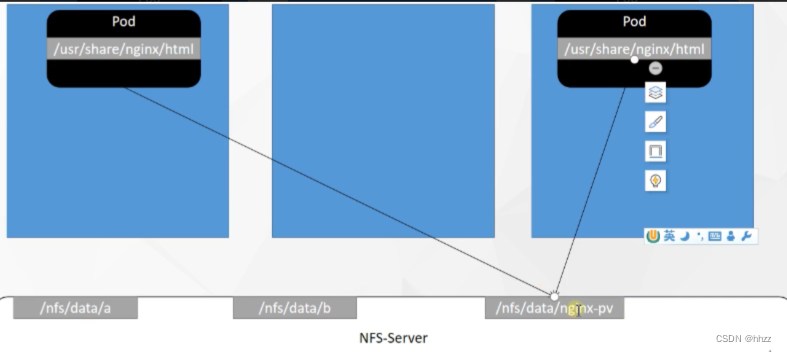

Mount /nfs/data/nginx-pv; when you modify its contents, the two Pods see the change too.

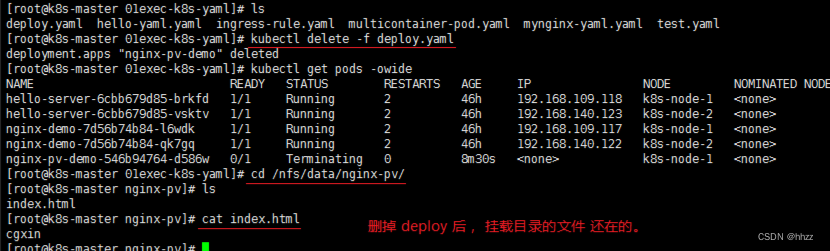

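Problem: after the Pods are deleted, the files and their contents are still there, and there is no way to manage their size.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-pv-demo
  name: nginx-pv-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pv-demo
  template:
    metadata:
      labels:
        app: nginx-pv-demo
    spec:
      containers:
      - image: nginx
        name: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html  # mount directory
      volumes:
      # must match volumeMounts.name
      - name: html
        nfs:
          server: 192.168.27.251  # IP of the master (NFS server) node
          path: /nfs/data/nginx-pv  # create this folder beforehand, otherwise the mount fails
```

```bash
cd /nfs/data
mkdir -p nginx-pv
ls
vi deploy.yaml
# paste the configuration above
kubectl apply -f deploy.yaml
kubectl get pod -owide
cd /nfs/data/
ls
cd nginx-pv/
echo "cgxin" > index.html
# then enter a Pod to check the file
```

Problem: the data keeps taking up space; after the Pods are deleted, the files and their contents remain, and there is no way to limit their size.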

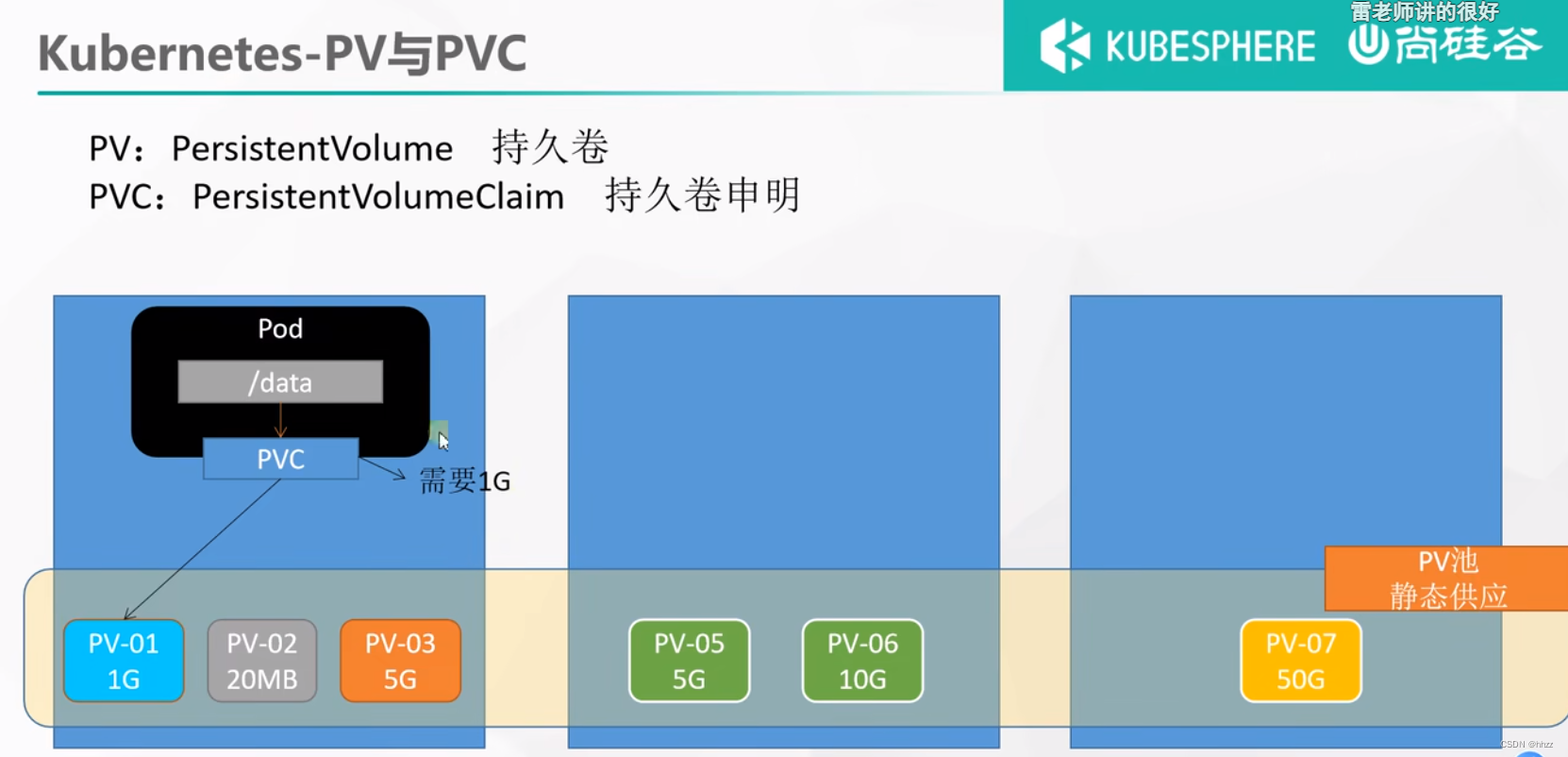

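7.3 PV and PVC ★

PV: Persistent Volume; saves the data an application needs to persist to a specified location.

PVC: Persistent Volume Claim; declares the specification of the persistent volume to be used.

PV/PVC mount directories; a ConfigMap mounts configuration files.

What is shown here is static provisioning: you create volumes with fixed capacities yourself, and the PVC picks one of them. There is also dynamic provisioning, where you don't need to create the PV pool by hand.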

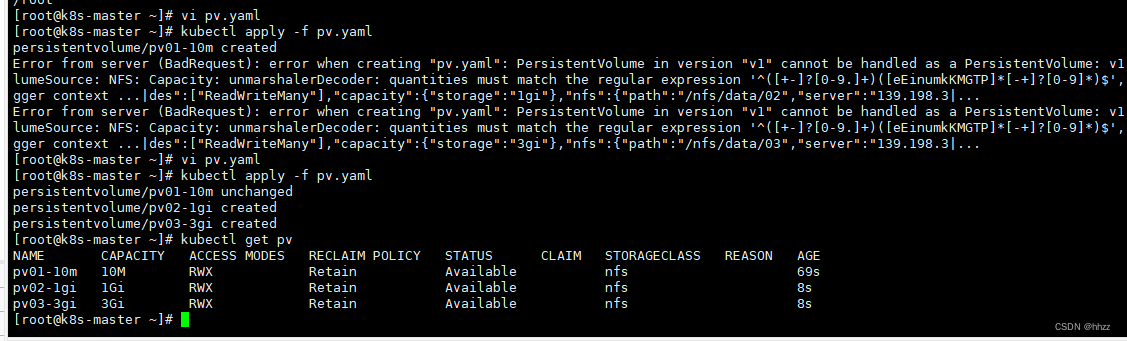

7.3.1 Creating a PV pool

Static provisioning

```bash
# Run on the NFS server (master)
mkdir -p /nfs/data/01
mkdir -p /nfs/data/02
mkdir -p /nfs/data/03
```

Use pv.yaml to create 3 PVs:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-10m
spec:
  # capacity limit
  capacity:
    storage: 10M
  # access mode: read-write, mountable by many nodes
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    # mount the folder created above
    path: /nfs/data/01
    # IP of the NFS server (master)
    server: 139.198.36.162
---
apiVersion: v1
kind: PersistentVolume
metadata:
  # the name must be lowercase; an uppercase "Gi" here would be rejected
  name: pv02-1gi
spec:
  capacity:
    # quantity suffixes are case-sensitive: 1Gi is valid, 1gi is not
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/02
    # IP of the NFS server (master)
    server: 139.198.36.162
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/03
    # IP of the NFS server (master)
    server: 139.198.36.162
```

```bash
vi pv.yaml
# paste the file above
kubectl apply -f pv.yaml
# list the PVs (short form: kubectl get pv)
kubectl get persistentvolume
```

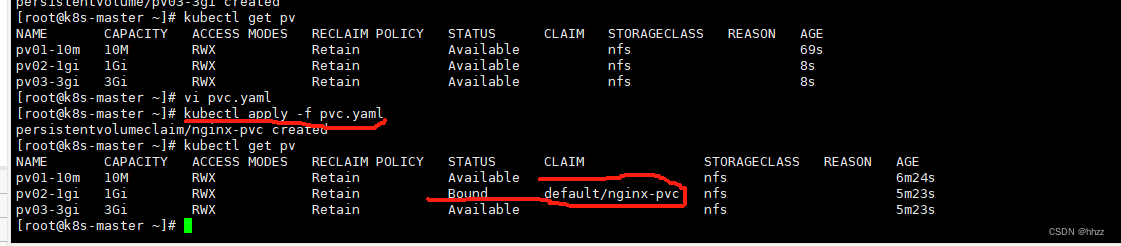

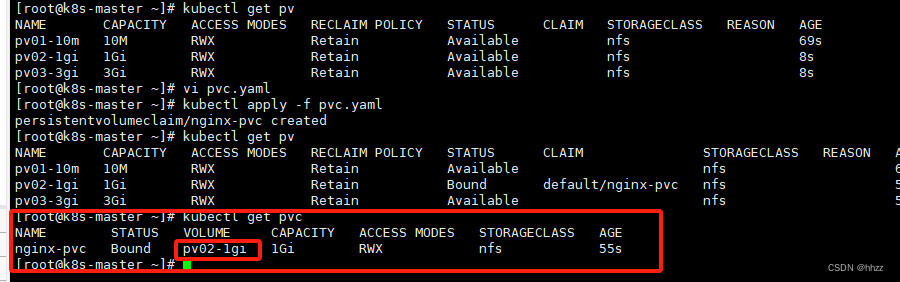

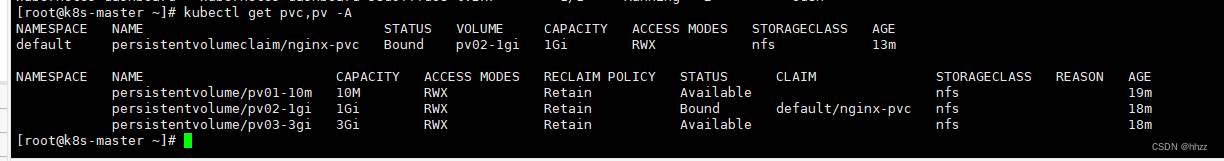

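7.3.2 Creating and binding a PVC

A PVC is the equivalent of an application form for using a PV.

Create the PVC:

pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      # request a 200Mi PV
      storage: 200Mi
  # must match the storageClassName used in the PVs above
  storageClassName: nfs
```

```bash
vi pvc.yaml
# paste the configuration above
kubectl get pv
kubectl apply -f pvc.yaml
kubectl get pv
kubectl get pvc
```

The claim is bound to the 1Gi PV: 10M is too small and 3Gi is larger than needed, so the 1Gi volume was chosen.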

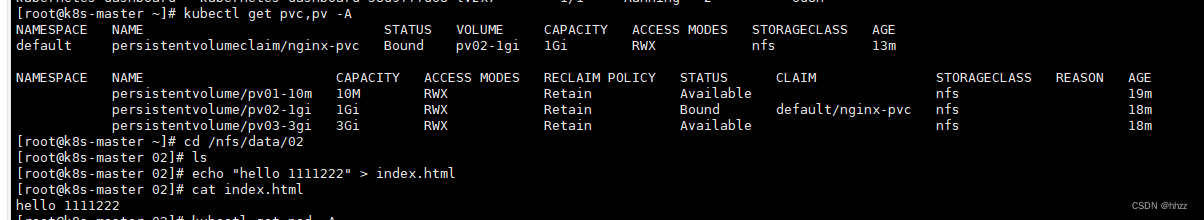
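The choice of the 1Gi volume can be reproduced with a simplified matching function. This Python sketch is a model under stated assumptions, not the PV controller's code: `to_bytes` and `pick_pv` are hypothetical helpers that only check `storageClassName`, access modes, and capacity (the real controller also considers label selectors, volume mode, and more), then pick the smallest PV that is large enough, which is the behaviour seen above. The quantity parser handles only the suffixes used in this section and, like Kubernetes, is case-sensitive, so `to_bytes("1gi")` raises `ValueError`.

```python
from typing import Dict, List, Optional

# Only the quantity suffixes used in this section: binary (Ki, Mi, Gi) and decimal (k, M, G).
SUFFIXES: Dict[str, int] = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "k": 10**3, "M": 10**6, "G": 10**9}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '10M' or '200Mi' to bytes (case-sensitive)."""
    for suffix in sorted(SUFFIXES, key=len, reverse=True):  # check 'Mi' before 'M'
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * SUFFIXES[suffix]
    return int(quantity)  # no known suffix: plain bytes (raises ValueError for e.g. '1gi')


def pick_pv(pvs: List[dict], claim: dict) -> Optional[str]:
    """Return the name of the smallest PV that satisfies the claim, or None (claim stays Pending)."""
    fits = [
        pv for pv in pvs
        if pv["storageClassName"] == claim["storageClassName"]
        and set(claim["accessModes"]) <= set(pv["accessModes"])
        and to_bytes(pv["capacity"]) >= to_bytes(claim["request"])
    ]
    return min(fits, key=lambda pv: to_bytes(pv["capacity"]))["name"] if fits else None


PVS = [
    {"name": "pv01-10m", "capacity": "10M", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"},
    {"name": "pv02-1gi", "capacity": "1Gi", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"},
    {"name": "pv03-3gi", "capacity": "3Gi", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"},
]
NGINX_PVC = {"request": "200Mi", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"}

if __name__ == "__main__":
    print(pick_pv(PVS, NGINX_PVC))  # pv02-1gi
```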

7.3.3 Creating a Pod bound to the PVC

Create the Pods and bind them to the PVC:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
      - image: nginx
        name: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
      volumes:
      - name: html
        # previously nfs; here a PVC is used instead
        persistentVolumeClaim:
          claimName: nginx-pvc
```

```bash
vi dep02.yaml
# paste the YAML above
kubectl apply -f dep02.yaml
kubectl get pod
kubectl get pv
kubectl get pvc
```

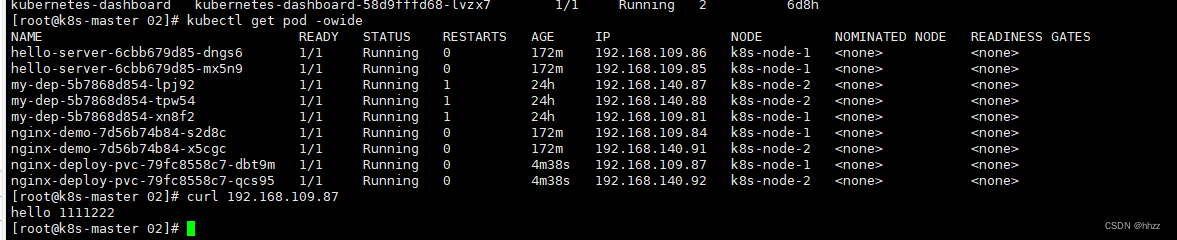

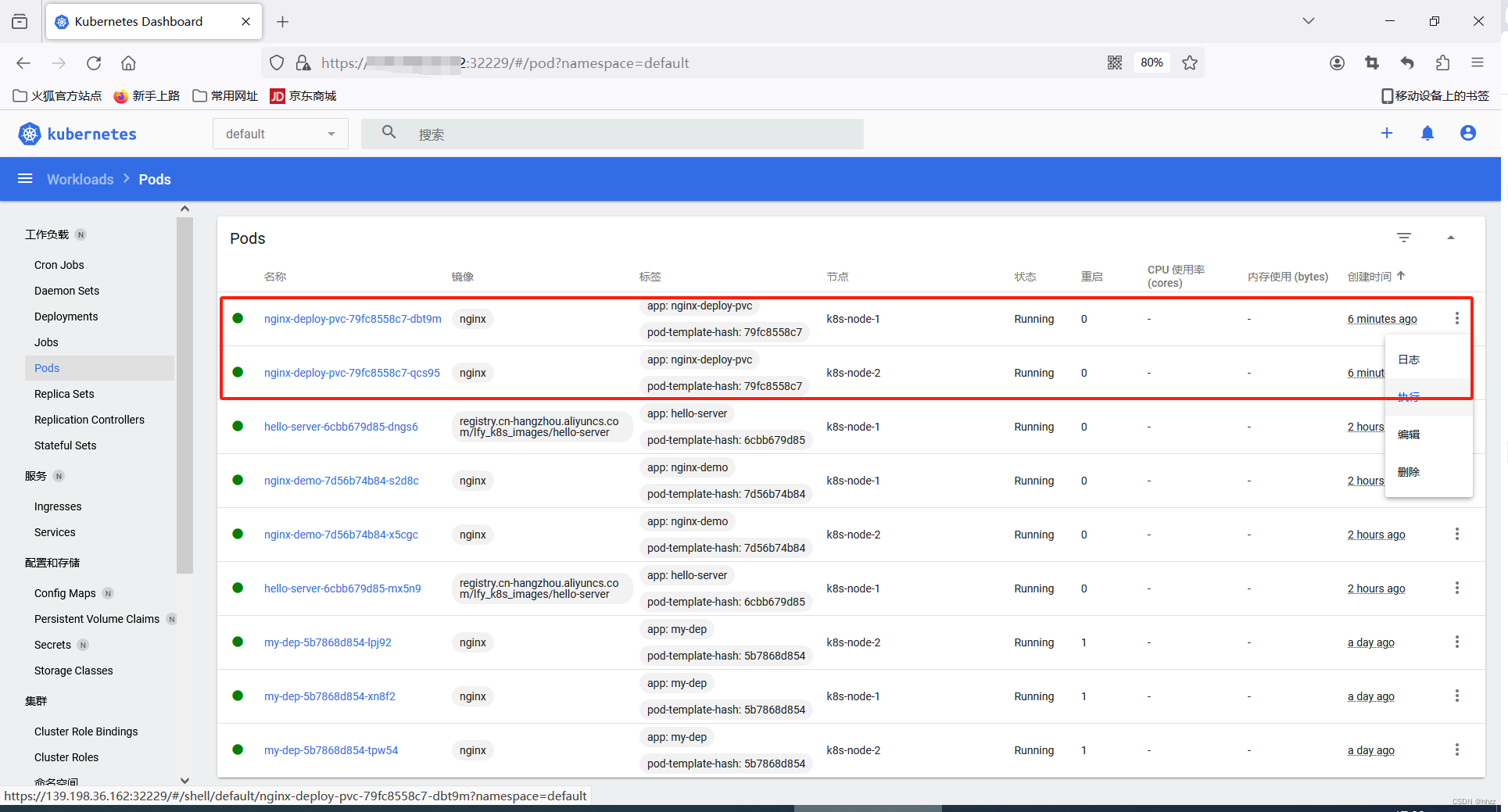

After mounting, test it:

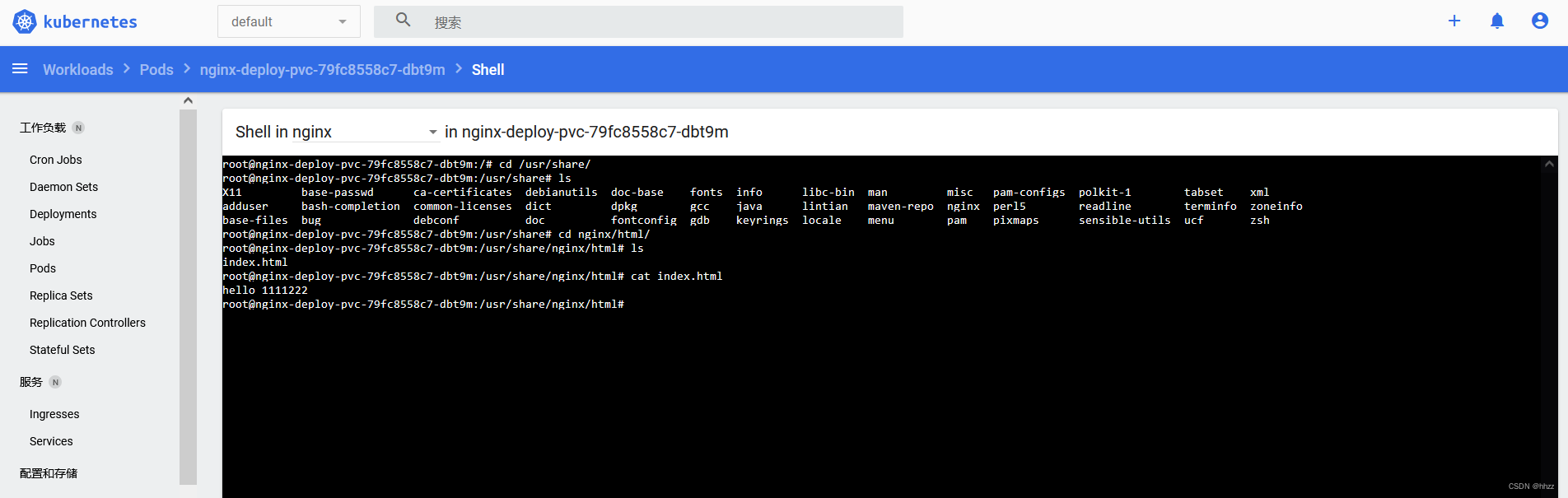

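Enter a Pod to check the synced files.

7.4 ConfigMap ★

ConfigMap: extracts application configuration and can be updated automatically. It mounts configuration files, whereas PV and PVC mount directories.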

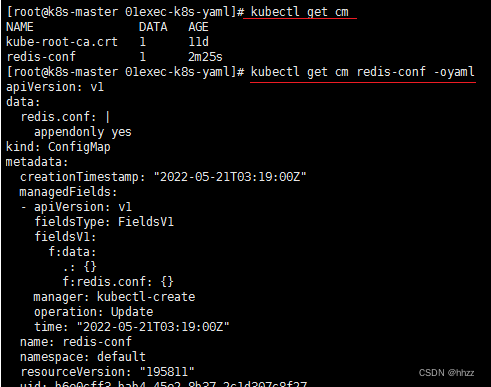

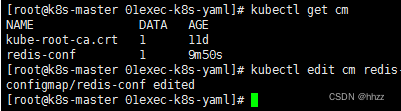

7.4.1 Redis example

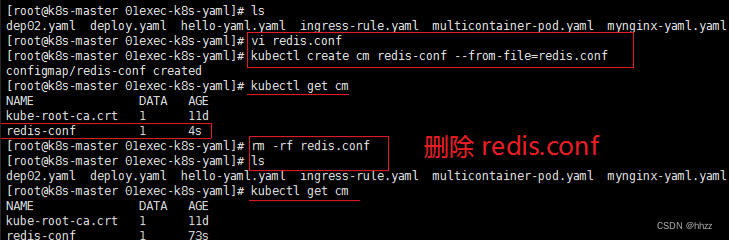

- Create the ConfigMap

Create / delete a cm:

```bash
vi redis.conf
# Write the following line into it:
#   appendonly yes

# Create the config; it is saved in Kubernetes' etcd
kubectl create cm redis-conf --from-file=redis.conf

# View it
kubectl get cm

rm -rf redis.conf

# See what the ConfigMap's YAML looks like
kubectl get cm redis-conf -oyaml
```

```yaml
apiVersion: v1
data:  # data holds the actual content; key: the file name by default; value: the file content ("appendonly yes" is just an arbitrary example)
  redis.conf: |
    appendonly yes
kind: ConfigMap
metadata:
  name: redis-conf
  namespace: default
```

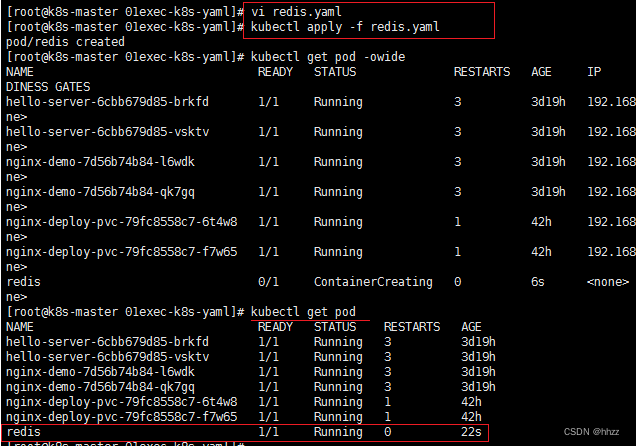

- Create the Pod

redis.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
  - name: redis
    image: redis
    command:
      # start command
      - redis-server
      # path of the config file inside the redis container
      - "/redis-master/redis.conf"
    ports:
    - containerPort: 6379
    volumeMounts:
    - mountPath: /data
      name: data
    - mountPath: /redis-master
      name: config
  volumes:
    - name: data
      emptyDir: {}
    - name: config
      configMap:
        name: redis-conf
        items:
        - key: redis.conf
          path: redis.conf
```

redis.conf will be placed under /redis-master.

```bash
vi redis.yaml
# paste the configuration above
kubectl apply -f redis.yaml
kubectl get pod
```

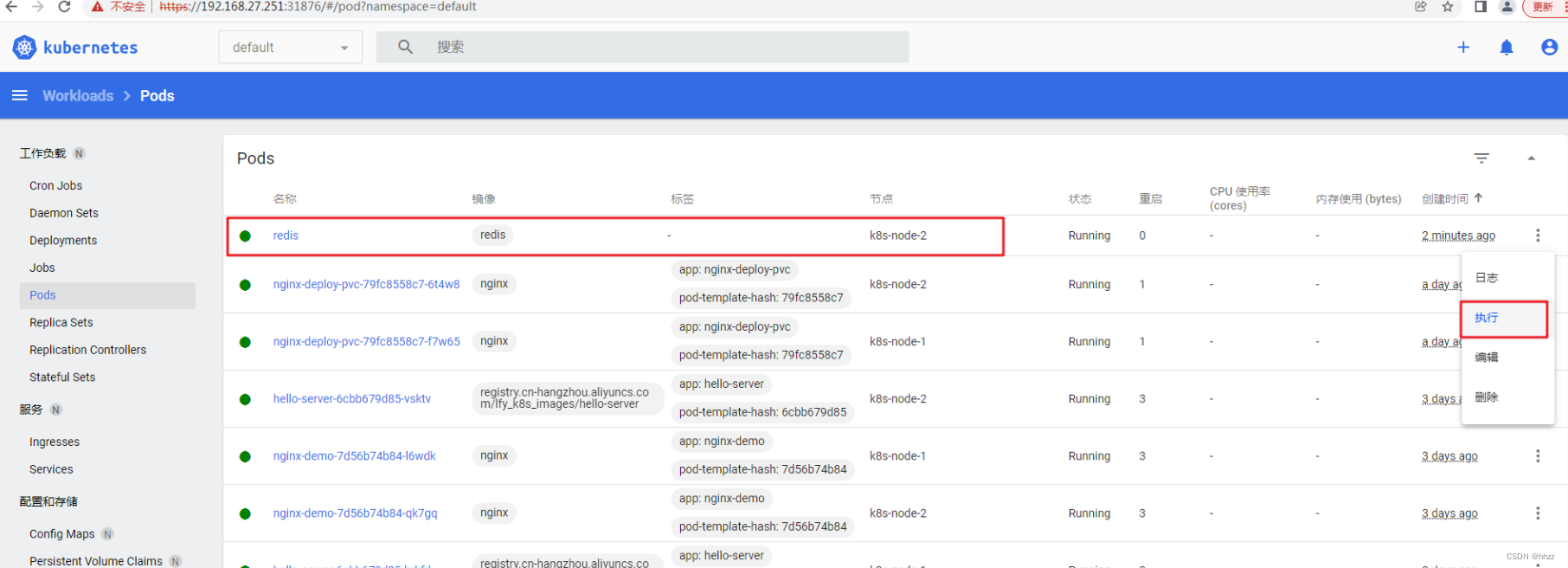

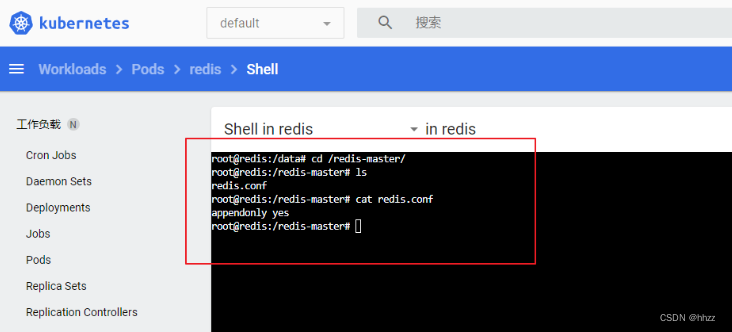

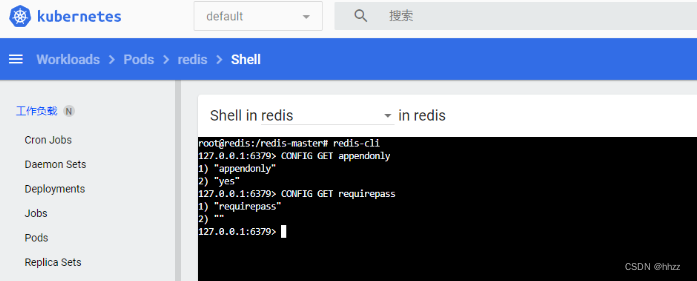
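The path from `redis.conf` on disk to a ConfigMap key, and from that key to `/redis-master/redis.conf` inside the Pod, can be sketched as plain dictionary transformations. The helper names `configmap_from_files` and `projected_files` are hypothetical and nothing here talks to a cluster; the sketch only illustrates that `--from-file` uses the file's base name as the key, and that when a `configMap` volume lists `items`, only the listed keys are projected, each at `<mountPath>/<path>`.

```python
from pathlib import PurePosixPath
from typing import Dict, List


def configmap_from_files(files: Dict[str, str]) -> Dict[str, str]:
    """Like `kubectl create cm --from-file`: key = file base name, value = file content."""
    return {PurePosixPath(path).name: content for path, content in files.items()}


def projected_files(data: Dict[str, str], mount_path: str, items: List[Dict[str, str]]) -> Dict[str, str]:
    """Map each listed key to <mountPath>/<path>, as a configMap volume with `items` does."""
    return {str(PurePosixPath(mount_path) / item["path"]): data[item["key"]] for item in items}


if __name__ == "__main__":
    data = configmap_from_files({"./redis.conf": "appendonly yes\n"})
    print(projected_files(data, "/redis-master", [{"key": "redis.conf", "path": "redis.conf"}]))
```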

In the web UI, open a shell in the redis Pod you just created.

Check the contents of the redis.conf config file.

```bash
kubectl get cm
# Edit redis.conf inside the ConfigMap
kubectl edit cm redis-conf
```

Modify the redis.conf content of redis-conf.


After a while, the change is synced into the Pod.

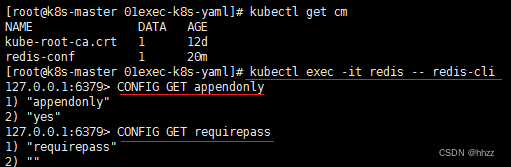

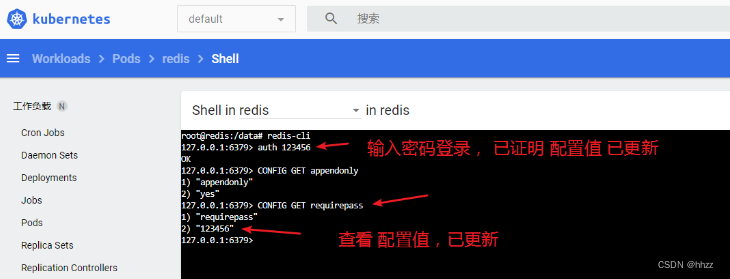

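3. Check the default configuration

```bash
kubectl exec -it redis -- redis-cli
# then, at the redis-cli prompt:
127.0.0.1:6379> CONFIG GET appendonly
127.0.0.1:6379> CONFIG GET requirepass
```

This works the same as running redis-cli directly on the command line.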

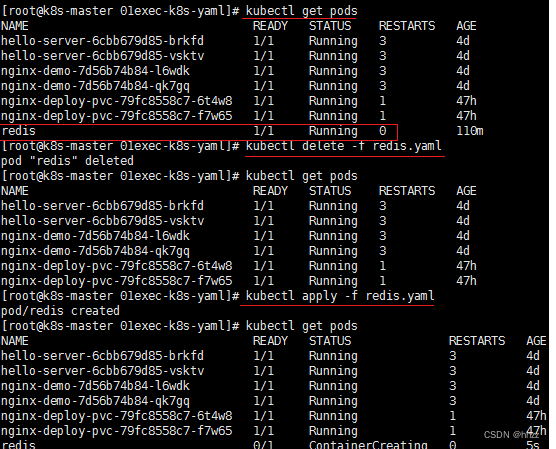

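Delete and recreate the Pod so that it picks up the updated configuration values.

Check the updated values.

Summary:

● After the ConfigMap is modified, the config file inside the Pod is synced accordingly.

● But the running configuration values do not change: the Pod must be restarted to pick up the updated values from the associated ConfigMap, because the middleware deployed in the Pod (here Redis) cannot hot-reload its own configuration.

7.5 Secret

Secret: an object type used to store sensitive information such as passwords, OAuth tokens, and SSH keys. Putting this information in a Secret is safer and more flexible than putting it in a Pod definition or a container image.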

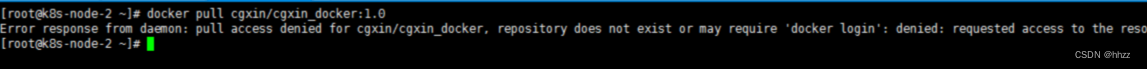

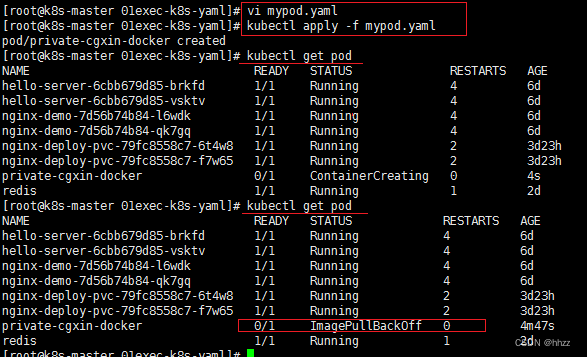

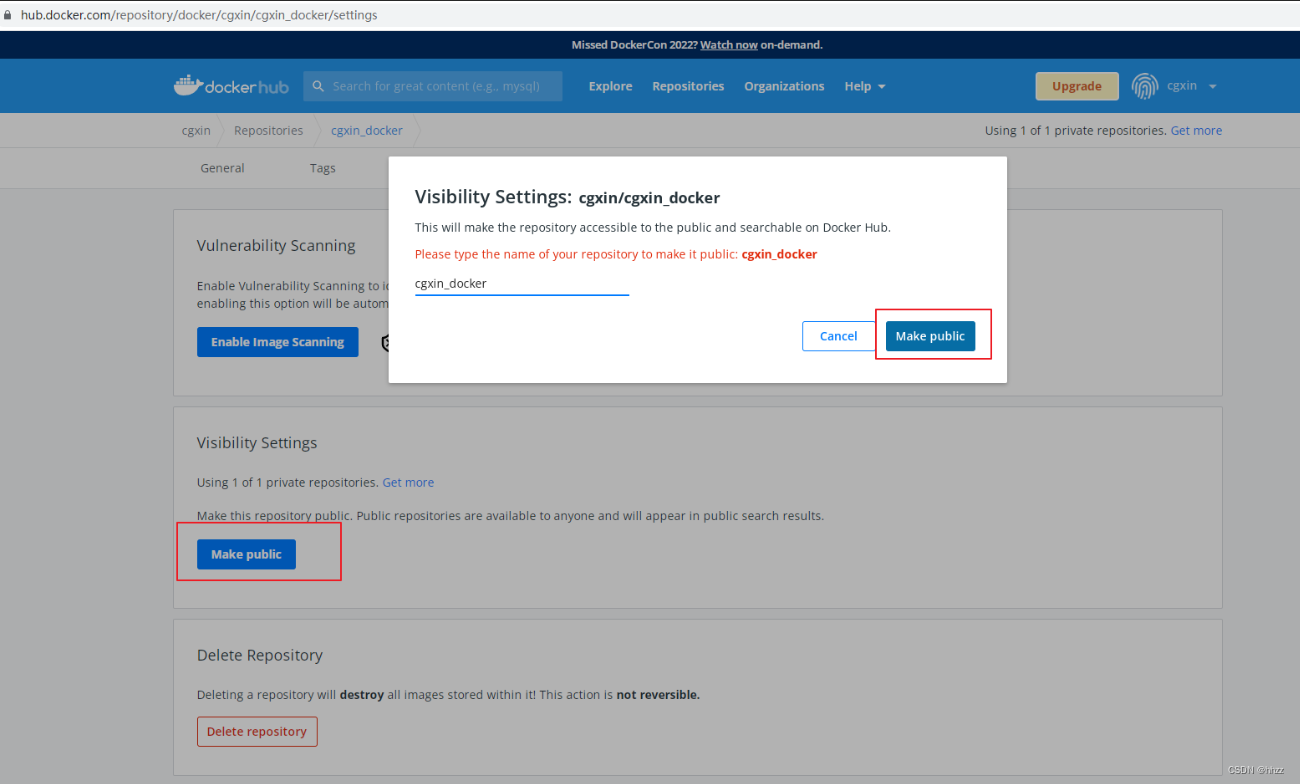

7.5.1 Pull failure

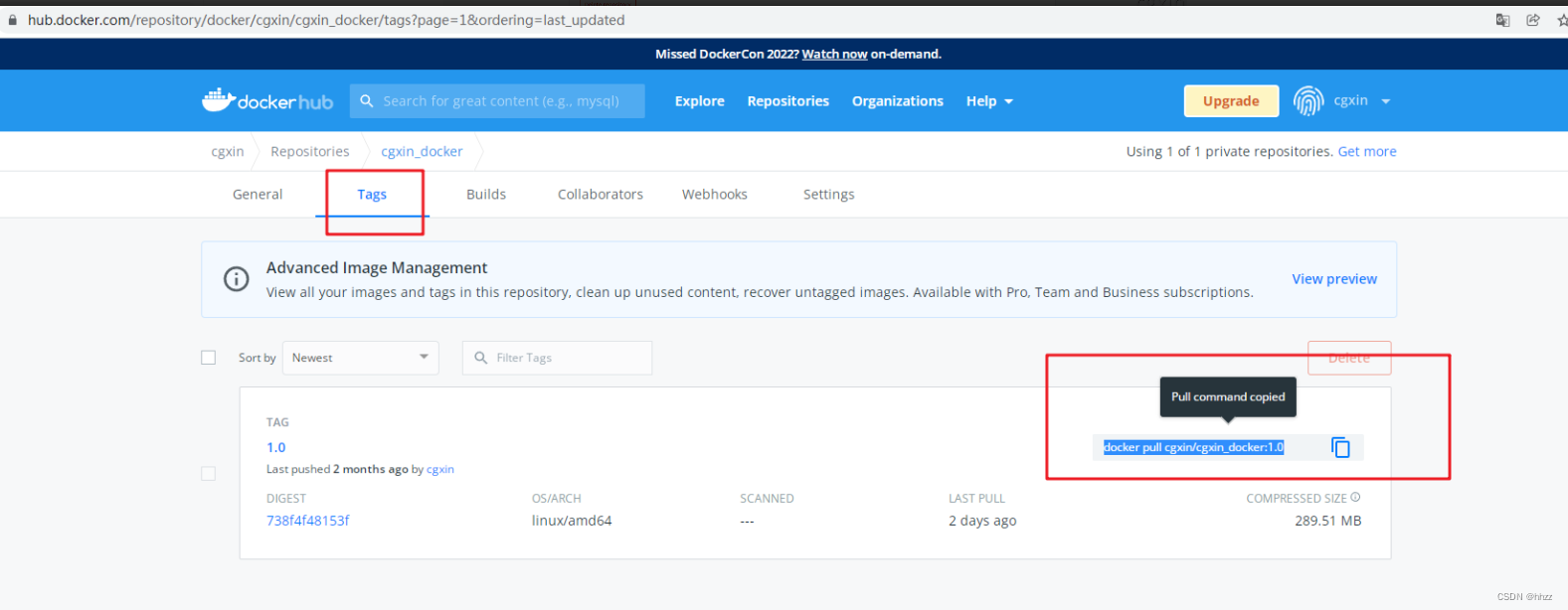

On Docker Hub, set your own repository to private, then try to pull the private image: the pull fails (you are not logged in).

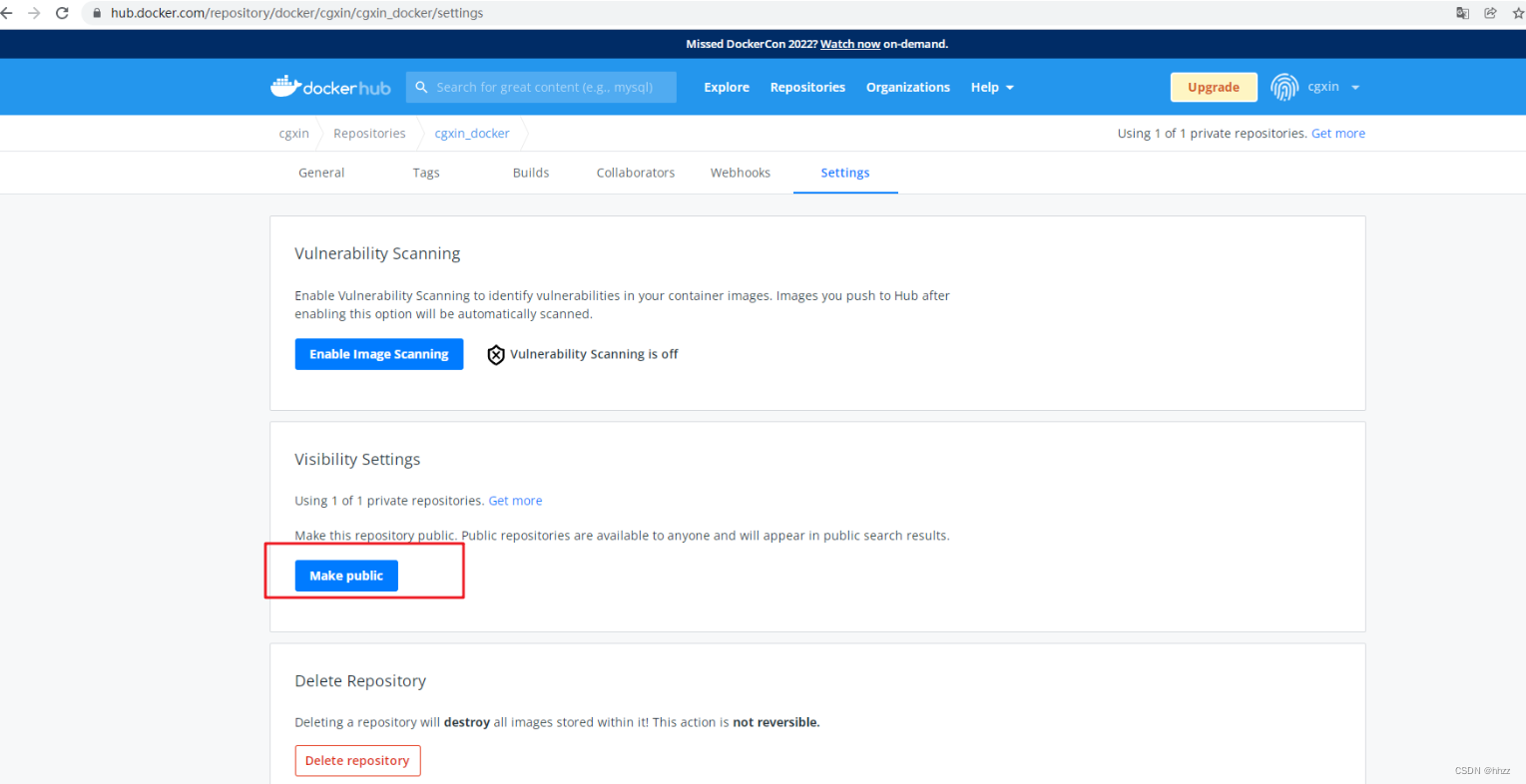

Set your own repository to private.

Check the pull command.

Pull denied.

mypod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: private-cgxin-docker
spec:
  containers:
  - name: private-cgxin-docker
    image: cgxin/cgxin_docker:1.0
```

```bash
vi mypod.yaml
# paste the configuration above
kubectl apply -f mypod.yaml
kubectl get pod
```

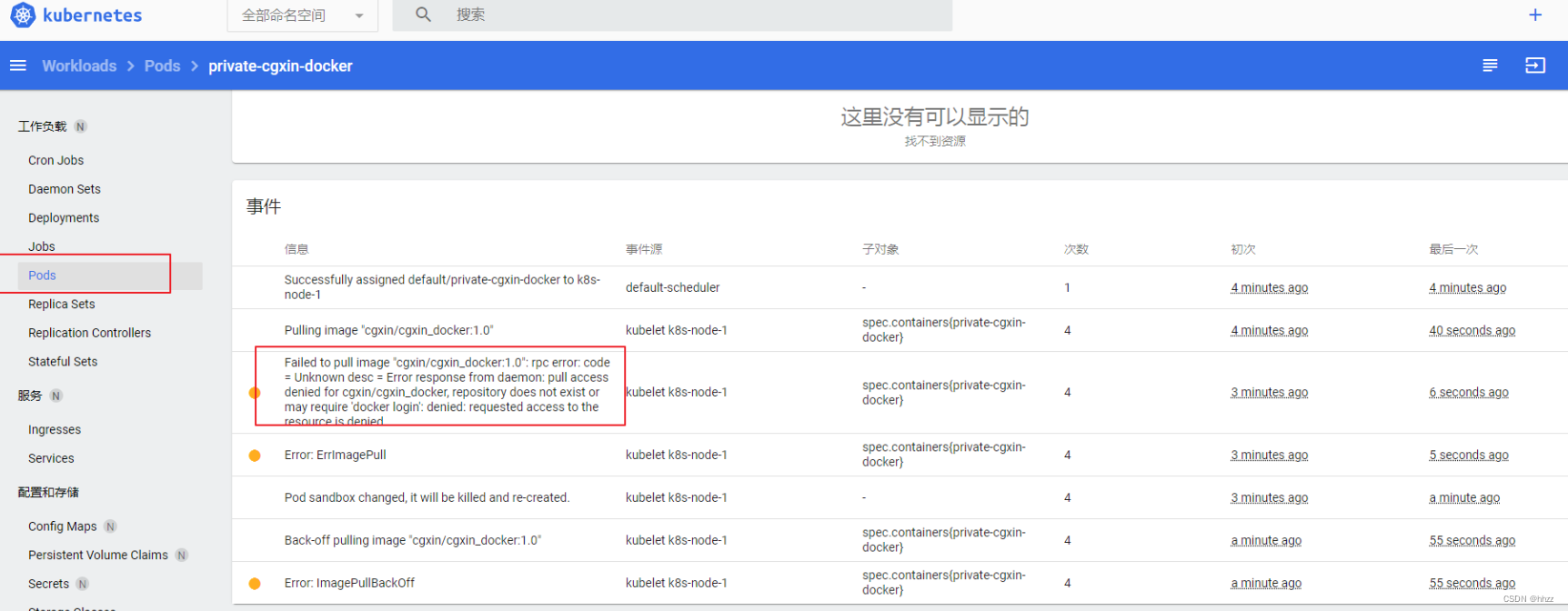

The image pull fails.

The error description in the web UI says the same thing: no permission.

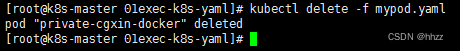

Delete the failed Pod created from that config file.

7.5.2 Creating a Secret

Create the Secret:

```bash
# --docker-server defaults to Docker Hub (https://index.docker.io/v1/) when omitted
kubectl create secret docker-registry cgxin-docker-secret \
  --docker-username=leifengyang \
  --docker-password=Lfy123456 \
  --docker-email=534096094@qq.com

## Command format
kubectl create secret docker-registry regcred \
  --docker-server=<your registry server> \
  --docker-username=<your username> \
  --docker-password=<your password> \
  --docker-email=<your email address>

# View it
kubectl get secret
kubectl get secret cgxin-docker-secret -oyaml
```

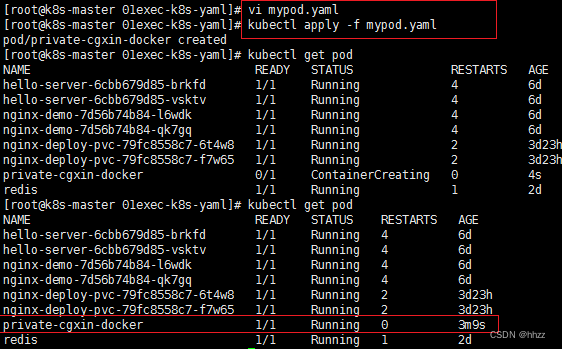
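To see what `kubectl create secret docker-registry` stores, the `.dockerconfigjson` payload can be rebuilt by hand. This Python sketch approximates the structure kubectl generates (an `auths` map keyed by registry server, with `auth` set to base64 of `username:password`); key order and JSON formatting may differ from kubectl's output, the helper name `docker_registry_secret_data` is hypothetical, and the credentials in the usage are made up. It also shows that Secret data is only base64-encoded, not encrypted, so anyone who can read the Secret can recover the password.

```python
import base64
import json
from typing import Dict

DOCKER_HUB = "https://index.docker.io/v1/"  # kubectl's default for --docker-server


def docker_registry_secret_data(username: str, password: str, email: str,
                                server: str = DOCKER_HUB) -> Dict[str, str]:
    """Build the `data` map of a kubernetes.io/dockerconfigjson Secret (approximation)."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {"auths": {server: {"username": username, "password": password,
                                 "email": email, "auth": auth}}}
    payload = json.dumps(config).encode()
    # Secret values are base64-encoded, not encrypted.
    return {".dockerconfigjson": base64.b64encode(payload).decode()}


if __name__ == "__main__":
    data = docker_registry_secret_data("demo-user", "demo-pass", "demo@example.com")
    decoded = json.loads(base64.b64decode(data[".dockerconfigjson"]))
    print(decoded["auths"][DOCKER_HUB]["password"])  # demo-pass
```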

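Modify the config file again and add the Secret:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: private-cgxin-docker
spec:
  containers:
  - name: private-cgxin-docker
    image: cgxin/cgxin_docker:1.0
  # add the Secret
  imagePullSecrets:
  - name: cgxin-docker-secret
```

```bash
vi mypod.yaml
# paste the configuration above
kubectl apply -f mypod.yaml
kubectl get pod
```

With the Secret in place, the image is pulled successfully.

Set the Docker Hub image back to public.

Summary:

Operating through the web UI is very convenient.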