Contents

background

(1) heterogeneity of graph

(2) semantic-level attention

(3) Node-level attention

(4) HAN

contributions

2. Related Work

2.1 GNN

2.2 Network Embedding

3. Preliminary

background

4. Proposed Model

4.1 Node-level attention

ideas: challenge: hetero graph -> homo graph lose much semantics and structural info.

4.2 Semantic-level attention

ideas:semantics

4.3 Analysis of the proposed model

5.4 classification

5.5 Analysis

GNN, a powerful graph representation technique

problem: heterogeneous graphs, which contain different types of nodes and links, have not been fully considered in graph neural networks.

- heterogeneity

- rich semantic information

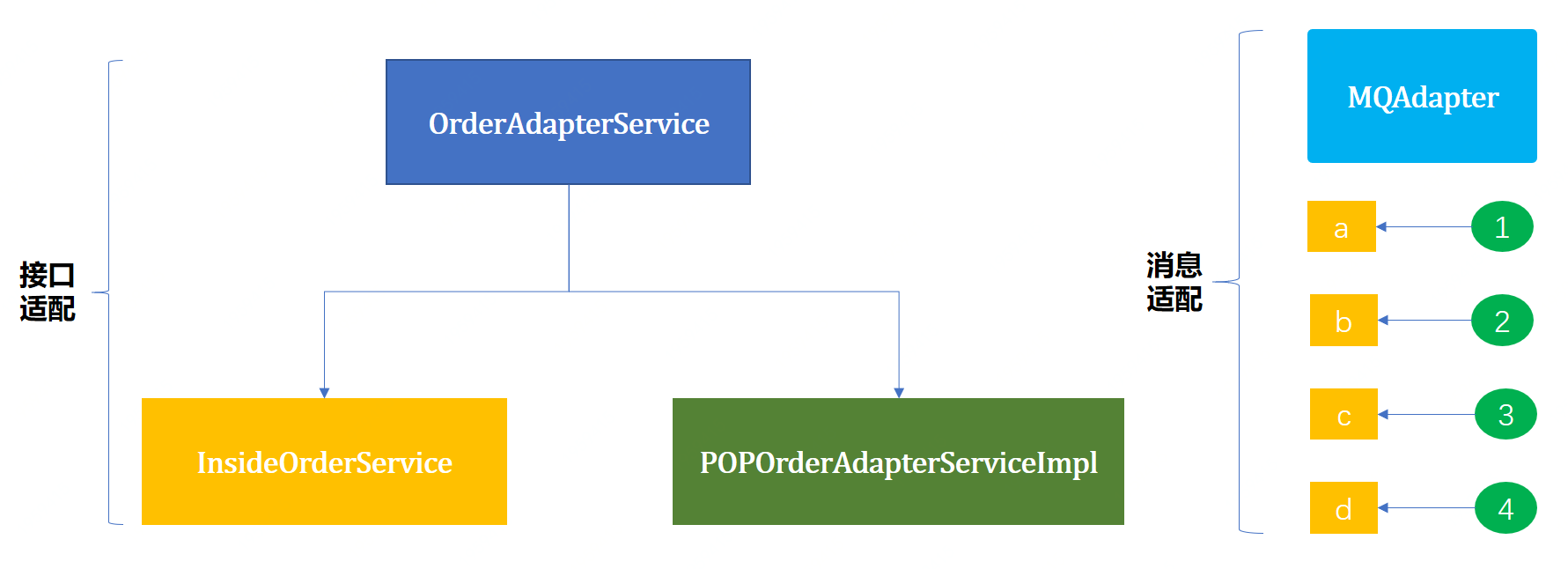

solution: HAN(Heterogeneous graph attention network)

- node-level attention: learn the importance between a node and its meta-path based neighbors

- semantic-level attention: learn the importance of different meta-paths.

-> the model can generate node embeddings by aggregating features from meta-path based neighbors in a hierarchical manner.

background

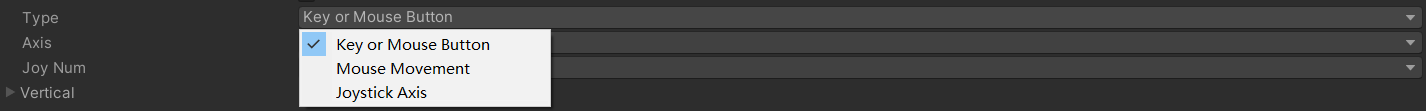

GAT: leverages the attention mechanism on homogeneous graphs, which include only one type of node and link.

- In fact, real-world graphs usually come with multiple types of nodes and edges; such graphs are widely known as heterogeneous information networks (HIN).

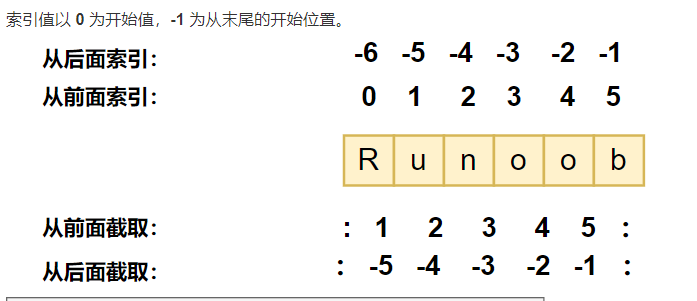

- meta-path: a composite relation connecting two objects, widely used to capture semantics. e.g. meta-path Movie-Actor-Movie (MAM) -> meta-path based neighbors can only be obtained by following a meta-path.

- a heterogeneous graph contains more comprehensive information and richer semantics. Depending on the meta-path, the relation between nodes in the heterogeneous graph can carry different semantics.
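
The MAM example above can be made concrete with a small sketch. It assumes a hypothetical toy graph of 3 movies and 2 actors (the matrix `movie_actor` and the helper `metapath_neighbors` are illustrative names, not from the paper) and derives the meta-path based neighbors by composing the Movie-Actor relation with its transpose.

```python
import numpy as np

# Hypothetical toy data: 3 movies (rows) x 2 actors (columns).
# movie_actor[m, a] == 1 means actor a appears in movie m.
movie_actor = np.array([
    [1, 0],  # movie 0 features actor 0
    [1, 1],  # movie 1 features actors 0 and 1
    [0, 1],  # movie 2 features actor 1
])

# Composing Movie-Actor with Actor-Movie counts MAM path instances.
mam_counts = movie_actor @ movie_actor.T

# Two movies are MAM neighbors if at least one path instance links them.
# The diagonal is nonzero, so every movie is also its own neighbor.
mam_adj = mam_counts > 0


def metapath_neighbors(adj, i):
    """Return the indices of the meta-path based neighbors of node i."""
    return np.flatnonzero(adj[i]).tolist()


neighbors = {m: metapath_neighbors(mam_adj, m) for m in range(mam_adj.shape[0])}
print(neighbors)
```

Movies 0 and 2 share no actor, so they are not MAM neighbors, while movie 1 is a neighbor of both. A different meta-path (for example one passing through directors) would generally yield a different neighbor set for the same movie.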

(1) heterogeneity of graph

problem: an intrinsic property of heterogeneous graph -> different types of nodes have different traits and their features may fall in different feature spaces.

-> how to handle such complex structured information and preserve the diverse feature information simultaneously is an urgent problem that needs to be solved.

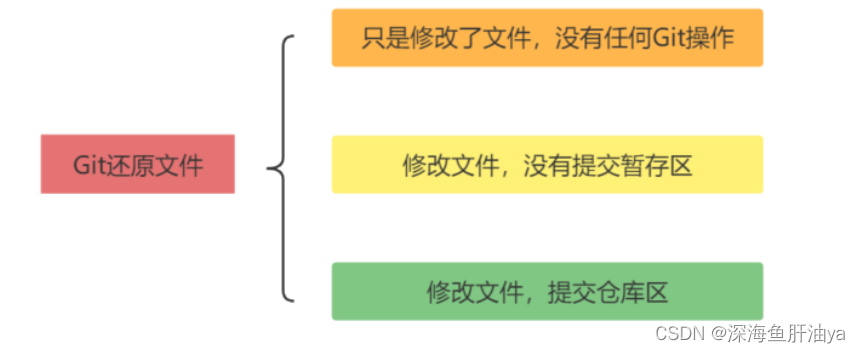

(2) semantic-level attention

background: Different meta-paths in the heterogeneous graph may extract diverse semantic information.

problem: how to select the most meaningful meta-paths and fuse the semantic information for the specific task.

solution: semantic-level attention aims to learn the importance of each meta-path and assign proper weights to them.

problem: treating different meta-paths equally is impractical and weakens the semantic information provided by the more useful meta-paths.

(3) Node-level attention

problem: each node has many meta-path based neighbors, so the model must distinguish the subtle differences among them and select the informative ones.

solution: node-level attention aims to learn the importance of meta-path based neighbors and assign different attention values to them.

e.g. The Terminator movie <-> meta-path relation

problem: how to design a model that can discover the subtle differences among neighbors and learn their weights properly.

(4) HAN

solution: HAN

- node-level attention: learn meta-path based neighbors' attention values

- semantic-level attention: learn meta-paths' attention values

-> our model can get the optimal combination of neighbors and multiple meta-paths in a hierarchical manner, which enables the learned node embeddings to better capture the complex structure and rich semantic information in a heterogeneous graph.

contributions

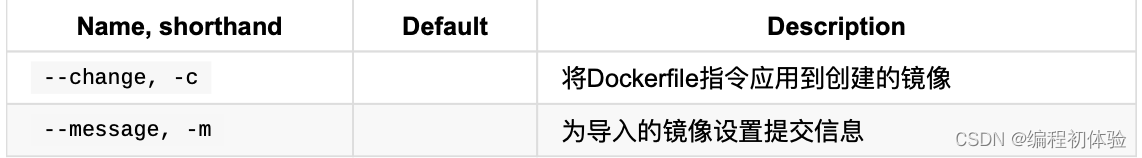

- first attempt to study heterogeneous GNNs based on the attention mechanism. -> the GNN is applied directly to the heterogeneous graph.

- HAN

- superiority -> good interpretability for heterogeneous graph analysis.

2. Related Work

2.1 GNN

- GCN, spectral approach: designs a graph convolutional network via a localized first-order approximation of spectral graph convolutions (graph Fourier).

- GraphSAGE, non-spectral approach: applies a neural-network-based aggregator over a fixed-size node neighborhood, generating embeddings by aggregating features from a node's local neighborhood.

- GAT: learns the importance between a node and its neighbors and fuses the neighbors to perform node classification.

2.2 Network Embedding

Network Representation Learning (NRL) -> embeds a network into a low-dimensional space while preserving the network structure and properties, so that the learned embeddings can be applied to downstream network tasks.

Heterogeneous graph embedding mainly focuses on preserving the meta-path based structural information.

Aim-1: needs to conduct grid search to find the optimal weights of meta-paths.

3. Preliminary

background

- different meta-paths always reveal different semantics.

- Given a meta-path φ, each node has a set of meta-path based neighbors, which can reveal diverse structural information and rich semantics in a heterogeneous graph.

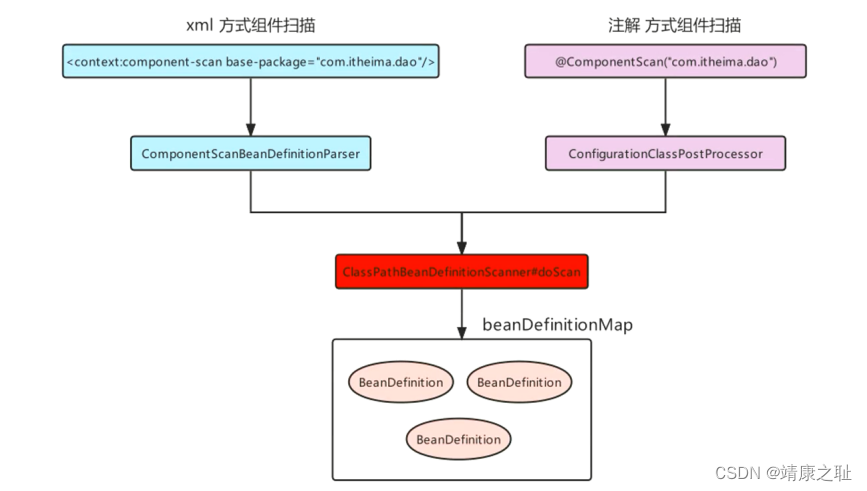

- graph neural networks have been proposed to deal with arbitrary graph-structured data. However, all of them are designed for homogeneous networks.

4. Proposed Model

semi-supervised GNN for heterogeneous graphs.

(1) node-level attention -> learn the weights of meta-path based neighbors and aggregate them to get the semantic-specific node embedding.

for node i: under a single meta-path (i.e. one semantic), compute the weights of its neighbors.

(2) semantic-level attention -> can tell the meta-paths apart and get the optimal weighted combination of the semantic-specific node embeddings.

for node i: the weights of the different meta-paths.

4.1 Node-level attention

problem: due to the heterogeneity of nodes, different types of nodes have different feature spaces.

solution: design type-specific transformation matrix to project the features of different types of nodes into the same feature space.

<- type-specific transformation matrix is based on node-type rather than edge-type.

- asymmetry: node-level attention preserves asymmetry, which is a critical property of heterogeneous graphs.
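
The type-specific transformation above can be sketched as follows. This is a minimal illustration under assumed shapes: the node types, feature sizes, and the names `raw`, `proj`, and `hidden_dim` are hypothetical, and the projection matrices are random here where a real model would learn them.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical raw features: movies have 4-dim features, actors 6-dim,
# so the two node types live in different feature spaces.
raw = {
    "movie": rng.normal(size=(3, 4)),
    "actor": rng.normal(size=(2, 6)),
}

hidden_dim = 5  # assumed size of the shared feature space

# One projection matrix per node type (not per edge type).
proj = {t: rng.normal(size=(x.shape[1], hidden_dim)) for t, x in raw.items()}

# Apply the projection of each node's own type, row by row.
projected = {t: raw[t] @ proj[t] for t in raw}

shapes = {t: h.shape for t, h in projected.items()}
print(shapes)
```

After the projection every node, whatever its type, is described by a vector of the same length, so attention scores between nodes of different types become well defined.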

ideas: challenge: hetero graph -> homo graph lose much semantics and structural info.

- problem-1: fail to learn the meta-path importance well.

- problem-2: heterogeneous elements can't be fed to a GNN directly; the graph has to be converted into a homogeneous graph first.

the attention weight is generated for a single meta-path, so it is semantic-specific and captures one kind of semantic information.

-> multi-head attention: repeat the node-level attention K times and concatenate the learned embeddings as the semantic-specific embedding.
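
A minimal NumPy sketch of node-level attention for one meta-path, followed by the multi-head concatenation, may help here. It assumes GAT-style scoring (LeakyReLU over a learned vector applied to the concatenated pair of features) and uses `tanh` as a stand-in for the output nonlinearity; the function names, the toy adjacency, and the random parameters are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def node_level_attention(h, adj, a):
    """One attention head for a single meta-path.

    h:   (N, d) projected node features
    adj: (N, N) boolean meta-path adjacency, self-loops included
    a:   (2d,) attention vector (random here, learned in practice)
    """
    d = h.shape[1]
    # e[i, j] = LeakyReLU(a^T [h_i || h_j]), computed via the two halves of a.
    e = leaky_relu((h @ a[:d])[:, None] + (h @ a[d:])[None, :])
    # Mask non-neighbors so the softmax runs only over meta-path neighbors.
    e = np.where(adj, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    # z_i = nonlinearity(sum_j alpha_ij * h_j); tanh is an assumed stand-in.
    z = np.tanh(alpha @ h)
    return z, alpha


def multi_head(h, adj, heads):
    """Run one head per row of `heads` and concatenate the embeddings."""
    return np.concatenate(
        [node_level_attention(h, adj, a)[0] for a in heads], axis=1
    )


rng = np.random.default_rng(0)
adj = np.array([[1, 1, 0], [1, 1, 1], [0, 1, 1]], dtype=bool)
h = rng.normal(size=(3, 4))
heads = rng.normal(size=(2, 8))  # K = 2 heads, each of length 2d

z, alpha = node_level_attention(h, adj, heads[0])
z_multi = multi_head(h, adj, heads)
print(alpha.round(3))
print(z_multi.shape)
```

Each row of `alpha` is normalized over that node's own neighbors, so `alpha[i, j]` and `alpha[j, i]` generally differ; this is the asymmetry the notes mention. Non-neighbors receive exactly zero weight.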

4.2 Semantic-level attention

need to fuse multiple semantics which can be revealed by meta-paths.
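
The fusion step can be sketched as follows, assuming the common formulation in which each meta-path's importance is the node-averaged score of a one-layer MLP followed by a softmax over meta-paths. The names `W`, `b`, `q`, and `semantic_level_attention` are illustrative, and the parameters are random rather than learned.

```python
import numpy as np


def semantic_level_attention(z_list, W, b, q):
    """Fuse semantic-specific embeddings, one (N, d) array per meta-path.

    W: (d, d_att) weight, b: (d_att,) bias, q: (d_att,) semantic attention vector.
    """
    # Importance of each meta-path: average over nodes of q^T tanh(W z_i + b).
    w = np.array([np.mean(np.tanh(z @ W + b) @ q) for z in z_list])
    # Normalize the importances across meta-paths with a softmax.
    beta = np.exp(w - w.max())
    beta = beta / beta.sum()
    # Final embedding: weighted sum of the semantic-specific embeddings.
    fused = sum(bp * z for bp, z in zip(beta, z_list))
    return fused, beta


rng = np.random.default_rng(0)
# Two hypothetical meta-paths, 3 nodes, 8-dim semantic-specific embeddings.
z_list = [rng.normal(size=(3, 8)) for _ in range(2)]
W = rng.normal(size=(8, 16))
b = np.zeros(16)
q = rng.normal(size=16)

fused, beta = semantic_level_attention(z_list, W, b, q)
print(beta, fused.shape)
```

The weights `beta` are shared by all nodes: one scalar per meta-path, which is what makes them directly readable as meta-path importances for the task.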

ideas:semantics

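The "semantics" here is only the narrow, word-order notion from traditional NLP. In a broader sense, semantics covers any attribute that can mark what makes an object distinctive, such as shape, image, timbre, or color.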

4.3 Analysis of the proposed model

5.4 classification

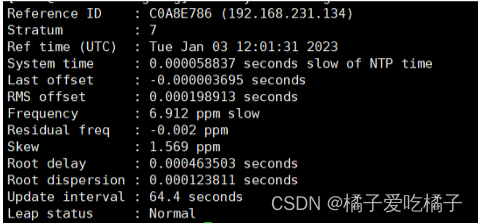

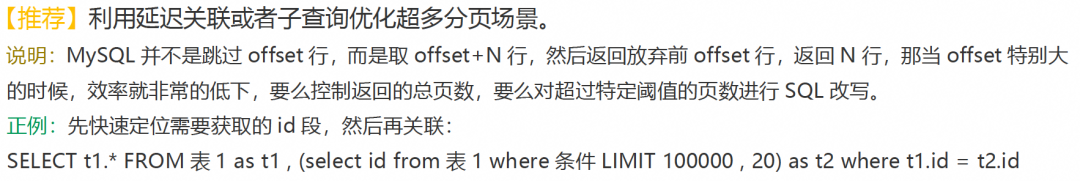

problem: the variance of results on graph-structured data can be quite high.

solution: repeat the process 10 times and report the average.

HAN -> designed for heterogeneous graphs, captures the rich semantics successfully and shows its superiority.

5.5 Analysis

- node-level attention: learn the attention values between a node and its neighbors within a meta-path.

- semantic-level attention: learn the attention values of the different meta-paths.