Classic Convolutional Neural Networks: ResNet

1. Background

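The Residual Neural Network (ResNet) was proposed by Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun at Microsoft Research. ResNet won first place in ILSVRC 2015 (ImageNet Large Scale Visual Recognition Challenge). Its main contributions are identifying the "degradation" problem and addressing it with "shortcut connections", which greatly eased the difficulty of training very deep networks. ResNet pushed practical network depth beyond 100 layers (152 layers on ImageNet), and the deepest model in the paper exceeds 1000 layers (1202 layers on CIFAR-10).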

2. ResNet Architecture

2.0 Residual Block

The explanation below follows the figures from Andrew Ng's deep learning course:

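As shown in the figure, a residual block takes $a^{[l]}$ and adds it to $z^{[l+2]}$; the sum is then passed through the activation function to obtain $a^{[l+2]}$. This is called a "skip connection": $a^{[l]}$ skips over one or more layers, carrying information directly to deeper layers of the network. A ResNet is built by stacking many such residual blocks into a deep neural network.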
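To make $a^{[l+2]} = g(z^{[l+2]} + a^{[l]})$ concrete, here is a minimal pure-Python sketch of one residual block acting on a plain vector. It assumes fully connected layers (weight matrices as lists of rows) instead of convolutions, and the helper names `relu`, `linear` and `residual_block` are illustrative only.

```python
def relu(v):
    """Element-wise ReLU, the activation g."""
    return [max(0.0, x) for x in v]


def linear(w, b, v):
    """Compute z = W v + b for a weight matrix given as a list of rows."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(w, b)]


def residual_block(a_l, w1, b1, w2, b2):
    """Two layers plus a skip connection: a[l+2] = g(z[l+2] + a[l])."""
    a_l1 = relu(linear(w1, b1, a_l))   # a[l+1] = g(W[l+1] a[l] + b[l+1])
    z_l2 = linear(w2, b2, a_l1)        # z[l+2] = W[l+2] a[l+1] + b[l+2]
    # add a[l] BEFORE the activation, then activate
    return relu([z + a for z, a in zip(z_l2, a_l)])


a_l = [1.0, 2.0]
w1 = [[1.0, 0.0], [0.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [[0.5, 0.0], [0.0, -0.5]]
b2 = [0.0, 0.0]
print(residual_block(a_l, w1, b1, w2, b2))  # [1.5, 1.0]
```

Note that the addition happens before the final ReLU, which is also the order used in the PyTorch code later in this article (`out += self.shortcut(identity)` followed by `self.relu(out)`).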

So why do residual blocks help? An intuitive explanation:

As shown in the figure, suppose we add two more layers to the network to obtain $a^{[l+2]}$, and we do so by adding a residual block. Then $a^{[l+2]} = g(z^{[l+2]} + a^{[l]}) = g(w^{[l+2]} a^{[l+1]} + b^{[l+2]} + a^{[l]})$. If we apply L2 regularization, the weights shrink toward zero. Taking the extreme case $w^{[l+2]} = 0$, $b^{[l+2]} = 0$, we get $a^{[l+2]} = g(a^{[l]}) = a^{[l]}$, because ReLU leaves non-negative values unchanged and $a^{[l]}$ is itself a ReLU output, so all its entries are non-negative. In other words, a residual block can easily learn the identity function, so a network with two extra layers need not perform worse than the simpler network: adding residual blocks to increase depth does not, in principle, hurt performance. If the two extra layers happen to learn something useful, the network will perform better than the original one.
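The identity argument can be checked numerically. The sketch below (pure Python, fully connected layers assumed, helper names hypothetical) sets $w^{[l+2]} = 0$ and $b^{[l+2]} = 0$ and compares two stacked layers with and without the skip connection: the plain layers collapse to zero, while the residual version returns $a^{[l]}$ unchanged.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(w, b, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(w, b)]


def two_layers(a_l, w1, b1, w2, b2, skip):
    """Two fully connected layers, optionally with a skip connection from a[l]."""
    a_l1 = relu(linear(w1, b1, a_l))
    z_l2 = linear(w2, b2, a_l1)
    if skip:
        z_l2 = [z + a for z, a in zip(z_l2, a_l)]
    return relu(z_l2)


# a[l] is itself a ReLU output, so it is non-negative
a_l = relu([0.7, -1.2, 3.0])
n = len(a_l)
w1 = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # arbitrary first layer
b1 = [0.0] * n
zero_w = [[0.0] * n for _ in range(n)]  # extreme case: W[l+2] = 0
zero_b = [0.0] * n                      # and b[l+2] = 0

plain = two_layers(a_l, w1, b1, zero_w, zero_b, skip=False)
residual = two_layers(a_l, w1, b1, zero_w, zero_b, skip=True)
print(plain)     # [0.0, 0.0, 0.0]: the plain layers destroy the signal
print(residual)  # [0.7, 0.0, 3.0]: identical to a[l]
```

Without the shortcut, the two layers would have to learn the identity mapping explicitly through their weights; with it, "doing nothing" only requires the weights to go to zero.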

In the paper, ResNets with 34 or fewer layers and those with 50 or more layers use different residual blocks. We introduce each in turn:

2.1 ResNet-34

The figure above shows the residual block used by ResNet-34 and shallower variants; we call it BasicBlock.

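The ResNet-34 architecture is shown below. In the figure, a solid shortcut line means the number of channels is unchanged, and a dashed line means the number of channels changes (in ResNet-34 these blocks also halve the spatial size).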
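Which shortcuts are dashed follows directly from the stage layout. The sketch below assumes the ResNet-34 stage settings (3, 4, 6 and 3 BasicBlocks with 64, 128, 256 and 512 channels, the first block of stages 2 to 4 using stride 2) and applies the same test as the `BasicBlock` code later in this article (`stride != 1 or in_channels != out_channels`). The names `RESNET34_STAGES` and `shortcut_kinds` are hypothetical.

```python
# Stage settings of ResNet-34: (out_channels, number of blocks, stride of the first block)
RESNET34_STAGES = [(64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)]


def shortcut_kinds(stages, in_channels=64):
    """Return 'solid' (identity) or 'dashed' (shape change) for every BasicBlock."""
    kinds = []
    for out_channels, blocks, stride in stages:
        for i in range(blocks):
            s = stride if i == 0 else 1  # only the first block of a stage may downsample
            same_shape = s == 1 and in_channels == out_channels
            kinds.append("solid" if same_shape else "dashed")
            in_channels = out_channels
    return kinds


kinds = shortcut_kinds(RESNET34_STAGES)
print(len(kinds), kinds.count("dashed"))  # 16 3
print([i for i, k in enumerate(kinds) if k == "dashed"])  # [3, 7, 13]
```

Only the first block of stages 2, 3 and 4 changes shape; the other 13 shortcuts are plain identities.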

The detailed configurations are listed in the table below:
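The key information in that table can also be written down as data. The sketch below encodes the number of blocks per stage (conv2_x to conv5_x) for each depth as given in Table 1 of the ResNet paper, and checks that the depth in each model's name counts the weighted layers: the 7×7 stem convolution, every convolution inside the residual blocks (2 per BasicBlock, 3 per Bottleneck) and the final fully connected layer. Projection-shortcut convolutions are not counted, following the usual convention; `CONFIGS` and `weighted_depth` are illustrative names.

```python
# Blocks per stage (conv2_x .. conv5_x) and block type for each ResNet depth
CONFIGS = {
    18: ("basic", [2, 2, 2, 2]),
    34: ("basic", [3, 4, 6, 3]),
    50: ("bottleneck", [3, 4, 6, 3]),
    101: ("bottleneck", [3, 4, 23, 3]),
    152: ("bottleneck", [3, 8, 36, 3]),
}
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def weighted_depth(block_type, blocks):
    """Stem conv + all convs inside residual blocks + final FC layer."""
    return 1 + CONVS_PER_BLOCK[block_type] * sum(blocks) + 1


for name, (block_type, blocks) in CONFIGS.items():
    print(name, block_type, blocks, weighted_depth(block_type, blocks))
```

ResNet-34 and ResNet-50 share the same stage layout; the difference in depth comes entirely from replacing the 2-conv BasicBlock with the 3-conv Bottleneck.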

2.2 ResNet-50

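The figure shows the residual block used by ResNet-50 and deeper variants, which we call Bottleneck. It uses 1×1 convolutions to first reduce and then restore the number of channels, which lowers the computational cost. The detailed configurations are given in the table above.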
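How much the 1×1 convolutions save can be estimated by counting convolution weights. The sketch below assumes bias-free convolutions and ignores BatchNorm and the shortcut; it compares a Bottleneck on a 256-channel input (1×1 down to 64, 3×3 at 64, 1×1 back to 256) with two 3×3 convolutions at 64 and at 256 channels. Because all three convolutions in a block run at the same spatial resolution, the multiply-add count scales in the same proportion. `conv_weights` is a hypothetical helper.

```python
def conv_weights(in_c, out_c, k):
    """Number of weights in a bias-free k x k convolution."""
    return in_c * out_c * k * k


# Bottleneck on a 256-channel input: 1x1 reduce -> 3x3 -> 1x1 restore
bottleneck = conv_weights(256, 64, 1) + conv_weights(64, 64, 3) + conv_weights(64, 256, 1)
# Two 3x3 convs at 64 channels (the BasicBlock of the first stage)
basic_64 = 2 * conv_weights(64, 64, 3)
# Two 3x3 convs applied directly at 256 channels
basic_256 = 2 * conv_weights(256, 256, 3)

print(bottleneck, basic_64, basic_256)  # 69632 73728 1179648
```

The Bottleneck handles a 4× wider input at roughly the cost of a 64-channel BasicBlock, and about 17× cheaper than two 3×3 convolutions at full width. The three Bottleneck terms (16,384 + 36,864 + 16,384) match the `Conv2d` rows of a layer1 block in the torchsummary output of the ResNet-50 implementation below.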

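3. Notes on the Paper

- The first figure of the paper shows that a deeper "plain" network (i.e. one without residual learning) has a higher error rate on both the training set and the test set than a shallower plain network. Since the training error also rises, this is not overfitting. This raises the problem the paper addresses: very deep networks are hard to train.

- Another figure in the paper shows that with residual learning, deeper networks achieve better results.

- How the paper handles shortcuts whose input and output shapes differ:

  - (A) Pad the extra channels with zeros so that the input and output shapes match and can be added (no extra parameters).

  - (B) Use a 1×1 convolution as a projection only where the input and output shapes differ (the approach commonly used today).

  - (C) Use 1×1 projections on all shortcuts; this adds too much computation for little benefit and is unnecessary.

- The paper's main contribution is the residual structure, which it uses to build extremely deep networks (over 1000 layers).

- Batch normalization is used extensively to speed up training, and dropout is not used.

- The paper runs extensive experiments on CIFAR-10 to validate the approach, and finally also applies ResNet to object detection.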
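The zero-padding and 1×1 projection shortcut options listed above differ only in how the input is reshaped before the addition. Below is a minimal PyTorch sketch for a stage transition from 64 to 128 channels with stride 2; the function names are hypothetical, and the zero-padding version (which subsamples with `x[:, :, ::2, ::2]` and pads channels with `F.pad`) is one common way to implement the parameter-free option, not necessarily the paper's exact code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def shortcut_zero_pad(x, out_channels, stride=2):
    """Parameter-free shortcut: subsample spatially, then zero-pad the extra channels."""
    x = x[:, :, ::stride, ::stride]
    extra = out_channels - x.size(1)
    # F.pad pads from the last dim backwards: (W_left, W_right, H_top, H_bottom, C_front, C_back)
    return F.pad(x, (0, 0, 0, 0, 0, extra))


def shortcut_projection(in_channels, out_channels, stride=2):
    """Projection shortcut: 1x1 conv + BN, as used in the BasicBlock code below."""
    return nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride, bias=False),
        nn.BatchNorm2d(out_channels),
    )


x = torch.randn(2, 64, 56, 56)
a = shortcut_zero_pad(x, 128)
proj = shortcut_projection(64, 128)
b = proj(x)
print(a.shape, b.shape)  # both are [2, 128, 28, 28]
print(sum(p.numel() for p in proj.parameters()))  # 8448 = 64 * 128 conv weights + 2 * 128 BN params
```

The zero-padding shortcut adds no parameters but the padded channels carry no information from the input, while the projection costs 8,448 parameters per transition (the 8,192 + 256 that appear in the ResNet-18 torchsummary output below) and can learn a mixing of the input channels.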

4. PyTorch Implementation of ResNet-18

```python
import torch
import torch.nn as nn
import torchsummary


# BasicBlock: the residual block used by ResNet-18/34
class BasicBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1):
        super(BasicBlock, self).__init__()
        # The first conv may downsample: the number of output channels doubles
        # and the spatial size is halved, so its stride is passed in as a parameter.
        # A conv followed by BN does not need a bias.
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        # The second conv keeps both the spatial size and the number of channels
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)

        # If the output shape differs from the input shape, project the input with a
        # 1x1 conv (the same downsampling: doubled channels, halved spatial size)
        # so that it can be added to the output. Otherwise the shortcut is the identity.
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )

    def forward(self, x):
        # Keep the input for the shortcut
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        # Residual connection
        out += self.shortcut(identity)
        # Activate after the addition
        out = self.relu(out)
        return out


# ResNet-18
class ResNet18(nn.Module):
    def __init__(self, num_classes=1000):
        super(ResNet18, self).__init__()
        # output_size = floor((input_size - kernel_size + 2 * padding) / stride) + 1
        # 112 = floor((224 - 7 + 2 * padding) / 2) + 1  ->  padding = 3
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        # 56 = floor((112 - 3 + 2 * padding) / 2) + 1  ->  padding = 1
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        self.layer1 = self._make_layer(in_channels=64, out_channels=64, blocks=2, stride=1)
        self.layer2 = self._make_layer(in_channels=64, out_channels=128, blocks=2, stride=2)
        self.layer3 = self._make_layer(in_channels=128, out_channels=256, blocks=2, stride=2)
        self.layer4 = self._make_layer(in_channels=256, out_channels=512, blocks=2, stride=2)

        # Adaptive average pooling; the argument is the output size
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512, num_classes)

    def _make_layer(self, in_channels, out_channels, blocks, stride=1):
        layers = []
        # The first block may change the number of channels (and the spatial size),
        # so it is built separately
        layers.append(BasicBlock(in_channels, out_channels, stride))
        # The remaining blocks keep out_channels and use stride 1
        for _ in range(1, blocks):
            layers.append(BasicBlock(out_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.maxpool(out)

        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)

        out = self.avgpool(out)
        out = torch.flatten(out, 1)
        out = self.fc(out)
        return out


if __name__ == '__main__':
    DEVICE = "cuda" if torch.cuda.is_available() else "cpu"
    model = ResNet18().to(DEVICE)
    # print(model)
    print(torch.cuda.is_available())
    # torchsummary defaults to device="cuda"; pass the actual device so this also runs on CPU
    torchsummary.summary(model, (3, 224, 224), batch_size=64, device=DEVICE)
```

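Key points:

- How the residual connection in BasicBlock (the residual block for 34 layers and below) is made: watch the input and output channel counts, and use a 1×1 convolution to align both the spatial size and the number of channels.

- `_make_layer()` builds a whole stage of residual blocks in one call.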
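The padding values in the code comments come from the convolution output-size formula. The pure-Python sketch below applies the same formula (with floor division, which is what `nn.Conv2d` and `nn.MaxPool2d` with the default `ceil_mode=False` and `dilation=1` compute) to trace the spatial size of a 224×224 input through ResNet-18. `conv_out` is a hypothetical helper name.

```python
def conv_out(size, kernel, stride, padding):
    """output = floor((input - kernel + 2 * padding) / stride) + 1"""
    return (size - kernel + 2 * padding) // stride + 1


size = conv_out(224, 7, 2, 3)    # conv1: 224 -> 112
trace = [size]
size = conv_out(size, 3, 2, 1)   # maxpool: 112 -> 56
trace.append(size)
for stride in (1, 2, 2, 2):      # first 3x3 conv of layer1 .. layer4
    size = conv_out(size, 3, stride, 1)
    trace.append(size)
print(trace)  # [112, 56, 56, 28, 14, 7]

# The 1x1 projection shortcut (stride 2, padding 0) must land on the same size
print(conv_out(56, 1, 2, 0), conv_out(56, 3, 2, 1))  # 28 28
```

The last line shows why the shortcut can use a 1×1 convolution without padding: with stride 2 it produces the same spatial size as the padded 3×3 convolution on the main path, so the two tensors can be added.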

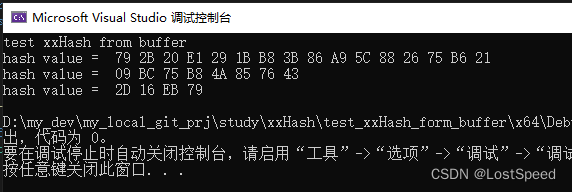

The network structure printed to the console:

ResNet18(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential()

)

(1): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential()

)

)

(layer2): Sequential(

(0): BasicBlock(

(conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): BasicBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential()

)

)

(layer3): Sequential(

(0): BasicBlock(

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): BasicBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential()

)

)

(layer4): Sequential(

(0): BasicBlock(

(conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): BasicBlock(

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(shortcut): Sequential()

)

)

(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Linear(in_features=512, out_features=1000, bias=True)

)

Testing the network with torchsummary:

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [64, 64, 112, 112] 9,408

BatchNorm2d-2 [64, 64, 112, 112] 128

ReLU-3 [64, 64, 112, 112] 0

MaxPool2d-4 [64, 64, 56, 56] 0

Conv2d-5 [64, 64, 56, 56] 36,864

BatchNorm2d-6 [64, 64, 56, 56] 128

ReLU-7 [64, 64, 56, 56] 0

Conv2d-8 [64, 64, 56, 56] 36,864

BatchNorm2d-9 [64, 64, 56, 56] 128

ReLU-10 [64, 64, 56, 56] 0

BasicBlock-11 [64, 64, 56, 56] 0

Conv2d-12 [64, 64, 56, 56] 36,864

BatchNorm2d-13 [64, 64, 56, 56] 128

ReLU-14 [64, 64, 56, 56] 0

Conv2d-15 [64, 64, 56, 56] 36,864

BatchNorm2d-16 [64, 64, 56, 56] 128

ReLU-17 [64, 64, 56, 56] 0

BasicBlock-18 [64, 64, 56, 56] 0

Conv2d-19 [64, 128, 28, 28] 73,728

BatchNorm2d-20 [64, 128, 28, 28] 256

ReLU-21 [64, 128, 28, 28] 0

Conv2d-22 [64, 128, 28, 28] 147,456

BatchNorm2d-23 [64, 128, 28, 28] 256

Conv2d-24 [64, 128, 28, 28] 8,192

BatchNorm2d-25 [64, 128, 28, 28] 256

ReLU-26 [64, 128, 28, 28] 0

BasicBlock-27 [64, 128, 28, 28] 0

Conv2d-28 [64, 128, 28, 28] 147,456

BatchNorm2d-29 [64, 128, 28, 28] 256

ReLU-30 [64, 128, 28, 28] 0

Conv2d-31 [64, 128, 28, 28] 147,456

BatchNorm2d-32 [64, 128, 28, 28] 256

ReLU-33 [64, 128, 28, 28] 0

BasicBlock-34 [64, 128, 28, 28] 0

Conv2d-35 [64, 256, 14, 14] 294,912

BatchNorm2d-36 [64, 256, 14, 14] 512

ReLU-37 [64, 256, 14, 14] 0

Conv2d-38 [64, 256, 14, 14] 589,824

BatchNorm2d-39 [64, 256, 14, 14] 512

Conv2d-40 [64, 256, 14, 14] 32,768

BatchNorm2d-41 [64, 256, 14, 14] 512

ReLU-42 [64, 256, 14, 14] 0

BasicBlock-43 [64, 256, 14, 14] 0

Conv2d-44 [64, 256, 14, 14] 589,824

BatchNorm2d-45 [64, 256, 14, 14] 512

ReLU-46 [64, 256, 14, 14] 0

Conv2d-47 [64, 256, 14, 14] 589,824

BatchNorm2d-48 [64, 256, 14, 14] 512

ReLU-49 [64, 256, 14, 14] 0

BasicBlock-50 [64, 256, 14, 14] 0

Conv2d-51 [64, 512, 7, 7] 1,179,648

BatchNorm2d-52 [64, 512, 7, 7] 1,024

ReLU-53 [64, 512, 7, 7] 0

Conv2d-54 [64, 512, 7, 7] 2,359,296

BatchNorm2d-55 [64, 512, 7, 7] 1,024

Conv2d-56 [64, 512, 7, 7] 131,072

BatchNorm2d-57 [64, 512, 7, 7] 1,024

ReLU-58 [64, 512, 7, 7] 0

BasicBlock-59 [64, 512, 7, 7] 0

Conv2d-60 [64, 512, 7, 7] 2,359,296

BatchNorm2d-61 [64, 512, 7, 7] 1,024

ReLU-62 [64, 512, 7, 7] 0

Conv2d-63 [64, 512, 7, 7] 2,359,296

BatchNorm2d-64 [64, 512, 7, 7] 1,024

ReLU-65 [64, 512, 7, 7] 0

BasicBlock-66 [64, 512, 7, 7] 0

AdaptiveAvgPool2d-67 [64, 512, 1, 1] 0

Linear-68 [64, 1000] 513,000

================================================================

Total params: 11,689,512

Trainable params: 11,689,512

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 36.75

Forward/backward pass size (MB): 4018.74

Params size (MB): 44.59

Estimated Total Size (MB): 4100.08

----------------------------------------------------------------
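The total of 11,689,512 parameters can be reproduced by hand. The pure-Python sketch below assumes bias-free convolutions, counts 2 trainable parameters per BatchNorm channel (weight and bias; the running statistics are buffers, not parameters), and mirrors the shortcut condition of `BasicBlock`. It also converts the total to float32 megabytes, which is how torchsummary's "Params size (MB)" is obtained. The helper names are hypothetical.

```python
def conv(in_c, out_c, k):
    return in_c * out_c * k * k  # bias=False


def bn(c):
    return 2 * c  # trainable weight and bias per channel


def basic_block(in_c, out_c, stride):
    params = conv(in_c, out_c, 3) + bn(out_c) + conv(out_c, out_c, 3) + bn(out_c)
    if stride != 1 or in_c != out_c:
        params += conv(in_c, out_c, 1) + bn(out_c)  # projection shortcut
    return params


def resnet18_params(num_classes=1000):
    total = conv(3, 64, 7) + bn(64)  # stem
    in_c = 64
    for out_c, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        for i in range(2):  # 2 BasicBlocks per stage
            total += basic_block(in_c, out_c, stride if i == 0 else 1)
            in_c = out_c
    total += 512 * num_classes + num_classes  # fc weight + bias
    return total


total = resnet18_params()
print(total, round(total * 4 / 1024 ** 2, 2))  # 11689512 44.59
```

Most of the parameters sit in layer4 (about 8.4 million) because the 3×3 convolutions there operate on 512 channels.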

5. PyTorch Implementation of ResNet-50

```python
import torch
import torch.nn as nn
import torchsummary


# Bottleneck: the residual block used by ResNet-50/101/152
class Bottleneck(nn.Module):
    # expansion = 4: the last 1x1 conv expands the channels to 4x the
    # bottleneck width (out_channels)
    expansion = 4

    def __init__(self, in_channels, out_channels, stride=1):
        super(Bottleneck, self).__init__()
        # 1x1 conv that reduces the channels; a 1x1 conv needs no padding.
        # As in the authors' original implementation, the downsampling stride is on this
        # first 1x1 conv (torchvision's "ResNet v1.5" puts it on the 3x3 conv instead).
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        # 3x3 conv; padding = 1 keeps the feature-map size
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # 1x1 conv that expands the channels back; no padding needed
        self.conv3 = nn.Conv2d(out_channels, out_channels * self.expansion, kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channels * self.expansion)
        self.relu = nn.ReLU(inplace=True)

        # Projection shortcut whenever the output shape differs from the input shape
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != out_channels * self.expansion:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels * self.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels * self.expansion),
            )

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        # Residual connection, then activation
        out += self.shortcut(identity)
        out = self.relu(out)
        return out


# ResNet-50
class ResNet50(nn.Module):
    def __init__(self, num_classes=1000):
        super(ResNet50, self).__init__()
        # output_size = floor((input_size - kernel_size + 2 * padding) / stride) + 1
        # 112 = floor((224 - 7 + 2 * padding) / 2) + 1  ->  padding = 3
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        # 56 = floor((112 - 3 + 2 * padding) / 2) + 1  ->  padding = 1
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        self.layer1 = self._make_layer(64, 64, blocks=3, stride=1)
        self.layer2 = self._make_layer(256, 128, blocks=4, stride=2)
        self.layer3 = self._make_layer(512, 256, blocks=6, stride=2)
        self.layer4 = self._make_layer(1024, 512, blocks=3, stride=2)

        # Adaptive average pooling; the argument is the output size
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(2048, num_classes)

    def _make_layer(self, in_channels, out_channels, blocks, stride=1):
        layers = []
        # The first block may change the number of channels (and the spatial size),
        # so it is built separately
        layers.append(Bottleneck(in_channels, out_channels, stride))
        for _ in range(1, blocks):
            # Every subsequent block takes 4x out_channels as input (the previous block's output)
            layers.append(Bottleneck(out_channels * 4, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.maxpool(out)

        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)

        out = self.avgpool(out)
        out = torch.flatten(out, 1)
        out = self.fc(out)
        return out


if __name__ == '__main__':
    DEVICE = "cuda" if torch.cuda.is_available() else "cpu"
    model = ResNet50().to(DEVICE)
    # print(model)
    # torchsummary defaults to device="cuda"; pass the actual device so this also runs on CPU
    torchsummary.summary(model, (3, 224, 224), batch_size=64, device=DEVICE)
```

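Key points:

- The number of channels between consecutive Bottleneck blocks (the residual block for 50 layers and above). In BasicBlock, two consecutive blocks of a stage have the same number of channels, whereas in Bottleneck each block outputs 4× `out_channels`, so every block after the first in a stage takes `out_channels * 4` input channels:

  ```python
  for _ in range(1, blocks):
      # Every subsequent block takes 4x out_channels as input
      layers.append(Bottleneck(out_channels * 4, out_channels))
  ```

- Mind the size of the final fully connected layer: for ResNet-50 and deeper, its `in_features` is 2048.

- In the residual connection, the shortcut must produce exactly the same shape as the main path:

  ```python
  self.shortcut = nn.Sequential()
  if stride != 1 or in_channels != out_channels * self.expansion:
      self.shortcut = nn.Sequential(
          nn.Conv2d(in_channels, out_channels * self.expansion, kernel_size=1, stride=stride, bias=False),
          nn.BatchNorm2d(out_channels * self.expansion),
      )
  ```

- Only the first convolution (and the projection shortcut) of the first block in each stage uses stride 2; everything else uses stride 1. The exception is the first stage, which keeps the spatial size unchanged, so it uses stride 1 throughout:

  ```python
  self.layer1 = self._make_layer(64, 64, blocks=3, stride=1)
  ```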
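The channel bookkeeping from these points can be traced without building the model. The pure-Python sketch below assumes the ResNet-50 stage settings used in `ResNet50.__init__` and lists, for every Bottleneck, its input channels, bottleneck width, output channels, stride and whether it needs a projection shortcut. `bottleneck_plan` and `RESNET50_STAGES` are hypothetical names.

```python
EXPANSION = 4


def bottleneck_plan(stages, in_channels=64):
    """(in_channels, mid_channels, out_channels, stride, needs_projection) per Bottleneck."""
    plan = []
    for mid, blocks, stride in stages:
        out = mid * EXPANSION
        for i in range(blocks):
            s = stride if i == 0 else 1  # only the first block of a stage downsamples
            plan.append((in_channels, mid, out, s, s != 1 or in_channels != out))
            in_channels = out  # the next block's input is this block's 4x-expanded output
    return plan


# (bottleneck width, number of blocks, stride of the first block)
RESNET50_STAGES = [(64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)]
plan = bottleneck_plan(RESNET50_STAGES)
for row in plan[:5]:
    print(row)
print(plan[-1][2])  # 2048, the in_features of the final fully connected layer
```

Unlike ResNet-18, the very first block of layer1 already needs a projection shortcut (64 to 256 channels at stride 1), which matches the `shortcut` of `layer1.0` in the printed structure below.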

The network structure printed to the console:

ResNet50(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(shortcut): Sequential()

)

)

(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Linear(in_features=2048, out_features=1000, bias=True)

)

Testing the network with torchsummary:

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [64, 64, 112, 112] 9,408

BatchNorm2d-2 [64, 64, 112, 112] 128

ReLU-3 [64, 64, 112, 112] 0

MaxPool2d-4 [64, 64, 56, 56] 0

Conv2d-5 [64, 64, 56, 56] 4,096

BatchNorm2d-6 [64, 64, 56, 56] 128

ReLU-7 [64, 64, 56, 56] 0

Conv2d-8 [64, 64, 56, 56] 36,864

BatchNorm2d-9 [64, 64, 56, 56] 128

ReLU-10 [64, 64, 56, 56] 0

Conv2d-11 [64, 256, 56, 56] 16,384

BatchNorm2d-12 [64, 256, 56, 56] 512

Conv2d-13 [64, 256, 56, 56] 16,384

BatchNorm2d-14 [64, 256, 56, 56] 512

ReLU-15 [64, 256, 56, 56] 0

Bottleneck-16 [64, 256, 56, 56] 0

Conv2d-17 [64, 64, 56, 56] 16,384

BatchNorm2d-18 [64, 64, 56, 56] 128

ReLU-19 [64, 64, 56, 56] 0

Conv2d-20 [64, 64, 56, 56] 36,864

BatchNorm2d-21 [64, 64, 56, 56] 128

ReLU-22 [64, 64, 56, 56] 0

Conv2d-23 [64, 256, 56, 56] 16,384

BatchNorm2d-24 [64, 256, 56, 56] 512

ReLU-25 [64, 256, 56, 56] 0

Bottleneck-26 [64, 256, 56, 56] 0

Conv2d-27 [64, 64, 56, 56] 16,384

BatchNorm2d-28 [64, 64, 56, 56] 128

ReLU-29 [64, 64, 56, 56] 0

Conv2d-30 [64, 64, 56, 56] 36,864

BatchNorm2d-31 [64, 64, 56, 56] 128

ReLU-32 [64, 64, 56, 56] 0

Conv2d-33 [64, 256, 56, 56] 16,384

BatchNorm2d-34 [64, 256, 56, 56] 512

ReLU-35 [64, 256, 56, 56] 0

Bottleneck-36 [64, 256, 56, 56] 0

Conv2d-37 [64, 128, 28, 28] 32,768

BatchNorm2d-38 [64, 128, 28, 28] 256

ReLU-39 [64, 128, 28, 28] 0

Conv2d-40 [64, 128, 28, 28] 147,456

BatchNorm2d-41 [64, 128, 28, 28] 256

ReLU-42 [64, 128, 28, 28] 0

Conv2d-43 [64, 512, 28, 28] 65,536

BatchNorm2d-44 [64, 512, 28, 28] 1,024

Conv2d-45 [64, 512, 28, 28] 131,072

BatchNorm2d-46 [64, 512, 28, 28] 1,024

ReLU-47 [64, 512, 28, 28] 0

Bottleneck-48 [64, 512, 28, 28] 0

Conv2d-49 [64, 128, 28, 28] 65,536

BatchNorm2d-50 [64, 128, 28, 28] 256

ReLU-51 [64, 128, 28, 28] 0

Conv2d-52 [64, 128, 28, 28] 147,456

BatchNorm2d-53 [64, 128, 28, 28] 256

ReLU-54 [64, 128, 28, 28] 0

Conv2d-55 [64, 512, 28, 28] 65,536

BatchNorm2d-56 [64, 512, 28, 28] 1,024

ReLU-57 [64, 512, 28, 28] 0

Bottleneck-58 [64, 512, 28, 28] 0

Conv2d-59 [64, 128, 28, 28] 65,536

BatchNorm2d-60 [64, 128, 28, 28] 256

ReLU-61 [64, 128, 28, 28] 0

Conv2d-62 [64, 128, 28, 28] 147,456

BatchNorm2d-63 [64, 128, 28, 28] 256

ReLU-64 [64, 128, 28, 28] 0

Conv2d-65 [64, 512, 28, 28] 65,536

BatchNorm2d-66 [64, 512, 28, 28] 1,024

ReLU-67 [64, 512, 28, 28] 0

Bottleneck-68 [64, 512, 28, 28] 0

Conv2d-69 [64, 128, 28, 28] 65,536

BatchNorm2d-70 [64, 128, 28, 28] 256

ReLU-71 [64, 128, 28, 28] 0

Conv2d-72 [64, 128, 28, 28] 147,456

BatchNorm2d-73 [64, 128, 28, 28] 256

ReLU-74 [64, 128, 28, 28] 0

Conv2d-75 [64, 512, 28, 28] 65,536

BatchNorm2d-76 [64, 512, 28, 28] 1,024

ReLU-77 [64, 512, 28, 28] 0

Bottleneck-78 [64, 512, 28, 28] 0

Conv2d-79 [64, 256, 14, 14] 131,072

BatchNorm2d-80 [64, 256, 14, 14] 512

ReLU-81 [64, 256, 14, 14] 0

Conv2d-82 [64, 256, 14, 14] 589,824

BatchNorm2d-83 [64, 256, 14, 14] 512

ReLU-84 [64, 256, 14, 14] 0

Conv2d-85 [64, 1024, 14, 14] 262,144

BatchNorm2d-86 [64, 1024, 14, 14] 2,048

Conv2d-87 [64, 1024, 14, 14] 524,288

BatchNorm2d-88 [64, 1024, 14, 14] 2,048

ReLU-89 [64, 1024, 14, 14] 0

Bottleneck-90 [64, 1024, 14, 14] 0

Conv2d-91 [64, 256, 14, 14] 262,144

BatchNorm2d-92 [64, 256, 14, 14] 512

ReLU-93 [64, 256, 14, 14] 0

Conv2d-94 [64, 256, 14, 14] 589,824

BatchNorm2d-95 [64, 256, 14, 14] 512

ReLU-96 [64, 256, 14, 14] 0

Conv2d-97 [64, 1024, 14, 14] 262,144

BatchNorm2d-98 [64, 1024, 14, 14] 2,048

ReLU-99 [64, 1024, 14, 14] 0

Bottleneck-100 [64, 1024, 14, 14] 0

Conv2d-101 [64, 256, 14, 14] 262,144

BatchNorm2d-102 [64, 256, 14, 14] 512

ReLU-103 [64, 256, 14, 14] 0

Conv2d-104 [64, 256, 14, 14] 589,824

BatchNorm2d-105 [64, 256, 14, 14] 512

ReLU-106 [64, 256, 14, 14] 0

Conv2d-107 [64, 1024, 14, 14] 262,144

BatchNorm2d-108 [64, 1024, 14, 14] 2,048

ReLU-109 [64, 1024, 14, 14] 0

Bottleneck-110 [64, 1024, 14, 14] 0

Conv2d-111 [64, 256, 14, 14] 262,144

BatchNorm2d-112 [64, 256, 14, 14] 512

ReLU-113 [64, 256, 14, 14] 0

Conv2d-114 [64, 256, 14, 14] 589,824

BatchNorm2d-115 [64, 256, 14, 14] 512

ReLU-116 [64, 256, 14, 14] 0

Conv2d-117 [64, 1024, 14, 14] 262,144

BatchNorm2d-118 [64, 1024, 14, 14] 2,048

ReLU-119 [64, 1024, 14, 14] 0

Bottleneck-120 [64, 1024, 14, 14] 0

Conv2d-121 [64, 256, 14, 14] 262,144

BatchNorm2d-122 [64, 256, 14, 14] 512

ReLU-123 [64, 256, 14, 14] 0

Conv2d-124 [64, 256, 14, 14] 589,824

BatchNorm2d-125 [64, 256, 14, 14] 512

ReLU-126 [64, 256, 14, 14] 0

Conv2d-127 [64, 1024, 14, 14] 262,144

BatchNorm2d-128 [64, 1024, 14, 14] 2,048

ReLU-129 [64, 1024, 14, 14] 0

Bottleneck-130 [64, 1024, 14, 14] 0

Conv2d-131 [64, 256, 14, 14] 262,144

BatchNorm2d-132 [64, 256, 14, 14] 512

ReLU-133 [64, 256, 14, 14] 0

Conv2d-134 [64, 256, 14, 14] 589,824

BatchNorm2d-135 [64, 256, 14, 14] 512

ReLU-136 [64, 256, 14, 14] 0

Conv2d-137 [64, 1024, 14, 14] 262,144

BatchNorm2d-138 [64, 1024, 14, 14] 2,048

ReLU-139 [64, 1024, 14, 14] 0

Bottleneck-140 [64, 1024, 14, 14] 0

Conv2d-141 [64, 512, 7, 7] 524,288

BatchNorm2d-142 [64, 512, 7, 7] 1,024

ReLU-143 [64, 512, 7, 7] 0

Conv2d-144 [64, 512, 7, 7] 2,359,296

BatchNorm2d-145 [64, 512, 7, 7] 1,024

ReLU-146 [64, 512, 7, 7] 0

Conv2d-147 [64, 2048, 7, 7] 1,048,576

BatchNorm2d-148 [64, 2048, 7, 7] 4,096

Conv2d-149 [64, 2048, 7, 7] 2,097,152

BatchNorm2d-150 [64, 2048, 7, 7] 4,096

ReLU-151 [64, 2048, 7, 7] 0

Bottleneck-152 [64, 2048, 7, 7] 0

Conv2d-153 [64, 512, 7, 7] 1,048,576

BatchNorm2d-154 [64, 512, 7, 7] 1,024

ReLU-155 [64, 512, 7, 7] 0

Conv2d-156 [64, 512, 7, 7] 2,359,296

BatchNorm2d-157 [64, 512, 7, 7] 1,024

ReLU-158 [64, 512, 7, 7] 0

Conv2d-159 [64, 2048, 7, 7] 1,048,576

BatchNorm2d-160 [64, 2048, 7, 7] 4,096

ReLU-161 [64, 2048, 7, 7] 0

Bottleneck-162 [64, 2048, 7, 7] 0

Conv2d-163 [64, 512, 7, 7] 1,048,576

BatchNorm2d-164 [64, 512, 7, 7] 1,024

ReLU-165 [64, 512, 7, 7] 0

Conv2d-166 [64, 512, 7, 7] 2,359,296

BatchNorm2d-167 [64, 512, 7, 7] 1,024

ReLU-168 [64, 512, 7, 7] 0

Conv2d-169 [64, 2048, 7, 7] 1,048,576

BatchNorm2d-170 [64, 2048, 7, 7] 4,096

ReLU-171 [64, 2048, 7, 7] 0

Bottleneck-172 [64, 2048, 7, 7] 0

AdaptiveAvgPool2d-173 [64, 2048, 1, 1] 0

Linear-174 [64, 1000] 2,049,000

================================================================

Total params: 25,557,032

Trainable params: 25,557,032

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 36.75

Forward/backward pass size (MB): 15200.01

Params size (MB): 97.49

Estimated Total Size (MB): 15334.25

----------------------------------------------------------------
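As with ResNet-18, the total of 25,557,032 parameters can be reproduced by hand. The pure-Python sketch below assumes bias-free convolutions, 2 trainable parameters per BatchNorm channel, and the same shortcut condition as the `Bottleneck` class; the stride position does not affect the count. Helper names are hypothetical.

```python
def conv(in_c, out_c, k):
    return in_c * out_c * k * k  # bias=False


def bn(c):
    return 2 * c  # trainable weight and bias per channel


def bottleneck(in_c, mid, stride, expansion=4):
    out = mid * expansion
    params = (conv(in_c, mid, 1) + bn(mid)        # 1x1 reduce
              + conv(mid, mid, 3) + bn(mid)       # 3x3
              + conv(mid, out, 1) + bn(out))      # 1x1 expand
    if stride != 1 or in_c != out:
        params += conv(in_c, out, 1) + bn(out)    # projection shortcut
    return params


def resnet50_params(num_classes=1000):
    total = conv(3, 64, 7) + bn(64)  # stem
    in_c = 64
    for mid, blocks, stride in [(64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)]:
        for i in range(blocks):
            total += bottleneck(in_c, mid, stride if i == 0 else 1)
            in_c = mid * 4
    total += in_c * num_classes + num_classes  # fc: 2048 -> num_classes
    return total


total = resnet50_params()
print(total, round(total * 4 / 1024 ** 2, 2))  # 25557032 97.49
```

ResNet-50 has about 2.2× the parameters of ResNet-18; the 2048-input fully connected layer alone accounts for about 2 million of them.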

References:

- https://arxiv.org/pdf/1512.03385.pdf

- https://blog.csdn.net/m0_64799972/article/details/132753608

- https://blog.csdn.net/m0_50127633/article/details/117200212

- https://www.bilibili.com/video/BV1Bo4y1T7Lc/?spm_id_from=333.999.0.0&vd_source=c7e390079ff3e10b79e23fb333bea49d