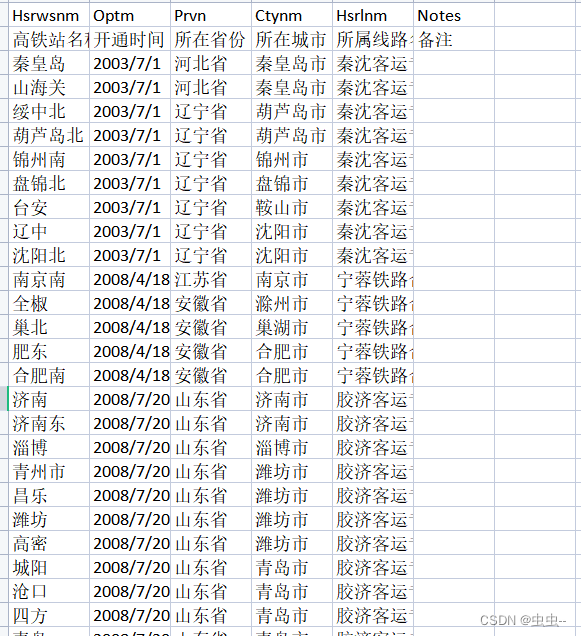

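Extracting the overlap region of two images with OpenCV comes down to four steps. Detect feature points in both images, match them, estimate the perspective transformation (homography) matrix H from the matched points, and then use H to locate the overlap region. The standard OpenCV package does not include opencv-contrib, so the SURF algorithm in xfeatures2d.hpp is not available. SIFT has been part of the main features2d module, as cv::SIFT, since OpenCV 4.4.0. To use SURF you therefore need to build OpenCV yourself with opencv-contrib added. Because SURF is patented, the build also needs the OPENCV_ENABLE_NONFREE CMake option. Alternatively, you can download a build that someone else has made.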

1. OpenCV builds that include opencv-contrib

OpenCV 4.7.0 built with VS2019 on Windows 10: https://download.csdn.net/download/a486259/87355761

OpenCV 4.5.3 with CUDA, built with VS2019 on Windows 10: https://download.csdn.net/download/a486259/81328226

2. Core steps

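Extracting the overlap region takes six key steps:

1. Load the images.
2. Detect the feature points.
3. Match the feature points.
4. Compute the perspective transformation matrix.
5. Compute the overlap region.
6. Extract the overlap region.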

2.1 Load the images

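```cpp
Mat left = imread("IMG_20221231_160033.jpg");   // left image path
Mat right = imread("IMG_20221231_160037.jpg");  // right image path
CV_Assert(!left.empty() && !right.empty());     // imread returns an empty Mat if loading fails
// Resize both images to 512x512 (use left.size() instead to keep the original size;
// a fixed square size ignores the aspect ratio)
Size msize = { 512, 512 };
resize(left, left, msize);
resize(right, right, msize);
```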

2.2 Detect the feature points

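Create a SURF object and extract the feature points and descriptors of both images. The variable names are crossed. key2 and d belong to the left image, and key1 and c belong to the right image.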

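```cpp
// The argument of SURF::create is the Hessian threshold, not a maximum number of points:
// only keypoints whose Hessian response exceeds it are kept, so larger values give fewer points.
Ptr<SURF> surf = SURF::create(800);
vector<KeyPoint> key1, key2;  // keypoints:   key2 = left, key1 = right
Mat c, d;                     // descriptors: d = left,    c = right
// Detect keypoints and compute descriptors (no mask)
surf->detectAndCompute(left, Mat(), key2, d);
surf->detectAndCompute(right, Mat(), key1, c);
```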
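SURF::create(800) does not cap the number of keypoints. Its first parameter, hessianThreshold, discards candidate points whose determinant-of-Hessian response is too weak, so a larger value gives fewer keypoints. As a rough illustration only, the sketch below computes a determinant-of-Hessian response with plain central differences on a small grayscale grid. It then keeps strict 3x3 local maxima above a threshold. The sketch deliberately omits SURF's box filters, integral images and scale space, so its response values are not on the same scale as SURF's threshold. `Gray` and `doh_keypoints` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Hypothetical grayscale image type: row-major grid of intensities.
using Gray = std::vector<std::vector<double>>;

// Returns (x, y) of pixels whose determinant-of-Hessian response exceeds
// `threshold` and is a strict maximum in its 3x3 neighbourhood.
// Uses plain central differences, NOT SURF's box-filter approximation.
std::vector<std::pair<int, int>> doh_keypoints(const Gray& img, double threshold) {
    const int rows = static_cast<int>(img.size());
    const int cols = rows ? static_cast<int>(img[0].size()) : 0;
    Gray resp(rows, std::vector<double>(cols, 0.0));
    for (int y = 1; y + 1 < rows; ++y) {
        for (int x = 1; x + 1 < cols; ++x) {
            double dxx = img[y][x + 1] - 2 * img[y][x] + img[y][x - 1];
            double dyy = img[y + 1][x] - 2 * img[y][x] + img[y - 1][x];
            double dxy = (img[y + 1][x + 1] - img[y + 1][x - 1]
                        - img[y - 1][x + 1] + img[y - 1][x - 1]) / 4.0;
            resp[y][x] = dxx * dyy - dxy * dxy;  // det of the Hessian
        }
    }
    std::vector<std::pair<int, int>> points;
    for (int y = 2; y + 2 < rows; ++y) {
        for (int x = 2; x + 2 < cols; ++x) {
            double r = resp[y][x];
            if (r <= threshold) continue;  // too weak: rejected by the threshold
            bool is_max = true;
            for (int dy = -1; dy <= 1 && is_max; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    if ((dy || dx) && resp[y + dy][x + dx] >= r) { is_max = false; break; }
            if (is_max) points.emplace_back(x, y);
        }
    }
    return points;
}
```

For a single Gaussian blob, only the blob centre survives a small threshold, and a very large threshold removes it. This mirrors how raising hessianThreshold thins out SURF keypoints.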

2.3 Match the feature points

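Use a brute-force matcher to match the descriptors of the two images. The matches are stored in a vector<DMatch>. Sort the matches by distance and keep the best ones: 15% of all matches, but at least 50, and never more than the number of matches available.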

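```cpp
BFMatcher matcher;       // brute-force matcher (default NORM_L2, suitable for SURF)
vector<DMatch> matches;  // each DMatch links a query keypoint to a train keypoint
// Query = left descriptors (d), train = right descriptors (c)
matcher.match(d, c, matches);

// 3. Filter the matches
// Sort by ascending distance (DMatch::operator< compares distance)
sort(matches.begin(), matches.end());
// Keep the best matches
vector<DMatch> good_matches;
// 15% of the matches, at least 50, but never more than matches.size()
int ptrPoint = std::min((int)matches.size(), std::max(50, (int)(matches.size() * 0.15)));
for (int i = 0; i < ptrPoint; i++)
{
    good_matches.push_back(matches[i]);
}
```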

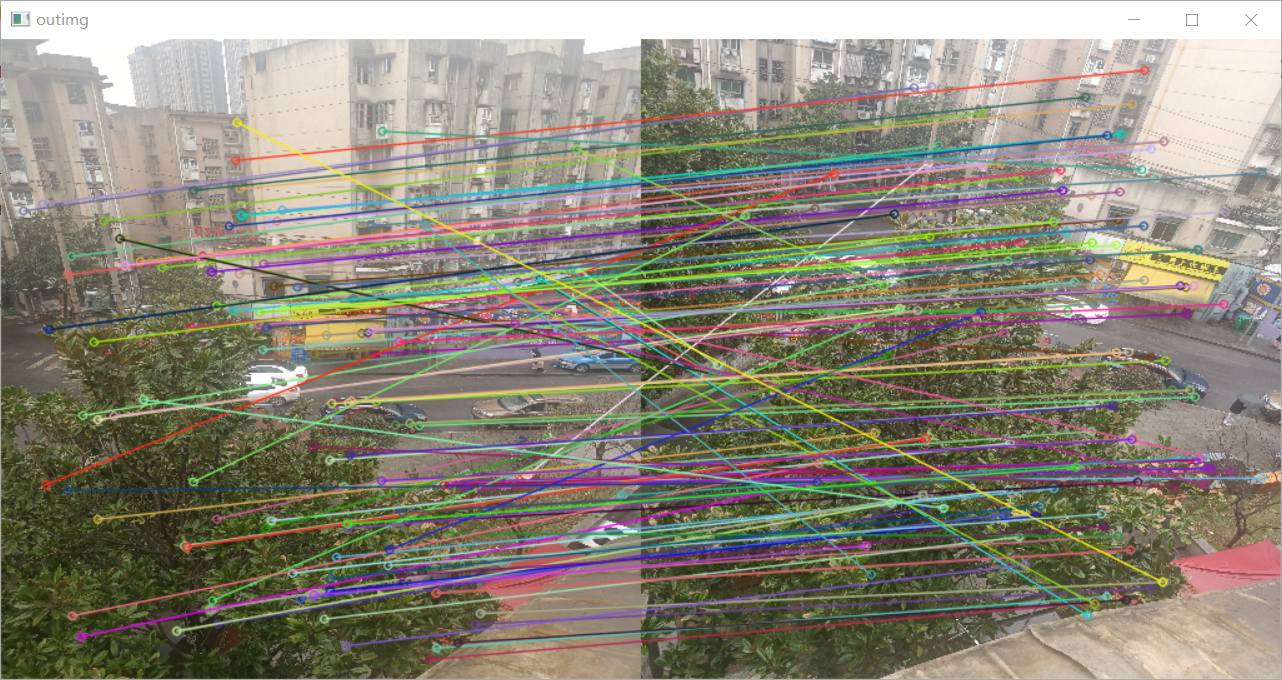

```cpp
// 4. Draw lines between the best-matching keypoints
Mat outimg;
drawMatches(left, key2, right, key1, good_matches, outimg,
            Scalar::all(-1), Scalar::all(-1),
            vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
```
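BFMatcher::match compares every query descriptor with every train descriptor and keeps the nearest one. With the default NORM_L2, "nearest" means the smallest Euclidean distance. The sketch below reproduces that step and the "best 15%, at least 50" selection in plain C++. A simplified `Match` struct stands in for cv::DMatch. `Match`, `Descriptors`, `brute_force_match` and `keep_best` are hypothetical names, not OpenCV API. Note the std::min clamp in `keep_best`. Without it, looping up to 50 when fewer than 50 matches exist would read past the end of the vector.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

using Descriptors = std::vector<std::vector<float>>;

// For each query descriptor, find the train descriptor with the smallest
// L2 distance (the idea behind BFMatcher::match with NORM_L2).
std::vector<Match> brute_force_match(const Descriptors& query, const Descriptors& train) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best{static_cast<int>(q), -1, std::numeric_limits<float>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            float sum = 0.f;
            for (std::size_t k = 0; k < query[q].size(); ++k) {
                float diff = query[q][k] - train[t][k];
                sum += diff * diff;
            }
            float dist = std::sqrt(sum);
            if (dist < best.distance) best = {static_cast<int>(q), static_cast<int>(t), dist};
        }
        if (best.trainIdx >= 0) matches.push_back(best);
    }
    return matches;
}

// Keep the best `fraction` of matches, at least `min_keep`, never more than available.
std::vector<Match> keep_best(std::vector<Match> matches, double fraction, int min_keep) {
    std::sort(matches.begin(), matches.end(),
              [](const Match& a, const Match& b) { return a.distance < b.distance; });
    int n = static_cast<int>(matches.size());
    int keep = std::min(n, std::max(min_keep, static_cast<int>(n * fraction)));
    matches.resize(keep);
    return matches;
}
```

With three query descriptors and the original 15%/50 rule, `keep_best(m, 0.15, 50)` returns all three matches instead of reading past the end.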

2.4 Compute the perspective transformation matrix

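Collect the coordinates of the matched keypoints from the vector<DMatch>. The right-image points go into imagepoint1 and the left-image points into imagepoint2. Then compute the perspective transformation matrix from the two point sets. findHomography(srcPoints, dstPoints, ...) estimates the H that maps the first point set onto the second. Here, H therefore maps right-image coordinates into the left image.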

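```cpp
// 5. Compute the perspective transformation matrix
// Collect the matched keypoint coordinates
vector<Point2f> imagepoint1, imagepoint2;  // 1 = right image, 2 = left image
for (size_t i = 0; i < good_matches.size(); i++)
{
    imagepoint1.push_back(key1[good_matches[i].trainIdx].pt);  // train = right
    imagepoint2.push_back(key2[good_matches[i].queryIdx].pt);  // query = left
}
// Estimate H (right -> left) robustly with RANSAC; at least 4 pairs are required,
// and H is empty if the estimation fails, so check it before use
Mat H = findHomography(imagepoint1, imagepoint2, cv::RANSAC);
cout << "H:::" << H << endl;
```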
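Roughly speaking, findHomography with RANSAC repeatedly fits a homography to random minimal samples of four correspondences. It keeps the hypothesis with the most inliers and then refines it on those inliers. The minimal four-point fit can be sketched as a direct linear transform with h22 fixed to 1. Each correspondence (x, y) -> (u, v) contributes two linear equations, which gives an 8x8 system. This is an illustrative sketch, not OpenCV's implementation. `Mat3`, `Pt`, `homography_from_4` and `apply` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

using Mat3 = std::array<std::array<double, 3>, 3>;
struct Pt { double x, y; };

// Minimal 4-point DLT with h22 = 1. Returns false for degenerate input
// (e.g. collinear points), where the linear system is singular.
bool homography_from_4(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst, Mat3& H) {
    double A[8][9] = {};  // augmented matrix [A | b]
    for (int i = 0; i < 4; ++i) {
        double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        // u*(h20 x + h21 y + 1) = h00 x + h01 y + h02, same for v with row 1
        double r0[9] = {x, y, 1, 0, 0, 0, -u * x, -u * y, u};
        double r1[9] = {0, 0, 0, x, y, 1, -v * x, -v * y, v};
        for (int j = 0; j < 9; ++j) { A[2 * i][j] = r0[j]; A[2 * i + 1][j] = r1[j]; }
    }
    // Gauss-Jordan elimination with partial pivoting
    for (int c = 0; c < 8; ++c) {
        int piv = c;
        for (int r = c + 1; r < 8; ++r)
            if (std::fabs(A[r][c]) > std::fabs(A[piv][c])) piv = r;
        if (std::fabs(A[piv][c]) < 1e-12) return false;
        for (int j = 0; j < 9; ++j) std::swap(A[c][j], A[piv][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == c) continue;
            double f = A[r][c] / A[c][c];
            for (int j = c; j < 9; ++j) A[r][j] -= f * A[c][j];
        }
    }
    for (int i = 0; i < 8; ++i) H[i / 3][i % 3] = A[i][8] / A[i][i];
    H[2][2] = 1.0;
    return true;
}

// Apply H to a point, dividing by the homogeneous coordinate.
Pt apply(const Mat3& H, Pt p) {
    double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {(H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
            (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w};
}
```

In a full RANSAC loop, a function like this would be called on random 4-point samples. Every other correspondence would then be scored by its reprojection error under the resulting H.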

2.5 Compute the projected corners

Use H to project the four corners of the right image into the left image. Then draw the resulting quadrilateral as a mask.
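The loop below maps each corner $(x, y)$ of the right image into the left image in homogeneous coordinates. Here $h_{ij}$ stands for `H.at<double>(i, j)`:

$$
Z = \frac{1}{h_{20}x + h_{21}y + h_{22}},\qquad
X = \left(h_{00}x + h_{01}y + h_{02}\right) Z,\qquad
Y = \left(h_{10}x + h_{11}y + h_{12}\right) Z
$$

Take a pure translation as an example: $h_{00} = h_{11} = h_{22} = 1$, $h_{02} = t_x$, $h_{12} = t_y$, and all other entries 0. Then $Z = 1$ and $(X, Y) = (x + t_x,\ y + t_y)$. OpenCV's `perspectiveTransform` performs the same computation on a `vector<Point2f>`, so it could replace the hand-written loop.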

```cpp
// Corners of the right image (both images are 512x512 here, so left.size() gives the same values)
Point2f obj_corners[4] = { Point2f(0, 0), Point2f((float)right.cols, 0),
                           Point2f((float)right.cols, (float)right.rows), Point2f(0, (float)right.rows) };
Point scene_corners2[4];  // projected corners in left-image coordinates
// Project each corner through H (homogeneous coordinates)
for (int i = 0; i < 4; i++)
{
    double x = obj_corners[i].x;
    double y = obj_corners[i].y;
    double Z = 1. / (H.at<double>(2, 0) * x + H.at<double>(2, 1) * y + H.at<double>(2, 2));
    double X = (H.at<double>(0, 0) * x + H.at<double>(0, 1) * y + H.at<double>(0, 2)) * Z;
    double Y = (H.at<double>(1, 0) * x + H.at<double>(1, 1) * y + H.at<double>(1, 2)) * Z;
    scene_corners2[i] = cv::Point(cvRound(X), cvRound(Y));
}
// Fill the projected quadrilateral into a mask with drawContours
// (parts that fall outside the image are simply clipped)
Mat roi = Mat::zeros(left.size(), CV_8UC1);
vector<vector<Point>> contour = { vector<Point>(scene_corners2, scene_corners2 + 4) };
drawContours(roi, contour, 0, Scalar::all(255), -1);  // thickness -1 = filled
```
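drawContours fills the projected quadrilateral and silently drops whatever lies outside the left image. The white area of roi is therefore the intersection of the projected right image with the left image rectangle. You may also want that overlap as a polygon, for example to report how much of the left image is covered. One option, not used by the original code, is to clip the projected corners against the image rectangle with the Sutherland-Hodgman algorithm. The result can then be measured with the shoelace formula. The sketch assumes the corners are plain (x, y) doubles. `P2`, `Poly`, `clip_edge`, `clip_to_rect` and `area` are hypothetical helpers.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct P2 { double x, y; };
using Poly = std::vector<P2>;

// One Sutherland-Hodgman pass: keep the part of `poly` on the inside of one boundary.
template <typename Inside, typename Cross>
Poly clip_edge(const Poly& poly, Inside inside, Cross cross) {
    Poly out;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const P2& a = poly[i];
        const P2& b = poly[(i + 1) % poly.size()];
        bool ina = inside(a), inb = inside(b);
        if (ina && inb) out.push_back(b);                     // stays inside
        else if (ina && !inb) out.push_back(cross(a, b));     // leaves: keep exit point
        else if (!ina && inb) { out.push_back(cross(a, b)); out.push_back(b); }  // enters
    }
    return out;
}

// Clip a polygon to the rectangle [0, w] x [0, h].
Poly clip_to_rect(Poly poly, double w, double h) {
    auto at_x = [](double X) {  // intersection with the vertical line x = X
        return [X](const P2& a, const P2& b) {
            double t = (X - a.x) / (b.x - a.x);
            return P2{X, a.y + t * (b.y - a.y)};
        };
    };
    auto at_y = [](double Y) {  // intersection with the horizontal line y = Y
        return [Y](const P2& a, const P2& b) {
            double t = (Y - a.y) / (b.y - a.y);
            return P2{a.x + t * (b.x - a.x), Y};
        };
    };
    poly = clip_edge(poly, [](const P2& p) { return p.x >= 0; }, at_x(0));
    poly = clip_edge(poly, [w](const P2& p) { return p.x <= w; }, at_x(w));
    poly = clip_edge(poly, [](const P2& p) { return p.y >= 0; }, at_y(0));
    poly = clip_edge(poly, [h](const P2& p) { return p.y <= h; }, at_y(h));
    return poly;
}

// Shoelace formula; the absolute value makes vertex order irrelevant.
double area(const Poly& poly) {
    double s = 0;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const P2& a = poly[i];
        const P2& b = poly[(i + 1) % poly.size()];
        s += a.x * b.y - b.x * a.y;
    }
    return std::fabs(s) / 2.0;
}
```

Consider a right image that projects 256 px to the right of a 512x512 left image. Its clipped overlap has area 256 * 512, i.e. half of the left image.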

2.6 Extract the overlap region

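Extract the overlap region of both images and display the results. get_by, defined in the complete code in section 3.1, returns the outline of a mask as a 3-channel image. Adding that outline to an original image marks the overlap region.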

```cpp
// Overlap region of the first (left) image
Mat dstimg1;
left.copyTo(dstimg1, roi);
Mat roi_left_by = get_by(roi);  // outline of the overlap in the left image
// Overlap region of the second (right) image, warped into the left image's frame
Mat dstimg2;
warpPerspective(right, dstimg2, H, left.size());
// Warp the mask with the inverse mapping to get the overlap region in the right image
Mat roi_right;
warpPerspective(roi, roi_right, H, right.size(), WARP_INVERSE_MAP);
Mat roi_right_by = get_by(roi_right);
imshow("outimg", outimg);
imshows("ss", { left, dstimg1, roi_left_by + left, right, dstimg2, roi_right_by + right }, 3);
waitKey();
```
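The OpenCV documentation for warpPerspective describes the flag convention. When WARP_INVERSE_MAP is set, M is used directly as the mapping from destination to source coordinates, dst(x, y) = src(M * (x, y)). Otherwise M is inverted first. Because H maps right-image points to left-image points, `warpPerspective(right, dstimg2, H, ...)` draws the right image in the left image's frame. By contrast, `warpPerspective(roi, roi_right, H, ..., WARP_INVERSE_MAP)` samples the left-image mask at H * (x, y), which yields the overlap in right-image coordinates. The toy sketch below reproduces that convention with nearest-neighbour sampling on an integer grid. OpenCV's default interpolation is bilinear, not nearest-neighbour. `Mat3`, `Grid`, `inverse` and `warp` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Grid = std::vector<std::vector<int>>;

// Inverse of a 3x3 matrix via the adjugate (assumes a non-zero determinant).
Mat3 inverse(const Mat3& m) {
    double det = m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
               - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
               + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
    Mat3 r{};
    r[0][0] = (m[1][1] * m[2][2] - m[1][2] * m[2][1]) / det;
    r[0][1] = (m[0][2] * m[2][1] - m[0][1] * m[2][2]) / det;
    r[0][2] = (m[0][1] * m[1][2] - m[0][2] * m[1][1]) / det;
    r[1][0] = (m[1][2] * m[2][0] - m[1][0] * m[2][2]) / det;
    r[1][1] = (m[0][0] * m[2][2] - m[0][2] * m[2][0]) / det;
    r[1][2] = (m[0][2] * m[1][0] - m[0][0] * m[1][2]) / det;
    r[2][0] = (m[1][0] * m[2][1] - m[1][1] * m[2][0]) / det;
    r[2][1] = (m[0][1] * m[2][0] - m[0][0] * m[2][1]) / det;
    r[2][2] = (m[0][0] * m[1][1] - m[0][1] * m[1][0]) / det;
    return r;
}

// Nearest-neighbour warp following warpPerspective's convention:
// with inverse_map, dst(x, y) = src(M * (x, y)); otherwise M is inverted first.
Grid warp(const Grid& src, const Mat3& M, int rows, int cols, bool inverse_map) {
    const Mat3 T = inverse_map ? M : inverse(M);
    Grid dst(rows, std::vector<int>(cols, 0));  // samples outside src stay 0
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            double w = T[2][0] * x + T[2][1] * y + T[2][2];
            int sx = static_cast<int>(std::lround((T[0][0] * x + T[0][1] * y + T[0][2]) / w));
            int sy = static_cast<int>(std::lround((T[1][0] * x + T[1][1] * y + T[1][2]) / w));
            if (sy >= 0 && sy < static_cast<int>(src.size()) &&
                sx >= 0 && sx < static_cast<int>(src[sy].size()))
                dst[y][x] = src[sy][sx];
        }
    }
    return dst;
}
```

As an example, take an H that shifts x by +2 and a one-row source {1, 2, 3, 4, 5}. The forward warp gives {0, 0, 1, 2, 3}, and the inverse-map warp gives {3, 4, 5, 0, 0}.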

3. Complete example

3.1 Complete code

The contents of Matutils.hpp are listed in section 3.2.

```cpp
#include <algorithm>
#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/xfeatures2d.hpp>
#include <opencv2/calib3d.hpp>
#include <opencv2/imgproc.hpp>
#include "Matutils.hpp"

using namespace std;
using namespace cv;
using namespace cv::xfeatures2d;

// Sobel gradient magnitude (|dx| and |dy| blended 50/50); not used by test_img()
Mat sobel_mat(Mat srcImage) {
    Mat grad_x, grad_y;
    Mat abs_grad_x, abs_grad_y;
    // Gradient in x
    Sobel(srcImage, grad_x, CV_16S, 1, 0, 3, 1, 0, BORDER_DEFAULT);
    convertScaleAbs(grad_x, abs_grad_x);
    // Gradient in y
    Sobel(srcImage, grad_y, CV_16S, 0, 1, 3, 1, 0, BORDER_DEFAULT);
    convertScaleAbs(grad_y, abs_grad_y);
    // Combine the two gradients
    Mat dstImage;
    addWeighted(abs_grad_x, 0.5, abs_grad_y, 0.5, 0, dstImage);
    return dstImage;
}

// Turn a single-channel line image into a 3-channel image.
// All three channels are copies of `line`, so the line appears white;
// use { zeros, zeros, line } (B, G, R) instead to draw it in red.
Mat line2red(Mat line) {
    vector<Mat> mv = { line, line, line };
    Mat red_line;
    cv::merge(mv, red_line);  // merge the single-channel Mats into one multi-channel Mat
    return red_line;
}

// Outline of a binary mask: the mask minus its erosion.
// The mask is zero-padded first so that edges lying on the image border are outlined too.
Mat get_by(Mat roi) {
    Mat element = getStructuringElement(MORPH_RECT, Size(7, 7));
    Mat padded = pad_mat(roi), eroded;
    morphologyEx(padded, eroded, MORPH_ERODE, element);
    Mat roi_by = rm_pad(padded - eroded);
    return line2red(roi_by);
}

void test_img() {
    Mat left = imread("IMG_20221231_160033.jpg");   // left image path
    Mat right = imread("IMG_20221231_160037.jpg");  // right image path
    if (left.empty() || right.empty()) { cerr << "failed to load the images" << endl; return; }
    Size msize = { 512, 512 };  // or left.size() to keep the original size
    resize(left, left, msize);
    resize(right, right, msize);

    // 1. Create the SURF detector (argument = Hessian threshold)
    Ptr<SURF> surf = SURF::create(800);
    vector<KeyPoint> key1, key2;  // keypoints:   key2 = left, key1 = right
    Mat c, d;                     // descriptors: d = left,    c = right
    surf->detectAndCompute(left, Mat(), key2, d);
    surf->detectAndCompute(right, Mat(), key1, c);

    // 2. Match the descriptors with a brute-force matcher (query = left, train = right)
    BFMatcher matcher;
    vector<DMatch> matches;
    matcher.match(d, c, matches);

    // 3. Keep the best matches: sort by distance, keep 15% (at least 50, at most all)
    sort(matches.begin(), matches.end());
    int ptrPoint = std::min((int)matches.size(), std::max(50, (int)(matches.size() * 0.15)));
    vector<DMatch> good_matches(matches.begin(), matches.begin() + ptrPoint);

    // 4. Draw lines between the best-matching keypoints
    Mat outimg;
    drawMatches(left, key2, right, key1, good_matches, outimg,
                Scalar::all(-1), Scalar::all(-1),
                vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

    // 5. Estimate H, which maps right-image points onto left-image points
    vector<Point2f> imagepoint1, imagepoint2;  // 1 = right, 2 = left
    for (size_t i = 0; i < good_matches.size(); i++)
    {
        imagepoint1.push_back(key1[good_matches[i].trainIdx].pt);
        imagepoint2.push_back(key2[good_matches[i].queryIdx].pt);
    }
    if (imagepoint1.size() < 4) { cerr << "not enough matches" << endl; return; }
    Mat H = findHomography(imagepoint1, imagepoint2, cv::RANSAC);
    if (H.empty()) { cerr << "homography estimation failed" << endl; return; }
    cout << "H:::" << H << endl;

    // 6. Project the corners of the right image into the left image
    Point2f obj_corners[4] = { Point2f(0, 0), Point2f((float)right.cols, 0),
                               Point2f((float)right.cols, (float)right.rows), Point2f(0, (float)right.rows) };
    Point scene_corners2[4];
    for (int i = 0; i < 4; i++)
    {
        double x = obj_corners[i].x;
        double y = obj_corners[i].y;
        double Z = 1. / (H.at<double>(2, 0) * x + H.at<double>(2, 1) * y + H.at<double>(2, 2));
        double X = (H.at<double>(0, 0) * x + H.at<double>(0, 1) * y + H.at<double>(0, 2)) * Z;
        double Y = (H.at<double>(1, 0) * x + H.at<double>(1, 1) * y + H.at<double>(1, 2)) * Z;
        scene_corners2[i] = Point(cvRound(X), cvRound(Y));
    }

    // 7. Fill the projected quadrilateral as a mask (parts outside the image are clipped)
    Mat roi = Mat::zeros(left.size(), CV_8UC1);
    vector<vector<Point>> contour = { vector<Point>(scene_corners2, scene_corners2 + 4) };
    drawContours(roi, contour, 0, Scalar::all(255), -1);

    // 8. Overlap region of the left image
    Mat dstimg1;
    left.copyTo(dstimg1, roi);
    Mat roi_left_by = get_by(roi);
    // Overlap region of the right image, warped into the left image's frame
    Mat dstimg2;
    warpPerspective(right, dstimg2, H, left.size());
    // Inverse-warp the mask to get the overlap region in the right image
    Mat roi_right;
    warpPerspective(roi, roi_right, H, right.size(), WARP_INVERSE_MAP);
    Mat roi_right_by = get_by(roi_right);

    imshow("outimg", outimg);
    imshows("ss", { left, dstimg1, roi_left_by + left, right, dstimg2, roi_right_by + right }, 3);
    waitKey();
}

int main(int argc, char* argv[])
{
    test_img();
    return 0;
}
```
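get_by in the listing above outlines a mask by subtracting its erosion from it. By default, OpenCV's erosion uses a border value that never shrinks the foreground from the image edge. A mask touching the image border would therefore not be outlined along that edge, and the zero padding added by pad_mat changes that. The sketch below shows the same idea on a 0/1 grid. Pixels outside the grid count as 0, which plays the role of the zero padding. A radius r corresponds to a (2r+1)x(2r+1) rectangle, so get_by's 7x7 element is r = 3. `Mask`, `erode` and `border` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Mask = std::vector<std::vector<int>>;  // values 0 or 1

// Erode with a (2r+1)x(2r+1) square; pixels outside the mask count as 0,
// which has the same effect as get_by's zero padding.
Mask erode(const Mask& m, int r) {
    const int rows = static_cast<int>(m.size());
    const int cols = rows ? static_cast<int>(m[0].size()) : 0;
    Mask out(rows, std::vector<int>(cols, 0));
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            int v = 1;
            for (int dy = -r; dy <= r && v; ++dy)
                for (int dx = -r; dx <= r; ++dx) {
                    int yy = y + dy, xx = x + dx;
                    if (yy < 0 || yy >= rows || xx < 0 || xx >= cols || !m[yy][xx]) { v = 0; break; }
                }
            out[y][x] = v;
        }
    return out;
}

// Outline = mask minus its erosion (the idea behind get_by).
Mask border(const Mask& m, int r) {
    Mask e = erode(m, r);
    Mask b = m;
    for (std::size_t y = 0; y < m.size(); ++y)
        for (std::size_t x = 0; x < m[y].size(); ++x) b[y][x] = m[y][x] - e[y][x];
    return b;
}
```

For a fully white 5x5 mask and r = 1, the outline is the outer ring of 16 pixels. Without the zero padding, that ring would vanish because nothing would be eroded.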

3.2 Matutils.hpp

A library of general-purpose helper functions for image processing.
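deleteMinWhiteArea in the header below labels the white regions with connectedComponentsWithStats, which uses 8-connectivity by default. It then blacks out every component whose CC_STAT_AREA is below min_area. The same idea can be sketched without OpenCV as a breadth-first flood fill that measures each 8-connected component directly. `Mask` and `delete_small_components` are hypothetical names, and this is not how OpenCV labels components internally.

```cpp
#include <cassert>
#include <queue>
#include <utility>
#include <vector>

using Mask = std::vector<std::vector<int>>;  // values 0 or 1

// Keep only the 8-connected foreground components with at least min_area pixels.
Mask delete_small_components(const Mask& src, int min_area) {
    const int rows = static_cast<int>(src.size());
    const int cols = rows ? static_cast<int>(src[0].size()) : 0;
    std::vector<std::vector<char>> seen(rows, std::vector<char>(cols, 0));
    Mask out(rows, std::vector<int>(cols, 0));
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            if (!src[y][x] || seen[y][x]) continue;
            // Flood-fill one component and remember its pixels
            std::vector<std::pair<int, int>> comp;
            std::queue<std::pair<int, int>> q;
            q.push({y, x});
            seen[y][x] = 1;
            while (!q.empty()) {
                std::pair<int, int> p = q.front();
                q.pop();
                comp.push_back(p);
                for (int dy = -1; dy <= 1; ++dy)
                    for (int dx = -1; dx <= 1; ++dx) {
                        int ny = p.first + dy, nx = p.second + dx;
                        if (ny >= 0 && ny < rows && nx >= 0 && nx < cols &&
                            src[ny][nx] && !seen[ny][nx]) {
                            seen[ny][nx] = 1;
                            q.push({ny, nx});
                        }
                    }
            }
            // Area filter: same rule as deleteMinWhiteArea (area < min_area is removed)
            if (static_cast<int>(comp.size()) >= min_area)
                for (const auto& p : comp) out[p.first][p.second] = 1;
        }
    return out;
}
```

deleteMinBlackArea applies the same filter to the inverted image, so small black holes get filled.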

```cpp
#ifndef MATUTILS_HPP
#define MATUTILS_HPP

#include <algorithm>
#include <cstdlib>
#include <iostream>
#include <string>
#include <utility>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>

using namespace std;
using namespace cv;

// Pad an image with a black border of `pad` pixels on every side
inline Mat pad_mat(Mat img, int pad = 10) {
    // Mat::zeros takes (rows, cols, type)
    Mat imgWindow = Mat::zeros(img.rows + 2 * pad, img.cols + 2 * pad, img.type());
    img.copyTo(imgWindow(Rect({ pad, pad }, img.size())));
    return imgWindow;
}

// Remove the black border added by pad_mat
inline Mat rm_pad(Mat img, int pad = 10) {
    Size size = { img.cols - 2 * pad, img.rows - 2 * pad };
    return img(Rect({ pad, pad }, size));
}

// Tile several images into one image, imgCols images per row
inline void multipleImage(vector<Mat> imgVector, Mat& dst, int imgCols, int MAX_PIXEL = 300)
{
    int pad = 10;  // gap between neighbouring images
    int imgNum = (int)imgVector.size();
    // Scale factor that makes the longest side of the first image MAX_PIXEL pixels
    Size imgOriSize = imgVector[0].size();
    int imgMaxPixel = max(imgOriSize.height, imgOriSize.width);
    double prop = MAX_PIXEL / (double)imgMaxPixel;
    Size imgStdSize((int)(imgOriSize.width * prop), (int)(imgOriSize.height * prop));  // tile size
    Mat imgStd;
    Point2i location(0, 0);  // top-left corner of the current tile
    int imgRows = (imgNum - 1) / imgCols + 1;
    Mat imgWindow = Mat::zeros(imgStdSize.height * imgRows + pad * imgRows - pad,
                               imgStdSize.width * imgCols + pad * imgCols - pad, imgVector[0].type());
    for (int i = 0; i < imgNum; i++)
    {
        location.x = (i % imgCols) * (imgStdSize.width + pad);
        location.y = (i / imgCols) * (imgStdSize.height + pad);
        resize(imgVector[i], imgStd, imgStdSize, 0, 0, INTER_LINEAR);  // scale to the tile size
        // Copy the tile into its slot (all images must have the same type as the first one)
        imgStd.copyTo(imgWindow(Rect(location, imgStdSize)));
    }
    dst = imgWindow;
}

// Show several images in one window and save the mosaic as <title>.png
inline void imshows(string title, vector<Mat> imgVector, int imgCols = -1) {
    Mat dst;
    if (imgCols == -1) {
        imgCols = (int)imgVector.size();  // default: all images on one row
    }
    multipleImage(imgVector, dst, imgCols);
    namedWindow(title);
    imshow(title, dst);
    imwrite(title + ".png", dst);
}

// Remove white connected components smaller than min_area from a binary image
inline Mat deleteMinWhiteArea(Mat src, int min_area) {
    Mat labels, stats, centroids, img_color, grayImg;
    // 1. Label the connected components and collect their statistics
    int nccomps = connectedComponentsWithStats(src, labels, stats, centroids);
    // 2. One colour per component; the background and small components stay black
    vector<Vec3b> colors(nccomps);
    colors[0] = Vec3b(0, 0, 0);  // background pixels remain black
    for (int i = 1; i < nccomps; i++)
    {
        // Bright random colours, so a kept component never falls below the binarization threshold
        colors[i] = Vec3b(rand() % 128 + 128, rand() % 128 + 128, rand() % 128 + 128);
        if (stats.at<int>(i, CC_STAT_AREA) < min_area)  // area threshold
        {
            colors[i] = Vec3b(0, 0, 0);  // component too small: paint it black
        }
    }
    // 3. Colour every pixel by its label (row pointers for speed)
    img_color = Mat::zeros(src.size(), CV_8UC3);
    for (int y = 0; y < img_color.rows; y++)
    {
        int* labels_p = labels.ptr<int>(y);
        Vec3b* img_color_p = img_color.ptr<Vec3b>(y);
        for (int x = 0; x < img_color.cols; x++)
        {
            int label = labels_p[x];
            CV_Assert(0 <= label && label < nccomps);
            img_color_p[x] = colors[label];
        }
    }
    // return img_color;  // colour-coded result
    // 4. Binarize to get a mask of the remaining components
    cvtColor(img_color, grayImg, COLOR_BGR2GRAY);
    threshold(grayImg, grayImg, 1, 255, THRESH_BINARY);
    return grayImg;
}

// Fill black holes smaller than min_area in a binary image
inline Mat deleteMinBlackArea(Mat src, int min_area) {
    Mat inv = 255 - src;  // invert
    Mat res = deleteMinWhiteArea(inv, min_area);
    return 255 - res;     // invert back
}

// Keep only the topk largest connected components
inline bool mypairsort(pair<int, int> i, pair<int, int> j) { return (i.second > j.second); }

inline Mat findTopKArea(Mat srcImage, int topk)
{
    Mat temp;
    Mat labels;
    srcImage.copyTo(temp);
    // 1. Label the connected components (4-connectivity)
    int n_comps = connectedComponents(temp, labels, 4, CV_16U);
    vector<pair<int, int>> histogram_of_labels;  // (label id, area)
    for (int i = 0; i < n_comps; i++)
    {
        histogram_of_labels.push_back({ i, 0 });
    }
    int rows = labels.rows;
    int cols = labels.cols;
    for (int row = 0; row < rows; row++)  // count pixels per label = component area
    {
        for (int col = 0; col < cols; col++)
        {
            histogram_of_labels.at(labels.at<unsigned short>(row, col)).second += 1;
        }
    }
    // 2. Sort the components by area, largest first
    std::sort(histogram_of_labels.begin(), histogram_of_labels.end(), mypairsort);
    // 3. Collect the ids of the topk largest components, skipping the background (label 0)
    vector<int> select_labels;
    for (int i = 0; i < (int)histogram_of_labels.size() && (int)select_labels.size() < topk; i++)
    {
        if (histogram_of_labels[i].first != 0) {
            select_labels.push_back(histogram_of_labels[i].first);
        }
    }
    // 4. Set the selected components to 255 and everything else to 0
    for (int row = 0; row < rows; row++)
    {
        for (int col = 0; col < cols; col++)
        {
            int now_label_id = labels.at<unsigned short>(row, col);
            bool keep = std::count(select_labels.begin(), select_labels.end(), now_label_id) > 0;
            labels.at<unsigned short>(row, col) = keep ? 255 : 0;
        }
    }
    // 5. Convert to CV_8U
    labels.convertTo(labels, CV_8U);
    return labels;
}

// Morphological skeleton (ridge lines) of a binary image
inline Mat get_ridge_line(Mat dst, int ksize = 3) {
    Mat img = dst.clone();  // work on a copy so the caller's image is not modified
    Mat skeleton = Mat::zeros(img.rows, img.cols, img.type());
    Mat open_img, eroded;
    Mat kernel = getStructuringElement(MORPH_CROSS, Size(ksize, ksize));
    while (sum(img)[0] != 0) {
        // Pixels removed by an opening belong to the skeleton at this erosion level
        morphologyEx(img, open_img, MORPH_OPEN, kernel);
        skeleton = skeleton + (img - open_img);
        // Erode once more and repeat until nothing is left
        morphologyEx(img, eroded, MORPH_ERODE, kernel);
        img = eroded;
    }
    return skeleton;
}

#endif  // MATUTILS_HPP
```
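The window that multipleImage allocates is `w * cols + pad * (cols - 1)` wide and `h * rows + pad * (rows - 1)` high. It therefore reserves a pad-pixel gap between rows as well as between columns. A tile layout consistent with that size can be sketched as follows. `Tile`, `Layout` and `grid_layout` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <vector>

struct Tile { int x, y; };  // top-left corner of one tile
struct Layout { int width = 0, height = 0; std::vector<Tile> tiles; };

// n tiles of size w x h in `cols` columns, separated by `pad` pixels on both axes.
Layout grid_layout(int n, int cols, int w, int h, int pad) {
    Layout out;
    int rows = (n - 1) / cols + 1;
    out.width = w * cols + pad * (cols - 1);
    out.height = h * rows + pad * (rows - 1);
    for (int i = 0; i < n; ++i)
        out.tiles.push_back({(i % cols) * (w + pad), (i / cols) * (h + pad)});
    return out;
}
```

Take the six 300x300 tiles shown in three columns with a 10-pixel gap. The window is 920x610, and the last tile ends exactly at its bottom-right corner.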

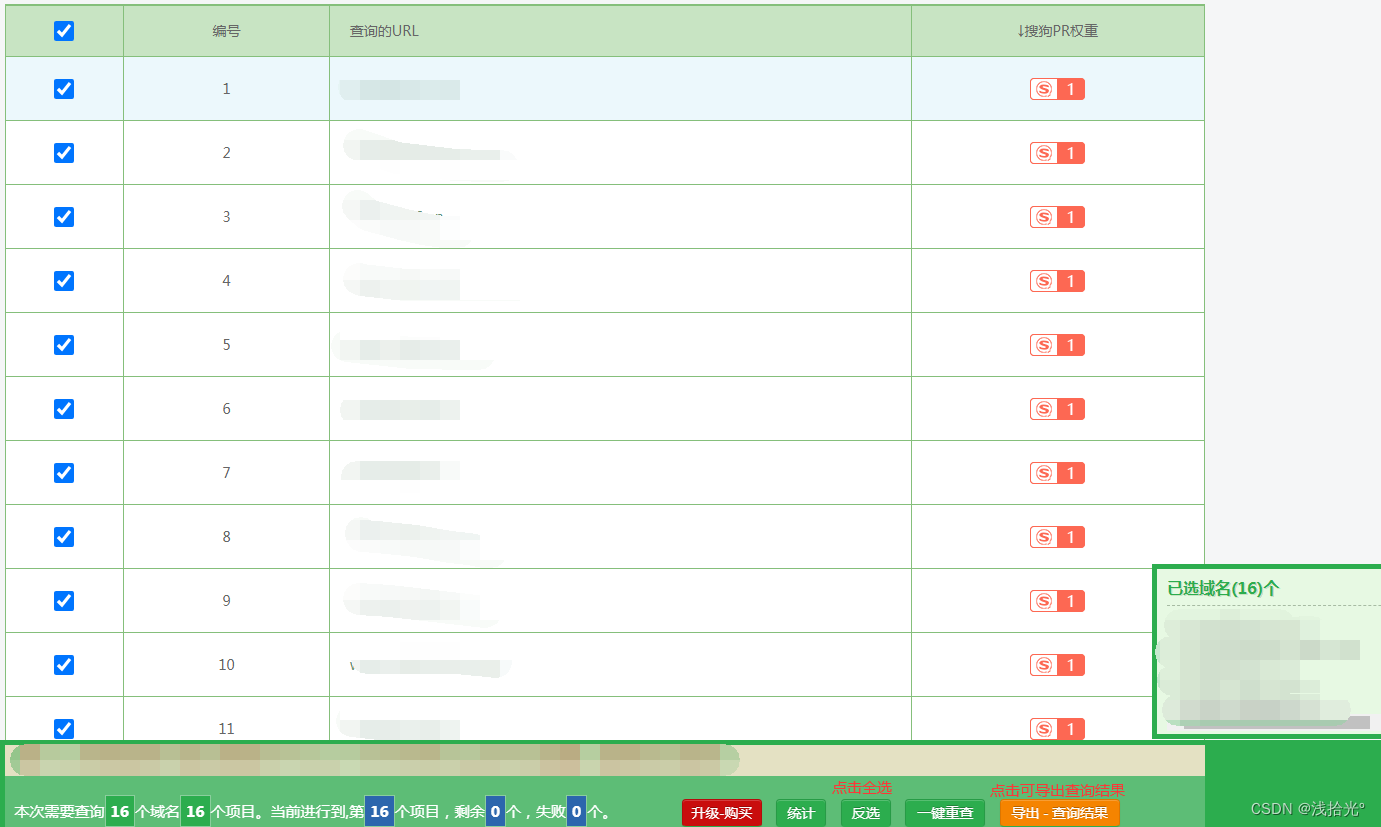

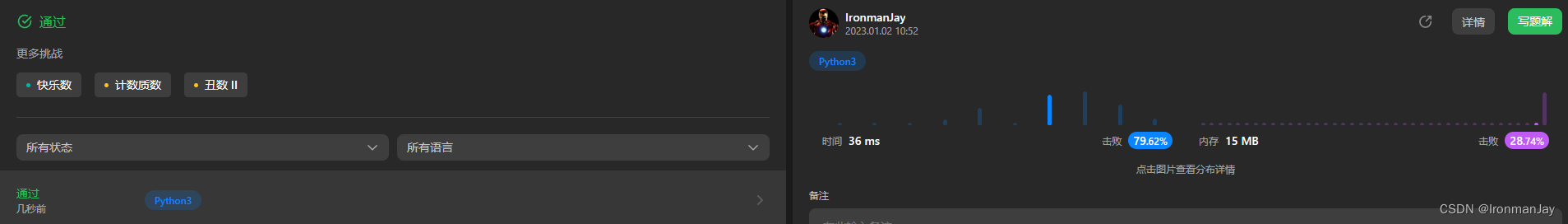

3.3 测试效果

两个图像的特征点匹配关系

算法提取出的重叠区域(中间列),第一例为两个原图,最后一列为重叠区域在原图中的效果。