Setting Up a K8s Cluster Environment

Edit the hosts file

[root@master ~]# vim /etc/hosts

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.193.128 master.example.com master

192.168.193.129 node1.example.com node1

192.168.193.130 node2.example.com node2

[root@master ~]#

[root@master ~]# scp /etc/hosts root@192.168.193.129:/etc/hosts

The authenticity of host '192.168.193.129 (192.168.193.129)' can't be established.

ECDSA key fingerprint is SHA256:tgf2yiFV2TrjOQEd9a9e9dFRgo/eHo0oKloKyIVulaI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.193.129' (ECDSA) to the list of known hosts.

root@192.168.193.129's password:

hosts 100% 290 3.4KB/s 00:00

[root@master ~]# scp /etc/hosts root@192.168.193.130:/etc/hosts

The authenticity of host '192.168.193.130 (192.168.193.130)' can't be established.

ECDSA key fingerprint is SHA256:ejuoTwhMCCJB4Hbr6FqIQ7kvTKXjoenEigo/IZkdwy4.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.193.130' (ECDSA) to the list of known hosts.

root@192.168.193.130's password:

hosts 100% 290 30.3KB/s 00:00
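
The hosts entries can also be added by a small script instead of by hand in vim. The following is a minimal sketch, not the method used above: `add_host_entry` is a hypothetical helper name, and the file path is a parameter so the edit can be tried on a copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP FQDN ALIAS" to a hosts file unless
# a line mentioning that FQDN is already present (safe to re-run).
add_host_entry() {
    local file=$1 ip=$2 fqdn=$3 alias=$4
    if ! grep -qwF -- "$fqdn" "$file"; then
        printf '%s %s %s\n' "$ip" "$fqdn" "$alias" >> "$file"
    fi
}

# Example (as root on master, followed by the scp step shown above):
#   add_host_entry /etc/hosts 192.168.193.128 master.example.com master
#   add_host_entry /etc/hosts 192.168.193.129 node1.example.com  node1
#   add_host_entry /etc/hosts 192.168.193.130 node2.example.com  node2
```

Because the helper checks for the name first, running it twice does not leave duplicate lines behind.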

Passwordless SSH login (key-based)

[root@master ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:14tkp514KCDLuvpk3RoRf7Jhhc4GYyaaPslrST7ipYM root@master.example.com

The key's randomart image is:

+---[RSA 3072]----+

| |

| . |

| . * . . |

| o + B . . |

|o o X S + o |

|o..o B * + B o |

|+=+.= o . = = |

|EO=. o . . |

|=**.. |

+----[SHA256]-----+

[root@master ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@node1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'node1 (192.168.193.129)' can't be established.

ECDSA key fingerprint is SHA256:tgf2yiFV2TrjOQEd9a9e9dFRgo/eHo0oKloKyIVulaI.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@node1'"

and check to make sure that only the key(s) you wanted were added.

[root@master ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'node2 (192.168.193.130)' can't be established.

ECDSA key fingerprint is SHA256:ejuoTwhMCCJB4Hbr6FqIQ7kvTKXjoenEigo/IZkdwy4.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@node2'"

and check to make sure that only the key(s) you wanted were added.
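
With more than a couple of nodes, the two ssh-copy-id calls are easier to handle in a loop. The sketch below assumes the key pair already exists at ~/.ssh/id_rsa, as generated above; `run` and `push_key` are hypothetical helper names, and DRY_RUN=1 only prints the commands so the loop can be reviewed first.

```shell
#!/usr/bin/env bash
# Print each command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Push the public key to every node given as an argument, then confirm that
# key-based login works (BatchMode makes ssh fail instead of asking for a password).
push_key() {
    local node
    for node in "$@"; do
        run ssh-copy-id -i ~/.ssh/id_rsa.pub "root@$node"
        run ssh -o BatchMode=yes "root@$node" hostname
    done
}

# Example: DRY_RUN=1 push_key node1 node2
```

If the second command prints the remote hostname without prompting, the key was installed correctly.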

Clock synchronization

master:

[root@master ~]# vim /etc/chrony.conf

local stratum 10

[root@master ~]# systemctl restart chronyd

[root@master ~]# systemctl enable chronyd

Created symlink /etc/systemd/system/multi-user.target.wants/chronyd.service → /usr/lib/systemd/system/chronyd.service.

[root@master ~]# hwclock -w

node1:

[root@node1 ~]# vim /etc/chrony.conf

server master.example.com iburst

[root@node1 ~]# systemctl restart chronyd

[root@node1 ~]# systemctl enable chronyd

[root@node1 ~]# hwclock -w

node2:

[root@node2 ~]# vim /etc/chrony.conf

server master.example.com iburst

[root@node2 ~]# systemctl restart chronyd

[root@node2 ~]# systemctl enable chronyd

[root@node2 ~]# hwclock -w
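
The worker-side edit can be sketched as a script. This is an illustration under assumptions, not the exact edit made above: `use_master_as_ntp` is a hypothetical helper, it relies on GNU sed's `-i`, and it additionally comments out the stock pool/server lines, which the transcript does not show. Note also that chronyd only serves time to other hosts when an `allow` directive permits them, so the master's chrony.conf presumably needs a line such as `allow 192.168.193.0/24`; that line does not appear in the transcript and is an assumption here.

```shell
#!/usr/bin/env bash
# Hypothetical helper for the worker nodes: disable the stock "pool"/"server"
# lines and make the master the only time source. The config path is a
# parameter so the edit can be rehearsed on a copy.
use_master_as_ntp() {
    local conf=$1 master=${2:-master.example.com}
    # Comment out pool/server directives, except the master line itself.
    sed -i -E -e "/^server $master iburst\$/"'!s/^(pool|server)[[:space:]]/#&/' "$conf"
    # Append the master exactly once.
    grep -qxF "server $master iburst" "$conf" ||
        echo "server $master iburst" >> "$conf"
}

# Example (as root on node1 and node2):
#   use_master_as_ntp /etc/chrony.conf && systemctl restart chronyd
#   chronyc sources    # the master should be listed as a source
```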

Disable firewalld, SELinux, and postfix

master:

[root@master ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# vim /etc/selinux/config

SELINUX=disabled

[root@master ~]# setenforce 0

[root@master ~]# systemctl stop postfix

Failed to stop postfix.service: Unit postfix.service not loaded.

[root@master ~]# systemctl disable postfix

Failed to disable unit: Unit file postfix.service does not exist.

node1:

[root@node1 ~]# systemctl stop firewalld

[root@node1 ~]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node1 ~]# vim /etc/selinux/config

SELINUX=disabled

[root@node1 ~]# setenforce 0

[root@node1 ~]# systemctl stop postfix

Failed to stop postfix.service: Unit postfix.service not loaded.

[root@node1 ~]# systemctl disable postfix

Failed to disable unit: Unit file postfix.service does not exist.

node2:

[root@node2 ~]# systemctl stop firewalld

[root@node2 ~]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node2 ~]# vim /etc/selinux/config

SELINUX=disabled

[root@node2 ~]# setenforce 0

[root@node2 ~]# systemctl stop postfix

Failed to stop postfix.service: Unit postfix.service not loaded.

[root@node2 ~]# systemctl disable postfix

Failed to disable unit: Unit file postfix.service does not exist.
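
The SELinux edit is a one-line change, so it can be done with sed instead of vim. A minimal sketch, assuming GNU sed; `disable_selinux_in` is a hypothetical helper that takes the config path as its argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper: force the SELINUX= line of a config file to "disabled".
# SELINUXTYPE= is left alone because the pattern requires "=" right after SELINUX.
disable_selinux_in() {
    sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Example (as root on every node):
#   disable_selinux_in /etc/selinux/config
#   setenforce 0                           # switch to permissive until the next reboot
#   systemctl disable --now firewalld      # stop and disable in one step
```

The postfix errors in the transcript only mean the package is not installed on these hosts, so there is nothing to stop or disable.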

Disable the swap partition

master :

[root@master ~]# vim /etc/fstab

[root@master ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Jun 30 06:34:44 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cs-root / xfs defaults 0 0

UUID=e18bd9c4-e065-46b2-ba15-a668954e3087 /boot xfs defaults 0 0

/dev/mapper/cs-home /home xfs defaults 0 0

#/dev/mapper/cs-swap none swap defaults 0 0

[root@master ~]# swapoff -a

node1 :

[root@node1 ~]# vim /etc/fstab

[root@node1 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Sep 27 03:59:33 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cs-root / xfs defaults 0 0

UUID=1ae9c603-27ba-433c-a37b-8d2d043c2746 /boot xfs defaults 0 0

/dev/mapper/cs-home /home xfs defaults 0 0

#/dev/mapper/cs-swap none swap defaults 0 0

[root@node1 ~]# swapoff -a

node2 :

[root@node2 ~]# vim /etc/fstab

[root@node2 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Sep 27 03:59:33 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cs-root / xfs defaults 0 0

UUID=1ae9c603-27ba-433c-a37b-8d2d043c2746 /boot xfs defaults 0 0

/dev/mapper/cs-home /home xfs defaults 0 0

#/dev/mapper/cs-swap none swap defaults 0 0

[root@node2 ~]# swapoff -a
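
Commenting out the swap line can be scripted as well. The sketch below assumes GNU sed and an fstab laid out like the ones shown above; `comment_out_swap` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active entry of an fstab-style file
# that has "swap" as a whitespace-delimited field. Lines that are already
# commented are left untouched, so the helper is safe to re-run.
comment_out_swap() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Example (as root on every node):
#   comment_out_swap /etc/fstab && swapoff -a
#   free -h    # the Swap row should now show 0B
```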

Enable IP forwarding and adjust kernel parameters

master :

[root@master ~]# vim /etc/sysctl.d/k8s.conf

[root@master ~]# cat /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

node1 :

[root@node1 ~]# vim /etc/sysctl.d/k8s.conf

[root@node1 ~]# modprobe br_netfilter

[root@node1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

node2 :

[root@node2 ~]# vim /etc/sysctl.d/k8s.conf

[root@node2 ~]# modprobe br_netfilter

[root@node2 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
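
The transcript shows the contents of k8s.conf only on master; the same three settings belong on node1 and node2. The sketch below writes the file and, as an addition that is not part of the original steps, a modules-load.d entry so that br_netfilter is loaded again after a reboot. `write_k8s_sysctl` and its optional root-directory argument are hypothetical and exist so the sketch can be tried in a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the sysctl settings used above, plus a
# modules-load.d entry for br_netfilter. The optional argument is a root
# directory prefix (default "/").
write_k8s_sysctl() {
    local root=${1:-/}
    mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
    echo br_netfilter > "$root/etc/modules-load.d/br_netfilter.conf"
}

# Example (as root on every node):
#   write_k8s_sysctl && modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf
```

The net.bridge.* keys exist only while br_netfilter is loaded, which is why modprobe runs before sysctl -p.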

Configure IPVS

master :

[root@master ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@master ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@master ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@master ~]# reboot

node1 :

[root@node1 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@node1 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@node1 ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@node1 ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@node1 ~]# reboot

node2 :

[root@node2 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@node2 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@node2 ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@node2 ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@node2 ~]# reboot
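
Since every node is rebooted right after the modules are loaded by hand, it is worth repeating the lsmod check once the hosts are back up. The helper below is a hypothetical sketch that reads lsmod output and names whatever is missing. If modules do turn out to be missing, one common approach (an assumption here, not something the steps above do) is to list them one per line in a file under /etc/modules-load.d/, which systemd reads at boot.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `lsmod` output on stdin and print each required
# IPVS module that is not loaded. Returns 1 if anything is missing.
missing_ipvs_modules() {
    local loaded mod rc=0
    loaded=$(awk '{print $1}')
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
        if ! printf '%s\n' "$loaded" | grep -qx "$mod"; then
            echo "$mod"
            rc=1
        fi
    done
    return $rc
}

# Example: lsmod | missing_ipvs_modules && echo "all IPVS modules loaded"
```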

Install Docker

Switch the package mirror

master :

[root@master yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

--2022-11-17 15:32:29-- https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 119.96.90.238, 119.96.90.236, 119.96.90.242, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|119.96.90.238|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2495 (2.4K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/CentOS-Base.repo’

/etc/yum.repos.d/CentOS-B 100%[==================================>] 2.44K --.-KB/s in 0.02s

2022-11-17 15:32:29 (104 KB/s) - ‘/etc/yum.repos.d/CentOS-Base.repo’ saved [2495/2495]

[root@master yum.repos.d]# dnf -y install epel-release

[root@master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

--2022-11-17 15:36:12-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 119.96.90.242, 119.96.90.243, 119.96.90.241, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|119.96.90.242|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2081 (2.0K) [application/octet-stream]

Saving to: ‘docker-ce.repo’

docker-ce.repo 100%[==================================>] 2.03K --.-KB/s in 0.01s

2022-11-17 15:36:12 (173 KB/s) - ‘docker-ce.repo’ saved [2081/2081]

[root@master yum.repos.d]# yum list | grep docker

ansible-collection-community-docker.noarch 2.6.0-1.el8 epel

containerd.io.x86_64 1.6.9-3.1.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.21-3.el8 docker-ce-stable

docker-ce-cli.x86_64 1:20.10.21-3.el8 docker-ce-stable

docker-ce-rootless-extras.x86_64 20.10.21-3.el8 docker-ce-stable

docker-compose-plugin.x86_64 2.12.2-3.el8 docker-ce-stable

docker-scan-plugin.x86_64 0.21.0-3.el8 docker-ce-stable

pcp-pmda-docker.x86_64 5.3.1-5.el8 AppStream

podman-docker.noarch 3.3.1-9.module_el8.5.0+988+b1f0b741 AppStream

python-docker-tests.noarch 5.0.0-2.el8 epel

python2-dockerpty.noarch 0.4.1-18.el8 epel

python3-docker.noarch 5.0.0-2.el8 epel

python3-dockerpty.noarch 0.4.1-18.el8 epel

standard-test-roles-inventory-docker.noarch 4.10-1.el8 epel

node1 :

[root@node1 yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

--2022-11-17 02:33:03-- https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 119.96.90.240, 119.96.90.237, 119.96.90.236, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|119.96.90.240|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2495 (2.4K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/CentOS-Base.repo’

/etc/yum.repos.d/CentOS-B 100%[==================================>] 2.44K --.-KB/s in 0.02s

2022-11-17 02:33:03 (139 KB/s) - ‘/etc/yum.repos.d/CentOS-Base.repo’ saved [2495/2495]

[root@node1 yum.repos.d]# dnf -y install epel-release

[root@node1 yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

--2022-11-17 02:36:15-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 119.96.90.243, 119.96.90.242, 119.96.90.239, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|119.96.90.243|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2081 (2.0K) [application/octet-stream]

Saving to: ‘docker-ce.repo’

docker-ce.repo 100%[==================================>] 2.03K --.-KB/s in 0.01s

2022-11-17 02:36:15 (140 KB/s) - ‘docker-ce.repo’ saved [2081/2081]

[root@node1 yum.repos.d]# Yum list | grep docker

bash: Yum: command not found...

Similar command is: 'yum'

[root@node1 yum.repos.d]# yum list | grep docker

ansible-collection-community-docker.noarch 2.6.0-1.el8 epel

containerd.io.x86_64 1.6.9-3.1.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.21-3.el8 docker-ce-stable

docker-ce-cli.x86_64 1:20.10.21-3.el8 docker-ce-stable

docker-ce-rootless-extras.x86_64 20.10.21-3.el8 docker-ce-stable

docker-compose-plugin.x86_64 2.12.2-3.el8 docker-ce-stable

docker-scan-plugin.x86_64 0.21.0-3.el8 docker-ce-stable

pcp-pmda-docker.x86_64 5.3.1-5.el8 AppStream

podman-docker.noarch 3.3.1-9.module_el8.5.0+988+b1f0b741 AppStream

python-docker-tests.noarch 5.0.0-2.el8 epel

python2-dockerpty.noarch 0.4.1-18.el8 epel

python3-docker.noarch 5.0.0-2.el8 epel

python3-dockerpty.noarch 0.4.1-18.el8 epel

standard-test-roles-inventory-docker.noarch 4.10-1.el8 epel

node2 :

[root@node2 yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

--2022-11-17 02:46:13-- https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 119.96.90.239, 119.96.90.236, 119.96.90.237, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|119.96.90.239|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2495 (2.4K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/CentOS-Base.repo’

/etc/yum.repos.d/CentOS-B 100%[==================================>] 2.44K --.-KB/s in 0.03s

2022-11-17 02:46:14 (87.9 KB/s) - ‘/etc/yum.repos.d/CentOS-Base.repo’ saved [2495/2495]

[root@node2 yum.repos.d]# dnf -y install epel-release

[root@node2 yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@node2 yum.repos.d]# yum list | grep docker

ansible-collection-community-docker.noarch 2.6.0-1.el8 epel

containerd.io.x86_64 1.6.9-3.1.el8 docker-ce-stable

docker-ce.x86_64 3:20.10.21-3.el8 docker-ce-stable

docker-ce-cli.x86_64 1:20.10.21-3.el8 docker-ce-stable

docker-ce-rootless-extras.x86_64 20.10.21-3.el8 docker-ce-stable

docker-compose-plugin.x86_64 2.12.2-3.el8 docker-ce-stable

docker-scan-plugin.x86_64 0.21.0-3.el8 docker-ce-stable

pcp-pmda-docker.x86_64 5.3.1-5.el8 AppStream

podman-docker.noarch 3.3.1-9.module_el8.5.0+988+b1f0b741 AppStream

python-docker-tests.noarch 5.0.0-2.el8 epel

python2-dockerpty.noarch 0.4.1-18.el8 epel

python3-docker.noarch 5.0.0-2.el8 epel

python3-dockerpty.noarch 0.4.1-18.el8 epel

standard-test-roles-inventory-docker.noarch 4.10-1.el8 epel

Install docker-ce and add a configuration file that sets up a Docker registry mirror (accelerator)

master :

[root@master yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@master yum.repos.d]# systemctl restart docker

[root@master yum.repos.d]# systemctl enable docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@master yum.repos.d]# cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": ["https://14lrk6zd.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "storage-driver": "overlay2"

> }

> EOF

[root@master yum.repos.d]# systemctl daemon-reload

[root@master yum.repos.d]# systemctl restart docker

node1 :

[root@node1 yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@node1 yum.repos.d]# systemctl restart docker

[root@node1 yum.repos.d]# systemctl enable docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@node1 yum.repos.d]# cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": ["https://14lrk6zd.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "storage-driver": "overlay2"

> }

> EOF

[root@node1 yum.repos.d]# systemctl daemon-reload

[root@node1 yum.repos.d]# systemctl restart docker

node2 :

[root@node2 yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@node2 yum.repos.d]# systemctl restart docker

[root@node2 yum.repos.d]# systemctl enable docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@node2 yum.repos.d]# cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": ["https://14lrk6zd.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "storage-driver": "overlay2"

> }

> EOF

[root@node2 yum.repos.d]# systemctl daemon-reload

[root@node2 yum.repos.d]# systemctl restart docker
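
Pasting a heredoc into an interactive shell is easy to get wrong, so the same daemon.json can be written from a small script that is copied to each node. The sketch below reproduces the settings shown above; `write_daemon_json` is a hypothetical helper, and the target path is a parameter so the output can be inspected before it replaces the real file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the daemon.json shown above to the given path.
# Quoting the delimiter ('EOF') stops the shell from expanding anything
# inside the JSON.
write_daemon_json() {
    cat > "$1" <<'EOF'
{
  "registry-mirrors": ["https://14lrk6zd.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}

# Example (as root on every node):
#   write_daemon_json /etc/docker/daemon.json && systemctl restart docker
#   docker info | grep -i 'cgroup driver'    # should report systemd
```

The systemd cgroup driver matters here because the kubelet installed by kubeadm uses the systemd driver by default, and the container runtime should use the same one.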

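Install the Kubernetes components

The Kubernetes package repositories are hosted overseas and are slow to reach from here, so switch to a mirror inside China.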

master :

[root@master yum.repos.d]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@master yum.repos.d]# yum list | grep kube

cri-tools.x86_64 1.25.0-0 kubernetes

kubeadm.x86_64 1.25.4-0 kubernetes

kubectl.x86_64 1.25.4-0 kubernetes

kubelet.x86_64 1.25.4-0 kubernetes

kubernetes-cni.x86_64 1.1.1-0 kubernetes

libguac-client-kubernetes.x86_64 1.4.0-5.el8 epel

python3-kubernetes.noarch 1:11.0.0-6.el8 epel

python3-kubernetes-tests.noarch 1:11.0.0-6.el8 epel

rkt.x86_64 1.27.0-1 kubernetes

rsyslog-mmkubernetes.x86_64 8.2102.0-5.el8 AppStream

node1 :

[root@node1 yum.repos.d]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@node1 yum.repos.d]# yum list | grep kube

cri-tools.x86_64 1.25.0-0 kubernetes

kubeadm.x86_64 1.25.4-0 kubernetes

kubectl.x86_64 1.25.4-0 kubernetes

kubelet.x86_64 1.25.4-0 kubernetes

kubernetes-cni.x86_64 1.1.1-0 kubernetes

libguac-client-kubernetes.x86_64 1.4.0-5.el8 epel

python3-kubernetes.noarch 1:11.0.0-6.el8 epel

python3-kubernetes-tests.noarch 1:11.0.0-6.el8 epel

rkt.x86_64 1.27.0-1 kubernetes

rsyslog-mmkubernetes.x86_64 8.2102.0-5.el8 AppStream

node2 :

[root@node2 yum.repos.d]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@node2 yum.repos.d]# yum list | grep kube

cri-tools.x86_64 1.25.0-0 kubernetes

kubeadm.x86_64 1.25.4-0 kubernetes

kubectl.x86_64 1.25.4-0 kubernetes

kubelet.x86_64 1.25.4-0 kubernetes

kubernetes-cni.x86_64 1.1.1-0 kubernetes

libguac-client-kubernetes.x86_64 1.4.0-5.el8 epel

python3-kubernetes.noarch 1:11.0.0-6.el8 epel

python3-kubernetes-tests.noarch 1:11.0.0-6.el8 epel

rkt.x86_64 1.27.0-1 kubernetes

rsyslog-mmkubernetes.x86_64 8.2102.0-5.el8 AppStream

Install the kubeadm, kubelet, and kubectl tools

master :

[root@master yum.repos.d]# dnf -y install kubeadm kubelet kubectl

[root@master yum.repos.d]# systemctl restart kubelet

[root@master yum.repos.d]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

node1 :

[root@node1 yum.repos.d]# dnf -y install kubeadm kubelet kubectl

[root@node1 yum.repos.d]# systemctl restart kubelet

[root@node1 yum.repos.d]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

node2 :

[root@node2 yum.repos.d]# dnf -y install kubeadm kubelet kubectl

[root@node2 yum.repos.d]# systemctl restart kubelet

[root@node2 yum.repos.d]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

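Configure containerd

To make sure the later cluster initialization and node joins succeed, the containerd configuration file /etc/containerd/config.toml has to be adjusted. Do this on every node.

In /etc/containerd/config.toml, change the k8s image registry to registry.aliyuncs.com/google_containers.

Then restart the containerd service and enable it at boot.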

master :

[root@master ~]# containerd config default > /etc/containerd/config.toml

[root@master ~]# vim /etc/containerd/config.toml

[root@master ~]# systemctl restart containerd

[root@master ~]# systemctl enable containerd

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

node1 :

[root@node1 ~]# containerd config default > /etc/containerd/config.toml

[root@node1 ~]# vim /etc/containerd/config.toml

[root@node1 ~]# systemctl restart containerd

[root@node1 ~]# systemctl enable containerd

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

node2 :

[root@node2 ~]# containerd config default > /etc/containerd/config.toml

[root@node2 ~]# vim /etc/containerd/config.toml

[root@node2 ~]# systemctl restart containerd

[root@node2 ~]# systemctl enable containerd

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.
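
The transcript does not show which line was changed in vim. In a default containerd config the setting that names a k8s registry is `sandbox_image` (the pause image), so the sketch below assumes that this is the key being edited. `use_aliyun_sandbox_image` is a hypothetical helper, and it relies on GNU sed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the pause ("sandbox") image in a containerd
# config.toml at the Aliyun mirror, keeping whatever tag the file already has.
# Assumption: sandbox_image is the setting the walkthrough edits by hand.
use_aliyun_sandbox_image() {
    sed -i -E \
        's#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*")[^"]*/(pause:[^"]*")#\1registry.aliyuncs.com/google_containers/\2#' \
        "$1"
}

# Example (as root on every node):
#   containerd config default > /etc/containerd/config.toml
#   use_aliyun_sandbox_image /etc/containerd/config.toml
#   grep sandbox_image /etc/containerd/config.toml
#   systemctl restart containerd
```

Only the registry prefix is rewritten; the image name and tag generated by `containerd config default` stay as they are.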

Deploy the k8s master node (run on the master node)

[root@master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.193.128 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.25.4 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16

// It is a good idea to save the initialization output to a file

[root@master ~]# vim k8s

[root@master ~]# cat k8s

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.193.128:6443 --token r72qo2.hat90535pgenesgy \

--discovery-token-ca-cert-hash sha256:5fca25770cc037e2f5f23b540a71657145e80b403c9809d93d48cfb0c9369e91
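
Once the output is saved in a file such as k8s, the join command can be pulled out of it later without copying by hand. A minimal sketch, assuming the file has the layout kubeadm prints (the join line ends in a backslash and continues on the next line); `extract_join_command` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the "kubeadm join ..." command out of a saved copy
# of the `kubeadm init` output and print it on a single line.
extract_join_command() {
    # Take the "kubeadm join" line plus its continuation line, drop the
    # trailing backslash and surrounding whitespace, then join the two lines.
    grep -A1 '^[[:space:]]*kubeadm join ' "$1" |
        sed -E 's/\\$//; s/^[[:space:]]+//; s/[[:space:]]+$//' |
        paste -sd' ' -
}

# Example: extract_join_command k8s
# To capture the output directly instead of pasting it into vim:
#   kubeadm init ... | tee k8s
```

Bootstrap tokens expire (after 24 hours by default), so for nodes added later a fresh command can be generated on the master with `kubeadm token create --print-join-command`.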

Configure the environment variable

[root@master ~]# vim /etc/profile.d/k8s.sh

[root@master ~]# cat /etc/profile.d/k8s.sh

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@master ~]# source /etc/profile.d/k8s.sh

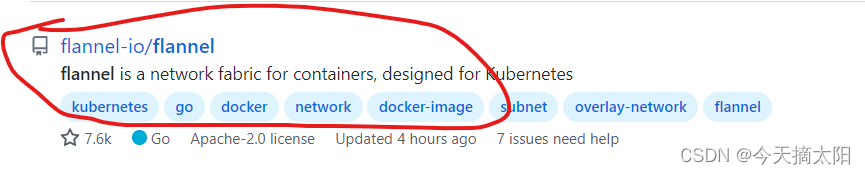

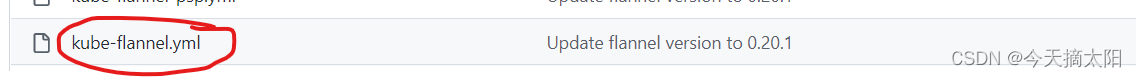

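Install the pod network add-on (CNI/flannel)

First download https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml with wget. If the URL cannot be reached or the download fails, create the file by hand: look up flannel on GitHub,

find this file and open it,

then copy its contents and paste them into a local kube-flannel.yml.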

[root@master ~]# vim kube-flannel.yml

[root@master ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created
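
Before applying a hand-copied manifest, it helps to confirm that its pod network matches the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here). The sketch assumes the manifest stores the range under a "Network" key in its net-conf.json section, as the flannel manifest does; `flannel_network` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical check: print the pod network CIDR configured in a
# kube-flannel.yml file (the "Network" key of net-conf.json).
flannel_network() {
    sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}

# Example:
#   [ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] || echo "CIDR mismatch"
```

If the two ranges differ, pods tend to start but cannot reach each other across nodes, so this check is cheap insurance.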

Join the worker nodes to the k8s cluster

[root@node1 ~]# kubeadm join 192.168.193.128:6443 --token r72qo2.hat90535pgenesgy \

> --discovery-token-ca-cert-hash sha256:5fca25770cc037e2f5f23b540a71657145e80b403c9809d93d48cfb0c9369e91

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node2 ~]# kubeadm join 192.168.193.128:6443 --token r72qo2.hat90535pgenesgy \

> --discovery-token-ca-cert-hash sha256:5fca25770cc037e2f5f23b540a71657145e80b403c9809d93d48cfb0c9369e91

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Run kubectl get nodes to check the node status

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master.example.com Ready control-plane 41m v1.25.4

node1.example.com Ready <none> 4m27s v1.25.4

node2.example.com Ready <none> 4m25s v1.25.4
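
Freshly joined nodes usually stay NotReady for a short while until the flannel pods are running on them. The wait can be scripted with a small filter over the kubectl output; `not_ready_nodes` is a hypothetical helper that reads the table on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" {print $1}'
}

# Example: poll until every node reports Ready.
#   while [ -n "$(kubectl get nodes --no-headers | not_ready_nodes)" ]; do sleep 5; done
```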

Use the k8s cluster to create a pod that runs an nginx container, then test it

[root@master ~]# kubectl create deployment nginx --image nginx

deployment.apps/nginx created

[root@master ~]# kubectl create deployment httpd --image httpd

deployment.apps/httpd created

[root@master ~]# kubectl expose deployment nginx --port 80 --type NodePort

service/nginx exposed

[root@master ~]# kubectl expose deployment httpd --port 80 --type NodePort

service/httpd exposed

[root@master ~]# kubectl get pods -o wide    # show which node each pod is running on

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

httpd-65bfffd87f-9dqm2 1/1 Running 0 96s 10.244.2.2 node2.example.com <none> <none>

nginx-76d6c9b8c-wblrh 1/1 Running 0 102s 10.244.1.2 node1.example.com <none> <none>

[root@master ~]# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

httpd NodePort 10.97.237.88 <none> 80:30050/TCP 84s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 43m

nginx NodePort 10.110.93.150 <none> 80:32407/TCP 95s

Change the default web page

[root@master ~]# kubectl exec -it pod/nginx-76d6c9b8c-wblrh -- /bin/bash

root@nginx-76d6c9b8c-wblrh:/# cd /usr/share/nginx/html/

root@nginx-76d6c9b8c-wblrh:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-76d6c9b8c-wblrh:/usr/share/nginx/html# echo "hi zhan nihao" > index.html

root@nginx-76d6c9b8c-wblrh:/usr/share/nginx/html#

Access http://<node IP>:<port> (the curl commands below query the pod IPs directly from the master)

[root@master ~]# curl 10.244.2.2

<html><body><h1>It works!</h1></body></html>

[root@master ~]# curl 10.244.1.2

hi zhan nihao

[root@master ~]#
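
The pod IPs used above are reachable only from inside the cluster. From outside, the services are reached through any node's IP plus the NodePort from the PORT(S) column of kubectl get services. The sketch below extracts that port from a value such as 80:32407/TCP; `node_port` is a hypothetical helper. The same number can also be read with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a PORT(S) value such as "80:32407/TCP",
# print the NodePort (the number between the colon and the slash).
node_port() {
    local spec=${1#*:}   # drop everything up to and including the colon
    echo "${spec%%/*}"   # drop the protocol suffix
}

# Example: reach nginx through node1's address from any machine on the LAN.
#   curl "http://192.168.193.129:$(node_port 80:32407/TCP)"
```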

![image 3](https://img-blog.csdnimg.cn/3d2f4ec1f0ec42a3a71e40adf897c7da.png)
