This is a PyTorch implementation of Mask R-CNN that is in large parts based on Matterport's Mask_RCNN. Matterport's repository is an implementation on Keras and TensorFlow. The following parts of the README are excerpts from the Matterport README. Details on the requirements, training on MS COCO, and detection results for this repository can be found at the end of the document.

The Mask R-CNN model generates bounding boxes and segmentation masks for each instance of an object in the image. It's based on a Feature Pyramid Network (FPN) and a ResNet101 backbone.

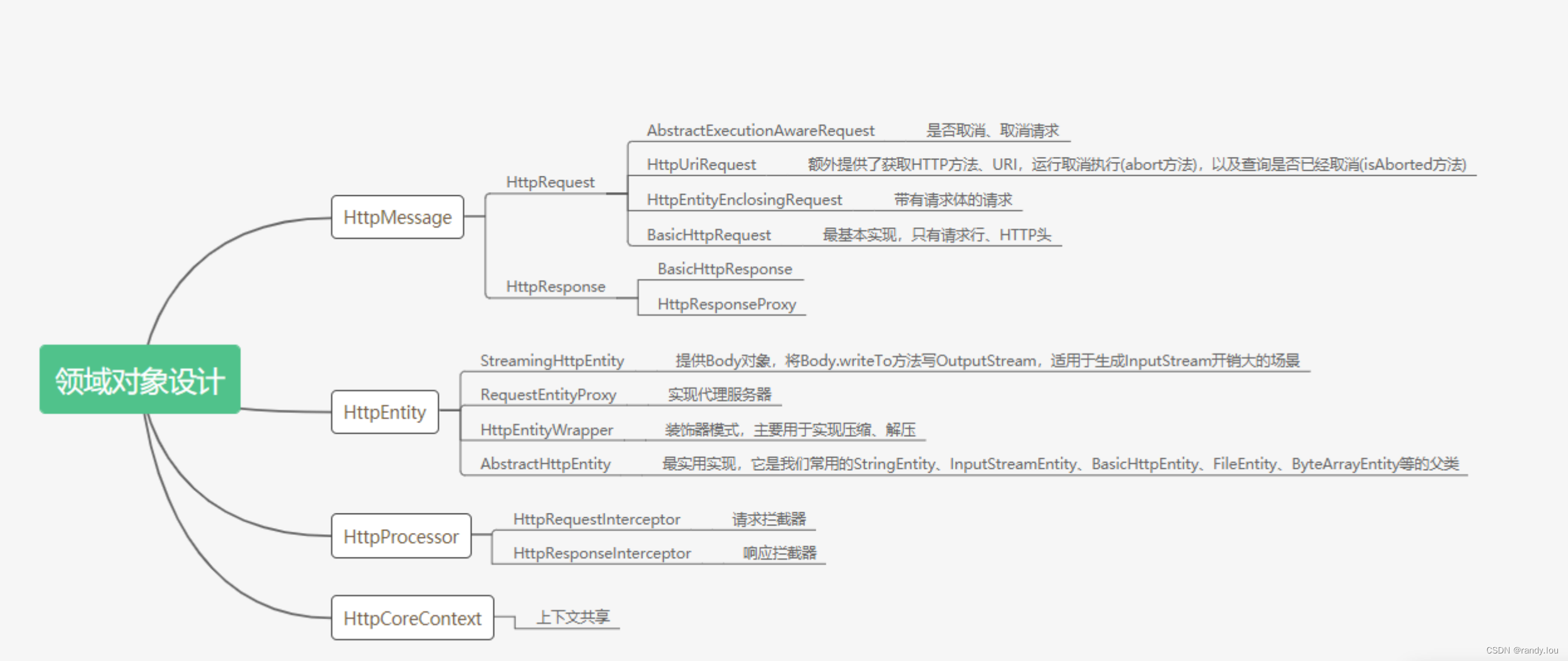

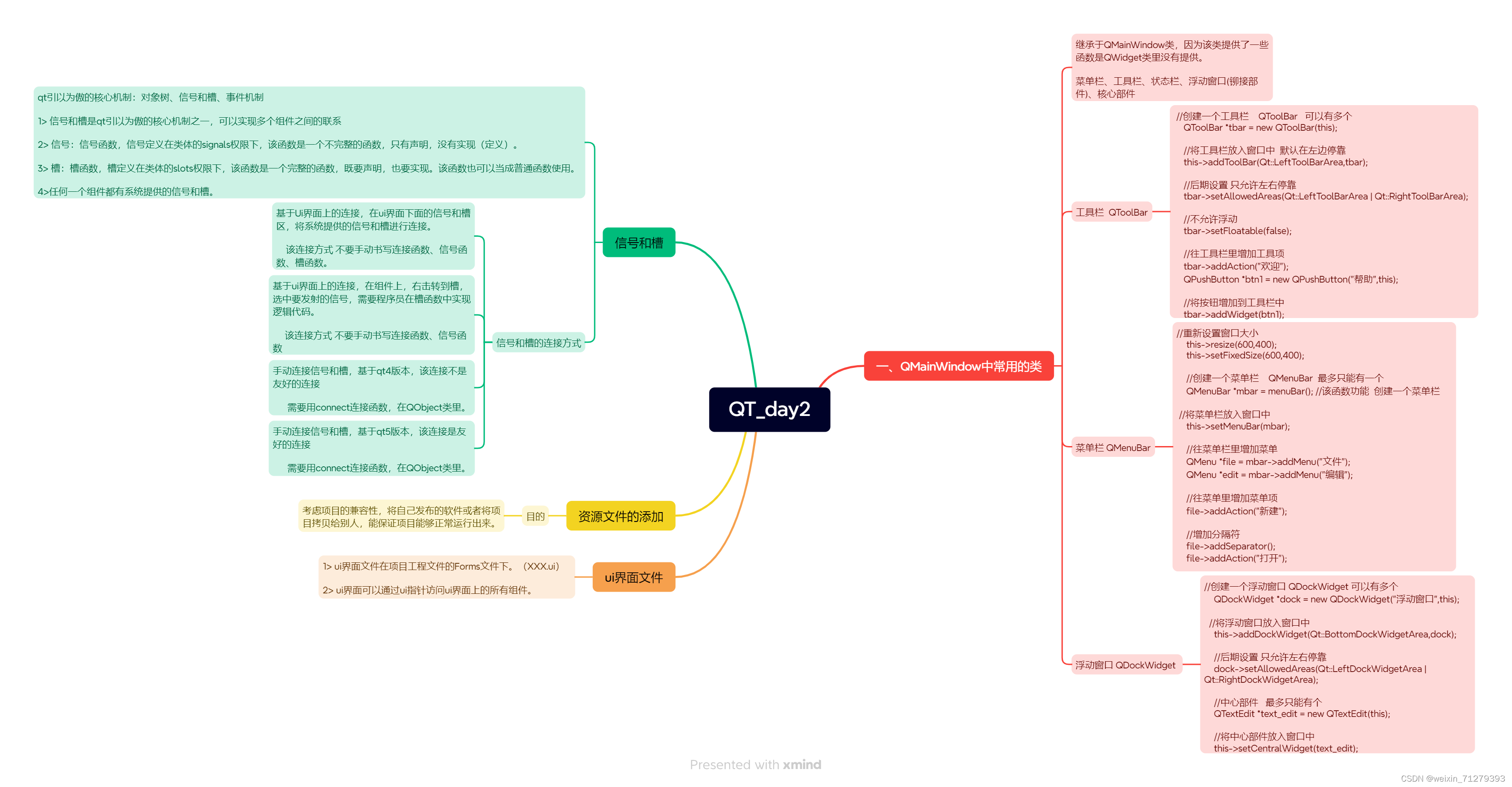

The next four images visualize different stages in the detection pipeline:

1. Anchor sorting and filtering

The Region Proposal Network proposes bounding boxes that are likely to belong to an object. Positive and negative anchors along with anchor box refinement are visualized.
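To illustrate how anchors are commonly split into positive and negative sets, the sketch below labels each anchor by its best IoU with the ground-truth boxes. The function names, the (y1, x1, y2, x2) box layout, and the 0.7 / 0.3 thresholds are assumptions borrowed from common RPN practice, not values read from this repository.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (y1, x1, y2, x2)."""
    y1 = max(box_a[0], box_b[0])
    x1 = max(box_a[1], box_b[1])
    y2 = min(box_a[2], box_b[2])
    x2 = min(box_a[3], box_b[3])
    inter = max(0.0, y2 - y1) * max(0.0, x2 - x1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Return 1 (positive), -1 (negative) or 0 (neutral) for each anchor.

    The thresholds are assumed defaults, not taken from this repository.
    """
    labels = []
    for anchor in anchors:
        best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if best >= pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(-1)
        else:
            labels.append(0)
    return labels


anchors = [(0, 0, 10, 10), (0, 0, 10, 20), (20, 20, 30, 30)]
gt_boxes = [(0, 0, 10, 10)]
print(label_anchors(anchors, gt_boxes))  # [1, 0, -1]
```

Neutral anchors (label 0) fall between the two thresholds and would typically be ignored during training.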

2. Bounding Box Refinement

This is an example of final detection boxes (dotted lines) and the refinement applied to them (solid lines) in the second stage.
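A refinement of this kind is usually expressed as four deltas applied to a box. The sketch below assumes the common R-CNN parameterization, where the center shifts by a fraction of the box size and the height and width are scaled in log space; the function name and delta layout are illustrative and may differ from what this repository uses.

```python
import math


def apply_box_deltas(box, deltas):
    """Refine a (y1, x1, y2, x2) box with (dy, dx, log_dh, log_dw) deltas.

    Assumed parameterization: dy and dx are fractions of the box height
    and width, log_dh and log_dw are log-scale size factors.
    """
    y1, x1, y2, x2 = box
    dy, dx, log_dh, log_dw = deltas
    height = y2 - y1
    width = x2 - x1
    center_y = y1 + 0.5 * height
    center_x = x1 + 0.5 * width
    # Shift the center by a fraction of the original box size.
    center_y += dy * height
    center_x += dx * width
    # Rescale height and width; the deltas live in log space.
    height *= math.exp(log_dh)
    width *= math.exp(log_dw)
    new_y1 = center_y - 0.5 * height
    new_x1 = center_x - 0.5 * width
    return (new_y1, new_x1, new_y1 + height, new_x1 + width)


# Move the box down by 10% of its height and halve its width.
refined = apply_box_deltas((10.0, 10.0, 30.0, 50.0), (0.1, 0.0, 0.0, math.log(0.5)))
print(refined)
```

Working in log space keeps the predicted height and width positive for any delta value.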

3. Mask Generation

Examples of generated masks. These then get scaled and placed on the image in the right location.

4. Composing the different pieces into a final result
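The scale-and-place step can be sketched as follows. This is a simplified illustration: it uses nearest-neighbour resizing and a 0.5 threshold, both of which are assumptions, and the `paste_mask` helper is hypothetical rather than a function from this repository.

```python
def paste_mask(mask, box, image_shape, threshold=0.5):
    """Resize a small soft mask to `box` and paste it into a full-size binary mask.

    mask: list of rows of floats in [0, 1]
    box: (y1, x1, y2, x2) integer pixel coordinates, y2 and x2 exclusive,
         assumed to lie inside the image
    image_shape: (height, width)
    """
    y1, x1, y2, x2 = box
    box_h, box_w = y2 - y1, x2 - x1
    mask_h, mask_w = len(mask), len(mask[0])
    full = [[0] * image_shape[1] for _ in range(image_shape[0])]
    for row in range(box_h):
        src_row = row * mask_h // box_h  # nearest-neighbour source row
        for col in range(box_w):
            src_col = col * mask_w // box_w  # nearest-neighbour source column
            if mask[src_row][src_col] >= threshold:
                full[y1 + row][x1 + col] = 1
    return full


small_mask = [[0.9, 0.2],
              [0.8, 0.7]]
full_mask = paste_mask(small_mask, box=(1, 1, 5, 5), image_shape=(6, 6))
for line in full_mask:
    print(line)
```

Pixels outside the box stay 0, so each instance ends up with its own full-size binary mask.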

Requirements

- Python 3

- PyTorch 0.3

- matplotlib, scipy, skimage, h5py

Installation

1. Clone this repository.

git clone https://github.com/multimodallearning/pytorch-mask-rcnn.git

2. We use functions from two more repositories that need to be built with the right --arch option for CUDA support. The two functions are Non-Maximum Suppression from ruotianluo's pytorch-faster-rcnn repository and longcw's RoiAlign.

cd nms/src/cuda/

nvcc -c -o nms_kernel.cu.o nms_kernel.cu -x cu -Xcompiler -fPIC -arch=[arch]

cd ../../

python build.py

cd ../

cd roialign/roi_align/src/cuda/

nvcc -c -o crop_and_resize_kernel.cu.o crop_and_resize_kernel.cu -x cu -Xcompiler -fPIC -arch=[arch]

cd ../../

python build.py

cd ../../

3. As we use the COCO dataset, install the Python COCO API and create a symlink.

4. Download the pretrained models on COCO and ImageNet from Google Drive.
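Step 2 builds a CUDA kernel for Non-Maximum Suppression. As a rough picture of what that operation computes, here is a pure-Python sketch of greedy NMS. It is not the code from ruotianluo's repository; the function names, the (y1, x1, y2, x2) box layout, and the 0.5 threshold are illustrative assumptions.

```python
def box_iou(a, b):
    """Intersection over union of two (y1, x1, y2, x2) boxes."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for idx in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(box_iou(boxes[idx], boxes[k]) <= iou_threshold for k in keep):
            keep.append(idx)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed; the third box does not overlap either and is kept.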

Demo

To test your installation simply run the demo with 只需使用运行演示

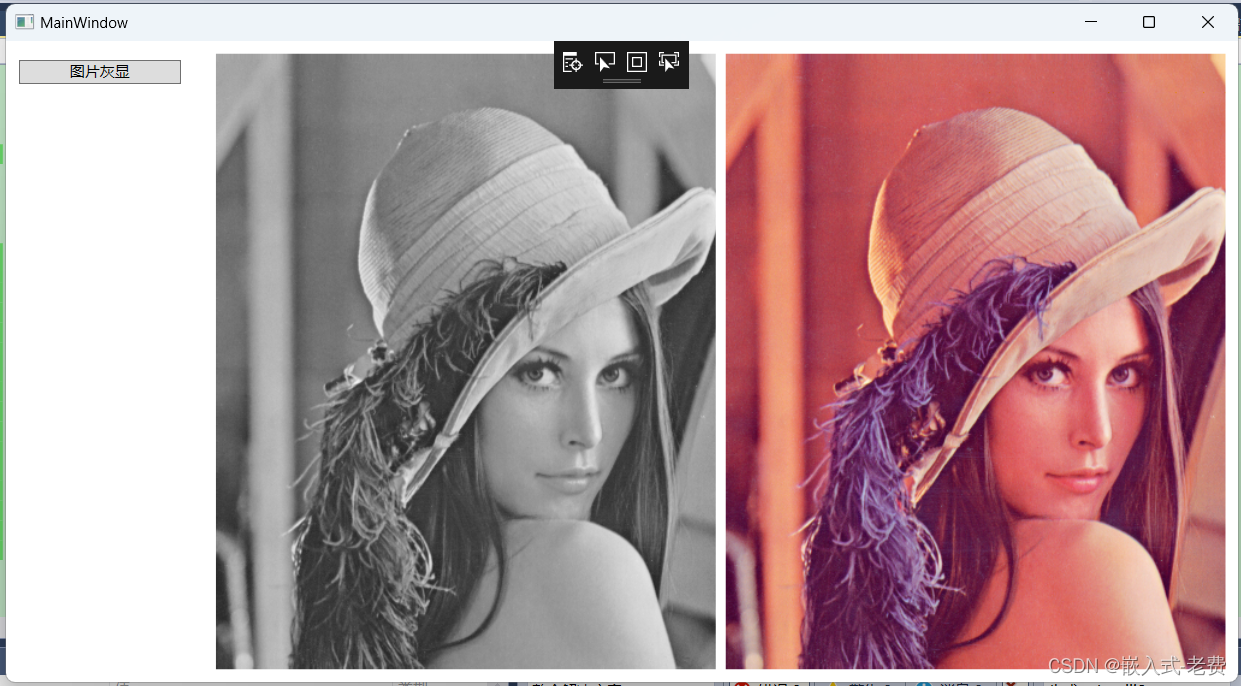

python demo.pyIt works on CPU or GPU and the result should look like this:

Training on COCO

Training and evaluation code is in coco.py. You can run it from the command line as such:

# Train a new model starting from pre-trained COCO weights

python coco.py train --dataset=/path/to/coco/ --model=coco

# Train a new model starting from ImageNet weights

python coco.py train --dataset=/path/to/coco/ --model=imagenet

# Continue training a model that you had trained earlier

python coco.py train --dataset=/path/to/coco/ --model=/path/to/weights.h5

# Continue training the last model you trained. This will find

# the last trained weights in the model directory.

python coco.py train --dataset=/path/to/coco/ --model=last

If you have not yet downloaded the COCO dataset, you should run the command with the download option set, e.g.:

# Train a new model starting from pre-trained COCO weights

python coco.py train --dataset=/path/to/coco/ --model=coco --download=true

You can also run the COCO evaluation code with:

# Run COCO evaluation on the last trained model

python coco.py evaluate --dataset=/path/to/coco/ --model=last

The training schedule, learning rate, and other parameters can be set in coco.py.
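The commands above share one shape: a train or evaluate command plus --dataset, --model, and optionally --download. The sketch below shows how such a command line could be parsed with argparse. It is a hypothetical illustration that covers only the flags shown in this README; it is not the parser in coco.py, and `build_parser` and `describe_weights` are made-up names.

```python
import argparse


def build_parser():
    """Build a parser for the command shapes shown above (illustrative only)."""
    parser = argparse.ArgumentParser(
        description="Train or evaluate Mask R-CNN on MS COCO.")
    parser.add_argument("command", choices=["train", "evaluate"])
    parser.add_argument("--dataset", required=True,
                        help="Directory of the COCO dataset")
    parser.add_argument("--model", required=True,
                        help="'coco', 'imagenet', 'last', or a path to a weights file")
    # Accept --download=true / --download=false and convert to a bool.
    parser.add_argument("--download", default=False,
                        type=lambda value: value.lower() == "true",
                        help="Set to 'true' to download the dataset first")
    return parser


def describe_weights(model_arg):
    """Classify the --model value as a named preset or a weights file path."""
    kind = model_arg.lower()
    if kind in ("coco", "imagenet", "last"):
        return kind
    return "file"


args = build_parser().parse_args(
    ["train", "--dataset=/path/to/coco/", "--model=last"])
print(args.command, args.dataset, describe_weights(args.model), args.download)
```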

Results

COCO results for bounding box and segmentation are reported based on training with the default configuration and a backbone initialized with pretrained ImageNet weights. The metric used is AP at IoU=0.50:0.95.
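The notation IoU=0.50:0.95 refers to the COCO convention of averaging AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below only illustrates that averaging step. The helper names are hypothetical, and the per-threshold AP values would come from a full evaluation such as the COCO API, not from this code.

```python
def coco_iou_thresholds():
    """IoU thresholds 0.50, 0.55, ..., 0.95 used by the COCO AP metric."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(iou_value):
    """Thresholds at which a detection with this IoU counts as a match."""
    return [t for t in coco_iou_thresholds() if iou_value >= t]


def mean_ap(ap_per_threshold):
    """Average per-threshold AP values into the single reported number."""
    return sum(ap_per_threshold) / len(ap_per_threshold)


print(coco_iou_thresholds())
# A detection with IoU 0.72 is a match at the five loosest thresholds only.
print(matched_thresholds(0.72))  # [0.5, 0.55, 0.6, 0.65, 0.7]
```

A detection that overlaps its ground truth loosely therefore contributes at the low thresholds but not at the strict ones, which is why this metric rewards precise localization.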
