1. Background

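While studying Android audio, I came across zyuanyun's blog. At the end of that post, the author left these questions:

However, the author never published a follow-up post explaining how the audio ring buffer is actually implemented, which made me very curious. After spending some time reading the code and other authors' blog posts, I have organized what I learned about the ring buffer here.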

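2. AudioPolicyService, AudioFlinger, and related classes

AudioPolicyService (APS) decides audio policy: for example, when to open an audio interface device, or which device a given stream type is routed to. AudioFlinger (AF) carries that policy out: for example, how to communicate with audio devices, how to maintain the audio devices currently present in the system, and how to mix multiple audio streams.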

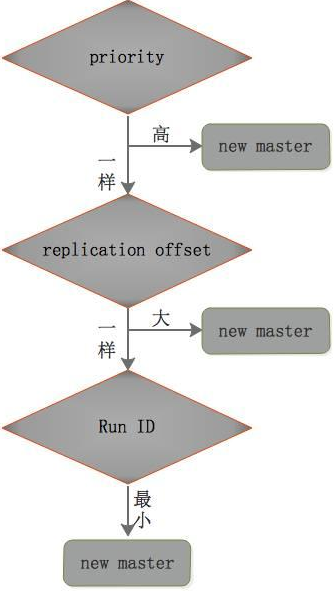

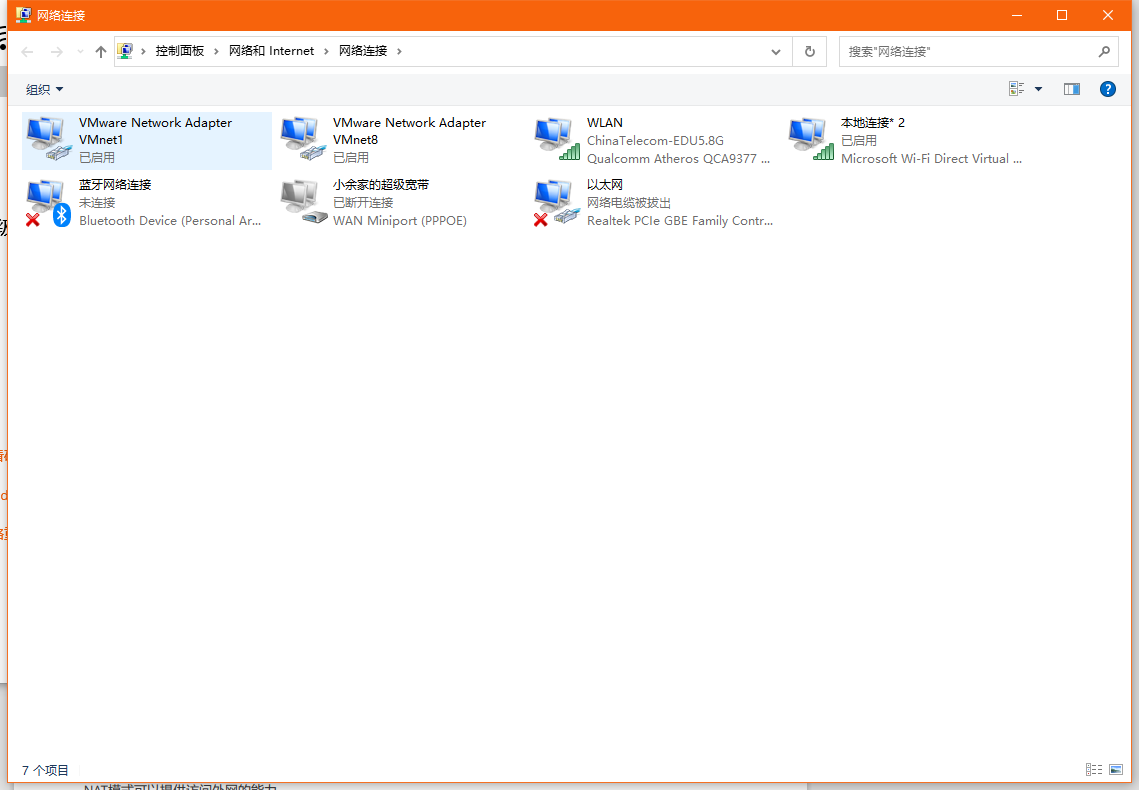

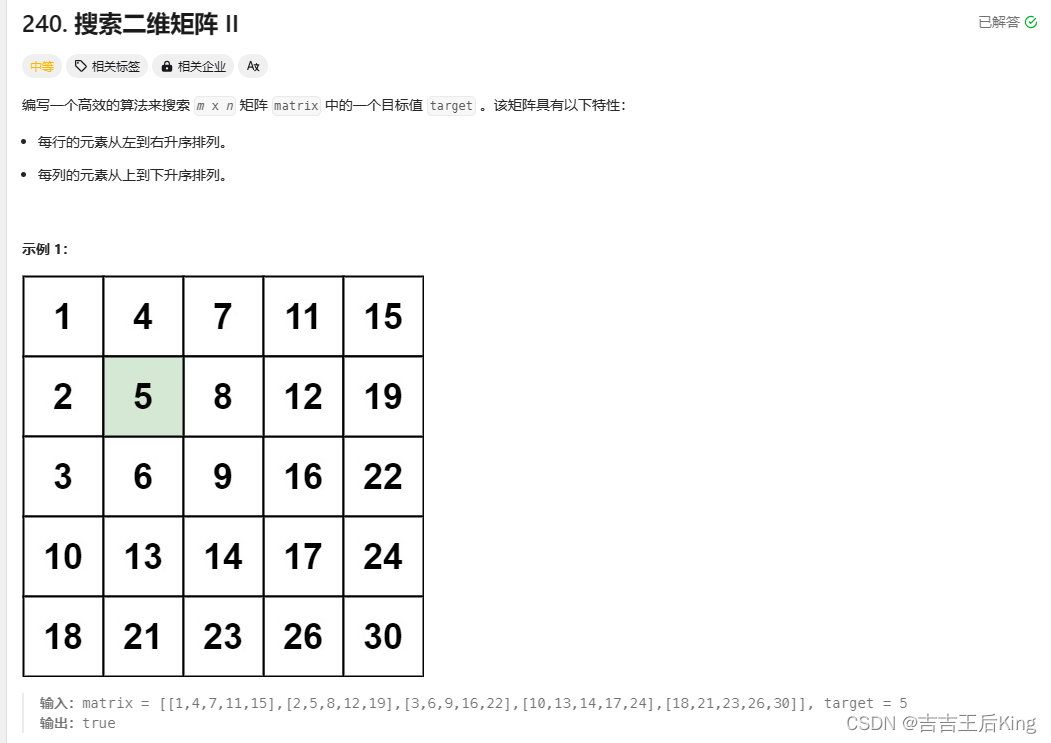

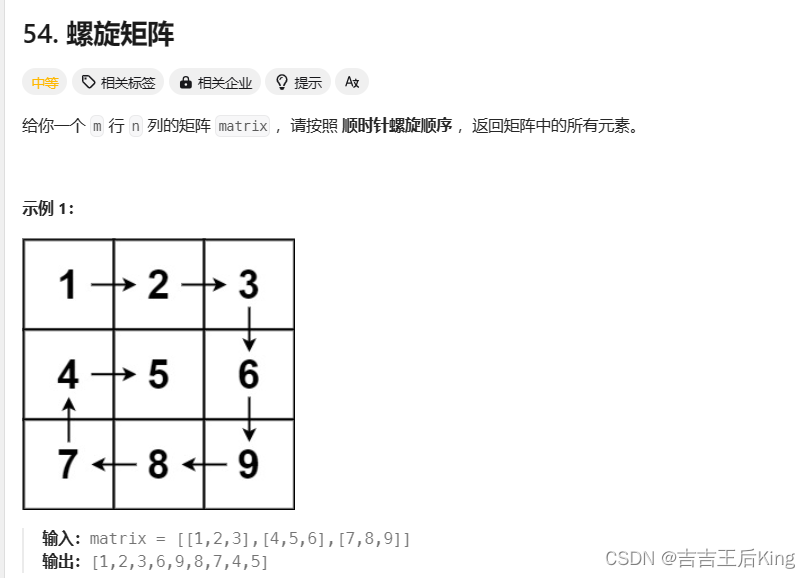

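The flow of the ring buffer can be roughly described by the figure below:

3. Track creation

Creating an AudioTrack goes through a long call chain; the Track object is ultimately constructed in /frameworks/av/services/audioflinger/Tracks.cpp.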

// TrackBase constructor must be called with AudioFlinger::mLock held

AudioFlinger::ThreadBase::TrackBase::TrackBase()

{

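// Compute the minimum buffer size: for streaming tracks roundup() rounds frameCount up to a power of two; it is scaled by mFrameSize below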

size_t minBufferSize = buffer == NULL ? roundup(frameCount) : frameCount;

……

minBufferSize *= mFrameSize;

size_t size = sizeof(audio_track_cblk_t);

if (buffer == NULL && alloc == ALLOC_CBLK) {

// check overflow when computing allocation size for streaming tracks.

if (size > SIZE_MAX - bufferSize) {

android_errorWriteLog(0x534e4554, "34749571");

return;

}

size += bufferSize;

}

if (client != 0) {

// Allocate the memory for the client from its shared heap

mCblkMemory = client->heap()->allocate(size);

……

} else {

mCblk = (audio_track_cblk_t *) malloc(size);

……

}

// construct the shared structure in-place.

if (mCblk != NULL) {

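// This is C++ placement new syntax: new(@BUFFER) @CLASS();

// It constructs an object at a specific memory location.

// Here an audio_track_cblk_t object is constructed at the start of the anonymous shared memory,

// so both AudioTrack and AudioFlinger can access this audio_track_cblk_t object.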

new(mCblk) audio_track_cblk_t();

switch (alloc) {

……

case ALLOC_CBLK:

// clear all buffers

if (buffer == NULL) {

// The data FIFO starts immediately after the control block (audio_track_cblk_t)

// | |

// | -------------------> mCblkMemory <--------------------- |

// | |

// +--------------------+------------------------------------+

// | audio_track_cblk_t | Buffer |

// +--------------------+------------------------------------+

// ^ ^

// | |

// mCblk mBuffer

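// mCblk is cast to char*, whose element size is 1 byte, so the addition below advances by exactly sizeof(audio_track_cblk_t) bytes; this "1" is used again later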

mBuffer = (char*)mCblk + sizeof(audio_track_cblk_t);

memset(mBuffer, 0, bufferSize);

} else {

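// In MODE_STATIC/TRANSFER_SHARED transfer mode, mBuffer points directly at sharedBuffer.

// sharedBuffer is anonymous shared memory allocated by the application process; the application has already

// written all of its data into sharedBuffer in one go, so AudioFlinger can read directly from it.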

// +--------------------+ +-----------------------------------+

// | audio_track_cblk_t | | sharedBuffer |

// +--------------------+ +-----------------------------------+

// ^ ^

// | |

// mCblk mBuffer

mBuffer = buffer;

}

break;

……

}

……

}

}
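
The layout above (control block first, data FIFO right behind it) can be reproduced in a few lines. The following is only a minimal sketch: `DemoCblk`, `DemoTrack`, `createDemoTrack` and `destroyDemoTrack` are hypothetical names that do not exist in AOSP, and plain `malloc` stands in for the shared-memory heap.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>
#include <new>

// Hypothetical stand-in for audio_track_cblk_t; the real struct has more fields.
struct DemoCblk {
    int32_t front = 0;
    int32_t rear = 0;
};

struct DemoTrack {
    void* region = nullptr;    // the whole allocation (control block + FIFO)
    DemoCblk* cblk = nullptr;  // control block at the start of the region
    char* buffer = nullptr;    // data FIFO right after the control block
};

// Allocates one region, constructs the control block in place, and points
// buffer just past it. Returns false on size overflow or allocation failure.
inline bool createDemoTrack(DemoTrack& t, size_t bufferSize) {
    size_t size = sizeof(DemoCblk);
    if (size > SIZE_MAX - bufferSize) return false;  // same style of overflow guard
    size += bufferSize;
    t.region = std::malloc(size);
    if (t.region == nullptr) return false;
    t.cblk = new (t.region) DemoCblk();              // placement new
    // char* arithmetic moves in bytes, so this lands right behind the control block.
    t.buffer = reinterpret_cast<char*>(t.cblk) + sizeof(DemoCblk);
    std::memset(t.buffer, 0, bufferSize);
    return true;
}

inline void destroyDemoTrack(DemoTrack& t) {
    if (t.cblk != nullptr) t.cblk->~DemoCblk();      // placement new needs an explicit destructor call
    std::free(t.region);
    t = DemoTrack{};
}
```

Calling `createDemoTrack(t, 64)` leaves `t.cblk` at the start of the region and `t.buffer` exactly `sizeof(DemoCblk)` bytes later, which mirrors the mCblk/mBuffer diagram in the comments above.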

4. The producer writes data to shared memory

4.1

4.2 Writing data to shared memory

//frameworks/av/media/libaudioclient/AudioTrack.cpp

ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)

{

……

size_t written = 0;

Buffer audioBuffer;

while (userSize >= mFrameSize) {

// Size of one frame: frameSize = channelCount * bytesPerSample

// For stereo, 16-bit samples: frameSize = 2 * 2 = 4 (bytes)

// Number of frames passed in by the user: frameCount = userSize / frameSize

audioBuffer.frameCount = userSize / mFrameSize;

// obtainBuffer() gets a usable region of the FIFO

status_t err = obtainBuffer(&audioBuffer,

blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);

……

size_t toWrite = audioBuffer.size;

memcpy(audioBuffer.i8, buffer, toWrite);

buffer = ((const char *) buffer) + toWrite;

userSize -= toWrite;

written += toWrite;

releaseBuffer(&audioBuffer);

}

……

return written;

}
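
Two details of this loop are easy to miss: `obtainBuffer()` may grant fewer frames than requested, and trailing bytes that do not make up a whole frame are never written. The sketch below illustrates both under stated assumptions: `DemoSink` and `demoWrite` are hypothetical, and the sink simply caps every grant at `maxGrantFrames` instead of managing a real FIFO.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical sink that grants at most maxGrantFrames per obtain() call,
// mimicking how obtainBuffer() may return fewer frames than requested.
struct DemoSink {
    size_t frameSize;
    size_t maxGrantFrames;
    std::vector<char> data;     // everything released so far
    std::vector<char> scratch;  // the region handed out by the last obtain()

    // Clamps frameCount to the granted amount and returns a writable region.
    char* obtain(size_t& frameCount) {
        frameCount = std::min(frameCount, maxGrantFrames);
        scratch.assign(frameCount * frameSize, 0);
        return scratch.data();
    }
    // Commits the first frameCount frames of the scratch region.
    void release(size_t frameCount) {
        data.insert(data.end(), scratch.begin(),
                    scratch.begin() + static_cast<std::ptrdiff_t>(frameCount * frameSize));
    }
};

// Same loop shape as the write() excerpt: only whole frames are copied.
inline size_t demoWrite(DemoSink& sink, const void* buffer, size_t userSize) {
    const char* src = static_cast<const char*>(buffer);
    size_t written = 0;
    while (userSize >= sink.frameSize) {
        size_t frames = userSize / sink.frameSize;
        char* dst = sink.obtain(frames);
        if (frames == 0) break;  // nothing granted; a real client would block or return
        const size_t toWrite = frames * sink.frameSize;
        std::memcpy(dst, src, toWrite);
        src += toWrite;
        userSize -= toWrite;
        written += toWrite;
        sink.release(frames);
    }
    return written;
}
```

With a 4-byte frame and 22 bytes of input, the loop writes five whole frames in several grants and leaves the last 2 bytes untouched, so the return value is smaller than `userSize`.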

status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, const struct timespec *requested,

struct timespec *elapsed, size_t *nonContig)

{

// previous and new IAudioTrack sequence numbers are used to detect track re-creation

uint32_t oldSequence = 0;

uint32_t newSequence;

Proxy::Buffer buffer;

status_t status = NO_ERROR;

static const int32_t kMaxTries = 5;

int32_t tryCounter = kMaxTries;

do {

// obtainBuffer() is called with mutex unlocked, so keep extra references to these fields to

// keep them from going away if another thread re-creates the track during obtainBuffer()

sp<AudioTrackClientProxy> proxy;

sp<IMemory> iMem;

{ // start of lock scope

AutoMutex lock(mLock);

……

// Keep the extra references

proxy = mProxy;

iMem = mCblkMemory;

……

} // end of lock scope

buffer.mFrameCount = audioBuffer->frameCount;

// FIXME starts the requested timeout and elapsed over from scratch

status = proxy->obtainBuffer(&buffer, requested, elapsed);

} while (((status == DEAD_OBJECT) || (status == NOT_ENOUGH_DATA)) && (tryCounter-- > 0));

audioBuffer->frameCount = buffer.mFrameCount;

audioBuffer->size = buffer.mFrameCount * mFrameSize;

audioBuffer->raw = buffer.mRaw;

if (nonContig != NULL) {

*nonContig = buffer.mNonContig;

}

return status;

}

//frameworks/av/media/libaudioclient/AudioTrackShared.cpp

__attribute__((no_sanitize("integer")))

status_t ClientProxy::obtainBuffer(Buffer* buffer, const struct timespec *requested,

struct timespec *elapsed)

{

……

struct timespec before;

bool beforeIsValid = false;

audio_track_cblk_t* cblk = mCblk;

bool ignoreInitialPendingInterrupt = true;

for (;;) {

int32_t flags = android_atomic_and(~CBLK_INTERRUPT, &cblk->mFlags);

……

int32_t front;

int32_t rear;

if (mIsOut) {

front = android_atomic_acquire_load(&cblk->u.mStreaming.mFront);

rear = cblk->u.mStreaming.mRear;

} else {

// On the other hand, this barrier is required.

rear = android_atomic_acquire_load(&cblk->u.mStreaming.mRear);

front = cblk->u.mStreaming.mFront;

}

// write to rear, read from front

ssize_t filled = audio_utils::safe_sub_overflow(rear, front);

……

ssize_t adjustableSize = (ssize_t) getBufferSizeInFrames();

ssize_t avail = (mIsOut) ? adjustableSize - filled : filled;

if (avail < 0) {

avail = 0;

} else if (avail > 0) {

// 'avail' may be non-contiguous, so return only the first contiguous chunk

size_t part1;

if (mIsOut) {

rear &= mFrameCountP2 - 1;

part1 = mFrameCountP2 - rear;

} else {

front &= mFrameCountP2 - 1;

part1 = mFrameCountP2 - front;

}

if (part1 > (size_t)avail) {

part1 = avail;

}

if (part1 > buffer->mFrameCount) {

part1 = buffer->mFrameCount;

}

buffer->mFrameCount = part1;

buffer->mRaw = part1 > 0 ?

&((char *) mBuffers)[(mIsOut ? rear : front) * mFrameSize] : NULL;

buffer->mNonContig = avail - part1;

mUnreleased = part1;

status = NO_ERROR;

break;

}

struct timespec remaining;

const struct timespec *ts;

……

int32_t old = android_atomic_and(~CBLK_FUTEX_WAKE, &cblk->mFutex);

if (!(old & CBLK_FUTEX_WAKE)) {

……

errno = 0;

(void) syscall(__NR_futex, &cblk->mFutex,

mClientInServer ? FUTEX_WAIT_PRIVATE : FUTEX_WAIT, old & ~CBLK_FUTEX_WAKE, ts);

……

}

}

end:

……

return status;

}
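
The index arithmetic in the writer branch (`mIsOut`) is the core of the ring buffer, so it is worth isolating. This is a simplified sketch, not the AOSP code: `DemoChunk` and `demoObtainForWrite` are hypothetical, the indices are unsigned so that wraparound is well defined, and the usable size is assumed to equal the full power-of-two capacity (the real code uses `getBufferSizeInFrames()`).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

struct DemoChunk {
    size_t offsetFrames;  // where the chunk starts inside the buffer
    size_t frameCount;    // length of the first contiguous chunk
    size_t nonContig;     // available frames left over after this chunk
};

// Writer-side view: free space starting at rear.
// frameCountP2 must be a power of two so "& (frameCountP2 - 1)" acts as a modulo.
inline DemoChunk demoObtainForWrite(uint32_t front, uint32_t rear,
                                    size_t frameCountP2, size_t requested) {
    assert(frameCountP2 != 0 && (frameCountP2 & (frameCountP2 - 1)) == 0);
    // front and rear only ever grow; their difference is the fill level,
    // and unsigned subtraction stays correct when the counters wrap.
    const size_t filled = static_cast<uint32_t>(rear - front);
    if (filled > frameCountP2) return {0, 0, 0};  // inconsistent indices
    const size_t avail = frameCountP2 - filled;
    const size_t offset = rear & (frameCountP2 - 1);
    size_t part1 = frameCountP2 - offset;         // frames until the end of the array
    if (part1 > avail) part1 = avail;
    if (part1 > requested) part1 = requested;
    return {offset, part1, avail - part1};
}
```

For example, with a capacity of 8, `front = 2` and `rear = 6`, four frames are free but only two of them lie before the end of the array, so the first grant is two frames at offset 6 and the remaining two are reported as non-contiguous.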

void ClientProxy::releaseBuffer(Buffer* buffer)

{

size_t stepCount = buffer->mFrameCount;

……

mUnreleased -= stepCount;

audio_track_cblk_t* cblk = mCblk;

// Both of these barriers are required

if (mIsOut) {

int32_t rear = cblk->u.mStreaming.mRear;

android_atomic_release_store(stepCount + rear, &cblk->u.mStreaming.mRear);

} else {

int32_t front = cblk->u.mStreaming.mFront;

android_atomic_release_store(stepCount + front, &cblk->u.mStreaming.mFront);

}

}
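
The acquire load in `obtainBuffer()` and the release store in `releaseBuffer()` form a pair: each side writes only its own index and publishes it after touching the data. Below is a minimal single-producer/single-consumer sketch of that idea. It is an assumption-laden illustration: `DemoSpscRing` is hypothetical, and `std::atomic` with explicit memory orders stands in for the `android_atomic_*` helpers.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical SPSC ring of ints; indices only ever increase and are masked on use.
template <size_t N>
class DemoSpscRing {
    static_assert(N != 0 && (N & (N - 1)) == 0, "N must be a power of two");

public:
    // Producer side: returns false when the ring is full.
    bool push(int value) {
        const uint32_t rear = mRear.load(std::memory_order_relaxed);    // only the producer writes rear
        const uint32_t front = mFront.load(std::memory_order_acquire);  // pairs with the consumer's release
        if (static_cast<uint32_t>(rear - front) == N) return false;
        mData[rear & (N - 1)] = value;
        mRear.store(rear + 1, std::memory_order_release);  // publish the slot after writing it
        return true;
    }

    // Consumer side: returns false when the ring is empty.
    bool pop(int& value) {
        const uint32_t front = mFront.load(std::memory_order_relaxed);  // only the consumer writes front
        const uint32_t rear = mRear.load(std::memory_order_acquire);    // pairs with the producer's release
        if (rear == front) return false;
        value = mData[front & (N - 1)];
        mFront.store(front + 1, std::memory_order_release);  // hand the slot back to the producer
        return true;
    }

private:
    int mData[N] = {};
    std::atomic<uint32_t> mFront{0};
    std::atomic<uint32_t> mRear{0};
};
```

Because exactly one thread advances `mRear` and exactly one advances `mFront`, no lock is needed in this sketch; the release store guarantees that the data written before it is visible to the side that observes the new index with an acquire load.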

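4. The consumer reads data from shared memory

Finding the currently active track involves a long preparation and call chain; see this blog post for reference.

4.1 Mixing in PlaybackThread

Code path: frameworks/av/services/audioflinger/Threads.cpp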

void AudioFlinger::MixerThread::threadLoop_mix()

{

// mix buffers...

mAudioMixer->process();

mCurrentWriteLength = mSinkBufferSize;

……

}

After process() is called, execution enters the track mixing flow; the code is in frameworks/av/media/libaudioprocessing/AudioMixer.cpp. Here are the declarations related to process():

using process_hook_t = void(AudioMixer::*)();

process_hook_t mHook = &AudioMixer::process__nop;

void invalidate() {

mHook = &AudioMixer::process__validate;

}

void process__validate();

void process__nop();

void process__genericNoResampling();

void process__genericResampling();

void process__oneTrack16BitsStereoNoResampling();

template <int MIXTYPE, typename TO, typename TI, typename TA>

void process__noResampleOneTrack();

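mHook is a pointer to a member function, and it points to a different implementation depending on the scenario. For details, see this blog post and this one. Take process__nop as an example: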

void AudioMixer::process__nop()

{

for (const auto &pair : mGroups) {

const auto &group = pair.second;

const std::shared_ptr<Track> &t = mTracks[group[0]];

memset(t->mainBuffer, 0,

mFrameCount * audio_bytes_per_frame(

t->mMixerChannelCount + t->mMixerHapticChannelCount, t->mMixerFormat));

// now consume data

for (const int name : group) {

const std::shared_ptr<Track> &t = mTracks[name];

size_t outFrames = mFrameCount;

while (outFrames) {

t->buffer.frameCount = outFrames;

t->bufferProvider->getNextBuffer(&t->buffer);

if (t->buffer.raw == NULL) break;

outFrames -= t->buffer.frameCount;

t->bufferProvider->releaseBuffer(&t->buffer);

}

}

}

}
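
The hook mechanism declared earlier (a pointer to a member function that `invalidate()` resets to a validation routine) can be sketched on its own. `DemoMixer` and its selection rule are hypothetical and much simpler than what AudioMixer does; the sketch only shows the dispatch pattern.

```cpp
#include <cassert>
#include <string>

// Hypothetical mixer that swaps its processing routine through a
// pointer-to-member-function, in the style of the mHook declaration above.
class DemoMixer {
public:
    using process_hook_t = void (DemoMixer::*)();

    void process() { (this->*mHook)(); }  // dispatch through the hook
    void invalidate() { mHook = &DemoMixer::processValidate; }
    void setTrackCount(int n) {
        mTrackCount = n;
        invalidate();  // state changed, so the next process() must re-select
    }
    const std::string& lastHook() const { return mLast; }

private:
    // Re-selects the concrete routine, then runs it once.
    // The rule used here (zero tracks means nop) is made up for the demo.
    void processValidate() {
        mHook = (mTrackCount == 0) ? &DemoMixer::processNop
                                   : &DemoMixer::processGeneric;
        (this->*mHook)();
    }
    void processNop() { mLast = "nop"; }
    void processGeneric() { mLast = "generic"; }

    process_hook_t mHook = &DemoMixer::processNop;
    int mTrackCount = 0;
    std::string mLast;
};
```

The benefit of this pattern is that the per-buffer call to `process()` costs one indirect call, while the more expensive decision about which routine fits the current tracks runs only after something changed.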

frameworks/av/services/audioflinger/Tracks.cpp

status_t AudioFlinger::PlaybackThread::Track::getNextBuffer(AudioBufferProvider::Buffer* buffer)

{

ServerProxy::Buffer buf;

size_t desiredFrames = buffer->frameCount;

buf.mFrameCount = desiredFrames;

status_t status = mServerProxy->obtainBuffer(&buf);

buffer->frameCount = buf.mFrameCount;

buffer->raw = buf.mRaw;

if (buf.mFrameCount == 0 && !isStopping() && !isStopped() && !isPaused()) {

mAudioTrackServerProxy->tallyUnderrunFrames(desiredFrames);

} else {

mAudioTrackServerProxy->tallyUnderrunFrames(0);

}

return status;

}

void AudioFlinger::PlaybackThread::Track::releaseBuffer(AudioBufferProvider::Buffer* buffer)

{

interceptBuffer(*buffer);

TrackBase::releaseBuffer(buffer);

}
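
On the consumer side, the mixer keeps calling getNextBuffer()/releaseBuffer() until it has consumed the frames it needs or the provider returns a null buffer (an underrun). The following sketch imitates that contract with hypothetical types (`DemoBuffer`, `DemoProvider`, `demoDrain`); the underrun tally is a simplified version of what `tallyUnderrunFrames()` is called with above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

struct DemoBuffer {
    const void* raw = nullptr;
    size_t frameCount = 0;
};

// Hypothetical provider with the same two-call shape as AudioBufferProvider.
class DemoProvider {
public:
    DemoProvider(size_t framesReady, size_t maxChunk)
        : mFramesReady(framesReady), mMaxChunk(maxChunk) {}

    // Clamps buffer.frameCount to what can be served; raw == nullptr signals underrun.
    void getNextBuffer(DemoBuffer& buffer) {
        const size_t desired = buffer.frameCount;
        const size_t granted = std::min(std::min(desired, mMaxChunk), mFramesReady);
        buffer.frameCount = granted;
        buffer.raw = granted > 0 ? &mDummy : nullptr;
        if (granted == 0) mUnderrunFrames += desired;  // tally the shortfall
    }
    // Marks the granted frames as consumed.
    void releaseBuffer(DemoBuffer& buffer) {
        mFramesReady -= buffer.frameCount;
        buffer = DemoBuffer{};
    }
    size_t underrunFrames() const { return mUnderrunFrames; }

private:
    size_t mFramesReady;
    size_t mMaxChunk;
    size_t mUnderrunFrames = 0;
    char mDummy = 0;  // placeholder address handed out as "data"
};

// Same loop shape as process__nop(): consume up to frameCount frames, stop on underrun.
inline size_t demoDrain(DemoProvider& provider, size_t frameCount) {
    size_t outFrames = frameCount;
    while (outFrames) {
        DemoBuffer buffer;
        buffer.frameCount = outFrames;
        provider.getNextBuffer(buffer);
        if (buffer.raw == nullptr) break;
        outFrames -= buffer.frameCount;
        provider.releaseBuffer(buffer);
    }
    return frameCount - outFrames;  // frames actually consumed
}
```

With 10 frames ready and chunks capped at 4, a first drain of 8 frames succeeds in two chunks; a second drain of 8 gets only the remaining 2 frames and records the other 6 as underrun.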

/frameworks/av/media/libaudioclient/AudioTrackShared.cpp

status_t ServerProxy::obtainBuffer(Buffer* buffer, bool ackFlush)

{

{

audio_track_cblk_t* cblk = mCblk;

// compute number of frames available to write (AudioTrack) or read (AudioRecord),

// or use previous cached value from framesReady(), with added barrier if it omits.

int32_t front;

int32_t rear;

// See notes on barriers at ClientProxy::obtainBuffer()

if (mIsOut) {

flushBufferIfNeeded(); // might modify mFront

rear = getRear();

front = cblk->u.mStreaming.mFront;

} else {

front = android_atomic_acquire_load(&cblk->u.mStreaming.mFront);

rear = cblk->u.mStreaming.mRear;

}

……

// 'availToServer' may be non-contiguous, so return only the first contiguous chunk

size_t part1;

if (mIsOut) {

front &= mFrameCountP2 - 1;

part1 = mFrameCountP2 - front;

} else {

rear &= mFrameCountP2 - 1;

part1 = mFrameCountP2 - rear;

}

if (part1 > availToServer) {

part1 = availToServer;

}

size_t ask = buffer->mFrameCount;

……

}

int32_t AudioTrackServerProxy::getRear() const

{

const int32_t stop = android_atomic_acquire_load(&mCblk->u.mStreaming.mStop);

const int32_t rear = android_atomic_acquire_load(&mCblk->u.mStreaming.mRear);

const int32_t stopLast = mStopLast.load(std::memory_order_acquire);

……

return rear;

}

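5. Summary

After reading through the code for a while, I can now answer some of the questions raised at the beginning of this article. However, I cannot yet answer questions such as "thread safety of the read/write pointers" and "the Futex synchronization mechanism"; I will dig deeper into them when I get the chance.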