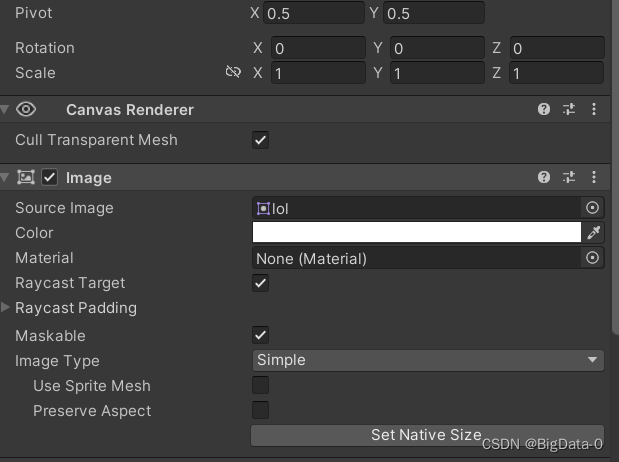

Unity3D: a custom image cannot be assigned to the Source Image field

news 2026/2/11 7:55:32

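This article was submitted by an internet user. The views expressed are solely the author's and do not represent the position of this site. This site only provides information storage services, holds no ownership, and assumes no related legal liability. If you repost this article, please cite the source: http://www.coloradmin.cn/o/1280809.html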

如若内容造成侵权/违法违规/事实不符,请联系多彩编程网进行投诉反馈,一经查实,立即删除!相关文章

QML中常见布局方法

目录 引言常见方法锚定(anchors)定位器Row、ColumnGridFlow 布局管理器RowLayout、ColumnLayoutGridLayoutStackLayout 总结 引言

UI界面由诸多元素构成,如Label、Button、Input等等,各种元素需要按照一定规律进行排布才能提高界…

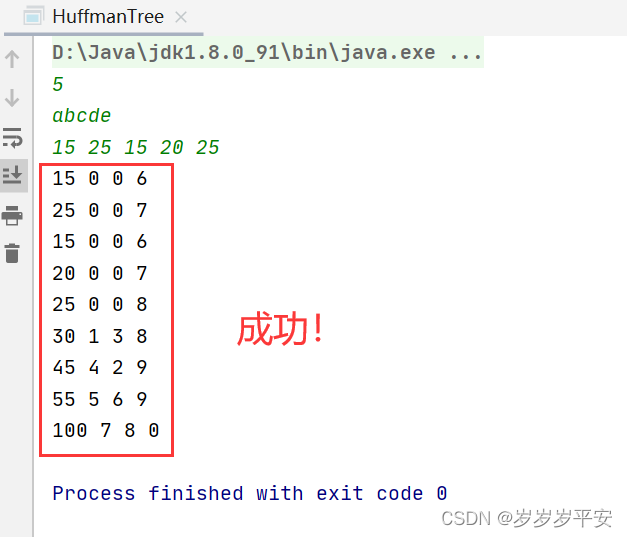

Java数据结构之《构造哈夫曼树》题目

一、前言: 这是怀化学院的:Java数据结构中的一道难度中等(偏难理解)的一道编程题(此方法为博主自己研究,问题基本解决,若有bug欢迎下方评论提出意见,我会第一时间改进代码,谢谢!) 后面其他编程题…

kgma转换flac格式、酷狗下载转换车载模式能听。

帮朋友下载几首歌到U盘里、发现kgma格式不能识别出来,这是酷狗加密过的格式,汽车不识别,需要转换成mp3或者flac格式,网上的一些辣鸡软件各种收费、限制、广告,后来发现一个宝藏网站,可以在线免费转换成flac…

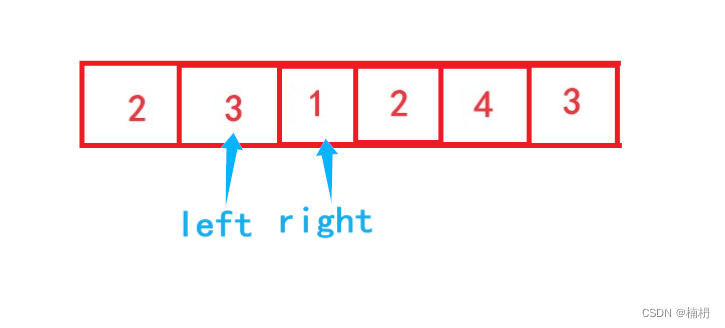

长度最小的子数组(Java详解)

目录

题目描述

题解

思路分析

暴力枚举代码

滑动窗口代码 题目描述

给定一个含有 n 个正整数的数组和一个正整数 target 。

找出该数组中满足其和 ≥ target 的长度最小的 连续子数组 [numsl, numsl1, ..., numsr-1, numsr] ,并返回其长度。如果不存在符合条…

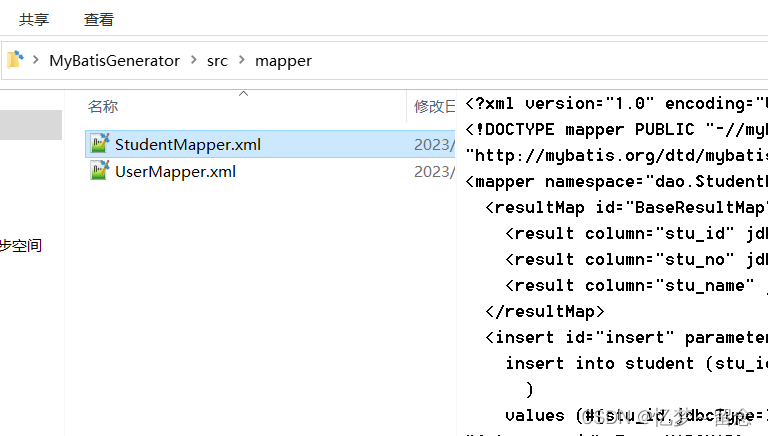

MyBatis自动生成代码(扩展)

可以利用Mybatis-Generator来帮我们自动生成文件

1、自动生成实体类 可以帮助我们针对数据库中的每张表自动生成实体类

2、自动生成SQL映射文件 可以帮助我们针对每张表自动生成SQL配置文件,配置文件里已经定义好对于该表的增删改查的SQL以及映射

3、自动生成接…

数据层融合、特征层融合和决策层融合是三种常见的数据融合方式!!

文章目录 一、数据融合的方式有什么二、数据层融合三、特征层融合:四、决策层融合: 一、数据融合的方式有什么

数据层融合、特征层融合和决策层融合是三种常见的数据融合方式。

二、数据层融合

定义:数据层融合也称像素级融合,…

Chat-GPT原理

GPT原理

核心是基于Transformer 架构

英文原文:

Transformers are based on the “attention mechanism,” which allows the model to pay more attention to some inputs than others, regardless of where they show up in the input sequence. For exampl…

10 分钟解释 StyleGAN

一、说明 G在过去的几年里,生成对抗网络一直是生成内容的首选机器学习技术。看似神奇地将随机输入转换为高度详细的输出,它们已在生成图像、生成音乐甚至生成药物方面找到了应用。 StyleGAN是一种真正推动 GAN 最先进技术向前发展的 GAN 类型。当Karras …

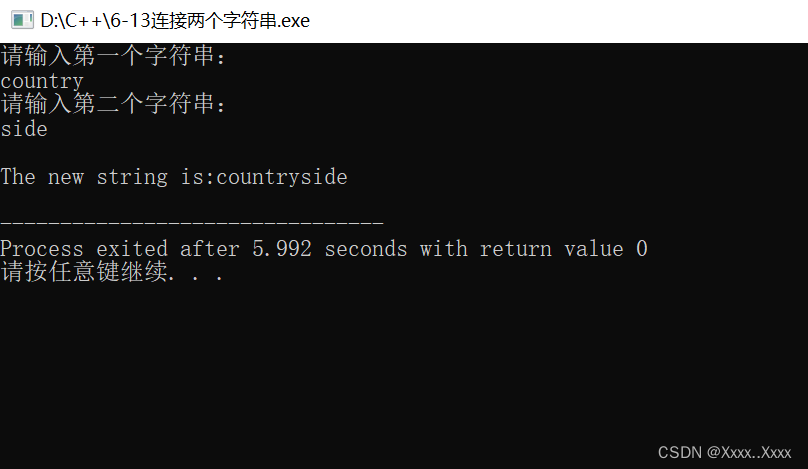

6-13连接两个字符串

#include<stdio.h>

int main(){int i0,j0;char s1[222],s2[333];printf("请输入第一个字符串:\n");gets(s1);//scanf("%s",s1);printf("请输入第二个字符串:\n");gets(s2);while(s1[i]!\0)i;while(s2[j]!\0)s1[i]s2…

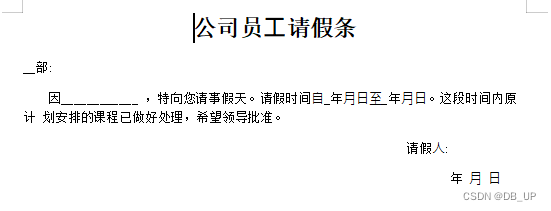

python--自动化办公(Word)

python自动化办公之—Word python-docx库

1、安装python-docx库

pip install python-docx2、基本语法

1、打开文档

document Document()

2、加入标题

document.add_heading(总标题,0)

document.add_heading(⼀级标题,1)

document.add_heading(⼆级标题,2)

3、添加文本

para…

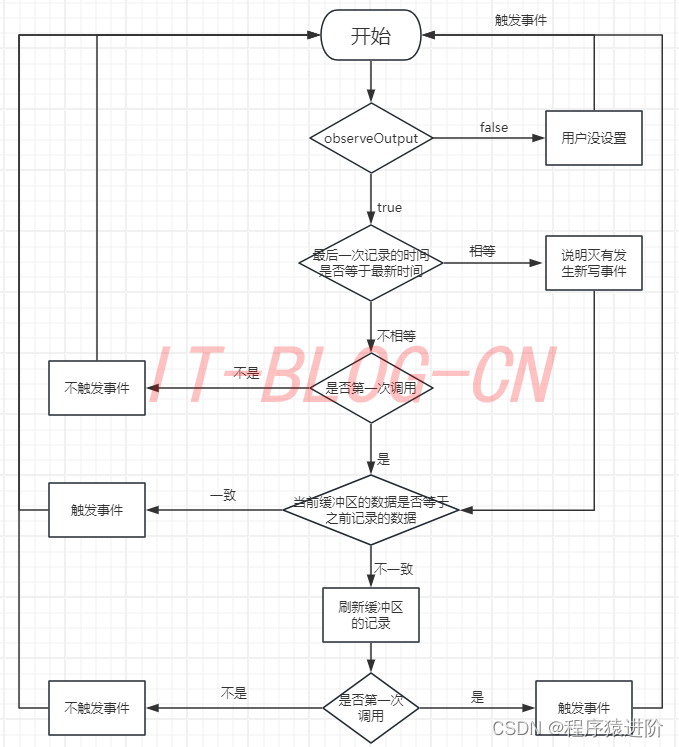

IdleStateHandler 心跳机制源码详解

优质博文:IT-BLOG-CN

一、心跳机制

Netty支持心跳机制,可以检测远程服务端是否存活或者活跃。心跳是在TCP长连接中,客户端和服务端定时向对方发送数据包通知对方自己还在线,保证连接的有效性的一种机制。在服务器和客户端之间一…

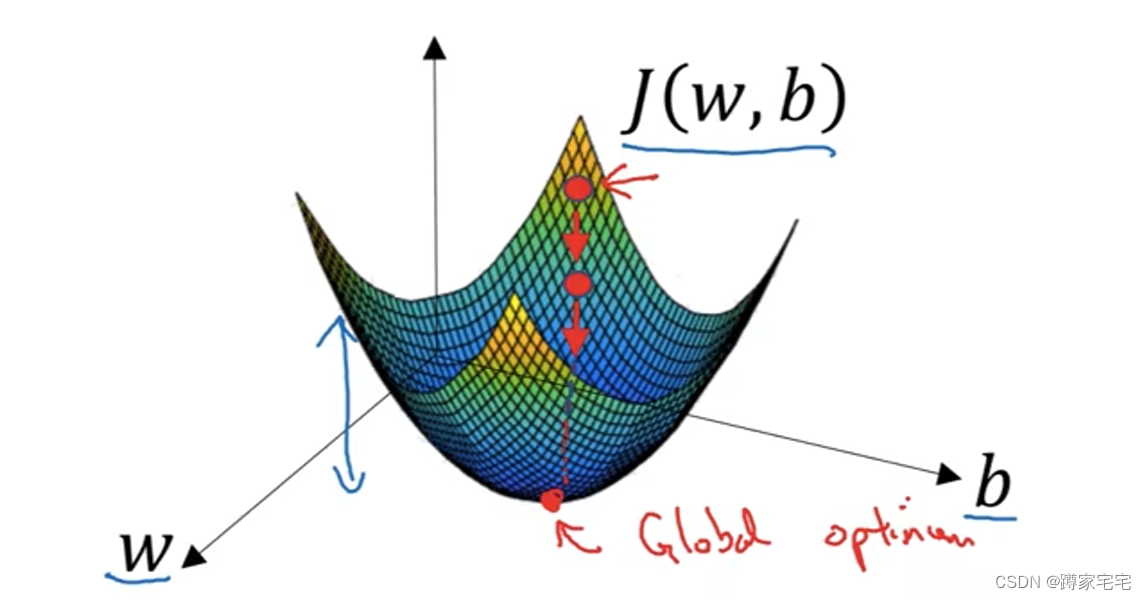

深度学习记录--梯度下降法

什么是梯度下降法?

梯度下降法是用来求解成本函数cost函数中使得J(w,b)函数值最小的参数(w,b) 梯度下降法的实现

通过对参数w,b的不断更新迭代,使J(w,b)的值趋于局部最小值或者全局最小值

如何进行更新?

以w为例:迭代公式 ww-…

Go连接mysql数据库

package main

import ("database/sql""fmt"_ "github.com/go-sql-driver/mysql"

)

//go连接数据库示例

func main() {// 数据库信息dsn : "root:roottcp(192.168.169.11:3306)/sql_test"//连接数据库 数据库类型mysql,以及数据库信息d…

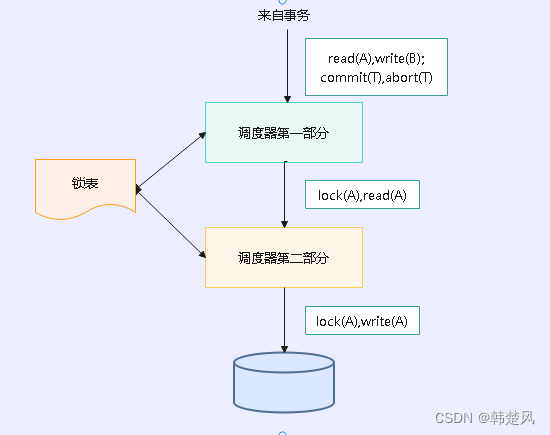

【数据库】基于封锁的数据库调度器,以及等待锁处理的优先级策略

封锁调度器的体系结构 专栏内容: 手写数据库toadb 本专栏主要介绍如何从零开发,开发的步骤,以及开发过程中的涉及的原理,遇到的问题等,让大家能跟上并且可以一起开发,让每个需要的人成为参与者。 本专栏会…

LeedCode刷题---子数组问题

顾得泉:个人主页

个人专栏:《Linux操作系统》 《C/C》 《LeedCode刷题》

键盘敲烂,年薪百万! 一、最大子数组和

题目链接:最大子数组和

题目描述 给你一个整数数组 nums ,请你找出一个具有最大和的连…

开发猿的平平淡淡周末---2023/12/3

2023/12/3 天气晴 温度适宜

AM 早安八点多的世界,起来舒展了下腰,阳光依旧明媚,给平淡的生活带来了一丝暖意 日常操作,喂鸡,时政,洗漱,恰饭,肝会儿游戏 看会儿手机 ___看累…

“此应用专为旧版android打造,因此可能无法运行”,问题解决方案

当用户在Android P系统上打开某些应用程序时,可能会弹出一个对话框,提示内容为:“此应用专为旧版Android打造,可能无法正常运行。请尝试检查更新或与开发者联系”。 随着Android平台的发展,每个新版本通常都会引入新的…

[英语学习][6][Word Power Made Easy]的精读与翻译优化

[序言]

针对第18页的阅读, 进行第二次翻译优化以及纠错, 这次译者的翻译出现的严重问题: 没有考虑时态的变化导致整个翻译跟上下文脱节, 然后又有偷懒的嫌疑, 翻译得很随意.

[英文学习的目标]

提升自身的英语水平, 对日后编程技能的提升有很大帮助. 希望大家这次能学到东西,…

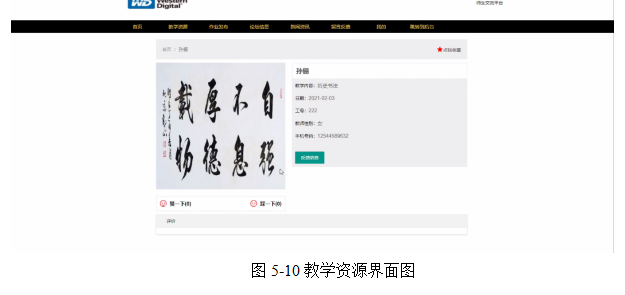

基于SSM的职业高中智慧作业试题系统设计

末尾获取源码 开发语言:Java Java开发工具:JDK1.8 后端框架:SSM 前端:JSP 数据库:MySQL5.7和Navicat管理工具结合 服务器:Tomcat8.5 开发软件:IDEA / Eclipse 是否Maven项目:是 一、…

基于Java SSM框架实现师生交流答疑作业系统项目【项目源码+论文说明】计算机毕业设计

基于java的SSM框架实现师生交流答疑作业系统演示 摘要

在新发展的时代,众多的软件被开发出来,给用户带来了很大的选择余地,而且人们越来越追求更个性的需求。在这种时代背景下,人们对师生交流平台越来越重视,更好的实…

![[英语学习][6][Word Power Made Easy]的精读与翻译优化](https://img-blog.csdnimg.cn/direct/16c48dd5b27045869c59ac0c3d73561e.jpeg)