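Automatically extracting buildings and other man-made objects from high-resolution remote sensing imagery is both an active research topic and a difficult problem in high-resolution remote sensing image processing and understanding. In this article, we will learn how to use PyTorch to implement intelligent building extraction from remote sensing imagery.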

Intelligent extraction workflow

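Deep-learning-based building extraction from remote sensing imagery proceeds in four steps: first build a dataset, then construct a deep neural network, then let the network learn building features from that dataset, and finally use the trained network to extract buildings.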

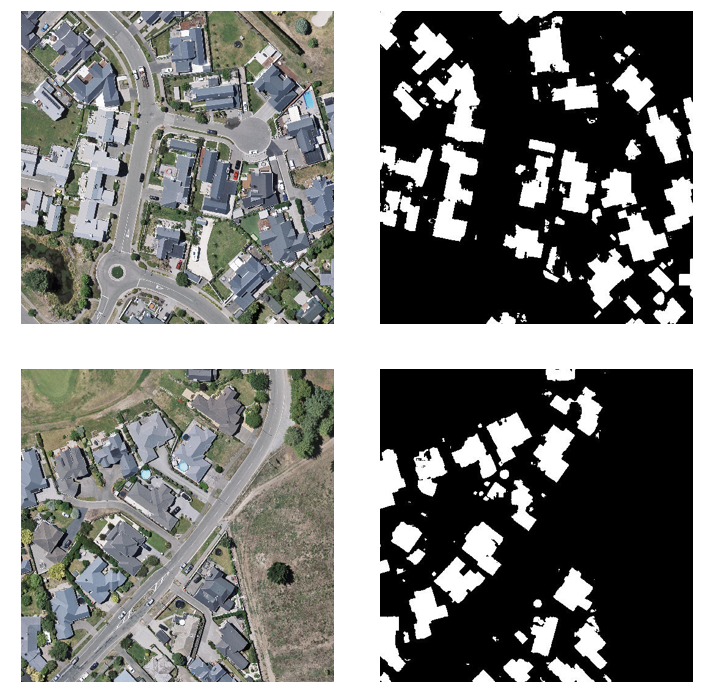

Dataset selection

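This article uses the WHU-Building-DataSets [1]. The dataset contains more than 220,000 individual buildings extracted from aerial imagery of Christchurch, New Zealand. The imagery is cut into 8,189 tiles of 512×512 pixels, split into a training set (130,500 buildings), a validation set (14,500 buildings) and a test set (42,000 buildings).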
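To feed these tiles to PyTorch, a `Dataset` that pairs each image tile with its label mask is sufficient. The following is a minimal sketch that assumes a hypothetical layout: each split has an image folder and a label folder containing identically named files, and label pixels are 0 for background and non-zero (e.g. 255) for buildings. Check this against the layout of the files you actually download. `BuildingDataset` is our own illustrative name.

```python
import os

import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset


class BuildingDataset(Dataset):
    """Pairs each RGB tile in `image_dir` with the same-named mask in `label_dir`.

    Hypothetical layout (an assumption, not the official one):
    image_dir/xxx.tif and label_dir/xxx.tif, mask pixels 0 = background,
    non-zero = building.
    """

    def __init__(self, image_dir, label_dir):
        self.image_dir = image_dir
        self.label_dir = label_dir
        self.names = sorted(os.listdir(image_dir))

    def __len__(self):
        return len(self.names)

    def __getitem__(self, idx):
        name = self.names[idx]
        with Image.open(os.path.join(self.image_dir, name)) as img:
            # HWC uint8 -> HWC float32 in [0, 1]
            image = np.asarray(img.convert("RGB"), dtype=np.float32) / 255.0
        with Image.open(os.path.join(self.label_dir, name)) as lbl:
            # Any non-zero label pixel counts as building
            mask = np.asarray(lbl.convert("L")) > 0
        image = torch.from_numpy(image).permute(2, 0, 1).contiguous()  # (3, H, W)
        mask = torch.from_numpy(mask.astype(np.float32)).unsqueeze(0)  # (1, H, W)
        return image, mask
```

Wrapped in a `torch.utils.data.DataLoader`, this yields batches of shape (N, 3, 512, 512) for images and (N, 1, 512, 512) for masks.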

Network construction

Here we build the network on the most basic architecture, UNet.

```python
import torch
import torch.nn as nn


class DoubleConv(nn.Module):
    """Two 3x3 convolutions, each followed by ReLU; padding=1 keeps H and W unchanged."""

    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.double_conv = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        return self.double_conv(x)


class UNet(nn.Module):
    """Four-level UNet; input H and W must be divisible by 16 (four 2x max-poolings)."""

    def __init__(self, in_channels, out_channels):
        super().__init__()
        # Encoder: channels double while the spatial size halves at each level
        self.down1 = DoubleConv(in_channels, 32)
        self.pool1 = nn.MaxPool2d(2)
        self.down2 = DoubleConv(32, 64)
        self.pool2 = nn.MaxPool2d(2)
        self.down3 = DoubleConv(64, 128)
        self.pool3 = nn.MaxPool2d(2)
        self.down4 = DoubleConv(128, 256)
        self.pool4 = nn.MaxPool2d(2)

        # Bottleneck at 1/16 of the input resolution
        self.middle = DoubleConv(256, 512)

        # Decoder: each transposed conv upsamples 2x; the following DoubleConv
        # receives the upsampled features concatenated with the matching encoder output
        self.up1 = nn.ConvTranspose2d(512, 256, kernel_size=2, stride=2)
        self.upconv1 = DoubleConv(512, 256)
        self.up2 = nn.ConvTranspose2d(256, 128, kernel_size=2, stride=2)
        self.upconv2 = DoubleConv(256, 128)
        self.up3 = nn.ConvTranspose2d(128, 64, kernel_size=2, stride=2)
        self.upconv3 = DoubleConv(128, 64)
        self.up4 = nn.ConvTranspose2d(64, 32, kernel_size=2, stride=2)
        self.upconv4 = DoubleConv(64, 32)

        # 1x1 conv maps 32 feature channels to per-pixel class logits
        self.out_conv = nn.Conv2d(32, out_channels, kernel_size=1)

    def forward(self, x):
        # Encoder path
        down1 = self.down1(x)
        pool1 = self.pool1(down1)
        down2 = self.down2(pool1)
        pool2 = self.pool2(down2)
        down3 = self.down3(pool2)
        pool3 = self.pool3(down3)
        down4 = self.down4(pool3)
        pool4 = self.pool4(down4)

        middle = self.middle(pool4)

        # Decoder path with skip connections (concatenation along the channel dim)
        up1 = self.up1(middle)
        concat1 = torch.cat([down4, up1], dim=1)
        upconv1 = self.upconv1(concat1)

        up2 = self.up2(upconv1)
        concat2 = torch.cat([down3, up2], dim=1)
        upconv2 = self.upconv2(concat2)

        up3 = self.up3(upconv2)
        concat3 = torch.cat([down2, up3], dim=1)
        upconv3 = self.upconv3(concat3)

        up4 = self.up4(upconv3)
        concat4 = torch.cat([down1, up4], dim=1)
        upconv4 = self.upconv4(concat4)

        out = self.out_conv(upconv4)
        return out
```
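Because the network downsamples four times with 2×2 max pooling and then upsamples back, the skip-connection concatenations only line up when the input height and width are divisible by 16. The 512×512 tiles satisfy this. For images of other sizes, one option is to zero-pad to the next multiple of 16, predict, and crop back. The sketch below assumes a single-channel logit output (such as `UNet(3, 1)`) and a 0.5 probability threshold. `predict_mask` is a hypothetical helper, not part of the original code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


@torch.no_grad()
def predict_mask(model: nn.Module, image: torch.Tensor,
                 threshold: float = 0.5, multiple: int = 16) -> torch.Tensor:
    """Return a 0/1 building mask (N, 1, H, W) for an (N, C, H, W) image batch.

    Assumes the model outputs one logit channel per pixel.
    """
    model.eval()  # switches the model to inference mode (modifies it in place)
    h, w = image.shape[-2:]
    # Zero-pad bottom/right so H and W become multiples of `multiple`
    pad_h = (multiple - h % multiple) % multiple
    pad_w = (multiple - w % multiple) % multiple
    padded = F.pad(image, (0, pad_w, 0, pad_h))
    logits = model(padded)[..., :h, :w]  # crop back to the original size
    return (torch.sigmoid(logits) > threshold).float()
```

Zero padding is chosen because it works for any input size. Reflect padding may give cleaner borders but requires each padding amount to be smaller than the corresponding image dimension.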

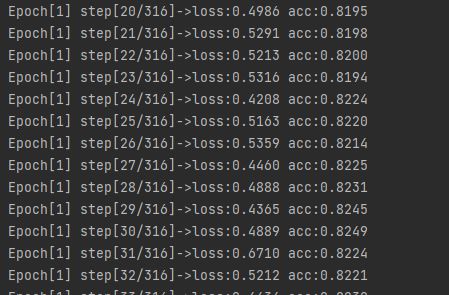

Network training
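The article does not show its training code. The following is a hedged sketch, not the author's configuration. It assumes a single-channel output (`UNet(3, 1)`), 0/1 float masks, and `nn.BCEWithLogitsLoss` as the loss, which is our choice. One training epoch that also tracks the loss and pixel accuracy (the two quantities in the curves further below) could look like this. `train_one_epoch` is a hypothetical name.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader


def train_one_epoch(model: nn.Module, loader: DataLoader,
                    optimizer: torch.optim.Optimizer, device: str = "cpu"):
    """Run one training epoch and return (mean loss per sample, pixel accuracy)."""
    criterion = nn.BCEWithLogitsLoss()  # takes raw logits and 0/1 float targets
    model.train()
    total_loss, correct, total = 0.0, 0, 0
    for images, masks in loader:
        images, masks = images.to(device), masks.to(device)
        optimizer.zero_grad()
        logits = model(images)
        loss = criterion(logits, masks)
        loss.backward()
        optimizer.step()
        total_loss += loss.item() * images.size(0)
        # logit > 0 is equivalent to sigmoid(logit) > 0.5
        preds = (logits.detach() > 0).float()
        correct += (preds == masks).sum().item()
        total += masks.numel()
    return total_loss / len(loader.dataset), correct / total
```

Calling it once per epoch, with an optimizer such as `torch.optim.Adam(model.parameters(), lr=1e-4)`, and recording both return values produces loss and accuracy curves. The learning rate is an illustrative value, not one taken from the article.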

Training results

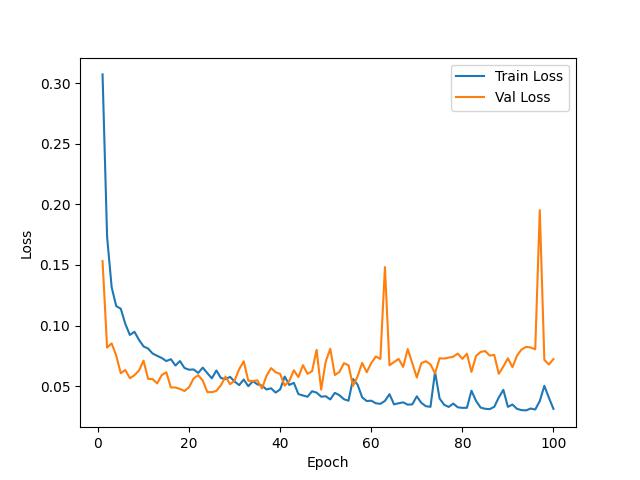

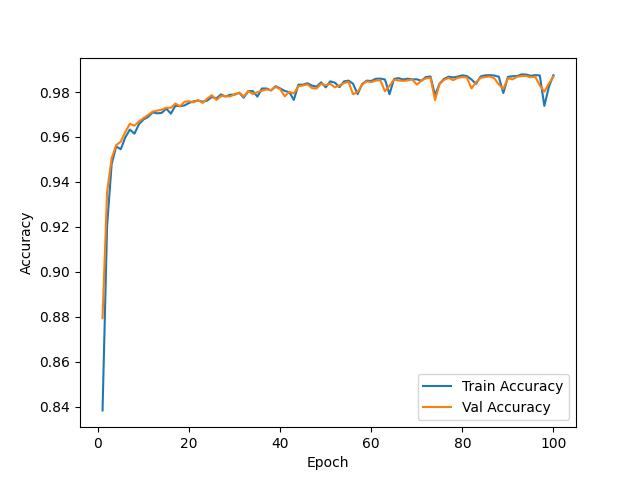

After training, the loss and accuracy curves are shown below.

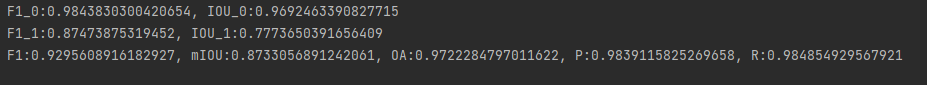

Test accuracy

We evaluated IoU, F1, OA (overall accuracy), Precision and Recall on the test set. The results are as follows.
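All five metrics can be computed from the pixel-level confusion counts of the building class: true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN). The following is a minimal sketch that assumes 0/1 prediction and label masks of the same shape. The function name `binary_metrics` and the small `eps` term, which avoids division by zero, are our own additions.

```python
import torch


def binary_metrics(pred: torch.Tensor, target: torch.Tensor, eps: float = 1e-7) -> dict:
    """IoU, F1, OA, Precision and Recall for the building (positive) class."""
    pred, target = pred.bool(), target.bool()
    tp = (pred & target).sum().item()
    fp = (pred & ~target).sum().item()
    fn = (~pred & target).sum().item()
    tn = (~pred & ~target).sum().item()
    precision = tp / (tp + fp + eps)  # share of predicted building pixels that are correct
    recall = tp / (tp + fn + eps)     # share of true building pixels that are found
    return {
        "IoU": tp / (tp + fp + fn + eps),
        "F1": 2 * precision * recall / (precision + recall + eps),
        "OA": (tp + tn) / (tp + fp + fn + tn + eps),
        "Precision": precision,
        "Recall": recall,
    }
```

The counts are summed over every pixel passed in. Accumulating them over the whole test set before dividing gives a different number than averaging per-tile scores, and the article does not state which convention it used.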

Test results

Summary

That's all for this post. If you found it interesting, feel free to follow for more.

References

[1] WHU-Building-DataSets: https://study.rsgis.whu.edu.cn/pages/download/building_dataset.html

