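GCN (Graph Convolutional Network) is a deep learning model for graph-structured data. It propagates information and learns node features through convolution operations defined over the graph structure.

The core idea of GCN is to update each node's representation using the features of its neighbors. Each layer aggregates information from a node's immediate (one-hop) neighbors, so stacking layers gradually brings in information from farther away in the graph: after k layers, a node's representation depends on its k-hop neighborhood.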

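Concretely, a GCN computes as follows:

- Initialize the node representations, usually with a node feature matrix (one row per node).

- Apply graph convolution layers one after another, each of which updates the node representations. In each layer, every node aggregates the features of its neighbors (and, in the standard formulation, its own features via a self-loop), then transforms the aggregated features with a learned weight matrix and a nonlinearity.

- Stack a fixed number of such layers (often just two). Rather than iterating until the representations reach a steady state, each layer has its own learned weights, and the number of layers determines how many hops of neighborhood information each node sees.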
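The steps above can be sketched with the widely used GCN propagation rule `H_next = ReLU(D^-1/2 (A + I) D^-1/2 · H · W)`, where `A + I` is the adjacency matrix with self-loops added and `D` is its diagonal degree matrix. The NumPy snippet below is a minimal illustration under that assumption; the toy 3-node graph, the random weights, and the function names are made up for demonstration.

```python
import numpy as np


def normalize_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a dense adjacency matrix."""
    adj_tilde = adj + np.eye(adj.shape[0])      # add self-loops
    deg = adj_tilde.sum(axis=1)                 # degree of each node (incl. self-loop)
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    return d_inv_sqrt @ adj_tilde @ d_inv_sqrt


def gcn_layer(a_hat, h, w):
    """One propagation step: ReLU(A_hat @ H @ W)."""
    return np.maximum(a_hat @ h @ w, 0.0)


# Toy path graph 0 - 1 - 2 with 2 input features per node
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [1.0, 1.0]])

rng = np.random.default_rng(0)
w1 = rng.normal(size=(2, 4))   # layer 1 weights: 2 -> 4 features
w2 = rng.normal(size=(4, 3))   # layer 2 weights: 4 -> 3 features

a_hat = normalize_adjacency(adj)
h1 = gcn_layer(a_hat, features, w1)   # each node mixes in its 1-hop neighbors
h2 = gcn_layer(a_hat, h1, w2)         # after two layers: 2-hop neighborhood
print(h2.shape)
```

In a real classifier the last layer would typically use softmax instead of ReLU; ReLU is used for both layers here only to keep the sketch short.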

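Advantages of GCN include:

- It handles graphs of varying size and irregular structure, and applies to many kinds of graph data, such as social networks, recommender systems, and bioinformatics.

- It captures the relationships between nodes together with the surrounding graph structure, which makes the learned node representations more expressive.

- It can be trained end to end, without hand-crafted features.

GCN is used for tasks such as node classification, graph classification, and link prediction.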
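To make the three task types a bit more concrete: once a GCN has produced an embedding for every node, the tasks differ mainly in what is read out from those embeddings. The NumPy sketch below is illustrative only; the random "embeddings" stand in for GCN output, and the mean-pooling readout and dot-product edge score are common simple choices assumed here, not the only options. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
num_nodes, emb_dim, num_classes = 5, 8, 3
node_emb = rng.normal(size=(num_nodes, emb_dim))   # stand-in for GCN node embeddings
w_cls = rng.normal(size=(emb_dim, num_classes))    # hypothetical classifier weights


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)          # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def link_score(u, v):
    """Probability-like score for a candidate edge (u, v): sigmoid of a dot product."""
    return 1.0 / (1.0 + np.exp(-node_emb[u] @ node_emb[v]))


# Node classification: one class distribution per node
node_probs = softmax(node_emb @ w_cls)                # shape (5, 3)

# Graph classification: pool all node embeddings into one graph-level vector
graph_probs = softmax(node_emb.mean(axis=0) @ w_cls)  # shape (3,)

# Link prediction: score a pair of nodes
print(node_probs.shape, graph_probs.shape, link_score(0, 1))
```

The rest of this post only uses the first pattern (node classification).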

Below is an example implementation in TensorFlow/Keras:

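```python
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


class GraphConvLayer(layers.Layer):
    """Graph convolution: activation(adjacency_matrix @ inputs @ kernel)."""

    def __init__(self, output_dim, activation=None):
        super().__init__()
        self.output_dim = output_dim
        self.activation = keras.activations.get(activation)

    def build(self, input_shape):
        # Learnable weight matrix: (input feature dim, output feature dim)
        self.kernel = self.add_weight(
            name="kernel",
            shape=(input_shape[-1], self.output_dim),
            initializer="glorot_uniform",
            trainable=True,
        )

    def call(self, inputs, adjacency_matrix):
        adjacency_matrix = tf.cast(adjacency_matrix, tf.float32)
        # Aggregate neighbor features, then apply the linear transform
        output = tf.matmul(adjacency_matrix, inputs)
        output = tf.matmul(output, self.kernel)
        return self.activation(output)


class GCNModel(keras.Model):
    def __init__(self, num_classes, adjacency_matrix):
        super().__init__()
        # The normalized adjacency matrix is fixed and shared by all layers
        self.adjacency_matrix = tf.constant(adjacency_matrix, dtype=tf.float32)
        self.graph_conv1 = GraphConvLayer(64, activation="relu")
        # Output layer produces class probabilities
        self.graph_conv2 = GraphConvLayer(num_classes, activation="softmax")

    def call(self, inputs):
        x = self.graph_conv1(inputs, self.adjacency_matrix)
        return self.graph_conv2(x, self.adjacency_matrix)


# Assumed to be prepared beforehand (not defined in this snippet):
#   A_hat       - normalized N x N adjacency matrix (NumPy array)
#   x_train     - N x F node feature matrix
#   y_train     - N x num_classes one-hot labels
#   num_classes - number of classes
model = GCNModel(num_classes, A_hat)
model.compile(optimizer=keras.optimizers.Adam(learning_rate=0.01),
              loss=keras.losses.CategoricalCrossentropy(),
              metrics=['accuracy'])
# The adjacency matrix covers every node, so the whole graph must be a single batch
model.fit(x_train, y_train, epochs=10, batch_size=x_train.shape[0], shuffle=False)
```

The main goal of this post is a first attempt at building a text classification model on top of GCN. The dataset chosen here is a paper citation dataset (Cora), shown below: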

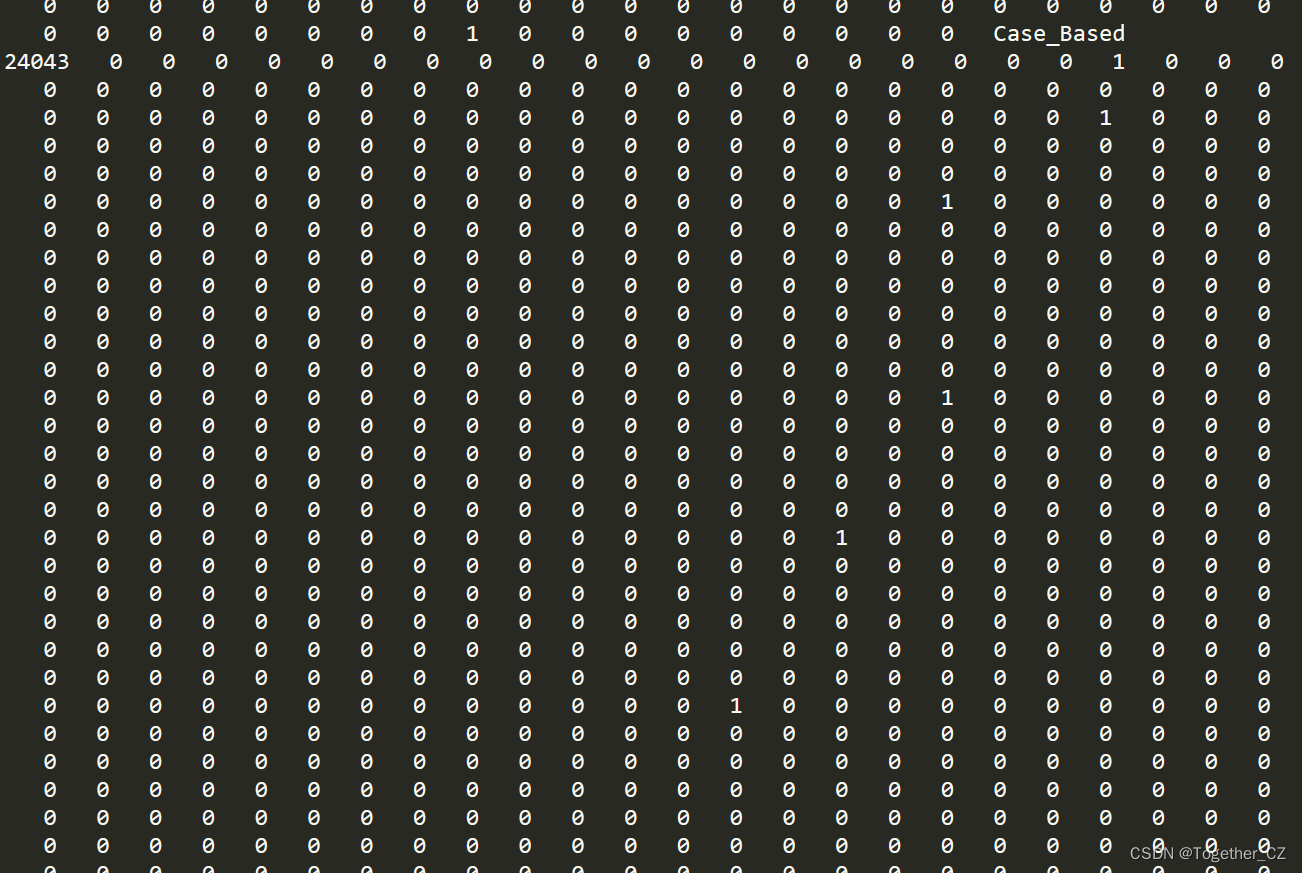

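The citation relationships between nodes are as follows:

The paper content data is as follows:

As you can see, the paper content has already been converted into vectors by a bag-of-words model, so it can be used directly.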
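For readers unfamiliar with this representation: in a binary bag-of-words vector, each dimension corresponds to one vocabulary word and is 1 if that word appears in the document and 0 otherwise (in Cora, the vocabulary has 1,433 words). A tiny sketch with a made-up vocabulary and made-up abstracts:

```python
import numpy as np

# Hypothetical vocabulary and documents, for illustration only
vocab = ["graph", "network", "genetic", "reward", "rule"]
docs = [
    "graph network for graph learning",
    "genetic algorithm with rule mutation",
    "reward driven network policy",
]

word_index = {w: i for i, w in enumerate(vocab)}
X = np.zeros((len(docs), len(vocab)), dtype=np.float32)
for row, doc in enumerate(docs):
    for token in doc.split():
        if token in word_index:               # ignore out-of-vocabulary words
            X[row, word_index[token]] = 1.0   # binary presence, not word counts

print(X)
```

Note that "graph" appears twice in the first document but still yields a 1, since only presence is recorded.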

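The papers are divided into the following seven classes:

- Case-Based

- Genetic Algorithms

- Neural Networks

- Probabilistic Methods

- Reinforcement Learning

- Rule Learning

- Theory

Now for the implementation. First, load the dataset: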

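```python
# load_data and get_splits are helper functions not shown in this post
# (assumption: they come from the keras-gcn utilities)


def load4Split():
    """
    Load the data and split it into train/validation/test sets.
    """
    X, A, y = load_data(dataset='cora')
    print("X_shape: ", X.shape)
    print("A_shape: ", A.shape)
    print("y_shape: ", y.shape)
    y_train, y_val, y_test, idx_train, idx_val, idx_test, train_mask = get_splits(y)
    return y_train, y_val, y_test, idx_train, idx_val, idx_test, train_mask, X, A, y


y_train, y_val, y_test, idx_train, idx_val, idx_test, train_mask, X, A, y = load4Split()
```

Next, scale the features and build the graph, as follows: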

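```python
from keras.layers import Input

# Row-normalize the features so that each node's feature vector sums to 1
X /= X.sum(1).reshape(-1, 1)

print('Using local pooling filters...')
# preprocess_adj (helper not shown) normalizes the adjacency matrix;
# SYM_NORM is a flag defined elsewhere that selects symmetric normalization
A_ = preprocess_adj(A, SYM_NORM)
support = 1
graph = [X, A_]
# A single sparse input that will carry the normalized adjacency matrix
G = [Input(shape=(None, None), batch_shape=(None, None), sparse=True)]

print("G: ", G)
print("graph: ", graph)
```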

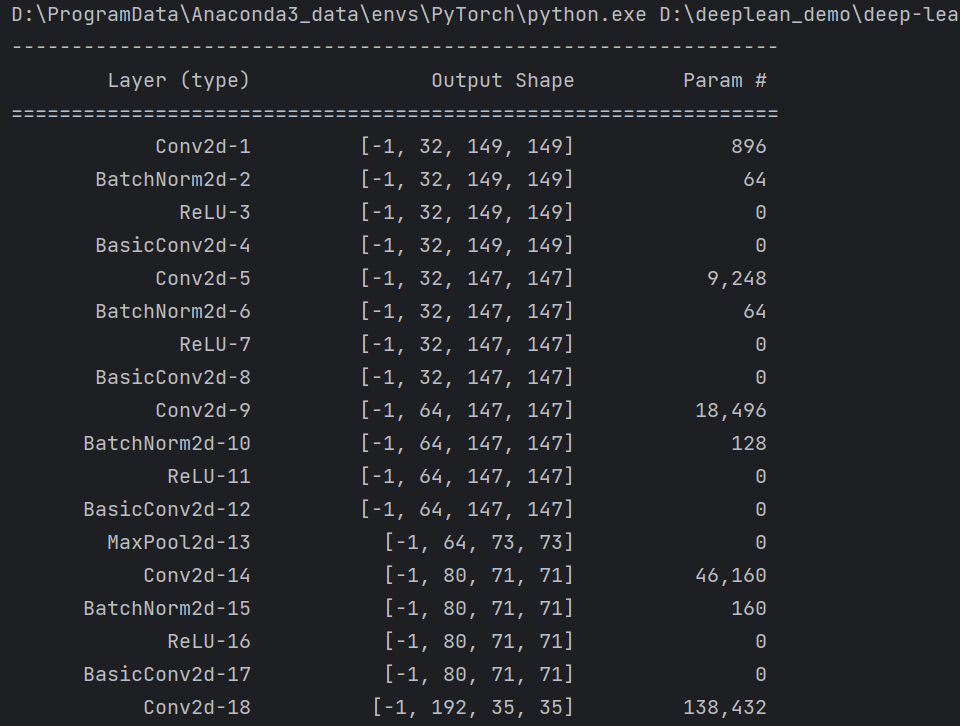
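The two preprocessing steps above are row-normalizing the bag-of-words features so each row sums to 1, and normalizing the adjacency matrix. The source does not show `preprocess_adj`; the sketch below assumes it adds self-loops and applies the symmetric normalization `D^-1/2 (A + I) D^-1/2` when `SYM_NORM` is true, which is the usual GCN choice. It uses SciPy sparse matrices, and the function names are hypothetical.

```python
import numpy as np
import scipy.sparse as sp


def row_normalize(features):
    """Scale each row to sum to 1 (all-zero rows stay zero)."""
    row_sum = np.asarray(features.sum(axis=1)).flatten()
    inv = np.zeros_like(row_sum, dtype=float)
    nonzero = row_sum > 0
    inv[nonzero] = 1.0 / row_sum[nonzero]
    return sp.diags(inv) @ features


def sym_normalize_adj(adj):
    """Return D^-1/2 (A + I) D^-1/2 as a sparse CSR matrix."""
    adj = adj + sp.eye(adj.shape[0])          # add self-loops
    deg = np.asarray(adj.sum(axis=1)).flatten()
    d_inv_sqrt = sp.diags(1.0 / np.sqrt(deg))
    return (d_inv_sqrt @ adj @ d_inv_sqrt).tocsr()


# Toy example: 3 nodes, 3 binary features, path graph 0 - 1 - 2
features = sp.csr_matrix([[1, 1, 0], [0, 1, 1], [1, 1, 1]], dtype=float)
adj = sp.csr_matrix([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)

X_norm = row_normalize(features)
A_norm = sym_normalize_adj(adj)
print(X_norm.toarray())
print(A_norm.toarray())
```

Keeping both matrices sparse matters on real data: the Cora adjacency matrix is large but has very few nonzero entries.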

Next, initialize and build the model:

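```python
from keras.layers import Input, Dropout
from keras.models import Model
from keras.optimizers import Adam
from keras.regularizers import l2
# Assumption: GraphConvolution is the layer from the keras-gcn (kegra) package
from kegra.layers.graph import GraphConvolution

X_in = Input(shape=(X.shape[1],))

# Two graph convolution layers with dropout; G carries the normalized adjacency matrix
H = Dropout(0.5)(X_in)
H = GraphConvolution(16, support, activation='relu', kernel_regularizer=l2(5e-4))([H]+G)
H = Dropout(0.5)(H)
Y = GraphConvolution(y.shape[1], support, activation='softmax')([H]+G)

# Compile
model = Model(inputs=[X_in]+G, outputs=Y)
# Older Keras versions take lr=; newer versions expect learning_rate=
model.compile(loss='categorical_crossentropy', optimizer=Adam(lr=0.01))
```

Once the model is built, training can begin: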

for epoch in range(1, NB_EPOCH+1):

# Log wall-clock time

t = time.time()

# Single training iteration (we mask nodes without labels for loss calculation)

model.fit(graph, y_train, sample_weight=train_mask,

batch_size=A.shape[0], epochs=1, shuffle=False, verbose=0)

# Predict on full dataset

preds = model.predict(graph, batch_size=A.shape[0])

# Train / validation scores

train_val_loss, train_val_acc = evaluate_preds(preds, [y_train, y_val],

[idx_train, idx_val])

print("Epoch: {:04d}".format(epoch),

"train_loss= {:.4f}".format(train_val_loss[0]),

"train_acc= {:.4f}".format(train_val_acc[0]),

"val_loss= {:.4f}".format(train_val_loss[1]),

"val_acc= {:.4f}".format(train_val_acc[1]),

"time= {:.4f}".format(time.time() - t))

train_loss_list.append(train_val_loss[0])

val_loss_list.append(train_val_loss[1])

train_acc_list.append(train_val_acc[0])

val_acc_list.append(train_val_acc[1])

# Early stopping

if train_val_loss[1] < best_val_loss:

best_val_loss = train_val_loss[1]

wait = 0

else:

if wait >= PATIENCE:

print('Epoch {}: early stopping'.format(epoch))

break

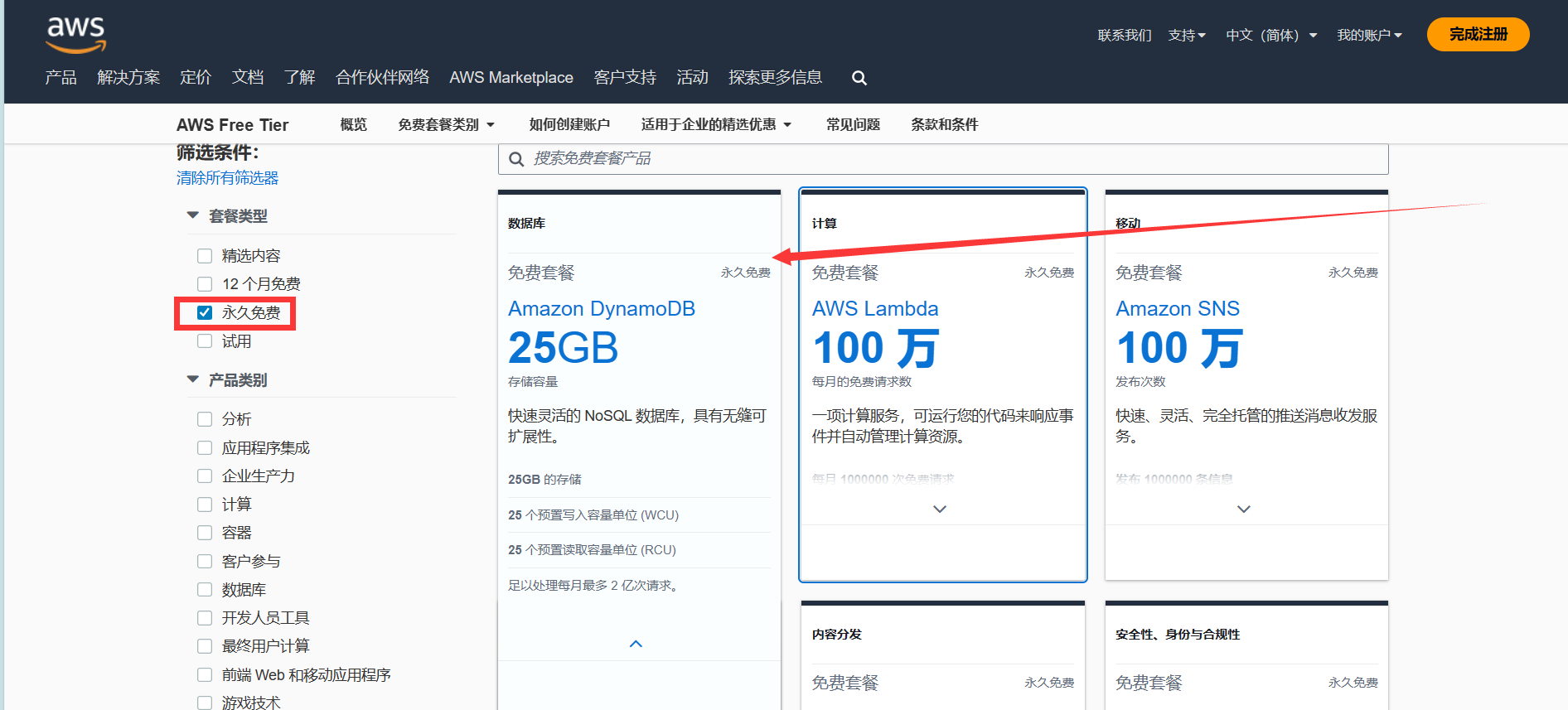

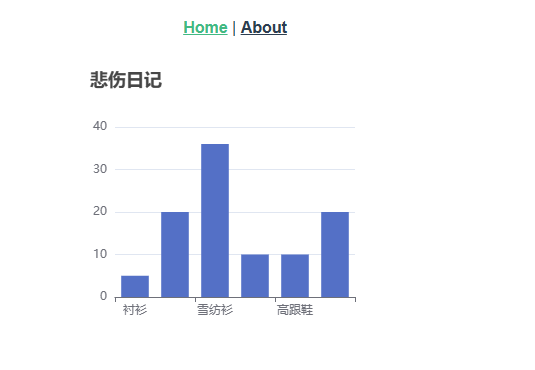

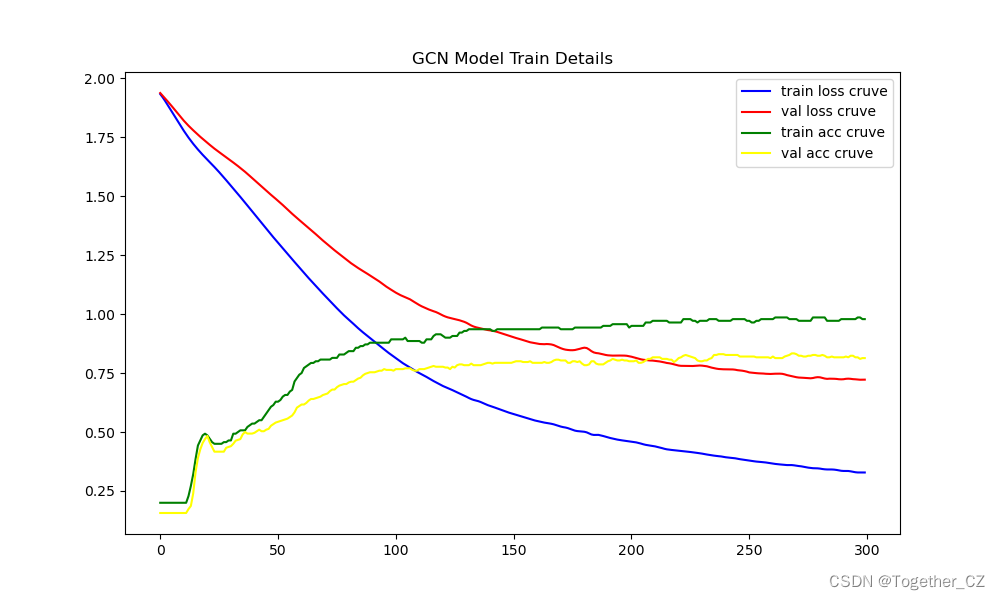

wait += 1最后对模型进行测试评估与可视化分析,如下所示:

```python
import matplotlib.pyplot as plt

# Test (uses the predictions from the last training epoch)
test_loss, test_acc = evaluate_preds(preds, [y_test], [idx_test])
print("Test set results:",
      "loss= {:.4f}".format(test_loss[0]),
      "accuracy= {:.4f}".format(test_acc[0]))

# Visualization
plt.figure(figsize=(10, 6))
plt.plot(train_loss_list, c='blue', label="train loss curve")
plt.plot(val_loss_list, c='red', label="val loss curve")
plt.plot(train_acc_list, c='green', label="train acc curve")
plt.plot(val_acc_list, c='yellow', label="val acc curve")
plt.xlabel("epoch")
plt.legend()
plt.title("GCN Model Train Details")
plt.savefig("gcn_train.png")
```

The training log is as follows:

Epoch: 0001 train_loss= 1.9328 train_acc= 0.2000 val_loss= 1.9372 val_acc= 0.1567 time= 0.3660

Epoch: 0002 train_loss= 1.9194 train_acc= 0.2000 val_loss= 1.9261 val_acc= 0.1567 time= 0.0680

Epoch: 0003 train_loss= 1.9047 train_acc= 0.2000 val_loss= 1.9152 val_acc= 0.1567 time= 0.0720

Epoch: 0004 train_loss= 1.8888 train_acc= 0.2000 val_loss= 1.9036 val_acc= 0.1567 time= 0.0710

Epoch: 0005 train_loss= 1.8731 train_acc= 0.2000 val_loss= 1.8922 val_acc= 0.1567 time= 0.0660

Epoch: 0006 train_loss= 1.8571 train_acc= 0.2000 val_loss= 1.8802 val_acc= 0.1567 time= 0.0660

Epoch: 0007 train_loss= 1.8408 train_acc= 0.2000 val_loss= 1.8681 val_acc= 0.1567 time= 0.0630

Epoch: 0008 train_loss= 1.8246 train_acc= 0.2000 val_loss= 1.8557 val_acc= 0.1567 time= 0.0600

Epoch: 0009 train_loss= 1.8084 train_acc= 0.2000 val_loss= 1.8434 val_acc= 0.1567 time= 0.0670

Epoch: 0010 train_loss= 1.7926 train_acc= 0.2000 val_loss= 1.8316 val_acc= 0.1567 time= 0.0630

Epoch: 0011 train_loss= 1.7773 train_acc= 0.2000 val_loss= 1.8200 val_acc= 0.1567 time= 0.0640

Epoch: 0012 train_loss= 1.7624 train_acc= 0.2000 val_loss= 1.8089 val_acc= 0.1567 time= 0.0740

Epoch: 0013 train_loss= 1.7482 train_acc= 0.2286 val_loss= 1.7985 val_acc= 0.1733 time= 0.0670

Epoch: 0014 train_loss= 1.7347 train_acc= 0.2714 val_loss= 1.7886 val_acc= 0.1867 time= 0.0680

Epoch: 0015 train_loss= 1.7218 train_acc= 0.3214 val_loss= 1.7791 val_acc= 0.2400 time= 0.0660

Epoch: 0016 train_loss= 1.7094 train_acc= 0.3857 val_loss= 1.7698 val_acc= 0.3300 time= 0.0700

Epoch: 0017 train_loss= 1.6977 train_acc= 0.4429 val_loss= 1.7605 val_acc= 0.3900 time= 0.0750

Epoch: 0018 train_loss= 1.6863 train_acc= 0.4643 val_loss= 1.7515 val_acc= 0.4267 time= 0.0740

Epoch: 0019 train_loss= 1.6754 train_acc= 0.4857 val_loss= 1.7429 val_acc= 0.4533 time= 0.0700

Epoch: 0020 train_loss= 1.6648 train_acc= 0.4929 val_loss= 1.7344 val_acc= 0.4700 time= 0.0650

Epoch: 0021 train_loss= 1.6543 train_acc= 0.4857 val_loss= 1.7260 val_acc= 0.4833 time= 0.0640

Epoch: 0022 train_loss= 1.6441 train_acc= 0.4714 val_loss= 1.7176 val_acc= 0.4567 time= 0.0630

Epoch: 0023 train_loss= 1.6339 train_acc= 0.4571 val_loss= 1.7094 val_acc= 0.4367 time= 0.0630

Epoch: 0024 train_loss= 1.6235 train_acc= 0.4500 val_loss= 1.7015 val_acc= 0.4167 time= 0.0630

Epoch: 0025 train_loss= 1.6128 train_acc= 0.4500 val_loss= 1.6939 val_acc= 0.4167 time= 0.0630

Epoch: 0026 train_loss= 1.6018 train_acc= 0.4500 val_loss= 1.6864 val_acc= 0.4167 time= 0.0640

Epoch: 0027 train_loss= 1.5905 train_acc= 0.4500 val_loss= 1.6792 val_acc= 0.4167 time= 0.0660

Epoch: 0028 train_loss= 1.5791 train_acc= 0.4571 val_loss= 1.6719 val_acc= 0.4167 time= 0.0650

Epoch: 0029 train_loss= 1.5675 train_acc= 0.4571 val_loss= 1.6646 val_acc= 0.4333 time= 0.0740

Epoch: 0030 train_loss= 1.5558 train_acc= 0.4643 val_loss= 1.6571 val_acc= 0.4367 time= 0.0670

Epoch: 0031 train_loss= 1.5440 train_acc= 0.4643 val_loss= 1.6499 val_acc= 0.4400 time= 0.0670

Epoch: 0032 train_loss= 1.5322 train_acc= 0.4929 val_loss= 1.6425 val_acc= 0.4500 time= 0.0670

Epoch: 0033 train_loss= 1.5204 train_acc= 0.4929 val_loss= 1.6350 val_acc= 0.4633 time= 0.0699

Epoch: 0034 train_loss= 1.5085 train_acc= 0.5000 val_loss= 1.6273 val_acc= 0.4667 time= 0.0750

Epoch: 0035 train_loss= 1.4967 train_acc= 0.5071 val_loss= 1.6194 val_acc= 0.4700 time= 0.0650

Epoch: 0036 train_loss= 1.4847 train_acc= 0.5071 val_loss= 1.6114 val_acc= 0.4900 time= 0.0610

Epoch: 0037 train_loss= 1.4726 train_acc= 0.5071 val_loss= 1.6031 val_acc= 0.5000 time= 0.0640

Epoch: 0038 train_loss= 1.4603 train_acc= 0.5214 val_loss= 1.5946 val_acc= 0.4933 time= 0.0610

Epoch: 0039 train_loss= 1.4480 train_acc= 0.5286 val_loss= 1.5856 val_acc= 0.4933 time= 0.0630

Epoch: 0040 train_loss= 1.4357 train_acc= 0.5357 val_loss= 1.5767 val_acc= 0.4933 time= 0.0630

Epoch: 0041 train_loss= 1.4235 train_acc= 0.5357 val_loss= 1.5677 val_acc= 0.4967 time= 0.0650

Epoch: 0042 train_loss= 1.4112 train_acc= 0.5429 val_loss= 1.5586 val_acc= 0.5033 time= 0.0680

Epoch: 0043 train_loss= 1.3989 train_acc= 0.5500 val_loss= 1.5496 val_acc= 0.5100 time= 0.0650

Epoch: 0044 train_loss= 1.3866 train_acc= 0.5500 val_loss= 1.5406 val_acc= 0.5033 time= 0.0670

Epoch: 0045 train_loss= 1.3742 train_acc= 0.5643 val_loss= 1.5317 val_acc= 0.5033 time= 0.0680

Epoch: 0046 train_loss= 1.3619 train_acc= 0.5786 val_loss= 1.5228 val_acc= 0.5100 time= 0.0660

Epoch: 0047 train_loss= 1.3497 train_acc= 0.5929 val_loss= 1.5140 val_acc= 0.5133 time= 0.0670

Epoch: 0048 train_loss= 1.3376 train_acc= 0.6071 val_loss= 1.5056 val_acc= 0.5267 time= 0.0730

Epoch: 0049 train_loss= 1.3258 train_acc= 0.6143 val_loss= 1.4973 val_acc= 0.5333 time= 0.0720

Epoch: 0050 train_loss= 1.3140 train_acc= 0.6286 val_loss= 1.4887 val_acc= 0.5400 time= 0.0655

Epoch: 0051 train_loss= 1.3024 train_acc= 0.6286 val_loss= 1.4800 val_acc= 0.5433 time= 0.0610

Epoch: 0052 train_loss= 1.2908 train_acc= 0.6357 val_loss= 1.4714 val_acc= 0.5467 time= 0.0630

Epoch: 0053 train_loss= 1.2790 train_acc= 0.6500 val_loss= 1.4623 val_acc= 0.5500 time= 0.0640

Epoch: 0054 train_loss= 1.2672 train_acc= 0.6571 val_loss= 1.4529 val_acc= 0.5533 time= 0.0620

Epoch: 0055 train_loss= 1.2556 train_acc= 0.6571 val_loss= 1.4435 val_acc= 0.5567 time= 0.0640

Epoch: 0056 train_loss= 1.2441 train_acc= 0.6714 val_loss= 1.4342 val_acc= 0.5633 time= 0.0640

Epoch: 0057 train_loss= 1.2325 train_acc= 0.6786 val_loss= 1.4251 val_acc= 0.5700 time= 0.0740

Epoch: 0058 train_loss= 1.2208 train_acc= 0.7143 val_loss= 1.4162 val_acc= 0.5833 time= 0.0670

Epoch: 0059 train_loss= 1.2093 train_acc= 0.7286 val_loss= 1.4074 val_acc= 0.6033 time= 0.0650

Epoch: 0060 train_loss= 1.1977 train_acc= 0.7429 val_loss= 1.3988 val_acc= 0.6100 time= 0.0670

Epoch: 0061 train_loss= 1.1861 train_acc= 0.7500 val_loss= 1.3904 val_acc= 0.6167 time= 0.0680

Epoch: 0062 train_loss= 1.1745 train_acc= 0.7714 val_loss= 1.3818 val_acc= 0.6167 time= 0.0670

Epoch: 0063 train_loss= 1.1633 train_acc= 0.7786 val_loss= 1.3735 val_acc= 0.6233 time= 0.0690

Epoch: 0064 train_loss= 1.1524 train_acc= 0.7857 val_loss= 1.3652 val_acc= 0.6333 time= 0.0724

Epoch: 0065 train_loss= 1.1414 train_acc= 0.7929 val_loss= 1.3566 val_acc= 0.6400 time= 0.0760

Epoch: 0066 train_loss= 1.1304 train_acc= 0.7929 val_loss= 1.3477 val_acc= 0.6400 time= 0.0660

Epoch: 0067 train_loss= 1.1195 train_acc= 0.8000 val_loss= 1.3389 val_acc= 0.6433 time= 0.0640

Epoch: 0068 train_loss= 1.1086 train_acc= 0.8000 val_loss= 1.3298 val_acc= 0.6467 time= 0.0630

Epoch: 0069 train_loss= 1.0978 train_acc= 0.8071 val_loss= 1.3208 val_acc= 0.6500 time= 0.0650

Epoch: 0070 train_loss= 1.0873 train_acc= 0.8071 val_loss= 1.3121 val_acc= 0.6567 time= 0.0620

Epoch: 0071 train_loss= 1.0767 train_acc= 0.8071 val_loss= 1.3036 val_acc= 0.6600 time= 0.0620

Epoch: 0072 train_loss= 1.0662 train_acc= 0.8071 val_loss= 1.2952 val_acc= 0.6633 time= 0.0650

Epoch: 0073 train_loss= 1.0556 train_acc= 0.8071 val_loss= 1.2866 val_acc= 0.6733 time= 0.0660

Epoch: 0074 train_loss= 1.0452 train_acc= 0.8143 val_loss= 1.2783 val_acc= 0.6800 time= 0.0665

Epoch: 0075 train_loss= 1.0348 train_acc= 0.8143 val_loss= 1.2703 val_acc= 0.6800 time= 0.0670

Epoch: 0076 train_loss= 1.0245 train_acc= 0.8143 val_loss= 1.2625 val_acc= 0.6900 time= 0.0670

Epoch: 0077 train_loss= 1.0144 train_acc= 0.8286 val_loss= 1.2548 val_acc= 0.6967 time= 0.0670

Epoch: 0078 train_loss= 1.0043 train_acc= 0.8286 val_loss= 1.2469 val_acc= 0.7000 time= 0.0690

Epoch: 0079 train_loss= 0.9946 train_acc= 0.8286 val_loss= 1.2391 val_acc= 0.7033 time= 0.0710

Epoch: 0080 train_loss= 0.9852 train_acc= 0.8357 val_loss= 1.2309 val_acc= 0.7033 time= 0.0680

Epoch: 0081 train_loss= 0.9764 train_acc= 0.8429 val_loss= 1.2230 val_acc= 0.7100 time= 0.0625

Epoch: 0082 train_loss= 0.9676 train_acc= 0.8429 val_loss= 1.2155 val_acc= 0.7133 time= 0.0620

Epoch: 0083 train_loss= 0.9585 train_acc= 0.8429 val_loss= 1.2086 val_acc= 0.7133 time= 0.0640

Epoch: 0084 train_loss= 0.9491 train_acc= 0.8571 val_loss= 1.2017 val_acc= 0.7200 time= 0.0610

Epoch: 0085 train_loss= 0.9400 train_acc= 0.8571 val_loss= 1.1952 val_acc= 0.7267 time= 0.0620

Epoch: 0086 train_loss= 0.9314 train_acc= 0.8643 val_loss= 1.1888 val_acc= 0.7300 time= 0.0612

Epoch: 0087 train_loss= 0.9229 train_acc= 0.8643 val_loss= 1.1826 val_acc= 0.7400 time= 0.0650

Epoch: 0088 train_loss= 0.9149 train_acc= 0.8714 val_loss= 1.1767 val_acc= 0.7467 time= 0.0670

Epoch: 0089 train_loss= 0.9069 train_acc= 0.8714 val_loss= 1.1703 val_acc= 0.7500 time= 0.0650

Epoch: 0090 train_loss= 0.8989 train_acc= 0.8786 val_loss= 1.1639 val_acc= 0.7533 time= 0.0670

Epoch: 0091 train_loss= 0.8907 train_acc= 0.8786 val_loss= 1.1571 val_acc= 0.7533 time= 0.0670

Epoch: 0092 train_loss= 0.8828 train_acc= 0.8786 val_loss= 1.1506 val_acc= 0.7533 time= 0.0660

Epoch: 0093 train_loss= 0.8749 train_acc= 0.8786 val_loss= 1.1444 val_acc= 0.7567 time= 0.0680

Epoch: 0094 train_loss= 0.8671 train_acc= 0.8786 val_loss= 1.1380 val_acc= 0.7600 time= 0.0700

Epoch: 0095 train_loss= 0.8591 train_acc= 0.8786 val_loss= 1.1309 val_acc= 0.7600 time= 0.0680

Epoch: 0096 train_loss= 0.8506 train_acc= 0.8786 val_loss= 1.1233 val_acc= 0.7667 time= 0.0640

Epoch: 0097 train_loss= 0.8425 train_acc= 0.8786 val_loss= 1.1160 val_acc= 0.7633 time= 0.0620

Epoch: 0098 train_loss= 0.8349 train_acc= 0.8786 val_loss= 1.1093 val_acc= 0.7633 time= 0.0630

Epoch: 0099 train_loss= 0.8276 train_acc= 0.8929 val_loss= 1.1032 val_acc= 0.7633 time= 0.0650

Epoch: 0100 train_loss= 0.8205 train_acc= 0.8929 val_loss= 1.0970 val_acc= 0.7600 time= 0.0640

Epoch: 0101 train_loss= 0.8130 train_acc= 0.8929 val_loss= 1.0907 val_acc= 0.7667 time= 0.0618

Epoch: 0102 train_loss= 0.8055 train_acc= 0.8929 val_loss= 1.0851 val_acc= 0.7667 time= 0.0640

Epoch: 0103 train_loss= 0.7983 train_acc= 0.8929 val_loss= 1.0800 val_acc= 0.7667 time= 0.0670

Epoch: 0104 train_loss= 0.7916 train_acc= 0.8929 val_loss= 1.0757 val_acc= 0.7667 time= 0.0680

Epoch: 0105 train_loss= 0.7855 train_acc= 0.9000 val_loss= 1.0716 val_acc= 0.7700 time= 0.0679

Epoch: 0106 train_loss= 0.7794 train_acc= 0.8857 val_loss= 1.0675 val_acc= 0.7700 time= 0.0660

Epoch: 0107 train_loss= 0.7734 train_acc= 0.8857 val_loss= 1.0626 val_acc= 0.7700 time= 0.0660

Epoch: 0108 train_loss= 0.7670 train_acc= 0.8857 val_loss= 1.0566 val_acc= 0.7633 time= 0.0690

Epoch: 0109 train_loss= 0.7607 train_acc= 0.8857 val_loss= 1.0501 val_acc= 0.7600 time= 0.0709

Epoch: 0110 train_loss= 0.7549 train_acc= 0.8857 val_loss= 1.0439 val_acc= 0.7600 time= 0.0685

Epoch: 0111 train_loss= 0.7496 train_acc= 0.8857 val_loss= 1.0380 val_acc= 0.7667 time= 0.0650

Epoch: 0112 train_loss= 0.7448 train_acc= 0.8786 val_loss= 1.0327 val_acc= 0.7667 time= 0.0620

Epoch: 0113 train_loss= 0.7394 train_acc= 0.8786 val_loss= 1.0281 val_acc= 0.7667 time= 0.0650

Epoch: 0114 train_loss= 0.7335 train_acc= 0.8929 val_loss= 1.0236 val_acc= 0.7700 time= 0.0630

Epoch: 0115 train_loss= 0.7276 train_acc= 0.8929 val_loss= 1.0194 val_acc= 0.7733 time= 0.0630

Epoch: 0116 train_loss= 0.7218 train_acc= 0.8929 val_loss= 1.0156 val_acc= 0.7767 time= 0.0651

Epoch: 0117 train_loss= 0.7161 train_acc= 0.9071 val_loss= 1.0120 val_acc= 0.7800 time= 0.0650

Epoch: 0118 train_loss= 0.7108 train_acc= 0.9143 val_loss= 1.0086 val_acc= 0.7767 time= 0.0650

Epoch: 0119 train_loss= 0.7054 train_acc= 0.9143 val_loss= 1.0041 val_acc= 0.7767 time= 0.0660

Epoch: 0120 train_loss= 0.6999 train_acc= 0.9143 val_loss= 0.9987 val_acc= 0.7767 time= 0.0660

Epoch: 0121 train_loss= 0.6949 train_acc= 0.9071 val_loss= 0.9939 val_acc= 0.7767 time= 0.0670

Epoch: 0122 train_loss= 0.6907 train_acc= 0.9000 val_loss= 0.9896 val_acc= 0.7733 time= 0.0660

Epoch: 0123 train_loss= 0.6869 train_acc= 0.9000 val_loss= 0.9861 val_acc= 0.7733 time= 0.0660

Epoch: 0124 train_loss= 0.6825 train_acc= 0.9000 val_loss= 0.9834 val_acc= 0.7667 time= 0.0710

Epoch: 0125 train_loss= 0.6777 train_acc= 0.9071 val_loss= 0.9810 val_acc= 0.7767 time= 0.0700

Epoch: 0126 train_loss= 0.6730 train_acc= 0.9071 val_loss= 0.9786 val_acc= 0.7733 time= 0.0650

Epoch: 0127 train_loss= 0.6682 train_acc= 0.9071 val_loss= 0.9763 val_acc= 0.7833 time= 0.0630

Epoch: 0128 train_loss= 0.6634 train_acc= 0.9214 val_loss= 0.9737 val_acc= 0.7867 time= 0.0620

Epoch: 0129 train_loss= 0.6587 train_acc= 0.9214 val_loss= 0.9705 val_acc= 0.7867 time= 0.0620

Epoch: 0130 train_loss= 0.6542 train_acc= 0.9286 val_loss= 0.9672 val_acc= 0.7833 time= 0.0640

Epoch: 0131 train_loss= 0.6493 train_acc= 0.9286 val_loss= 0.9628 val_acc= 0.7833 time= 0.0630

Epoch: 0132 train_loss= 0.6442 train_acc= 0.9357 val_loss= 0.9570 val_acc= 0.7833 time= 0.0650

Epoch: 0133 train_loss= 0.6396 train_acc= 0.9357 val_loss= 0.9516 val_acc= 0.7900 time= 0.0680

Epoch: 0134 train_loss= 0.6360 train_acc= 0.9357 val_loss= 0.9472 val_acc= 0.7833 time= 0.0650

Epoch: 0135 train_loss= 0.6334 train_acc= 0.9357 val_loss= 0.9440 val_acc= 0.7833 time= 0.0670

Epoch: 0136 train_loss= 0.6308 train_acc= 0.9357 val_loss= 0.9418 val_acc= 0.7833 time= 0.0650

Epoch: 0137 train_loss= 0.6271 train_acc= 0.9357 val_loss= 0.9392 val_acc= 0.7833 time= 0.0665

Epoch: 0138 train_loss= 0.6227 train_acc= 0.9357 val_loss= 0.9368 val_acc= 0.7867 time= 0.0683

Epoch: 0139 train_loss= 0.6180 train_acc= 0.9357 val_loss= 0.9344 val_acc= 0.7900 time= 0.0730

Epoch: 0140 train_loss= 0.6140 train_acc= 0.9357 val_loss= 0.9323 val_acc= 0.7933 time= 0.0710

Epoch: 0141 train_loss= 0.6106 train_acc= 0.9357 val_loss= 0.9309 val_acc= 0.7933 time= 0.0645

Epoch: 0142 train_loss= 0.6072 train_acc= 0.9286 val_loss= 0.9290 val_acc= 0.7900 time= 0.0620

Epoch: 0143 train_loss= 0.6037 train_acc= 0.9286 val_loss= 0.9270 val_acc= 0.7933 time= 0.0630

Epoch: 0144 train_loss= 0.6000 train_acc= 0.9357 val_loss= 0.9244 val_acc= 0.7933 time= 0.0630

Epoch: 0145 train_loss= 0.5961 train_acc= 0.9357 val_loss= 0.9211 val_acc= 0.7933 time= 0.0629

Epoch: 0146 train_loss= 0.5924 train_acc= 0.9357 val_loss= 0.9179 val_acc= 0.7933 time= 0.0650

Epoch: 0147 train_loss= 0.5885 train_acc= 0.9357 val_loss= 0.9149 val_acc= 0.7933 time= 0.0640

Epoch: 0148 train_loss= 0.5851 train_acc= 0.9357 val_loss= 0.9112 val_acc= 0.7933 time= 0.0670

Epoch: 0149 train_loss= 0.5821 train_acc= 0.9357 val_loss= 0.9079 val_acc= 0.7933 time= 0.0700

Epoch: 0150 train_loss= 0.5790 train_acc= 0.9357 val_loss= 0.9048 val_acc= 0.7933 time= 0.0675

Epoch: 0151 train_loss= 0.5761 train_acc= 0.9357 val_loss= 0.9016 val_acc= 0.7967 time= 0.0660

Epoch: 0152 train_loss= 0.5732 train_acc= 0.9357 val_loss= 0.8985 val_acc= 0.8000 time= 0.0670

Epoch: 0153 train_loss= 0.5703 train_acc= 0.9357 val_loss= 0.8958 val_acc= 0.8000 time= 0.0670

Epoch: 0154 train_loss= 0.5672 train_acc= 0.9357 val_loss= 0.8928 val_acc= 0.8000 time= 0.0720

Epoch: 0155 train_loss= 0.5640 train_acc= 0.9357 val_loss= 0.8896 val_acc= 0.7967 time= 0.0700

Epoch: 0156 train_loss= 0.5606 train_acc= 0.9357 val_loss= 0.8863 val_acc= 0.7967 time= 0.0630

Epoch: 0157 train_loss= 0.5575 train_acc= 0.9357 val_loss= 0.8832 val_acc= 0.7967 time= 0.0631

Epoch: 0158 train_loss= 0.5545 train_acc= 0.9357 val_loss= 0.8805 val_acc= 0.8000 time= 0.0640

Epoch: 0159 train_loss= 0.5514 train_acc= 0.9357 val_loss= 0.8785 val_acc= 0.7933 time= 0.0620

Epoch: 0160 train_loss= 0.5489 train_acc= 0.9357 val_loss= 0.8764 val_acc= 0.7933 time= 0.0720

Epoch: 0161 train_loss= 0.5466 train_acc= 0.9357 val_loss= 0.8747 val_acc= 0.7933 time= 0.0640

Epoch: 0162 train_loss= 0.5444 train_acc= 0.9357 val_loss= 0.8731 val_acc= 0.7933 time= 0.0640

Epoch: 0163 train_loss= 0.5422 train_acc= 0.9429 val_loss= 0.8726 val_acc= 0.7933 time= 0.0680

Epoch: 0164 train_loss= 0.5400 train_acc= 0.9429 val_loss= 0.8723 val_acc= 0.7967 time= 0.0670

Epoch: 0165 train_loss= 0.5384 train_acc= 0.9429 val_loss= 0.8730 val_acc= 0.7933 time= 0.0660

Epoch: 0166 train_loss= 0.5367 train_acc= 0.9429 val_loss= 0.8725 val_acc= 0.7933 time= 0.0680

Epoch: 0167 train_loss= 0.5349 train_acc= 0.9429 val_loss= 0.8712 val_acc= 0.7967 time= 0.0670

Epoch: 0168 train_loss= 0.5325 train_acc= 0.9429 val_loss= 0.8685 val_acc= 0.8033 time= 0.0650

Epoch: 0169 train_loss= 0.5293 train_acc= 0.9429 val_loss= 0.8643 val_acc= 0.8067 time= 0.0720

Epoch: 0170 train_loss= 0.5260 train_acc= 0.9429 val_loss= 0.8596 val_acc= 0.8067 time= 0.0720

Epoch: 0171 train_loss= 0.5230 train_acc= 0.9357 val_loss= 0.8553 val_acc= 0.8033 time= 0.0640

Epoch: 0172 train_loss= 0.5207 train_acc= 0.9357 val_loss= 0.8517 val_acc= 0.8033 time= 0.0620

Epoch: 0173 train_loss= 0.5190 train_acc= 0.9357 val_loss= 0.8492 val_acc= 0.8033 time= 0.0641

Epoch: 0174 train_loss= 0.5164 train_acc= 0.9357 val_loss= 0.8478 val_acc= 0.7933 time= 0.0630

Epoch: 0175 train_loss= 0.5130 train_acc= 0.9357 val_loss= 0.8469 val_acc= 0.7933 time= 0.0630

Epoch: 0176 train_loss= 0.5093 train_acc= 0.9357 val_loss= 0.8468 val_acc= 0.8000 time= 0.0620

Epoch: 0177 train_loss= 0.5059 train_acc= 0.9429 val_loss= 0.8477 val_acc= 0.8000 time= 0.0640

Epoch: 0178 train_loss= 0.5040 train_acc= 0.9429 val_loss= 0.8499 val_acc= 0.7967 time= 0.0695

Epoch: 0179 train_loss= 0.5028 train_acc= 0.9429 val_loss= 0.8526 val_acc= 0.8000 time= 0.0650

Epoch: 0180 train_loss= 0.5020 train_acc= 0.9429 val_loss= 0.8555 val_acc= 0.7900 time= 0.0670

Epoch: 0181 train_loss= 0.5010 train_acc= 0.9429 val_loss= 0.8575 val_acc= 0.7833 time= 0.0680

Epoch: 0182 train_loss= 0.4985 train_acc= 0.9429 val_loss= 0.8560 val_acc= 0.7833 time= 0.0660

Epoch: 0183 train_loss= 0.4940 train_acc= 0.9429 val_loss= 0.8501 val_acc= 0.7867 time= 0.0660

Epoch: 0184 train_loss= 0.4896 train_acc= 0.9429 val_loss= 0.8427 val_acc= 0.8000 time= 0.0700

Epoch: 0185 train_loss= 0.4876 train_acc= 0.9429 val_loss= 0.8374 val_acc= 0.8000 time= 0.0710

Epoch: 0186 train_loss= 0.4878 train_acc= 0.9429 val_loss= 0.8348 val_acc= 0.7900 time= 0.0670

Epoch: 0187 train_loss= 0.4879 train_acc= 0.9429 val_loss= 0.8337 val_acc= 0.7867 time= 0.0630

Epoch: 0188 train_loss= 0.4856 train_acc= 0.9429 val_loss= 0.8316 val_acc= 0.7867 time= 0.0610

Epoch: 0189 train_loss= 0.4830 train_acc= 0.9500 val_loss= 0.8292 val_acc= 0.7867 time= 0.0630

Epoch: 0190 train_loss= 0.4801 train_acc= 0.9500 val_loss= 0.8268 val_acc= 0.7933 time= 0.0660

Epoch: 0191 train_loss= 0.4773 train_acc= 0.9500 val_loss= 0.8251 val_acc= 0.8000 time= 0.0650

Epoch: 0192 train_loss= 0.4746 train_acc= 0.9500 val_loss= 0.8244 val_acc= 0.8033 time= 0.0624

Epoch: 0193 train_loss= 0.4722 train_acc= 0.9571 val_loss= 0.8239 val_acc= 0.8100 time= 0.0670

Epoch: 0194 train_loss= 0.4699 train_acc= 0.9571 val_loss= 0.8241 val_acc= 0.8067 time= 0.0660

Epoch: 0195 train_loss= 0.4678 train_acc= 0.9571 val_loss= 0.8241 val_acc= 0.8033 time= 0.0670

Epoch: 0196 train_loss= 0.4661 train_acc= 0.9571 val_loss= 0.8242 val_acc= 0.8033 time= 0.0660

Epoch: 0197 train_loss= 0.4646 train_acc= 0.9571 val_loss= 0.8242 val_acc= 0.8067 time= 0.0730

Epoch: 0198 train_loss= 0.4632 train_acc= 0.9571 val_loss= 0.8239 val_acc= 0.8033 time= 0.0670

Epoch: 0199 train_loss= 0.4618 train_acc= 0.9571 val_loss= 0.8232 val_acc= 0.8033 time= 0.0710

Epoch: 0200 train_loss= 0.4603 train_acc= 0.9429 val_loss= 0.8214 val_acc= 0.8000 time= 0.0730

Epoch: 0201 train_loss= 0.4587 train_acc= 0.9500 val_loss= 0.8192 val_acc= 0.8000 time= 0.0670

Epoch: 0202 train_loss= 0.4574 train_acc= 0.9500 val_loss= 0.8165 val_acc= 0.8000 time= 0.0640

Epoch: 0203 train_loss= 0.4560 train_acc= 0.9500 val_loss= 0.8137 val_acc= 0.8033 time= 0.0621

Epoch: 0204 train_loss= 0.4537 train_acc= 0.9500 val_loss= 0.8109 val_acc= 0.7933 time= 0.0649

Epoch: 0205 train_loss= 0.4510 train_acc= 0.9500 val_loss= 0.8082 val_acc= 0.7933 time= 0.0620

Epoch: 0206 train_loss= 0.4480 train_acc= 0.9500 val_loss= 0.8063 val_acc= 0.7967 time= 0.0650

Epoch: 0207 train_loss= 0.4456 train_acc= 0.9643 val_loss= 0.8053 val_acc= 0.8033 time= 0.0620

Epoch: 0208 train_loss= 0.4437 train_acc= 0.9643 val_loss= 0.8039 val_acc= 0.8100 time= 0.0660

Epoch: 0209 train_loss= 0.4423 train_acc= 0.9643 val_loss= 0.8031 val_acc= 0.8100 time= 0.0670

Epoch: 0210 train_loss= 0.4408 train_acc= 0.9714 val_loss= 0.8028 val_acc= 0.8167 time= 0.0660

Epoch: 0211 train_loss= 0.4391 train_acc= 0.9714 val_loss= 0.8017 val_acc= 0.8167 time= 0.0680

Epoch: 0212 train_loss= 0.4367 train_acc= 0.9714 val_loss= 0.8003 val_acc= 0.8167 time= 0.0730

Epoch: 0213 train_loss= 0.4343 train_acc= 0.9714 val_loss= 0.7991 val_acc= 0.8167 time= 0.0680

Epoch: 0214 train_loss= 0.4316 train_acc= 0.9714 val_loss= 0.7973 val_acc= 0.8100 time= 0.0690

Epoch: 0215 train_loss= 0.4288 train_acc= 0.9714 val_loss= 0.7951 val_acc= 0.8100 time= 0.0710

Epoch: 0216 train_loss= 0.4266 train_acc= 0.9714 val_loss= 0.7927 val_acc= 0.8100 time= 0.0670

Epoch: 0217 train_loss= 0.4252 train_acc= 0.9643 val_loss= 0.7914 val_acc= 0.8067 time= 0.0620

Epoch: 0218 train_loss= 0.4240 train_acc= 0.9643 val_loss= 0.7896 val_acc= 0.8067 time= 0.0630

Epoch: 0219 train_loss= 0.4229 train_acc= 0.9643 val_loss= 0.7869 val_acc= 0.7967 time= 0.0640

Epoch: 0220 train_loss= 0.4217 train_acc= 0.9643 val_loss= 0.7839 val_acc= 0.8033 time= 0.0710

Epoch: 0221 train_loss= 0.4206 train_acc= 0.9643 val_loss= 0.7818 val_acc= 0.8133 time= 0.0650

Epoch: 0222 train_loss= 0.4194 train_acc= 0.9643 val_loss= 0.7806 val_acc= 0.8167 time= 0.0640

Epoch: 0223 train_loss= 0.4187 train_acc= 0.9786 val_loss= 0.7804 val_acc= 0.8233 time= 0.0660

Epoch: 0224 train_loss= 0.4178 train_acc= 0.9786 val_loss= 0.7806 val_acc= 0.8267 time= 0.0650

Epoch: 0225 train_loss= 0.4168 train_acc= 0.9786 val_loss= 0.7803 val_acc= 0.8233 time= 0.0700

Epoch: 0226 train_loss= 0.4153 train_acc= 0.9786 val_loss= 0.7799 val_acc= 0.8200 time= 0.0670

Epoch: 0227 train_loss= 0.4138 train_acc= 0.9714 val_loss= 0.7798 val_acc= 0.8167 time= 0.0680

Epoch: 0228 train_loss= 0.4126 train_acc= 0.9714 val_loss= 0.7807 val_acc= 0.8133 time= 0.0660

Epoch: 0229 train_loss= 0.4113 train_acc= 0.9643 val_loss= 0.7817 val_acc= 0.8033 time= 0.0690

Epoch: 0230 train_loss= 0.4099 train_acc= 0.9714 val_loss= 0.7819 val_acc= 0.8000 time= 0.0720

Epoch: 0231 train_loss= 0.4083 train_acc= 0.9714 val_loss= 0.7813 val_acc= 0.8000 time= 0.0690

Epoch: 0232 train_loss= 0.4062 train_acc= 0.9714 val_loss= 0.7800 val_acc= 0.8033 time= 0.0620

Epoch: 0233 train_loss= 0.4046 train_acc= 0.9714 val_loss= 0.7787 val_acc= 0.8033 time= 0.0620

Epoch: 0234 train_loss= 0.4029 train_acc= 0.9786 val_loss= 0.7758 val_acc= 0.8100 time= 0.0620

Epoch: 0235 train_loss= 0.4014 train_acc= 0.9786 val_loss= 0.7730 val_acc= 0.8133 time= 0.0630

Epoch: 0236 train_loss= 0.3999 train_acc= 0.9786 val_loss= 0.7708 val_acc= 0.8267 time= 0.0620

Epoch: 0237 train_loss= 0.3985 train_acc= 0.9786 val_loss= 0.7687 val_acc= 0.8267 time= 0.0620

Epoch: 0238 train_loss= 0.3975 train_acc= 0.9714 val_loss= 0.7671 val_acc= 0.8300 time= 0.0680

Epoch: 0239 train_loss= 0.3964 train_acc= 0.9714 val_loss= 0.7663 val_acc= 0.8300 time= 0.0664

Epoch: 0240 train_loss= 0.3946 train_acc= 0.9714 val_loss= 0.7659 val_acc= 0.8300 time= 0.0650

Epoch: 0241 train_loss= 0.3930 train_acc= 0.9714 val_loss= 0.7658 val_acc= 0.8267 time= 0.0690

Epoch: 0242 train_loss= 0.3918 train_acc= 0.9714 val_loss= 0.7661 val_acc= 0.8267 time= 0.0670

Epoch: 0243 train_loss= 0.3907 train_acc= 0.9714 val_loss= 0.7660 val_acc= 0.8267 time= 0.0670

Epoch: 0244 train_loss= 0.3898 train_acc= 0.9786 val_loss= 0.7652 val_acc= 0.8267 time= 0.0710

Epoch: 0245 train_loss= 0.3886 train_acc= 0.9786 val_loss= 0.7641 val_acc= 0.8267 time= 0.0690

Epoch: 0246 train_loss= 0.3866 train_acc= 0.9786 val_loss= 0.7621 val_acc= 0.8267 time= 0.0700

Epoch: 0247 train_loss= 0.3848 train_acc= 0.9786 val_loss= 0.7611 val_acc= 0.8200 time= 0.0630

Epoch: 0248 train_loss= 0.3833 train_acc= 0.9786 val_loss= 0.7599 val_acc= 0.8200 time= 0.0631

Epoch: 0249 train_loss= 0.3818 train_acc= 0.9786 val_loss= 0.7581 val_acc= 0.8200 time= 0.0630

Epoch: 0250 train_loss= 0.3803 train_acc= 0.9714 val_loss= 0.7558 val_acc= 0.8200 time= 0.0650

Epoch: 0251 train_loss= 0.3788 train_acc= 0.9714 val_loss= 0.7526 val_acc= 0.8200 time= 0.0640

Epoch: 0252 train_loss= 0.3773 train_acc= 0.9643 val_loss= 0.7515 val_acc= 0.8200 time= 0.0630

Epoch: 0253 train_loss= 0.3760 train_acc= 0.9643 val_loss= 0.7506 val_acc= 0.8200 time= 0.0650

Epoch: 0254 train_loss= 0.3747 train_acc= 0.9714 val_loss= 0.7496 val_acc= 0.8167 time= 0.0660

Epoch: 0255 train_loss= 0.3739 train_acc= 0.9714 val_loss= 0.7487 val_acc= 0.8167 time= 0.0670

Epoch: 0256 train_loss= 0.3729 train_acc= 0.9786 val_loss= 0.7484 val_acc= 0.8167 time= 0.0670

Epoch: 0257 train_loss= 0.3719 train_acc= 0.9786 val_loss= 0.7478 val_acc= 0.8167 time= 0.0670

Epoch: 0258 train_loss= 0.3709 train_acc= 0.9786 val_loss= 0.7469 val_acc= 0.8167 time= 0.0660

Epoch: 0259 train_loss= 0.3693 train_acc= 0.9786 val_loss= 0.7465 val_acc= 0.8167 time= 0.0700

Epoch: 0260 train_loss= 0.3678 train_acc= 0.9786 val_loss= 0.7461 val_acc= 0.8133 time= 0.0705

Epoch: 0261 train_loss= 0.3661 train_acc= 0.9786 val_loss= 0.7466 val_acc= 0.8200 time= 0.0690

Epoch: 0262 train_loss= 0.3647 train_acc= 0.9857 val_loss= 0.7471 val_acc= 0.8133 time= 0.0640

Epoch: 0263 train_loss= 0.3635 train_acc= 0.9857 val_loss= 0.7472 val_acc= 0.8133 time= 0.0630

Epoch: 0264 train_loss= 0.3626 train_acc= 0.9857 val_loss= 0.7474 val_acc= 0.8133 time= 0.0620

Epoch: 0265 train_loss= 0.3617 train_acc= 0.9857 val_loss= 0.7467 val_acc= 0.8133 time= 0.0640

Epoch: 0266 train_loss= 0.3606 train_acc= 0.9857 val_loss= 0.7444 val_acc= 0.8200 time= 0.0640

Epoch: 0267 train_loss= 0.3599 train_acc= 0.9857 val_loss= 0.7412 val_acc= 0.8233 time= 0.0690

Epoch: 0268 train_loss= 0.3600 train_acc= 0.9786 val_loss= 0.7390 val_acc= 0.8267 time= 0.0675

Epoch: 0269 train_loss= 0.3599 train_acc= 0.9786 val_loss= 0.7366 val_acc= 0.8333 time= 0.0690

Epoch: 0270 train_loss= 0.3588 train_acc= 0.9786 val_loss= 0.7343 val_acc= 0.8333 time= 0.0690

Epoch: 0271 train_loss= 0.3572 train_acc= 0.9786 val_loss= 0.7323 val_acc= 0.8300 time= 0.0680

Epoch: 0272 train_loss= 0.3557 train_acc= 0.9714 val_loss= 0.7309 val_acc= 0.8233 time= 0.0670

Epoch: 0273 train_loss= 0.3546 train_acc= 0.9714 val_loss= 0.7301 val_acc= 0.8233 time= 0.0660

Epoch: 0274 train_loss= 0.3527 train_acc= 0.9714 val_loss= 0.7298 val_acc= 0.8200 time= 0.0690

Epoch: 0275 train_loss= 0.3507 train_acc= 0.9714 val_loss= 0.7292 val_acc= 0.8200 time= 0.0710

Epoch: 0276 train_loss= 0.3490 train_acc= 0.9714 val_loss= 0.7283 val_acc= 0.8233 time= 0.0680

Epoch: 0277 train_loss= 0.3476 train_acc= 0.9714 val_loss= 0.7277 val_acc= 0.8233 time= 0.0630

Epoch: 0278 train_loss= 0.3466 train_acc= 0.9857 val_loss= 0.7289 val_acc= 0.8267 time= 0.0630

Epoch: 0279 train_loss= 0.3463 train_acc= 0.9857 val_loss= 0.7312 val_acc= 0.8267 time= 0.0620

Epoch: 0280 train_loss= 0.3459 train_acc= 0.9857 val_loss= 0.7325 val_acc= 0.8233 time= 0.0621

Epoch: 0281 train_loss= 0.3449 train_acc= 0.9857 val_loss= 0.7319 val_acc= 0.8233 time= 0.0660

Epoch: 0282 train_loss= 0.3431 train_acc= 0.9857 val_loss= 0.7291 val_acc= 0.8267 time= 0.0660

Epoch: 0283 train_loss= 0.3419 train_acc= 0.9857 val_loss= 0.7267 val_acc= 0.8233 time= 0.0640

Epoch: 0284 train_loss= 0.3413 train_acc= 0.9714 val_loss= 0.7253 val_acc= 0.8167 time= 0.0660

Epoch: 0285 train_loss= 0.3413 train_acc= 0.9714 val_loss= 0.7261 val_acc= 0.8167 time= 0.0660

Epoch: 0286 train_loss= 0.3412 train_acc= 0.9714 val_loss= 0.7259 val_acc= 0.8200 time= 0.0670

Epoch: 0287 train_loss= 0.3406 train_acc= 0.9714 val_loss= 0.7257 val_acc= 0.8167 time= 0.0670

Epoch: 0288 train_loss= 0.3392 train_acc= 0.9714 val_loss= 0.7251 val_acc= 0.8167 time= 0.0670

Epoch: 0289 train_loss= 0.3372 train_acc= 0.9714 val_loss= 0.7238 val_acc= 0.8167 time= 0.0650

Epoch: 0290 train_loss= 0.3356 train_acc= 0.9786 val_loss= 0.7233 val_acc= 0.8167 time= 0.0730

Epoch: 0291 train_loss= 0.3349 train_acc= 0.9786 val_loss= 0.7238 val_acc= 0.8167 time= 0.0690

Epoch: 0292 train_loss= 0.3349 train_acc= 0.9786 val_loss= 0.7255 val_acc= 0.8200 time= 0.0630

Epoch: 0293 train_loss= 0.3348 train_acc= 0.9786 val_loss= 0.7262 val_acc= 0.8167 time= 0.0660

Epoch: 0294 train_loss= 0.3333 train_acc= 0.9786 val_loss= 0.7255 val_acc= 0.8233 time= 0.0620

Epoch: 0295 train_loss= 0.3313 train_acc= 0.9786 val_loss= 0.7241 val_acc= 0.8233 time= 0.0630

Epoch: 0296 train_loss= 0.3295 train_acc= 0.9786 val_loss= 0.7235 val_acc= 0.8167 time= 0.0623

Epoch: 0297 train_loss= 0.3285 train_acc= 0.9857 val_loss= 0.7224 val_acc= 0.8167 time= 0.0640

Epoch: 0298 train_loss= 0.3286 train_acc= 0.9857 val_loss= 0.7217 val_acc= 0.8100 time= 0.0660

Epoch: 0299 train_loss= 0.3284 train_acc= 0.9786 val_loss= 0.7219 val_acc= 0.8133 time= 0.0650

Epoch: 0300 train_loss= 0.3284 train_acc= 0.9786 val_loss= 0.7220 val_acc= 0.8133 time= 0.0640

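Test set results: loss= 0.7690 accuracy= 0.8090

We monitored the loss and accuracy over the whole training run and plotted them together for comparison; the plotting code above saves this figure as `gcn_train.png`.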
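Note that each `train_acc`, `val_acc`, and test value in the log is computed only over the node indices of that split, even though the model predicts a class distribution for every node in the graph. The `evaluate_preds` helper is not shown in this post; the sketch below is a hypothetical stand-in that illustrates this kind of masked evaluation on made-up predictions.

```python
import numpy as np


def masked_metrics(preds, labels, idx, eps=1e-9):
    """Cross-entropy and accuracy restricted to the nodes listed in idx.

    preds:  (N, C) predicted class probabilities for every node
    labels: (N, C) one-hot labels
    idx:    indices of the nodes that belong to this split
    """
    p = preds[idx]
    y = labels[idx]
    loss = -np.mean(np.sum(y * np.log(p + eps), axis=1))
    acc = np.mean(np.argmax(p, axis=1) == np.argmax(y, axis=1))
    return loss, acc


preds = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4],
                  [0.6, 0.3, 0.1]])
labels = np.eye(3)[[0, 1, 0, 2]]   # one-hot labels for 4 nodes
train_idx, val_idx = [0, 1], [2, 3]

print(masked_metrics(preds, labels, train_idx))
print(masked_metrics(preds, labels, val_idx))
```

This is also why the training accuracy in the log moves in coarse steps: it is averaged over a small set of labeled training nodes.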

If you're interested, give it a try yourself!