环境说明

Ubuntu 18+conda环境python3.9+cuda10.2,硬件平台是Jetson tx2 nx

前提你已经能运行YOLOV5代码后,再配置tensorRT进行加速。

目前只试了图片检测和C++打开USB摄像头进行视频检测,希望是使用python配合D435i深度相机来实现检测,后续再更新。

一、安装TensorRT

- 安装git和cmake(已经安装了忽略这一步)

sudo apt-get install libpython3-dev python3-numpy

- 克隆源码

连接不上就挂个梯子

git clone https://github.com/dusty-nv/jetson-inference

- 安装附属文件

git submodule update --init

- 添加jetson-inference需要的包

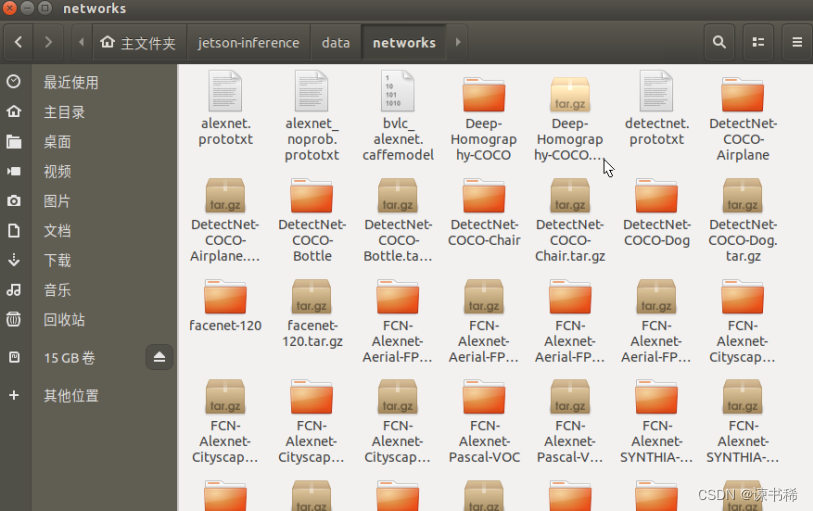

- 先下载相关包:百度网盘分享 提取码:s75z

下载后拷贝到jetson tx2中,将所有包复制到刚刚克隆的jetson-inference下的data/networks

- 然后cd进入到data/networks文件夹,解压相关包

cd jetson-inference/data/networks

for tar in *.tar.gz; do tar xvf $tar; done

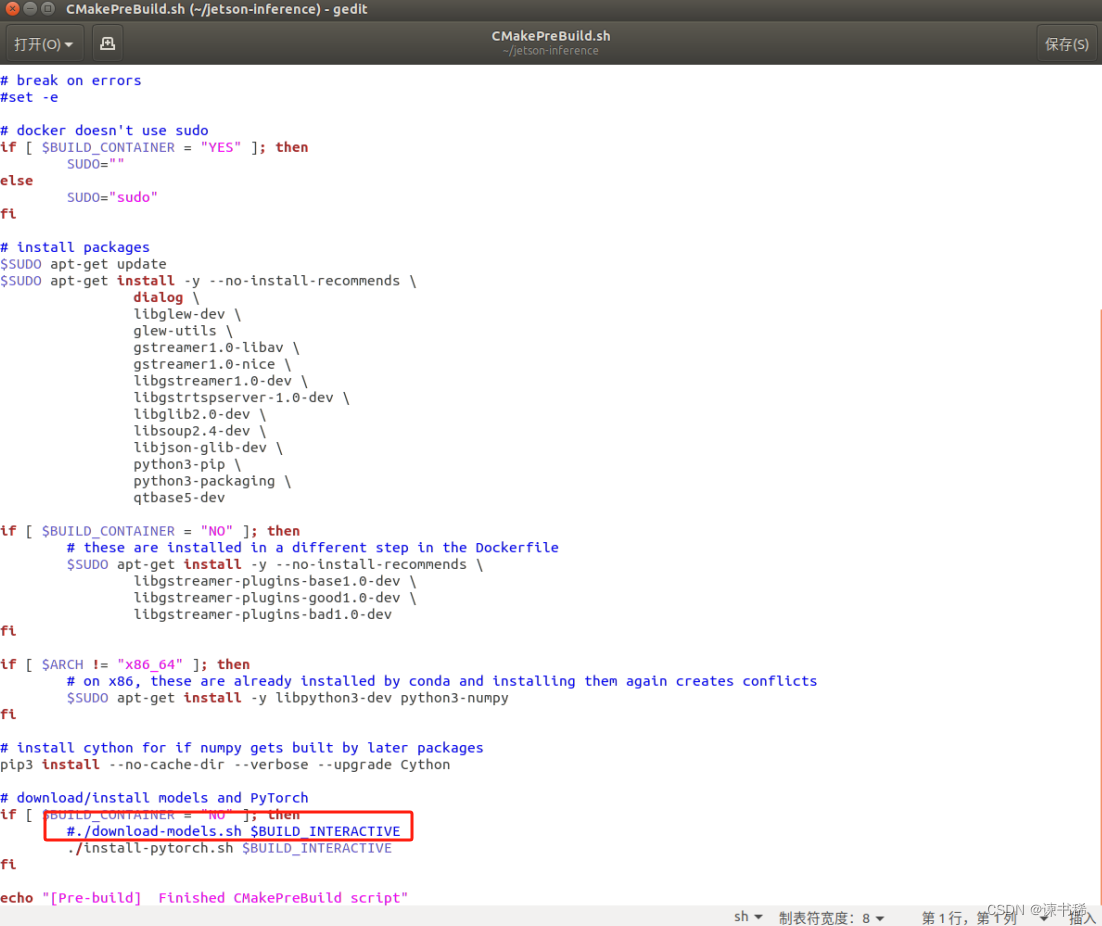

- 再编辑 jetson-inference/CMakePrebuild.sh文件,把./download-models.sh 注释掉

- 编译

- 在 jetson-inference文件夹里面创建build文件夹

mkdir build

- 进入build中进行cmake

cd build

cmake ../

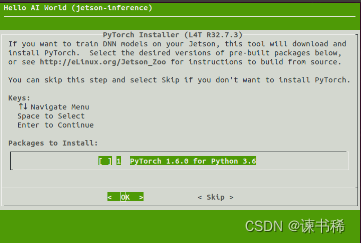

运行过程中弹出该页面跳过即可

cmake过程中报错,克隆不成功,就删除jetson-inference文件夹,再试一遍

- cmake成功后

# 可能有点慢,耐心等待

make

make成功后

sudo make install

- 测试

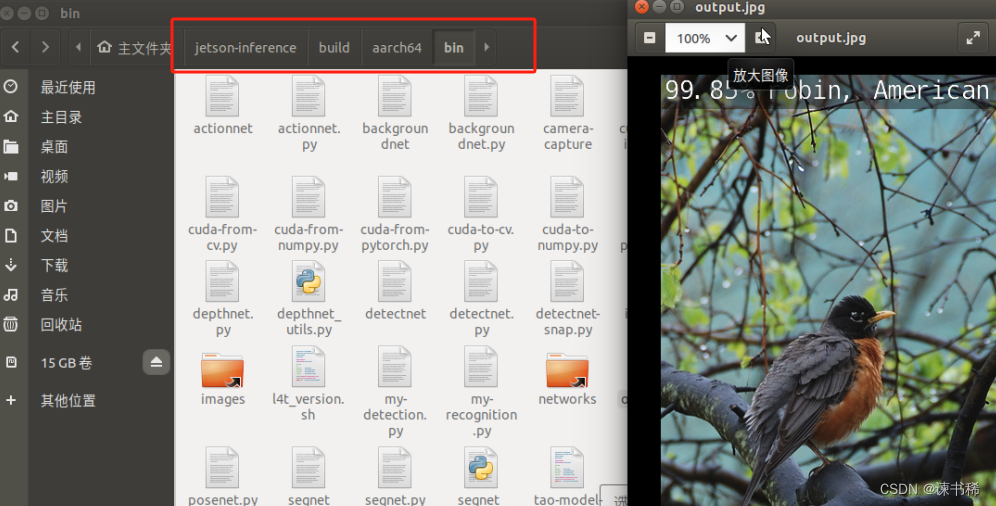

安装成功后,进行测试

cd jetson-inference/build/aarch64/bin

./imagenet-console ./images/bird_0.jpg output.jpg

参考博主:https://blog.csdn.net/qq_42078934/article/details/129669965?spm=1001.2014.3001.5506

- 如果出现在下载Googlenet.tar.gz相关,并且最终下载失败,报错,需要先中断执行;

- 然后在networks文件夹中新建Googlenet文件夹,将networks文件夹中的bvlc_googlenet.caffemodel、googlenet.prototxt和googlenet_noprob.prototxt剪切到新建的Googlenet文件夹;

- 最后在Googlenet文件夹新建networks文件夹,将ilsvrc12_synset_words.txt剪切到新建networks文件夹

再次进行测试:

二、TensorRT加速YOLOV5

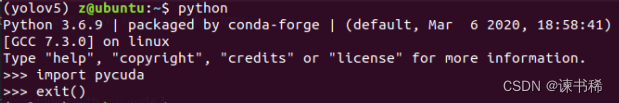

- 安装pycuda包

这个包是使用python编写加速的一个包,本文还只实验了C++版本的,但可以先把这个包安装上

python3 -m pip install 'pycuda<2020.1'

用这个命令直接安装的2019.1版本的,网上有些教程是下载包再进行安装,我这样发现安装的包在虚拟环境中用不了,建议直接进入到需要安装的虚拟环境中,直接用这条命令进行安装。

安装完后测试:

- 下载tensorrt的YOLOV5代码

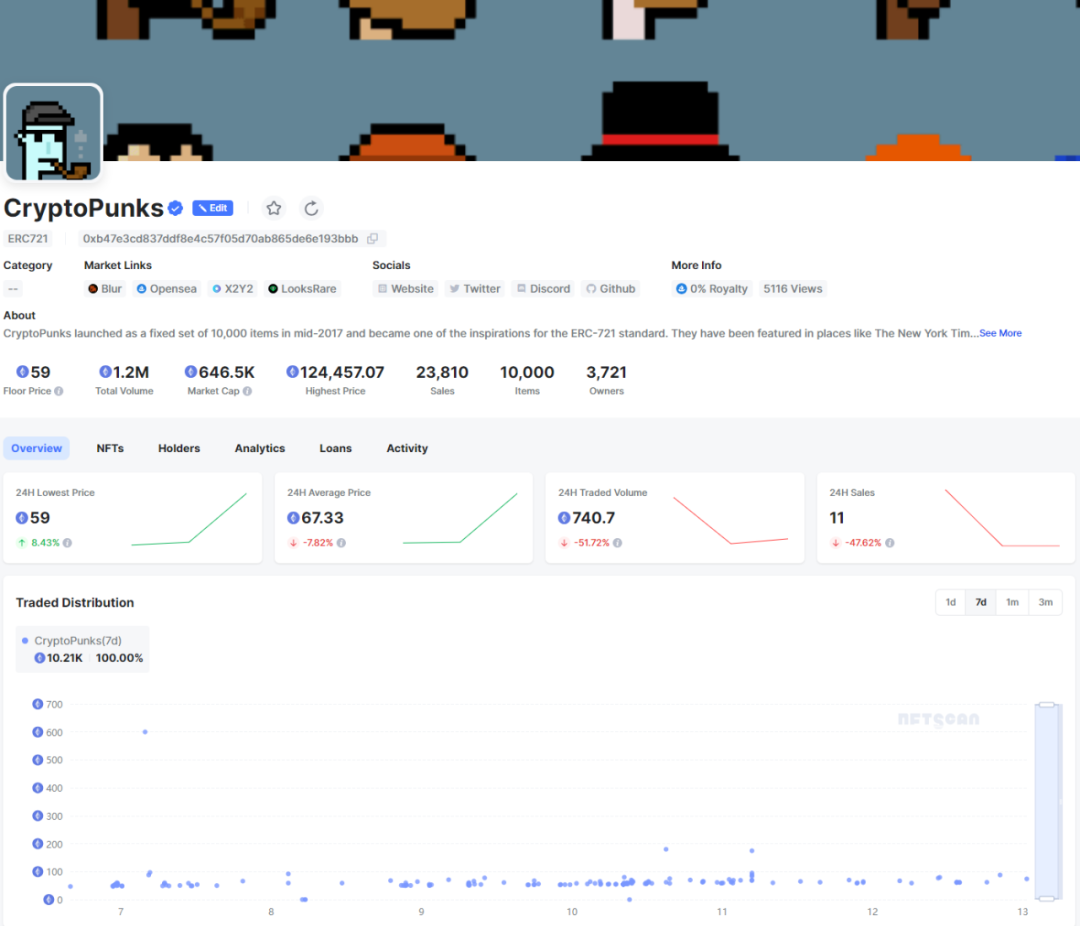

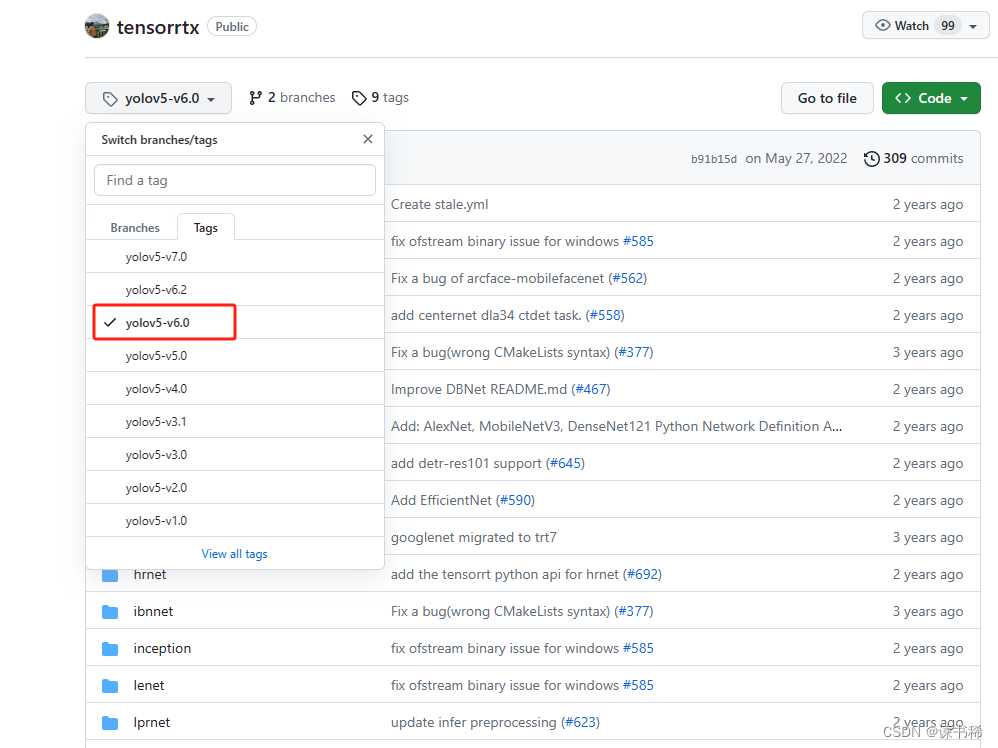

这里我是想把自己训练好的模型,用tensorrt做一个加速,自己训练模型的yolov5版本是6.0,所以这里也下载6.0版本的tensorrt yolov5。下载链接

- 生成wts文件

自己训练的模型是.pt,这里先转换成.wts文件。

- 把刚刚下载的tensorrt yolo文件中yolov5下的gen_wts.py复制到你自己yolov5代码的文件夹下(这里应该存放了你自己训练的.pt权重文件)

- 执行gen_wts.py生成.wts文件。

python3 gen_wts.py weights/yolov5s.pt # 后面是自己的权重的名字

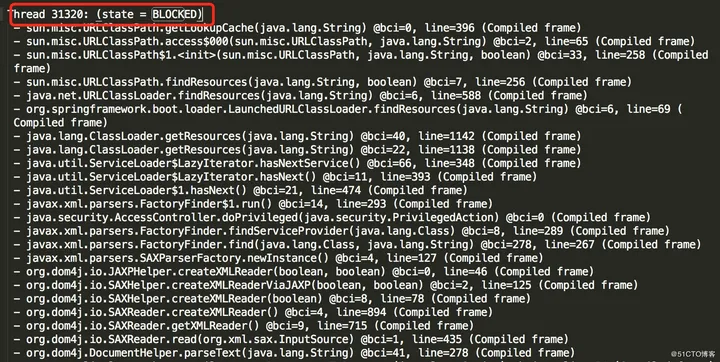

这里如果遇到报错,参考解决办法:解决办法

- 生成部署引擎

- 先将yolov5s.wts文件(上一步生成的文件)放到tensorrtx-yolov5-v6.0/yolov5文件夹中。

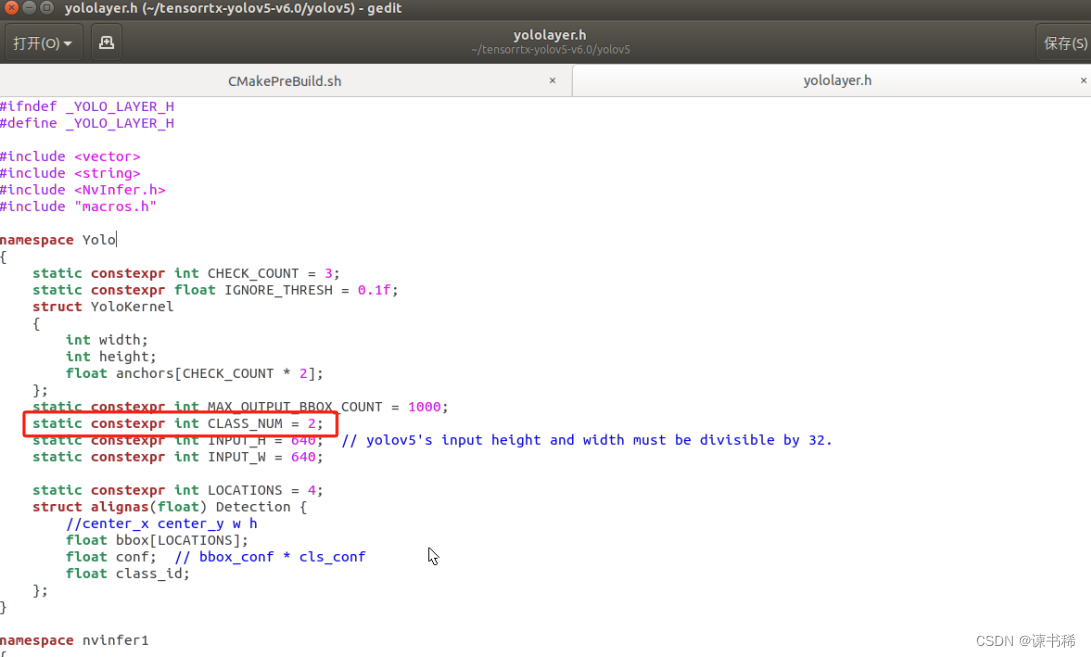

- 然后打开yololayer.h文件,修改num总数,根据你训练模型的类个数来,这里我是两类,所以改为2

- 编译相关

cd tensorrtx-yolov5-v6.0/yolov5

mkdir build

cd build

cmake ..

make

sudo ./yolov5 -s ../yolov5s.wts yolov5s.engine s

# sudo ./yolov5 -s [.wts] [.engine] [s/m/l/x/s6/m6/l6/x6 or c/c6 gd gw]

# s代表用的是yolov5s,是什么就改成什么

到这里便通过tensorrt生成了基于C++的engine部署引擎文件,后缀.engine

- 使用图片测试

将yolov5源代码的data文件夹中的images文件夹整个复制到tensorrtx/yolov5文件夹,在build文件夹里执行下面的代码。

sudo ./yolov5 -d yolov5s.engine ../samples

#sudo ./yolov5 -d [.engine] [image folder]

执行后,结果会在build中看到。如果图形没有画框,可能是因为s模型所产生的置信度一般在0.2-0.4之间,在yolov5.cpp文件中置信度conf_thresh设置在0.5,低于0.5的检测框会被排除。

- 使用USB摄像头

这里是采用的C++的版本,替换tensorrtx-yolov5-v6.0\yolov5\yolov5.cpp文件中的内容为(注意修改为自己的分类类别):

#include <iostream>

#include <chrono>

#include "cuda_utils.h"

#include "logging.h"

#include "common.hpp"

#include "utils.h"

#include "calibrator.h"

#define USE_FP16 // set USE_INT8 or USE_FP16 or USE_FP32

#define DEVICE 0 // GPU id

#define NMS_THRESH 0.4

#define CONF_THRESH 0.5

#define BATCH_SIZE 1

// stuff we know about the network and the input/output blobs

static const int INPUT_H = Yolo::INPUT_H;

static const int INPUT_W = Yolo::INPUT_W;

static const int CLASS_NUM = Yolo::CLASS_NUM;

static const int OUTPUT_SIZE = Yolo::MAX_OUTPUT_BBOX_COUNT * sizeof(Yolo::Detection) / sizeof(float) + 1; // we assume the yololayer outputs no more than MAX_OUTPUT_BBOX_COUNT boxes that conf >= 0.1

const char* INPUT_BLOB_NAME = "data";

const char* OUTPUT_BLOB_NAME = "prob";

static Logger gLogger;

//修改为自己的类别

char *my_classes[]={"person", "bicycle"};

static int get_width(int x, float gw, int divisor = 8) {

//return math.ceil(x / divisor) * divisor

if (int(x * gw) % divisor == 0) {

return int(x * gw);

}

return (int(x * gw / divisor) + 1) * divisor;

}

static int get_depth(int x, float gd) {

if (x == 1) {

return 1;

}

else {

return round(x * gd) > 1 ? round(x * gd) : 1;

}

}

//#创建engine和network

ICudaEngine* build_engine(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });

assert(data);

std::map<std::string, Weights> weightMap = loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");

auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");

auto bottleneck_CSP2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");

auto conv3 = convBlock(network, weightMap, *bottleneck_CSP2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");

auto bottleneck_csp4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");

auto conv5 = convBlock(network, weightMap, *bottleneck_csp4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");

auto bottleneck_csp6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");

auto conv7 = convBlock(network, weightMap, *bottleneck_csp6->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.7");

auto spp8 = SPP(network, weightMap, *conv7->getOutput(0), get_width(1024, gw), get_width(1024, gw), 5, 9, 13, "model.8");

/* ------ yolov5 head ------ */

auto bottleneck_csp9 = C3(network, weightMap, *spp8->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.9");

auto conv10 = convBlock(network, weightMap, *bottleneck_csp9->getOutput(0), get_width(512, gw), 1, 1, 1, "model.10");

auto upsample11 = network->addResize(*conv10->getOutput(0));

assert(upsample11);

upsample11->setResizeMode(ResizeMode::kNEAREST);

upsample11->setOutputDimensions(bottleneck_csp6->getOutput(0)->getDimensions());

ITensor* inputTensors12[] = { upsample11->getOutput(0), bottleneck_csp6->getOutput(0) };

auto cat12 = network->addConcatenation(inputTensors12, 2);

auto bottleneck_csp13 = C3(network, weightMap, *cat12->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.13");

auto conv14 = convBlock(network, weightMap, *bottleneck_csp13->getOutput(0), get_width(256, gw), 1, 1, 1, "model.14");

auto upsample15 = network->addResize(*conv14->getOutput(0));

assert(upsample15);

upsample15->setResizeMode(ResizeMode::kNEAREST);

upsample15->setOutputDimensions(bottleneck_csp4->getOutput(0)->getDimensions());

ITensor* inputTensors16[] = { upsample15->getOutput(0), bottleneck_csp4->getOutput(0) };

auto cat16 = network->addConcatenation(inputTensors16, 2);

auto bottleneck_csp17 = C3(network, weightMap, *cat16->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.17");

// yolo layer 0

IConvolutionLayer* det0 = network->addConvolutionNd(*bottleneck_csp17->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.0.weight"], weightMap["model.24.m.0.bias"]);

auto conv18 = convBlock(network, weightMap, *bottleneck_csp17->getOutput(0), get_width(256, gw), 3, 2, 1, "model.18");

ITensor* inputTensors19[] = { conv18->getOutput(0), conv14->getOutput(0) };

auto cat19 = network->addConcatenation(inputTensors19, 2);

auto bottleneck_csp20 = C3(network, weightMap, *cat19->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.20");

//yolo layer 1

IConvolutionLayer* det1 = network->addConvolutionNd(*bottleneck_csp20->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.1.weight"], weightMap["model.24.m.1.bias"]);

auto conv21 = convBlock(network, weightMap, *bottleneck_csp20->getOutput(0), get_width(512, gw), 3, 2, 1, "model.21");

ITensor* inputTensors22[] = { conv21->getOutput(0), conv10->getOutput(0) };

auto cat22 = network->addConcatenation(inputTensors22, 2);

auto bottleneck_csp23 = C3(network, weightMap, *cat22->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.23");

IConvolutionLayer* det2 = network->addConvolutionNd(*bottleneck_csp23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.2.weight"], weightMap["model.24.m.2.bias"]);

auto yolo = addYoLoLayer(network, weightMap, "model.24", std::vector<IConvolutionLayer*>{det0, det1, det2});

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16 * (1 << 20)); // 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;

assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout << "Building engine, please wait for a while..." << std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

std::cout << "Build engine successfully!" << std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for (auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

ICudaEngine* build_engine_p6(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {

INetworkDefinition* network = builder->createNetworkV2(0U);

// Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME

ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });

assert(data);

std::map<std::string, Weights> weightMap = loadWeights(wts_name);

/* ------ yolov5 backbone------ */

auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");

auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");

auto c3_2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");

auto conv3 = convBlock(network, weightMap, *c3_2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");

auto c3_4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");

auto conv5 = convBlock(network, weightMap, *c3_4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");

auto c3_6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");

auto conv7 = convBlock(network, weightMap, *c3_6->getOutput(0), get_width(768, gw), 3, 2, 1, "model.7");

auto c3_8 = C3(network, weightMap, *conv7->getOutput(0), get_width(768, gw), get_width(768, gw), get_depth(3, gd), true, 1, 0.5, "model.8");

auto conv9 = convBlock(network, weightMap, *c3_8->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.9");

auto spp10 = SPP(network, weightMap, *conv9->getOutput(0), get_width(1024, gw), get_width(1024, gw), 3, 5, 7, "model.10");

auto c3_11 = C3(network, weightMap, *spp10->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.11");

/* ------ yolov5 head ------ */

auto conv12 = convBlock(network, weightMap, *c3_11->getOutput(0), get_width(768, gw), 1, 1, 1, "model.12");

auto upsample13 = network->addResize(*conv12->getOutput(0));

assert(upsample13);

upsample13->setResizeMode(ResizeMode::kNEAREST);

upsample13->setOutputDimensions(c3_8->getOutput(0)->getDimensions());

ITensor* inputTensors14[] = { upsample13->getOutput(0), c3_8->getOutput(0) };

auto cat14 = network->addConcatenation(inputTensors14, 2);

auto c3_15 = C3(network, weightMap, *cat14->getOutput(0), get_width(1536, gw), get_width(768, gw), get_depth(3, gd), false, 1, 0.5, "model.15");

auto conv16 = convBlock(network, weightMap, *c3_15->getOutput(0), get_width(512, gw), 1, 1, 1, "model.16");

auto upsample17 = network->addResize(*conv16->getOutput(0));

assert(upsample17);

upsample17->setResizeMode(ResizeMode::kNEAREST);

upsample17->setOutputDimensions(c3_6->getOutput(0)->getDimensions());

ITensor* inputTensors18[] = { upsample17->getOutput(0), c3_6->getOutput(0) };

auto cat18 = network->addConcatenation(inputTensors18, 2);

auto c3_19 = C3(network, weightMap, *cat18->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.19");

auto conv20 = convBlock(network, weightMap, *c3_19->getOutput(0), get_width(256, gw), 1, 1, 1, "model.20");

auto upsample21 = network->addResize(*conv20->getOutput(0));

assert(upsample21);

upsample21->setResizeMode(ResizeMode::kNEAREST);

upsample21->setOutputDimensions(c3_4->getOutput(0)->getDimensions());

ITensor* inputTensors21[] = { upsample21->getOutput(0), c3_4->getOutput(0) };

auto cat22 = network->addConcatenation(inputTensors21, 2);

auto c3_23 = C3(network, weightMap, *cat22->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.23");

auto conv24 = convBlock(network, weightMap, *c3_23->getOutput(0), get_width(256, gw), 3, 2, 1, "model.24");

ITensor* inputTensors25[] = { conv24->getOutput(0), conv20->getOutput(0) };

auto cat25 = network->addConcatenation(inputTensors25, 2);

auto c3_26 = C3(network, weightMap, *cat25->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.26");

auto conv27 = convBlock(network, weightMap, *c3_26->getOutput(0), get_width(512, gw), 3, 2, 1, "model.27");

ITensor* inputTensors28[] = { conv27->getOutput(0), conv16->getOutput(0) };

auto cat28 = network->addConcatenation(inputTensors28, 2);

auto c3_29 = C3(network, weightMap, *cat28->getOutput(0), get_width(1536, gw), get_width(768, gw), get_depth(3, gd), false, 1, 0.5, "model.29");

auto conv30 = convBlock(network, weightMap, *c3_29->getOutput(0), get_width(768, gw), 3, 2, 1, "model.30");

ITensor* inputTensors31[] = { conv30->getOutput(0), conv12->getOutput(0) };

auto cat31 = network->addConcatenation(inputTensors31, 2);

auto c3_32 = C3(network, weightMap, *cat31->getOutput(0), get_width(2048, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.32");

/* ------ detect ------ */

IConvolutionLayer* det0 = network->addConvolutionNd(*c3_23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.0.weight"], weightMap["model.33.m.0.bias"]);

IConvolutionLayer* det1 = network->addConvolutionNd(*c3_26->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.1.weight"], weightMap["model.33.m.1.bias"]);

IConvolutionLayer* det2 = network->addConvolutionNd(*c3_29->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.2.weight"], weightMap["model.33.m.2.bias"]);

IConvolutionLayer* det3 = network->addConvolutionNd(*c3_32->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.3.weight"], weightMap["model.33.m.3.bias"]);

auto yolo = addYoLoLayer(network, weightMap, "model.33", std::vector<IConvolutionLayer*>{det0, det1, det2, det3});

yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);

network->markOutput(*yolo->getOutput(0));

// Build engine

builder->setMaxBatchSize(maxBatchSize);

config->setMaxWorkspaceSize(16 * (1 << 20)); // 16MB

#if defined(USE_FP16)

config->setFlag(BuilderFlag::kFP16);

#elif defined(USE_INT8)

std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;

assert(builder->platformHasFastInt8());

config->setFlag(BuilderFlag::kINT8);

Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);

config->setInt8Calibrator(calibrator);

#endif

std::cout << "Building engine, please wait for a while..." << std::endl;

ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);

std::cout << "Build engine successfully!" << std::endl;

// Don't need the network any more

network->destroy();

// Release host memory

for (auto& mem : weightMap)

{

free((void*)(mem.second.values));

}

return engine;

}

void APIToModel(unsigned int maxBatchSize, IHostMemory** modelStream, float& gd, float& gw, std::string& wts_name) {

// Create builder

IBuilder* builder = createInferBuilder(gLogger);

IBuilderConfig* config = builder->createBuilderConfig();

// Create model to populate the network, then set the outputs and create an engine

ICudaEngine* engine = build_engine(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);

assert(engine != nullptr);

// Serialize the engine

(*modelStream) = engine->serialize();

// Close everything down

engine->destroy();

builder->destroy();

config->destroy();

}

void doInference(IExecutionContext& context, cudaStream_t& stream, void** buffers, float* input, float* output, int batchSize) {

// DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host

CUDA_CHECK(cudaMemcpyAsync(buffers[0], input, batchSize * 3 * INPUT_H * INPUT_W * sizeof(float), cudaMemcpyHostToDevice, stream));

context.enqueue(batchSize, buffers, stream, nullptr);

CUDA_CHECK(cudaMemcpyAsync(output, buffers[1], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

}

bool parse_args(int argc, char** argv, std::string& engine) {

if (argc < 3) return false;

if (std::string(argv[1]) == "-v" && argc == 3) {

engine = std::string(argv[2]);

}

else {

return false;

}

return true;

}

int main(int argc, char** argv) {

cudaSetDevice(DEVICE);

//std::string wts_name = "";

std::string engine_name = "";

//float gd = 0.0f, gw = 0.0f;

//std::string img_dir;

if (!parse_args(argc, argv, engine_name)) {

std::cerr << "arguments not right!" << std::endl;

std::cerr << "./yolov5 -v [.engine] // run inference with camera" << std::endl;

return -1;

}

std::ifstream file(engine_name, std::ios::binary);

if (!file.good()) {

std::cerr << " read " << engine_name << " error! " << std::endl;

return -1;

}

char* trtModelStream{ nullptr };

size_t size = 0;

file.seekg(0, file.end);

size = file.tellg();

file.seekg(0, file.beg);

trtModelStream = new char[size];

assert(trtModelStream);

file.read(trtModelStream, size);

file.close();

// prepare input data ---------------------------

static float data[BATCH_SIZE * 3 * INPUT_H * INPUT_W];

//for (int i = 0; i < 3 * INPUT_H * INPUT_W; i++)

// data[i] = 1.0;

static float prob[BATCH_SIZE * OUTPUT_SIZE];

IRuntime* runtime = createInferRuntime(gLogger);

assert(runtime != nullptr);

ICudaEngine* engine = runtime->deserializeCudaEngine(trtModelStream, size);

assert(engine != nullptr);

IExecutionContext* context = engine->createExecutionContext();

assert(context != nullptr);

delete[] trtModelStream;

assert(engine->getNbBindings() == 2);

void* buffers[2];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine->getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine->getBindingIndex(OUTPUT_BLOB_NAME);

assert(inputIndex == 0);

assert(outputIndex == 1);

// Create GPU buffers on device

CUDA_CHECK(cudaMalloc(&buffers[inputIndex], BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float)));

CUDA_CHECK(cudaMalloc(&buffers[outputIndex], BATCH_SIZE * OUTPUT_SIZE * sizeof(float)));

// Create stream

cudaStream_t stream;

CUDA_CHECK(cudaStreamCreate(&stream));

//#读取本地视频

//cv::VideoCapture capture("/home/nano/Videos/video.mp4");

//#调用本地usb摄像头,我的默认参数为1,如果1报错,可修改为0.

cv::VideoCapture capture(0);

if (!capture.isOpened()) {

std::cout << "Error opening video stream or file" << std::endl;

return -1;

}

int key;

int fcount = 0;

while (1)

{

cv::Mat frame;

capture >> frame;

if (frame.empty())

{

std::cout << "Fail to read image from camera!" << std::endl;

break;

}

fcount++;

//if (fcount < BATCH_SIZE && f + 1 != (int)file_names.size()) continue;

for (int b = 0; b < fcount; b++) {

//cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

cv::Mat img = frame;

if (img.empty()) continue;

cv::Mat pr_img = preprocess_img(img, INPUT_W, INPUT_H); // letterbox BGR to RGB

int i = 0;

for (int row = 0; row < INPUT_H; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;

for (int col = 0; col < INPUT_W; ++col) {

data[b * 3 * INPUT_H * INPUT_W + i] = (float)uc_pixel[2] / 255.0;

data[b * 3 * INPUT_H * INPUT_W + i + INPUT_H * INPUT_W] = (float)uc_pixel[1] / 255.0;

data[b * 3 * INPUT_H * INPUT_W + i + 2 * INPUT_H * INPUT_W] = (float)uc_pixel[0] / 255.0;

uc_pixel += 3;

++i;

}

}

}

// Run inference

auto start = std::chrono::system_clock::now();//#获取模型推理开始时间

doInference(*context, stream, buffers, data, prob, BATCH_SIZE);

auto end = std::chrono::system_clock::now();//#结束时间

//std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

int fps = 1000.0 / std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();

std::vector<std::vector<Yolo::Detection>> batch_res(fcount);

for (int b = 0; b < fcount; b++) {

auto& res = batch_res[b];

nms(res, &prob[b * OUTPUT_SIZE], CONF_THRESH, NMS_THRESH);

}

for (int b = 0; b < fcount; b++) {

auto& res = batch_res[b];

//std::cout << res.size() << std::endl;

//cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

for (size_t j = 0; j < res.size(); j++) {

cv::Rect r = get_rect(frame, res[j].bbox);

cv::rectangle(frame, r, cv::Scalar(0x27, 0xC1, 0x36), 2);

std::string label = my_classes[(int)res[j].class_id];

cv::putText(frame, label, cv::Point(r.x, r.y - 1), cv::FONT_HERSHEY_PLAIN, 1.2, cv::Scalar(0xFF, 0xFF, 0xFF), 2);

std::string jetson_fps = "FPS: " + std::to_string(fps);

cv::putText(frame, jetson_fps, cv::Point(11, 80), cv::FONT_HERSHEY_PLAIN, 3, cv::Scalar(0, 0, 255), 2, cv::LINE_AA);

}

//cv::imwrite("_" + file_names[f - fcount + 1 + b], img);

}

cv::imshow("yolov5", frame);

key = cv::waitKey(1);

if (key == 'q') {

break;

}

fcount = 0;

}

capture.release();

// Release stream and buffers

cudaStreamDestroy(stream);

CUDA_CHECK(cudaFree(buffers[inputIndex]));

CUDA_CHECK(cudaFree(buffers[outputIndex]));

// Destroy the engine

context->destroy();

engine->destroy();

runtime->destroy();

return 0;

}

修改完后执行:

cd build

make

sudo ./yolov5 -v yolov5s.engine # 后面是自己生成的部署引擎文件