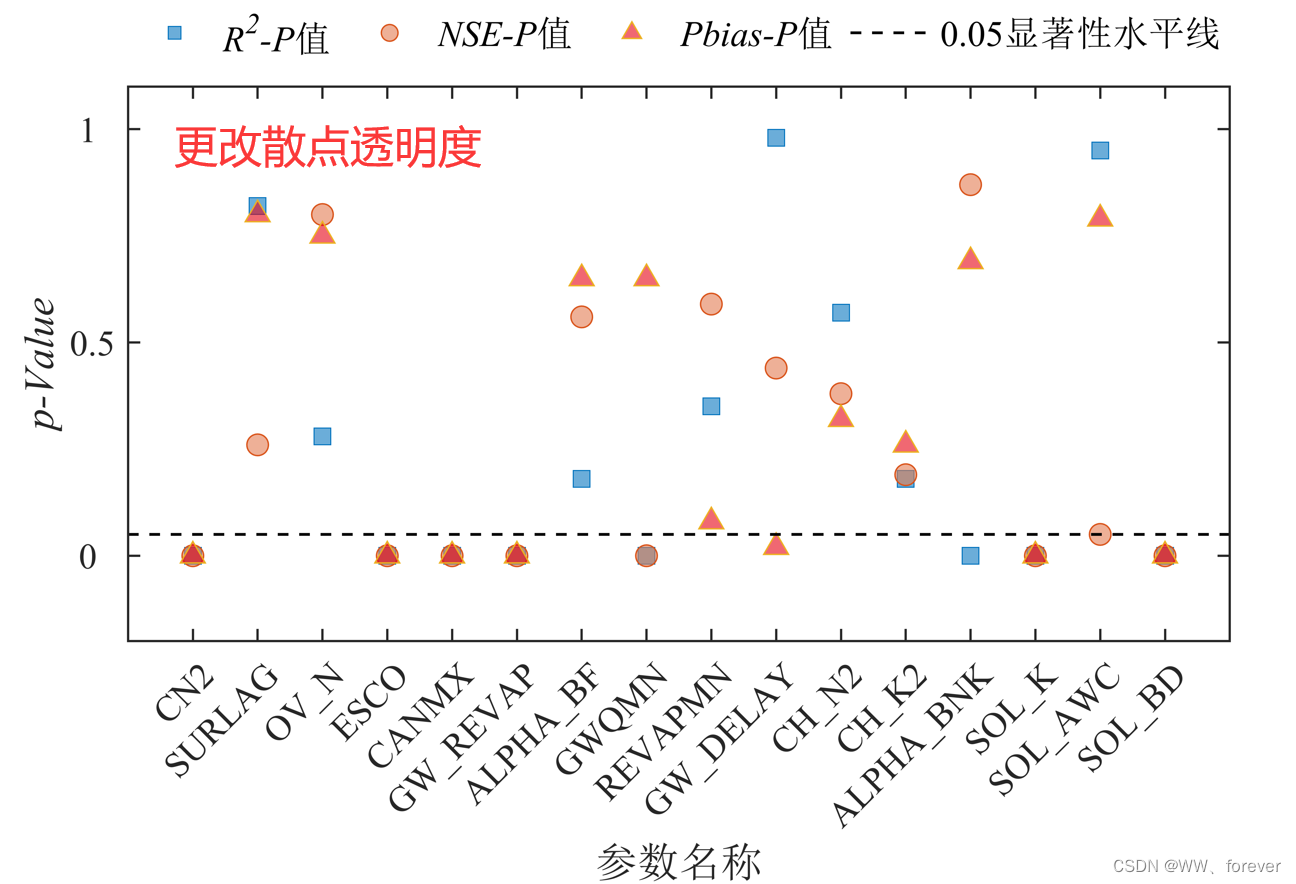

(a) Overall Mask R-CNN pipeline

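I. Mask R-CNN Architecture

I put together a diagram of the Mask R-CNN architecture; in it, the green modules are used only during inference.

The core modules are: data preprocessing, the backbone network, the region proposal network (RPN), the Fast R-CNN branch, the mask branch, and data post-processing.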

II. Core Network Flow

    import warnings
    from collections import OrderedDict
    from typing import Dict, List, Optional, Tuple, Union

    import torch
    from torch import Tensor, nn


    class FasterRCNNBase(nn.Module):
        def __init__(self, backbone, rpn, roi_heads, transform):
            super(FasterRCNNBase, self).__init__()
            self.transform = transform
            self.backbone = backbone
            self.rpn = rpn
            self.roi_heads = roi_heads
            # used only on torchscript mode
            self._has_warned = False

        @torch.jit.unused
        def eager_outputs(self, losses, detections):
            # type: (Dict[str, Tensor], List[Dict[str, Tensor]]) -> Union[Dict[str, Tensor], List[Dict[str, Tensor]]]
            if self.training:
                return losses

            return detections

        def forward(self, images, targets=None):
            # type: (List[Tensor], Optional[List[Dict[str, Tensor]]]) -> Tuple[Dict[str, Tensor], List[Dict[str, Tensor]]]
            if self.training and targets is None:
                raise ValueError("In training mode, targets should be passed")

            if self.training:
                assert targets is not None
                for target in targets:  # check that each target's boxes are well formed
                    boxes = target["boxes"]
                    if isinstance(boxes, torch.Tensor):
                        if len(boxes.shape) != 2 or boxes.shape[-1] != 4:
                            raise ValueError("Expected target boxes to be a tensor "
                                             "of shape [N, 4], got {:}.".format(
                                                 boxes.shape))
                    else:
                        raise ValueError("Expected target boxes to be of type "
                                         "Tensor, got {:}.".format(type(boxes)))

            # Record each image's (height, width) before preprocessing
            original_image_sizes = torch.jit.annotate(List[Tuple[int, int]], [])
            for img in images:
                val = img.shape[-2:]
                assert len(val) == 2  # guard against a 1-D input
                original_image_sizes.append((val[0], val[1]))
            # original_image_sizes = [img.shape[-2:] for img in images]

            images, targets = self.transform(images, targets)  # preprocess the images

            features = self.backbone(images.tensors)  # run the backbone to get feature maps
            # Single feature level: wrap the tensor in an OrderedDict under key '0'.
            # Multiple feature levels: the backbone already returns an OrderedDict.
            if isinstance(features, torch.Tensor):
                features = OrderedDict([('0', features)])

            # Pass the feature maps and the target annotations to the RPN.
            # proposals: List[Tensor], Tensor_shape: [num_proposals, 4];
            # each proposal is in absolute coordinates, (x1, y1, x2, y2) format
            proposals, proposal_losses = self.rpn(images, features, targets)

            # Pass the RPN output and the target annotations to the second half of Fast R-CNN
            detections, detector_losses = self.roi_heads(features, proposals, images.image_sizes, targets)

            # Post-process the predictions (mainly map the bboxes back to the original image scale)
            detections = self.transform.postprocess(detections, images.image_sizes, original_image_sizes)

            losses = {}
            losses.update(detector_losses)
            losses.update(proposal_losses)

            if torch.jit.is_scripting():
                if not self._has_warned:
                    warnings.warn("RCNN always returns a (Losses, Detections) tuple in scripting")
                    self._has_warned = True
                return losses, detections
            else:
                return self.eager_outputs(losses, detections)

            # if self.training:
            #     return losses
            #
            # return detections

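FasterRCNNBase is the base class of the R-CNN detectors. The FasterRCNN class inherits from FasterRCNNBase, and the MaskRCNN class in turn inherits from FasterRCNN, so when you instantiate a model and call it on input data, it is FasterRCNNBase's forward function that runs: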

    model = MaskRCNN(backbone, num_classes)
    model(images, targets)
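To make the dispatch concrete, here is a toy sketch in plain Python with no PyTorch dependency. All class names (`ToyModule`, `ToyRCNNBase`, `ToyFasterRCNN`, `ToyMaskRCNN`) are hypothetical stand-ins, and `ToyModule.__call__` is a heavily simplified imitation of what `nn.Module` does when a model is called. It only illustrates that a subclass which defines no `forward` of its own ends up running the base class's version.

```python
class ToyModule:
    """Simplified stand-in for nn.Module: calling the object runs forward()."""

    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)


class ToyRCNNBase(ToyModule):
    """Plays the role of FasterRCNNBase: the only class that defines forward()."""

    def forward(self, images, targets=None):
        return f"{type(self).__name__} ran ToyRCNNBase.forward on {len(images)} image(s)"


class ToyFasterRCNN(ToyRCNNBase):
    """Plays the role of FasterRCNN: assembles components, defines no forward()."""


class ToyMaskRCNN(ToyFasterRCNN):
    """Plays the role of MaskRCNN: adds mask parts, still defines no forward()."""


model = ToyMaskRCNN()
result = model(["img0", "img1"])
print(result)
# The method resolution order shows where forward() is looked up.
print([cls.__name__ for cls in ToyMaskRCNN.__mro__])
```

Python walks the method resolution order from `ToyMaskRCNN` upward and stops at the first class that defines `forward`, which here is the base class.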

The `__init__()` function of FasterRCNNBase:

    def __init__(self, backbone, rpn, roi_heads, transform):
        super(FasterRCNNBase, self).__init__()
        self.transform = transform
        self.backbone = backbone
        self.rpn = rpn
        self.roi_heads = roi_heads
        # used only on torchscript mode
        self._has_warned = False

The constructor arguments are:

(1) backbone: resnet50, resnet101, resnet50+fpn, or resnet101+fpn

(2) rpn: the region proposal network

(3) roi_heads: box roi pooling/align, two MLP head, box predictor, mask roi pool, mask head, mask predictor

(4) transform: an instance of the GeneralizedRCNNTransform class, used for data preprocessing
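Besides preprocessing, the forward code also calls `self.transform.postprocess(...)`, which mainly maps the predicted boxes back to the original image scale. The snippet below is a minimal sketch of that rescaling in plain Python, assuming boxes in (x1, y1, x2, y2) format and sizes given as (height, width). The function name `rescale_boxes` and the sample numbers are made up for illustration, and this is not the library's actual implementation.

```python
def rescale_boxes(boxes, resized_size, original_size):
    """Map (x1, y1, x2, y2) boxes from the resized image back to the original.

    Both sizes are (height, width) tuples, matching img.shape[-2:].
    """
    ratio_h = original_size[0] / resized_size[0]
    ratio_w = original_size[1] / resized_size[1]
    # x coordinates scale with the width ratio, y coordinates with the height ratio.
    return [
        (x1 * ratio_w, y1 * ratio_h, x2 * ratio_w, y2 * ratio_h)
        for x1, y1, x2, y2 in boxes
    ]


original_size = (400, 600)   # (height, width) recorded before preprocessing
resized_size = (800, 1200)   # (height, width) after preprocessing
predicted = [(100.0, 50.0, 300.0, 250.0)]  # box predicted on the resized image

restored = rescale_boxes(predicted, resized_size, original_size)
print(restored)  # [(50.0, 25.0, 150.0, 125.0)]
```

This is why `forward` records `original_image_sizes` before calling the transform: without them, the ratios between the resized and original images could not be recovered afterwards.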

The `forward()` function of FasterRCNNBase:

    def forward(self, images, targets=None):
        # type: (List[Tensor], Optional[List[Dict[str, Tensor]]]) -> Tuple[Dict[str, Tensor], List[Dict[str, Tensor]]]
        if self.training and targets is None:
            raise ValueError("In training mode, targets should be passed")

        if self.training:
            assert targets is not None
            for target in targets:  # check that each target's boxes are well formed
                boxes = target["boxes"]
                if isinstance(boxes, torch.Tensor):
                    if len(boxes.shape) != 2 or boxes.shape[-1] != 4:
                        raise ValueError("Expected target boxes to be a tensor "
                                         "of shape [N, 4], got {:}.".format(
                                             boxes.shape))
                else:
                    raise ValueError("Expected target boxes to be of type "
                                     "Tensor, got {:}.".format(type(boxes)))

        # Record each image's (height, width) before preprocessing
        original_image_sizes = torch.jit.annotate(List[Tuple[int, int]], [])
        for img in images:
            val = img.shape[-2:]
            assert len(val) == 2  # guard against a 1-D input
            original_image_sizes.append((val[0], val[1]))
        # original_image_sizes = [img.shape[-2:] for img in images]

        images, targets = self.transform(images, targets)  # preprocess the images

        features = self.backbone(images.tensors)  # run the backbone to get feature maps
        # Single feature level: wrap the tensor in an OrderedDict under key '0'.
        # Multiple feature levels: the backbone already returns an OrderedDict.
        if isinstance(features, torch.Tensor):
            features = OrderedDict([('0', features)])

        # Pass the feature maps and the target annotations to the RPN.
        # proposals: List[Tensor], Tensor_shape: [num_proposals, 4];
        # each proposal is in absolute coordinates, (x1, y1, x2, y2) format
        proposals, proposal_losses = self.rpn(images, features, targets)

        # Pass the RPN output and the target annotations to the second half of Fast R-CNN
        detections, detector_losses = self.roi_heads(features, proposals, images.image_sizes, targets)

        # Post-process the predictions (mainly map the bboxes back to the original image scale)
        detections = self.transform.postprocess(detections, images.image_sizes, original_image_sizes)

        losses = {}
        losses.update(detector_losses)
        losses.update(proposal_losses)

        if torch.jit.is_scripting():
            if not self._has_warned:
                warnings.warn("RCNN always returns a (Losses, Detections) tuple in scripting")
                self._has_warned = True
            return losses, detections
        else:
            return self.eager_outputs(losses, detections)

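First, a few sanity checks make sure the input data matches the format the model expects. Next, images and targets are passed through transform for preprocessing. The images are then fed to the backbone, which produces the feature maps (features). The features, images, and targets go into the RPN, which returns proposals and proposal_losses. The proposals, features, image sizes, and targets are then passed to roi_heads, which returns detections and detector_losses, and the detections are post-processed back to the original image scale. In training mode the model returns the losses (proposal_losses and detector_losses merged into one dict); in inference mode it returns detections.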
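The data flow described above can be sketched with stub components in plain Python. Everything here is hypothetical: the `toy_*` functions stand in for the real transform, backbone, RPN, and ROI heads, their signatures are simplified (no image sizes, no post-processing), and the loss names and values are made up for illustration. The point is only the order of the calls and the switch between returning losses and returning detections.

```python
from collections import OrderedDict


def toy_transform(images, targets):
    # Stand-in for preprocessing: returns the inputs unchanged.
    return images, targets


def toy_backbone(images):
    # Stand-in for feature extraction: one fake feature per image.
    return [f"feat({name})" for name in images]


def toy_rpn(images, features, targets):
    # One fixed (x1, y1, x2, y2) proposal per image; losses only when targets exist.
    proposals = [[(0, 0, 10, 10)] for _ in images]
    losses = {"loss_objectness": 0.5, "loss_rpn_box_reg": 0.1} if targets else {}
    return proposals, losses


def toy_roi_heads(features, proposals, targets):
    # Turn each proposal list into a detection dict; losses only when targets exist.
    detections = [{"boxes": boxes} for boxes in proposals]
    losses = {"loss_classifier": 0.7, "loss_box_reg": 0.2} if targets else {}
    return detections, losses


def toy_forward(images, targets=None, training=True):
    if training and targets is None:
        raise ValueError("In training mode, targets should be passed")
    images, targets = toy_transform(images, targets)
    features = toy_backbone(images)
    if not isinstance(features, OrderedDict):
        # A single feature level is wrapped under the key "0".
        features = OrderedDict([("0", features)])
    proposals, proposal_losses = toy_rpn(images, features, targets)
    detections, detector_losses = toy_roi_heads(features, proposals, targets)
    losses = {}
    losses.update(detector_losses)
    losses.update(proposal_losses)
    # Training returns the merged losses, inference returns the detections.
    return losses if training else detections


train_out = toy_forward(["a.png"], targets=[{"boxes": [(0, 0, 10, 10)]}])
eval_out = toy_forward(["a.png"], training=False)
print(sorted(train_out))
print(eval_out)
```

Calling `toy_forward(["a.png"])` with the default `training=True` and no targets raises the same `ValueError` as the first check in the real `forward`.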