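Standard convolution

Consider a 5×5-pixel, three-channel input (shape 5×5×3) passed through a convolutional layer with 3×3 kernels. If the layer has 4 output channels, the kernel tensor has shape 3×3×3×4 and the layer produces 4 feature maps. With same padding the feature maps keep the input size (5×5); without padding they shrink to 3×3.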
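The numbers in this example can be checked with a few lines of plain Python. This is only a sketch: the helper names conv_output_size and conv_params are made up for illustration, and bias terms are ignored.

```python
def conv_output_size(n, k, s=1, p=0):
    """Spatial size after a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def conv_params(c_in, c_out, k):
    """Weight count of a standard k×k convolution (bias ignored)."""
    return k * k * c_in * c_out


same = conv_output_size(5, 3, s=1, p=1)   # same padding for a 3×3 kernel means p=1
valid = conv_output_size(5, 3, s=1, p=0)  # no padding
weights = conv_params(c_in=3, c_out=4, k=3)
print(same, valid, weights)  # 5 3 108
```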

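Depthwise separable convolution

- Depthwise Convolution (per-channel convolution)

In Depthwise Convolution, each kernel is responsible for exactly one channel, and each channel is convolved by exactly one kernel.

Take a 5×5-pixel, three-channel color image (shape 5×5×3) as input. Depthwise Convolution is the first of the two convolution steps, and it operates entirely within the 2D plane of each channel. The number of kernels equals the number of channels in the previous layer (channels and kernels correspond one to one), so a three-channel image yields 3 feature maps (5×5 each if same padding is used, matching the input), as shown in the figure below.

After Depthwise Convolution, the number of feature maps equals the number of input channels, so this step cannot change the channel count. Because each input channel is convolved independently, it also makes no use of the information that different channels carry at the same spatial position. Pointwise Convolution is therefore needed to combine these feature maps into new ones.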
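To make the one-kernel-per-channel rule concrete, here is a small pure-Python sketch of the depthwise step. The function names are illustrative, not part of any library, and for brevity it uses no padding, so a 5×5 input gives 3×3 outputs rather than the 5×5 you get with same padding.

```python
def conv2d_single(channel, kernel):
    """Valid (no padding) 2D cross-correlation of one channel with one kernel."""
    k = len(kernel)
    out_size = len(channel) - k + 1
    return [
        [
            sum(channel[i + di][j + dj] * kernel[di][dj]
                for di in range(k) for dj in range(k))
            for j in range(out_size)
        ]
        for i in range(out_size)
    ]


def depthwise_conv(image, kernels):
    """Apply kernels[c] to image[c] only; channels are never mixed."""
    assert len(image) == len(kernels), "one kernel per input channel"
    return [conv2d_single(ch, kr) for ch, kr in zip(image, kernels)]


# Three 5×5 channels filled with 1, 2 and 3; three 3×3 all-ones kernels.
image = [[[float(c + 1)] * 5 for _ in range(5)] for c in range(3)]
kernels = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]

out = depthwise_conv(image, kernels)
print(len(out), len(out[0]), len(out[0][0]))     # 3 3 3 (channels, height, width)
print(out[0][0][0], out[1][0][0], out[2][0][0])  # 9.0 18.0 27.0
```

Each output channel depends only on its own input channel, which is why the channel count cannot change at this stage.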

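- Pointwise Convolution (1×1 convolution)

Pointwise Convolution works much like a standard convolution, except that each kernel has size 1×1×M, where M is the number of channels in the previous layer. It forms a weighted combination of the previous step's feature maps along the depth direction to produce new feature maps, one output feature map per kernel.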
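The weighted combination along the depth direction can be sketched in pure Python as follows. The function name pointwise_conv and the toy weights are illustrative assumptions; each row of weights plays the role of one 1×1×M kernel.

```python
def pointwise_conv(feature_maps, weights):
    """1×1 convolution: weights[o][c] scales input channel c for output channel o."""
    m = len(feature_maps)
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [
            [sum(w_o[c] * feature_maps[c][i][j] for c in range(m))
             for j in range(width)]
            for i in range(height)
        ]
        for w_o in weights
    ]


# Three 2×2 input maps filled with 1, 2 and 3; four 1×1×3 kernels.
maps = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(3)]
weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]

mixed = pointwise_conv(maps, weights)
print(len(mixed))                  # 4
print([fm[0][0] for fm in mixed])  # [1.0, 2.0, 3.0, 6.0]
```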

With 4 kernels, Pointwise Convolution also outputs 4 feature maps, the same output dimensions as the standard convolution above.
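The output shape is the same, but the weight count is not. A quick count for the running example (3×3 kernels, 3 input channels, 4 output channels, bias ignored) can be written as:

```python
k, c_in, c_out = 3, 3, 4

standard = k * k * c_in * c_out   # 3*3*3*4
depthwise = k * k * c_in          # one 3×3 kernel per input channel
pointwise = 1 * 1 * c_in * c_out  # four 1×1×3 kernels
separable = depthwise + pointwise

print(standard, depthwise, pointwise, separable)  # 108 27 12 39
print(round(separable / standard, 3))             # 0.361
```

For this toy layer the separable version needs roughly a third of the weights; the gap widens as the number of output channels grows.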

The code for Conv, Bottleneck, and C3 in YOLOv5s is as follows:

Original common.py

import torch
import torch.nn as nn


class Conv(nn.Module):
    # Standard convolution block: one conv, one BN, one activation.
    # The activation defaults to SiLU and can be overridden by passing a module;
    # when disabled, nn.Identity() is used as a placeholder that returns its input.
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):  # ch_in, ch_out, kernel, stride, padding, groups
        super(Conv, self).__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        return self.act(self.conv(x))


class Bottleneck(nn.Module):
    # Standard bottleneck (residual block)
    def __init__(self, c1, c2, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, shortcut, groups, expansion
        super(Bottleneck, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c_, c2, 3, 1, g=g)
        self.add = shortcut and c1 == c2

    def forward(self, x):  # add the skip connection if shortcut is set and input/output channels match
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class C3(nn.Module):
    # CSP Bottleneck with 3 convolutions
    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, number, shortcut, groups, expansion
        super(C3, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = Conv(2 * c_, c2, 1)  # act=FReLU(c2)
        self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])  # n residual blocks (Bottleneck)
        # self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

    def forward(self, x):
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))
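Conv calls an autopad helper that the listing above does not show. In upstream YOLOv5 releases of this era it is defined roughly as below; this is reproduced from memory (newer releases also take a dilation argument), so check it against your own copy of common.py.

```python
def autopad(k, p=None):
    """Return 'same' padding for kernel size k unless p is given explicitly."""
    if p is None:
        # Half the kernel size, per dimension if k is a tuple or list.
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]
    return p


print(autopad(1), autopad(3), autopad(3, 0), autopad((3, 5)))  # 0 1 0 [1, 2]
```

With stride 1 and an odd kernel size, this padding keeps the spatial size unchanged, which is the same-padding case described earlier.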

1. Lightweight C3 module

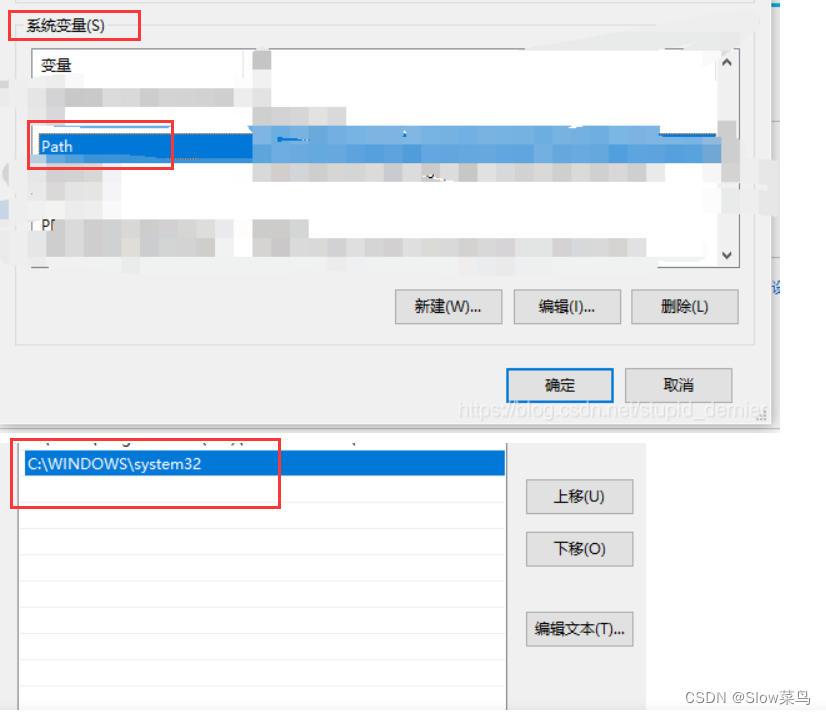

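Modify models/common.py along the following lines:

The idea behind the lightweight C3 is to replace every standard convolution in the original C3 module with a depthwise separable convolution and leave the rest of the structure unchanged. The resulting DP_Conv, DP_Bottleneck, and DP_C3 are: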

class DP_Conv(nn.Module):
    # Depthwise separable convolution block: 3×3 depthwise conv, 1×1 pointwise conv, BN, activation.
    # Note: k, p and g are accepted only to keep the same signature as Conv; they are not used.
    # The depthwise step is always 3×3 with stride 1, and the stride s is applied in the pointwise step.
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):  # ch_in, ch_out, kernel, stride, padding, groups
        super(DP_Conv, self).__init__()
        self.conv1 = nn.Conv2d(c1, c1, kernel_size=3, stride=1, padding=1, groups=c1)  # depthwise
        self.conv2 = nn.Conv2d(c1, c2, kernel_size=1, stride=s)  # pointwise
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

    def forward(self, x):
        return self.act(self.bn(self.conv2(self.conv1(x))))

    def fuseforward(self, x):
        # DP_Conv has no self.conv; run both convolutions and skip BN
        return self.act(self.conv2(self.conv1(x)))


class DP_Bottleneck(nn.Module):
    # Bottleneck (residual block) built from DP_Conv
    def __init__(self, c1, c2, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, shortcut, groups, expansion
        super(DP_Bottleneck, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = DP_Conv(c1, c_, 1)
        self.cv2 = DP_Conv(c_, c2, 1)
        self.add = shortcut and c1 == c2

    def forward(self, x):  # add the skip connection if shortcut is set and input/output channels match
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class DP_C3(nn.Module):
    # CSP Bottleneck with 3 convolutions, built from DP_Conv
    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, number, shortcut, groups, expansion
        super(DP_C3, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = DP_Conv(c1, c_, 1)
        self.cv2 = DP_Conv(c1, c_, 1)
        self.cv3 = DP_Conv(2 * c_, c2, 1)  # act=FReLU(c2)
        self.m = nn.Sequential(*[DP_Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])  # n residual blocks (DP_Bottleneck)
        # self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

    def forward(self, x):
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))
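As a rough check on where the savings come from, the learnable parameters of a Conv block and a DP_Conv block can be counted by hand. The sketch below assumes the classes exactly as written in this article: Conv uses bias=False, DP_Conv leaves the nn.Conv2d bias at its default (enabled), and BatchNorm2d contributes a weight and a bias per channel. The helper names are illustrative.

```python
def conv_block_params(c1, c2, k):
    """Conv: k×k convolution without bias, plus BatchNorm weight and bias."""
    return k * k * c1 * c2 + 2 * c2


def dp_conv_block_params(c1, c2):
    """DP_Conv: 3×3 depthwise and 1×1 pointwise conv (both with bias), plus BatchNorm."""
    depthwise = 3 * 3 * c1 + c1
    pointwise = c1 * c2 + c2
    return depthwise + pointwise + 2 * c2


c1 = c2 = 64
print(conv_block_params(c1, c2, k=3))  # 36992
print(conv_block_params(c1, c2, k=1))  # 4224
print(dp_conv_block_params(c1, c2))    # 4928
```

Under these assumptions the reduction comes from the layers that were 3×3 in the original (the second convolution in Bottleneck and the stride-2 downsampling layers). Where DP_Conv replaces a 1×1 Conv, the count goes up slightly because a depthwise step is added in front of it.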

2. Add the DP_C3.yaml file

Add it to the models/ directory.

# parameters
nc: 80  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

# anchors
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, DP_Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, DP_Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, DP_C3, [128]],
   [-1, 1, DP_Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 9, DP_C3, [256]],
   [-1, 1, DP_Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, DP_C3, [512]],
   [-1, 1, DP_Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, DP_C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 head
head:
  [[-1, 1, DP_Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4 (PANet uses add, YOLOv5 uses concat)
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, DP_Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

   [-1, 1, DP_Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 20 (P4/16-medium)

   [-1, 1, DP_Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 23 (P5/32-large)

   [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5); must be the last layer, since much of the original code assumes Detect comes last
  ]
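The P1/2 to P5/32 comments in the backbone record the cumulative downsampling factor. A small sketch that walks the backbone list and multiplies the strides reproduces them; the 640-pixel input size is an assumption for illustration and is not specified in this article.

```python
# (module, stride) for the backbone layers above; DP_C3 and SPPF keep the resolution.
backbone = [
    ("DP_Conv", 2), ("DP_Conv", 2), ("DP_C3", 1), ("DP_Conv", 2), ("DP_C3", 1),
    ("DP_Conv", 2), ("DP_C3", 1), ("DP_Conv", 2), ("DP_C3", 1), ("SPPF", 1),
]

img_size = 640  # assumed input size
total_stride = 1
sizes = []
for name, stride in backbone:
    total_stride *= stride
    sizes.append(img_size // total_stride)

print(sizes)         # [320, 160, 160, 80, 80, 40, 40, 20, 20, 20]
print(total_stride)  # 32
```

Layers 4, 6 and 9 therefore run at strides 8, 16 and 32, and layers 4 and 6 are the ones the head concatenates with as backbone P3 and P4.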

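3. yolo.py configuration

In models/yolo.py, find the loop for i, (f, n, m, args) in enumerate(d['backbone'] + d['head']): inside the parse_model() function and add DP_Conv, DP_Bottleneck, and DP_C3 to the module set, so that parse_model() passes them the input and output channel arguments. The names must match the class names defined in common.py exactly (DP_Bottleneck, not DP_BottleNeck). Attention and CondConv in the set below are not defined in this article; keep them only if your common.py defines them.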

if m in {
        Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,
        BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, Attention, CondConv,
        DP_Conv, DP_Bottleneck, DP_C3}:
    c1, c2 = ch[f], args[0]
    if c2 != no:  # if not output
        c2 = make_divisible(c2 * gw, 8)

    args = [c1, c2, *args[1:]]
    if m in {BottleneckCSP, C3, C3TR, C3Ghost, C3x, DP_C3}:
        args.insert(2, n)  # number of repeats
        n = 1
elif m is nn.BatchNorm2d:
    args = [ch[f]]
elif m is Concat:
    c2 = sum(ch[x] for x in f)
# TODO: channel, gw, gd
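To see what parse_model() does with the values in DP_C3.yaml, the channel and repeat scaling can be reproduced in isolation. This is a sketch: make_divisible follows the upstream helper as I recall it, the repeat scaling happens earlier in parse_model() and is not part of the excerpt above, and the names scaled_channels and scaled_repeats are made up for illustration.

```python
import math


def make_divisible(x, divisor):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


gd, gw = 0.33, 0.50  # depth_multiple and width_multiple from DP_C3.yaml


def scaled_channels(c2):
    """Output channels after applying width_multiple."""
    return make_divisible(c2 * gw, 8)


def scaled_repeats(n):
    """Module repeats after applying depth_multiple (at least 1)."""
    return max(round(n * gd), 1) if n > 1 else n


print([scaled_channels(c) for c in (64, 128, 256, 512, 1024)])  # [32, 64, 128, 256, 512]
print([scaled_repeats(n) for n in (1, 3, 9)])                   # [1, 1, 3]
```

So a line such as [-1, 9, DP_C3, [512]] ends up as a DP_C3 with 256 output channels and 3 DP_Bottleneck blocks.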

4. Train the model

python train.py --cfg DP_C3.yaml