SpringCloud-Alibaba-Seata

Note: JDK 1.8 is strongly recommended. The author ran into problems when integrating Seata with JDK 17!

Deploying Seata 1.5.2 with Docker

- 1: Pull the Seata 1.5.2 image:

docker pull seataio/seata-server:1.5.2

- 2: Run the following SQL on MySQL to create the tables Seata needs:

-- Create a database named seata

CREATE DATABASE seata;

-- Switch to the seata database

USE seata;

-- -------------------------------- The script used when storeMode is 'db' --------------------------------

-- the table to store GlobalSession data

CREATE TABLE IF NOT EXISTS `global_table`

(

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`status` TINYINT NOT NULL,

`application_id` VARCHAR(32),

`transaction_service_group` VARCHAR(32),

`transaction_name` VARCHAR(128),

`timeout` INT,

`begin_time` BIGINT,

`application_data` VARCHAR(2000),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`xid`),

KEY `idx_status_gmt_modified` (`status` , `gmt_modified`),

KEY `idx_transaction_id` (`transaction_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

-- the table to store BranchSession data

CREATE TABLE IF NOT EXISTS `branch_table`

(

`branch_id` BIGINT NOT NULL,

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`resource_group_id` VARCHAR(32),

`resource_id` VARCHAR(256),

`branch_type` VARCHAR(8),

`status` TINYINT,

`client_id` VARCHAR(64),

`application_data` VARCHAR(2000),

`gmt_create` DATETIME(6),

`gmt_modified` DATETIME(6),

PRIMARY KEY (`branch_id`),

KEY `idx_xid` (`xid`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

-- the table to store lock data

CREATE TABLE IF NOT EXISTS `lock_table`

(

`row_key` VARCHAR(128) NOT NULL,

`xid` VARCHAR(128),

`transaction_id` BIGINT,

`branch_id` BIGINT NOT NULL,

`resource_id` VARCHAR(256),

`table_name` VARCHAR(32),

`pk` VARCHAR(36),

`status` TINYINT NOT NULL DEFAULT '0' COMMENT '0:locked ,1:rollbacking',

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`row_key`),

KEY `idx_status` (`status`),

KEY `idx_branch_id` (`branch_id`),

KEY `idx_xid_and_branch_id` (`xid` , `branch_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

CREATE TABLE IF NOT EXISTS `distributed_lock`

(

`lock_key` CHAR(20) NOT NULL,

`lock_value` VARCHAR(20) NOT NULL,

`expire` BIGINT,

primary key (`lock_key`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('AsyncCommitting', ' ', 0);

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('RetryCommitting', ' ', 0);

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('RetryRollbacking', ' ', 0);

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('TxTimeoutCheck', ' ', 0);

- 3: Start a temporary Seata container so that its default configuration files can be copied out:

docker run -d --name seata-server-1.5.2 -p 8091:8091 -p 7091:7091 seataio/seata-server:1.5.2

- 4: Create a directory on the host for the Seata configuration files:

mkdir -p /usr/local/seata1.5.2/

- 5: Copy the configuration out of the container:

docker cp seata-server-1.5.2:/seata-server/resources /usr/local/seata1.5.2/

- 6: Delete the original application.yml from the resources directory on the host:

rm -f /usr/local/seata1.5.2/resources/application.yml

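- 7: Recreate application.yml and fill in the registry and config center settings:

vi /usr/local/seata1.5.2/resources/application.yml

The contents are shown below. Adjust these points:

- Change 1: set config.nacos.server-addr to the ip:port of the Nacos config center.

- Change 2: set config.nacos.username to the Nacos config center account.

- Change 3: set config.nacos.password to the Nacos config center password.

- Change 4: set registry.nacos.server-addr to the ip:port of the Nacos registry.

- Change 5: set registry.nacos.cluster to the cluster name. The Seata configuration in later steps must reference this same cluster name.

- Change 6: set registry.nacos.username to the Nacos registry account.

- Change 7: set registry.nacos.password to the Nacos registry password.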

server:
  port: 7091

spring:
  application:
    name: seata-server

# Logging
logging:
  config: classpath:logback-spring.xml
  file:
    path: ${user.home}/logs/seata

# Account/password for the Seata web console
console:
  user:
    username: seata
    password: seata

seata:
  config:
    # support: nacos, consul, apollo, zk, etcd3
    type: nacos
    nacos:
      server-addr: 192.168.184.100:7747 # Change 1: ip:port of the Nacos config center
      # namespace must stay empty (public), otherwise registration with Nacos fails
      namespace:
      group: SEATA_GROUP # Seata group
      username: nacos # Change 2: Nacos config center account
      password: nacos # Change 3: Nacos config center password
      # This data-id must also be created in Nacos (step 8)
      data-id: seataServer.properties # data-id of Seata's config file in the Nacos config center
  # Register Seata with the Nacos registry
  registry:
    # support: nacos, eureka, redis, zk, consul, etcd3, sofa
    type: nacos
    preferred-networks: 30.240.*
    nacos:
      application: seata-server
      server-addr: 192.168.184.100:7747 # Change 4: ip:port of the Nacos registry
      group: SEATA_GROUP # Seata group
      # namespace must stay empty (public), otherwise registration with Nacos fails
      namespace:
      cluster: default # Change 5: cluster name
      username: nacos # Change 6: Nacos registry account
      password: nacos # Change 7: Nacos registry password
  #  server:
  #    service-port: 8091 #If not configured, the default is '${server.port} + 1000'
  security:
    secretKey: SeataSecretKey0c382ef121d778043159209298fd40bf3850a017
    tokenValidityInMilliseconds: 1800000
    ignore:
      urls: /,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/api/v1/auth/login

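- 8: In the Nacos config center, create a configuration named seataServer.properties with the contents below. It must go in the SEATA_GROUP group of the public namespace; according to the author, Seata could not register with Nacos from any other namespace.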

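- Change 1: set store.db.dbType to the database type (mysql by default).

- Change 2: set store.db.driverClassName to the fully qualified JDBC driver class (for MySQL 8.0 this is com.mysql.cj.jdbc.Driver).

- Change 3: set store.db.url to the database URL. For MySQL, you only need to change the IP address, the port and the seata database name in the URL.

- Change 4: set store.db.user to the database user name.

- Change 5: set store.db.password to the database password.

- Change 6: adjust service.vgroupMapping.springcloud-alibaba-demo-group=default. Only the transaction group name and the Seata cluster name need changing.

- The format is service.vgroupMapping.<transaction group>=<Seata cluster>. The transaction group is usually the name of the top-level Spring Cloud project followed by "-group". The Seata cluster is the value of registry.nacos.cluster in Seata's application.yml.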

store.mode=db

store.lock.mode=db

store.session.mode=db

store.db.datasource=druid

# Change 1: database type

store.db.dbType=mysql

# Change 2: fully qualified JDBC driver class

store.db.driverClassName=com.mysql.cj.jdbc.Driver

# Change 3: database URL

store.db.url=jdbc:mysql://192.168.184.100:3308/seata?useUnicode=true&rewriteBatchedStatements=true

# Change 4: database user name

store.db.user=root

# Change 5: database password

store.db.password=123456

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.distributedLockTable=distributed_lock

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

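# Change 6: transaction group mapping, format service.vgroupMapping.<transaction group (usually the top-level Spring Cloud project name + "-group")>=<Seata cluster (registry.nacos.cluster in Seata's application.yml)>. Here springcloud-alibaba-demo is the top-level project name and default is the Seata cluster name.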

service.vgroupMapping.springcloud-alibaba-demo-group=default

################ Everything below can stay at its default value ######################

#Transaction rule configuration, only for the server

server.recovery.committingRetryPeriod=1000

server.recovery.asynCommittingRetryPeriod=1000

server.recovery.rollbackingRetryPeriod=1000

server.recovery.timeoutRetryPeriod=1000

server.maxCommitRetryTimeout=-1

server.maxRollbackRetryTimeout=-1

server.rollbackRetryTimeoutUnlockEnable=false

server.distributedLockExpireTime=10000

server.xaerNotaRetryTimeout=60000

server.session.branchAsyncQueueSize=5000

server.session.enableBranchAsyncRemove=false

#Transaction rule configuration, only for the client

client.rm.asyncCommitBufferLimit=10000

client.rm.lock.retryInterval=10

client.rm.lock.retryTimes=30

client.rm.lock.retryPolicyBranchRollbackOnConflict=true

client.rm.reportRetryCount=5

client.rm.tableMetaCheckEnable=true

client.rm.tableMetaCheckerInterval=60000

client.rm.sqlParserType=druid

client.rm.reportSuccessEnable=false

client.rm.sagaBranchRegisterEnable=false

client.rm.sagaJsonParser=fastjson

client.rm.tccActionInterceptorOrder=-2147482648

client.tm.commitRetryCount=5

client.tm.rollbackRetryCount=5

client.tm.defaultGlobalTransactionTimeout=60000

client.tm.degradeCheck=false

client.tm.degradeCheckAllowTimes=10

client.tm.degradeCheckPeriod=2000

client.tm.interceptorOrder=-2147482648

client.undo.dataValidation=true

client.undo.logSerialization=jackson

client.undo.onlyCareUpdateColumns=true

server.undo.logSaveDays=7

server.undo.logDeletePeriod=86400000

client.undo.logTable=undo_log

client.undo.compress.enable=true

client.undo.compress.type=zip

client.undo.compress.threshold=64k

#For TCC transaction mode

tcc.fence.logTableName=tcc_fence_log

tcc.fence.cleanPeriod=1h

#Log rule configuration, for client and server

log.exceptionRate=100

#Metrics configuration, only for the server

metrics.enabled=false

metrics.registryType=compact

metrics.exporterList=prometheus

metrics.exporterPrometheusPort=9898

transport.type=TCP

transport.server=NIO

transport.heartbeat=true

transport.enableTmClientBatchSendRequest=false

transport.enableRmClientBatchSendRequest=true

transport.enableTcServerBatchSendResponse=false

transport.rpcRmRequestTimeout=30000

transport.rpcTmRequestTimeout=30000

transport.rpcTcRequestTimeout=30000

transport.threadFactory.bossThreadPrefix=NettyBoss

transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker

transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler

transport.threadFactory.shareBossWorker=false

transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector

transport.threadFactory.clientSelectorThreadSize=1

transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread

transport.threadFactory.bossThreadSize=1

transport.threadFactory.workerThreadSize=default

transport.shutdown.wait=3

transport.serialization=seata

transport.compressor=none
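The vgroupMapping entry above links a client's seata.tx-service-group to a Seata cluster. The sketch below performs that lookup on the properties text in plain Java. It is a hypothetical helper: VgroupResolver and its methods are invented for illustration and are not Seata's own code. The only assumption is the key format service.vgroupMapping.<transaction group> described in step 8.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Optional;
import java.util.Properties;

// Hypothetical helper: resolves a transaction group to a Seata cluster name
// the way the service.vgroupMapping.<group> key is meant to be read.
final class VgroupResolver {
    private static final String PREFIX = "service.vgroupMapping.";

    /** Parses properties text such as seataServer.properties. */
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    /** Returns the cluster mapped to the transaction group, or empty if there is no mapping. */
    static Optional<String> clusterFor(Properties props, String txServiceGroup) {
        String cluster = props.getProperty(PREFIX + txServiceGroup);
        if (cluster == null || cluster.trim().isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(cluster.trim());
    }

    public static void main(String[] args) {
        Properties props = load("service.vgroupMapping.springcloud-alibaba-demo-group=default\n");
        // The client's seata.tx-service-group must match the key suffix exactly
        System.out.println(clusterFor(props, "springcloud-alibaba-demo-group").orElse("<no mapping>"));
        System.out.println(clusterFor(props, "springcloud-alibaba-demo").orElse("<no mapping>"));
    }
}
```

In the example, the second lookup leaves out the "-group" suffix and finds nothing. A client whose tx-service-group does not match the key exactly has no cluster to connect to. This mismatch is a common reason a client fails to reach the TC.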

- 9: Remove the temporary Seata container created in step 3:

docker rm -f seata-server-1.5.2

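- 10: Start the Seata container again, this time mounting the edited configuration directory:

- Change 1: set SEATA_IP to the IP address of the host running the Seata container. Seata registers this address with Nacos, so clients must be able to reach it.

- Change 2: set SEATA_PORT to the port Seata exposes to clients (can be left unchanged).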

docker run -d \

--name seata-server-1.5.2 \

--restart=always \

-p 8091:8091 \

-p 7091:7091 \

-e SEATA_IP=192.168.184.100 \

-e SEATA_PORT=8091 \

-v /usr/local/seata1.5.2/resources/:/seata-server/resources \

seataio/seata-server:1.5.2

- 11: Follow the Seata container logs to check that it started successfully:

docker logs -f seata-server-1.5.2
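Besides reading the logs, you can check from another machine that both published ports answer. Below is a minimal JDK-only probe. It assumes the host IP 192.168.184.100 and the ports 8091 (TC service) and 7091 (console) used above. SeataPortCheck is an illustrative name.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Quick TCP reachability probe for the ports published by the Seata container.
final class SeataPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isPortOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable or timed out
        }
    }

    public static void main(String[] args) {
        // Assumption: the Docker host IP from the docker run command above
        String host = args.length > 0 ? args[0] : "192.168.184.100";
        int[] ports = {8091, 7091}; // TC service port, web console port
        for (int port : ports) {
            System.out.printf("%s:%d -> %s%n", host, port,
                    isPortOpen(host, port, 2000) ? "open" : "closed");
        }
    }
}
```

An open port only shows that something is listening. The Nacos service list is the better confirmation that registration worked: seata-server should appear in the SEATA_GROUP group.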

Seata + MyBatis-Plus + Nacos hands-on project (AT mode) ⭐

Database setup

Create the order_db database

CREATE DATABASE order_db;

USE order_db;

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

DROP TABLE IF EXISTS `order`;

CREATE TABLE `order` (

`id` bigint(0) NOT NULL,

`product_id` bigint(0) NOT NULL,

`count` int(0) NOT NULL,

`create_time` datetime(0) NOT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

DROP TABLE IF EXISTS `undo_log`;

CREATE TABLE `undo_log` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(0) NOT NULL,

`xid` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`context` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(0) NOT NULL,

`log_created` datetime(0) NOT NULL,

`log_modified` datetime(0) NOT NULL,

`ext` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE,

UNIQUE INDEX `ux_undo_log`(`xid`, `branch_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

SET FOREIGN_KEY_CHECKS = 1;

Create the product_db database

CREATE DATABASE product_db;

USE product_db;

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

DROP TABLE IF EXISTS `product`;

CREATE TABLE `product` (

`id` bigint(0) NOT NULL,

`product_name` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`price` decimal(10, 2) NOT NULL,

`number` int(0) NOT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

INSERT INTO `product` VALUES (1001, 'iPhone14 pro max', 7999.00, 100);

DROP TABLE IF EXISTS `undo_log`;

CREATE TABLE `undo_log` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(0) NOT NULL,

`xid` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`context` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(0) NOT NULL,

`log_created` datetime(0) NOT NULL,

`log_modified` datetime(0) NOT NULL,

`ext` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE,

UNIQUE INDEX `ux_undo_log`(`xid`, `branch_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

SET FOREIGN_KEY_CHECKS = 1;

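Run the following SQL in every database that takes part in a distributed transaction. It creates the undo_log rollback table. The order_db and product_db scripts above already include it, so this is only needed for any additional databases: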

CREATE TABLE `undo_log` (

`id` bigint NOT NULL AUTO_INCREMENT,

`branch_id` bigint NOT NULL,

`xid` varchar(100) CHARACTER SET utf8mb3 COLLATE utf8mb3_general_ci NOT NULL,

`context` varchar(128) CHARACTER SET utf8mb3 COLLATE utf8mb3_general_ci NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE,

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`) USING BTREE

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb3 ROW_FORMAT=DYNAMIC;
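In AT mode, each branch writes a row to undo_log in the same local transaction as its business SQL. The rollback_info column holds the row's before-image and after-image. During a global rollback, the branch first checks that the row still equals the after-image (client.undo.dataValidation=true in seataServer.properties). It then restores the before-image. The sketch below models those two steps with plain maps. UndoSketch and its methods are hypothetical and much simpler than Seata's real undo executors.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Simplified model of AT-mode undo; hypothetical, not Seata's undo executor.
final class UndoSketch {

    /** Builds a row image (column -> value) from alternating name/value arguments, keeping column order. */
    static Map<String, Object> row(Object... namesAndValues) {
        Map<String, Object> image = new LinkedHashMap<>();
        for (int i = 0; i + 1 < namesAndValues.length; i += 2) {
            image.put((String) namesAndValues[i], namesAndValues[i + 1]);
        }
        return image;
    }

    /** Rollback is only safe if nobody changed the row after the branch committed (no dirty write). */
    static boolean canRollback(Map<String, Object> currentRow, Map<String, Object> afterImage) {
        return Objects.equals(currentRow, afterImage);
    }

    /** Builds an UPDATE restoring the before-image; bind values are appended to params in order. */
    static String buildUndoUpdate(String table, String pkColumn,
                                  Map<String, Object> beforeImage, List<Object> params) {
        List<String> assignments = new ArrayList<>();
        for (Map.Entry<String, Object> column : beforeImage.entrySet()) {
            if (!column.getKey().equals(pkColumn)) {
                assignments.add(column.getKey() + " = ?");
                params.add(column.getValue());
            }
        }
        params.add(beforeImage.get(pkColumn));
        return "UPDATE " + table + " SET " + String.join(", ", assignments)
                + " WHERE " + pkColumn + " = ?";
    }

    public static void main(String[] args) {
        // descNumber changed product 1001 from number = 100 to number = 99
        Map<String, Object> before = row("id", 1001L, "number", 100);
        Map<String, Object> after = row("id", 1001L, "number", 99);
        Map<String, Object> current = row("id", 1001L, "number", 99);
        if (canRollback(current, after)) {
            List<Object> params = new ArrayList<>();
            System.out.println(buildUndoUpdate("product", "id", before, params) + " " + params);
        }
    }
}
```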

pom.xml of the top-level project

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<!-- Spring Boot version -->

<spring.boot.version>2.6.11</spring.boot.version>

<!-- Spring Cloud version -->

<spring.cloud.version>2021.0.4</spring.cloud.version>

<!-- Spring Cloud Alibaba version -->

<spring.cloud.alibaba.version>2021.0.4.0</spring.cloud.alibaba.version>

</properties>

<!-- Dependency management -->

<dependencyManagement>

<dependencies>

<!-- Spring Boot dependencies -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring.boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- Spring Cloud dependencies -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring.cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- Spring Cloud Alibaba dependencies -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring.cloud.alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

Code shared by the seata-order and seata-product microservices

DataSourceProxyConfig.class

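package com.cloud.alibaba.config;

import com.alibaba.druid.pool.DruidDataSource;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

@Configuration
public class DataSourceProxyConfig {

    // Binds spring.datasource.* (url, username, password, driver-class-name) onto a DruidDataSource.
    // With seata.enable-auto-data-source-proxy=true, Seata wraps this bean in its DataSourceProxy,
    // which is what lets AT mode write undo_log records.
    // Note: this bean replaces the one created by druid-spring-boot-starter, so the pool settings
    // under spring.datasource.druid are probably not applied to it.
    @Bean
    @ConfigurationProperties(prefix = "spring.datasource")
    public DataSource druidDataSource() {
        return new DruidDataSource();
    }
}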

FeignConfig.class

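package com.cloud.alibaba.config;

import feign.Logger;
import org.springframework.context.annotation.Bean;

// Deliberately not annotated with @Configuration: it is referenced from
// @FeignClient(configuration = FeignConfig.class), so it only applies to that client.
public class FeignConfig {

    // FULL logs the headers, body and metadata of every request. The output only appears
    // when the Feign client's logger is at DEBUG (see logging.level in application.yml).
    @Bean
    public Logger.Level feignLoggerLevel() {
        return Logger.Level.FULL;
    }
}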

ResponseType.enum

package com.cloud.alibaba.enums;

public enum ResponseType {

    SUCCESS(200, "Request succeeded"),

    ERROR(500, "Request failed");

    // Enum constants are shared singletons, so their fields are kept immutable
    private final int code;

    private final String message;

    ResponseType(int code, String message) {
        this.code = code;
        this.message = message;
    }

    public int getCode() {
        return code;
    }

    public String getMessage() {
        return message;
    }
}

ResponseResult.class

package com.cloud.alibaba.utils;

import com.cloud.alibaba.enums.ResponseType;
import com.fasterxml.jackson.annotation.JsonInclude;
import lombok.Data;
import lombok.experimental.Accessors;

@JsonInclude(JsonInclude.Include.NON_NULL) // null fields are not serialized
@Data
@Accessors(chain = true)
public class ResponseResult<T> {

    /**
     * Response status code
     */
    private Integer code;

    /**
     * Message describing the status code
     */
    private String msg;

    /**
     * Data returned to the front end
     */
    private T data;

    public ResponseResult() {
    }

    public ResponseResult(Integer code, String msg) {
        this.code = code;
        this.msg = msg;
    }

    public ResponseResult(Integer code, T data) {
        this.code = code;
        this.data = data;
    }

    public ResponseResult(Integer code, String msg, T data) {
        this.code = code;
        this.msg = msg;
        this.data = data;
    }

    // Generic factory method: wraps data in a success response
    public static <D> ResponseResult<D> ok(D data) {
        return new ResponseResult<D>()
                .setCode(ResponseType.SUCCESS.getCode())
                .setMsg(ResponseType.SUCCESS.getMessage())
                .setData(data);
    }

    // Generic factory method: wraps data in a failure response
    public static <D> ResponseResult<D> fail(D data) {
        return new ResponseResult<D>()
                .setCode(ResponseType.ERROR.getCode())
                .setMsg(ResponseType.ERROR.getMessage())
                .setData(data);
    }
}

SnowId.class

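package com.cloud.alibaba.utils;

import com.github.yitter.contract.IdGeneratorOptions;
import com.github.yitter.idgen.YitIdHelper;

public class SnowId {

    // WorkerId = 1. Every running instance that generates IDs needs its own WorkerId,
    // otherwise two instances can produce the same ID.
    private static final IdGeneratorOptions options = new IdGeneratorOptions((short) 1);

    static {
        // SeqBitLength defaults to 6: generating 500k IDs took about 8 seconds.
        // With 10 it took only about 0.5 seconds, a large improvement.
        options.SeqBitLength = 10;
        YitIdHelper.setIdGenerator(options);
    }

    // Call this to generate a distributed ID.
    public static long nextId() {
        return YitIdHelper.nextId();
    }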

}
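The 8 s versus 0.5 s figures in the comment follow roughly from how many sequence numbers fit into one millisecond. The back-of-the-envelope sketch below assumes yitter's default MinSeqNumber of 5, meaning sequence numbers 0 to 4 are reserved. It also ignores yitter's drift mechanism, which can borrow future milliseconds. SeqBitMath is an illustrative name.

```java
// Rough throughput bound for a snowflake-style generator.
// ASSUMPTION: yitter's default MinSeqNumber = 5 (sequence numbers 0-4 reserved);
// drift (borrowing future milliseconds) is ignored.
final class SeqBitMath {
    static final int MIN_SEQ_NUMBER = 5;

    /** Usable IDs per millisecond for a given SeqBitLength. */
    static int idsPerMillisecond(int seqBitLength) {
        int maxSeq = (1 << seqBitLength) - 1;
        return maxSeq - MIN_SEQ_NUMBER + 1;
    }

    /** Minimum milliseconds needed to emit count IDs without drifting (ceiling division). */
    static long minMillis(long count, int seqBitLength) {
        long perMs = idsPerMillisecond(seqBitLength);
        return (count + perMs - 1) / perMs;
    }

    public static void main(String[] args) {
        for (int bits : new int[]{6, 10}) {
            System.out.printf("SeqBitLength=%d: %d IDs/ms, at least %d ms for 500k IDs%n",
                    bits, idsPerMillisecond(bits), minMillis(500_000, bits));
        }
    }
}
```

Under these assumptions, 500,000 IDs need at least about 8.5 s with 6 bits and about 0.49 s with 10 bits, in line with the comment. A longer sequence leaves fewer bits for the worker ID and timestamp, so check yitter's documented limits before raising SeqBitLength further.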

pom.xml

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<seata.version>1.5.2</seata.version>

<druid.version>1.2.15</druid.version>

<mybatis.plus.version>3.5.2</mybatis.plus.version>

<lombok.version>1.18.24</lombok.version>

<fastjson2.version>2.0.25</fastjson2.version>

</properties>

<dependencies>

<!-- Spring Cloud Alibaba Nacos service discovery -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

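<!-- Spring Cloud Alibaba Seata. The bundled seata-spring-boot-starter is excluded and re-added below with seata.version (1.5.2) to match the Seata server -->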

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-seata</artifactId>

<exclusions>

<exclusion>

<artifactId>seata-spring-boot-starter</artifactId>

<groupId>io.seata</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-spring-boot-starter</artifactId>

<version>${seata.version}</version>

</dependency>

<!-- openFeign-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-openfeign</artifactId>

</dependency>

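<!-- Spring Cloud LoadBalancer. OpenFeign needs it to resolve spring.application.name to a service instance instead of a fixed ip:port (Ribbon is no longer included); a @LoadBalanced RestTemplate relies on it the same way -->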

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-loadbalancer</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<!-- Alibaba Druid data source -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>${druid.version}</version>

</dependency>

<!-- mybatis-plus-->

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>${mybatis.plus.version}</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

<!-- lombok-->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>${lombok.version}</version>

</dependency>

<!-- Unit testing -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- alibaba fastjson2 -->

<dependency>

<groupId>com.alibaba.fastjson2</groupId>

<artifactId>fastjson2</artifactId>

<version>${fastjson2.version}</version>

</dependency>

<!-- Snowflake drift algorithm (an enhanced snowflake ID generator) -->

<dependency>

<groupId>com.github.yitter</groupId>

<artifactId>yitter-idgenerator</artifactId>

<version>1.0.6</version>

</dependency>

</dependencies>

<!-- Maven packaging plugin -->

<build>

<finalName>${project.artifactId}</finalName>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<version>${spring.boot.version}</version>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

seata-order microservice setup ⭐

OrderController.class

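package com.cloud.alibaba.controller;

import com.cloud.alibaba.entity.Order;
import com.cloud.alibaba.service.OrderService;
import com.cloud.alibaba.utils.ResponseResult;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping(path = "/order")
public class OrderController {

    private OrderService orderService;

    @Autowired
    @Qualifier("orderServiceImpl")
    public void setOrderService(OrderService orderService) {
        this.orderService = orderService;
    }

    /**
     * Creates an order.
     *
     * @param order the order
     * @return {@link ResponseResult}<{@link String}>
     */
    @PostMapping(path = "/generateOrder")
    public ResponseResult<String> generateOrder(@RequestBody Order order) {
        // Deliberate ArithmeticException that simulates a failure in this branch: the caller
        // (seata-product) receives an HTTP 500, and the global transaction rolls back the stock
        // it has already deducted. Remove this line for a normal run.
        int i = 10 / 0;
        return orderService.generateOrder(order) ?
                ResponseResult.ok("Order created") : ResponseResult.fail("Failed to create order");
    }
}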

Order.class

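package com.cloud.alibaba.entity;

import com.baomidou.mybatisplus.annotation.TableField;
import com.baomidou.mybatisplus.annotation.TableId;
import com.baomidou.mybatisplus.annotation.TableName;
import com.fasterxml.jackson.databind.annotation.JsonSerialize;
import com.fasterxml.jackson.databind.ser.std.ToStringSerializer;
import lombok.AllArgsConstructor;
import lombok.Builder;
import lombok.Data;
import lombok.NoArgsConstructor;
import lombok.experimental.Accessors;

import java.io.Serializable;
import java.time.LocalDateTime;

@Data
@AllArgsConstructor
@NoArgsConstructor
@TableName("`order`") // order is a reserved word in MySQL, so the table name is quoted
@Builder
@Accessors(chain = true)
public class Order implements Serializable {

    private static final long serialVersionUID = 1L;

    // Long IDs are serialized as strings so that JavaScript clients do not lose precision
    @JsonSerialize(using = ToStringSerializer.class)
    @TableId("id")
    private Long id;

    @JsonSerialize(using = ToStringSerializer.class)
    @TableField("product_id")
    private Long productId;

    /**
     * Number of units bought in this order
     */
    @TableField("count")
    private Integer count;

    @TableField("create_time")
    private LocalDateTime createTime;
}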

OrderMapper.class

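package com.cloud.alibaba.mapper;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import com.cloud.alibaba.entity.Order;
import org.apache.ibatis.annotations.Mapper;
import org.springframework.stereotype.Repository;

@Mapper
@Repository
public interface OrderMapper extends BaseMapper<Order> {
}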

OrderService.class

package com.cloud.alibaba.service;

import com.baomidou.mybatisplus.extension.service.IService;
import com.cloud.alibaba.entity.Order;

public interface OrderService extends IService<Order> {

    boolean generateOrder(Order order);
}

OrderServiceImpl.class

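package com.cloud.alibaba.service.impl;

import com.baomidou.mybatisplus.extension.service.impl.ServiceImpl;
import com.cloud.alibaba.entity.Order;
import com.cloud.alibaba.mapper.OrderMapper;
import com.cloud.alibaba.service.OrderService;
import com.cloud.alibaba.utils.SnowId;
import org.springframework.stereotype.Service;

import java.time.LocalDateTime;

@Service
public class OrderServiceImpl extends ServiceImpl<OrderMapper, Order> implements OrderService {

    @Override
    public boolean generateOrder(Order order) {
        try {
            order.setId(SnowId.nextId())
                    .setCreateTime(LocalDateTime.now());
            this.save(order);
            return true;
        } catch (Exception e) {
            // The exception is swallowed here, so the caller only sees a failure response
            // (HTTP 200), not an error status; it must check the response code itself
            e.printStackTrace();
            return false;
        }
    }
}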

SeataOrderApplication.class

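package com.cloud.alibaba;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.client.discovery.EnableDiscoveryClient;

@EnableDiscoveryClient
@SpringBootApplication
public class SeataOrderApplication {

    public static void main(String[] args) {
        SpringApplication.run(SeataOrderApplication.class, args);
    }
}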

application.yml

server:
  port: 5288

# Spring Cloud configuration
spring:
  application:
    name: seata-order
  # MySQL 8.0 settings
  datasource:
    type: com.alibaba.druid.pool.DruidDataSource
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://192.168.184.100:3308/order_db?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false
    username: root
    password: 123456
    # Druid connection pool settings
    druid:
      # Initial number of connections
      initial-size: 5
      # Minimum number of idle connections in the pool
      minIdle: 5
      # Maximum number of active connections
      max-active: 20
      # Maximum time to wait for a connection (ms)
      max-wait: 60000
      # Interval between checks for idle connections that should be closed (ms)
      time-between-eviction-runs-millis: 60000
      # Minimum idle time before a connection can be evicted (ms)
      min-evictable-idle-time-millis: 300000
      # Maximum idle time after which a connection is always evicted (ms)
      max-evictable-idle-time-millis: 900000
      # SQL used to validate connections; the default differs per database, this one is for MySQL
      validationQuery: SELECT 1
      # When testOnBorrow is false, a borrowed connection that has been idle is validated first
      testWhileIdle: true
      # If true (default false), every connection is validated when it is borrowed
      testOnBorrow: false
      # If true (default false), every connection is validated when it is returned to the pool
      testOnReturn: false
      # Cache PreparedStatements (PSCache); this helps most on databases with cursors, such as Oracle
      poolPreparedStatements: true
      # Must be > 0 to enable PSCache; any value > 0 automatically sets poolPreparedStatements to true.
      # Druid does not suffer from Oracle's excessive PSCache memory use,
      # so this can be set higher, e.g. 100
      maxOpenPreparedStatements: 20
      # Connections within minIdle that stay idle longer than minEvictableIdleTimeMillis get a keepAlive check
      keepAlive: true
      # Built-in filters (stat must be included, otherwise SQL is not monitored)
      filters: stat,wall
      # Custom settings for the monitoring filters
      filter:
        # Druid data source statistics
        stat:
          enabled: true
          db-type: mysql
          # Log slow SQL; anything over 1 s counts as slow
          log-slow-sql: true
          slow-sql-millis: 1000
        # Wall (SQL firewall) settings
        wall:
          config:
            multi-statement-allow: true
      # WebStatFilter collects web-request monitoring data
      web-stat-filter:
        enabled: true # enable WebStatFilter
      # StatViewServlet (monitoring page) displays Druid statistics
      stat-view-servlet:
        enabled: true # enable StatViewServlet
        url-pattern: /druid/* # path of the built-in monitoring pages; the home page is /druid/index.html
        reset-enable: false # do not allow resetting the statistics
        login-username: root # monitoring page account
        login-password: 123456 # monitoring page password
        allow: # IP allowlist; empty allows everyone
        deny: # IP denylist; takes precedence over the allowlist
  cloud:
    # Nacos configuration
    nacos:
      # Nacos ip:port
      server-addr: 192.168.184.100:7747
      discovery:
        # Nacos user name
        username: nacos
        # Nacos password
        password: nacos
        # Nacos namespace
        namespace:
        # Nacos group
        group: DEFAULT_GROUP
  main:
    # Avoids the startup error when several Feign interfaces target the same microservice
    allow-bean-definition-overriding: true

# Seata configuration
seata:
  # Enable Seata
  enabled: true
  application-id: ${spring.application.name}
  # Seata transaction group. It must match the key configured in the Nacos config center:
  # service.vgroupMapping.springcloud-alibaba-demo-group=default
  # (format: service.vgroupMapping.<transaction group, usually top-level project name + "-group">=<Seata cluster>)
  tx-service-group: springcloud-alibaba-demo-group
  service:
    vgroup-mapping:
      # Key: Seata transaction group (springcloud-alibaba-demo-group); value: Seata cluster (default).
      # Mirrors service.vgroupMapping.springcloud-alibaba-demo-group=default in the Nacos config center
      springcloud-alibaba-demo-group: default
    grouplist:
      # Server IP + service port of the Seata cluster named "default" (console port 7091, service port 8091).
      # Only used when registry.type is file; with Nacos the client discovers the TC through the registry
      default: 192.168.184.100:8091
  # Enable automatic data source proxying (required for AT mode)
  enable-auto-data-source-proxy: true
  # Connect Seata to the Nacos config center
  config:
    # Config center type (nacos)
    type: nacos
    nacos:
      server-addr: ${spring.cloud.nacos.server-addr}
      # Nacos group holding the Seata config file
      group: SEATA_GROUP
      # Nacos namespace holding the Seata config file (must stay empty; a non-empty value causes problems)
      namespace:
      # Nacos account
      username: nacos
      # Nacos password
      password: nacos
      # data-id of Seata's config file in the Nacos config center
      data-id: seataServer.properties
  # Connect Seata to the Nacos registry
  registry:
    # Registry type (nacos)
    type: nacos
    nacos:
      # Application name under which the Seata server is registered in Nacos
      application: seata-server
      server-addr: ${spring.cloud.nacos.server-addr}
      # Nacos group the Seata server is registered in
      group: SEATA_GROUP
      # Nacos namespace the Seata server is registered in (must stay empty; a non-empty value causes problems)
      namespace:
      # Seata cluster name ("default" by default)
      cluster: default
      # Nacos account
      username: nacos
      # Nacos password
      password: nacos

mybatis-plus:
  configuration:
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl
  mapper-locations: classpath*:/mapper/**/*.xml

# OpenFeign client timeouts. Spring Cloud 2021.x no longer ships Ribbon, so ribbon.* keys are ignored;
# the timeouts are set on the Feign client instead
feign:
  client:
    config:
      default:
        # Connection timeout (ms)
        connectTimeout: 3000
        # Read timeout (ms)
        readTimeout: 5000

# OpenFeign logging
logging:
  level:
    com.cloud.alibaba.feign: debug

# Expose actuator endpoints
management:
  endpoints:
    web:
      exposure:
        include: "*"

seata-product microservice setup ⭐

ProductController.class

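package com.cloud.alibaba.controller;

import com.cloud.alibaba.service.ProductService;
import com.cloud.alibaba.utils.ResponseResult;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping(path = "/product")
public class ProductController {

    @Autowired
    private ProductService productService;

    @GetMapping(path = "/buyProduct/{productId}")
    public ResponseResult<String> buyProduct(@PathVariable("productId") Long productId) {
        try {
            boolean flag = productService.buyProduct(productId);
            if (flag) {
                return ResponseResult.ok("Purchase succeeded");
            }
            return ResponseResult.fail("Purchase failed");
        } catch (Exception e) {
            // By this point the global transaction has normally been rolled back inside buyProduct()
            return ResponseResult.fail("Purchase failed");
        }
    }
}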

Order.class

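package com.cloud.alibaba.dto;

import lombok.AllArgsConstructor;
import lombok.Builder;
import lombok.Data;
import lombok.NoArgsConstructor;
import lombok.experimental.Accessors;

import java.io.Serializable;
import java.time.LocalDateTime;

// Request body sent to seata-order; mirrors the fields of seata-order's Order entity
@Data
@AllArgsConstructor
@NoArgsConstructor
@Builder
@Accessors(chain = true)
public class Order implements Serializable {

    private static final long serialVersionUID = 1L;

    private Long id;

    private Long productId;

    /**
     * Number of units bought in this order
     */
    private Integer count;

    private LocalDateTime createTime;
}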

Product.class

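package com.cloud.alibaba.entity;

import com.baomidou.mybatisplus.annotation.TableField;
import com.baomidou.mybatisplus.annotation.TableId;
import com.baomidou.mybatisplus.annotation.TableName;
import com.fasterxml.jackson.databind.annotation.JsonSerialize;
import com.fasterxml.jackson.databind.ser.std.ToStringSerializer;
import lombok.AllArgsConstructor;
import lombok.Builder;
import lombok.Data;
import lombok.NoArgsConstructor;
import lombok.experimental.Accessors;

import java.io.Serializable;
import java.math.BigDecimal;

@Data
@AllArgsConstructor
@NoArgsConstructor
@TableName("product")
@Builder
@Accessors(chain = true)
public class Product implements Serializable {

    private static final long serialVersionUID = 1L;

    // Long IDs are serialized as strings so that JavaScript clients do not lose precision
    @JsonSerialize(using = ToStringSerializer.class)
    @TableId("id")
    private Long id;

    @TableField("product_name")
    private String productName;

    @TableField("price")
    private BigDecimal price;

    /**
     * Remaining stock of this product
     */
    @TableField("number")
    private Integer number;
}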

OrderFeignService.class

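package com.cloud.alibaba.feign;

import com.cloud.alibaba.config.FeignConfig;
import com.cloud.alibaba.dto.Order;
import com.cloud.alibaba.utils.ResponseResult;
import org.springframework.cloud.openfeign.FeignClient;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;

/**
 * Feign client for OrderController in the seata-order service.
 * The Seata starter attaches the global transaction ID (XID) to Feign requests,
 * which is how the order insert joins the same global transaction.
 *
 * @author youzhengjie
 * @date 2023/03/25 11:51:30
 */
// value: spring.application.name of the service being called
// path: the @RequestMapping path on the called controller class; omit it if that class has no @RequestMapping
@FeignClient(value = "seata-order", path = "/order", configuration = FeignConfig.class)
public interface OrderFeignService {

    @PostMapping(path = "/generateOrder")
    ResponseResult<String> generateOrder(@RequestBody Order order);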

}
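Feign builds each request URL from the @FeignClient value and path plus the method mapping. Spring Cloud LoadBalancer then replaces the service name with a concrete instance registered in Nacos, using round-robin by default. The sketch below models only that resolution step. FeignUrlSketch and the instance addresses are invented for illustration; this is not the library's implementation.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Simplified model of turning @FeignClient(value = "seata-order", path = "/order")
// + @PostMapping("/generateOrder") into a concrete URL; hypothetical, not Spring Cloud code.
final class FeignUrlSketch {
    private final List<String> instances; // "host:port" entries, as a registry would return them
    private final AtomicInteger position = new AtomicInteger();

    FeignUrlSketch(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalStateException("No instances available for the service");
        }
        this.instances = instances;
    }

    /** Picks the next instance in round-robin order (thread-safe). */
    String nextInstance() {
        int index = Math.floorMod(position.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    /** Builds http://<instance><client path><method path>. */
    String resolve(String clientPath, String methodPath) {
        return "http://" + nextInstance() + clientPath + methodPath;
    }

    public static void main(String[] args) {
        // Made-up addresses of two seata-order instances
        FeignUrlSketch lb = new FeignUrlSketch(
                Arrays.asList("192.168.184.101:5288", "192.168.184.102:5288"));
        for (int i = 0; i < 3; i++) {
            System.out.println(lb.resolve("/order", "/generateOrder"));
        }
    }
}
```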

ProductMapper.class

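package com.cloud.alibaba.mapper;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import com.cloud.alibaba.entity.Product;
import org.apache.ibatis.annotations.Mapper;
import org.apache.ibatis.annotations.Param;
import org.springframework.stereotype.Repository;

@Mapper
@Repository
public interface ProductMapper extends BaseMapper<Product> {

    /**
     * Decreases the stock (number) of a product.
     *
     * @param productId product ID
     * @param number    quantity to deduct
     * @return number of rows updated
     */
    int descNumber(@Param("productId") Long productId, @Param("number") int number);
}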

ProductService.class

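package com.cloud.alibaba.service;

import com.baomidou.mybatisplus.extension.service.IService;
import com.cloud.alibaba.entity.Product;

public interface ProductService extends IService<Product> {

    boolean buyProduct(Long productId);
}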

ProductServiceImpl.class

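package com.cloud.alibaba.service.impl;

import com.baomidou.mybatisplus.extension.service.impl.ServiceImpl;
import com.cloud.alibaba.dto.Order;
import com.cloud.alibaba.entity.Product;
import com.cloud.alibaba.enums.ResponseType;
import com.cloud.alibaba.feign.OrderFeignService;
import com.cloud.alibaba.mapper.ProductMapper;
import com.cloud.alibaba.service.ProductService;
import com.cloud.alibaba.utils.ResponseResult;
import io.seata.spring.annotation.GlobalTransactional;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.util.Objects;

@Service
public class ProductServiceImpl extends ServiceImpl<ProductMapper, Product> implements ProductService {

    private final Logger logger = LoggerFactory.getLogger(ProductServiceImpl.class);

    @Autowired
    private ProductMapper productMapper;

    @Autowired
    private OrderFeignService orderFeignService;

    /**
     * Buys a product (--- distributed transaction ---).
     *
     * @param productId product ID
     * @return boolean
     */
    @Override
    @GlobalTransactional(rollbackFor = Exception.class) // start a Seata global transaction
    public boolean buyProduct(Long productId) {
        try {
            // Deduct one unit of stock (local branch in product_db)
            if (productMapper.descNumber(productId, 1) == 0) {
                throw new IllegalStateException("Product " + productId + " not found or out of stock");
            }
            // Remote call to seata-order through Feign to create the order <<<---- distributed transaction
            Order order = Order.builder()
                    .productId(productId)
                    .count(1)
                    .build();
            ResponseResult<String> result = orderFeignService.generateOrder(order);
            // seata-order reports some failures only in the response body (HTTP 200),
            // so the code is checked instead of relying on an exception alone
            if (result == null || !Objects.equals(result.getCode(), ResponseType.SUCCESS.getCode())) {
                throw new IllegalStateException("Order service failed to create the order");
            }
            logger.info("Purchase succeeded");
            return true;
        } catch (Exception e) {
            logger.error("Purchase failed, rolling back the distributed transaction", e);
            // Rethrowing lets @GlobalTransactional see the failure and roll back every branch
            throw new RuntimeException("Purchase failed, rolling back the distributed transaction", e);
        }
    }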

}
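The rethrow in the catch block matters because @GlobalTransactional only rolls back when an exception leaves the method. The toy model below reproduces that flow in memory. Each branch saves its before-image as an undo action, and any exception makes the "coordinator" restore those images in reverse order. GlobalTxSketch and its methods are hypothetical. Seata does this through the TC and the undo_log tables, not in-process.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Toy in-memory model of a global transaction; hypothetical, not Seata's API.
final class GlobalTxSketch {
    // Undo actions of branches that already ran, most recent on top
    private final Deque<Runnable> undoActions = new ArrayDeque<>();

    /** Called by a branch after its local change, like writing an undo_log row. */
    void registerUndo(Runnable undo) {
        undoActions.push(undo);
    }

    /** Runs the business logic; commits on success, undoes every branch on a RuntimeException. */
    <T> T execute(Supplier<T> business) {
        try {
            T result = business.get();
            undoActions.clear(); // commit: the undo records are no longer needed
            return result;
        } catch (RuntimeException e) {
            while (!undoActions.isEmpty()) {
                undoActions.pop().run(); // rollback: restore before-images, newest branch first
            }
            throw e; // the caller still sees the failure, as ProductController does
        }
    }

    public static void main(String[] args) {
        Map<Long, Integer> stock = new HashMap<>();
        stock.put(1001L, 100);
        GlobalTxSketch tx = new GlobalTxSketch();
        try {
            tx.execute(() -> {
                // Product branch: remember the before-image, then deduct one unit
                int before = stock.get(1001L);
                stock.put(1001L, before - 1);
                tx.registerUndo(() -> stock.put(1001L, before));
                // Order branch fails, like the 10 / 0 in OrderController
                throw new ArithmeticException("/ by zero");
            });
        } catch (ArithmeticException e) {
            System.out.println("rolled back, stock = " + stock.get(1001L)); // prints "rolled back, stock = 100"
        }
    }
}
```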

SeataProductApplication.class

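package com.cloud.alibaba;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.client.discovery.EnableDiscoveryClient;
import org.springframework.cloud.openfeign.EnableFeignClients;

@EnableFeignClients // enable OpenFeign
@SpringBootApplication
@EnableDiscoveryClient
public class SeataProductApplication {

    public static void main(String[] args) {
        SpringApplication.run(SeataProductApplication.class, args);
    }
}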

ProductMapper.xml

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >
<mapper namespace="com.cloud.alibaba.mapper.ProductMapper">

    <!-- Deduct stock; the extra condition keeps it from going negative (0 rows updated means out of stock) -->
    <update id="descNumber">
        UPDATE product SET number = number - #{number} WHERE id = #{productId} AND number >= #{number}
    </update>

</mapper>

application.yml

server:
  port: 5188

# Spring Cloud configuration
spring:
  application:
    name: seata-product
  # MySQL 8.0 settings
  datasource:
    type: com.alibaba.druid.pool.DruidDataSource
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://192.168.184.100:3308/product_db?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false
    username: root
    password: 123456
    # Druid connection pool settings
    druid:
      # Initial number of connections
      initial-size: 5
      # Minimum number of idle connections in the pool
      minIdle: 5
      # Maximum number of active connections
      max-active: 20
      # Maximum time to wait for a connection (ms)
      max-wait: 60000
      # Interval between checks for idle connections that should be closed (ms)
      time-between-eviction-runs-millis: 60000
      # Minimum idle time before a connection can be evicted (ms)
      min-evictable-idle-time-millis: 300000
      # Maximum idle time after which a connection is always evicted (ms)
      max-evictable-idle-time-millis: 900000
      # SQL used to validate connections; the default differs per database, this one is for MySQL
      validationQuery: SELECT 1
      # When testOnBorrow is false, a borrowed connection that has been idle is validated first
      testWhileIdle: true
      # If true (default false), every connection is validated when it is borrowed
      testOnBorrow: false
      # If true (default false), every connection is validated when it is returned to the pool
      testOnReturn: false
      # Cache PreparedStatements (PSCache); this helps most on databases with cursors, such as Oracle
      poolPreparedStatements: true
      # Must be > 0 to enable PSCache; any value > 0 automatically sets poolPreparedStatements to true.
      # Druid does not suffer from Oracle's excessive PSCache memory use,
      # so this can be set higher, e.g. 100
      maxOpenPreparedStatements: 20
      # Connections within minIdle that stay idle longer than minEvictableIdleTimeMillis get a keepAlive check
      keepAlive: true
      # Built-in filters (stat must be included, otherwise SQL is not monitored)
      filters: stat,wall
      # Custom settings for the monitoring filters
      filter:
        # Druid data source statistics
        stat:
          enabled: true
          db-type: mysql
          # Log slow SQL; anything over 1 s counts as slow
          log-slow-sql: true
          slow-sql-millis: 1000
        # Wall (SQL firewall) settings
        wall:
          config:
            multi-statement-allow: true
      # WebStatFilter collects web-request monitoring data
      web-stat-filter:
        enabled: true # enable WebStatFilter
      # StatViewServlet (monitoring page) displays Druid statistics
      stat-view-servlet:
        enabled: true # enable StatViewServlet
        url-pattern: /druid/* # path of the built-in monitoring pages; the home page is /druid/index.html
        reset-enable: false # do not allow resetting the statistics
        login-username: root # monitoring page account
        login-password: 123456 # monitoring page password
        allow: # IP allowlist; empty allows everyone
        deny: # IP denylist; takes precedence over the allowlist
  cloud:
    # Nacos configuration
    nacos:
      # Nacos ip:port
      server-addr: 192.168.184.100:7747
      discovery:
        # Nacos user name
        username: nacos
        # Nacos password
        password: nacos
        # Nacos namespace
        namespace:
        # Nacos group
        group: DEFAULT_GROUP
  main:
    # Avoids the startup error when several Feign interfaces target the same microservice
    allow-bean-definition-overriding: true

# Seata configuration
seata:
  # Enable Seata
  enabled: true
  application-id: ${spring.application.name}
  # Seata transaction group. It must match the key configured in the Nacos config center:
  # service.vgroupMapping.springcloud-alibaba-demo-group=default
  # (format: service.vgroupMapping.<transaction group, usually top-level project name + "-group">=<Seata cluster>)
  tx-service-group: springcloud-alibaba-demo-group
  service:
    vgroup-mapping:
      # Key: Seata transaction group (springcloud-alibaba-demo-group); value: Seata cluster (default).
      # Mirrors service.vgroupMapping.springcloud-alibaba-demo-group=default in the Nacos config center
      springcloud-alibaba-demo-group: default
    grouplist:
      # Server IP + service port of the Seata cluster named "default" (console port 7091, service port 8091).
      # Only used when registry.type is file; with Nacos the client discovers the TC through the registry
      default: 192.168.184.100:8091
  # Enable automatic data source proxying (required for AT mode)
  enable-auto-data-source-proxy: true
  # Connect Seata to the Nacos config center
  config:
    # Config center type (nacos)
    type: nacos
    nacos:
      server-addr: ${spring.cloud.nacos.server-addr}
      # Nacos group holding the Seata config file
      group: SEATA_GROUP
      # Nacos namespace holding the Seata config file (must stay empty; a non-empty value causes problems)
      namespace:
      # Nacos account
      username: nacos
      # Nacos password
      password: nacos
      # data-id of Seata's config file in the Nacos config center
      data-id: seataServer.properties
  # Connect Seata to the Nacos registry
  registry:
    # Registry type (nacos)
    type: nacos
    nacos:
      # Application name under which the Seata server is registered in Nacos
      application: seata-server
      server-addr: ${spring.cloud.nacos.server-addr}
      # Nacos group the Seata server is registered in
      group: SEATA_GROUP
      # Nacos namespace the Seata server is registered in (must stay empty; a non-empty value causes problems)
      namespace:
      # Seata cluster name ("default" by default)
      cluster: default
      # Nacos account
      username: nacos
      # Nacos password
      password: nacos

mybatis-plus:
  configuration:
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl
  mapper-locations: classpath*:/mapper/**/*.xml

# OpenFeign client timeouts. Spring Cloud 2021.x no longer ships Ribbon, so ribbon.* keys are ignored;
# the timeouts are set on the Feign client instead
feign:
  client:
    config:
      default:
        # Connection timeout (ms)
        connectTimeout: 3000
        # Read timeout (ms)
        readTimeout: 5000

# OpenFeign logging
logging:
  level:
    com.cloud.alibaba.feign: debug

# Expose actuator endpoints
management:
  endpoints:
    web:
      exposure:
        include: "*"

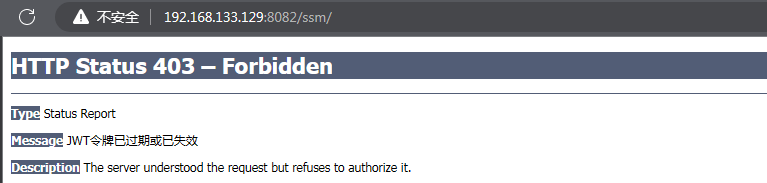

Calling the distributed transaction test endpoint ⭐

- http://localhost:5188/product/buyProduct/1001
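OrderController deliberately divides by zero, so this call is expected to return a body with code 500. After the global rollback, no row should appear in order_db's order table, and the number of product 1001 should still be 100. Below is a small JDK 8 compatible client for calling the endpoint from code. It assumes the product service runs locally on port 5188; BuyProductCall is an illustrative name.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Minimal JDK 8 compatible GET client for the buyProduct test endpoint.
final class BuyProductCall {

    /** Sends a GET request and returns the response body as a UTF-8 string. */
    static String get(String url) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(3000);
            // The call spans two services and a global transaction, so allow more time for reading
            conn.setReadTimeout(15000);
            int status = conn.getResponseCode();
            // 4xx/5xx bodies are only available through the error stream
            InputStream in = status < 400 ? conn.getInputStream() : conn.getErrorStream();
            return in == null ? "" : readAll(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }

    private static String readAll(InputStream in) throws IOException {
        try (InputStream input = in) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = input.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) {
        // Assumes seata-product is running locally on server.port 5188
        System.out.println(get("http://localhost:5188/product/buyProduct/1001"));
    }
}
```

To see the success path, remove the 10 / 0 line from OrderController and repeat the call. The response should carry code 200, one new order should be inserted, and the number should drop to 99.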