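❤️ About the author: Top 5 in the cloud-native and cloud computing track of the 2022 New Star Program (season 3) 🏅, Huawei Cloud Expert 🏅, rising star in the cloud-native field 🏅

💛 Blog home page: personal page on CSDN 🌞

💗 Author's goal: if you find a mistake, please point it out. I will keep improving these notes to help more Java enthusiasts get started, so that we can improve together!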

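Contents

- Database and Table Sharding: ShardingSphere 4.x (2)

- ShardingSphere overview

- Sharding-JDBC hands-on tutorial (part 2)

- Sharding-JDBC broadcast tables

- Config entity class

- ConfigMapper interface

- application.properties

- JUnit test class

- Test 1: insert a row into the broadcast table ds1.t_config

- Sharding-JDBC read/write splitting ⭐

- Setting up MySQL master-slave replication with Docker (1 master, 1 slave) ⭐

- Master node configuration

- Slave node configuration

- Replication failure (Slave_SQL_Running: No)

- Fixing Slave_SQL_Running: No

- Creating a table on the master and slave MySQL instances ⭐

- Database (orderdb1)

- Table (orderdb1.order)

- Configuring Sharding-JDBC for master-slave replication and read/write splitting ⭐

- application.properties

- JUnit test class

- Test 1: replication and read/write splitting (write) ⭐

- Test 2: read/write splitting (read) ⭐

- Notes (fixes for bugs encountered ⭐)

- Problem 1: Druid data source conflict

- Sharding-Proxy hands-on tutorial

- Downloading Sharding-Proxy 4.1.1 on Linux (the Windows installation is the same) ⭐

- Option 1: download from the official site (drawbacks: needs many manual changes and downloads slowly; not recommended)

- Option 2: a prepackaged Sharding-Proxy build (advantages: works out of the box with no changes and downloads quickly; recommended ⭐)

- Installing JDK 8 on Linux (Sharding-Proxy requires a JDK ⭐)

- Sharding-Proxy basic configuration ⭐

- Sharding-Proxy database and table sharding ⭐

- Databases (orderdb1 and orderdb2)

- Start a MySQL instance with Docker and run the statements above

- Configuring sharding

- Sharding-Proxy read/write splitting ⭐

- Setting up MySQL master-slave replication with Docker (1 master, 1 slave) ⭐

- Master node configuration

- Slave node configuration

- Replication failure (Slave_SQL_Running: No)

- Fixing Slave_SQL_Running: No

- Creating a table on the master and slave MySQL instances ⭐

- Database (orderdb1)

- Table (orderdb1.order)

- Configuring Sharding-Proxy read/write splitting ⭐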
分库分表-ShardingSphere 4.x(2)

项目所在仓库

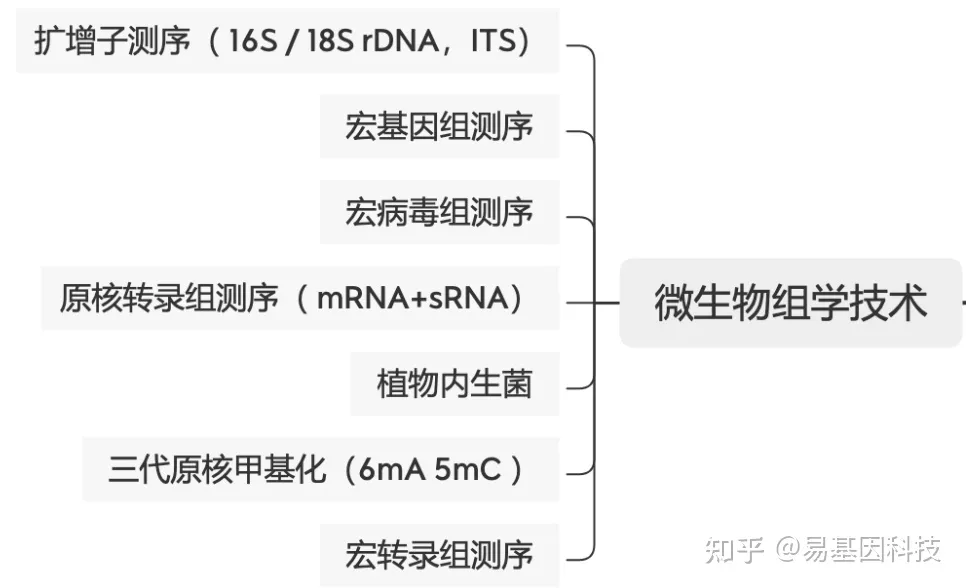

ShardingSphere概述

Apache ShardingSphere 是一款开源的分布式数据库生态项目,由 JDBC 和 Proxy 两款产品组成。 其核心采用可插拔架构,通过组件扩展功能。 对上以数据库协议及 SQL 方式提供诸多增强功能,包括数据分片、访问路由、数据安全等;对下原生支持 MySQL、PostgreSQL、SQL Server、Oracle 等多种数据存储引擎。 Apache ShardingSphere 项目理念,是提供数据库增强计算服务平台,进而围绕其上构建生态。 充分利用现有数据库的计算与存储能力,通过插件化方式增强其核心能力,为企业解决在数字化转型中面临的诸多使用难点,为加速数字化应用赋能。

Sharding-JDBC实战教程(下)

Sharding-JDBC广播表

Config实体类

package com.boot.entity;

import com.baomidou.mybatisplus.annotation.TableField;

import com.baomidou.mybatisplus.annotation.TableName;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import lombok.experimental.Accessors;

import java.io.Serializable;

/**

* TODO: 2022/8/8

* @author youzhengjie

*/

// Lombok annotations to reduce boilerplate

@Data

@AllArgsConstructor

@NoArgsConstructor

@Accessors(chain = true) // enable chained setters

@TableName("t_config")

public class Config implements Serializable {

@TableField("config_id")

private Long configId;

@TableField("config_info")

private String configInfo;

}

ConfigMapper interface

package com.boot.dao;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;

import com.boot.entity.Config;

import org.apache.ibatis.annotations.Mapper;

import org.springframework.stereotype.Repository;

@Mapper

@Repository

public interface ConfigMapper extends BaseMapper<Config> {

}

application.properties

# ShardingSphere data sources: define one or more data source names

spring.shardingsphere.datasource.names=ds1,ds2

# Data source ds1 (the orderdb1 database)

spring.shardingsphere.datasource.ds1.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.ds1.driver-class-name=com.mysql.jdbc.Driver

spring.shardingsphere.datasource.ds1.url=jdbc:mysql://localhost:3306/orderdb1?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC

spring.shardingsphere.datasource.ds1.username=root

spring.shardingsphere.datasource.ds1.password=18420163207

# Data source ds2 (the orderdb2 database)

spring.shardingsphere.datasource.ds2.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.ds2.driver-class-name=com.mysql.jdbc.Driver

spring.shardingsphere.datasource.ds2.url=jdbc:mysql://localhost:3306/orderdb2?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC

spring.shardingsphere.datasource.ds2.username=root

spring.shardingsphere.datasource.ds2.password=18420163207

# Key generation strategy for the broadcast table

spring.shardingsphere.sharding.tables.t_config.key-generator.column=config_id

spring.shardingsphere.sharding.tables.t_config.key-generator.type=SNOWFLAKE

# Broadcast table names (the core setting for broadcast tables); two data sources (ds1 and ds2) are configured above

# With a broadcast table, a row written to t_config through either data source is also written to t_config in the other one, so ds1.t_config and ds2.t_config stay identical (updates and deletes are synchronized the same way)

# ShardingSphere applies the statement to t_config in every data source listed in spring.shardingsphere.datasource.names

spring.shardingsphere.sharding.broadcast-tables=t_config

# Print the SQL that ShardingSphere executes

spring.shardingsphere.props.sql.show=true

# Map underscore-separated column names to camelCase properties, e.g. order_id ---> orderId

mybatis-plus.configuration.map-underscore-to-camel-case=true

# This setting is required: it allows bean definition overriding, which Spring Boot 2.1+ disables by default

spring.main.allow-bean-definition-overriding=true
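The SNOWFLAKE key generator configured above produces 64-bit IDs that grow over time, so no database auto-increment column is needed. The following is only a sketch of the general snowflake idea, not ShardingSphere's implementation: the class name SnowflakeSketch, the epoch constant, and the bit split (timestamp, 10-bit worker ID, 12-bit sequence) are assumptions made for this example.

```java
public class SnowflakeSketch {
    // Hypothetical custom epoch (2020-01-01T00:00:00Z); not ShardingSphere's value.
    private static final long EPOCH = 1577836800000L;
    private static final int WORKER_BITS = 10;
    private static final int SEQUENCE_BITS = 12;
    private static final long SEQUENCE_MASK = (1L << SEQUENCE_BITS) - 1;

    private final long workerId;
    private long lastMillis = -1L;
    private long sequence = 0L;

    public SnowflakeSketch(long workerId) {
        if (workerId < 0 || workerId >= (1L << WORKER_BITS)) {
            throw new IllegalArgumentException("workerId out of range");
        }
        this.workerId = workerId;
    }

    public synchronized long nextId() {
        long now = System.currentTimeMillis();
        if (now < lastMillis) {
            // Clock moved backwards: keep using the last timestamp.
            now = lastMillis;
        }
        if (now == lastMillis) {
            sequence = (sequence + 1) & SEQUENCE_MASK;
            if (sequence == 0) {
                // Sequence exhausted for this millisecond: wait for the next one.
                while (now <= lastMillis) {
                    now = System.currentTimeMillis();
                }
            }
        } else {
            sequence = 0;
        }
        lastMillis = now;
        // Layout: [timestamp offset][worker id][sequence]
        return ((now - EPOCH) << (WORKER_BITS + SEQUENCE_BITS))
                | (workerId << SEQUENCE_BITS)
                | sequence;
    }
}
```

Because the timestamp occupies the high bits, IDs from one generator are strictly increasing, which keeps primary-key inserts roughly sequential.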

JUnit test class

Test 1: insert a row into the broadcast table ds1.t_config

@Autowired

private ConfigMapper configMapper;

@Test

void addConfigToBroadcastTable(){

Config config = new Config();

config.setConfigInfo("配置信息123");

configMapper.insert(config);

}

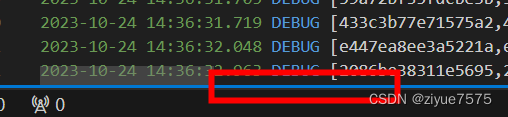

- Log output:

- Check the database:
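The broadcast behaviour tested above can be pictured with a small in-memory model. This is a conceptual sketch only: BroadcastTableSketch and its methods are hypothetical names, and the lists stand in for the t_config table of each data source.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class BroadcastTableSketch {
    // Data source name -> rows of t_config stored in that data source.
    private final Map<String, List<String>> tConfigByDataSource = new LinkedHashMap<>();

    public BroadcastTableSketch(List<String> dataSourceNames) {
        for (String name : dataSourceNames) {
            tConfigByDataSource.put(name, new ArrayList<>());
        }
    }

    // A write to a broadcast table is applied to every configured data source.
    public void insert(String configInfo) {
        for (List<String> rows : tConfigByDataSource.values()) {
            rows.add(configInfo);
        }
    }

    public List<String> rowsOf(String dataSourceName) {
        return tConfigByDataSource.get(dataSourceName);
    }
}
```

After one insert, rowsOf("ds1") and rowsOf("ds2") hold the same row, which is what the database check above should show for ds1.t_config and ds2.t_config.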

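Sharding-JDBC read/write splitting ⭐

Setting up MySQL master-slave replication with Docker (1 master, 1 slave) ⭐

Master node configuration

- 1: Run a MySQL container to act as the master node (the image is pulled automatically if it is not present)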

docker run -p 3307:3306 \

-v /my-sql/mysql-master/log:/var/log/mysql \

-v /my-sql/mysql-master/data:/var/lib/mysql \

-v /my-sql/mysql-master/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql-master \

-d mysql:5.7

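- 2: Create the my.cnf file (the MySQL configuration file)

vim /my-sql/mysql-master/conf/my.cnf

Paste the following into my.cnf:

[client]

# Use utf8; with MySQL's default character set, Chinese text may be garbled

default_character_set=utf8

[mysqld]

collation_server=utf8_general_ci

character_set_server=utf8

# Server ID; must be unique across the replication topology

server_id=200

# Exclude the mysql system database from binary logging

binlog-ignore-db=mysql

# Base name of the binary log files (can be changed)

log-bin=order-mysql-bin

# Size of the per-session cache that buffers binary log changes during a transaction

binlog_cache_size=1M

# Binary log format (mixed statement-based and row-based logging)

binlog_format=mixed

# Number of days to keep binary log files

expire_logs_days=7

# Skip error 1062 (duplicate key) during replication instead of stopping

slave_skip_errors=1062

- 3: Restart the MySQL container

docker restart mysql-master

- 4: Enter the container and log in to MySQL (the password is 123456, as set by MYSQL_ROOT_PASSWORD)

$ docker exec -it mysql-master /bin/bash

$ mysql -uroot -p

- 5: On the master, create the replication user and grant it privileges

This account exists on the master only for replicating data.

create user 'slave'@'%' identified by '123456';

grant replication slave, replication client on *.* to 'slave'@'%';

Slave node configuration

- 1: Run a MySQL container to act as the slave node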

docker run -p 3308:3306 \

-v /my-sql/mysql-slave/log:/var/log/mysql \

-v /my-sql/mysql-slave/data:/var/lib/mysql \

-v /my-sql/mysql-slave/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql-slave \

-d mysql:5.7

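- 2: Create the my.cnf file (the MySQL configuration file)

vim /my-sql/mysql-slave/conf/my.cnf

Paste the following into my.cnf:

[client]

# Use utf8; with MySQL's default character set, Chinese text may be garbled

default_character_set=utf8

[mysqld]

collation_server=utf8_general_ci

character_set_server=utf8

# Server ID; must be unique across the replication topology

server_id=201

# Exclude the mysql system database from binary logging

binlog-ignore-db=mysql

# Base name of the binary log files (can be changed)

log-bin=order-mysql-bin

# Size of the per-session cache that buffers binary log changes during a transaction

binlog_cache_size=1M

# Binary log format (mixed statement-based and row-based logging)

binlog_format=mixed

# Number of days to keep binary log files

expire_logs_days=7

# Skip error 1062 (duplicate key) during replication instead of stopping

slave_skip_errors=1062

# The slave writes replicated events to its own binary log

log_slave_updates=1

# Make the slave read-only (accounts with the SUPER privilege, such as root, can still write)

read_only=1

- 3: Restart the MySQL container

docker restart mysql-slave

- 4: Check that both containers are up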

[root@k8s-master ~]# docker ps | grep mysql

3be6e547ab4e mysql:5.7 "docker-entrypoint.s…" About a minute ago Up 24 seconds 33060/tcp, 0.0.0.0:3308->3306/tcp, :::3308->3306/tcp mysql-slave

214fed096342 mysql:5.7 "docker-entrypoint.s…" 7 minutes ago Up 4 minutes 33060/tcp, 0.0.0.0:3307->3306/tcp, :::3307->3306/tcp mysql-master

- 5: Log in to MySQL on the slave

$ docker exec -it mysql-slave /bin/bash

$ mysql -uroot -p

- 6: Look up the IP address of the server that hosts the master database (copy it):

[master-db-server ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.184.100 netmask 255.255.255.0 broadcast 192.168.184.255

inet6 fe80::224c:e6cc:c9ab:f4e0 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:af:f8:9f txqueuelen 1000 (Ethernet)

RX packets 113915 bytes 164657385 (157.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 12073 bytes 970949 (948.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

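- 7: On the slave, configure replication (this command must be run on the slave)

Take the ens33 IP address found on the master's server and use it as master_host below (this is the IP of the server that hosts the master database, not the slave's IP). master_log_file and master_log_pos must match the File and Position values that show master status; reports on the master; the values below come from the author's environment.

change master to master_host='192.168.184.100',master_user='slave',master_password='123456',master_port=3307,master_log_file='order-mysql-bin.000001',master_log_pos=617,master_connect_retry=30;

Parameters:

master_host: IP address of the master database

master_user: the replication account created on the master

master_password: password of that replication account

master_port: MySQL port of the master, 3307 here

master_log_file: name of the master's binlog file to start reading from

master_log_pos: position in that binlog file to start reading from

- 8: On the slave, check the replication status: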

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State:

Master_Host: 192.168.184.100

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 617

Relay_Log_File: 3be6e547ab4e-relay-bin.000001

Relay_Log_Pos: 4

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: No

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 617

Relay_Log_Space: 154

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: NULL

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 0

Master_UUID:

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State:

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.15 sec)

ERROR:

No query specified

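Both Slave_IO_Running and Slave_SQL_Running are No, which shows that replication has not started yet. (The trailing "ERROR: No query specified" is harmless: \G already terminates the statement, so the extra ; is read as an empty query.)

- 9: On the slave, start replication

start slave;

- 10: On the slave, check the replication status again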

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.184.100

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 617

Relay_Log_File: 3be6e547ab4e-relay-bin.000002

Relay_Log_Pos: 326

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 617

Relay_Log_Space: 540

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 200

Master_UUID: a606bab7-1736-11ed-9207-0242ac110002

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.09 sec)

ERROR:

No query specified

Both values are now Yes, which means replication is running.
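Reading the two flags by eye works, but the same check can be scripted. The sketch below parses text in the `show slave status \G` layout shown above and reports whether both threads are running; SlaveStatusCheck and its method names are hypothetical, and how the output text is obtained is left out.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class SlaveStatusCheck {
    // Split "Key: value" lines of the \G output into a map; other lines are ignored.
    static Map<String, String> parse(String output) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String line : output.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon <= 0) {
                continue;
            }
            fields.put(line.substring(0, colon).trim(), line.substring(colon + 1).trim());
        }
        return fields;
    }

    // Replication is healthy only when both the IO thread and the SQL thread run.
    static boolean isReplicating(String output) {
        Map<String, String> fields = parse(output);
        return "Yes".equals(fields.get("Slave_IO_Running"))
                && "Yes".equals(fields.get("Slave_SQL_Running"));
    }
}
```

When the result is false, the Last_Error field in the same map is the first place to look.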

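You can also connect to both databases with Navicat. If Navicat cannot connect to MySQL running in Docker, either:

Option 1: stop the firewall (for test environments only, never in production)

sudo systemctl stop firewalld

Option 2: open the relevant ports in the firewall, such as 3307 for the master and 3308 for the slave (use this in production)

Replication failure (Slave_SQL_Running: No)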

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.184.132

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 3752

Relay_Log_File: 2c4136668536-relay-bin.000002

Relay_Log_Pos: 326

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 1007

Last_Error: Error 'Can't create database 'mall'; database exists' on query. Default database: 'mall'. Query: 'create database mall'

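- Slave_SQL_Running is No. Why?

- Last_Error reports: Error 'Can't create database 'mall'; database exists' on query. Default database: 'mall'. Query: 'create database mall'

- The cause is that the master and the slave had diverged: I had run statements directly on the slave, so the mall database already existed there, the replicated create database mall failed, and replication stopped.

Fixing Slave_SQL_Running: No

1: Make the master and the slave identical again by dropping the extra databases and tables, so that both are back to a default MySQL state. (In production, back up your data first!)

2: Stop replication:

stop slave;

3: Start replication again:

start slave;

4: Done!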

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.184.132

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 3752

Relay_Log_File: 2c4136668536-relay-bin.000003

Relay_Log_Pos: 326

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Creating a table on the master and slave MySQL instances ⭐

Database (orderdb1)

CREATE DATABASE orderdb1;

Table (orderdb1.order)

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

DROP TABLE IF EXISTS `order`;

CREATE TABLE `order` (

`order_id` bigint(20) NOT NULL,

`order_info` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`user_id` bigint(20) NOT NULL,

PRIMARY KEY (`order_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

SET FOREIGN_KEY_CHECKS = 1;

- Check the database:

Configuring Sharding-JDBC for master-slave replication and read/write splitting ⭐

application.properties

# ShardingSphere data sources: define one or more data source names

spring.shardingsphere.datasource.names=master01,slave01

# Data source master01 (the master database)

spring.shardingsphere.datasource.master01.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.master01.driver-class-name=com.mysql.jdbc.Driver

spring.shardingsphere.datasource.master01.url=jdbc:mysql://192.168.184.100:3307/orderdb1?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC

spring.shardingsphere.datasource.master01.username=root

spring.shardingsphere.datasource.master01.password=123456

# Data source slave01 (the slave database)

spring.shardingsphere.datasource.slave01.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.slave01.driver-class-name=com.mysql.jdbc.Driver

spring.shardingsphere.datasource.slave01.url=jdbc:mysql://192.168.184.100:3308/orderdb1?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC

spring.shardingsphere.datasource.slave01.username=root

spring.shardingsphere.datasource.slave01.password=123456

# Key generation strategy for the order table

spring.shardingsphere.sharding.tables.order.key-generator.column=order_id

spring.shardingsphere.sharding.tables.order.key-generator.type=SNOWFLAKE

# The three core settings for master-slave replication plus read/write splitting

# The master-slave data source is named master-slave-datasource01 here (any name works)

# master-data-source-name: name of the master data source defined above (only one master is allowed); all writes go to master01

spring.shardingsphere.sharding.master-slave-rules.master-slave-datasource01.master-data-source-name=master01

# slave-data-source-names: names of the slave data sources defined above (several may be listed, separated by commas); all reads go to slave01

spring.shardingsphere.sharding.master-slave-rules.master-slave-datasource01.slave-data-source-names=slave01

# Data nodes of the order table: always the physical table order in master-slave-datasource01

spring.shardingsphere.sharding.tables.order.actual-data-nodes=master-slave-datasource01.order

# Print the SQL that ShardingSphere executes

spring.shardingsphere.props.sql.show=true

# Map underscore-separated column names to camelCase properties, e.g. order_id ---> orderId

mybatis-plus.configuration.map-underscore-to-camel-case=true

# This setting is required: it allows bean definition overriding, which Spring Boot 2.1+ disables by default

spring.main.allow-bean-definition-overriding=true
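The effect of the master-slave rule above is that writes go to master01 and reads go to one of the configured slaves. A rough sketch of that routing decision follows. It is a simplification under stated assumptions: ReadWriteRouterSketch is a hypothetical class, statements are classified only by a leading SELECT, and slaves are picked round-robin; ShardingSphere's real routing has more rules than this.

```java
import java.util.List;
import java.util.Locale;
import java.util.concurrent.atomic.AtomicInteger;

public class ReadWriteRouterSketch {
    private final String master;
    private final List<String> slaves;
    private final AtomicInteger counter = new AtomicInteger();

    public ReadWriteRouterSketch(String master, List<String> slaves) {
        this.master = master;
        this.slaves = slaves;
    }

    // Returns the name of the data source that should execute the statement.
    public String route(String sql) {
        boolean isRead = sql.trim().toLowerCase(Locale.ROOT).startsWith("select");
        if (!isRead || slaves.isEmpty()) {
            return master; // writes (and reads without any slave) go to the master
        }
        int index = Math.floorMod(counter.getAndIncrement(), slaves.size());
        return slaves.get(index); // reads rotate across the slaves
    }
}
```

With the configuration above there is a single slave, so every SELECT maps to slave01 and everything else to master01, which is what the SQL log in the two tests below should confirm.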

junit测试类

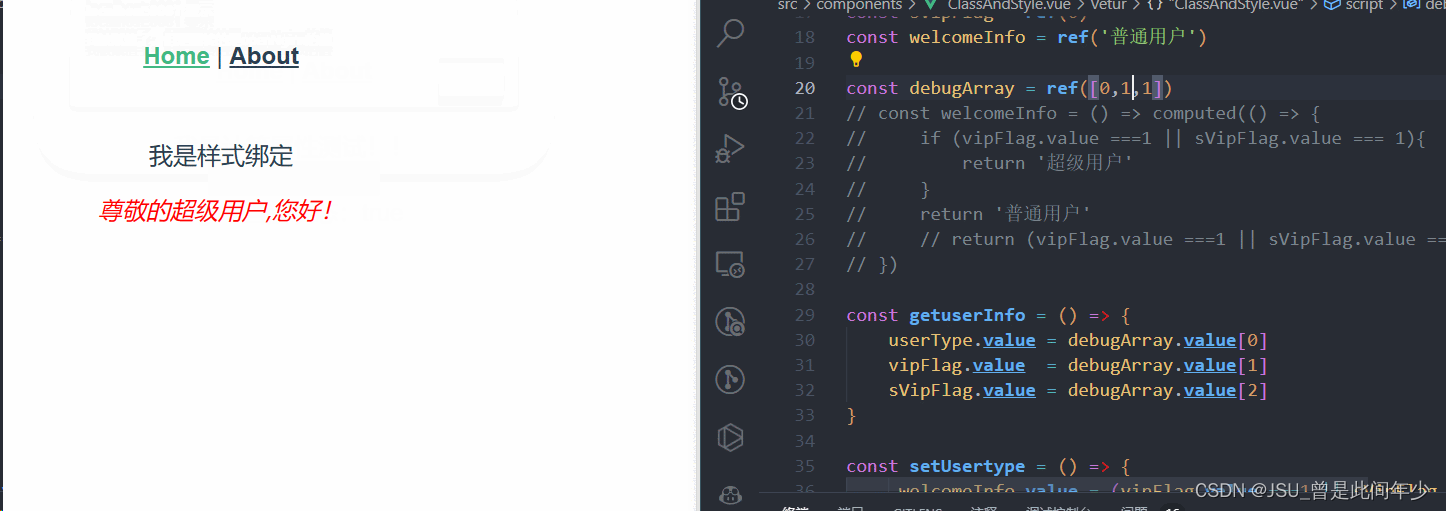

测试1:测试主从同步,读写分离(写操作)⭐

@Autowired

private OrderMapper orderMapper;

// Test replication and read/write splitting (write)

@Test

void addOrder(){

Order order = new Order();

order.setOrderInfo("测试主从复制")

.setUserId(1001L);

orderMapper.insert(order);

}

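- Log output: (check which data source the write goes to)

- Check the database: (check whether the row was replicated)

Test 2: read/write splitting (read) ⭐

// Test read/write splitting (read)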

@Test

void selectOrder(){

List<Order> orders = orderMapper.selectList(null);

orders.forEach(System.out::println);

}

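- Log output: (check which data source the read goes to)

Notes (fixes for bugs encountered ⭐)

Problem 1: Druid data source conflict

Do not use the druid-spring-boot-starter dependency; it conflicts with this setup and the application fails to start.

Fix: use the plain Druid dependency below (adjust the version if you like and leave everything else unchanged).

<!-- Use this druid dependency, not druid-spring-boot-starter -->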

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.2.6</version>

</dependency>

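Sharding-Proxy hands-on tutorial

ShardingSphere-Proxy is the second product of Apache ShardingSphere. It is positioned as a transparent database proxy that implements the database binary protocol, so clients written in any language can use it. It currently speaks the MySQL and PostgreSQL protocols, which makes database operations transparent and is friendlier to DBAs.

The strengths of ShardingSphere-Proxy are its support for heterogeneous languages and the operational entry point it gives DBAs.

Downloading Sharding-Proxy 4.1.1 on Linux (the Windows installation is the same) ⭐

Option 1: download from the official site (drawbacks: needs many manual changes and downloads slowly; not recommended)

- 1: Download it from the official site:

All ShardingSphere releases

- 2: Extract the Sharding-Proxy package, then make sure every file in the lib directory ends in .jar (a pitfall: some files come out with a different extension and must be renamed)

- 3: Download the MySQL connector jar, put it into Sharding-Proxy's lib directory, then zip the directory and upload it to the server:

mysql-connector-java 8 quick download link

- 4: Check that the Sharding-Proxy zip was uploaded: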

[root@k8s-master sharding-proxy]# pwd

/root/sharding-proxy

[root@k8s-master sharding-proxy]# ls

apache-shardingsphere-4.1.1-sharding-proxy-bin.zip

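- 5: The package is a zip file, so install unzip to extract it.

yum -y install unzip

- 6: Extract Sharding-Proxy

unzip apache-shardingsphere-4.1.1-sharding-proxy-bin.zip

- 7: Rename the directory:

mv apache-shardingsphere-4.1.1-sharding-proxy-bin sharding-proxy-4.1.1

Option 2: a prepackaged Sharding-Proxy build (advantages: works out of the box with no changes and downloads quickly; recommended ⭐)

Download link

- 1: Upload the zip file to the server:

- 2: Check that the Sharding-Proxy zip was uploaded: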

[root@k8s-master sharding-proxy]# pwd

/root/sharding-proxy

[root@k8s-master sharding-proxy]# ls

apache-shardingsphere-4.1.1-sharding-proxy-bin.zip

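- 3: The package is a zip file, so install unzip to extract it.

yum -y install unzip

- 4: Extract Sharding-Proxy

unzip apache-shardingsphere-4.1.1-sharding-proxy-bin.zip

- 5: Rename the directory:

mv apache-shardingsphere-4.1.1-sharding-proxy-bin sharding-proxy-4.1.1

Installing JDK 8 on Linux (Sharding-Proxy requires a JDK ⭐)

- 1: Download the JDK 8 tar.gz from the official site, or download the zip from the link below, then upload it to the Linux server (the zip is used in this example)

Quick download of the JDK 8 Linux package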

[root@k8s-master jdk]# pwd

/root/jdk

[root@k8s-master jdk]# ls

jdk1.8-linux.zip

- 2: Extract the zip file:

unzip jdk1.8-linux.zip

- 3: Create a directory under /usr/local:

mkdir -p /usr/local/java

- 4: Copy the extracted JDK there:

cp -rf jdk1.8-linux /usr/local/java

- 5: The JDK directory now looks like this:

[root@k8s-master java]# pwd

/usr/local/java

[root@k8s-master java]# ls

jdk1.8-linux

- 6: Configure the environment variables:

Open /etc/profile and paste the following at the end (note: JAVA_HOME=/usr/local/java/jdk1.8-linux is the JDK directory you just copied into /usr/local/java)

export JAVA_HOME=/usr/local/java/jdk1.8-linux

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

- 7: Apply the new environment variables

source /etc/profile

- 8: Make /usr/local/java/jdk1.8-linux/bin/java executable:

chmod +x /usr/local/java/jdk1.8-linux/bin/java

- 9: Verify the installation:

[root@k8s-master java]# java -version

java version "1.8.0_333"

Java(TM) SE Runtime Environment (build 1.8.0_333-b02)

Java HotSpot(TM) 64-Bit Server VM (build 25.333-b02, mixed mode)

Sharding-Proxy basic configuration ⭐

- 1: Go to the Sharding-Proxy configuration directory:

cd /root/sharding-proxy/sharding-proxy-4.1.1/conf

- 2: Delete server.yaml

rm -f /root/sharding-proxy/sharding-proxy-4.1.1/conf/server.yaml

- 3: Create server.yaml again

vi /root/sharding-proxy/sharding-proxy-4.1.1/conf/server.yaml

with the following content:

# orchestration configures governance (for example, nacos or zookeeper can be plugged in)

#orchestration:

#  orchestration_ds:

#    orchestrationType: registry_center,config_center # governance roles (usually registry center and config center)

#    instanceType: zookeeper # governance implementation (for example nacos or zookeeper)

#    serverLists: localhost:2181 # address of the governance server (the nacos or zookeeper address)

#    namespace: orchestration

#    props:

#      overwrite: false

#      retryIntervalMilliseconds: 500

#      timeToLiveSeconds: 60

#      maxRetries: 3

#      operationTimeoutMilliseconds: 500

# Users, passwords, and permissions:

authentication:

  users:

    root: # user name: root

      password: root # password of user root (this user has the highest privileges)

    sharding: # user name: sharding

      password: sharding # password of user sharding

      authorizedSchemas: sharding_db # user sharding may only access the sharding_db schema

# The settings below can be left as they are; just uncomment them.

props:

  max.connections.size.per.query: 1

  acceptor.size: 16 # The default value is available processors count * 2.

  executor.size: 16 # Infinite by default.

  proxy.frontend.flush.threshold: 128 # The default value is 128.

  # LOCAL: Proxy will run with LOCAL transaction.

  # XA: Proxy will run with XA transaction.

  # BASE: Proxy will run with B.A.S.E transaction.

  proxy.transaction.type: LOCAL

  proxy.opentracing.enabled: false

  proxy.hint.enabled: false

  query.with.cipher.column: true

  sql.show: false

  allow.range.query.with.inline.sharding: false

Sharding-Proxy database and table sharding ⭐

Databases (orderdb1 and orderdb2)

CREATE DATABASE orderdb1;

CREATE DATABASE orderdb2;

Tables: (run the statements below in both orderdb1 and orderdb2)

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

DROP TABLE IF EXISTS `order`;

CREATE TABLE `order` (

`order_id` bigint(20) NOT NULL,

`order_info` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`user_id` bigint(20) NOT NULL,

PRIMARY KEY (`order_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

DROP TABLE IF EXISTS `order_1`;

CREATE TABLE `order_1` (

`order_id` bigint(20) NOT NULL,

`order_info` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`user_id` bigint(20) NOT NULL,

PRIMARY KEY (`order_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

DROP TABLE IF EXISTS `order_2`;

CREATE TABLE `order_2` (

`order_id` bigint(20) NOT NULL,

`order_info` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`user_id` bigint(20) NOT NULL,

PRIMARY KEY (`order_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

DROP TABLE IF EXISTS `t_config`;

CREATE TABLE `t_config` (

`config_id` bigint(20) NOT NULL,

`config_info` text CHARACTER SET utf8 COLLATE utf8_general_ci NULL,

PRIMARY KEY (`config_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

SET FOREIGN_KEY_CHECKS = 1;

Start a MySQL instance with Docker and run the statements above

docker run -p 3311:3306 \

-v /my-sql/mysql01/log:/var/log/mysql \

-v /my-sql/mysql01/data:/var/lib/mysql \

-v /my-sql/mysql01/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql01 \

-d mysql:5.7

- Result:

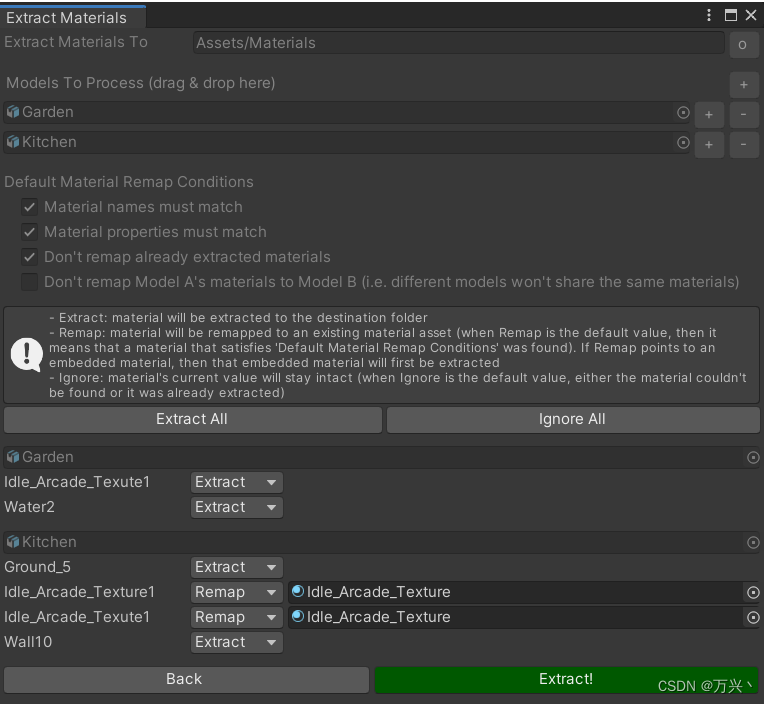

Configuring sharding

- 1: Delete config-sharding.yaml (or simply edit it in place)

rm -f /root/sharding-proxy/sharding-proxy-4.1.1/conf/config-sharding.yaml

- 2: Create config-sharding.yaml again:

vi /root/sharding-proxy/sharding-proxy-4.1.1/conf/config-sharding.yaml

with the following content:

# Logical database exposed by Sharding-Proxy; clients reach the real databases through it (no need to change it)

schemaName: sharding_db

# Data sources

dataSources:

  ds1: # data source ds1

    url: jdbc:mysql://192.168.184.100:3311/orderdb1?serverTimezone=UTC&useSSL=false # MySQL URL

    username: root # MySQL user

    password: 123456 # MySQL password

    connectionTimeoutMilliseconds: 30000

    idleTimeoutMilliseconds: 60000

    maxLifetimeMilliseconds: 1800000

    maxPoolSize: 50

  ds2: # data source ds2

    url: jdbc:mysql://192.168.184.100:3311/orderdb2?serverTimezone=UTC&useSSL=false # MySQL URL

    username: root # MySQL user

    password: 123456 # MySQL password

    connectionTimeoutMilliseconds: 30000

    idleTimeoutMilliseconds: 60000

    maxLifetimeMilliseconds: 1800000

    maxPoolSize: 50

# Sharding rules

shardingRule:

  tables:

    order: # logical table name (our logical table is order)

      actualDataNodes: ds${1..2}.order_${1..2} # data node layout; the author notes that Sharding-Proxy generates missing physical tables, so defining the logical table is enough

      databaseStrategy: # database sharding strategy

        inline: # inline strategy

          shardingColumn: order_id # sharding key

          algorithmExpression: ds${order_id % 2 + 1} # sharding algorithm

      tableStrategy: # table sharding strategy

        inline: # inline strategy

          shardingColumn: order_id # sharding key

          algorithmExpression: order_${order_id % 2 + 1} # sharding algorithm

      keyGenerator: # key generation strategy

        type: SNOWFLAKE # snowflake algorithm

        column: order_id # primary key column
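To see where a given row ends up, the two inline expressions above can be evaluated by hand. The sketch below mirrors them in plain Java; InlineShardingSketch and its method names are hypothetical, and it assumes non-negative order_id values.

```java
public class InlineShardingSketch {
    // Mirrors databaseStrategy: ds${order_id % 2 + 1}
    static String database(long orderId) {
        return "ds" + (orderId % 2 + 1);
    }

    // Mirrors tableStrategy: order_${order_id % 2 + 1}
    static String table(long orderId) {
        return "order_" + (orderId % 2 + 1);
    }

    static String dataNode(long orderId) {
        return database(orderId) + "." + table(orderId);
    }

    public static void main(String[] args) {
        // The same IDs that the test method in this section inserts: 10000 to 10008.
        for (long id = 10000; id <= 10008; id++) {
            System.out.println(id + " -> " + dataNode(id));
        }
    }
}
```

Even IDs map to ds1.order_1 and odd IDs map to ds2.order_2. Because both strategies use the same expression on the same column, ds1.order_2 and ds2.order_1 never receive rows with this configuration; sharding the database and the table on different expressions or columns would spread rows over all four nodes.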

- 3: Make the Sharding-Proxy startup scripts executable:

[root@k8s-master bin]# cd /root/sharding-proxy/sharding-proxy-4.1.1/bin

[root@k8s-master bin]# chmod u+x *.sh

- 4: Start the Sharding-Proxy service (on port 3366 rather than the default port)

[root@k8s-master bin]# pwd

/root/sharding-proxy/sharding-proxy-4.1.1/bin

[root@k8s-master bin]# ./start.sh 3366

Starting the Sharding-Proxy ...

The port is 3366

The classpath is /root/sharding-proxy/sharding-proxy-4.1.1/conf:.:..:/root/sharding-proxy/sharding-proxy-4.1.1/lib/*:/root/sharding-proxy/sharding-proxy-4.1.1/lib/*:/root/sharding-proxy/sharding-proxy-4.1.1/ext-lib/*

Please check the STDOUT file: /root/sharding-proxy/sharding-proxy-4.1.1/logs/stdout.log

- 5: Check that it started successfully:

cat /root/sharding-proxy/sharding-proxy-4.1.1/logs/stdout.log

- 6: application.properties: (note: the user, password, IP, port, and database below must all be those of Sharding-Proxy)

# Disable ShardingSphere-JDBC (it is not used here, so turn it off first)

spring.shardingsphere.enabled=false

# A plain JDBC data source that connects to the logical database exposed by Sharding-Proxy

spring.datasource.type=com.alibaba.druid.pool.DruidDataSource

spring.datasource.driver-class-name=com.mysql.jdbc.Driver

# Important: point the URL at the Sharding-Proxy IP and port (3366) and use sharding_db, the proxy's logical database, as the database name

spring.datasource.url=jdbc:mysql://192.168.184.100:3366/sharding_db?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC

# Sharding-Proxy user

spring.datasource.username=root

# Sharding-Proxy password

spring.datasource.password=root

# Map underscore-separated column names to camelCase properties, e.g. order_id ---> orderId

mybatis-plus.configuration.map-underscore-to-camel-case=true

# This setting is required: it allows bean definition overriding, which Spring Boot 2.1+ disables by default

spring.main.allow-bean-definition-overriding=true

- 7: Test method:

@Test

void addOrder() {

    for (int i = 0; i < 9; i++) {

        Order order = new Order();

        // order IDs 10000 through 10008

        order.setOrderId(Long.valueOf("1000" + i))

                .setOrderInfo("Sharding-Proxy success")

                .setUserId(1001L);

        orderMapper.insert(order);

    }

}

- 8: Check the databases:

Sharding-Proxy读写分离⭐

Docker搭建MySQL主从复制(1主1从)⭐

Master主节点配置

- 1:运行一个mysql容器实例。作为Master节点(如果没有mysql镜像则会自动拉取)

docker run -p 3307:3306 \

-v /my-sql/mysql-master/log:/var/log/mysql \

-v /my-sql/mysql-master/data:/var/lib/mysql \

-v /my-sql/mysql-master/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql-master \

-d mysql:5.7

- 2:创建my.cnf文件(也就是mysql的配置文件)

vim /my-sql/mysql-master/conf/my.cnf

将内容粘贴进my.cnf文件

[client]

# 指定编码格式为utf8,默认的MySQL会有中文乱码问题

default_character_set=utf8

[mysqld]

collation_server=utf8_general_ci

character_set_server=utf8

# 全局唯一id(不允许有相同的)

server_id=200

binlog-ignore-db=mysql

# 指定MySQL二进制日志(可以修改)

log-bin=order-mysql-bin

# binlog最大容量

binlog_cache_size=1M

# 二进制日志格式(这里指定的是混合日志)

binlog_format=mixed

# binlog的有效期(单位:天)

expire_logs_days=7

slave_skip_errors=1062

- 3:重启该mysql容器实例

docker restart mysql-master

- 4:进入容器内部,并登陆mysql(密码默认是123456)

$ docker exec -it mysql-master /bin/bash

$ mysql -uroot -p

- 5:在Master节点的MySQL中创建用户和分配权限

在主数据库创建的该帐号密码只是用来进行同步数据。

create user 'slave'@'%' identified by '123456';

grant replication slave, replication client on *.* to 'slave'@'%';

Slave从节点配置

- 1:运行一个MySQL容器实例,作为slave节点(从节点)

docker run -p 3308:3306 \

-v /my-sql/mysql-slave/log:/var/log/mysql \

-v /my-sql/mysql-slave/data:/var/lib/mysql \

-v /my-sql/mysql-slave/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name mysql-slave \

-d mysql:5.7

- 2:创建my.cnf文件(也就是mysql的配置文件)

vim /my-sql/mysql-slave/conf/my.cnf

将内容粘贴进my.cnf文件

[client]

# 指定编码格式为utf8,默认的MySQL会有中文乱码问题

default_character_set=utf8

[mysqld]

collation_server=utf8_general_ci

character_set_server=utf8

# 全局唯一id(不允许有相同的)

server_id=201

binlog-ignore-db=mysql

# 指定MySQL二进制日志(可以修改)

log-bin=order-mysql-bin

# binlog最大容量

binlog_cache_size=1M

# 二进制日志格式(这里指定的是混合日志)

binlog_format=mixed

# binlog的有效期(单位:天)

expire_logs_days=7

slave_skip_errors=1062

# 表示slave将复制事件写进自己的二进制日志

log_slave_updates=1

# 表示从机只能读

read_only=1

- 3:重启该mysql容器实例

docker restart mysql-slave

- 4:查看容器实例是否都是up

[root@k8s-master ~]# docker ps | grep mysql

3be6e547ab4e mysql:5.7 "docker-entrypoint.s…" About a minute ago Up 24 seconds 33060/tcp, 0.0.0.0:3308->3306/tcp, :::3308->3306/tcp mysql-slave

214fed096342 mysql:5.7 "docker-entrypoint.s…" 7 minutes ago Up 4 minutes 33060/tcp, 0.0.0.0:3307->3306/tcp, :::3307->3306/tcp mysql-master

- 5:进入从机MySQL

$ docker exec -it mysql-slave /bin/bash

$ mysql -uroot -p

- 6:查询Master数据库所在的服务器(复制起来):

[Master数据库所在的服务器 ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.184.100 netmask 255.255.255.0 broadcast 192.168.184.255

inet6 fe80::224c:e6cc:c9ab:f4e0 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:af:f8:9f txqueuelen 1000 (Ethernet)

RX packets 113915 bytes 164657385 (157.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 12073 bytes 970949 (948.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

- 7:在从数据库(slave)配置主从同步(记住下面这个命令要在从(slave)数据库执行)

在Master节点中找到ens33的ip地址并放到下面的master_host中(记住这个ip是Master数据库所在的服务器ip,不是从Slave数据库的ip):

change master to master_host='192.168.184.100',master_user='slave',master_password='123456',master_port=3307,master_log_file='order-mysql-bin.000001',master_log_pos=617,master_connect_retry=30;

配置参数解析

master_host:主数据库(Master)的 ip 地址

master_user:在Master数据库中创建的用来进行同步的帐号

master_password:在Master数据库中创建的用来进行同步的帐号的密码

master_port:Master数据库的 MySQL端口号,这里是3307

master_log_file:MySQL的 binlog文件名

master_log_pos:binlog读取位置

- 8:在从数据库(slave)查看主从同步状态:

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State:

Master_Host: 192.168.184.100

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 617

Relay_Log_File: 3be6e547ab4e-relay-bin.000001

Relay_Log_Pos: 4

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: No

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 617

Relay_Log_Space: 154

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: NULL

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 0

Master_UUID:

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State:

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.15 sec)

ERROR:

No query specified

我们找到里面的Slave_IO_Running: No,Slave_SQL_Running: No属性,发现都是No的状态,证明主从同步还没有开始。。。

- 9:在从数据库(slave)正式开启主从同步

start slave;

- 10:再次在从数据库中查看主从同步状态

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.184.100

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 617

Relay_Log_File: 3be6e547ab4e-relay-bin.000002

Relay_Log_Pos: 326

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 617

Relay_Log_Space: 540

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 200

Master_UUID: a606bab7-1736-11ed-9207-0242ac110002

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.09 sec)

ERROR:

No query specified

Both fields are now Yes, so replication is running.
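The manual check above (both Slave_IO_Running and Slave_SQL_Running must be Yes) can also be scripted. Below is a minimal Java sketch, assuming you have captured the text printed by show slave status \G as a string. The class SlaveStatusCheck and its methods are hypothetical helpers written for this illustration; they are not part of MySQL or ShardingSphere.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: parses the "Key: Value" lines printed by
// `show slave status \G` and checks the two replication thread flags.
final class SlaveStatusCheck {

    // Turns the vertical output into a field map. Lines without a colon
    // (blank lines, the "*** 1. row ***" separator) are skipped.
    static Map<String, String> parse(String output) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String line : output.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon <= 0) {
                continue;
            }
            String key = line.substring(0, colon).trim();
            String value = line.substring(colon + 1).trim();
            fields.put(key, value);
        }
        return fields;
    }

    // Replication is healthy only when both the IO thread and the SQL thread run.
    static boolean isReplicating(Map<String, String> fields) {
        return "Yes".equals(fields.get("Slave_IO_Running"))
                && "Yes".equals(fields.get("Slave_SQL_Running"));
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "Slave_IO_State: Waiting for master to send event",
                "Slave_IO_Running: Yes",
                "Slave_SQL_Running: Yes",
                "Seconds_Behind_Master: 0");
        System.out.println("replicating = " + isReplicating(parse(sample)));
    }
}
```

Only the first colon on a line is treated as the separator, so values that contain colons themselves (such as a Last_Error message) are kept whole.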

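You can also connect to both databases with Navicat. If Navicat cannot connect to the MySQL instances running in Docker, try one of the following:

Option 1: stop the firewall (test environments only; never do this in production)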

sudo systemctl stop firewalld

Option 2: open the required firewall ports, for example 3307 for the master database and 3308 for the slave database (use this option in production)

Replication failure (Slave_SQL_Running: No)

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.184.132

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 3752

Relay_Log_File: 2c4136668536-relay-bin.000002

Relay_Log_Pos: 326

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 1007

Last_Error: Error 'Can't create database 'mall'; database exists' on query. Default database: 'mall'. Query: 'create database mall'

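- Slave_SQL_Running is No. Why?

- The reason is in Last_Error: Error 'Can't create database 'mall'; database exists' on query. Default database: 'mall'. Query: 'create database mall'

- The master and the slave had diverged. I had run commands directly on the slave, so the mall database already existed there when the master's create database mall statement was replayed. The statement failed and replication stopped.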
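The diagnosis above boils down to reading Last_Errno and deciding what it means. As a rough illustration, the sketch below maps a few SQL-thread error numbers to hints. Only 1007 comes from this article; 1032 and 1062 are other MySQL server error codes added here as assumptions about what you might run into, and the class ReplicationErrorHint is a hypothetical name.

```java
// Hypothetical helper: turns the Last_Errno value from `show slave status`
// into a short hint about how the slave diverged from the master.
final class ReplicationErrorHint {

    static String hint(int lastErrno) {
        switch (lastErrno) {
            case 0:
                return "No SQL-thread error recorded.";
            case 1007: // ER_DB_CREATE_EXISTS, the error shown in this article
                return "Database already exists on the slave: it was created there directly.";
            case 1032: // ER_KEY_NOT_FOUND (assumption: not covered by the article)
                return "Row not found on the slave: it was deleted or never replicated.";
            case 1062: // ER_DUP_ENTRY (assumption: not covered by the article)
                return "Duplicate key on the slave: the row was inserted there directly.";
            default:
                return "Unrecognized error " + lastErrno + ": read Last_Error for details.";
        }
    }

    public static void main(String[] args) {
        System.out.println(hint(1007));
    }
}
```

In every one of these cases the underlying cause is the same as in the article: the slave was modified outside of replication.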

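How to fix Slave_SQL_Running: No

1: Make the master and the slave identical again, that is, delete the extra databases and tables so that both return to a freshly installed MySQL state. (In production, back up your data first!)

2: Stop replication: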

stop slave;

3: Start replication again:

start slave;

4: Done! Checking the status again shows both threads running:

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.184.132

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: order-mysql-bin.000001

Read_Master_Log_Pos: 3752

Relay_Log_File: 2c4136668536-relay-bin.000003

Relay_Log_Pos: 326

Relay_Master_Log_File: order-mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Create a table on the Master and Slave MySQL instances ⭐

Database (orderdb1)

CREATE DATABASE orderdb1;

Table (orderdb1.order)

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

DROP TABLE IF EXISTS `order`;

CREATE TABLE `order` (

`order_id` bigint(20) NOT NULL,

`order_info` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`user_id` bigint(20) NOT NULL,

PRIMARY KEY (`order_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = DYNAMIC;

SET FOREIGN_KEY_CHECKS = 1;

- View the database:

Configuring read/write splitting in Sharding-Proxy ⭐

- 1: If Sharding-Proxy is already running, stop it first:

[root@k8s-master bin]# pwd

/root/sharding-proxy/sharding-proxy-4.1.1/bin

[root@k8s-master bin]# ./stop.sh

- 2: Delete the config-master_slave.yaml file:

rm -rf /root/sharding-proxy/sharding-proxy-4.1.1/conf/config-master_slave.yaml

- 3: Recreate and edit the config-master_slave.yaml file:

vi /root/sharding-proxy/sharding-proxy-4.1.1/conf/config-master_slave.yaml

With the following content:

# Name of the logical database that sharding-proxy exposes for read/write splitting (default: master_slave_db)

schemaName: master_slave_db

# Data sources

dataSources:

  master_ds: # a data source named master_ds

    url: jdbc:mysql://192.168.184.100:3307/orderdb1?serverTimezone=UTC&useSSL=false

    username: root

    password: 123456

    connectionTimeoutMilliseconds: 30000

    idleTimeoutMilliseconds: 60000

    maxLifetimeMilliseconds: 1800000

    maxPoolSize: 50

  slave_ds_1: # a data source named slave_ds_1

    url: jdbc:mysql://192.168.184.100:3308/orderdb1?serverTimezone=UTC&useSSL=false

    username: root

    password: 123456

    connectionTimeoutMilliseconds: 30000

    idleTimeoutMilliseconds: 60000

    maxLifetimeMilliseconds: 1800000

    maxPoolSize: 50

masterSlaveRule: # this is where read/write splitting is actually configured

  name: ms_ds01

  masterDataSourceName: master_ds # name of the master data source (defined under dataSources above)

  slaveDataSourceNames: # list of slave data sources (defined under dataSources above)

    - slave_ds_1 # name of a slave data source
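To make the effect of masterSlaveRule more concrete, here is a deliberately simplified Java sketch of the routing idea: writes go to the master data source, reads are spread over the slave data sources. This is not ShardingSphere's implementation. The class ReadWriteRouter is hypothetical, the round-robin choice is an assumption, and the sketch ignores transactions, hints, and statements such as SELECT ... FOR UPDATE that a real proxy has to handle.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified router: decides which data source name a
// statement should be sent to under a master/slave rule.
final class ReadWriteRouter {

    private final String master;
    private final List<String> slaves;
    private final AtomicInteger next = new AtomicInteger();

    ReadWriteRouter(String master, List<String> slaves) {
        this.master = master;
        this.slaves = new ArrayList<>(slaves); // defensive copy
    }

    // SELECT statements go to a slave (round-robin); everything else,
    // including INSERT/UPDATE/DELETE and DDL, goes to the master.
    String route(String sql) {
        String normalized = sql.trim().toLowerCase(Locale.ROOT);
        boolean isRead = normalized.startsWith("select");
        if (!isRead || slaves.isEmpty()) {
            return master;
        }
        int index = Math.floorMod(next.getAndIncrement(), slaves.size());
        return slaves.get(index);
    }

    public static void main(String[] args) {
        ReadWriteRouter router =
                new ReadWriteRouter("master_ds", Collections.singletonList("slave_ds_1"));
        System.out.println(router.route("INSERT INTO `order` VALUES (1, 'x', 2)"));
        System.out.println(router.route("SELECT * FROM `order`"));
    }
}
```

With the configuration above there is a single slave, so every read lands on slave_ds_1; adding more entries to slaveDataSourceNames would spread reads across them.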

- 4: Start sharding-proxy:

[root@k8s-master bin]# pwd

/root/sharding-proxy/sharding-proxy-4.1.1/bin

[root@k8s-master bin]# ./start.sh 3366

Starting the Sharding-Proxy ...

The port is 3366

The classpath is /root/sharding-proxy/sharding-proxy-4.1.1/conf:.:..:/root/sharding-proxy/sharding-proxy-4.1.1/lib/*:/root/sharding-proxy/sharding-proxy-4.1.1/lib/*:/root/sharding-proxy/sharding-proxy-4.1.1/ext-lib/*

Please check the STDOUT file: /root/sharding-proxy/sharding-proxy-4.1.1/logs/stdout.log

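- 5: application.properties:

# Disable Sharding-JDBC (we are not using it here, so it has to be turned off first)

spring.shardingsphere.enabled=false

# Configure a plain JDBC data source (it connects to master_slave_db, the read/write-splitting logical database of sharding-proxy)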

spring.datasource.type=com.alibaba.druid.pool.DruidDataSource

spring.datasource.driver-class-name=com.mysql.jdbc.Driver

# Important: point the url at the sharding-proxy IP and port (3366), and use master_slave_db (the sharding-proxy read/write-splitting logical database) as the database name

spring.datasource.url=jdbc:mysql://192.168.184.100:3366/master_slave_db?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC

# sharding-proxy username

spring.datasource.username=root

# sharding-proxy password

spring.datasource.password=root

# When mapping entities and properties, convert underscore-separated database names to camelCase, e.g. order_id ---> orderId

mybatis-plus.configuration.map-underscore-to-camel-case=true

# This setting is required

spring.main.allow-bean-definition-overriding=true
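A common mistake at this step is leaving spring.datasource.url pointed at a MySQL port (3307 or 3308) instead of the proxy. The sketch below is one possible sanity check, assuming the URL has the jdbc:mysql://host:port/schema?params shape used above. The class ProxyUrlCheck is a hypothetical helper that relies only on java.net.URI from the JDK.

```java
import java.net.URI;

// Hypothetical helper: extracts the port and schema from a MySQL JDBC URL
// so a test can confirm the application targets sharding-proxy.
final class ProxyUrlCheck {

    static int port(String jdbcUrl) {
        return toUri(jdbcUrl).getPort();
    }

    static String schema(String jdbcUrl) {
        String path = toUri(jdbcUrl).getPath();
        return path.startsWith("/") ? path.substring(1) : path;
    }

    // Strips the "jdbc:" prefix so the rest parses as an ordinary URI.
    private static URI toUri(String jdbcUrl) {
        String prefix = "jdbc:";
        if (!jdbcUrl.startsWith(prefix)) {
            throw new IllegalArgumentException("Not a JDBC URL: " + jdbcUrl);
        }
        return URI.create(jdbcUrl.substring(prefix.length()));
    }

    public static void main(String[] args) {
        String url = "jdbc:mysql://192.168.184.100:3366/master_slave_db"
                + "?useSSL=false&autoReconnect=true&characterEncoding=UTF-8&serverTimezone=UTC";
        System.out.println(port(url) + " " + schema(url));
    }
}
```

The port should match the one passed to start.sh (3366 here) and the schema should match schemaName in config-master_slave.yaml.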

- 6: Test methods:

// Test replication and read/write splitting (write operation)

@Test

void addOrder() {

    Order order = new Order();

    order.setOrderId(166666L)

            .setOrderInfo("Testing sharding-proxy replication and read/write splitting")

            .setUserId(1002L);

    orderMapper.insert(order);

}

// Test read/write splitting (read operation)

@Test

void selectOrder() {

    List<Order> orders = orderMapper.selectList(null);

    orders.forEach(System.out::println);

}