Huawei bug report: has the Huawei NPU really become "far ahead"?

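This post reproduces a bug I reported to the Ascend / pytorch community. In it I tested the actual compute performance of the NPU and found a considerable gap between the device memory actually available on the Huawei NPU and the figure advertised at sale (see Issue 1 for compute, Issue 2 for memory).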

So is it really "far ahead", or something else?

Bug summary

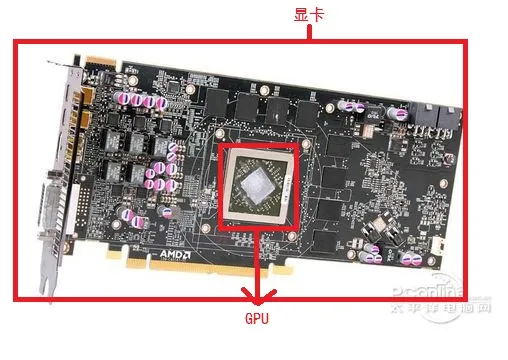

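The NPU in this machine is an Atlas 300I Pro.

This issue reports two problems and lists my environment information at the end. I hope to get an explanation soon.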

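Issue 1: inference is slow and eventually fails with an error; the log shows only 1.20 it/s (the figure printed by the "Loading checkpoint shards" progress bar)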

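Issue 2: NPU memory accounting appears to differ from that of a GPU; what is the reason?

Issue 1:

Code that triggers the error:

Running fp16 inference on the NPU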

import torch

import torch_npu

from accelerate import Accelerator

accelerator = Accelerator()

from accelerate import dispatch_model

device = accelerator.device

# source '/home/HwHiAiUser/Ascend/ascend-toolkit/set_env.sh'

x = torch.randn(2, 2).npu()

y = torch.randn(2, 2).npu()

z = x.mm(y)

print(z)

print(device)

# from modelscope import Model, AutoTokenizer

# model = Model.from_pretrained("modelscope/Llama-2-7b-ms", revision='v1.0.1', device_map=device, torch_dtype=torch.float16)

# tokenizer = AutoTokenizer.from_pretrained("modelscope/Llama-2-7b-ms", revision='v1.0.1')

# prompt = "Hey, are you conscious? Can you talk to me?"

# inputs = tokenizer(prompt, return_tensors="pt")

# # Generate

# generate_ids = model.generate(inputs.input_ids.to(model.device), max_length=30)

# print(tokenizer.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0])

from modelscope import AutoModelForCausalLM, AutoTokenizer, snapshot_download

from modelscope import GenerationConfig

# Note: The default behavior now has injection attack prevention off.

model_dir = snapshot_download("qwen/Qwen-7B-Chat", revision = 'v1.1.4')

tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)

# use bf16

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="auto", trust_remote_code=True, bf16=True).eval()

# use fp16

model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True, fp16=True).eval()

# use cpu only

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="cpu", trust_remote_code=True).eval()

# use auto mode, automatically select precision based on the device.

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True).eval()

# Specify hyperparameters for generation

model.generation_config = GenerationConfig.from_pretrained(model_dir, trust_remote_code=True) # generation length, top_p and other hyperparameters can be set here

# 1st dialogue turn

response, history = model.chat(tokenizer, "你好", history=None)

print(response)

# 你好!很高兴为你提供帮助。

# 2nd dialogue turn

response, history = model.chat(tokenizer, "给我讲一个年轻人奋斗创业最终取得成功的故事。", history=history)

print(response)

# 这是一个关于一个年轻人奋斗创业最终取得成功的故事。

# 故事的主人公叫李明,他来自一个普通的家庭,父母都是普通的工人。从小,李明就立下了一个目标:要成为一名成功的企业家。

# 为了实现这个目标,李明勤奋学习,考上了大学。在大学期间,他积极参加各种创业比赛,获得了不少奖项。他还利用课余时间去实习,积累了宝贵的经验。

# 毕业后,李明决定开始自己的创业之路。他开始寻找投资机会,但多次都被拒绝了。然而,他并没有放弃。他继续努力,不断改进自己的创业计划,并寻找新的投资机会。

# 最终,李明成功地获得了一笔投资,开始了自己的创业之路。他成立了一家科技公司,专注于开发新型软件。在他的领导下,公司迅速发展起来,成为了一家成功的科技企业。

# 李明的成功并不是偶然的。他勤奋、坚韧、勇于冒险,不断学习和改进自己。他的成功也证明了,只要努力奋斗,任何人都有可能取得成功。

# 3rd dialogue turn

response, history = model.chat(tokenizer, "给这个故事起一个标题", history=history)

print(response)

# 《奋斗创业:一个年轻人的成功之路》

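Addendum: I modified the code so that it is possible to tell at which step the error occurs; the full console output is included with the error output below.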

import torch

import torch_npu

from accelerate import Accelerator

accelerator = Accelerator()

from accelerate import dispatch_model

device = accelerator.device

# source '/home/HwHiAiUser/Ascend/ascend-toolkit/set_env.sh'

x = torch.randn(2, 2).npu()

y = torch.randn(2, 2).npu()

z = x.mm(y)

print(z)

print(device)

# from modelscope import Model, AutoTokenizer

# model = Model.from_pretrained("modelscope/Llama-2-7b-ms", revision='v1.0.1', device_map=device, torch_dtype=torch.float16)

# tokenizer = AutoTokenizer.from_pretrained("modelscope/Llama-2-7b-ms", revision='v1.0.1')

# prompt = "Hey, are you conscious? Can you talk to me?"

# inputs = tokenizer(prompt, return_tensors="pt")

# # Generate

# generate_ids = model.generate(inputs.input_ids.to(model.device), max_length=30)

# print(tokenizer.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0])

from modelscope import AutoModelForCausalLM, AutoTokenizer, snapshot_download

from modelscope import GenerationConfig

# Note: The default behavior now has injection attack prevention off.

model_dir = snapshot_download("qwen/Qwen-7B-Chat", revision = 'v1.1.4')

tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)

# use bf16

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="auto", trust_remote_code=True, bf16=True).eval()

# use fp16

model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True, fp16=True).eval()

# use cpu only

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="cpu", trust_remote_code=True).eval()

# use auto mode, automatically select precision based on the device.

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True).eval()

# Specify hyperparameters for generation

model.generation_config = GenerationConfig.from_pretrained(model_dir, trust_remote_code=True) # generation length, top_p and other hyperparameters can be set here

response, history = model.chat(tokenizer, input("请输入问题:"), history=None)

print(response)

while True:

    # follow-up dialogue turns, repeated until the process is interrupted or fails

    response, history = model.chat(tokenizer, input("请输入问题:"), history=history)

    print(response)
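To put a number on how slow each turn is, every call in the loop above could be timed. The following is a minimal sketch, not the code used in the report: `timed_turn` is a hypothetical helper, and `fake_chat` is a stand-in for `model.chat` so that the example runs without the model or the NPU.

```python
import time
from typing import Callable, Tuple


def timed_turn(chat_fn: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Run one chat turn and return (response, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    response = chat_fn(prompt)
    elapsed = time.perf_counter() - start
    return response, elapsed


def fake_chat(prompt: str) -> str:
    # Hypothetical stand-in for model.chat(...); sleeps briefly to simulate work.
    time.sleep(0.01)
    return "echo: " + prompt


for turn, prompt in enumerate(["hello", "tell me a story"], start=1):
    response, elapsed = timed_turn(fake_chat, prompt)
    print(f"turn {turn}: {elapsed:.3f} s for {len(response)} characters")
```

Running the same prompts once with the model on the NPU and once on the CPU would turn "about as slow as the CPU" into two comparable numbers per turn.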

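Error output:

With the code above, inference takes about as long as pure-CPU inference, and the third dialogue turn fails with an error. The console output is as follows: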

(NPU) (base) [HwHiAiUser@bogon Code]$ /home/HwHiAiUser/下载/yes/envs/NPU/bin/python /home/HwHiAiUser/Code/main.py

Warning: Device do not support double dtype now, dtype cast repalce with float.

tensor([[-1.8972, -0.0742],

[-0.6470, -0.0174]], device='npu:0')

npu

2023-10-23 00:27:21,121 - modelscope - INFO - PyTorch version 2.1.0+cpu Found.

2023-10-23 00:27:21,122 - modelscope - INFO - Loading ast index from /home/HwHiAiUser/.cache/modelscope/ast_indexer

2023-10-23 00:27:21,141 - modelscope - INFO - Loading done! Current index file version is 1.9.3, with md5 068f7e60e6f05d224ec8ad9a969f5922 and a total number of 943 components indexed

2023-10-23 00:27:21,675 - modelscope - INFO - Use user-specified model revision: v1.1.4

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/tiktoken/core.py:50: ResourceWarning: unclosed <ssl.SSLSocket fd=123, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=6, laddr=('192.168.175.2', 57384), raddr=('39.101.130.40', 443)>

self._core_bpe = _tiktoken.CoreBPE(mergeable_ranks, special_tokens, pat_str)

Warning: please make sure that you are using the latest codes and checkpoints, especially if you used Qwen-7B before 09.25.2023.请使用最新模型和代码,尤其如果你在9月25日前已经开始使用Qwen-7B,千万注意不要使用错误代码和模型。

Warning: import flash_attn rotary fail, please install FlashAttention rotary to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/rotary

Warning: import flash_attn rms_norm fail, please install FlashAttention layer_norm to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/layer_norm

Warning: import flash_attn fail, please install FlashAttention to get higher efficiency https://github.com/Dao-AILab/flash-attention

Loading checkpoint shards: 100%|██████████████████████| 8/8 [00:06<00:00, 1.20it/s]

[W OpCommand.cpp:75] Warning: [Check][offset] Check input storage_offset[%ld] = 0 failed, result is untrustworthy4096 (function operator())

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/generation/logits_process.py:407: UserWarning: AutoNonVariableTypeMode is deprecated and will be removed in 1.10 release. For kernel implementations please use AutoDispatchBelowADInplaceOrView instead, If you are looking for a user facing API to enable running your inference-only workload, please use c10::InferenceMode. Using AutoDispatchBelowADInplaceOrView in user code is under risk of producing silent wrong result in some edge cases. See Note [AutoDispatchBelowAutograd] for more details. (Triggered internally at /opt/_internal/cpython-3.9.0/lib/python3.9/site-packages/torch/include/ATen/core/LegacyTypeDispatch.h:74.)

sorted_indices_to_remove[..., -self.min_tokens_to_keep :] = 0

[W AddKernelNpu.cpp:86] Warning: The oprator of add is executed, Currently High Accuracy but Low Performance OP with 64-bit has been used, Please Do Some Cast at Python Functions with 32-bit for Better Performance! (function operator())

[W NeKernelNpu.cpp:28] Warning: The oprator of ne is executed, Currently High Accuracy but Low Performance OP with 64-bit has been used, Please Do Some Cast at Python Functions with 32-bit for Better Performance! (function operator())

你好!有什么我能为你做的吗?

好的,我给你讲一个年轻人奋斗创业最终取得成功的故事。这个故事叫做《奋斗》。

故事的主人公是一个叫做李明的年轻人,他出生在一个普通的家庭,但他有一个梦想,那就是成为一名企业家。他从小就对创业有着浓厚的兴趣,经常参加各种创业比赛,也曾经在大学期间创办过一家小型的创业公司。

然而,创业的道路并不容易,李明经历了许多挫折和困难。他的公司一度面临破产的危险,但他并没有放弃,而是更加努力地工作,寻找新的机会和资源。

最终,李明的努力得到了回报,他的公司开始慢慢发展起来,他也因此获得了许多荣誉和奖励。他的故事告诉我们,只要有梦想,有勇气,有毅力,就一定能够实现自己的创业梦想。

EZ9999: Inner Error!

EZ9999 Kernel task happen error, retCode=0x28, [aicpu timeout].[FUNC:PreCheckTaskErr][FILE:task_info.cc][LINE:1574]

TraceBack (most recent call last):

rtStreamSynchronizeWithTimeout execute failed, reason=[aicpu timeout][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:50]

synchronize stream failed, runtime result = 507017[FUNC:ReportCallError][FILE:log_inner.cpp][LINE:161]

DEVICE[0] PID[18400]:

EXCEPTION STREAM:

Exception info:TGID=18400, model id=65535, stream id=3, stream phase=3

Message info[0]:RTS_HWTS: Aicpu timeout, slot_id=12, stream_id=3, task_id=6200

Other info[0]:time=2023-10-23-00:50:30.892.993, function=process_hwts_timeout_exception, line=3745, error code=0x28

Traceback (most recent call last):

File "/home/HwHiAiUser/Code/main.py", line 66, in <module>

response, history = model.chat(tokenizer, "给这个故事起一个标题", history=history)

File "/home/HwHiAiUser/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/modeling_qwen.py", line 1199, in chat

outputs = self.generate(

File "/home/HwHiAiUser/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/modeling_qwen.py", line 1318, in generate

return super().generate(

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/torch/utils/_contextlib.py", line 115, in decorate_context

return func(*args, **kwargs)

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/generation/utils.py", line 1652, in generate

return self.sample(

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/generation/utils.py", line 2793, in sample

if unfinished_sequences.max() == 0:

RuntimeError: ACL stream synchronize failed.

[W NPUStream.cpp:372] Warning: NPU warning, error code is 507017[Error]:

[Error]: The aicpu execution times out.

Rectify the fault based on the error information in the log, or you can ask us at follwing gitee link by issues: https://gitee.com/ascend/pytorch/issue

EH9999: Inner Error!

rtDeviceSynchronize execute failed, reason=[aicpu timeout][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:50]

EH9999 wait for compute device to finish failed, runtime result = 507017.[FUNC:ReportCallError][FILE:log_inner.cpp][LINE:161]

TraceBack (most recent call last):

(function npuSynchronizeDevice)

[W NPUStream.cpp:372] Warning: NPU warning, error code is 507017[Error]:

[Error]: The aicpu execution times out.

Rectify the fault based on the error information in the log, or you can ask us at follwing gitee link by issues: https://gitee.com/ascend/pytorch/issue

EH9999: Inner Error!

rtDeviceSynchronize execute failed, reason=[aicpu timeout][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:50]

EH9999 wait for compute device to finish failed, runtime result = 507017.[FUNC:ReportCallError][FILE:log_inner.cpp][LINE:161]

TraceBack (most recent call last):

(function npuSynchronizeDevice)

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/tempfile.py:821: ResourceWarning: Implicitly cleaning up <TemporaryDirectory '/tmp/tmpaxnr032b'>

_warnings.warn(warn_message, ResourceWarning)

Console output of the modified code:

(NPU) (base) [HwHiAiUser@bogon Code]$ /home/HwHiAiUser/下载/yes/envs/NPU/bin/python /home/HwHiAiUser/Code/main.py

Warning: Device do not support double dtype now, dtype cast repalce with float.

tensor([[-0.3798, 0.5290],

[-0.7580, -0.6727]], device='npu:0')

npu

2023-10-23 08:28:35,064 - modelscope - INFO - PyTorch version 2.1.0+cpu Found.

2023-10-23 08:28:35,065 - modelscope - INFO - Loading ast index from /home/HwHiAiUser/.cache/modelscope/ast_indexer

2023-10-23 08:28:35,084 - modelscope - INFO - Loading done! Current index file version is 1.9.3, with md5 068f7e60e6f05d224ec8ad9a969f5922 and a total number of 943 components indexed

2023-10-23 08:28:35,538 - modelscope - INFO - Use user-specified model revision: v1.1.4

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/tiktoken/core.py:50: ResourceWarning: unclosed <ssl.SSLSocket fd=124, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=6, laddr=('192.168.175.2', 58846), raddr=('39.101.130.40', 443)>

self._core_bpe = _tiktoken.CoreBPE(mergeable_ranks, special_tokens, pat_str)

Warning: please make sure that you are using the latest codes and checkpoints, especially if you used Qwen-7B before 09.25.2023.请使用最新模型和代码,尤其如果你在9月25日前已经开始使用Qwen-7B,千万注意不要使用错误代码和模型。

Warning: import flash_attn rotary fail, please install FlashAttention rotary to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/rotary

Warning: import flash_attn rms_norm fail, please install FlashAttention layer_norm to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/layer_norm

Warning: import flash_attn fail, please install FlashAttention to get higher efficiency https://github.com/Dao-AILab/flash-attention

Loading checkpoint shards: 100%|██████████████████████| 8/8 [00:05<00:00, 1.37it/s]

请输入问题:你好!你是谁

[W OpCommand.cpp:75] Warning: [Check][offset] Check input storage_offset[%ld] = 0 failed, result is untrustworthy4096 (function operator())

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/generation/logits_process.py:407: UserWarning: AutoNonVariableTypeMode is deprecated and will be removed in 1.10 release. For kernel implementations please use AutoDispatchBelowADInplaceOrView instead, If you are looking for a user facing API to enable running your inference-only workload, please use c10::InferenceMode. Using AutoDispatchBelowADInplaceOrView in user code is under risk of producing silent wrong result in some edge cases. See Note [AutoDispatchBelowAutograd] for more details. (Triggered internally at /opt/_internal/cpython-3.9.0/lib/python3.9/site-packages/torch/include/ATen/core/LegacyTypeDispatch.h:74.)

sorted_indices_to_remove[..., -self.min_tokens_to_keep :] = 0

[W AddKernelNpu.cpp:86] Warning: The oprator of add is executed, Currently High Accuracy but Low Performance OP with 64-bit has been used, Please Do Some Cast at Python Functions with 32-bit for Better Performance! (function operator())

[W NeKernelNpu.cpp:28] Warning: The oprator of ne is executed, Currently High Accuracy but Low Performance OP with 64-bit has been used, Please Do Some Cast at Python Functions with 32-bit for Better Performance! (function operator())

我是通义千问,由阿里云开发的AI助手。我被设计用来回答各种问题、提供信息和与用户进行对话。有什么我可以帮助你的吗?

请输入问题:

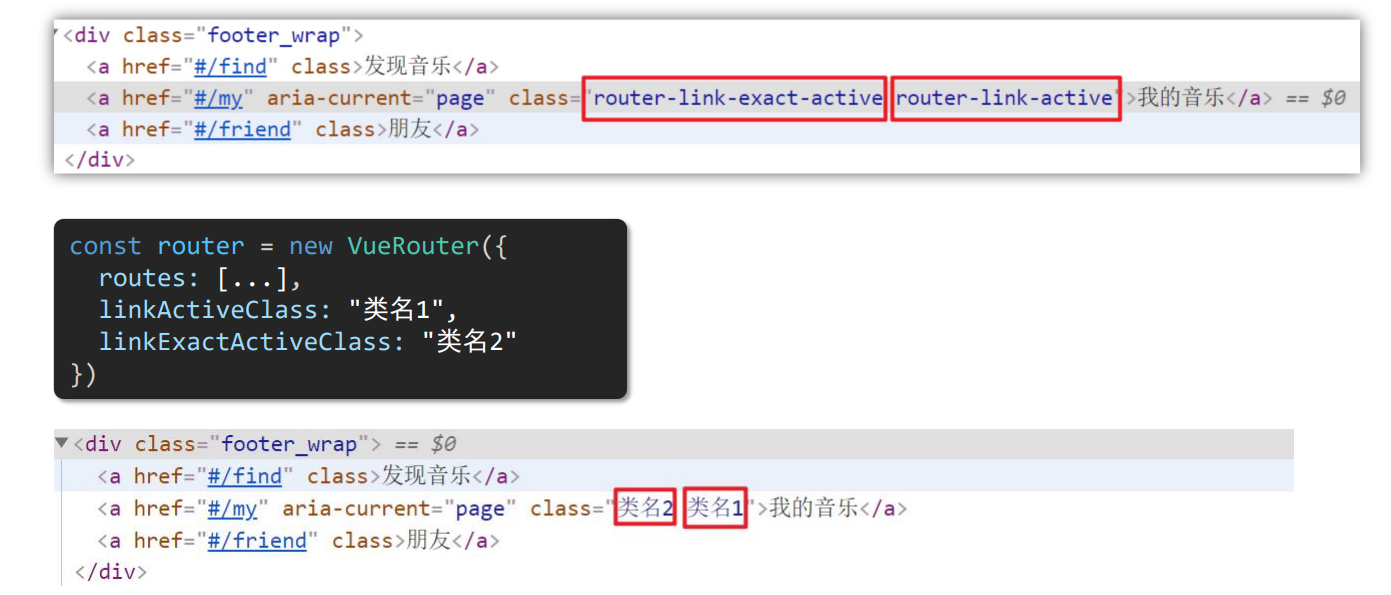

Issue 2:

Code that triggers the error:

import torch

import torch_npu

from accelerate import Accelerator

accelerator = Accelerator()

from accelerate import dispatch_model

device = accelerator.device

# source '/home/HwHiAiUser/Ascend/ascend-toolkit/set_env.sh'

x = torch.randn(2, 2).npu()

y = torch.randn(2, 2).npu()

z = x.mm(y)

print(z)

print(device)

# from modelscope import Model, AutoTokenizer

# model = Model.from_pretrained("modelscope/Llama-2-7b-ms", revision='v1.0.1', device_map=device, torch_dtype=torch.float16)

# tokenizer = AutoTokenizer.from_pretrained("modelscope/Llama-2-7b-ms", revision='v1.0.1')

# prompt = "Hey, are you conscious? Can you talk to me?"

# inputs = tokenizer(prompt, return_tensors="pt")

# # Generate

# generate_ids = model.generate(inputs.input_ids.to(model.device), max_length=30)

# print(tokenizer.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0])

from modelscope import AutoModelForCausalLM, AutoTokenizer, snapshot_download

from modelscope import GenerationConfig

# Note: The default behavior now has injection attack prevention off.

model_dir = snapshot_download("qwen/Qwen-7B-Chat", revision = 'v1.1.4')

tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)

# use bf16

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="auto", trust_remote_code=True, bf16=True).eval()

# use fp16

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True, fp16=True).eval()

# use cpu only

# model = AutoModelForCausalLM.from_pretrained(model_dir, device_map="cpu", trust_remote_code=True).eval()

# use auto mode, automatically select precision based on the device.

model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True).eval()

# Specify hyperparameters for generation

model.generation_config = GenerationConfig.from_pretrained(model_dir, trust_remote_code=True) # generation length, top_p and other hyperparameters can be set here

# 1st dialogue turn

response, history = model.chat(tokenizer, "你好", history=None)

print(response)

# 你好!很高兴为你提供帮助。

# 2nd dialogue turn

response, history = model.chat(tokenizer, "给我讲一个年轻人奋斗创业最终取得成功的故事。", history=history)

print(response)

# 这是一个关于一个年轻人奋斗创业最终取得成功的故事。

# 故事的主人公叫李明,他来自一个普通的家庭,父母都是普通的工人。从小,李明就立下了一个目标:要成为一名成功的企业家。

# 为了实现这个目标,李明勤奋学习,考上了大学。在大学期间,他积极参加各种创业比赛,获得了不少奖项。他还利用课余时间去实习,积累了宝贵的经验。

# 毕业后,李明决定开始自己的创业之路。他开始寻找投资机会,但多次都被拒绝了。然而,他并没有放弃。他继续努力,不断改进自己的创业计划,并寻找新的投资机会。

# 最终,李明成功地获得了一笔投资,开始了自己的创业之路。他成立了一家科技公司,专注于开发新型软件。在他的领导下,公司迅速发展起来,成为了一家成功的科技企业。

# 李明的成功并不是偶然的。他勤奋、坚韧、勇于冒险,不断学习和改进自己。他的成功也证明了,只要努力奋斗,任何人都有可能取得成功。

# 3rd dialogue turn

response, history = model.chat(tokenizer, "给这个故事起一个标题", history=history)

print(response)

# 《奋斗创业:一个年轻人的成功之路》

Error output:

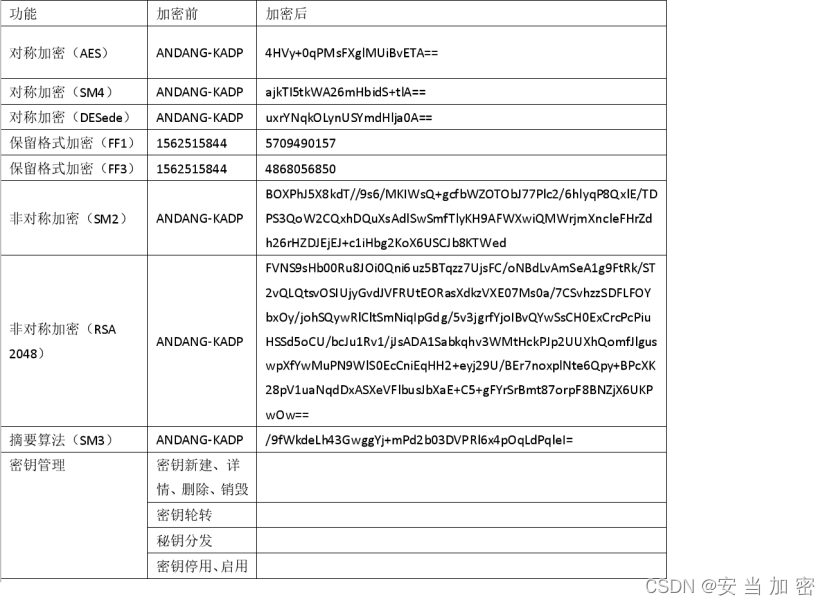

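Loading an unquantized, full-precision 7B model for inference should never take more than 20 GB of device memory on a GPU, yet on the NPU it ran out of memory.

In addition, the Atlas 300I Pro was listed with 24 GB of memory when I bought it, but the card I received only has 20 GB. I need a reasonable explanation.

Below are the output and the error from running the code above (the auto-precision variant):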
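A back-of-the-envelope estimate is useful when reading the 20 GB expectation. The sketch below assumes a nominal 7 billion parameters (the exact count is not given in this post) and counts the weights only, ignoring activations and the KV cache. The Issue 2 log prints "Flash attention will be disabled because it does NOT support fp32", which suggests that the auto mode loaded the weights as fp32; that reading is an inference from the log, not something confirmed by the maintainers.

```python
# Bytes needed to store one parameter in each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Assumption: "7B" taken literally as 7e9 parameters.
NOMINAL_PARAMS = 7e9


def weight_gib(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_gib(NOMINAL_PARAMS, dtype):.1f} GiB")
```

Under these assumptions the fp32 weights alone come to about 26 GiB and the fp16 weights to about 13 GiB, so whether the 20 GB bound holds depends on which precision was actually loaded.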

(NPU) (base) [HwHiAiUser@bogon Code]$ /home/HwHiAiUser/下载/yes/envs/NPU/bin/python /home/HwHiAiUser/Code/main.py

Warning: Device do not support double dtype now, dtype cast repalce with float.

tensor([[ 0.0766, 0.2028],

[-2.3419, -1.6132]], device='npu:0')

npu

2023-10-23 07:56:42,901 - modelscope - INFO - PyTorch version 2.1.0+cpu Found.

2023-10-23 07:56:42,901 - modelscope - INFO - Loading ast index from /home/HwHiAiUser/.cache/modelscope/ast_indexer

2023-10-23 07:56:42,919 - modelscope - INFO - Loading done! Current index file version is 1.9.3, with md5 068f7e60e6f05d224ec8ad9a969f5922 and a total number of 943 components indexed

2023-10-23 07:56:43,931 - modelscope - INFO - Use user-specified model revision: v1.1.4

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/tiktoken/core.py:50: ResourceWarning: unclosed <ssl.SSLSocket fd=124, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=6, laddr=('192.168.175.2', 57986), raddr=('39.101.130.40', 443)>

self._core_bpe = _tiktoken.CoreBPE(mergeable_ranks, special_tokens, pat_str)

Warning: please make sure that you are using the latest codes and checkpoints, especially if you used Qwen-7B before 09.25.2023.请使用最新模型和代码,尤其如果你在9月25日前已经开始使用Qwen-7B,千万注意不要使用错误代码和模型。

Flash attention will be disabled because it does NOT support fp32.

Warning: import flash_attn rotary fail, please install FlashAttention rotary to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/rotary

Warning: import flash_attn rms_norm fail, please install FlashAttention layer_norm to get higher efficiency https://github.com/Dao-AILab/flash-attention/tree/main/csrc/layer_norm

Warning: import flash_attn fail, please install FlashAttention to get higher efficiency https://github.com/Dao-AILab/flash-attention

Loading checkpoint shards: 62%|█████████████▊ | 5/8 [00:06<00:04, 1.38s/it]

Traceback (most recent call last):

File "/home/HwHiAiUser/Code/main.py", line 45, in <module>

model = AutoModelForCausalLM.from_pretrained(model_dir, device_map=device, trust_remote_code=True).eval()

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/modelscope/utils/hf_util.py", line 181, in from_pretrained

module_obj = module_class.from_pretrained(model_dir, *model_args,

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/models/auto/auto_factory.py", line 560, in from_pretrained

return model_class.from_pretrained(

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/modelscope/utils/hf_util.py", line 78, in from_pretrained

return ori_from_pretrained(cls, model_dir, *model_args, **kwargs)

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/modeling_utils.py", line 3307, in from_pretrained

) = cls._load_pretrained_model(

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/modeling_utils.py", line 3695, in _load_pretrained_model

new_error_msgs, offload_index, state_dict_index = _load_state_dict_into_meta_model(

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/transformers/modeling_utils.py", line 741, in _load_state_dict_into_meta_model

set_module_tensor_to_device(model, param_name, param_device, **set_module_kwargs)

File "/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/site-packages/accelerate/utils/modeling.py", line 317, in set_module_tensor_to_device

new_value = value.to(device)

RuntimeError: NPU out of memory. Tried to allocate 66.00 MiB (NPU 0; 0 bytes total capacity; 19.09 GiB already allocated; 19.09 GiB current active; 0 bytes free; 19.31 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation.

/home/HwHiAiUser/下载/yes/envs/NPU/lib/python3.9/tempfile.py:821: ResourceWarning: Implicitly cleaning up <TemporaryDirectory '/tmp/tmpuhsmwj2v'>

_warnings.warn(warn_message, ResourceWarning)
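The out-of-memory message in the traceback above packs several quantities into one line. As a reading aid, the following sketch pulls them out with regular expressions and converts them to MiB; the message text is copied from the log, while `parse_oom` and the field names are hypothetical and only match this particular wording.

```python
import re

# Message text copied from the traceback above (trailing hint omitted).
OOM_MESSAGE = (
    "NPU out of memory. Tried to allocate 66.00 MiB (NPU 0; 0 bytes total capacity; "
    "19.09 GiB already allocated; 19.09 GiB current active; 0 bytes free; "
    "19.31 GiB reserved in total by PyTorch)"
)

UNIT_TO_MIB = {"bytes": 1 / 2**20, "KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0}

FIELD_RE = re.compile(
    r"(?P<value>\d+(?:\.\d+)?) (?P<unit>bytes|KiB|MiB|GiB) "
    r"(?P<label>total capacity|already allocated|current active|free|reserved)"
)
REQUEST_RE = re.compile(r"Tried to allocate (\d+(?:\.\d+)?) (bytes|KiB|MiB|GiB)")


def parse_oom(message: str) -> dict:
    """Return the quantities named in the message, all converted to MiB."""
    fields = {
        m["label"]: float(m["value"]) * UNIT_TO_MIB[m["unit"]]
        for m in FIELD_RE.finditer(message)
    }
    request = REQUEST_RE.search(message)
    if request:
        fields["requested"] = float(request.group(1)) * UNIT_TO_MIB[request.group(2)]
    return fields


fields = parse_oom(OOM_MESSAGE)
for label, mib in fields.items():
    print(f"{label}: {mib:.2f} MiB")
print(f"reserved - allocated: {fields['reserved'] - fields['already allocated']:.2f} MiB")
```

On these numbers the allocator had reserved roughly 225 MiB more than was allocated. The message also reports both total capacity and free memory as 0 bytes, which may mean those fields were simply not filled in rather than measured.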

Environment information

Firmware version check

(NPU) [HwHiAiUser@localhost ~]$ sudo /usr/local/Ascend/driver/tools/upgrade-tool --device_index -1 --component -1 --version

{

Get component version(6.4.12.1.241) succeed for deviceId(0), componentType(11).

{"device_id":0, "component":hboot1a, "version":6.4.12.1.241}

Get component version(6.4.12.1.241) succeed for deviceId(0), componentType(12).

{"device_id":0, "component":hboot1b, "version":6.4.12.1.241}

Get component version(6.4.12.1.241) succeed for deviceId(0), componentType(18).

{"device_id":0, "component":hlink, "version":6.4.12.1.241}

}

npu-smi info

(NPU) [HwHiAiUser@localhost ~]$ npu-smi info

+--------------------------------------------------------------------------------------------------------+

| npu-smi 23.0.rc2 Version: 23.0.rc2 |

+-------------------------------+-----------------+------------------------------------------------------+

| NPU Name | Health | Power(W) Temp(C) Hugepages-Usage(page) |

| Chip Device | Bus-Id | AICore(%) Memory-Usage(MB) |

+===============================+=================+======================================================+

| 8 310P3 | OK | NA 37 0 / 0 |

| 0 0 | 0000:01:00.0 | 0 1700 / 21527 |

+===============================+=================+======================================================+

+-------------------------------+-----------------+------------------------------------------------------+

| NPU Chip | Process id | Process name | Process memory(MB) |

+===============================+=================+======================================================+

| No running processes found in NPU 8 |

+===============================+=================+======================================================+

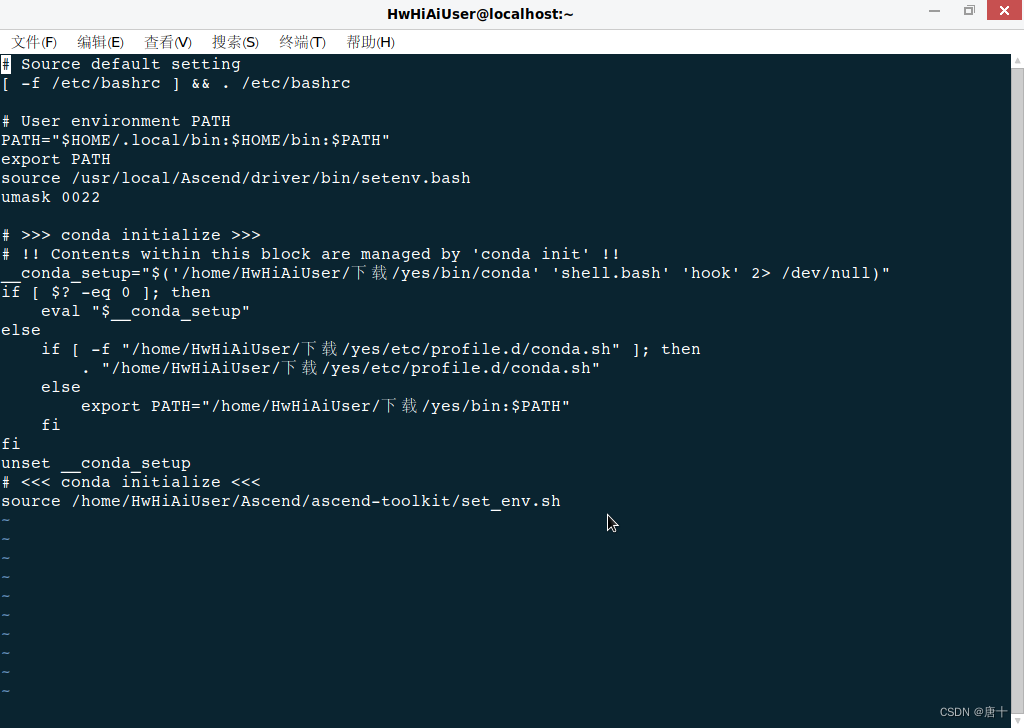
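The memory figures in the `npu-smi info` table can be put next to the advertised capacity with a little arithmetic. This sketch parses the chip row copied from the table above; `memory_usage_mb` is a hypothetical helper, and treating 1 GB as 1024 MB is an assumption about how npu-smi reports its units.

```python
import re

# Chip row copied from the npu-smi output above.
NPU_SMI_ROW = "| 0 0 | 0000:01:00.0 | 0 1700 / 21527 |"


def memory_usage_mb(row: str):
    """Return (used, total) from the trailing 'used / total' pair of a row."""
    match = re.search(r"(\d+)\s*/\s*(\d+)\s*\|\s*$", row)
    if match is None:
        raise ValueError("no 'used / total' pair found in row")
    return int(match.group(1)), int(match.group(2))


used, total = memory_usage_mb(NPU_SMI_ROW)
free = total - used
print(f"used={used} MB, total={total} MB, free={free} MB")
print(f"total is {total / 1024:.2f} GB if 1 GB = 1024 MB")
```

If this snapshot is representative and npu-smi's MB are the same unit as PyTorch's MiB (both assumptions), 19827 MB were free before the model was loaded. The 19.31 GiB reserved in the Issue 2 error (about 19773 MiB) plus the 66 MiB request comes to about 19839 MiB, just over that figure.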

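CANN installation

CANN 7.0.RC1.alpha003, the release that matches PyTorch 2.1.0, is installed and the environment is configured correctly; the following code runs without problems: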

import torch

import torch_npu

# source '/home/HwHiAiUser/Ascend/ascend-toolkit/set_env.sh'

x = torch.randn(2, 2).npu()

y = torch.randn(2, 2).npu()

z = x.mm(y)

print(z)

Output:

(NPU) (base) [HwHiAiUser@bogon Code]$ /home/HwHiAiUser/下载/yes/envs/NPU/bin/python /home/HwHiAiUser/Code/main.py

Warning: Device do not support double dtype now, dtype cast repalce with float.

tensor([[ 0.0766, 0.2028],

[-2.3419, -1.6132]], device='npu:0')
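The environment section lists the firmware, driver and CANN versions; the versions of the Python packages involved could be listed alongside them. A minimal sketch using only the standard library follows. The package names are taken from the imports and log lines in this post, and whether each distribution is registered under exactly that name is an assumption, so a missing entry is reported rather than treated as an error.

```python
from importlib import metadata

# Names taken from the imports and logs in this post; the exact
# distribution names on a given system are an assumption.
PACKAGES = ["torch", "torch_npu", "transformers", "accelerate", "modelscope"]


def installed_versions(names):
    """Map each package name to its installed version, or None if not found."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions(PACKAGES).items():
    print(f"{name}: {version or 'not installed'}")
```

The logs earlier in the post already show one of these values ("PyTorch version 2.1.0+cpu Found."); printing them all in one place makes the report easier to compare against a compatibility table.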