1. Dataset preparation

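Create a project folder named ResNet and, inside it, a data_set folder to hold the dataset. Under data_set, create a new folder named "flower_data". Download the flower classification dataset from https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz and extract the archive into the flower_data folder, which gives flower_data/flower_photos. Then run the "split_data.py" script to split the dataset automatically into a training set (train) and a validation set (val). The script looks for flower_data relative to the current working directory, so run it from inside data_set.

split_data.py is as follows: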

import os
import random
from shutil import copy, rmtree


def mk_file(file_path: str):
    if os.path.exists(file_path):
        # If the folder already exists, delete it before recreating it
        rmtree(file_path)
    os.makedirs(file_path)


def main():
    # Fix the seed so that the split is reproducible
    random.seed(0)

    # Move 10% of the images of each class into the validation set
    split_rate = 0.1

    # Points to the extracted flower_photos folder
    cwd = os.getcwd()
    data_root = os.path.join(cwd, "flower_data")
    origin_flower_path = os.path.join(data_root, "flower_photos")
    assert os.path.exists(origin_flower_path), "path '{}' does not exist.".format(origin_flower_path)

    flower_class = [cla for cla in os.listdir(origin_flower_path)
                    if os.path.isdir(os.path.join(origin_flower_path, cla))]

    # Create the folder that holds the training set
    train_root = os.path.join(data_root, "train")
    mk_file(train_root)
    for cla in flower_class:
        # Create one subfolder per class
        mk_file(os.path.join(train_root, cla))

    # Create the folder that holds the validation set
    val_root = os.path.join(data_root, "val")
    mk_file(val_root)
    for cla in flower_class:
        # Create one subfolder per class
        mk_file(os.path.join(val_root, cla))

    for cla in flower_class:
        cla_path = os.path.join(origin_flower_path, cla)
        images = os.listdir(cla_path)
        num = len(images)
        # Randomly sample the file names that go into the validation set
        eval_index = set(random.sample(images, k=int(num * split_rate)))
        for index, image in enumerate(images):
            image_path = os.path.join(cla_path, image)
            if image in eval_index:
                # Copy files assigned to the validation set
                new_path = os.path.join(val_root, cla)
            else:
                # Copy files assigned to the training set
                new_path = os.path.join(train_root, cla)
            copy(image_path, new_path)
            print("\r[{}] processing [{}/{}]".format(cla, index + 1, num), end="")  # progress bar
        print()

    print("processing done!")


if __name__ == '__main__':
    main()

The train and val folders are then created under flower_data, which completes the dataset preparation.
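To sanity-check the split, one option is to count the images per class in each folder. The sketch below is only illustrative: the helper name count_images is an assumption (it is not part of split_data.py), and it simply counts the entries in every class subfolder of a given root.

```python
import os


def count_images(root: str) -> dict:
    """Return {class_name: number_of_files} for every class subfolder of root."""
    counts = {}
    for cla in sorted(os.listdir(root)):
        cla_path = os.path.join(root, cla)
        if os.path.isdir(cla_path):
            counts[cla] = len(os.listdir(cla_path))
    return counts


if __name__ == "__main__":
    # Assumes the script is run from data_set, like split_data.py
    data_root = os.path.join(os.getcwd(), "flower_data")
    for split in ("train", "val"):
        split_root = os.path.join(data_root, split)
        if os.path.isdir(split_root):
            print(split, count_images(split_root))
```

With split_rate = 0.1, each class in val should hold roughly a tenth of that class's images, and train the rest.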

2. Defining the network

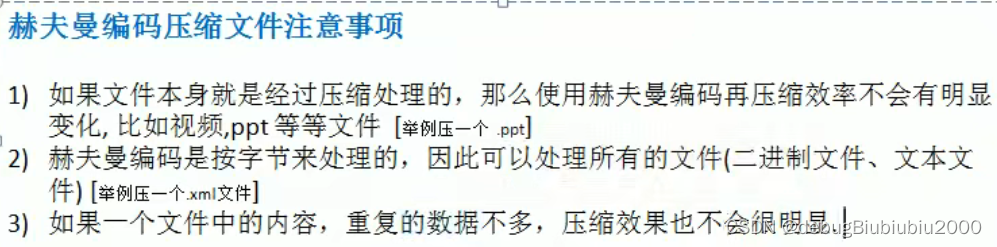

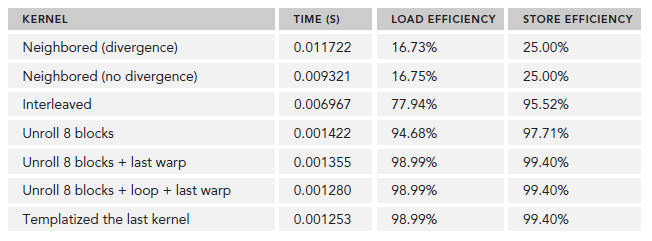

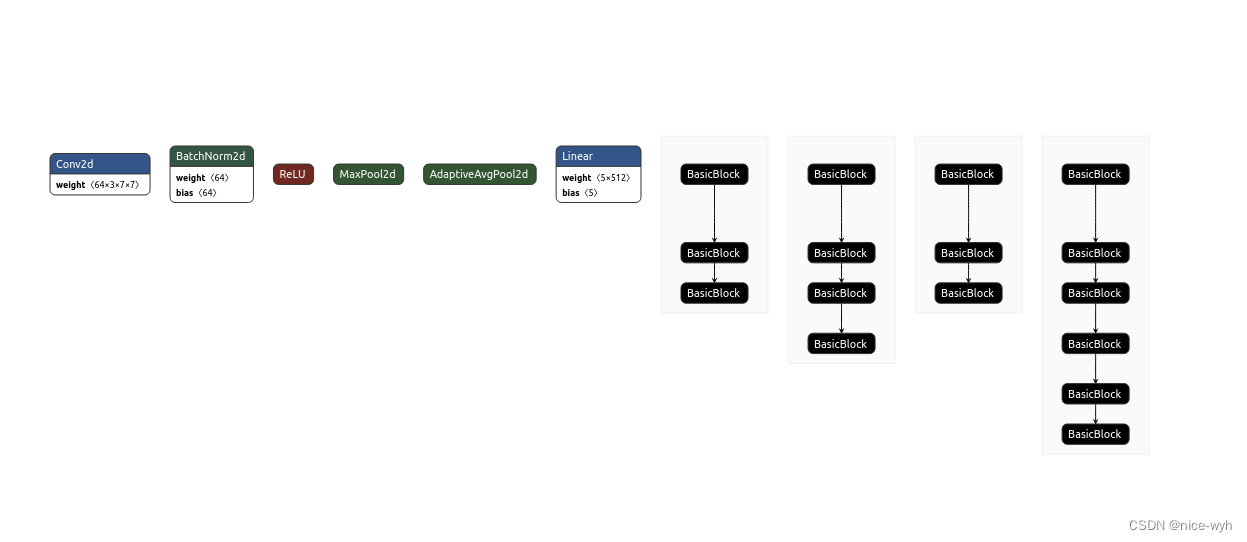

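Create model.py. It follows the ResNet architecture and the official PyTorch code, with only minor modifications. Two classes are defined first, BasicBlock and Bottleneck, which correspond to ResNet-18/34 and ResNet-50/101/152 respectively. The architecture table in the figures makes the difference clear.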

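As the table shows, the 18- and 34-layer networks build conv2_x, conv3_x, conv4_x and conv5_x from the same kind of block and differ only in the number of blocks per stage ([2, 2, 2, 2] versus [3, 4, 6, 3]). The 50-, 101- and 152-layer networks add 1x1 convolutions to each block, and their block counts differ as well.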
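The layer counts in the network names follow from these block counts. As a rough sketch (count_layers is a hypothetical helper, not part of model.py): each BasicBlock contributes 2 convolutions and each Bottleneck 3, and the initial 7x7 convolution and the final fully connected layer add 2 more. The 1x1 convolutions on the shortcut branch are not counted.

```python
def count_layers(convs_per_block: int, blocks_num: list) -> int:
    """Weighted layers = convolutions in all residual blocks + first conv + fc."""
    return convs_per_block * sum(blocks_num) + 2


print(count_layers(2, [2, 2, 2, 2]))   # BasicBlock -> 18
print(count_layers(2, [3, 4, 6, 3]))   # BasicBlock -> 34
print(count_layers(3, [3, 4, 6, 3]))   # Bottleneck -> 50
print(count_layers(3, [3, 4, 23, 3]))  # Bottleneck -> 101
```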

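Next comes the ResNet class, which implements the forward pass. For the 34-layer network (the figure is borrowed from a Zhihu post by 牧酱; ResNet-18 and ResNet-34 use the same block, so the 18-layer version is used for the explanation), the residual blocks for conv2_x and conv3_x are shown on the right of the figure. Pay particular attention to the stride when computing feature-map sizes. The size calculations are annotated in the comments of the code below.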
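The feature-map sizes can be checked by hand with the standard convolution output formula, out = floor((in + 2 * padding - kernel) / stride) + 1. The sketch below applies it to the spatial size only, for a 224x224 input; conv_out_size is an illustrative helper name, and the kernel, stride and padding values are the ones used in the model code of this section.

```python
def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


sizes = []
size = 224
size = conv_out_size(size, kernel=7, stride=2, padding=3)  # conv1
sizes.append(size)
size = conv_out_size(size, kernel=3, stride=2, padding=1)  # maxpool
sizes.append(size)
size = conv_out_size(size, kernel=3, stride=1, padding=1)  # layer1 (stride 1)
sizes.append(size)
for _ in range(3):                                         # layer2, layer3, layer4 (stride 2)
    size = conv_out_size(size, kernel=3, stride=2, padding=1)
    sizes.append(size)

print(sizes)  # [112, 56, 56, 28, 14, 7]
```

Only the first convolution of layer2, layer3 and layer4 uses stride 2, so each of those stages halves the height and width exactly once.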

Reference: the official PyTorch ResNet code.

The modified model.py:

import torch.nn as nn
import torch


class BasicBlock(nn.Module):  # residual block for the 18- and 34-layer networks
    expansion = 1

    def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        # downsample is not None: dashed-line residual structure (the shortcut needs a
        # 1x1 convolution to match dimensions); None: solid-line structure (no 1x1 convolution)
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        out += identity
        out = self.relu(out)

        return out

class Bottleneck(nn.Module):  # residual block for the 50-, 101- and 152-layer networks
    """
    Note: in the original paper, on the main branch of the dashed-line residual structure,
    the first 1x1 convolution has stride 2 and the 3x3 convolution has stride 1.
    In the official PyTorch implementation the first 1x1 convolution has stride 1
    and the 3x3 convolution has stride 2,
    which improves top-1 accuracy by roughly 0.5%.
    See ResNet v1.5: https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch
    """
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None, groups=1, width_per_group=64):
        super(Bottleneck, self).__init__()

        width = int(out_channel * (width_per_group / 64.)) * groups

        # squeeze channels
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=width,
                               kernel_size=1, stride=1, bias=False)
        self.bn1 = nn.BatchNorm2d(width)
        # -----------------------------------------
        self.conv2 = nn.Conv2d(in_channels=width, out_channels=width, groups=groups,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(width)
        # -----------------------------------------
        # unsqueeze channels
        self.conv3 = nn.Conv2d(in_channels=width, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        # downsample is not None: dashed-line residual structure (the shortcut needs a
        # 1x1 convolution to match dimensions); None: solid-line structure (no 1x1 convolution)
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        out += identity
        out = self.relu(out)

        return out

class ResNet(nn.Module):

    def __init__(self, block, blocks_num, num_classes=1000, include_top=True, groups=1, width_per_group=64):
        super(ResNet, self).__init__()
        self.include_top = include_top
        self.in_channel = 64

        self.groups = groups
        self.width_per_group = width_per_group

        # Shapes are (channel, height, width) for a 224x224 input with BasicBlock (expansion = 1)
        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)  # (3 224 224) -> (64 112 112)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)  # (64 112 112) -> (64 56 56)
        # layer1: the two convolutions of every block use stride 1
        self.layer1 = self._make_layer(block, 64, blocks_num[0])  # (64 56 56) -> (64 56 56)
        # layer2-4: the two convolutions of the first block use strides 2 and 1; the remaining blocks use 1 and 1
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)  # (64 56 56) -> (128 28 28)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)  # (128 28 28) -> (256 14 14)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)  # (256 14 14) -> (512 7 7)
        if self.include_top:
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):  # channel: number of kernels used by this stage
        downsample = None
        # ResNet-18/34: layer1 skips this branch (stride 1, channels unchanged), layer2-4 take it (stride 2).
        # ResNet-50/101/152: every layer takes it, because the channel count changes.
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion))

        layers = []
        layers.append(block(self.in_channel,
                            channel,
                            downsample=downsample,
                            stride=stride,
                            groups=self.groups,
                            width_per_group=self.width_per_group))
        self.in_channel = channel * block.expansion

        for _ in range(1, block_num):
            layers.append(block(self.in_channel,
                                channel,
                                groups=self.groups,
                                width_per_group=self.width_per_group))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

        return x

def resnet34(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnet34-333f7ec4.pth
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


def resnet50(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnet50-19c8e357.pth
    return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


def resnet101(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnet101-5d3b4d8f.pth
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)


def resnext50_32x4d(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth
    groups = 32
    width_per_group = 4
    return ResNet(Bottleneck, [3, 4, 6, 3],
                  num_classes=num_classes,
                  include_top=include_top,
                  groups=groups,
                  width_per_group=width_per_group)


def resnext101_32x8d(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth
    groups = 32
    width_per_group = 8
    return ResNet(Bottleneck, [3, 4, 23, 3],
                  num_classes=num_classes,
                  include_top=include_top,
                  groups=groups,
                  width_per_group=width_per_group)


if __name__ == "__main__":
    resnet = ResNet(BasicBlock, [3, 4, 6, 3], num_classes=5)
    in_data = torch.randn(1, 3, 224, 224)
    out = resnet(in_data)
    print(out)

Once the network is defined, run this file on its own to check the definition. If it prints an output without errors, the network is wired up correctly.

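Here the output is

tensor([[-0.4490, 0.5792, -0.5026, -0.6024, 0.1399]],
       grad_fn=<AddmmBackward0>)

A single row of five values, one per class, shows that the network is defined correctly. The values themselves differ from run to run because both the weights and the input are random.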

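3. Training

Loading the dataset

First define a dictionary of preprocessing pipelines for train and val. The training pipeline takes a random crop resized to 224x224 and applies a random horizontal flip (the validation set normally does not need this augmentation). The validation pipeline resizes the shorter side to 256 and center-crops to 224x224. Both then convert the image to a tensor and normalize it.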
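To make the last two steps concrete: ToTensor scales 8-bit pixel values to the range [0, 1], and Normalize then computes (x - mean) / std for each channel. The sketch below reproduces that arithmetic for a single RGB pixel in plain Python. The helper name normalize_pixel is illustrative only; the mean and std values are the ones passed to transforms.Normalize in the training script.

```python
MEAN = [0.485, 0.456, 0.406]  # per-channel mean used in the training script
STD = [0.229, 0.224, 0.225]   # per-channel standard deviation


def normalize_pixel(rgb):
    """Mimic ToTensor followed by Normalize for one (R, G, B) pixel."""
    scaled = [value / 255.0 for value in rgb]                   # ToTensor: [0, 255] -> [0, 1]
    return [(x - m) / s for x, m, s in zip(scaled, MEAN, STD)]  # Normalize


print(normalize_pixel((255, 128, 0)))
```

A channel value equal to the mean maps to 0, brighter values become positive and darker values negative.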

Then load the datasets with DataLoader, using a batch_size of 16 and nw worker processes to speed up data loading.

import os
import sys
import json

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms, datasets
from torch.utils.data import DataLoader
from tqdm import tqdm

from model import resnet34


def main():
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    print(f"using {device} device.")

    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        "val": transforms.Compose([transforms.Resize(256),
                                   transforms.CenterCrop(224),
                                   transforms.ToTensor(),
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

    # Path to the dataset
    image_path = os.path.join(os.getcwd(), "data_set", "flower_data")
    assert os.path.exists(image_path), f"{image_path} path does not exist."

    # Build the datasets
    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"), transform=data_transform["train"])
    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"), transform=data_transform["val"])

    batch_size = 16
    # number of dataloader workers, to speed up image preprocessing
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])
    print(f'Using {nw} dataloader workers every process')

    # Build the data loaders
    train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=nw)
    validate_loader = DataLoader(validate_dataset, batch_size=batch_size, shuffle=False, num_workers=nw)

    train_num = len(train_dataset)
    val_num = len(validate_dataset)
    print(f"using {train_num} images for training, {val_num} images for validation.")

Generating the JSON file

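Convert the class labels of the training set into a dictionary and write it to a file named 'class_indices.json'.

- Get the mapping from class label to index from train_dataset and store it in flower_list.
- Use a generator expression to swap the keys and values of flower_list, which gives a new dictionary cla_dict whose keys are the indices and whose values are the class labels.
- Use json.dumps() to convert cla_dict into a JSON string with an indent of 4 spaces.
- Use a with open() statement to open 'class_indices.json' in write mode and write the JSON string to it.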
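One detail of these steps is easy to miss: JSON object keys are always strings, so the integer indices come back as strings when the file is read again, and a lookup has to use str(index). A minimal sketch, with a hard-coded mapping standing in for train_dataset.class_to_idx (the class names are those of the flower_photos folders; the variable names are illustrative):

```python
import json

# Stand-in for train_dataset.class_to_idx (assumed values for this dataset)
class_to_idx = {"daisy": 0, "dandelion": 1, "roses": 2, "sunflowers": 3, "tulips": 4}

# Swap keys and values: index -> class name
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

json_str = json.dumps(idx_to_class, indent=4)
restored = json.loads(json_str)

# After the round trip the keys are strings, not integers
print(restored["1"])
print(1 in restored)
```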

    # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflowers':3, 'tulips':4}
    # Get the class-label-to-index mapping from the training set
    flower_list = train_dataset.class_to_idx
    # Swap keys and values to get cla_dict, which maps index -> class label
    cla_dict = dict((val, key) for key, val in flower_list.items())
    # write dict into json file
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

Loading the pretrained model and training

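First create the network object net. Transfer learning is used here to improve the training result: the pretrained ResNet-34 weights are loaded, and net.fc = nn.Linear(in_channel, 5) then replaces the final layer so that it outputs the number of classes (5 here). Training runs for 10 epochs, with train_bar = tqdm(train_loader, file=sys.stdout) displaying a progress bar; each step performs backpropagation and a parameter update. The learning rate stays fixed at 0.0001, since the code uses Adam without a scheduler. After each epoch the accuracy on the validation set is computed, and the model is saved whenever that accuracy improves.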

    # load pretrained weights
    # download url: https://download.pytorch.org/models/resnet34-333f7ec4.pth
    # (save the downloaded file as ./resnet34-pre.pth)
    net = resnet34()
    model_weight_path = "./resnet34-pre.pth"
    assert os.path.exists(model_weight_path), f"file {model_weight_path} does not exist."
    net.load_state_dict(torch.load(model_weight_path, map_location='cpu'))

    # replace the fc layer so that it outputs 5 classes
    in_channel = net.fc.in_features
    net.fc = nn.Linear(in_channel, 5)
    net.to(device)

    loss_function = nn.CrossEntropyLoss()
    optimizer = optim.Adam([p for p in net.parameters() if p.requires_grad], lr=0.0001)

    epochs = 10
    best_acc = 0.0
    train_steps = len(train_loader)
    for epoch in range(epochs):
        # train
        net.train()
        running_loss = 0.0
        train_bar = tqdm(train_loader, file=sys.stdout)
        for step, data in enumerate(train_bar):
            images, labels = data
            optimizer.zero_grad()
            logits = net(images.to(device))
            loss = loss_function(logits, labels.to(device))
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            train_bar.desc = f"train epoch[{epoch + 1}/{epochs}] loss:{loss.item():.3f}"

        # validate
        net.eval()
        acc = 0.0  # number of correct predictions in this epoch
        with torch.no_grad():
            val_bar = tqdm(validate_loader, file=sys.stdout)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                predict_y = torch.max(outputs, dim=1)[1]
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()
                val_bar.desc = f"valid epoch[{epoch + 1}/{epochs}]"

        val_accurate = acc / val_num
        print('[epoch %d] train_loss: %.3f  val_accuracy: %.3f' %
              (epoch + 1, running_loss / train_steps, val_accurate))

        if val_accurate > best_acc:
            best_acc = val_accurate
            # saves the whole model object; the prediction scripts load ./resnet34.pth
            torch.save(net, "./resnet34.pth")

    print('Finished Training')
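The validation accuracy in the loop above is the number of correct argmax predictions, summed over all batches, divided by the number of validation images. The same bookkeeping can be sketched in plain Python; the logits and labels below are made-up illustration data, and count_correct is a hypothetical helper that plays the role of torch.max(outputs, dim=1)[1] followed by torch.eq(...).sum().

```python
def count_correct(logits, labels):
    """Count rows whose largest logit sits at the index given by the label."""
    correct = 0
    for row, label in zip(logits, labels):
        predicted = max(range(len(row)), key=lambda i: row[i])  # argmax over classes
        correct += int(predicted == label)
    return correct


# Two made-up validation batches: (logits, labels)
batches = [
    ([[2.0, 0.1, -1.0], [0.2, 0.3, 0.9]], [0, 2]),
    ([[0.5, 1.5, 0.0]], [0]),
]

acc = 0
val_num = 0
for logits, labels in batches:
    acc += count_correct(logits, labels)
    val_num += len(labels)

val_accurate = acc / val_num
print(f"val_accuracy: {val_accurate:.3f}")
```

Dividing by the total number of images, rather than averaging per-batch accuracies, keeps the result correct when the last batch is smaller than the others.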

Finally, after tidying up, the complete train.py is as follows:

import os
import sys
import json

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms, datasets
from torch.utils.data import DataLoader
from tqdm import tqdm

from model import resnet34


def main():
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    print(f"using {device} device.")

    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        "val": transforms.Compose([transforms.Resize(256),
                                   transforms.CenterCrop(224),
                                   transforms.ToTensor(),
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

    # Path to the dataset
    image_path = os.path.join(os.getcwd(), "data_set", "flower_data")
    assert os.path.exists(image_path), f"{image_path} path does not exist."

    # Build the datasets
    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"), transform=data_transform["train"])
    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"), transform=data_transform["val"])

    batch_size = 16
    # number of dataloader workers, to speed up image preprocessing
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])
    print(f'Using {nw} dataloader workers every process')

    # Build the data loaders
    train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=nw)
    validate_loader = DataLoader(validate_dataset, batch_size=batch_size, shuffle=False, num_workers=nw)

    train_num = len(train_dataset)
    val_num = len(validate_dataset)
    print(f"using {train_num} images for training, {val_num} images for validation.")

    # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflowers':3, 'tulips':4}
    # Get the class-label-to-index mapping from the training set
    flower_list = train_dataset.class_to_idx
    # Swap keys and values to get cla_dict, which maps index -> class label
    cla_dict = dict((val, key) for key, val in flower_list.items())
    # write dict into json file
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    # load pretrained weights
    # download url: https://download.pytorch.org/models/resnet34-333f7ec4.pth
    # (save the downloaded file as ./resnet34-pre.pth)
    net = resnet34()
    model_weight_path = "./resnet34-pre.pth"
    assert os.path.exists(model_weight_path), f"file {model_weight_path} does not exist."
    net.load_state_dict(torch.load(model_weight_path, map_location='cpu'))

    # replace the fc layer so that it outputs 5 classes
    in_channel = net.fc.in_features
    net.fc = nn.Linear(in_channel, 5)
    net.to(device)

    loss_function = nn.CrossEntropyLoss()
    optimizer = optim.Adam([p for p in net.parameters() if p.requires_grad], lr=0.0001)

    epochs = 10
    best_acc = 0.0
    train_steps = len(train_loader)
    for epoch in range(epochs):
        # train
        net.train()
        running_loss = 0.0
        train_bar = tqdm(train_loader, file=sys.stdout)
        for step, data in enumerate(train_bar):
            images, labels = data
            optimizer.zero_grad()
            logits = net(images.to(device))
            loss = loss_function(logits, labels.to(device))
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            train_bar.desc = f"train epoch[{epoch + 1}/{epochs}] loss:{loss.item():.3f}"

        # validate
        net.eval()
        acc = 0.0  # number of correct predictions in this epoch
        with torch.no_grad():
            val_bar = tqdm(validate_loader, file=sys.stdout)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                predict_y = torch.max(outputs, dim=1)[1]
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()
                val_bar.desc = f"valid epoch[{epoch + 1}/{epochs}]"

        val_accurate = acc / val_num
        print('[epoch %d] train_loss: %.3f  val_accuracy: %.3f' %
              (epoch + 1, running_loss / train_steps, val_accurate))

        if val_accurate > best_acc:
            best_acc = val_accurate
            # saves the whole model object; the prediction scripts load ./resnet34.pth
            torch.save(net, "./resnet34.pth")

    print('Finished Training')


if __name__ == '__main__':
    main()

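4. Prediction

Create a predict.py file for prediction. The input image is preprocessed and converted to a tensor. img = torch.unsqueeze(img, dim=0) adds a dimension of size 1 at the front of the image tensor, changing its shape from [channels, height, width] to [1, channels, height, width]. The model is then loaded and used for prediction, and the result is printed and visualized.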

import os
import json

import torch
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt

# model.py must be importable: torch.load rebuilds the saved ResNet object from it
from model import resnet34


def main():
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    data_transform = transforms.Compose(
        [transforms.Resize(256),
         transforms.CenterCrop(224),
         transforms.ToTensor(),
         transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

    # load image
    img_path = "./2536282942_b5ca27577e.jpg"
    assert os.path.exists(img_path), f"file '{img_path}' does not exist."
    img = Image.open(img_path).convert("RGB")
    plt.imshow(img)
    # [C, H, W]
    img = data_transform(img)
    # expand batch dimension: add a dimension of size 1 at the front,
    # [channels, height, width] -> [1, channels, height, width]
    img = torch.unsqueeze(img, dim=0)

    # read class_indict
    with open('./class_indices.json', "r") as f:
        class_indict = json.load(f)

    # Load the whole model saved by train.py.
    # weights_only=False is needed on PyTorch >= 2.6 to unpickle a full model;
    # drop the argument on versions older than 1.13, which do not accept it.
    model = torch.load("./resnet34.pth", map_location=device, weights_only=False)

    # prediction
    model.eval()
    with torch.no_grad():
        # predict class
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)
        predict_class = torch.argmax(predict).item()

    print_result = f"class: {class_indict[str(predict_class)]}   prob: {predict[predict_class].item():.3}"
    plt.title(print_result)
    for i in range(len(predict)):
        print(f"class: {class_indict[str(i)]:10}   prob: {predict[i].item():.3}")
    plt.show()


if __name__ == '__main__':
    main()
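The step from raw network outputs to a predicted class and probability is a softmax followed by an argmax. A plain-Python sketch of that step is shown below; the softmax helper is illustrative rather than the torch implementation, and the logits are simply the five values printed by the untrained network earlier in this article.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    largest = max(logits)
    exps = [math.exp(x - largest) for x in logits]  # subtract the max to avoid overflow
    total = sum(exps)
    return [e / total for e in exps]


logits = [-0.4490, 0.5792, -0.5026, -0.6024, 0.1399]
probs = softmax(logits)
predict_class = max(range(len(probs)), key=lambda i: probs[i])  # argmax

print(predict_class, f"{probs[predict_class]:.3f}")
```

Softmax preserves the ordering of the logits, so the predicted class is the index of the largest logit; the probabilities only add a confidence value that sums to 1 over the classes.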

Prediction result

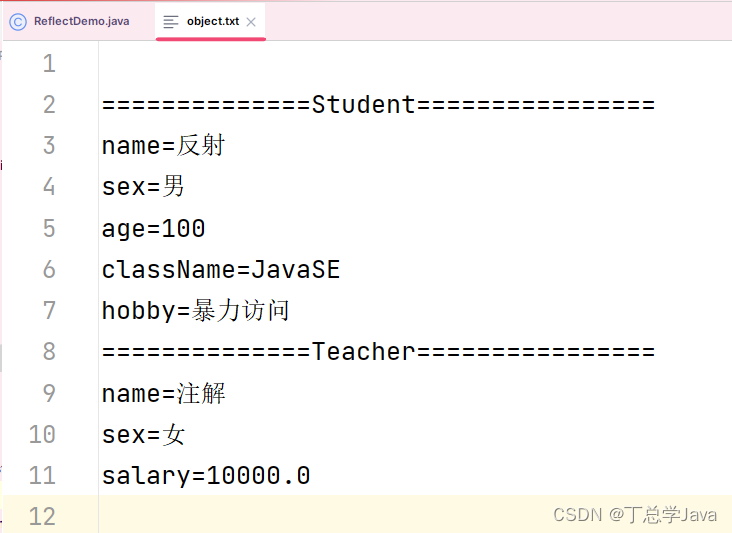

5. Model visualization

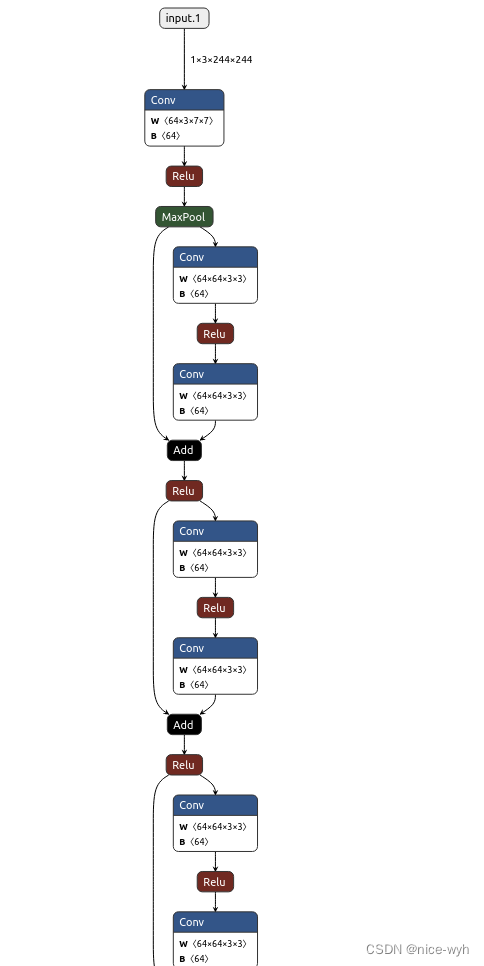

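Load the generated .pth file into the Netron tool; the visualization is shown in the figure.

The result is hard to read, so the model is converted to ONNX, a format commonly used for deployment on embedded devices.

Write onnx.py. Note that a script with this name can shadow the onnx package if that package gets imported while the script runs; a different file name, for example export_onnx.py, avoids the clash.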

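import torch

# model.py must be importable: torch.load rebuilds the saved ResNet object from it
from model import resnet34

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Load the whole model saved by train.py (adjust the path to your own setup).
# weights_only=False is needed on PyTorch >= 2.6; drop it on versions older than 1.13.
model = torch.load("/home/lm/Resnet/resnet34.pth", map_location=device, weights_only=False)
model.eval()

# Dummy input with the 224x224 size used during training
example = torch.ones(1, 3, 224, 224)
example = example.to(device)

torch.onnx.export(model, example, "resnet34.onnx", verbose=True, opset_version=11)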

Load the generated ONNX file into Netron; this visualization is much clearer.

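6. Batch prediction

Now create a data/imgs folder, put samples of the five classes to be predicted into it, and write code that predicts every sample in the folder, i.e. batch prediction.

batch_predict.py is as follows: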

import os
import json

import torch
from PIL import Image
from torchvision import transforms

# model.py must be importable: torch.load rebuilds the saved ResNet object from it
from model import resnet34


def main():
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    data_transform = transforms.Compose(
        [transforms.Resize(256),
         transforms.CenterCrop(224),
         transforms.ToTensor(),
         transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

    # load images
    # Folder that holds the images to predict
    imgs_root = "./data/imgs"
    assert os.path.exists(imgs_root), f"path '{imgs_root}' does not exist."
    # Collect the paths of all .jpg images in the folder
    img_path_list = [os.path.join(imgs_root, i) for i in os.listdir(imgs_root) if i.endswith(".jpg")]

    # read class_indict
    with open('./class_indices.json', "r") as json_file:
        class_indict = json.load(json_file)

    # Load the whole model saved by train.py.
    # weights_only=False is needed on PyTorch >= 2.6; drop it on versions older than 1.13.
    model = torch.load("./resnet34.pth", map_location=device, weights_only=False)

    # prediction
    model.eval()
    batch_size = 8  # number of images packed into one batch per forward pass
    with torch.no_grad():
        # Step through the list in strides of batch_size so that the final partial batch is included
        for start in range(0, len(img_path_list), batch_size):
            img_list = []
            for img_path in img_path_list[start: start + batch_size]:
                img = Image.open(img_path).convert("RGB")
                img = data_transform(img)
                img_list.append(img)

            # Stack all images in img_list into one batch
            batch_img = torch.stack(img_list, dim=0)
            # predict class
            output = model(batch_img.to(device)).cpu()
            predict = torch.softmax(output, dim=1)
            probs, classes = torch.max(predict, dim=1)

            for idx, (pro, cla) in enumerate(zip(probs, classes)):
                print(f"image: {img_path_list[start + idx]}  class: {class_indict[str(cla.item())]}  prob: {pro.item():.3}")


if __name__ == '__main__':
    main()

Running it prints:

image: ./data/imgs/455728598_c5f3e7fc71_m.jpg class: dandelion prob: 0.989

image: ./data/imgs/3464015936_6845f46f64.jpg class: dandelion prob: 0.999

image: ./data/imgs/3461986955_29a1abc621.jpg class: dandelion prob: 0.996

image: ./data/imgs/8223949_2928d3f6f6_n.jpg class: dandelion prob: 0.991

image: ./data/imgs/10919961_0af657c4e8.jpg class: dandelion prob: 1.0

image: ./data/imgs/10443973_aeb97513fc_m.jpg class: dandelion prob: 0.906

image: ./data/imgs/8475758_4c861ab268_m.jpg class: dandelion prob: 0.805

image: ./data/imgs/3857059749_fe8ca621a9.jpg class: dandelion prob: 1.0

image: ./data/imgs/2457473644_5242844e52_m.jpg class: dandelion prob: 1.0

image: ./data/imgs/146023167_f905574d97_m.jpg class: dandelion prob: 0.998

image: ./data/imgs/2502627784_4486978bcf.jpg class: dandelion prob: 0.488

image: ./data/imgs/2481428401_bed64dd043.jpg class: dandelion prob: 1.0

image: ./data/imgs/13920113_f03e867ea7_m.jpg class: dandelion prob: 1.0

image: ./data/imgs/2535769822_513be6bbe9.jpg class: dandelion prob: 0.997

image: ./data/imgs/3954167682_128398bf79_m.jpg class: dandelion prob: 1.0

image: ./data/imgs/2516714633_87f28f0314.jpg class: dandelion prob: 0.998

image: ./data/imgs/2634665077_597910235f_m.jpg class: dandelion prob: 0.996

image: ./data/imgs/3502447188_ab4a5055ac_m.jpg class: dandelion prob: 0.999

image: ./data/imgs/425800274_27dba84fac_n.jpg class: dandelion prob: 0.422

image: ./data/imgs/3365850019_8158a161a8_n.jpg class: dandelion prob: 1.0

image: ./data/imgs/674407101_57676c40fb.jpg class: dandelion prob: 1.0

image: ./data/imgs/2628514700_b6d5325797_n.jpg class: dandelion prob: 0.999

image: ./data/imgs/3688128868_031e7b53e1_n.jpg class: dandelion prob: 0.962

image: ./data/imgs/2502613166_2c231b47cb_n.jpg class: dandelion prob: 1.0

This gives the expected behaviour (all of my samples here are dandelions; a mix of classes works too).
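One point to watch in batch prediction is how the list of image paths is cut into batches. A loop that runs len(paths) // batch_size times silently skips the last images whenever the count is not a multiple of batch_size, whereas stepping through the list in strides of batch_size keeps the final partial batch. A minimal sketch with made-up file names (make_batches is an illustrative helper, not part of batch_predict.py):

```python
def make_batches(items, batch_size):
    """Split items into consecutive chunks; the last chunk may be smaller."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


paths = [f"img_{i}.jpg" for i in range(20)]  # 20 made-up file names
batches = make_batches(paths, 8)

print([len(b) for b in batches])         # [8, 8, 4]
print(len(paths) // 8, "full batches")   # floor division would stop after 2 batches
```

The 24 images in the output above happen to divide evenly into batches of 8, so both approaches cover all of them in that run.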

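7. Model improvements

Without the pretrained weights, training from scratch for 50 epochs reached an accuracy of a little over 80% in my runs, whereas with the pretrained weights the accuracy already reached 90% after the first epoch. This illustrates the benefit of transfer learning.

ResNet-34 is used here; the deeper 50-, 101- and 152-layer networks are also worth trying.

Other methods will be added later.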