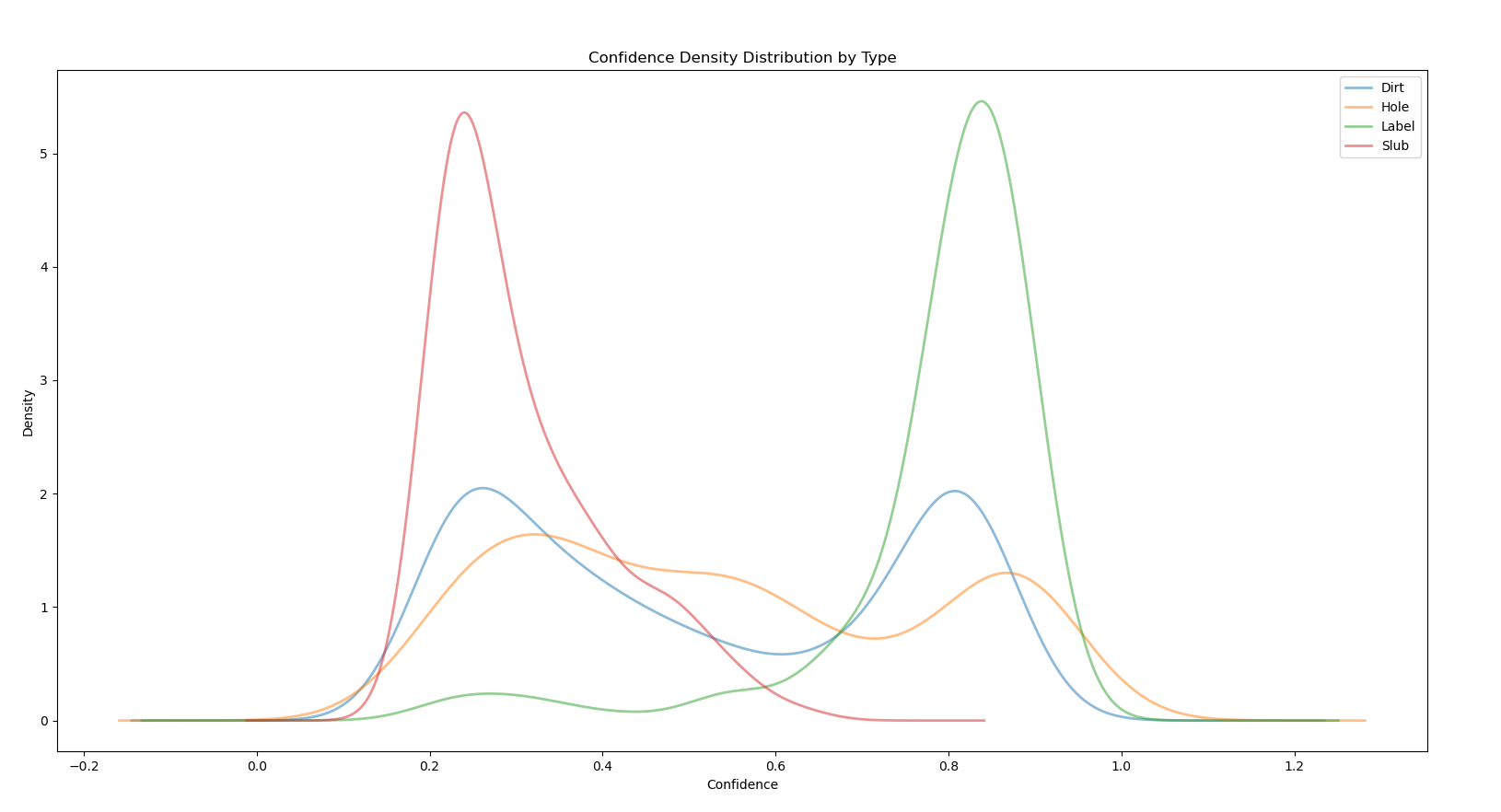

Neural Networks: Loss Functions and Backpropagation

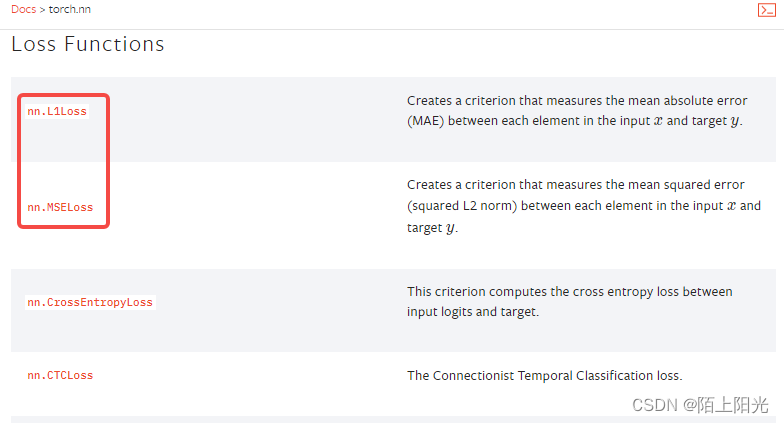

- Official documentation

- Loss functions

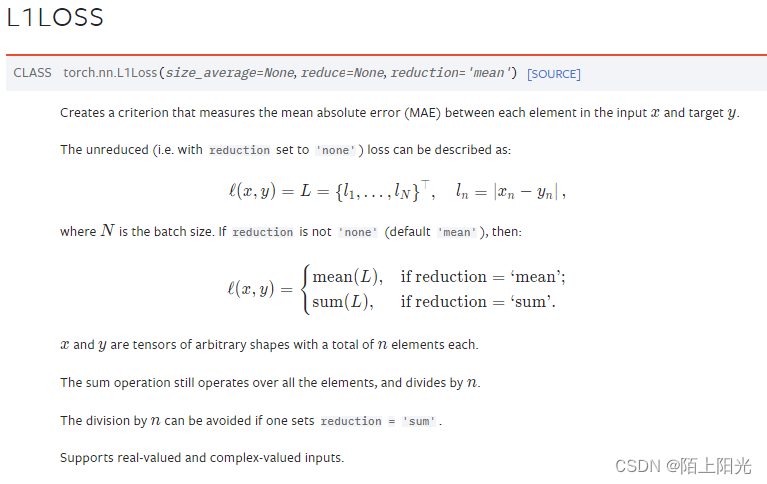

- L1Loss (MAE, mean absolute error)
- MSELoss (mean squared error)
- CrossEntropyLoss (cross-entropy loss)
- Notes
- code
- Backpropagation
- What it looks like in the debugger
- code

Study notes from the 小土堆 (Xiaotudui) PyTorch video series on Bilibili

Official documentation

https://pytorch.org/docs/stable/nn.html#loss-functions

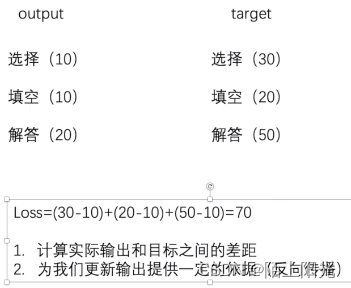

Loss functions

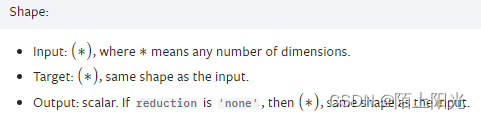

L1Loss (MAE, mean absolute error)

```python
import torch

input = torch.tensor([1, 2, 3], dtype=float)
# target = torch.tensor([1, 2, 5], dtype=float)
target = torch.tensor([[[[1, 2, 5]]]], dtype=float)  # shape [1, 1, 1, 3]

input = torch.reshape(input, (1, 1, 1, 3))
# target = torch.reshape(target, (1, 1, 1, 3))

print(input.shape)
print(target.shape)

loss1 = torch.nn.L1Loss()
loss2 = torch.nn.L1Loss(reduction="sum")

result1 = loss1(input, target)
print(result1)  # tensor(0.6667, dtype=torch.float64)

result2 = loss2(input, target)
print(result2)  # tensor(2., dtype=torch.float64)
```
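As a cross-check, L1Loss with the default `reduction="mean"` is the mean of the element-wise absolute differences, while `reduction="sum"` adds them up. Here |1-1| + |2-2| + |3-5| = 2, and 2 / 3 ≈ 0.6667. The sketch below reproduces this by hand. The helper `manual_l1` is hypothetical and not part of PyTorch. It uses float32 tensors, so the printed values will not carry the `dtype=torch.float64` tag.

```python
import torch
from torch import nn

def manual_l1(inp, tgt, reduction="mean"):
    # Hypothetical helper: element-wise |input - target|, then reduce
    diff = (inp - tgt).abs()
    if reduction == "mean":
        return diff.mean()
    if reduction == "sum":
        return diff.sum()
    return diff  # reduction="none": keep one loss value per element

inp = torch.tensor([1.0, 2.0, 3.0]).reshape(1, 1, 1, 3)
tgt = torch.tensor([1.0, 2.0, 5.0]).reshape(1, 1, 1, 3)

for reduction in ("mean", "sum", "none"):
    builtin = nn.L1Loss(reduction=reduction)(inp, tgt)
    manual = manual_l1(inp, tgt, reduction)
    # Compare PyTorch's result with the hand-written formula
    print(reduction, builtin, torch.allclose(builtin, manual))
```

`reduction="none"` is handy when you want to inspect which elements contribute most to the loss.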

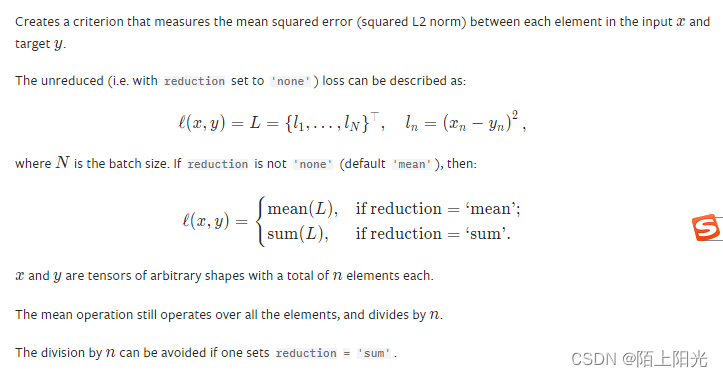

MSELoss (mean squared error)

```python
import torch

input = torch.tensor([1, 2, 3], dtype=float)
# target = torch.tensor([1, 2, 5], dtype=float)
target = torch.tensor([[[[1, 2, 5]]]], dtype=float)  # shape [1, 1, 1, 3]

input = torch.reshape(input, (1, 1, 1, 3))
# target = torch.reshape(target, (1, 1, 1, 3))

print(input.shape)
print(target.shape)

loss_mse = torch.nn.MSELoss(reduction='mean')
result_mse = loss_mse(input, target)
print(result_mse)  # tensor(1.3333, dtype=torch.float64)

loss_mse2 = torch.nn.MSELoss(reduction='sum')
result_mse2 = loss_mse2(input, target)
print(result_mse2)  # tensor(4., dtype=torch.float64)
```
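MSELoss squares each difference before reducing: (1-1)² + (2-2)² + (3-5)² = 4, and 4 / 3 ≈ 1.3333 for the mean. A minimal hand computation, assuming float32 tensors instead of the float64 ones used above:

```python
import torch
from torch import nn

inp = torch.tensor([1.0, 2.0, 3.0]).reshape(1, 1, 1, 3)
tgt = torch.tensor([1.0, 2.0, 5.0]).reshape(1, 1, 1, 3)

# Element-wise squared errors: 0, 0, 4
squared = (inp - tgt) ** 2

manual_mean = squared.mean()  # 4 / 3
manual_sum = squared.sum()    # 4

print(manual_mean, nn.MSELoss(reduction="mean")(inp, tgt))
print(manual_sum, nn.MSELoss(reduction="sum")(inp, tgt))
```

Compared with L1Loss on the same data (error 2 becomes 2 vs. 4), squaring makes large errors weigh much more heavily.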

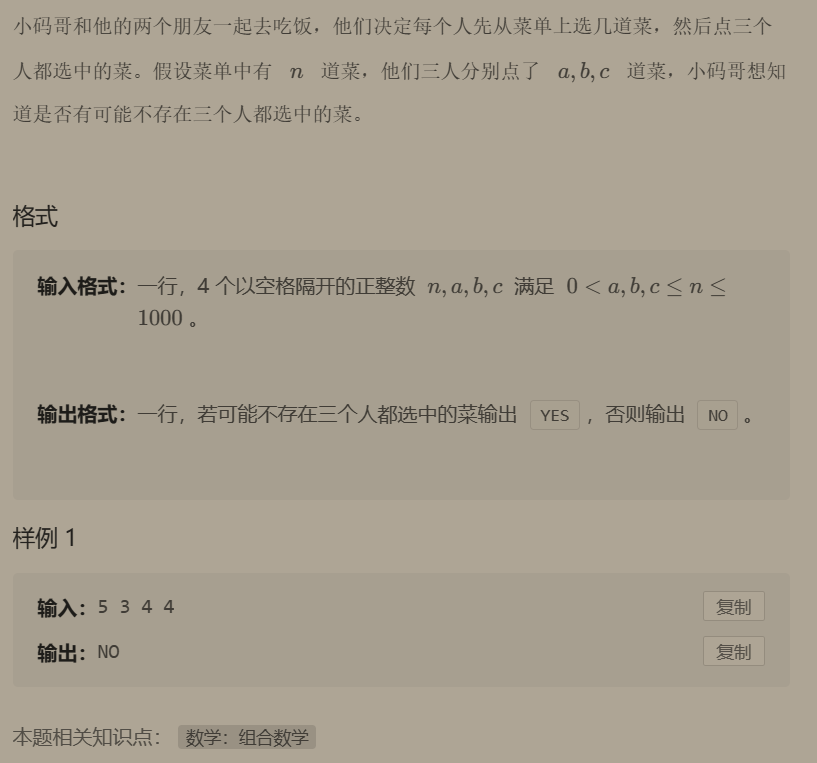

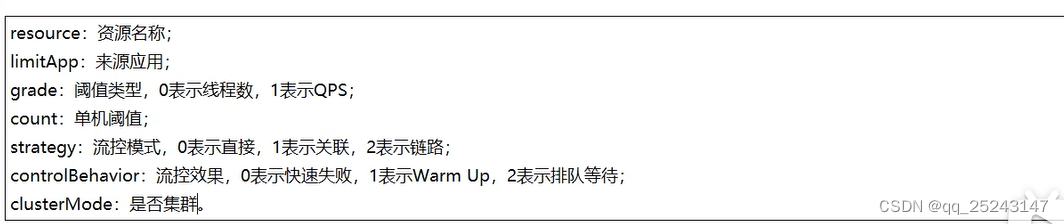

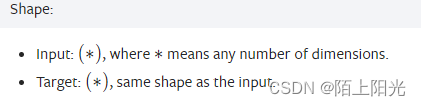

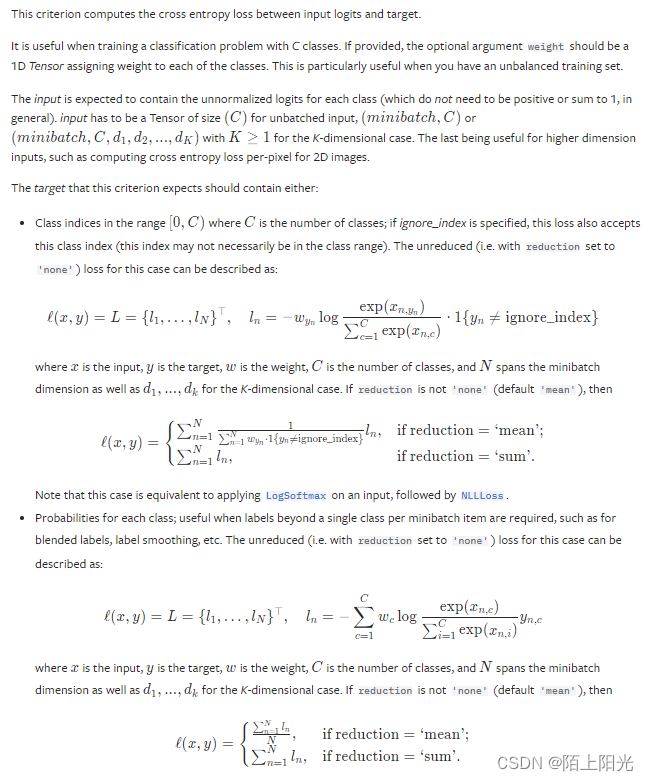

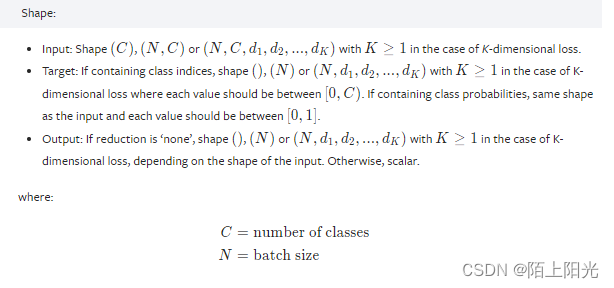

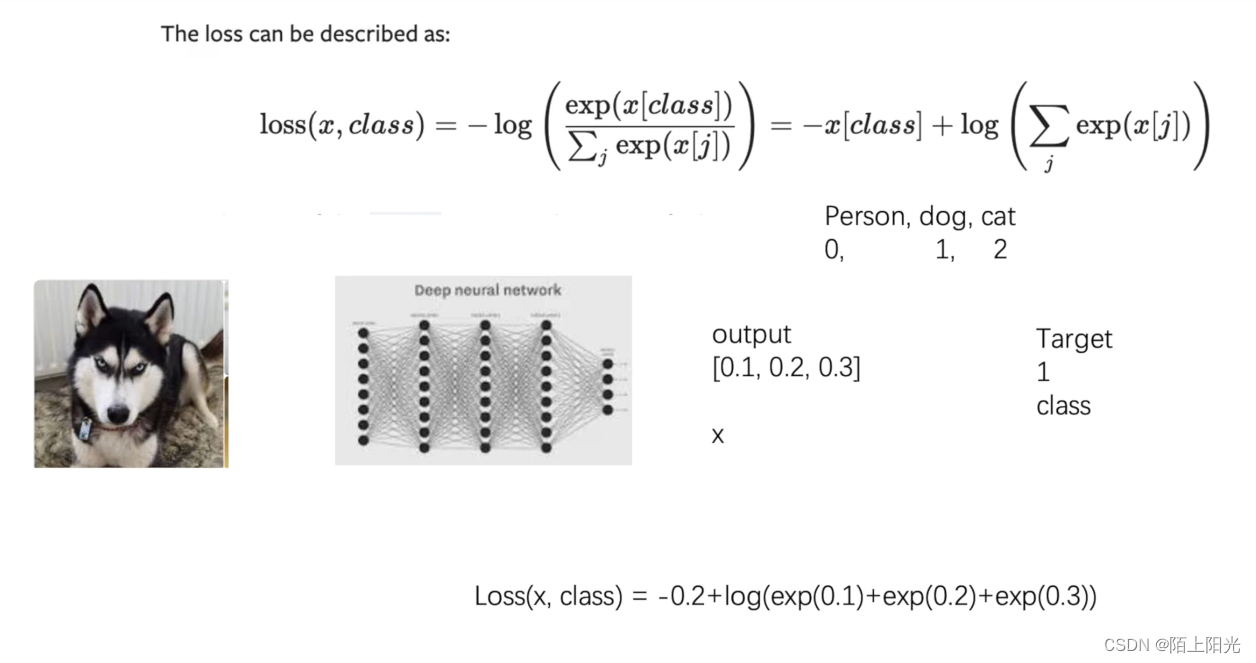

CrossEntropyLoss (cross-entropy loss)

https://pytorch.org/docs/stable/generated/torch.nn.CrossEntropyLoss.html#torch.nn.CrossEntropyLoss

In neural networks, log conventionally means the natural logarithm (base e), so it can also be written as ln.
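For one sample with raw scores x and target class index `class`, the PyTorch documentation writes the unweighted loss as loss(x, class) = -log(exp(x[class]) / Σ_j exp(x[j])) = -x[class] + ln(Σ_j exp(x[j])). The sketch below checks this by hand. The scores [0.1, 0.2, 0.3] and the target class 1 are made-up values for illustration only.

```python
import math
import torch
from torch import nn

# Raw scores (logits) for one sample over 3 classes; the true class is index 1
x = torch.tensor([[0.1, 0.2, 0.3]])  # shape (N=1, C=3)
y = torch.tensor([1])                # shape (N=1,), class index

# By hand: loss = -x[class] + ln(sum_j exp(x[j])), natural log (base e)
scores = [0.1, 0.2, 0.3]
manual = -scores[1] + math.log(sum(math.exp(s) for s in scores))

builtin = nn.CrossEntropyLoss()(x, y)
print(manual)          # approx 1.1019
print(builtin.item())  # should agree with manual up to float32 precision
```

Note that `nn.CrossEntropyLoss` applies the softmax (via log-softmax) internally, so the network should output raw scores, not probabilities.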

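Notes

- Choose the loss function that matches the task.

- Check the input and target shapes each loss function expects, and the shape of the result it returns.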
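As an example of the shape rules: L1Loss and MSELoss expect input and target of the same shape, whereas CrossEntropyLoss with class-index targets expects input of shape (N, C) and target of shape (N,) holding integer class indices. A minimal sketch, assuming a batch of 4 samples and 10 classes with random scores:

```python
import torch
from torch import nn

torch.manual_seed(0)
loss_fn = nn.CrossEntropyLoss()

# N=4 samples, C=10 classes (e.g. the 10 CIFAR10 categories)
logits = torch.randn(4, 10)            # input: shape (N, C), raw unnormalized scores
targets = torch.tensor([3, 0, 9, 1])   # target: shape (N,), int64 class indices in [0, C)

loss = loss_fn(logits, targets)
print(logits.shape, targets.shape, loss.shape)  # loss is a 0-dim scalar with reduction="mean"

# With reduction="none" you get one loss value per sample instead
per_sample = nn.CrossEntropyLoss(reduction="none")(logits, targets)
print(per_sample.shape)  # torch.Size([4])
```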

code

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_set = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                        download=True)
dataloader = DataLoader(test_set, batch_size=1)


class MySeq(nn.Module):
    def __init__(self):
        super(MySeq, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),  # 64 channels * 4 * 4 after three 2x poolings of a 32x32 image
            Linear(64, 10)     # one score per CIFAR10 class
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
myseq = MySeq()
print(myseq)

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)
    output = myseq(imgs)            # shape (1, 10): raw class scores
    result = loss(output, targets)  # targets: shape (1,), class index
    print(result)
```
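To see where `Linear(1024, 64)` comes from without downloading CIFAR10, the same layer stack can be run on a dummy 1×3×32×32 tensor and the shape printed after every layer. This is a sketch: the all-ones image and the target class 3 are arbitrary stand-ins for a real sample.

```python
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

# Same layer stack as MySeq.model1 above, rebuilt so the check is self-contained
model = Sequential(
    Conv2d(3, 32, kernel_size=5, stride=1, padding=2),   # 32x32 -> 32x32
    MaxPool2d(2),                                        # -> 16x16
    Conv2d(32, 32, kernel_size=5, stride=1, padding=2),  # -> 16x16
    MaxPool2d(2),                                        # -> 8x8
    Conv2d(32, 64, kernel_size=5, stride=1, padding=2),  # -> 8x8
    MaxPool2d(2),                                        # -> 4x4
    Flatten(),                                           # 64 * 4 * 4 = 1024
    Linear(1024, 64),
    Linear(64, 10),
)

# A fake batch shaped like one CIFAR10 image (batch_size=1, 3 channels, 32x32)
fake_imgs = torch.ones((1, 3, 32, 32))
fake_targets = torch.tensor([3])  # arbitrary class index, for illustration

x = fake_imgs
for layer in model:
    x = layer(x)
    print(type(layer).__name__, tuple(x.shape))

loss = nn.CrossEntropyLoss()(model(fake_imgs), fake_targets)
print(loss)
```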

Backpropagation

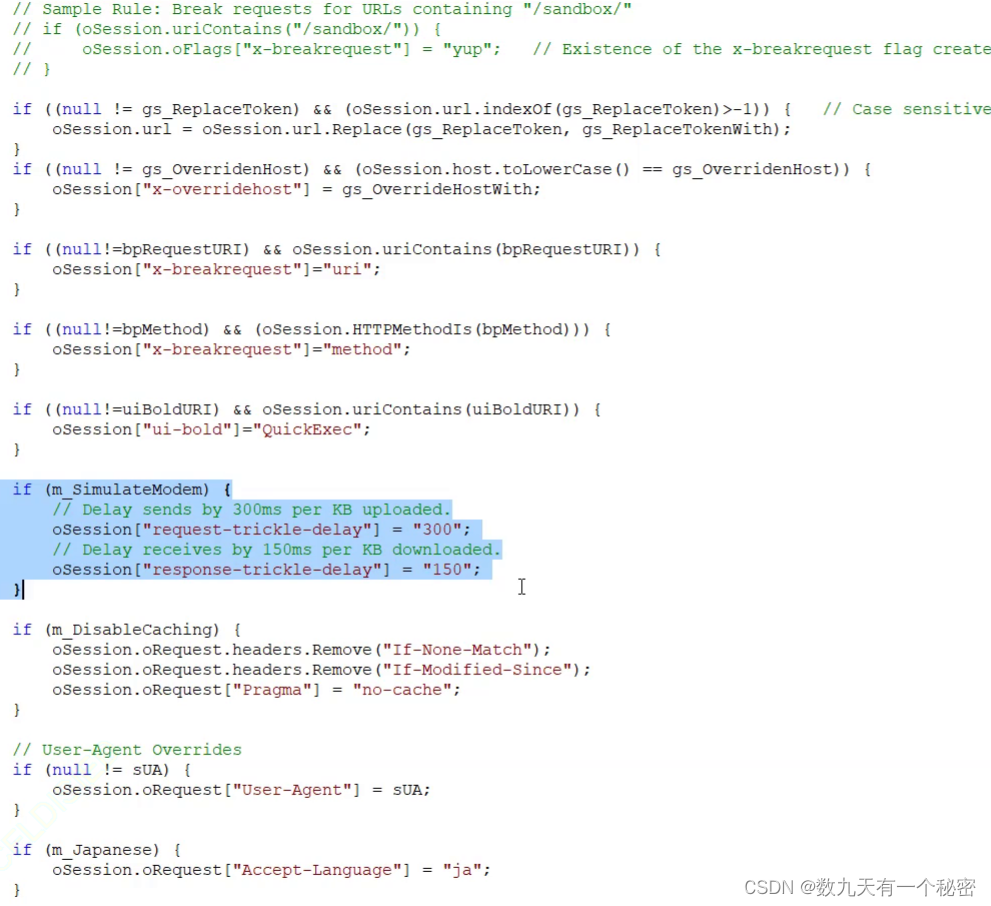

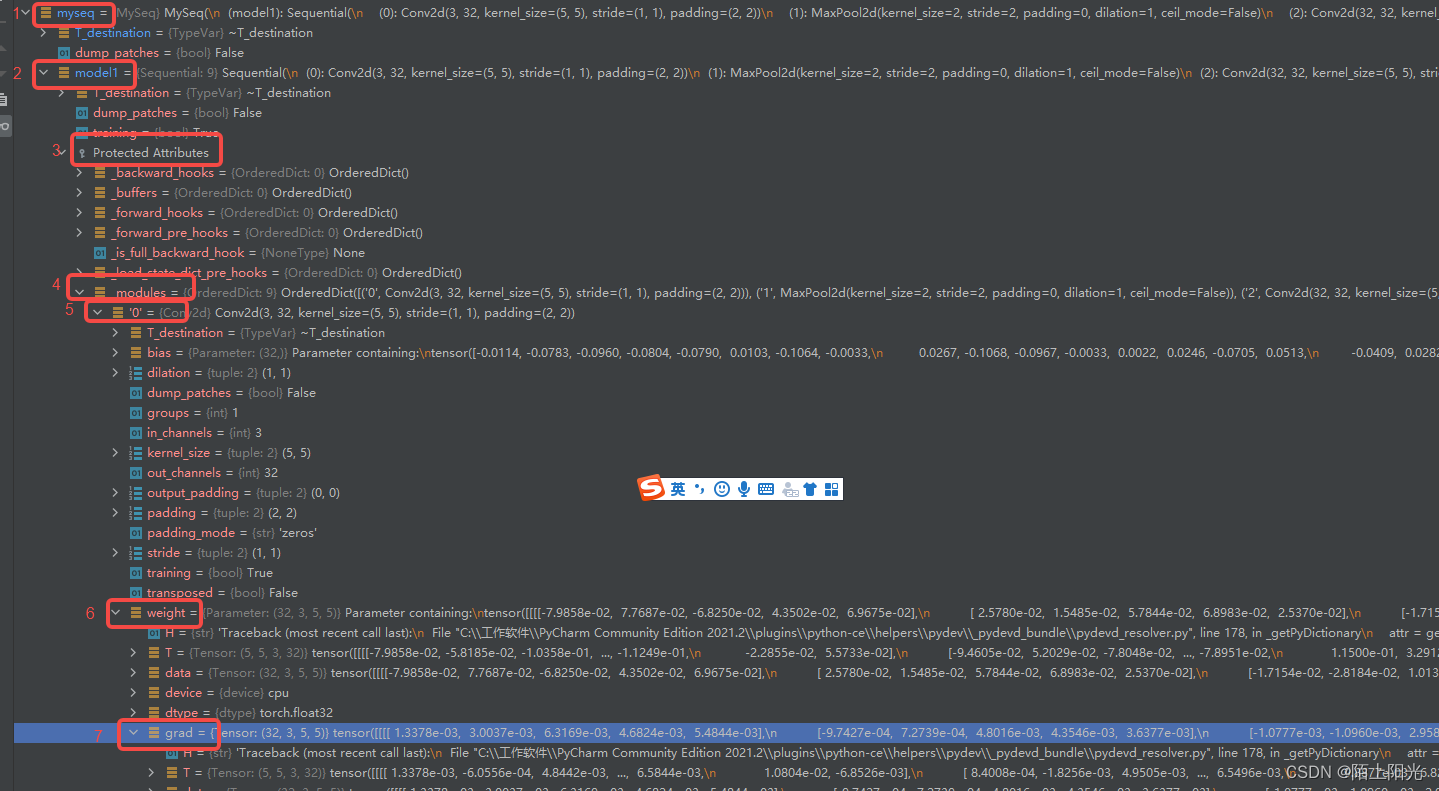

What it looks like in the debugger

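In the debugger's view of the network structure, every layer has a `weight` attribute among its protected attributes, and the gradient is stored inside that `weight` attribute.

Navigation order: first find the model structure, then the individual layer, then its `weight`; the gradient is inside `weight` (as `weight.grad`).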
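The same path (model, then layer, then `weight`, then `grad`) can also be followed in code instead of the debugger. A minimal sketch, using a hypothetical tiny model `TinySeq` with random 8×8 inputs in place of MySeq and CIFAR10 so that it runs quickly:

```python
import torch
from torch import nn

# Hypothetical stand-in for MySeq: a Sequential stored in self.model1
class TinySeq(nn.Module):
    def __init__(self):
        super().__init__()
        self.model1 = nn.Sequential(
            nn.Conv2d(3, 4, kernel_size=3, padding=1),  # 8x8 -> 8x8
            nn.Flatten(),                               # 4 * 8 * 8 = 256
            nn.Linear(4 * 8 * 8, 10),
        )

    def forward(self, x):
        return self.model1(x)

torch.manual_seed(0)
net = TinySeq()
imgs = torch.randn(2, 3, 8, 8)
targets = torch.tensor([1, 7])

# Before backward(), no gradient has been computed yet
print(net.model1[0].weight.grad)  # None

loss = nn.CrossEntropyLoss()(net(imgs), targets)
loss.backward()

# Same path as in the debugger: model -> model1 -> layer 0 -> weight -> grad
print(net.model1[0].weight.grad.shape)  # same shape as the weight itself

# Or walk every parameter by name (e.g. "model1.0.weight")
for name, param in net.named_parameters():
    print(name, tuple(param.shape), param.grad is not None)
```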

code

Core code: `result_loss.backward()`. Call it after the loss has been computed: `backward()` is invoked on the tensor returned by the loss function.

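```python
# Model definition and data loading: same as the previous code

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)
    output = myseq(imgs)
    result_loss = loss(output, targets)
    result_loss.backward()  # call after the loss is computed; fills .grad of every parameter
    print(result_loss)
    # result_loss is not a leaf tensor, so result_loss.grad is None;
    # the gradients live on the parameters, e.g. the first conv layer's weight.
    # Without zero_grad(), gradients from successive batches accumulate.
    print(myseq.model1[0].weight.grad)
```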
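What `backward()` does can be checked on a single scalar. Autograd stores dL/dw in the `.grad` of leaf tensors created with `requires_grad=True` (such as layer weights), not on the loss itself, which is why `result_loss.grad` is not where the gradients end up. Repeated `backward()` calls also add to `.grad` rather than overwrite it. The toy loss L = (3w - 5)² below is an illustrative assumption, chosen so the gradient is easy to verify by hand.

```python
import torch

# One leaf tensor that requires gradients (plays the role of a weight)
w = torch.tensor(2.0, requires_grad=True)

# Toy "prediction" and squared-error "loss": L = (3w - 5)^2 = (6 - 5)^2 = 1
loss = (3 * w - 5) ** 2
loss.backward()  # fills w.grad with dL/dw

# By hand: dL/dw = 2 * (3w - 5) * 3 = 2 * 1 * 3 = 6
g1 = w.grad.item()
print(loss.item(), g1)         # 1.0 6.0
print(loss.is_leaf, w.is_leaf)  # False True: the gradient lives on w, not on loss

# A second backward pass on a fresh graph ADDS to w.grad instead of replacing it
loss2 = (3 * w - 5) ** 2
loss2.backward()
g2 = w.grad.item()
print(g2)  # 12.0

w.grad.zero_()  # reset before the next step
```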