深度学习基础知识 学习率调度器的用法解析

- 1、自定义学习率调度器**:**torch.optim.lr_scheduler.LambdaLR

- 2、正儿八经的模型搭建流程以及学习率调度器的使用设置

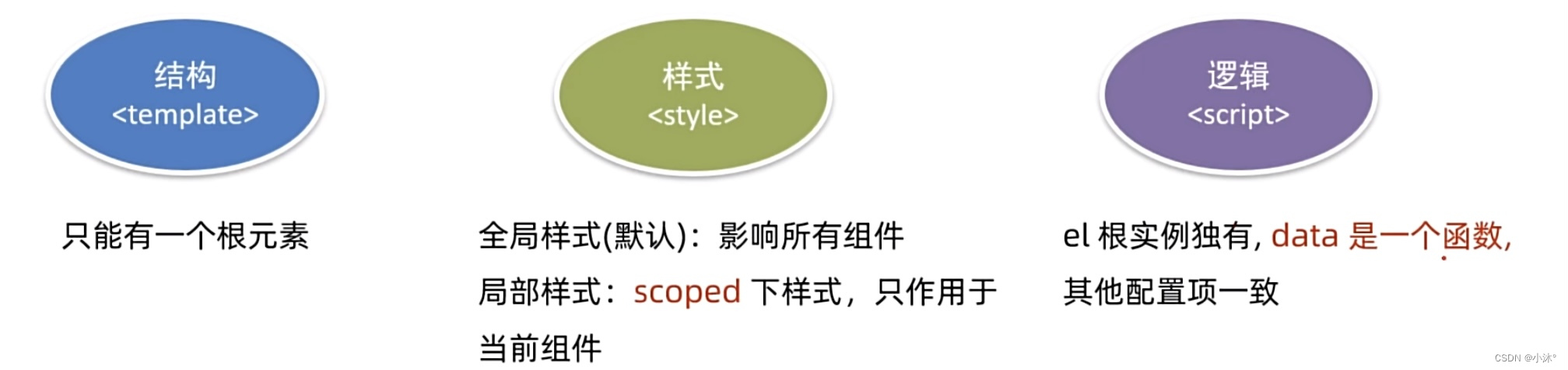

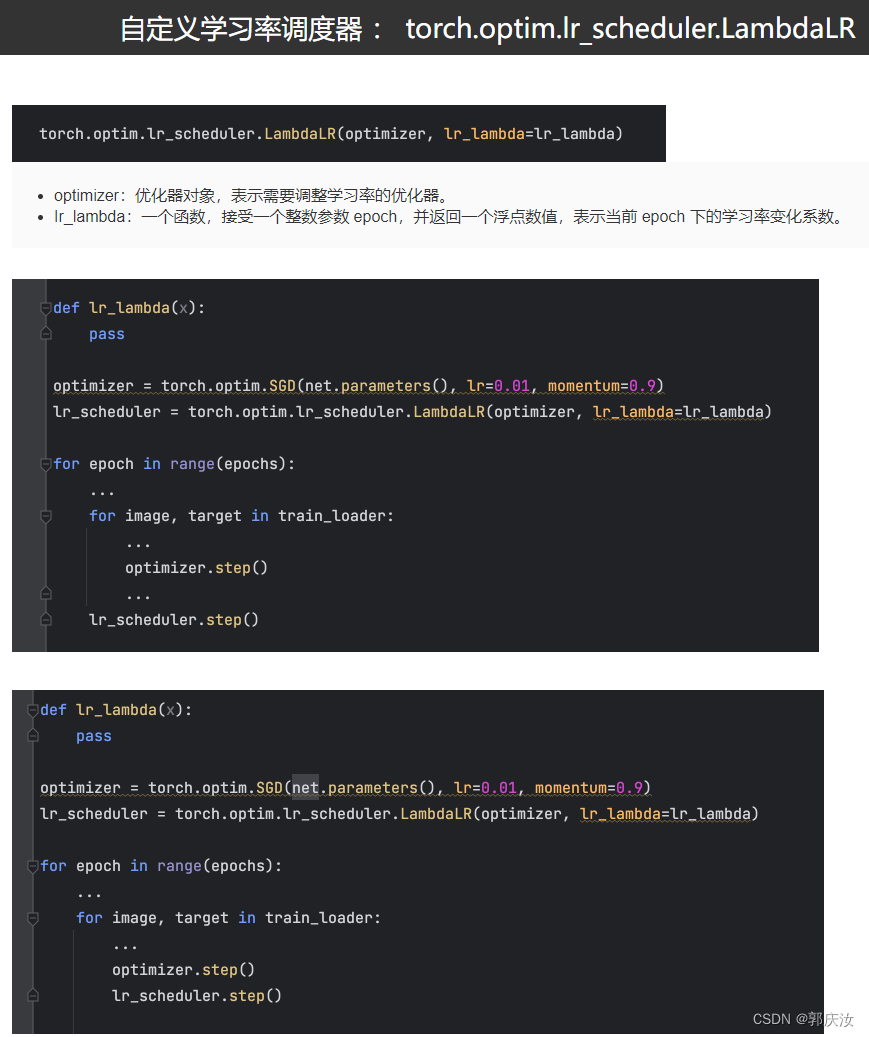

1、自定义学习率调度器**:**torch.optim.lr_scheduler.LambdaLR

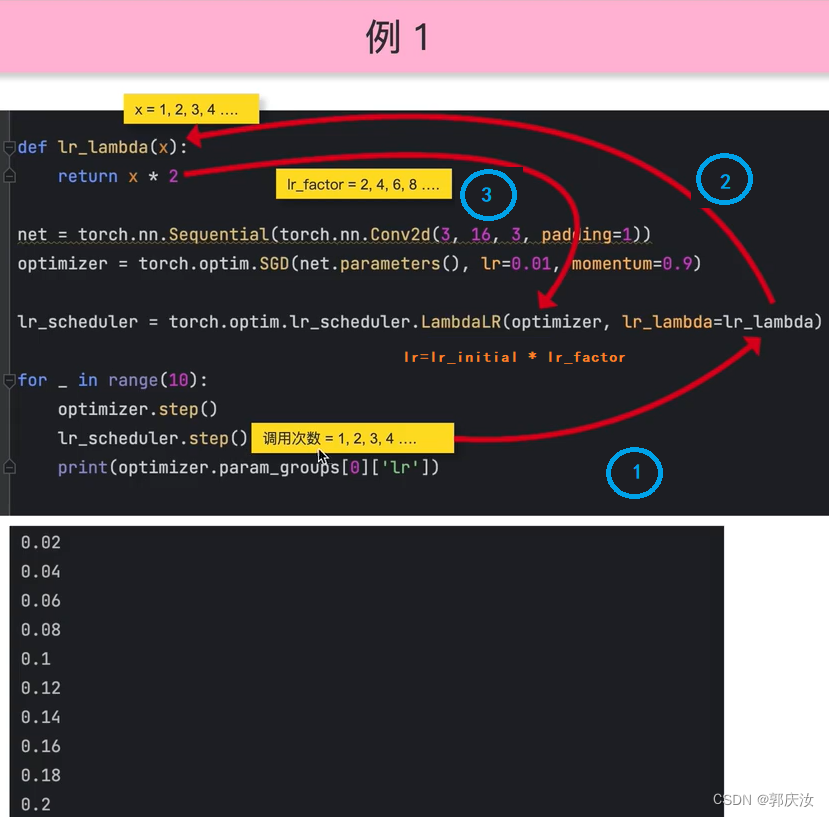

实验代码:

import torch

import torch.nn as nn

def lr_lambda(x):

return x*2

net=nn.Sequential(nn.Conv2d(3,16,3,1,1))

optimizer=torch.optim.SGD(net.parameters(),lr=0.01,momentum=0.9)

lr_scheduler=torch.optim.lr_scheduler.LambdaLR(optimizer,lr_lambda=lr_lambda)

for _ in range(10):

optimizer.step()

lr_scheduler.step()

print(optimizer.param_groups[0]['lr'])

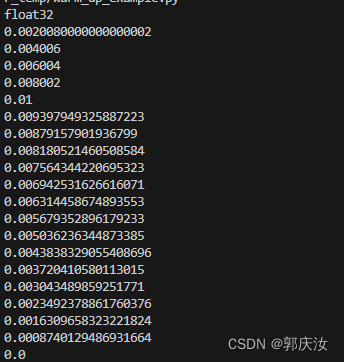

打印结果:

分析数据变化如下图所示:

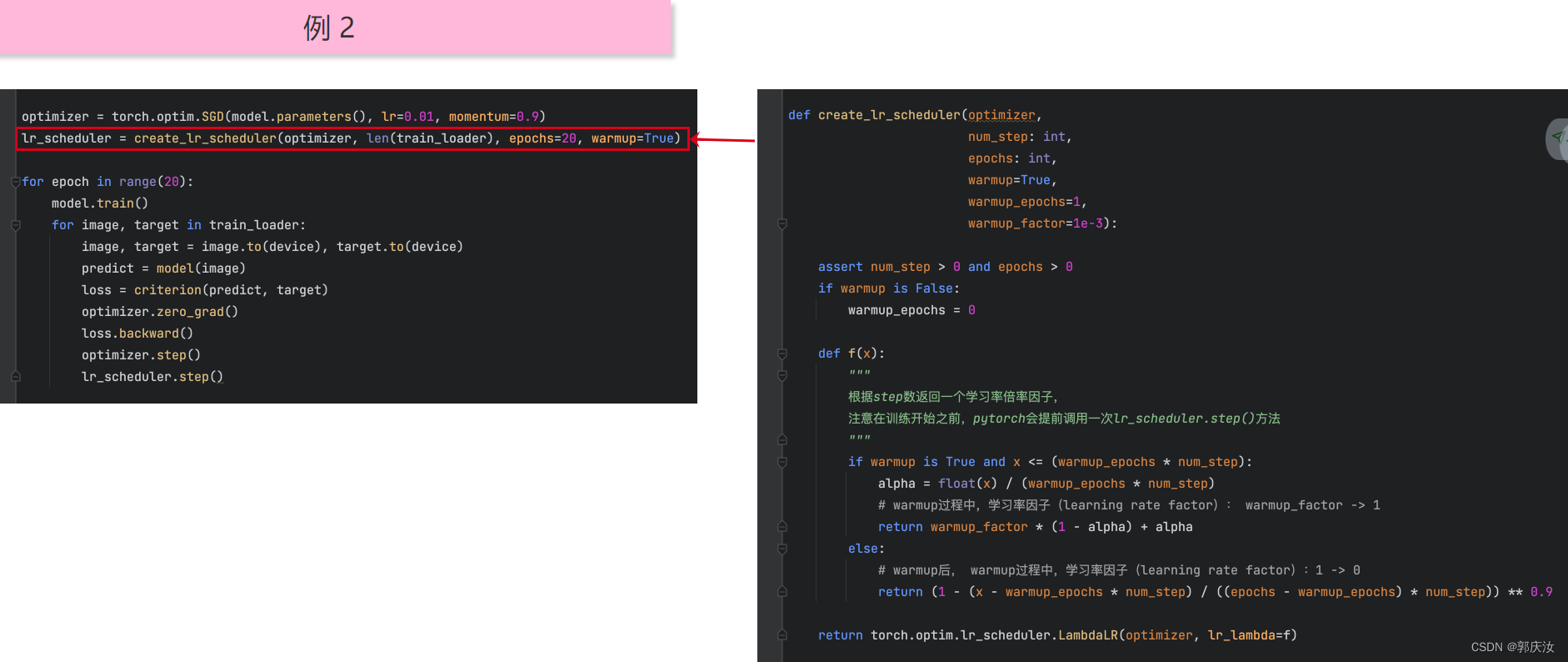

2、正儿八经的模型搭建流程以及学习率调度器的使用设置

代码:

import torch

import torch.nn as nn

import numpy as np

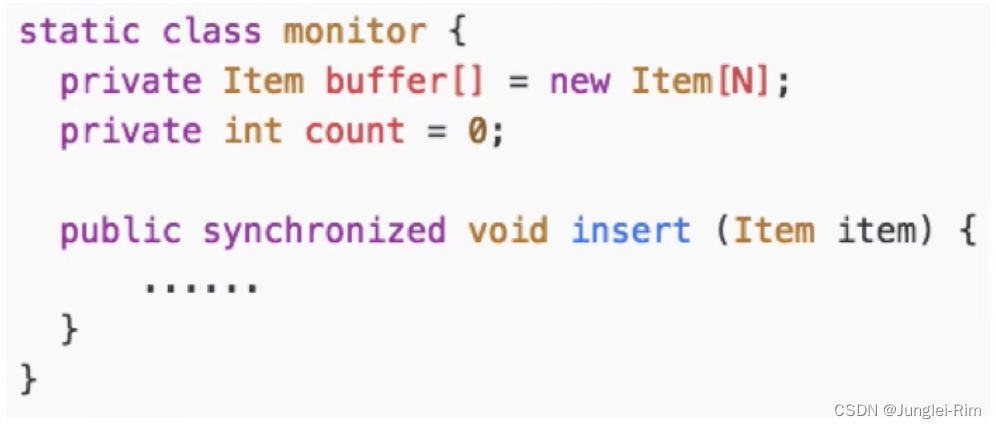

def create_lr_scheduler(optimizer,

num_step:int,

epochs:int,

warmup=True,

warmup_epochs=1,

warmup_factor=1e-3):

assert num_step>0 and epochs>0

if warmup is False:

warmup_epochs=0

def f(x):

"""

根据step数,返回一个学习率倍率因子,

注意在训练开始之前,pytorch会提前调用一次create_lr_scheduler.step()方法

"""

if warmup is True and x <= (warmup_epochs * num_step):

alpha=float(x) / (warmup_epochs * num_step)

# warmup过程中,学习率因子(learning rate factor):warmup_factor -----> 1

return warmup_factor * (1-alpha) + alpha

else:

# warmup后,学习率因子(learning rate factor):warmup_factor -----> 0

return (1-(x - warmup_epochs * num_step) / (epochs-warmup_epochs * num_step)) ** 0.9

return torch.optim.lr_scheduler.LambdaLR(optimizer,lr_lambda=f)

net=nn.Sequential(nn.Conv2d(3,16,1,1))

optimizer=torch.optim.SGD(net.parameters(),lr=0.01,momentum=0.9)

lr_scheduler=create_lr_scheduler(optimizer=optimizer,num_step=5,epochs=20,warmup=True)

image=(np.random.rand(1,3,64,64)).astype(np.float32)

image_tensor=torch.tensor(image.copy(),dtype=torch.float32)

print(image.dtype)

for epoch in range(20):

net.train()

predict=net(image_tensor)

optimizer.zero_grad()

optimizer.step()

lr_scheduler.step()

print(optimizer.param_groups[0]['lr']) # 打印学习率变化情况