Deploying Ceph 17.2.5 on Ubuntu 20.04 LTS with Docker and cephadm

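- 1. Introduction

- 2. Environment preparation

- 2.1. Host information

- 2.2. NTP time synchronization

- 2.3. Disable iptables and firewalld

- 2.4. Disable SELinux

- 2.5. Generate an SSH key and distribute it to the other nodes

- 2.6. Install dependencies

- 3. Install and deploy Ceph 17.2.5

- 3.1. Install cephadm and pull the Ceph image

- 3.2. Create the cluster

- 3.2.1. Bootstrap the mon node

- 3.2.2. Introduction to cephadm shell

- 3.2.3. Add the other nodes to the cluster

- 3.2.4. Add OSDs

- 4. Delete the cluster

- 5. Problems encountered during deployment and their solutions

- 5.1. ERROR: Cannot infer an fsid, one must be specified: ['xx', 'xxx', 'xxxx']

- 5.2. After ceph-mon starts, ceph -s hangs with no output

- 5.3. Error when adding an OSD on a specific disk: Device /dev/xxx has a filesystem.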

1. Introduction

This document walks you through installing Ceph 17.2 on Ubuntu 20.04 servers using Docker and cephadm.

2. Environment preparation

2.1. Host information

| Hostname | IP | OS version | Services |
|---|---|---|---|
| controller | 192.168.201.34 | ubuntu20.04 | mon,osd |
| compute1 | 192.168.201.35 | ubuntu20.04 | mgr,osd,mds |
| compute2 | 192.168.201.36 | ubuntu20.04 | raw,osd |

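2.2. NTP time synchronization

- On controller, run the following commands to synchronize time from the Alibaba Cloud NTP server.

apt -y install chrony

# Back up the original NTP configuration file

mv /etc/chrony/chrony.conf /etc/chrony/chrony.conf.bak

# Create a new configuration file containing only the following two lines

vim /etc/chrony/chrony.conf

--------------------

server ntp.aliyun.com iburst

allow 192.168.201.0/24

# Save and exit

# Restart the NTP service

service chrony restart

- Then configure chrony on compute1 and compute2 to pull time from controller.

apt -y install chrony

# Back up the original NTP configuration file

mv /etc/chrony/chrony.conf /etc/chrony/chrony.conf.bak

# Create a new configuration file containing only the following line

vim /etc/chrony/chrony.conf

--------------------

server controller iburst

# Restart the NTP service

service chrony restart

- On all three nodes, run the following command to check the time sources.

chronyc sources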
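The client configuration can also be written non-interactively rather than edited in vim. The following is a minimal sketch; the function name write_chrony_client_conf and its two arguments are illustrative assumptions, not part of chrony.

```shell
# Hypothetical helper: write a one-line chrony client config to a given path.
# $1 = NTP server to sync from (e.g. controller), $2 = output file path.
write_chrony_client_conf() {
    server="$1"
    conf="$2"
    # Keep a backup of any existing file, mirroring the manual steps.
    if [ -f "$conf" ]; then
        cp "$conf" "$conf.bak"
    fi
    printf 'server %s iburst\n' "$server" > "$conf"
}

# Write to a scratch file first and inspect it before using the real
# path /etc/chrony/chrony.conf and restarting chrony.
scratch=$(mktemp)
write_chrony_client_conf controller "$scratch"
cat "$scratch"
```

Writing to a scratch file first keeps the example harmless; on a real node you would pass /etc/chrony/chrony.conf and then run service chrony restart.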

2.3. Disable iptables and firewalld

systemctl stop iptables

systemctl stop firewalld

systemctl disable iptables

systemctl disable firewalld

2.4. Disable SELinux

In /etc/selinux/config, set:

SELINUX=disabled

2.5. Generate an SSH key and distribute it to the other nodes

ssh-keygen -t rsa -P ''

ssh-copy-id -i .ssh/id_rsa.pub cephadm@192.168.201.35

ssh-copy-id -i .ssh/id_rsa.pub cephadm@192.168.201.36

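2.6. Install dependencies

Deploying a Ceph cluster with cephadm requires the following dependencies:

- python3

- Docker or Podman

- systemd

- lvm2

Install any that are missing; this document does not cover them in detail.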
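A quick way to see which dependencies are missing is to check for their commands on PATH. The sketch below assumes that systemd is represented by systemctl and lvm2 by the lvm command; the function name missing_deps is hypothetical.

```shell
# Print each command from the argument list that is not found on PATH.
# Prints nothing when every command is available.
missing_deps() {
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            printf '%s\n' "$cmd"
        fi
    done
}

# python3, systemctl (systemd) and lvm (lvm2) correspond to the list above.
missing_deps python3 systemctl lvm

# The container runtime is checked separately because either one is enough.
if ! command -v docker >/dev/null 2>&1 && ! command -v podman >/dev/null 2>&1; then
    echo "docker or podman"
fi
```

Run it on each node; any name it prints still needs to be installed before bootstrapping.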

3. Install and deploy Ceph 17.2.5

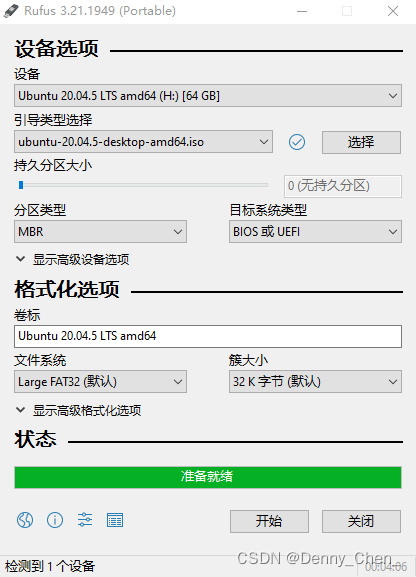

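3.1. Install cephadm and pull the Ceph image

Run the following commands on the controller node.

# The following command also installs Docker and other dependencies automatically

apt install -y cephadm

# Pull the Ceph image

docker pull quay.io/ceph/ceph:v17.2

# Check the Ceph version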

$ cephadm version

ceph version 17.2.5 (98318ae89f1a893a6ded3a640405cdbb33e08757) quincy (stable)

# List the Docker images

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/ceph/ceph v17.2 cc65afd6173a 2 months ago 1.36GB

# Add the controller host (the orchestrator is only available after the bootstrap in 3.2.1)

ceph orch host add controller --addr 192.168.201.34

Configure the Ceph repository. You can replace the address in /etc/apt/sources.list.d/ceph.list with a mirror in mainland China.

cephadm add-repo --release quincy

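3.2. Create the cluster

Creating a Ceph cluster with cephadm involves the following steps:

- Bootstrap the first mon node

- Configure the ceph command line

- Expand the cluster with OSD nodes

3.2.1. Bootstrap the mon node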

$ cephadm --image quay.io/ceph/ceph:v17.2 bootstrap --mon-ip 192.168.201.34 --allow-fqdn-hostname --skip-firewalld --skip-mon-network --dashboard-password-noupdate --skip-monitoring-stack --allow-overwrite

Ceph Dashboard is now available at:

URL: https://controller:8443/

User: admin

Password: q3hfarwiar

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid 7b3a8c96-80da-11ed-9433-570310474c9e -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/master/mgr/telemetry/

Bootstrap complete.

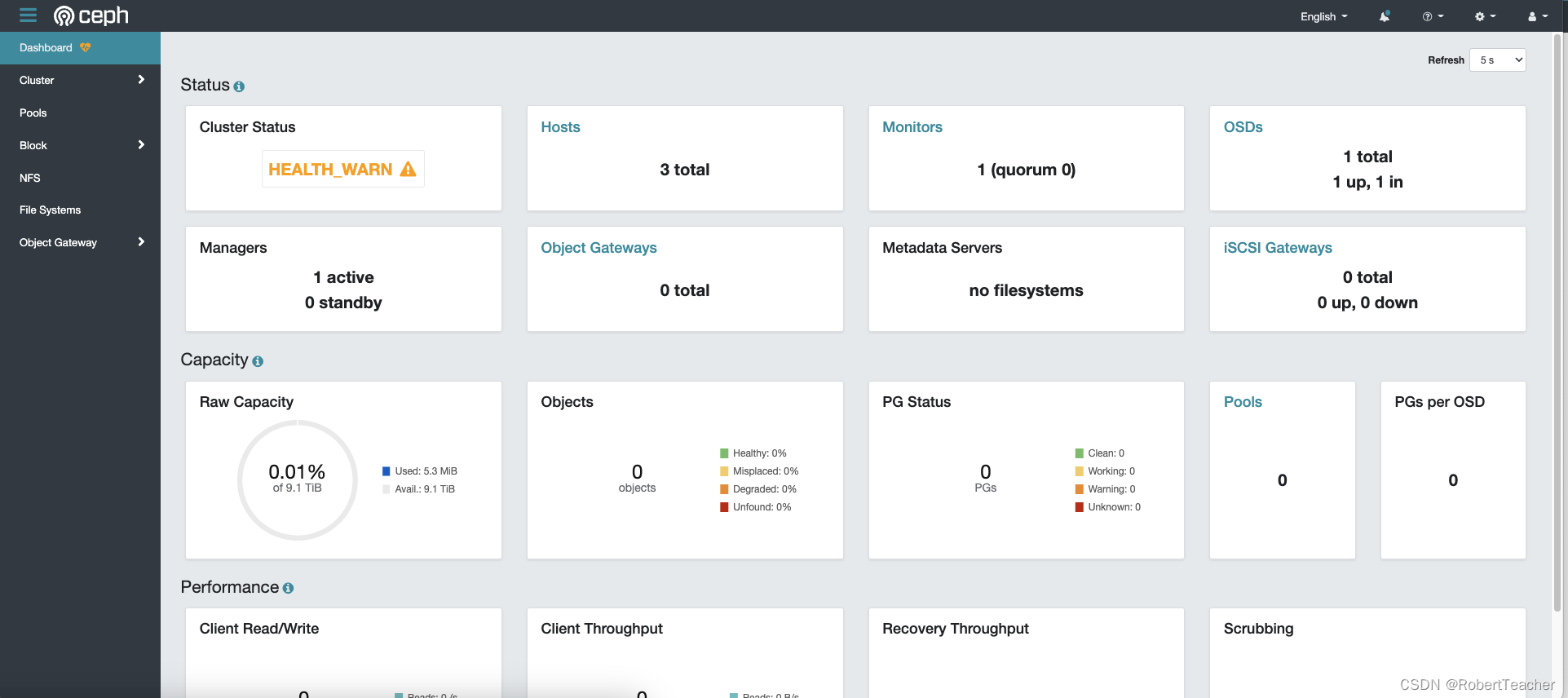

显示出 Bootstrap complete. 表示部署成功。使用:

- –image quay.io/ceph/ceph:v17.2 指定镜像地址

- –registry-url 指定仓库 https://hub.docker.com/u/quayioceph

- –single-host-defaults

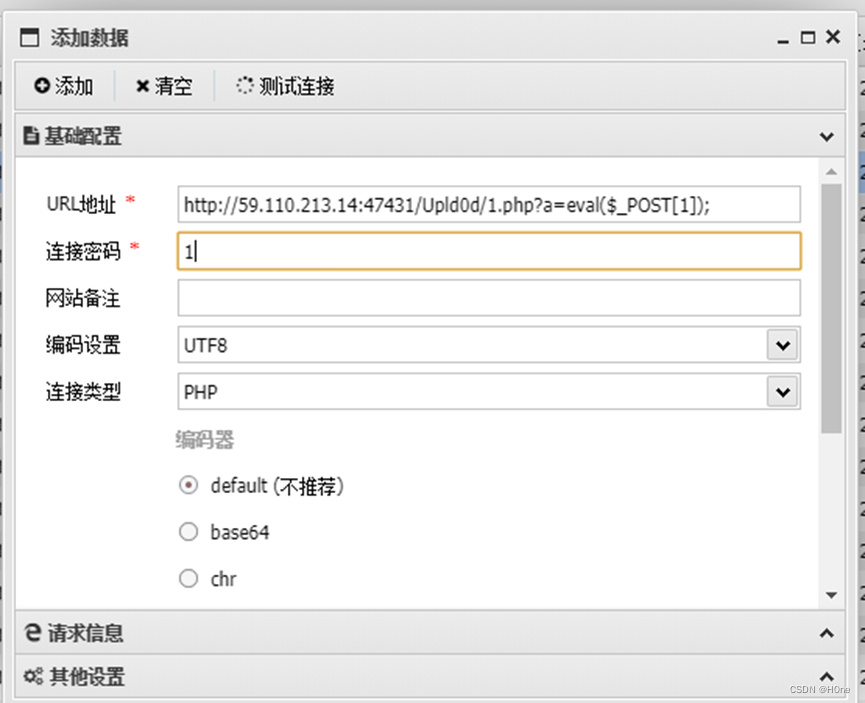

可以登陆输出的地址输入账号密码进入dashboard界面查看ceph运行情况如下图所示。

3.2.2. Introduction to cephadm shell

In an environment installed with cephadm, running cephadm shell actually starts a container and drops you into it. Enter the container with:

$ cephadm shell

Inferring fsid 7b3a8c96-80da-11ed-9433-570310474c9e

Inferring config /var/lib/ceph/7b3a8c96-80da-11ed-9433-570310474c9e/mon.controller/config

Using recent ceph image quay.io/ceph/ceph@sha256:0560b16bec6e84345f29fb6693cd2430884e6efff16a95d5bdd0bb06d7661c45

root@controller:/# ceph -s

cluster:

id: 7b3a8c96-80da-11ed-9433-570310474c9e

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum controller (age 29s)

mgr: controller.idxakq(active, since 2h)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

root@controller:/# ceph -v

ceph version 17.2.5 (98318ae89f1a893a6ded3a640405cdbb33e08757) quincy (stable)

You can also install the Ceph client on the host:

apt install -y ceph-common

3.2.3. Add the other nodes to the cluster

Copy the cluster's public SSH key (/etc/ceph/ceph.pub) to the other nodes:

ssh-copy-id -f -i /etc/ceph/ceph.pub compute1

ssh-copy-id -f -i /etc/ceph/ceph.pub compute2

Add the hosts to the cluster:

root@controller:/etc/selinux# ceph orch host label add controller _admin

Added label _admin to host controller

root@controller:/etc/selinux# ceph orch host add compute1 192.168.201.35

Added host 'compute1' with addr '192.168.201.35'

root@controller:/etc/selinux# ceph orch host add compute2 192.168.201.36

Added host 'compute2' with addr '192.168.201.36'

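Notes:

- controller is the host's hostname, as returned by the hostname command

- 192.168.201.34 is the host's IP address on the public network

- _admin is a label; hosts carrying the _admin label automatically receive a copy of ceph.conf and the admin keyring file, which grants administrative access to the Ceph cluster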
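With more than a couple of nodes, the key-copy and host-add steps become repetitive. The sketch below only prints the commands so they can be reviewed before being run; the function name print_host_add_cmds and the "hostname=ip" argument format are assumptions made for illustration.

```shell
# Print (not run) the commands needed to enrol each host in the cluster.
# Each argument is a hypothetical "hostname=ip" pair.
print_host_add_cmds() {
    for pair in "$@"; do
        host="${pair%%=*}"   # text before the first "="
        ip="${pair#*=}"      # text after the first "="
        printf 'ssh-copy-id -f -i /etc/ceph/ceph.pub %s\n' "$host"
        printf 'ceph orch host add %s %s\n' "$host" "$ip"
    done
}

print_host_add_cmds compute1=192.168.201.35 compute2=192.168.201.36
```

Printing instead of executing makes it easy to spot a hostname paired with the wrong IP before anything reaches the cluster.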

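3.2.4. Add OSDs

A machine can have multiple disks, and each disk needs its own OSD daemon to manage it. cephadm supports two ways of adding OSDs:

- Add all disks in bulk; the order in which OSDs are created is not controlled

- Add specific disks, which gives you full control

List the block devices on each machine: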

root@controller:/etc/selinux# ceph orch device ls

HOST PATH TYPE DEVICE ID SIZE AVAILABLE REFRESHED REJECT REASONS

compute1 /dev/sdb hdd TOSHIBA_MG06ACA1_3250A1XRFKQE 10.0T Yes 106s ago

compute1 /dev/sdc hdd TOSHIBA_MG06ACA1_3250A10CFKQE 10.0T Yes 106s ago

compute2 /dev/sdb hdd TOSHIBA_MG06ACA1_3250A1XRFKQE 10.0T Yes 103s ago

compute2 /dev/sdc hdd TOSHIBA_MG06ACA1_3250A10CFKQE 10.0T Yes 103s ago

controller /dev/sdb hdd TOSHIBA_MG06ACA1_3250A1XRFKQE 10.0T Yes 9m ago

controller /dev/sdc hdd TOSHIBA_MG06ACA1_3250A10CFKQE 10.0T Yes 9m ago

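The device list is gathered by a ceph-volume container that periodically scans each machine. A disk is considered available only if it meets all of the following conditions: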

- The device must have no partitions.

- The device must not have any LVM state.

- The device must not be mounted.

- The device must not contain a file system.

- The device must not contain a Ceph BlueStore OSD.

- The device must be larger than 5 GB.
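To turn the device listing into host:path arguments for later commands, the AVAILABLE column can be filtered with awk. This is a sketch that assumes the column layout shown in the listing earlier in this section, with a non-empty DEVICE ID in every row; the function name available_devices is hypothetical.

```shell
# Read `ceph orch device ls` output on stdin and print host:path for
# every row whose AVAILABLE column is "Yes".
# Assumes a header line followed by rows laid out as:
#   HOST PATH TYPE DEVICE_ID SIZE AVAILABLE ...
available_devices() {
    awk 'NR > 1 && $6 == "Yes" { print $1 ":" $2 }'
}

# Demo on captured output; on a real cluster you would run:
#   ceph orch device ls | available_devices
available_devices <<'EOF'
HOST PATH TYPE DEVICE ID SIZE AVAILABLE REFRESHED REJECT REASONS
compute1 /dev/sdb hdd TOSHIBA_MG06ACA1_3250A1XRFKQE 10.0T Yes 106s ago
controller /dev/sdc hdd TOSHIBA_MG06ACA1_3250A10CFKQE 10.0T Yes 9m ago
EOF
```

If a row has an empty DEVICE ID the columns shift and the filter will miss it, so check the raw listing when the result looks incomplete.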

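Add all disks at once. Once this spec is applied, an OSD is created automatically for every new disk that appears on a machine. The --dry-run flag below previews the result without applying it.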

root@controller:/etc/selinux# ceph orch apply osd --all-available-devices --dry-run

WARNING! Dry-Runs are snapshots of a certain point in time and are bound

to the current inventory setup. If any of these conditions change, the

preview will be invalid. Please make sure to have a minimal

timeframe between planning and applying the specs.

####################

SERVICESPEC PREVIEWS

####################

+---------+------+--------+-------------+

|SERVICE |NAME |ADD_TO |REMOVE_FROM |

+---------+------+--------+-------------+

+---------+------+--------+-------------+

################

OSDSPEC PREVIEWS

################

+---------+------+------+------+----+-----+

|SERVICE |NAME |HOST |DATA |DB |WAL |

+---------+------+------+------+----+-----+

+---------+------+------+------+----+-----+

Disable automatic OSD creation on newly discovered disks:

root@controller:/etc/selinux# ceph orch apply osd --all-available-devices --unmanaged=true

Scheduled osd.all-available-devices update...

Add an OSD on a specific disk:

root@controller:/var/lib/ceph/osd# ceph orch daemon add osd controller:/dev/sdc

4. Delete the cluster

cephadm rm-cluster --fsid <fsid> --force

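5. Problems encountered during deployment and their solutions

5.1. ERROR: Cannot infer an fsid, one must be specified: ['xx', 'xxx', 'xxxx']

This error most likely means that data from several Ceph clusters exists on the host, so cephadm shell cannot infer which cluster's command line to open.

The fix is to delete the old clusters' data and keep only the new cluster's directory.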

root@controller:/etc/ceph# cd /var/lib/ceph

root@controller:/var/lib/ceph# ls

7b3a8c96-80da-11ed-9433-570310474c9e e9a3d784-80d8-11ed-9433-570310474c9e f8646be8-80d9-11ed-9433-570310474c9e

root@controller:/var/lib/ceph# rm -rf e9a3d784-80d8-11ed-9433-570310474c9e/

root@controller:/var/lib/ceph# rm -rf f8646be8-80d9-11ed-9433-570310474c9e/

root@controller:/var/lib/ceph# ll

total 12

drwxr-x--- 3 ceph ceph 4096 Dec 21 11:56 ./

drwxr-xr-x 75 root root 4096 Dec 21 10:06 ../

drwx------ 7 167 167 4096 Dec 21 10:54 7b3a8c96-80da-11ed-9433-570310474c9e/
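Before deleting anything, it helps to list which cluster directories are present. The sketch below prints directory names that have the shape of an fsid (a UUID); the function name list_fsids is hypothetical, and the default path /var/lib/ceph is the one used in this section.

```shell
# List directory names under a cephadm data dir that look like an fsid.
# $1 = data directory; defaults to /var/lib/ceph.
list_fsids() {
    dir="${1:-/var/lib/ceph}"
    for d in "$dir"/*/; do
        [ -d "$d" ] || continue
        name=$(basename "$d")
        case "$name" in
            # 8-4-4-4-12 characters separated by dashes
            ????????-????-????-????-????????????) printf '%s\n' "$name" ;;
        esac
    done
}

list_fsids
```

Each printed value can be passed to cephadm shell --fsid to open a specific cluster, or to cephadm rm-cluster --fsid <fsid> --force (see section 4) to remove a stale one.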

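5.2. After ceph-mon starts, ceph -s hangs with no output

Cause: this kind of problem originates in the mon service.

Solution:

Check whether the mon_host address in /etc/ceph/ceph.conf is correct.

Try restarting the ceph-mon service.

In our case the ceph-mon service had not started correctly; once it was started properly, the problem went away.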

root@controller:/etc/selinux# systemctl |grep ceph

ceph-7b3a8c96-80da-11ed-9433-570310474c9e@crash.controller.service loaded active running Ceph crash.controller for 7b3a8c96-80da-11ed-9433-570310474c9e

● ceph-7b3a8c96-80da-11ed-9433-570310474c9e@mgr.controller.idxakq.service loaded failed failed Ceph mgr.controller.idxakq for 7b3a8c96-80da-11ed-9433-570310474c9e

● ceph-7b3a8c96-80da-11ed-9433-570310474c9e@mon.controller.service loaded failed failed Ceph mon.controller for 7b3a8c96-80da-11ed-9433-570310474c9e

● ceph-e9a3d784-80d8-11ed-9433-570310474c9e@mgr.controller.pmdlwp.service loaded failed failed Ceph mgr.controller.pmdlwp for e9a3d784-80d8-11ed-9433-570310474c9e

● ceph-e9a3d784-80d8-11ed-9433-570310474c9e@mon.controller.service loaded failed failed Ceph mon.controller for e9a3d784-80d8-11ed-9433-570310474c9e

ceph-f8646be8-80d9-11ed-9433-570310474c9e@mgr.controller.zblhlv.service loaded active running Ceph mgr.controller.zblhlv for f8646be8-80d9-11ed-9433-570310474c9e

● ceph-f8646be8-80d9-11ed-9433-570310474c9e@mon.controller.service loaded failed failed Ceph mon.controller for f8646be8-80d9-11ed-9433-570310474c9e

system-ceph\x2d7b3a8c96\x2d80da\x2d11ed\x2d9433\x2d570310474c9e.slice loaded active active system-ceph\x2d7b3a8c96\x2d80da\x2d11ed\x2d9433\x2d570310474c9e.slice

system-ceph\x2de9a3d784\x2d80d8\x2d11ed\x2d9433\x2d570310474c9e.slice loaded active active system-ceph\x2de9a3d784\x2d80d8\x2d11ed\x2d9433\x2d570310474c9e.slice

system-ceph\x2df8646be8\x2d80d9\x2d11ed\x2d9433\x2d570310474c9e.slice loaded active active system-ceph\x2df8646be8\x2d80d9\x2d11ed\x2d9433\x2d570310474c9e.slice

ceph-7b3a8c96-80da-11ed-9433-570310474c9e.target loaded active active Ceph cluster 7b3a8c96-80da-11ed-9433-570310474c9e

ceph-e9a3d784-80d8-11ed-9433-570310474c9e.target loaded active active Ceph cluster e9a3d784-80d8-11ed-9433-570310474c9e

ceph-f8646be8-80d9-11ed-9433-570310474c9e.target loaded active active Ceph cluster f8646be8-80d9-11ed-9433-570310474c9e

ceph.target loaded active active All Ceph clusters and services

root@controller:/etc/selinux# systemctl start ceph-7b3a8c96-80da-11ed-9433-570310474c9e@mon.controller.service
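When several clusters have left units behind, as in the listing above, it is easy to start the wrong one. The following sketch filters systemctl output down to the failed units of a single fsid; the function name failed_units_for is an assumption for illustration.

```shell
# Read systemctl unit listing on stdin and print the names of failed
# units that belong to the given cluster fsid.
# $1 = fsid of the cluster you care about.
failed_units_for() {
    fsid="$1"
    awk -v prefix="ceph-$fsid@" '
        / failed / {
            # The unit name is the first field starting with the prefix.
            for (i = 1; i <= NF; i++)
                if (index($i, prefix) == 1) { print $i; break }
        }'
}

# Demo on two lines taken from the listing; on a real host you would run:
#   systemctl | failed_units_for <fsid>
failed_units_for 7b3a8c96-80da-11ed-9433-570310474c9e <<'EOF'
ceph-7b3a8c96-80da-11ed-9433-570310474c9e@crash.controller.service loaded active running Ceph crash.controller for 7b3a8c96-80da-11ed-9433-570310474c9e
● ceph-7b3a8c96-80da-11ed-9433-570310474c9e@mon.controller.service loaded failed failed Ceph mon.controller for 7b3a8c96-80da-11ed-9433-570310474c9e
EOF
```

Each unit name it prints can be passed to systemctl start, as done for the mon unit above.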

5.3. Error when adding an OSD on a specific disk: Device /dev/xxx has a filesystem.

Cause: a file system already exists on the disk.

Solution: reformat the disk, then add the OSD again.

root@controller:/etc/selinux# mkfs.xfs -f /dev/sdc

meta-data=/dev/sdc isize=512 agcount=10, agsize=268435455 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=2441609216, imaxpct=5

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=521728, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
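As an alternative to reformatting, the orchestrator has a zap command that wipes a device so it can be reused. This was not part of the deployment described here, so treat the sketch below as an untested suggestion. It only prints the commands; the function name print_zap_cmds is hypothetical.

```shell
# Print (not run) commands that wipe a device and retry adding the OSD.
# $1 = host, $2 = device path.
# WARNING: the zap command destroys all data on the device when executed.
print_zap_cmds() {
    host="$1"
    dev="$2"
    printf 'ceph orch device zap %s %s --force\n' "$host" "$dev"
    printf 'ceph orch daemon add osd %s:%s\n' "$host" "$dev"
}

print_zap_cmds controller /dev/sdc
```

Review the printed host and device carefully before running the commands, since zapping the wrong disk is not recoverable.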