Table of Contents

- 1 Camera Open Summary
- 2 Camera Open Code Flow Analysis
  - 2.1 Using the Camera Class in the Java Layer
  - 2.2 Analysis of Camera.java in the Frameworks Layer
    - 2.2.1 [JNI] CameraService initialization: native_setup() -> android_hardware_Camera_native_setup()
    - 2.2.2 [AIDL] interface ICameraService
    - 2.2.3 [Native] CameraService.cpp
      - 2.2.3.1 [CameraService] Creating the camera client: makeClient()
      - 2.2.3.2 [Camera2Client] Camera2Client initialization: client->initialize(mModule)
        - 2.2.3.2.1 [Camera3Device] Camera device initialization: mDevice->initialize(module)
        - 2.2.3.2.2 [CameraProviderManager] manager->openSession
      - 2.2.3.3 [CameraDevice] CameraDevice::open
      - 2.2.3.4 [CameraModule] CameraModule::open
        - 2.2.3.4.1 [hardware] camera_module_t
      - 2.2.3.5 [Hardware] Opening the device: QCamera2Factory::cameraDeviceOpen()
        - 2.2.3.5.1 camera_open((uint8_t)mCameraId, &mCameraHandle)
        - 2.2.3.5.2 Camera operations struct: cam_obj->vtbl.ops
        - 2.2.3.5.3 [Hardware] Initializing the Frameworks-layer callback functions: QCamera3HardwareInterface::initialize()
    - 2.2.4 EventHandler()
  - 2.3 Kernel layer: msm_open
- 3 OpenSession Flow Analysis
  - 3.1 The mm-camera2 framework
  - 3.2 The media controller thread
    - 3.2.1 mm_camera_module_open_session
    - 3.2.2 mct_shimlayer_process_module_init
    - 3.2.3 mct_shimlayer_start_session
    - 3.2.4 mct_controller_new
      - 3.2.4.1 mct_pipeline_new
      - 3.2.4.2 mct_pipeline_start_session
      - 3.2.4.3 mct_pipeline_modules_start
      - 3.2.4.4 mct_pipeline_start_session_thread
  - 3.3 else

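1 Camera Open Summary

The whole camera open process can be summarized as follows.

Java APP layer

The app calls the open() method of Camera.java in the Frameworks layer.

Only after the camera is open does the app call getParameters(), setParameters(), startPreview(), and so on. These calls are analyzed in later articles.

open() in Camera.java (Frameworks layer)

(1) If open() is called without a camera id, the first back-facing camera is opened by default.

(2) The camera is initialized with the given camera id.

(3) An EventHandler is registered to keep listening for camera events.

(4) The JNI function native_setup() is called, passing down the camera id, the current package name, and the HAL version.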
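Rule (1) is a linear scan over the cameras that stops at the first back-facing one. The Java code loops over getNumberOfCameras() and getCameraInfo(), and returns null when no back camera exists.

The C++ sketch below restates that rule under one assumption: a plain vector stands in for the per-camera CameraInfo queries. The `CameraInfo` struct and `defaultCameraId()` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Facing constants mirror Camera.CameraInfo.CAMERA_FACING_BACK / CAMERA_FACING_FRONT.
constexpr int kFacingBack = 0;
constexpr int kFacingFront = 1;

// Hypothetical stand-in for Camera.CameraInfo; only the field used here.
struct CameraInfo {
    int facing;
};

// Returns the id of the first back-facing camera, or std::nullopt when none
// exists (the Java Camera.open() returns null in that case).
std::optional<int> defaultCameraId(const std::vector<CameraInfo>& cameras) {
    for (int id = 0; id < static_cast<int>(cameras.size()); ++id) {
        if (cameras[id].facing == kFacingBack) {
            return id;
        }
    }
    return std::nullopt;
}
```

On a device whose camera 0 is the front camera, open() with no arguments therefore opens camera 1, not camera 0.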

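JNI layer: android_hardware_Camera_native_setup

(1) It calls Camera::connect(). connect is an AIDL method, so the call travels over Binder to CameraService::connect() in CameraService.cpp on the service side.

(2) It gets the Java Camera class and creates a JNICameraContext, the object dedicated to communicating with the Java Camera object.

(3) It derives a default display orientation from the sensor orientation and facing. The value is 0 in the normal case. Only when it is non-zero is it sent down with sendCommand(CAMERA_CMD_SET_DISPLAY_ORIENTATION, ...).

C++ Native layer: CameraService.cpp

(1) CameraService.cpp was covered in the camera initialization article: the cameraservice service is registered at boot.

(2) Through the AIDL call above, execution reaches CameraService::connect() in CameraService.cpp. That method does the following.

(3) It first checks whether a client already exists. If one does, that client is returned directly.

(4) All flashlight (torch) devices are closed.

(5) makeClient() creates the camera client object. The client (Camera2Client) is built on Camera2ClientBase, whose constructor also creates a Camera3Device object.

(6) client->initialize() initializes the newly created camera client.

(7) As part of that, the device itself is initialized first. Camera3Device::initialize() ultimately (through the HAL) opens the corresponding camera node /dev/videoX, initializes it, and obtains the camera's operation functions.

(8) The camera's default parameters are initialized.

(9) Then the six threads the camera uses at run time are created:

- the thread that handles the preview and recording streams
- the thread that handles 3A and face detection
- the capture-sequence state-machine thread
- the thread that handles the JPEG capture stream
- the thread used for zero-shutter-lag (ZSL) streams
- the callback-handling thread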

2 Camera Open Code Flow Analysis

2.1 Using the Camera Class in the Java Layer

First, here is Google's official description of the Camera class:

The Camera class is used to set image capture settings, start/stop preview, snap pictures, and retrieve frames for encoding for video. This Class is a client for the Camera service, which manages the actual camera hardware.

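2.2 Analysis of Camera.java in the Frameworks Layer

The app calls the open() method of Camera.java in the Frameworks layer.

Only after the camera is open does the app call getParameters(), setParameters(), startPreview(), and so on. These calls are analyzed in later articles.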

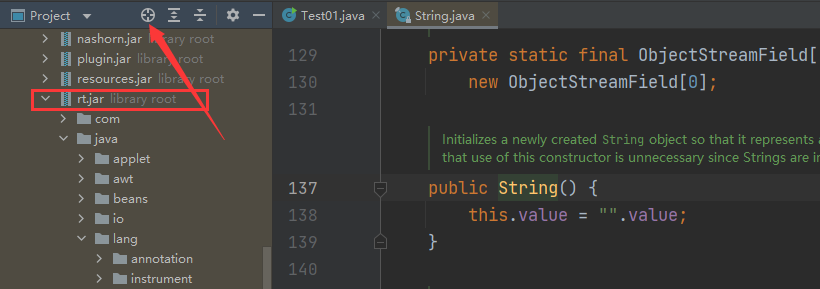

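The Camera class used by the Java layer lives in frameworks/base/core/java/android/hardware/Camera.java.

The main work done in this process is:

(1) If open() is called without a camera id, the first back-facing camera is opened by default.

(2) The camera is initialized with the given camera id.

(3) An EventHandler is registered to keep listening for camera events. It runs on the current thread's Looper, or on the main Looper if the current thread has none.

(4) The JNI function native_setup() is called, passing down the camera id, the current package name, and the HAL version.

@ frameworks/base/core/java/android/hardware/Camera.java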

```java
public class Camera {
    private static final String TAG = "Camera";
    private CameraDataCallback mCameraDataCallback;
    private CameraMetaDataCallback mCameraMetaDataCallback;
    public static final int CAMERA_HAL_API_VERSION_1_0 = 0x100;
    private static final int CAMERA_HAL_API_VERSION_NORMAL_CONNECT = -2;

    public static class CameraInfo {
        public static final int CAMERA_FACING_BACK = 0;
        public static final int CAMERA_FACING_FRONT = 1;
        public static final int CAMERA_SUPPORT_MODE_ZSL = 2;
        public static final int CAMERA_SUPPORT_MODE_NONZSL = 3;
        public int facing;
        public int orientation;
        public boolean canDisableShutterSound;
    }

    // 1. Open the camera with the given id
    public static Camera open(int cameraId) {
        return new Camera(cameraId);
    }

    // 2. If open() is called without a camera id, open the first back-facing
    //    camera by default (returns null if there is none)
    public static Camera open() {
        int numberOfCameras = getNumberOfCameras();
        CameraInfo cameraInfo = new CameraInfo();
        for (int i = 0; i < numberOfCameras; i++) {
            getCameraInfo(i, cameraInfo);
            if (cameraInfo.facing == CameraInfo.CAMERA_FACING_BACK) {
                return new Camera(i);
            }
        }
        return null;
    }

    // 3. Call cameraInitNormal() to initialize the camera with the given id
    /** used by Camera#open, Camera#open(int) */
    Camera(int cameraId) {
        int err = cameraInitNormal(cameraId);
        // ...... (error checking on err omitted)
    }

    private int cameraInitNormal(int cameraId) {
        return cameraInitVersion(cameraId, CAMERA_HAL_API_VERSION_NORMAL_CONNECT); // -2
    }

    private int cameraInitVersion(int cameraId, int halVersion) {
        mShutterCallback = null;
        mRawImageCallback = null;
        mJpegCallback = null;
        mPreviewCallback = null;
        mPostviewCallback = null;
        mUsingPreviewAllocation = false;
        mZoomListener = null;
        /* ### QC ADD-ONS: START */
        mCameraDataCallback = null;
        mCameraMetaDataCallback = null;
        /* ### QC ADD-ONS: END */

        // 1. Register an EventHandler that keeps listening for camera events
        //    (current thread's Looper first, otherwise the main Looper)
        Looper looper;
        if ((looper = Looper.myLooper()) != null) {
            mEventHandler = new EventHandler(this, looper);
        } else if ((looper = Looper.getMainLooper()) != null) {
            mEventHandler = new EventHandler(this, looper);
        } else {
            mEventHandler = null;
        }

        // 2. Get the current package name
        String packageName = ActivityThread.currentOpPackageName();

        // 3. If the property "camera.hal1.packagelist" lists this package, force HAL1
        //Force HAL1 if the package name falls in this bucket
        String packageList = SystemProperties.get("camera.hal1.packagelist", "");
        if (packageList.length() > 0) {
            TextUtils.StringSplitter splitter = new TextUtils.SimpleStringSplitter(',');
            splitter.setString(packageList);
            for (String str : splitter) {
                if (packageName.equals(str)) {
                    halVersion = CAMERA_HAL_API_VERSION_1_0;
                    break;
                }
            }
        }

        // 4. Call the JNI function native_setup(), passing down the camera id,
        //    the current package name and the HAL version
        return native_setup(new WeakReference<Camera>(this), cameraId, halVersion, packageName);
    }

    // ......
}
```
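Step 3 of cameraInitVersion() is a membership test on a comma-separated property value. If the calling package appears in the list, HAL version 0x100 (HAL1) is forced. Otherwise the requested version (-2, the normal connect path) is passed through unchanged.

The C++ sketch below restates that rule. It is not framework code:

- `resolveHalVersion()` is a hypothetical name.
- The property value is passed in as a string instead of being read with SystemProperties.get().

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Values taken from the Camera.java excerpt above.
constexpr int kHalVersionNormalConnect = -2;  // CAMERA_HAL_API_VERSION_NORMAL_CONNECT
constexpr int kHalVersion1_0 = 0x100;         // CAMERA_HAL_API_VERSION_1_0

// Mirrors the splitter loop in cameraInitVersion(): if packageName appears in
// the comma-separated packageList (the value of "camera.hal1.packagelist"),
// force HAL1; otherwise keep the requested version. Like SimpleStringSplitter,
// entries are compared exactly, without trimming spaces.
int resolveHalVersion(const std::string& packageList,
                      const std::string& packageName,
                      int requested) {
    std::istringstream in(packageList);
    std::string entry;
    while (std::getline(in, entry, ',')) {
        if (entry == packageName) {
            return kHalVersion1_0;
        }
    }
    return requested;  // empty list or no match: unchanged
}
```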

2.2.1 [JNI] CameraService initialization: native_setup() -> android_hardware_Camera_native_setup()

Java calls the native method through JNI. The Camera JNI code is in @ frameworks/base/core/jni/android_hardware_Camera.cpp

```java
// cameraId = 0
// halVersion = -2
native_setup(new WeakReference<Camera>(this), cameraId, halVersion, packageName);
```

native_setup is registered in the camMethods table together with the other Camera JNI methods:

```cpp
static const JNINativeMethod camMethods[] = {
    { "_getNumberOfCameras", "()I", (void *)android_hardware_Camera_getNumberOfCameras },
    { "_getCameraInfo", "(ILandroid/hardware/Camera$CameraInfo;)V", (void*)android_hardware_Camera_getCameraInfo },
    { "native_setup", "(Ljava/lang/Object;IILjava/lang/String;)I", (void*)android_hardware_Camera_native_setup },
    { "native_release", "()V", (void*)android_hardware_Camera_release },
    { "setPreviewSurface", "(Landroid/view/Surface;)V", (void *)android_hardware_Camera_setPreviewSurface },
    { "setPreviewTexture", "(Landroid/graphics/SurfaceTexture;)V", (void *)android_hardware_Camera_setPreviewTexture },
    { "setPreviewCallbackSurface", "(Landroid/view/Surface;)V", (void *)android_hardware_Camera_setPreviewCallbackSurface },
    { "startPreview", "()V", (void *)android_hardware_Camera_startPreview },
    { "_stopPreview", "()V", (void *)android_hardware_Camera_stopPreview },
    { "previewEnabled", "()Z", (void *)android_hardware_Camera_previewEnabled },
    { "setHasPreviewCallback", "(ZZ)V", (void *)android_hardware_Camera_setHasPreviewCallback },
    { "_addCallbackBuffer", "([BI)V", (void *)android_hardware_Camera_addCallbackBuffer },
    { "native_autoFocus", "()V", (void *)android_hardware_Camera_autoFocus },
    { "native_cancelAutoFocus", "()V", (void *)android_hardware_Camera_cancelAutoFocus },
    { "native_takePicture", "(I)V", (void *)android_hardware_Camera_takePicture },
    { "native_setHistogramMode", "(Z)V", (void *)android_hardware_Camera_setHistogramMode },
    { "native_setMetadataCb", "(Z)V", (void *)android_hardware_Camera_setMetadataCb },
    { "native_sendHistogramData", "()V", (void *)android_hardware_Camera_sendHistogramData },
    { "native_setLongshot", "(Z)V", (void *)android_hardware_Camera_setLongshot },
    { "native_setParameters", "(Ljava/lang/String;)V", (void *)android_hardware_Camera_setParameters },
    { "native_getParameters", "()Ljava/lang/String;", (void *)android_hardware_Camera_getParameters },
    { "reconnect", "()V", (void*)android_hardware_Camera_reconnect },
    { "lock", "()V", (void*)android_hardware_Camera_lock },
    { "unlock", "()V", (void*)android_hardware_Camera_unlock },
    { "startSmoothZoom", "(I)V", (void *)android_hardware_Camera_startSmoothZoom },
    { "stopSmoothZoom", "()V", (void *)android_hardware_Camera_stopSmoothZoom },
    { "setDisplayOrientation", "(I)V", (void *)android_hardware_Camera_setDisplayOrientation },
    { "_enableShutterSound", "(Z)Z", (void *)android_hardware_Camera_enableShutterSound },
    { "_startFaceDetection", "(I)V", (void *)android_hardware_Camera_startFaceDetection },
    { "_stopFaceDetection", "()V", (void *)android_hardware_Camera_stopFaceDetection },
    { "enableFocusMoveCallback", "(I)V", (void *)android_hardware_Camera_enableFocusMoveCallback },
};
```
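Each JNINativeMethod entry has three parts:

1. the Java method name;
2. its JNI type signature;
3. the native function it maps to.

In the signature, primitives are single letters (`I` = int, `Z` = boolean, `V` = void, `B` = byte), `L...;` names a class, and `[` marks an array. For example, `(Ljava/lang/Object;IILjava/lang/String;)I` is native_setup(Object, int, int, String) returning int.

To make the descriptor grammar concrete, here is a small decoder sketch. `parseSignature()` is a hypothetical helper, not part of the JNI API. It does not validate primitive letters.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper (not part of JNI): the decoded form of a method signature.
struct MethodSignature {
    std::vector<std::string> params;  // one field descriptor per parameter
    std::string returnType;           // field descriptor of the return type
};

// Reads one field descriptor starting at pos and advances pos past it.
static std::string readType(const std::string& sig, std::size_t& pos) {
    std::size_t start = pos;
    while (pos < sig.size() && sig[pos] == '[') ++pos;  // array dimensions
    if (pos >= sig.size()) throw std::invalid_argument("truncated signature");
    if (sig[pos] == 'L') {                              // class type: L...;
        std::size_t end = sig.find(';', pos);
        if (end == std::string::npos) throw std::invalid_argument("missing ';'");
        pos = end + 1;
    } else {
        ++pos;                                          // primitive: I, Z, V, B, J, ...
    }
    return sig.substr(start, pos - start);
}

// Splits "(params)ret" into parameter descriptors and the return descriptor.
MethodSignature parseSignature(const std::string& sig) {
    if (sig.empty() || sig[0] != '(') throw std::invalid_argument("expected '('");
    MethodSignature out;
    std::size_t pos = 1;
    while (pos < sig.size() && sig[pos] != ')') {
        out.params.push_back(readType(sig, pos));
    }
    if (pos >= sig.size()) throw std::invalid_argument("expected ')'");
    ++pos;  // skip ')'
    out.returnType = readType(sig, pos);
    return out;
}
```

Applied to the native_setup entry, this yields four parameters (`Ljava/lang/Object;`, `I`, `I`, `Ljava/lang/String;`) and return type `I`. This matches the jobject/jint/jint/jstring parameters that android_hardware_Camera_native_setup() receives after `env` and `thiz`.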

The native_setup entry maps to android_hardware_Camera_native_setup():

```cpp
// connect to camera service
static jint android_hardware_Camera_native_setup(JNIEnv *env, jobject thiz,
        jobject weak_this, jint cameraId, jint halVersion, jstring clientPackageName)
{
    // Convert jstring to String16
    const char16_t *rawClientName = reinterpret_cast<const char16_t*>(
            env->GetStringChars(clientPackageName, NULL));
    jsize rawClientNameLen = env->GetStringLength(clientPackageName);
    String16 clientName(rawClientName, rawClientNameLen);
    env->ReleaseStringChars(clientPackageName,
            reinterpret_cast<const jchar*>(rawClientName));

    sp<Camera> camera;
    if (halVersion == CAMERA_HAL_API_VERSION_NORMAL_CONNECT) { // -2
        // Default path: hal version is don't care, do normal camera connect.
        // Camera::connect() is an AIDL method: over Binder it reaches
        // CameraService::connect() in CameraService.cpp on the service side.
        camera = Camera::connect(cameraId, clientName,
                Camera::USE_CALLING_UID, Camera::USE_CALLING_PID);
        // ======================> Camera::connect() expands to:
        //   // frameworks/av/camera/Camera.cpp
        //   return CameraBaseT::connect(cameraId, clientPackageName, clientUid, clientPid);
        //   ========> which is:
        //   // frameworks/av/camera/CameraBase.cpp
        //   sp<TCam> CameraBase<TCam, TCamTraits>::connect(int cameraId,
        //           const String16& clientPackageName, int clientUid, int clientPid)
        //   {
        //       sp<TCam> c = new TCam(cameraId);
        //       sp<TCamCallbacks> cl = c;
        //       const sp<::android::hardware::ICameraService> cs = getCameraService();
        //       TCamConnectService fnConnectService = TCamTraits::fnConnectService;
        //       ret = (cs.get()->*fnConnectService)(cl, cameraId, clientPackageName,
        //               clientUid, clientPid, /*out*/ &c->mCamera);
        //       ---------> where fnConnectService is:
        //       CameraTraits<Camera>::TCamConnectService CameraTraits<Camera>::fnConnectService =
        //               &::android::hardware::ICameraService::connect;
        //
        //       IInterface::asBinder(c->mCamera)->linkToDeath(c);
        //       return c;
        //   }
        //   <========
        // <======================
    } else {
        jint status = Camera::connectLegacy(cameraId, halVersion, clientName,
                Camera::USE_CALLING_UID, camera);
        if (status != NO_ERROR) {
            return status;
        }
    }

    // make sure camera hardware is alive
    if (camera->getStatus() != NO_ERROR) {
        return NO_INIT;
    }

    // Get the Java Camera class
    jclass clazz = env->GetObjectClass(thiz);
    if (clazz == NULL) {
        // This should never happen
        jniThrowRuntimeException(env, "Can't find android/hardware/Camera");
        return INVALID_OPERATION;
    }

    // We use a weak reference so the Camera object can be garbage collected.
    // The reference is only used as a proxy for callbacks.
    sp<JNICameraContext> context = new JNICameraContext(env, weak_this, clazz, camera);
    context->incStrong((void*)android_hardware_Camera_native_setup);
    camera->setListener(context); // the object dedicated to talking to the Java Camera object

    // save context in opaque field
    env->SetLongField(thiz, fields.context, (jlong)context.get());

    // Update default display orientation in case the sensor is reverse-landscape
    CameraInfo cameraInfo;
    status_t rc = Camera::getCameraInfo(cameraId, &cameraInfo);
    if (rc != NO_ERROR) {
        return rc;
    }
    int defaultOrientation = 0;
    switch (cameraInfo.orientation) {
        case 0:
            break;
        case 90:
            if (cameraInfo.facing == CAMERA_FACING_FRONT) {
                defaultOrientation = 180;
            }
            break;
        case 180:
            defaultOrientation = 180;
            break;
        case 270:
            if (cameraInfo.facing != CAMERA_FACING_FRONT) {
                defaultOrientation = 180;
            }
            break;
        default:
            ALOGE("Unexpected camera orientation %d!", cameraInfo.orientation);
            break;
    }

    // The default display orientation is 0; only a non-zero value is sent
    // down with sendCommand()
    if (defaultOrientation != 0) {
        ALOGV("Setting default display orientation to %d", defaultOrientation);
        rc = camera->sendCommand(CAMERA_CMD_SET_DISPLAY_ORIENTATION, defaultOrientation, 0);
    }

    return NO_ERROR;
}
```
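The orientation switch at the end of native_setup() is a small pure function of two inputs: the sensor mounting angle and the camera facing. The default display orientation becomes 180 in only three cases:

- any sensor mounted at 180 degrees;
- a front sensor at 90 degrees;
- a back sensor at 270 degrees.

A minimal C++ restatement of that switch follows. `defaultDisplayOrientation()` is a hypothetical name, and the facing constants copy the CameraInfo values shown earlier.

```cpp
#include <cassert>

// Facing values as in CameraInfo (CAMERA_FACING_BACK = 0, CAMERA_FACING_FRONT = 1).
constexpr int kFacingBack = 0;
constexpr int kFacingFront = 1;

// Restatement of the switch in android_hardware_Camera_native_setup():
// returns the default display orientation for a sensor mounted at
// sensorOrientation degrees. Unexpected angles fall back to 0 (the JNI code
// logs an error in that case).
int defaultDisplayOrientation(int sensorOrientation, int facing) {
    switch (sensorOrientation) {
        case 90:
            return facing == kFacingFront ? 180 : 0;
        case 180:
            return 180;
        case 270:
            return facing != kFacingFront ? 180 : 0;
        case 0:
        default:
            return 0;
    }
}
```

Because only non-zero results trigger sendCommand(CAMERA_CMD_SET_DISPLAY_ORIENTATION, ...), the common back camera mounted at 90 degrees sends no command at all.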

2.2.2 [AIDL] interface ICameraService

In the code above, the following chain of calls eventually reaches the AIDL layer:

`native_setup()` -> `android_hardware_Camera_native_setup()` -> `CameraBaseT::connect()` -> `sp<TCam> CameraBase<TCam, TCamTraits>::connect()` -> `ret = (cs.get()->*fnConnectService)(...)` -> `::android::hardware::ICameraService::connect()`

fnConnectService is defined as follows:

```cpp
CameraTraits<Camera>::TCamConnectService CameraTraits<Camera>::fnConnectService =
        &::android::hardware::ICameraService::connect;
```
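fnConnectService is a pointer to a member function of ICameraService. The traits class picks the method, and the generic CameraBase code calls it through `->*` without naming it. This lets one template serve every camera client type.

The sketch below reproduces the idiom with hypothetical stand-ins:

- `FakeCameraService` replaces ICameraService.
- A tag struct `Camera` replaces android::Camera.
- `connectVia()` plays the role of CameraBase::connect().

No Binder is involved.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for ICameraService; only the method used here.
struct FakeCameraService {
    std::string connect(int cameraId) const {
        return "camera-" + std::to_string(cameraId);
    }
};

struct Camera {};  // hypothetical tag type standing in for android::Camera

// Primary template left undefined; each camera type specializes it,
// as CameraTraits<Camera> does in frameworks/av/camera.
template <typename TCam> struct CameraTraits;

template <> struct CameraTraits<Camera> {
    // Pointer-to-member-function type, analogous to TCamConnectService.
    using TCamConnectService = std::string (FakeCameraService::*)(int) const;
    static TCamConnectService fnConnectService;
};

// Analogous to: fnConnectService = &::android::hardware::ICameraService::connect;
CameraTraits<Camera>::TCamConnectService CameraTraits<Camera>::fnConnectService =
        &FakeCameraService::connect;

// Generic connect, analogous to CameraBase<TCam, TCamTraits>::connect():
// it invokes whichever service method the traits selected, via ->*.
template <typename TCam, typename TCamTraits = CameraTraits<TCam>>
std::string connectVia(const FakeCameraService* cs, int cameraId) {
    auto fn = TCamTraits::fnConnectService;
    return (cs->*fn)(cameraId);
}
```

Usage: `connectVia<Camera>(&service, 0)` calls `service.connect(0)` and returns `"camera-0"`.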

@frameworks/av/camera/aidl/android/hardware/ICameraService.aidl

```java
/**
 * Binder interface for the native camera service running in mediaserver.
 * @hide
 */
interface ICameraService
{
    /** Open a camera device through the old camera API */
    ICamera connect(ICameraClient client, int cameraId, String opPackageName,
            int clientUid, int clientPid);

    /** Open a camera device through the new camera API
     * Only supported for device HAL versions >= 3.2
     */
    ICameraDeviceUser connectDevice(ICameraDeviceCallbacks callbacks, int cameraId,
            String opPackageName, int clientUid);
}
```

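In AIDL, our path uses the old-API method, ICamera connect().

AIDL (Android Interface Definition Language) is an interface definition language. It is used to generate code that lets two processes on an Android device communicate.

Most system services provided by the Android Java service framework are generated from AIDL. From an AIDL definition, the service interface, the service proxy, and the service stub code are generated automatically.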
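The proxy/stub split that AIDL generates can be imitated in one process to show who does what:

- The proxy packs the arguments and "transacts".
- The stub unpacks them and dispatches to the real implementation.

For the C++ ICameraService, the generated stub and proxy are BnCameraService and BpCameraService, and CameraService derives from the stub.

In the sketch below, every class and the `FakeParcel` type are hypothetical. A real transaction marshals into a Parcel and crosses processes through IBinder::transact().

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for a Parcel: the method code, the marshaled
// argument, and the marshaled reply.
struct FakeParcel {
    int code = 0;
    int cameraId = 0;
    std::string reply;
};

// The interface both sides agree on (cf. ICameraService).
struct ICameraServiceLike {
    virtual ~ICameraServiceLike() = default;
    virtual std::string connect(int cameraId) = 0;
};

// Stub: server side. It unpacks a request and dispatches to the real
// implementation (cf. BnCameraService::onTransact).
struct ServiceStub : ICameraServiceLike {
    static constexpr int kConnect = 1;
    void onTransact(FakeParcel& p) {
        if (p.code == kConnect) p.reply = connect(p.cameraId);
    }
};

// Real implementation (cf. CameraService, which derives from the stub).
struct CameraServiceImpl : ServiceStub {
    std::string connect(int cameraId) override {
        return "client for camera " + std::to_string(cameraId);
    }
};

// Proxy: client side. It packs the arguments and sends the request
// (cf. BpCameraService).
struct ServiceProxy : ICameraServiceLike {
    explicit ServiceProxy(ServiceStub& remote) : remote_(remote) {}
    std::string connect(int cameraId) override {
        FakeParcel p;
        p.code = ServiceStub::kConnect;
        p.cameraId = cameraId;
        remote_.onTransact(p);  // a real proxy would call IBinder::transact()
        return p.reply;
    }
private:
    ServiceStub& remote_;
};
```

The caller only sees ICameraServiceLike::connect(). Whether the call is local or crosses a process boundary is hidden behind the proxy, which is the point of generating these classes.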

2.2.3 [Native] CameraService.cpp

Through AIDL's Binder communication, execution jumps into CameraService.cpp. The cameraservice service it implements is registered at boot.

Over Binder, the connect method of the CameraService service is then called: @frameworks/av/services/camera/libcameraservice/CameraService.cpp

```cpp
Status CameraService::connect(
        const sp<ICameraClient>& cameraClient,
        int cameraId,
        const String16& clientPackageName,
        int clientUid,
        int clientPid,
        /*out*/
        sp<ICamera>* device) {
    Status ret = Status::ok();
    String8 id = String8::format("%d", cameraId);
    sp<Client> client = nullptr;
    ret = connectHelper<ICameraClient,Client>(cameraClient, id,
            CAMERA_HAL_API_VERSION_UNSPECIFIED, clientPackageName, clientUid, clientPid, API_1,
            /*legacyMode*/ false, /*shimUpdateOnly*/ false,
            /*out*/client);
    if (!ret.isOk()) {
        return ret;
    }
    *device = client;
    return ret;
}
```

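Now let's look inside connectHelper:

1. Check the client's permissions.

2. If a client already exists, return it directly.

3. Close all flashlight (torch) devices before opening the camera.

4. Call makeClient() to create the camera client object. The client is built on Camera2ClientBase, whose constructor also creates the Camera3Device object.

5. Call client->initialize() to initialize the camera client created above.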
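The five steps above form a strict sequence with early exits, which the skeleton below makes explicit. Each hook is a caller-supplied function so the order can be traced. `ConnectHooks`, `Client` and `connectSketch()` are hypothetical names. Real concerns are left out, such as locking, eviction of other clients, and mapping errors to binder Status codes.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <vector>

// Hypothetical client object; in CameraService this would be Camera2Client.
struct Client {
    int cameraId;
};

// One hook per step of connectHelper(); none of these names exist in CameraService.
struct ConnectHooks {
    std::function<bool()> validate;                          // 1. permissions / sanity checks
    std::function<std::shared_ptr<Client>()> existingClient; // 2. reuse an existing client
    std::function<void()> closeFlashlight;                   // 3. torch must be off
    std::function<std::shared_ptr<Client>()> makeClient;     // 4. create the client
    std::function<bool(Client&)> initialize;                 // 5. open the device
};

// Returns nullptr on failure, mirroring the early returns in connectHelper().
std::shared_ptr<Client> connectSketch(const ConnectHooks& h) {
    if (!h.validate()) return nullptr;
    if (auto existing = h.existingClient()) return existing;  // skips steps 3-5
    h.closeFlashlight();
    auto client = h.makeClient();
    if (!client || !h.initialize(*client)) return nullptr;
    return client;
}
```

The key ordering point is that the flashlight is released only after permission checks pass and only when a new client is really going to be created.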

```cpp
template<class CALLBACK, class CLIENT>
binder::Status CameraService::connectHelper(const sp<CALLBACK>& cameraCb, const String8& cameraId,
        int halVersion, const String16& clientPackageName, int clientUid, int clientPid,
        apiLevel effectiveApiLevel, bool legacyMode, bool shimUpdateOnly,
        /*out*/sp<CLIENT>& device) {
    binder::Status ret = binder::Status::ok();
    String8 clientName8(clientPackageName);
    int originalClientPid = 0;

    // This log prints the client package name, the camera ID and the camera API version
    ALOGI("CameraService::connect call (PID %d \"%s\", camera ID %s) for HAL version %s and "
            "Camera API version %d", clientPid, clientName8.string(), cameraId.string(),
            (halVersion == -1) ? "default" : std::to_string(halVersion).c_str(),
            static_cast<int>(effectiveApiLevel));

    sp<CLIENT> client = nullptr;
    {
        // 1. Check the client's permissions
        // Enforce client permissions and do basic sanity checks
        if (!(ret = validateConnectLocked(cameraId, clientName8,
                /*inout*/clientUid, /*inout*/clientPid, /*out*/originalClientPid)).isOk()) {
            return ret;
        }

        status_t err;
        sp<BasicClient> clientTmp = nullptr;
        std::shared_ptr<resource_policy::ClientDescriptor<String8, sp<BasicClient>>> partial;
        if ((err = handleEvictionsLocked(cameraId, originalClientPid, effectiveApiLevel,
                IInterface::asBinder(cameraCb), clientName8, /*out*/&clientTmp,
                /*out*/&partial)) != NO_ERROR) {
            // ...... map err to an error binder::Status and return it (omitted)
        }

        // 2. If a client already exists, return it directly
        if (clientTmp.get() != nullptr) {
            // Handle special case for API1 MediaRecorder where the existing client is returned
            device = static_cast<CLIENT*>(clientTmp.get());
            return ret;
        }

        // 3. Close all flashlight devices before opening the camera
        // give flashlight a chance to close devices if necessary.
        mFlashlight->prepareDeviceOpen(cameraId);

        // TODO: Update getDeviceVersion + HAL interface to use strings for Camera IDs
        int id = cameraIdToInt(cameraId);
        int facing = -1;
        int deviceVersion = getDeviceVersion(id, /*out*/&facing);

        sp<BasicClient> tmp = nullptr;
        // 4. Call makeClient() to create the camera client object; the client is
        //    built on Camera2ClientBase, which also creates a Camera3Device object
        if (!(ret = makeClient(this, cameraCb, clientPackageName, id, facing, clientPid,
                clientUid, getpid(), legacyMode, halVersion, deviceVersion, effectiveApiLevel,
                /*out*/&tmp)).isOk()) {
            return ret;
        }
        client = static_cast<CLIENT*>(tmp.get()); // Camera2Client

        LOG_ALWAYS_FATAL_IF(client.get() == nullptr, "%s: CameraService in invalid state",
                __FUNCTION__);

        // 5. Call client->initialize() to initialize the camera client created above
        if ((err = client->initialize(mModule)) != OK) {
            ALOGE("%s: Could not initialize client from HAL module.", __FUNCTION__);
            // Errors could be from the HAL module open call or from AppOpsManager
            switch (err) {
                case BAD_VALUE:
                    return STATUS_ERROR_FMT(ERROR_ILLEGAL_ARGUMENT,
                            "Illegal argument to HAL module for camera \"%s\"", cameraId.string());
                case -EBUSY:
                    return STATUS_ERROR_FMT(ERROR_CAMERA_IN_USE,
                            "Camera \"%s\" is already open", cameraId.string());
                case -EUSERS:
                    return STATUS_ERROR_FMT(ERROR_MAX_CAMERAS_IN_USE,
                            "Too many cameras already open, cannot open camera \"%s\"",
                            cameraId.string());
                case PERMISSION_DENIED:
                    return STATUS_ERROR_FMT(ERROR_PERMISSION_DENIED,
                            "No permission to open camera \"%s\"", cameraId.string());
                case -EACCES:
                    return STATUS_ERROR_FMT(ERROR_DISABLED,
                            "Camera \"%s\" disabled by policy", cameraId.string());
                case -ENODEV:
                default:
                    return STATUS_ERROR_FMT(ERROR_INVALID_OPERATION,
                            "Failed to initialize camera \"%s\": %s (%d)", cameraId.string(),
                            strerror(-err), err);
            }
        }

        // Update shim paremeters for legacy clients
        if (effectiveApiLevel == API_1) {
            // Assume we have always received a Client subclass for API1
            sp<Client> shimClient = reinterpret_cast<Client*>(client.get());
            String8 rawParams = shimClient->getParameters();
            CameraParameters params(rawParams);

            auto cameraState = getCameraState(cameraId);
            if (cameraState != nullptr) {
                cameraState->setShimParams(params);
            } else {
                ALOGE("%s: Cannot update shim parameters for camera %s, no such device exists.",
                        __FUNCTION__, cameraId.string());
            }
        }

        if (shimUpdateOnly) {
            // If only updating legacy shim parameters, immediately disconnect client
            mServiceLock.unlock();
            client->disconnect();
            mServiceLock.lock();
        } else {
            // Otherwise, add client to active clients list
            finishConnectLocked(client, partial);
        }
    } // lock is destroyed, allow further connect calls

    // Important: release the mutex here so the client can call back into the service from its
    // destructor (can be at the end of the call)
    device = client;
    return ret;
}
```

2.2.3.1 [CameraService] Creating the camera client: makeClient()

Next, connectHelper calls:

```cpp
makeClient(this, cameraCb, clientPackageName, id, facing, clientPid, clientUid, getpid(),
        legacyMode, halVersion, deviceVersion, effectiveApiLevel, /*out*/&tmp);
```

makeClient() chooses the Camera1 or Camera2 API route based on the HAL device version (deviceVersion) and the API level the app uses (effectiveApiLevel): @ frameworks/av/services/camera/libcameraservice/CameraService.cpp

```cpp
Status CameraService::makeClient(const sp<CameraService>& cameraService,
        const sp<IInterface>& cameraCb, const String16& packageName, int cameraId,
        int facing, int clientPid, uid_t clientUid, int servicePid, bool legacyMode,
        int halVersion, int deviceVersion, apiLevel effectiveApiLevel,
        /*out*/sp<BasicClient>* client) {

    if (halVersion < 0 || halVersion == deviceVersion) {
        // Default path: HAL version is unspecified by caller, create CameraClient
        // based on device version reported by the HAL.
        switch(deviceVersion) {
          case CAMERA_DEVICE_API_VERSION_1_0:
            if (effectiveApiLevel == API_1) {  // Camera1 API route
                sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());
                *client = new CameraClient(cameraService, tmp, packageName, cameraId, facing,
                        clientPid, clientUid, getpid(), legacyMode);
            } else { // Camera2 API route
                ALOGW("Camera using old HAL version: %d", deviceVersion);
                return STATUS_ERROR_FMT(ERROR_DEPRECATED_HAL,
                        "Camera device \"%d\" HAL version %d does not support camera2 API",
                        cameraId, deviceVersion);
            }
            break;
          case CAMERA_DEVICE_API_VERSION_3_0:
          case CAMERA_DEVICE_API_VERSION_3_1:
          case CAMERA_DEVICE_API_VERSION_3_2:
          case CAMERA_DEVICE_API_VERSION_3_3:
          case CAMERA_DEVICE_API_VERSION_3_4:
            if (effectiveApiLevel == API_1) {
                // Camera1 API route
                sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());
                *client = new Camera2Client(cameraService, tmp, packageName, cameraId, facing,
                        clientPid, clientUid, servicePid, legacyMode);
            } else {
                // Camera2 API route
                sp<hardware::camera2::ICameraDeviceCallbacks> tmp =
                        static_cast<hardware::camera2::ICameraDeviceCallbacks*>(cameraCb.get());
                *client = new CameraDeviceClient(cameraService, tmp, packageName, cameraId,
                        facing, clientPid, clientUid, servicePid);
            }
            break;
        }
    } else {
        // A particular HAL version is requested by caller. Create CameraClient
        // based on the requested HAL version.
        if (deviceVersion > CAMERA_DEVICE_API_VERSION_1_0 &&
            halVersion == CAMERA_DEVICE_API_VERSION_1_0) {
            // Only support higher HAL version device opened as HAL1.0 device.
            sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());
            *client = new CameraClient(cameraService, tmp, packageName, cameraId, facing,
                    clientPid, clientUid, servicePid, legacyMode);
        } else {
            // Other combinations (e.g. HAL3.x open as HAL2.x) are not supported yet.
            ALOGE("Invalid camera HAL version %x: HAL %x device can only be"
                    " opened as HAL %x device", halVersion, deviceVersion,
                    CAMERA_DEVICE_API_VERSION_1_0);
            return STATUS_ERROR_FMT(ERROR_ILLEGAL_ARGUMENT,
                    "Camera device \"%d\" (HAL version %d) cannot be opened as HAL version %d",
                    cameraId, deviceVersion, halVersion);
        }
    }
    return Status::ok();
}
```
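Ignoring the explicit-HAL-version branch, makeClient() is a two-input routing table keyed on (device HAL version, app API level).

The sketch below encodes versions the way the HAL headers' HARDWARE_DEVICE_API_VERSION(major, minor) macro does: major in bits 8 to 15, minor in bits 0 to 7. It returns the name of the class that would be created. `deviceApiVersion()`, `ApiLevel` and `clientClassFor()` are hypothetical helpers, and the empty string stands for the error returns.

```cpp
#include <cassert>
#include <string>

// Same encoding as HARDWARE_DEVICE_API_VERSION(major, minor).
constexpr int deviceApiVersion(int major, int minor) {
    return ((major & 0xff) << 8) | (minor & 0xff);
}

enum class ApiLevel { API_1, API_2 };

// Simplified restatement of the default branch of makeClient()
// (halVersion < 0 or halVersion == deviceVersion). Returns the client class
// that would be created, or "" where makeClient() reports an error.
std::string clientClassFor(int deviceVersion, ApiLevel api) {
    if (deviceVersion == deviceApiVersion(1, 0)) {
        // A HAL1 device cannot serve the camera2 API.
        return api == ApiLevel::API_1 ? "CameraClient" : "";
    }
    if (deviceVersion >= deviceApiVersion(3, 0) && deviceVersion <= deviceApiVersion(3, 4)) {
        return api == ApiLevel::API_1 ? "Camera2Client" : "CameraDeviceClient";
    }
    return "";  // versions not listed in the switch shown above
}
```

The path followed in this article (the old android.hardware.Camera API on a HAL3 device) is the `(3.x, API_1)` cell, which is why the next step constructs a Camera2Client.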

For the API1 route on a HAL3 device, makeClient() then calls `*client = new Camera2Client(cameraService, tmp, packageName, cameraId, facing, clientPid, clientUid, servicePid, legacyMode);`

The client is built on Camera2ClientBase: @ frameworks/av/services/camera/libcameraservice/api1/Camera2Client.cpp

```cpp
Camera2Client::Camera2Client(const sp<CameraService>& cameraService,
        const sp<hardware::ICameraClient>& cameraClient,
        const String16& clientPackageName,
        int cameraId,
        int cameraFacing,
        int clientPid,
        uid_t clientUid,
        int servicePid,
        bool legacyMode):
        Camera2ClientBase(cameraService, cameraClient, clientPackageName,
                cameraId, cameraFacing, clientPid, clientUid, servicePid),
        mParameters(cameraId, cameraFacing)
{
    ATRACE_CALL();

    SharedParameters::Lock l(mParameters);
    l.mParameters.state = Parameters::DISCONNECTED;

    mLegacyMode = legacyMode;
}
```

The Camera2ClientBase constructor creates the Camera3Device object:

```cpp
// Interface used by CameraService
template <typename TClientBase>
Camera2ClientBase<TClientBase>::Camera2ClientBase(
        const sp<CameraService>& cameraService,
        const sp<TCamCallbacks>& remoteCallback,
        const String16& clientPackageName,
        int cameraId,
        int cameraFacing,
        int clientPid,
        uid_t clientUid,
        int servicePid):
        TClientBase(cameraService, remoteCallback, clientPackageName, cameraId,
                cameraFacing, clientPid, clientUid, servicePid),
        mSharedCameraCallbacks(remoteCallback),
        mDeviceVersion(cameraService->getDeviceVersion(cameraId)),
        mDeviceActive(false)
{
    ALOGI("Camera %d: Opened. Client: %s (PID %d, UID %d)", cameraId,
            String8(clientPackageName).string(), clientPid, clientUid);

    mInitialClientPid = clientPid;
    mDevice = new Camera3Device(cameraId);
    LOG_ALWAYS_FATAL_IF(mDevice == 0, "Device should never be NULL here.");
}
```

The Camera3Device constructor, which takes the camera id: @ frameworks/av/services/camera/libcameraservice/device3/Camera3Device.cpp

```cpp
Camera3Device::Camera3Device(int id):
        mId(id),
        mIsConstrainedHighSpeedConfiguration(false),
        mHal3Device(NULL),
        mStatus(STATUS_UNINITIALIZED),
        mStatusWaiters(0),
        mUsePartialResult(false),
        mNumPartialResults(1),
        mTimestampOffset(0),
        mNextResultFrameNumber(0),
        mNextReprocessResultFrameNumber(0),
        mNextShutterFrameNumber(0),
        mNextReprocessShutterFrameNumber(0),
        mListener(NULL)
{
    ATRACE_CALL();
    camera3_callback_ops::notify = &sNotify;
    camera3_callback_ops::process_capture_result = &sProcessCaptureResult;
    ALOGV("%s: Created device for camera %d", __FUNCTION__, id);
}
```
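The last two assignments in the constructor fill the camera3_callback_ops table that Camera3Device inherits from (note the `camera3_callback_ops::` qualification). The entries are static functions (sNotify, sProcessCaptureResult), so the C HAL can call back into C++ through plain function pointers. Each static function casts the table pointer back to the owning object and forwards to a member function.

The sketch below shows this trampoline pattern with a hypothetical one-entry table `callback_ops` and a hypothetical `Device` class, not the real HAL structs.

```cpp
#include <cassert>

// Hypothetical C-style callback table, shaped like camera3_callback_ops:
// plain function pointers that receive a pointer to the table itself.
struct callback_ops {
    void (*notify)(const callback_ops* ops, int msg);
};

// A C++ object that *is* the callback table (it inherits from it), the same
// arrangement Camera3Device uses with camera3_callback_ops.
class Device : private callback_ops {
public:
    Device() { callback_ops::notify = &sNotify; }

    // What the "HAL" receives: a pointer to the embedded table.
    const callback_ops* ops() const { return this; }

    int lastMsg() const { return lastMsg_; }

private:
    // Static trampoline: recover the Device from the table pointer, then
    // forward to the member function.
    static void sNotify(const callback_ops* ops, int msg) {
        auto* self = const_cast<Device*>(static_cast<const Device*>(ops));
        self->notify(msg);
    }
    void notify(int msg) { lastMsg_ = msg; }

    int lastMsg_ = 0;
};
```

Usage: code holding only `const callback_ops* ops = dev.ops();` can call `ops->notify(ops, 42);`, and the message lands in `dev`. This is how the HAL's notify and process_capture_result calls reach Camera3Device.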

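2.2.3.2 [Camera2Client] Camera2Client initialization: client->initialize(mModule)

- 1 Camera2ClientBase::initialize() calls Camera3Device::initialize(), which initializes the /dev/videoX device node and obtains the device's operation (ops) functions.
- 2 Initialize the camera's default parameters.
- 3 Create the six threads the camera uses at run time:
  - 3.1 StreamingProcessor: handles the preview and recording streams (note that the code below never calls run() on mStreamingProcessor).
  - 3.2 FrameProcessor: handles 3A and face detection.
  - 3.3 CaptureSequencer: the capture-flow state machine. There are many capture scenarios; how the state machine runs will be covered in a later article.
  - 3.4 JpegProcessor: handles the JPEG capture stream.
  - 3.5 ZslProcessor3: handles zero-shutter-lag (ZSL) streams.
  - 3.6 mCallbackProcessor: the callback thread. It mainly contains updateStream(), which creates the callback stream, and the thread that handles callback streams delivered from the HAL.

@ frameworks/av/services/camera/libcameraservice/api1/Camera2Client.cpp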

```cpp
status_t Camera2Client::initialize(CameraModule *module)
{
    ATRACE_CALL();
    ALOGV("%s: Initializing client for camera %d", __FUNCTION__, mCameraId);
    status_t res;

    // 1. Camera2ClientBase::initialize() calls Camera3Device::initialize() to
    //    initialize the device and obtain the camera device's operation functions
    res = Camera2ClientBase::initialize(module);
    // ==============> inside Camera2ClientBase::initialize(), mDevice is the
    // Camera3Device (frameworks/av/services/camera/libcameraservice/device3/Camera3Device.cpp):
    //     mDevice->initialize(module);
    //     mDevice->setNotifyCallback(weakThis);
    // <==============
    if (res != OK) {
        return res;
    }

    // 2. Initialize the camera's default parameters
    {
        SharedParameters::Lock l(mParameters);
        res = l.mParameters.initialize(&(mDevice->info()), mDeviceVersion);
        // ================> frameworks/av/services/camera/libcameraservice/api1/client2/Parameters.cpp
        //   status_t Parameters::initialize(const CameraMetadata *info, int deviceVersion) {
        //       ......
        //       videoWidth = previewWidth;
        //       videoHeight = previewHeight;
        //       params.setPreviewSize(previewWidth, previewHeight);
        //       params.setVideoSize(videoWidth, videoHeight);
        //       params.set(CameraParameters::KEY_PREFERRED_PREVIEW_SIZE_FOR_VIDEO,
        //               String8::format("%dx%d", previewWidth, previewHeight));
        //       params.set(CameraParameters::KEY_SUPPORTED_PREVIEW_FORMATS, supportedPreviewFormats);
        //       ...... (a long series of parameter initializations omitted)
        // <================
        if (res != OK) {
            return NO_INIT;  // unable to build default parameters
        }
    }

    String8 threadName;

    // 3. Create the threads the camera uses at run time
    // 3.1 StreamingProcessor: handles the preview and recording streams.
    //     It is created here, but no run() call is made on it.
    mStreamingProcessor = new StreamingProcessor(this);
    threadName = String8::format("C2-%d-StreamProc", mCameraId);

    // 3.2 FrameProcessor: handles 3A and face detection
    mFrameProcessor = new FrameProcessor(mDevice, this);
    threadName = String8::format("C2-%d-FrameProc", mCameraId);
    mFrameProcessor->run(threadName.string());

    // 3.3 CaptureSequencer: the capture-flow state machine
    mCaptureSequencer = new CaptureSequencer(this);
    threadName = String8::format("C2-%d-CaptureSeq", mCameraId);
    mCaptureSequencer->run(threadName.string());

    // 3.4 JpegProcessor: handles the JPEG capture stream
    mJpegProcessor = new JpegProcessor(this, mCaptureSequencer);
    threadName = String8::format("C2-%d-JpegProc", mCameraId);
    mJpegProcessor->run(threadName.string());

    // 3.5 ZslProcessor: handles zero-shutter-lag (ZSL) streams
    mZslProcessor = new ZslProcessor(this, mCaptureSequencer);
    threadName = String8::format("C2-%d-ZslProc", mCameraId);
    mZslProcessor->run(threadName.string());

    // 3.6 mCallbackProcessor: creates the callback stream (updateStream()) and
    //     handles callback streams delivered from the HAL
    mCallbackProcessor = new CallbackProcessor(this);
    threadName = String8::format("C2-%d-CallbkProc", mCameraId);
    mCallbackProcessor->run(threadName.string());

    return OK;
}
```
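When matching these processors to threads in tools such as `ps -T` or systrace, it helps to know the exact names passed to run(). The helper below rebuilds them with the same `"C2-%d-<suffix>"` pattern. `processorThreadNames()` is a hypothetical helper, and it lists only the processors that are actually run() in the excerpt.

Linux limits thread names to 15 visible characters. This is likely why the suffixes are abbreviated: for a single-digit camera id, every name fits.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <vector>

// Rebuilds the thread names used in Camera2Client::initialize() with the same
// "C2-%d-<suffix>" pattern passed to String8::format(). StreamingProcessor is
// left out because the excerpt never calls run() on it.
std::vector<std::string> processorThreadNames(int cameraId) {
    static const char* const kSuffixes[] = {
        "FrameProc", "CaptureSeq", "JpegProc", "ZslProc", "CallbkProc"};
    std::vector<std::string> names;
    for (const char* suffix : kSuffixes) {
        char buf[64];
        std::snprintf(buf, sizeof(buf), "C2-%d-%s", cameraId, suffix);
        names.emplace_back(buf);
    }
    return names;
}
```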

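2.2.3.2.1 [Camera3Device] Camera device initialization: mDevice->initialize(module)

- 1 Create the session.
- 2 Call the openSession method of CameraProviderManager.

Note that this excerpt comes from a newer release (Android 8.0 or later). There, Camera3Device::initialize() takes a CameraProviderManager instead of a CameraModule and opens the device through HIDL.

@frameworks/av/services/camera/libcameraservice/device3/Camera3Device.cpp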

```cpp
status_t Camera3Device::initialize(sp<CameraProviderManager> manager, const String8& monitorTags) {
    ATRACE_CALL();
    Mutex::Autolock il(mInterfaceLock);
    Mutex::Autolock l(mLock);

    ALOGE("sundpmy_ %s: Initializing HIDL device for camera %s", __FUNCTION__, mId.string());
    if (mStatus != STATUS_UNINITIALIZED) {
        CLOGE("Already initialized!");
        return INVALID_OPERATION;
    }
    if (manager == nullptr) return INVALID_OPERATION;

    sp<ICameraDeviceSession> session;
    ATRACE_BEGIN("CameraHal::openSession");
    ALOGE("sundpmy_ manager->openSession");
    status_t res = manager->openSession(mId.string(), this,
            /*out*/ &session);
    ATRACE_END();
    if (res != OK) {
        SET_ERR_L("Could not open camera session: %s (%d)", strerror(-res), res);
        return res;
    }
    // ......
}
```

2.2.3.2.2 [CameraProviderManager] manager->openSession

- 1 Based on the CameraDevice version, find the matching DeviceInfo among all providers.
- 2 Using the provider information recorded in that DeviceInfo, obtain the corresponding CameraProvider service.

@frameworks/av/services/camera/libcameraservice/common/CameraProviderManager.cpp

```cpp
status_t CameraProviderManager::openSession(const std::string &id,
        const sp<device::V3_2::ICameraDeviceCallback>& callback,
        /*out*/
        sp<device::V3_2::ICameraDeviceSession> *session) {

    std::lock_guard<std::mutex> lock(mInterfaceMutex);

    // Based on the CameraDevice version, find the matching DeviceInfo among all providers
    auto deviceInfo = findDeviceInfoLocked(id,
            /*minVersion*/ {3,0}, /*maxVersion*/ {4,0});
    if (deviceInfo == nullptr) return NAME_NOT_FOUND;

    // Using the provider recorded in deviceInfo, get the corresponding CameraProvider service
    auto *deviceInfo3 = static_cast<ProviderInfo::DeviceInfo3*>(deviceInfo);
    const sp<provider::V2_4::ICameraProvider> provider =
            deviceInfo->mParentProvider->startProviderInterface();
    if (provider == nullptr) {
        return DEAD_OBJECT;
    }
    saveRef(DeviceMode::CAMERA, id, provider);

    Status status;
    hardware::Return<void> ret;
    // Instantiate a CameraDevice through the CameraProvider's
    // getCameraDeviceInterface_V3_x(); this step reaches the HAL layer via HIDL
    auto interface = deviceInfo3->startDeviceInterface<
            CameraProviderManager::ProviderInfo::DeviceInfo3::InterfaceT>();
    if (interface == nullptr) {
        return DEAD_OBJECT;
    }

    ALOGE("sundpmy_ openSession");
    // interface is the CameraDevice obtained from startDeviceInterface() above;
    // CameraDevice::open() creates a valid CameraDeviceSession. This step also
    // reaches the HAL via HIDL
    ret = interface->open(callback, [&status, &session]
            (Status s, const sp<device::V3_2::ICameraDeviceSession>& cameraSession) {
                status = s;
                if (status == Status::OK) {
                    *session = cameraSession;
                }
            });
    if (!ret.isOk()) {
        removeRef(DeviceMode::CAMERA, id);
        ALOGE("%s: Transaction error opening a session for camera device %s: %s",
                __FUNCTION__, id.c_str(), ret.description().c_str());
        return DEAD_OBJECT;
    }
    return mapToStatusT(status);
}
```
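interface->open() does not return the session directly. HIDL methods with non-primitive results hand them to a result callback (`_hidl_cb`) that runs before the call returns. openSession() therefore passes a lambda that captures `status` and `session` by reference and copies the results out.

The sketch below reproduces that calling convention in ordinary C++. `FakeCameraDevice`, `Session`, the two-value `Status` and `openSessionSketch()` are hypothetical stand-ins, and std::function takes the place of the HIDL-generated callback type.

```cpp
#include <cassert>
#include <functional>
#include <memory>

enum class Status { OK, CAMERA_IN_USE };
struct Session { int id; };

// Hypothetical device whose open() reports its result through a callback,
// the way a HIDL method invokes _hidl_cb before returning.
struct FakeCameraDevice {
    bool busy = false;
    void open(const std::function<void(Status, std::shared_ptr<Session>)>& hidlCb) {
        if (busy) {
            hidlCb(Status::CAMERA_IN_USE, nullptr);
            return;
        }
        busy = true;
        hidlCb(Status::OK, std::make_shared<Session>(Session{7}));
    }
};

// Mirrors the lambda in CameraProviderManager::openSession(): locals are
// captured by reference and filled in synchronously by the callback.
Status openSessionSketch(FakeCameraDevice& device, std::shared_ptr<Session>* session) {
    Status status = Status::OK;
    device.open([&status, &session](Status s, std::shared_ptr<Session> cameraSession) {
        status = s;
        if (status == Status::OK) {
            *session = cameraSession;
        }
    });
    return status;
}
```

Capturing by reference is safe here only because the callback runs synchronously, before open() returns. An asynchronous callback would leave the captured locals dangling.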

2.2.3.3 [CameraDevice] CameraDevice::open

- 1 Continue the open through mModule, the CameraModule object.
- 2 Call getCameraInfo() on mModule to get information about the camera.
- 3 Create the CameraDeviceSession.

@hardware/interfaces/camera/device/3.2/default/CameraDevice.cpp
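Before opening the HAL device, CameraDevice::open() refuses a second open while a session is alive. It does this by keeping only a weak reference to the session (`wp<CameraDeviceSession> mSession`). The reference is promoted, and the open is rejected with CAMERA_IN_USE if a live, unclosed session exists.

The sketch below models that check with std::weak_ptr standing in for Android's wp<>, and lock() for promote(). `Device` and `Session` are hypothetical names.

```cpp
#include <cassert>
#include <memory>

// Hypothetical session with the same isClosed() query used in CameraDevice::open().
struct Session {
    bool closed = false;
    bool isClosed() const { return closed; }
};

class Device {
public:
    // Returns a new session, or nullptr when the camera is already in use.
    std::shared_ptr<Session> open() {
        std::shared_ptr<Session> existing = session_.lock();  // cf. mSession.promote()
        if (existing && !existing->isClosed()) {
            return nullptr;  // CameraDevice::open() reports CAMERA_IN_USE here
        }
        auto created = std::make_shared<Session>();
        session_ = created;  // keep only a weak reference, cf. mSession = session
        return created;
    }

private:
    std::weak_ptr<Session> session_;
};
```

Because the device holds only a weak reference, the camera becomes openable again in either of two cases: the session is explicitly closed, or its last strong owner releases it.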

```cpp
Return<void> CameraDevice::open(const sp<ICameraDeviceCallback>& callback,
        ICameraDevice::open_cb _hidl_cb) {
    Status status = initStatus();
    sp<CameraDeviceSession> session = nullptr;
    ALOGE("sundpmy_ 3.2 CameraDevice::open");

    if (callback == nullptr) {
        ALOGE("%s: cannot open camera %s. callback is null!",
                __FUNCTION__, mCameraId.c_str());
        _hidl_cb(Status::ILLEGAL_ARGUMENT, nullptr);
        return Void();
    }

    if (status != Status::OK) {
        // Provider will never pass initFailed device to client, so
        // this must be a disconnected camera
        ALOGE("%s: cannot open camera %s. camera is disconnected!",
                __FUNCTION__, mCameraId.c_str());
        _hidl_cb(Status::CAMERA_DISCONNECTED, nullptr);
        return Void();
    } else {
        mLock.lock();
        ALOGE("sundpmy_%s: Initializing device for camera %d", __FUNCTION__, mCameraIdInt);
        session = mSession.promote();
        if (session != nullptr && !session->isClosed()) {
            ALOGE("%s: cannot open an already opened camera!", __FUNCTION__);
            mLock.unlock();
            _hidl_cb(Status::CAMERA_IN_USE, nullptr);
            return Void();
        }

        /** Open HAL device */
        status_t res;
        camera3_device_t *device;

        ATRACE_BEGIN("camera3->open");
        // Continue the open through the CameraModule object mModule
        res = mModule->open(mCameraId.c_str(),
                reinterpret_cast<hw_device_t**>(&device));
        ATRACE_END();

        if (res != OK) {
            ALOGE("%s: cannot open camera %s!", __FUNCTION__, mCameraId.c_str());
            mLock.unlock();
            _hidl_cb(getHidlStatus(res), nullptr);
            return Void();
        }

        /** Cross-check device version */
        if (device->common.version < CAMERA_DEVICE_API_VERSION_3_2) {
            ALOGE("%s: Could not open camera: "
                    "Camera device should be at least %x, reports %x instead",
                    __FUNCTION__,
                    CAMERA_DEVICE_API_VERSION_3_2,
                    device->common.version);
            device->common.close(&device->common);
            mLock.unlock();
            _hidl_cb(Status::ILLEGAL_ARGUMENT, nullptr);
            return Void();
        }

        struct camera_info info;
        // Query the camera's information through mModule->getCameraInfo()
        res = mModule->getCameraInfo(mCameraIdInt, &info);
        if (res != OK) {
            ALOGE("%s: Could not open camera: getCameraInfo failed", __FUNCTION__);
            device->common.close(&device->common);
            mLock.unlock();
            _hidl_cb(Status::ILLEGAL_ARGUMENT, nullptr);
            return Void();
        }

        // Create the CameraDeviceSession
        session = createSession(
                device, info.static_camera_characteristics, callback);
        if (session == nullptr) {
            ALOGE("%s: camera device session allocation failed", __FUNCTION__);
            mLock.unlock();
            _hidl_cb(Status::INTERNAL_ERROR, nullptr);
            return Void();
        }
        if (session->isInitFailed()) {
            ALOGE("%s: camera device session init failed", __FUNCTION__);
            session = nullptr;
            mLock.unlock();
            _hidl_cb(Status::INTERNAL_ERROR, nullptr);
            return Void();
        }
        mSession = session;

        IF_ALOGV() {
            session->getInterface()->interfaceChain([](
                ::android::hardware::hidl_vec<::android::hardware::hidl_string> interfaceChain) {
                    ALOGV("Session interface chain:");
                    for (const auto& iface : interfaceChain) {
                        ALOGV(" %s", iface.c_str());
                    }
                });
        }
        mLock.unlock();
    }
    _hidl_cb(status, session->getInterface());
    return Void();
}
```
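CameraDevice::open() exits early on several checks. Their order decides which status a client sees when more than one thing is wrong. The small C sketch below reduces those checks to a pure function. The `dev_*` names and the input struct are hypothetical. The version macro assumes the `HARDWARE_MAKE_API_VERSION()` encoding from libhardware's hardware.h, with the major number in bits 8..15 and the minor in bits 0..7. The getCameraInfo() and session-creation failures are left out.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed to match HARDWARE_MAKE_API_VERSION() in hardware/hardware.h. */
#define DEV_MAKE_API_VERSION(maj, min) ((((maj) & 0xff) << 8) | ((min) & 0xff))
#define DEV_DEVICE_API_VERSION_3_2 DEV_MAKE_API_VERSION(3, 2)

typedef enum {
    DEV_OK,
    DEV_ILLEGAL_ARGUMENT,
    DEV_CAMERA_IN_USE,
    DEV_CAMERA_DISCONNECTED,
} dev_status_t;

/* Hypothetical snapshot of the inputs CameraDevice::open() looks at. */
typedef struct {
    bool has_callback;     /* callback != nullptr */
    bool init_ok;          /* initStatus() == Status::OK */
    bool session_alive;    /* mSession.promote() != nullptr && !isClosed() */
    uint32_t hal_version;  /* device->common.version reported by the HAL */
} dev_open_inputs_t;

/* Returns the status of the first failing check, in the order open() uses. */
static dev_status_t dev_check_open(const dev_open_inputs_t *in) {
    if (!in->has_callback) return DEV_ILLEGAL_ARGUMENT;
    if (!in->init_ok) return DEV_CAMERA_DISCONNECTED;
    if (in->session_alive) return DEV_CAMERA_IN_USE;
    if (in->hal_version < DEV_DEVICE_API_VERSION_3_2) return DEV_ILLEGAL_ARGUMENT;
    return DEV_OK;
}
```

In the real function, the version check can only run after mModule->open() has produced a device. The sketch simply takes the reported version as an input.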

2.2.3.4 [CameraModule] CameraModule::open

@hardware/interfaces/camera/common/1.0/default/CameraModule.cpp

```cpp
int CameraModule::open(const char* id, struct hw_device_t** device) {
    int res;
    ATRACE_BEGIN("camera_module->open");
    res = filterOpenErrorCode(mModule->common.methods->open(&mModule->common, id, device));
    ATRACE_END();
    return res;
}
```
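`filterOpenErrorCode()` itself is not shown above. As I recall AOSP's CameraModule.cpp, it passes through the error codes the framework knows how to report and collapses everything else into `-ENODEV`. The exact pass-through list below (0, `-EBUSY`, `-EINVAL`, `-EUSERS`) is an assumption, so check it against your source tree.

```c
#include <errno.h>

/* Sketch of CameraModule::filterOpenErrorCode() (pass-through list assumed):
 * known errors are returned unchanged, anything else becomes -ENODEV. */
static int filter_open_error_code(int err) {
    switch (err) {
    case 0:        /* NO_ERROR */
    case -EBUSY:   /* camera already in use */
    case -EINVAL:  /* bad argument */
    case -EUSERS:  /* too many cameras open */
        return err;
    default:
        return -ENODEV;
    }
}
```

Collapsing unknown HAL errors into one code keeps the set of values that cameraserver must map to client-visible errors small.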

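Now look at the argument of filterOpenErrorCode(), namely `mModule->common.methods->open(&mModule->common, id, device)`.

Start with mModule. Inside CameraModule it is the raw camera_module_t pointer that is stored when the CameraModule is constructed. That raw module is loaded with hw_get_module() in LegacyCameraProviderImpl_2_4::initialize():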

```cpp
bool LegacyCameraProviderImpl_2_4::initialize() {
    camera_module_t *rawModule;
    int err = hw_get_module(CAMERA_HARDWARE_MODULE_ID,
            (const hw_module_t **)&rawModule);
    if (err < 0) {
        ALOGE("Could not load camera HAL module: %d (%s)", err, strerror(-err));
        return true;  // initialize() returns true on failure
    }

    // Wrap rawModule in a CameraModule; the constructor stores it as CameraModule::mModule
    mModule = new CameraModule(rawModule);
    err = mModule->init();
    if (err != OK) {
        ALOGE("Could not initialize camera HAL module: %d (%s)", err, strerror(-err));
        mModule.clear();
        return true;
    }
    ALOGI("Loaded \"%s\" camera module", mModule->getModuleName());

    // Setup vendor tags here so HAL can setup vendor keys in camera characteristics
    VendorTagDescriptor::clearGlobalVendorTagDescriptor();
    if (!setUpVendorTags()) {
        ALOGE("%s: Vendor tag setup failed, will not be available.", __FUNCTION__);
    }
    ......
}
```

2.2.3.4.1 [hardware] camera_module_t

@hardware/qcom/camera/QCamera2/QCamera2Hal.cpp

```c
static hw_module_t camera_common = {
    .tag                = HARDWARE_MODULE_TAG,
    .module_api_version = CAMERA_MODULE_API_VERSION_2_4,
    .hal_api_version    = HARDWARE_HAL_API_VERSION,
    .id                 = CAMERA_HARDWARE_MODULE_ID,
    .name               = "QCamera Module",
    .author             = "Qualcomm Innovation Center Inc",
    .methods            = &qcamera::QCamera2Factory::mModuleMethods,
    .dso                = NULL,
    .reserved           = {0}
};

camera_module_t HAL_MODULE_INFO_SYM = {
    .common                 = camera_common,
    .get_number_of_cameras  = qcamera::QCamera2Factory::get_number_of_cameras,
    .get_camera_info        = qcamera::QCamera2Factory::get_camera_info,
    .set_callbacks          = qcamera::QCamera2Factory::set_callbacks,
    .get_vendor_tag_ops     = qcamera::QCamera3VendorTags::get_vendor_tag_ops,
    .open_legacy            = (qcamera::QCameraCommon::needHAL1Support()) ?
                                  qcamera::QCamera2Factory::open_legacy : NULL,
    .set_torch_mode         = qcamera::QCamera2Factory::set_torch_mode,
    .init                   = NULL,
    .reserved               = {0}
};
```

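So the `open` in `mModule->common.methods->open(&mModule->common, id, device)` resolves to `camera_common.methods->open`. Because `camera_common.methods` points to `qcamera::QCamera2Factory::mModuleMethods`, this is `QCamera2Factory::camera_device_open`: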

```cpp
struct hw_module_methods_t QCamera2Factory::mModuleMethods = {
    .open = QCamera2Factory::camera_device_open,
};
```
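The whole chain `mModule->common.methods->open(&mModule->common, id, device)` is plain C function-pointer dispatch through static tables. The sketch below rebuilds it with cut-down, hypothetical structs (`mod_demo_*`). The real hw_module_t, hw_module_methods_t and camera_module_t carry many more fields, and the real open() fills in a `hw_device_t **`.

```c
#include <stdlib.h>

struct mod_demo_module;

/* Simplified, hypothetical mirrors of hw_device_t, hw_module_methods_t,
 * hw_module_t and camera_module_t. */
typedef struct { int camera_id; } mod_demo_device_t;

typedef struct {
    int (*open)(const struct mod_demo_module *module, const char *id,
                mod_demo_device_t **device);
} mod_demo_methods_t;

typedef struct mod_demo_module {
    const char *name;
    const mod_demo_methods_t *methods;
} mod_demo_module_t;

typedef struct {
    mod_demo_module_t common;  /* first member, like camera_module_t::common */
} mod_demo_camera_module_t;

static mod_demo_device_t g_mod_demo_device;

/* Plays the role of QCamera2Factory::camera_device_open(). */
static int mod_demo_device_open(const struct mod_demo_module *module, const char *id,
                                mod_demo_device_t **device) {
    (void)module;
    if (id == NULL) return -1;  /* reject a missing camera id */
    g_mod_demo_device.camera_id = atoi(id);
    *device = &g_mod_demo_device;
    return 0;
}

/* Plays the role of QCamera2Factory::mModuleMethods. */
static const mod_demo_methods_t mod_demo_methods = { .open = mod_demo_device_open };

/* Plays the role of HAL_MODULE_INFO_SYM and its camera_common member. */
static mod_demo_camera_module_t mod_demo_info = {
    .common = { .name = "Demo Camera Module", .methods = &mod_demo_methods },
};

/* Same call shape as CameraModule::open(). */
static int mod_demo_open(mod_demo_camera_module_t *m, const char *id,
                         mod_demo_device_t **device) {
    return m->common.methods->open(&m->common, id, device);
}
```

Because `common` is the first member, a `camera_module_t *` and a pointer to its `common` field share the same address. That is why the `hw_module_t *` returned by hw_get_module() can be cast to `camera_module_t *`.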

2.2.3.5 [Hardware] Opening the device: QCamera2Factory::cameraDeviceOpen()

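@ hardware/qcom/camera/QCamera2/QCamera2Factory.cpp

camera_device_open() validates its module and id arguments, then forwards the call to cameraDeviceOpen(atoi(id), hw_device) on the factory instance: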

```cpp
int QCamera2Factory::cameraDeviceOpen(int camera_id, struct hw_device_t **hw_device)
{
    int rc = NO_ERROR;
    LOGI("Open camera id %d API version %d", camera_id, mHalDescriptors[camera_id].device_version);

    if (mHalDescriptors[camera_id].device_version == CAMERA_DEVICE_API_VERSION_3_0) {
        CAMSCOPE_INIT(CAMSCOPE_SECTION_HAL);
        QCamera3HardwareInterface *hw = new QCamera3HardwareInterface(mHalDescriptors[camera_id].cameraId, mCallbacks);
        rc = hw->openCamera(hw_device);
    }
#ifdef QCAMERA_HAL1_SUPPORT
    else if (mHalDescriptors[camera_id].device_version == CAMERA_DEVICE_API_VERSION_1_0) {
        QCamera2HardwareInterface *hw = new QCamera2HardwareInterface((uint32_t)camera_id);
        rc = hw->openCamera(hw_device);
    }
#endif
    return rc;
}
```

The QCamera3HardwareInterface constructor (optional reading; it can be skipped) @ hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp

```cpp
QCamera3HardwareInterface::QCamera3HardwareInterface(uint32_t cameraId, const camera_module_callbacks_t *callbacks)
    : mCameraId(cameraId),
      mCameraHandle(NULL),
      mCameraInitialized(false),
      mCallbacks(callbacks),
      ......  // a series of default member initializations omitted
{
    // 1. Read the HAL log level
    getLogLevel();
    // getLogLevel() reads these properties:
    //   property_get("persist.camera.hal.debug", prop, "0");
    //   property_get("persist.camera.kpi.debug", prop, "0");
    //   property_get("persist.camera.global.debug", prop, "0");

    mCommon.init(gCamCapability[cameraId]);
    mCameraDevice.common.tag = HARDWARE_DEVICE_TAG;
    mCameraDevice.common.version = CAMERA_DEVICE_API_VERSION_3_4;
    mCameraDevice.common.close = close_camera_device;
    mCameraDevice.ops = &mCameraOps;  // mCameraOps is defined in hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp
    mCameraDevice.priv = this;
    gCamCapability[cameraId]->version = CAM_HAL_V3;
    // TODO: hardcode for now until mctl add support for min_num_pp_bufs
    //TBD - To see if this hardcoding is needed. Check by printing if this is filled by mctl to 3
    gCamCapability[cameraId]->min_num_pp_bufs = 3;

    pthread_condattr_t mCondAttr;
    pthread_condattr_init(&mCondAttr);
    pthread_condattr_setclock(&mCondAttr, CLOCK_MONOTONIC);
    pthread_cond_init(&mBuffersCond, &mCondAttr);
    pthread_cond_init(&mRequestCond, &mCondAttr);
    pthread_cond_init(&mHdrRequestCond, &mCondAttr);
    pthread_condattr_destroy(&mCondAttr);

    mPendingLiveRequest = 0;
    mCurrentRequestId = -1;
    pthread_mutex_init(&mMutex, NULL);

    for (size_t i = 0; i < CAMERA3_TEMPLATE_COUNT; i++)
        mDefaultMetadata[i] = NULL;

    // Getting system props of different kinds
    char prop[PROPERTY_VALUE_MAX];
    memset(prop, 0, sizeof(prop));
    // persist.camera.raw.dump: whether RAW dump is enabled
    property_get("persist.camera.raw.dump", prop, "0");
    mEnableRawDump = atoi(prop);
    // persist.camera.hal3.force.hdr: whether HDR snapshots are forced on
    property_get("persist.camera.hal3.force.hdr", prop, "0");
    mForceHdrSnapshot = atoi(prop);
    if (mEnableRawDump)
        LOGD("Raw dump from Camera HAL enabled");

    memset(&mInputStreamInfo, 0, sizeof(mInputStreamInfo));
    memset(mLdafCalib, 0, sizeof(mLdafCalib));

    memset(prop, 0, sizeof(prop));
    property_get("persist.camera.tnr.preview", prop, "0");
    m_bTnrPreview = (uint8_t)atoi(prop);

    memset(prop, 0, sizeof(prop));
    property_get("persist.camera.swtnr.preview", prop, "1");
    m_bSwTnrPreview = (uint8_t)atoi(prop);

    memset(prop, 0, sizeof(prop));
    property_get("persist.camera.tnr.video", prop, "0");
    m_bTnrVideo = (uint8_t)atoi(prop);

    memset(prop, 0, sizeof(prop));
    property_get("persist.camera.avtimer.debug", prop, "0");
    m_debug_avtimer = (uint8_t)atoi(prop);
    LOGI("AV timer enabled: %d", m_debug_avtimer);

    memset(prop, 0, sizeof(prop));
    property_get("persist.camera.cacmode.disable", prop, "0");
    m_cacModeDisabled = (uint8_t)atoi(prop);

    mRdiModeFmt = gCamCapability[mCameraId]->rdi_mode_stream_fmt;

    //Load and read GPU library.
    lib_surface_utils = NULL;
    LINK_get_surface_pixel_alignment = NULL;
    mSurfaceStridePadding = CAM_PAD_TO_32;
    // Open libadreno_utils.so
    lib_surface_utils = dlopen("libadreno_utils.so", RTLD_NOW);
    if (lib_surface_utils) {
        *(void **)&LINK_get_surface_pixel_alignment =
                dlsym(lib_surface_utils, "get_gpu_pixel_alignment");
        if (LINK_get_surface_pixel_alignment) {
            mSurfaceStridePadding = LINK_get_surface_pixel_alignment();
        }
        dlclose(lib_surface_utils);
    }

    if (gCamCapability[cameraId]->is_quadracfa_sensor) {
        LOGI("Sensor support Quadra CFA mode");
        m_bQuadraCfaSensor = true;
    }
    m_bQuadraCfaRequest = false;
    m_bQuadraSizeConfigured = false;
    memset(&mStreamList, 0, sizeof(camera3_stream_configuration_t));
}
```
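The constructor binds mBuffersCond, mRequestCond and mHdrRequestCond to CLOCK_MONOTONIC. As a result, pthread_cond_timedwait() deadlines on these conditions are measured on a clock that does not jump when the wall-clock time is changed. Below is a minimal sketch of that setup plus a matching timed wait. `mono_waiter_t` and its helpers are hypothetical names, not HAL code. The one requirement the sketch shows is that the deadline must be computed on the same clock the condattr selected.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <stdbool.h>
#include <time.h>

typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t cond;
    bool ready;
} mono_waiter_t;

/* Condition variable whose timed waits use CLOCK_MONOTONIC, as in the constructor. */
static int mono_waiter_init(mono_waiter_t *w) {
    pthread_condattr_t attr;
    int rc = pthread_condattr_init(&attr);
    if (rc != 0) return rc;
    rc = pthread_condattr_setclock(&attr, CLOCK_MONOTONIC);
    if (rc == 0) rc = pthread_cond_init(&w->cond, &attr);
    pthread_condattr_destroy(&attr);
    if (rc != 0) return rc;
    w->ready = false;
    return pthread_mutex_init(&w->lock, NULL);
}

/* Waits up to timeout_ms for `ready`. Returns 0, or ETIMEDOUT if it never came. */
static int mono_waiter_wait(mono_waiter_t *w, long timeout_ms) {
    struct timespec deadline;
    clock_gettime(CLOCK_MONOTONIC, &deadline);  /* same clock as the condattr */
    deadline.tv_sec += timeout_ms / 1000;
    deadline.tv_nsec += (timeout_ms % 1000) * 1000000L;
    if (deadline.tv_nsec >= 1000000000L) {
        deadline.tv_sec += 1;
        deadline.tv_nsec -= 1000000000L;
    }
    int rc = 0;
    pthread_mutex_lock(&w->lock);
    while (!w->ready && rc == 0) {  /* loop also absorbs spurious wakeups */
        rc = pthread_cond_timedwait(&w->cond, &w->lock, &deadline);
    }
    int result = w->ready ? 0 : rc;
    pthread_mutex_unlock(&w->lock);
    return result;
}

static void mono_waiter_signal(mono_waiter_t *w) {
    pthread_mutex_lock(&w->lock);
    w->ready = true;
    pthread_cond_signal(&w->cond);
    pthread_mutex_unlock(&w->lock);
}
```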

Next, hw->openCamera(hw_device) is called @ hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp

```cpp
int QCamera3HardwareInterface::openCamera(struct hw_device_t **hw_device)
{
    ......
    if (mState != CLOSED) {
        *hw_device = NULL;
        return PERMISSION_DENIED;
    }

    mPerfLockMgr.acquirePerfLock(PERF_LOCK_OPEN_CAMERA);
    LOGI("[KPI Perf]: E PROFILE_OPEN_CAMERA camera id %d",mCameraId);

    rc = openCamera();
    if (rc == 0) {
        *hw_device = &mCameraDevice.common;
    } else {
        *hw_device = NULL;
    }

    LOGI("[KPI Perf]: X PROFILE_OPEN_CAMERA camera id %d, rc: %d", mCameraId, rc);
    if (rc == NO_ERROR) {
        mState = OPENED;
    }
    return rc;
}
```
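openCamera(hw_device) and initialize() together form a small state machine on mState. Only a CLOSED camera can be opened, a successful open moves it to OPENED, and initialize() then moves it to INITIALIZED. Below is a hypothetical C sketch of those transitions; the `hwi_*` names and error values are made up. The backend open is reduced to a boolean. The OPENED check in `hwi_initialize()` is an assumption, because that part of initialize() is not in the excerpt.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical mirror of the mState values used in this flow. */
typedef enum { HWI_CLOSED, HWI_OPENED, HWI_INITIALIZED } hwi_state_t;

typedef struct {
    hwi_state_t state;
    bool backend_ok;  /* stands in for the result of openCamera() (no arguments) */
} hwi_t;

enum { HWI_NO_ERROR = 0, HWI_PERMISSION_DENIED = -1, HWI_BACKEND_ERROR = -2 };

static const int hwi_common_device = 0;  /* stands in for mCameraDevice.common */

static int hwi_open_camera(hwi_t *hwi, const void **hw_device) {
    if (hwi->state != HWI_CLOSED) {  /* only a closed camera may be opened */
        *hw_device = NULL;
        return HWI_PERMISSION_DENIED;
    }
    int rc = hwi->backend_ok ? HWI_NO_ERROR : HWI_BACKEND_ERROR;
    *hw_device = (rc == HWI_NO_ERROR) ? (const void *)&hwi_common_device : NULL;
    if (rc == HWI_NO_ERROR) hwi->state = HWI_OPENED;  /* CLOSED -> OPENED */
    return rc;
}

static int hwi_initialize(hwi_t *hwi) {
    if (hwi->state != HWI_OPENED) return HWI_PERMISSION_DENIED;  /* assumed check */
    hwi->state = HWI_INITIALIZED;  /* OPENED -> INITIALIZED */
    return HWI_NO_ERROR;
}
```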

This in turn calls int QCamera3HardwareInterface::openCamera() @ hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp

```cpp
int QCamera3HardwareInterface::openCamera()
{
    int rc = 0;
    KPI_ATRACE_CAMSCOPE_CALL(CAMSCOPE_HAL3_OPENCAMERA);
    if (mCameraHandle) {
        LOGE("Failure: Camera already opened");
        return ALREADY_EXISTS;
    }

    rc = QCameraFlash::getInstance().reserveFlashForCamera(mCameraId);
    if (rc < 0) {
        LOGE("Failed to reserve flash for camera id: %d",
                mCameraId);
        return UNKNOWN_ERROR;
    }

    rc = camera_open((uint8_t)mCameraId, &mCameraHandle);
    rc = mCameraHandle->ops->register_event_notify(mCameraHandle->camera_handle, camEvtHandle, (void *)this);

    mExifParams.debug_params = (mm_jpeg_debug_exif_params_t *) malloc (sizeof(mm_jpeg_debug_exif_params_t));
    if (mExifParams.debug_params) {
        memset(mExifParams.debug_params, 0, sizeof(mm_jpeg_debug_exif_params_t));
    } else {
        LOGE("Out of Memory. Allocation failed for 3A debug exif params");
        return NO_MEMORY;
    }
    mFirstConfiguration = true;
    ......
}
```

2.2.3.5.1 camera_open((uint8_t)mCameraId, &mCameraHandle)

After the camera is opened successfully, the hardware layer keeps all of the camera's state in g_cam_ctrl.cam_obj[cam_idx] and returns the camera's operation table (ops) to the caller.

@ hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_interface.c

```c
int32_t camera_open(uint8_t camera_idx, mm_camera_vtbl_t **camera_vtbl)
{
    int32_t rc = 0;
    mm_camera_obj_t *cam_obj = NULL;
    uint32_t cam_idx = camera_idx;
    uint32_t aux_idx = 0;
    uint8_t is_multi_camera = 0;

#ifdef QCAMERA_REDEFINE_LOG
    mm_camera_debug_open();
#endif

    LOGD("E camera_idx = %d\n", camera_idx);
    // Check whether this is a dual camera
    if (is_dual_camera_by_idx(camera_idx)) {
        is_multi_camera = 1;
        cam_idx = mm_camera_util_get_handle_by_num(0,g_cam_ctrl.cam_index[camera_idx]);
        aux_idx = (get_aux_camera_handle(g_cam_ctrl.cam_index[camera_idx]) >> MM_CAMERA_HANDLE_SHIFT_MASK);
        LOGH("Dual Camera: Main ID = %d Aux ID = %d", cam_idx, aux_idx);
    }

    pthread_mutex_lock(&g_intf_lock);
    /* opened already */
    if(NULL != g_cam_ctrl.cam_obj[cam_idx] && g_cam_ctrl.cam_obj[cam_idx]->ref_count != 0) {
        pthread_mutex_unlock(&g_intf_lock);
        LOGE("Camera %d is already open", cam_idx);
        return -EBUSY;
    }

    cam_obj = (mm_camera_obj_t *)malloc(sizeof(mm_camera_obj_t));
    /* Initialize cam_obj */
    memset(cam_obj, 0, sizeof(mm_camera_obj_t));
    cam_obj->ctrl_fd = -1;
    cam_obj->ds_fd = -1;
    cam_obj->ref_count++;
    cam_obj->my_num = 0;
    cam_obj->my_hdl = mm_camera_util_generate_handler(cam_idx);
    cam_obj->vtbl.camera_handle = cam_obj->my_hdl; /* set handler */
    cam_obj->vtbl.ops = &mm_camera_ops;
    pthread_mutex_init(&cam_obj->cam_lock, NULL);
    pthread_mutex_init(&cam_obj->muxer_lock, NULL);
    /* unlock global interface lock, if not, in dual camera use case,
     * current open will block operation of another opened camera obj*/
    pthread_mutex_lock(&cam_obj->cam_lock);
    pthread_mutex_unlock(&g_intf_lock);

    // The analysis continues here: mm_camera_open()
    rc = mm_camera_open(cam_obj);

    if (is_multi_camera) {
        /* Open the aux camera */
        pthread_mutex_lock(&g_intf_lock);
        if(NULL != g_cam_ctrl.cam_obj[aux_idx] &&
                g_cam_ctrl.cam_obj[aux_idx]->ref_count != 0) {
            pthread_mutex_unlock(&g_intf_lock);
            LOGE("Camera %d is already open", aux_idx);
            rc = -EBUSY;
        } else {
            pthread_mutex_lock(&cam_obj->muxer_lock);
            pthread_mutex_unlock(&g_intf_lock);
            rc = mm_camera_muxer_camera_open(aux_idx, cam_obj);
        }
    }

    LOGH("Open succeded: handle = %d", cam_obj->vtbl.camera_handle);
    g_cam_ctrl.cam_obj[cam_idx] = cam_obj;
    *camera_vtbl = &cam_obj->vtbl;
    return 0;
}
```
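camera_open() uses a global table indexed by camera, a reference count and a global lock to reject a second open of the same camera with `-EBUSY`. Below is a simplified C sketch of that registry; all `reg_*` names are hypothetical. It differs from the excerpt in one deliberate way. The sketch publishes the new object into the table before dropping the global lock, so a concurrent open of the same index sees it immediately. In the excerpt, `g_cam_ctrl.cam_obj[cam_idx]` is only filled after mm_camera_open() returns.

```c
#include <errno.h>
#include <pthread.h>
#include <stdlib.h>

#define REG_MAX_CAMERAS 4

typedef struct {
    int ref_count;
    pthread_mutex_t cam_lock;
} reg_cam_obj_t;

/* Like g_cam_ctrl.cam_obj[], guarded by a global lock like g_intf_lock. */
static reg_cam_obj_t *g_reg_cam_obj[REG_MAX_CAMERAS];
static pthread_mutex_t g_reg_intf_lock = PTHREAD_MUTEX_INITIALIZER;

static int reg_camera_open(unsigned idx, reg_cam_obj_t **out) {
    if (idx >= REG_MAX_CAMERAS) return -EINVAL;
    pthread_mutex_lock(&g_reg_intf_lock);
    if (g_reg_cam_obj[idx] != NULL && g_reg_cam_obj[idx]->ref_count != 0) {
        pthread_mutex_unlock(&g_reg_intf_lock);
        return -EBUSY;  /* opened already */
    }
    reg_cam_obj_t *obj = calloc(1, sizeof(*obj));
    if (obj == NULL) {
        pthread_mutex_unlock(&g_reg_intf_lock);
        return -ENOMEM;
    }
    obj->ref_count = 1;
    pthread_mutex_init(&obj->cam_lock, NULL);
    g_reg_cam_obj[idx] = obj;  /* publish while still holding the global lock */
    pthread_mutex_unlock(&g_reg_intf_lock);
    *out = obj;
    return 0;
}

static void reg_camera_close(unsigned idx) {
    if (idx >= REG_MAX_CAMERAS) return;
    pthread_mutex_lock(&g_reg_intf_lock);
    reg_cam_obj_t *obj = g_reg_cam_obj[idx];
    if (obj != NULL && --obj->ref_count == 0) {  /* last user frees the slot */
        g_reg_cam_obj[idx] = NULL;
        pthread_mutex_destroy(&obj->cam_lock);
        free(obj);
    }
    pthread_mutex_unlock(&g_reg_intf_lock);
}
```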

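Continuing the analysis:

We have finally reached the lowest-level user-space implementation. mm_camera_open() mainly fills in my_obj and launches and initializes some thread-related machinery; I skip the thread details here.

It opens the corresponding /dev/videoN node and obtains the session ID. It then either creates the related domain socket (when DAEMON_PRESENT is defined) or opens a session through the shim layer (otherwise). All of this information is stored in the mm_camera_obj_t *cam_obj structure.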

@ hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera.c

```c
int32_t mm_camera_open(mm_camera_obj_t *my_obj)
{
    char dev_name[MM_CAMERA_DEV_NAME_LEN];
    int32_t rc = 0;
    int8_t n_try=MM_CAMERA_DEV_OPEN_TRIES;
    uint8_t sleep_msec=MM_CAMERA_DEV_OPEN_RETRY_SLEEP;
    int cam_idx = 0;
    const char *dev_name_value = NULL;
    int l_errno = 0;
    pthread_condattr_t cond_attr;

    LOGD("begin\n");
    dev_name_value = mm_camera_util_get_dev_name_by_num(my_obj->my_num, my_obj->my_hdl);
    snprintf(dev_name, sizeof(dev_name), "/dev/%s",dev_name_value);
    sscanf(dev_name, "/dev/video%d", &cam_idx);
    LOGD("dev name = %s, cam_idx = %d", dev_name, cam_idx);

    do{
        n_try--;
        errno = 0;
        my_obj->ctrl_fd = open(dev_name, O_RDWR | O_NONBLOCK);
        l_errno = errno;
        LOGD("ctrl_fd = %d, errno == %d", my_obj->ctrl_fd, l_errno);
        if((my_obj->ctrl_fd >= 0) || (errno != EIO && errno != ETIMEDOUT) || (n_try <= 0 )) {
            break;
        }
        LOGE("Failed with %s error, retrying after %d milli-seconds", strerror(errno), sleep_msec);
        usleep(sleep_msec * 1000U);
    }while (n_try > 0);

    mm_camera_get_session_id(my_obj, &my_obj->sessionid);
    LOGH("Camera Opened id = %d sessionid = %d", cam_idx, my_obj->sessionid);

#ifdef DAEMON_PRESENT
    /* open domain socket*/
    n_try = MM_CAMERA_DEV_OPEN_TRIES;
    do {
        n_try--;
        my_obj->ds_fd = mm_camera_socket_create(cam_idx, MM_CAMERA_SOCK_TYPE_UDP);
        l_errno = errno;
        LOGD("ds_fd = %d, errno = %d", my_obj->ds_fd, l_errno);
        if((my_obj->ds_fd >= 0) || (n_try <= 0 )) {
            LOGD("opened, break out while loop");
            break;
        }
        LOGD("failed with I/O error retrying after %d milli-seconds", sleep_msec);
        usleep(sleep_msec * 1000U);
    } while (n_try > 0);
#else /* DAEMON_PRESENT */
    cam_status_t cam_status;
    cam_status = mm_camera_module_open_session(my_obj->sessionid,mm_camera_module_event_handler);
#endif /* DAEMON_PRESENT */

    pthread_condattr_init(&cond_attr);
    pthread_condattr_setclock(&cond_attr, CLOCK_MONOTONIC);
    pthread_mutex_init(&my_obj->msg_lock, NULL);
    pthread_mutex_init(&my_obj->cb_lock, NULL);
    pthread_mutex_init(&my_obj->evt_lock, NULL);
    pthread_cond_init(&my_obj->evt_cond, &cond_attr);
    pthread_condattr_destroy(&cond_attr);

    LOGD("Launch evt Thread in Cam Open");
    snprintf(my_obj->evt_thread.threadName, THREAD_NAME_SIZE, "CAM_Dispatch");
    mm_camera_cmd_thread_launch(&my_obj->evt_thread, mm_camera_dispatch_app_event, (void *)my_obj);

    /* launch event poll thread
     * we will add evt fd into event poll thread upon user first register for evt */
    LOGD("Launch evt Poll Thread in Cam Open");
    snprintf(my_obj->evt_poll_thread.threadName, THREAD_NAME_SIZE, "CAM_evntPoll");
    mm_camera_poll_thread_launch(&my_obj->evt_poll_thread, MM_CAMERA_POLL_TYPE_EVT);
    mm_camera_evt_sub(my_obj, TRUE);

    /* unlock cam_lock, we need release global intf_lock in camera_open(),
     * in order not block operation of other Camera in dual camera use case.*/
    pthread_mutex_unlock(&my_obj->cam_lock);
    LOGD("end (rc = %d)\n", rc);
    return rc;
}
```
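The ctrl_fd loop retries only on `EIO` and `ETIMEDOUT`. A video node that is not ready yet is treated as transient, while errors such as `EACCES` fail immediately. The C sketch below implements the same loop, with the open call injected through a function pointer so it can run without a real /dev/videoN. `open_with_retry`, `fake_dev_t` and `fake_open` are hypothetical names.

```c
#include <errno.h>
#include <unistd.h>

/* In mm_camera_open() this is open(dev_name, O_RDWR | O_NONBLOCK). */
typedef int (*open_fn)(void *ctx);

/* Same shape as the ctrl_fd do/while: at least one attempt, retry only on
 * EIO/ETIMEDOUT, sleep between attempts. Returns the fd or -1. */
static int open_with_retry(open_fn fn, void *ctx, int tries, unsigned sleep_msec) {
    int fd;
    do {
        tries--;
        errno = 0;
        fd = fn(ctx);
        if (fd >= 0 || (errno != EIO && errno != ETIMEDOUT) || tries <= 0) {
            break;
        }
        usleep(sleep_msec * 1000U);
    } while (tries > 0);
    return fd;
}

/* Test double: fails with `err` for the first `fail_times` calls. */
typedef struct { int calls; int fail_times; int err; } fake_dev_t;

static int fake_open(void *ctx) {
    fake_dev_t *d = ctx;
    d->calls++;
    if (d->calls <= d->fail_times) {
        errno = d->err;
        return -1;
    }
    return 3;  /* pretend file descriptor */
}
```

For example, a node that returns `EIO` twice is opened on the third attempt, while an `EACCES` failure returns after a single call.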

2.2.3.5.2 The camera operations structure cam_obj->vtbl.ops

@ hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_interface.c

```c
static mm_camera_ops_t mm_camera_ops = {
    .query_capability = mm_camera_intf_query_capability,
    .register_event_notify = mm_camera_intf_register_event_notify,
    .close_camera = mm_camera_intf_close,
    .set_parms = mm_camera_intf_set_parms,
    .get_parms = mm_camera_intf_get_parms,
    .do_auto_focus = mm_camera_intf_do_auto_focus,
    .cancel_auto_focus = mm_camera_intf_cancel_auto_focus,
    .prepare_snapshot = mm_camera_intf_prepare_snapshot,
    .start_zsl_snapshot = mm_camera_intf_start_zsl_snapshot,
    .stop_zsl_snapshot = mm_camera_intf_stop_zsl_snapshot,
    .map_buf = mm_camera_intf_map_buf,
    .map_bufs = mm_camera_intf_map_bufs,
    .unmap_buf = mm_camera_intf_unmap_buf,
    .add_channel = mm_camera_intf_add_channel,
    .delete_channel = mm_camera_intf_del_channel,
    .get_bundle_info = mm_camera_intf_get_bundle_info,
    .add_stream = mm_camera_intf_add_stream,
    .link_stream = mm_camera_intf_link_stream,
    .delete_stream = mm_camera_intf_del_stream,
    .config_stream = mm_camera_intf_config_stream,
    .qbuf = mm_camera_intf_qbuf,
    .cancel_buffer = mm_camera_intf_cancel_buf,
    .get_queued_buf_count = mm_camera_intf_get_queued_buf_count,
    .map_stream_buf = mm_camera_intf_map_stream_buf,
    .map_stream_bufs = mm_camera_intf_map_stream_bufs,
    .unmap_stream_buf = mm_camera_intf_unmap_stream_buf,
    .set_stream_parms = mm_camera_intf_set_stream_parms,
    .get_stream_parms = mm_camera_intf_get_stream_parms,
    .start_channel = mm_camera_intf_start_channel,
    .stop_channel = mm_camera_intf_stop_channel,
    .request_super_buf = mm_camera_intf_request_super_buf,
    .cancel_super_buf_request = mm_camera_intf_cancel_super_buf_request,
    .flush_super_buf_queue = mm_camera_intf_flush_super_buf_queue,
    .configure_notify_mode = mm_camera_intf_configure_notify_mode,
    .process_advanced_capture = mm_camera_intf_process_advanced_capture,
    .get_session_id = mm_camera_intf_get_session_id,
    .set_dual_cam_cmd = mm_camera_intf_set_dual_cam_cmd,
    .flush = mm_camera_intf_flush,
    .register_stream_buf_cb = mm_camera_intf_register_stream_buf_cb,
    .register_frame_sync = mm_camera_intf_reg_frame_sync,
    .handle_frame_sync_cb = mm_camera_intf_handle_frame_sync_cb
};
```

2.2.3.5.3 [Hardware] Initializing the Frameworks-layer callbacks: QCamera3HardwareInterface::initialize( )

@ hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp

```cpp
/*===========================================================================
 * FUNCTION   : initialize
 * DESCRIPTION: Initialize frameworks callback functions
 * @callback_ops : callback function to frameworks
 *==========================================================================*/
int QCamera3HardwareInterface::initialize(const struct camera3_callback_ops *callback_ops)
{
    int rc;
    ATRACE_CAMSCOPE_CALL(CAMSCOPE_HAL3_INIT);
    LOGI("E :mCameraId = %d mState = %d", mCameraId, mState);

    rc = initParameters();
    mCallbackOps = callback_ops;
    // This callback is passed down from
    // frameworks/av/services/camera/libcameraservice/CameraService.cpp,
    // which contains (CameraService code, not part of this function):
    //   if (mModule->getModuleApiVersion() >= CAMERA_MODULE_API_VERSION_2_1) {
    //       mModule->setCallbacks(this);
    //   }

    mChannelHandle = mCameraHandle->ops->add_channel( mCameraHandle->camera_handle, NULL, NULL, this);
    mCameraInitialized = true;
    mState = INITIALIZED;
    LOGI("X");
    return 0;
}
```

2.2.4 EventHandler( )

EventHandler dispatches each event to the matching callback according to its message type.

@ frameworks/base/core/java/android/hardware/Camera.java

```java
private class EventHandler extends Handler
{
    private final Camera mCamera;

    public EventHandler(Camera c, Looper looper) {
        super(looper);
        mCamera = c;
    }

    @Override
    public void handleMessage(Message msg) {
        switch(msg.what) {
        case CAMERA_MSG_SHUTTER:
            if (mShutterCallback != null) {
                mShutterCallback.onShutter();
            }
            return;

        case CAMERA_MSG_RAW_IMAGE:
            if (mRawImageCallback != null) {
                mRawImageCallback.onPictureTaken((byte[])msg.obj, mCamera);
            }
            return;

        case CAMERA_MSG_COMPRESSED_IMAGE:
            if (mJpegCallback != null) {
                mJpegCallback.onPictureTaken((byte[])msg.obj, mCamera);
            }
            return;

        case CAMERA_MSG_PREVIEW_FRAME:
            PreviewCallback pCb = mPreviewCallback;
            if (pCb != null) {
                if (mOneShot) {
                    // Clear the callback variable before the callback
                    // in case the app calls setPreviewCallback from
                    // the callback function
                    mPreviewCallback = null;
                } else if (!mWithBuffer) {
                    // We're faking the camera preview mode to prevent
                    // the app from being flooded with preview frames.
                    // Set to oneshot mode again.
                    setHasPreviewCallback(true, false);
                }
                pCb.onPreviewFrame((byte[])msg.obj, mCamera);
            }
            return;

        case CAMERA_MSG_POSTVIEW_FRAME:
            if (mPostviewCallback != null) {
                mPostviewCallback.onPictureTaken((byte[])msg.obj, mCamera);
            }
            return;

        case CAMERA_MSG_FOCUS:
            AutoFocusCallback cb = null;
            synchronized (mAutoFocusCallbackLock) {
                cb = mAutoFocusCallback;
            }
            if (cb != null) {
                boolean success = msg.arg1 == 0 ? false : true;
                cb.onAutoFocus(success, mCamera);
            }
            return;

        case CAMERA_MSG_ZOOM:
            if (mZoomListener != null) {
                mZoomListener.onZoomChange(msg.arg1, msg.arg2 != 0, mCamera);
            }
            return;

        case CAMERA_MSG_PREVIEW_METADATA:
            if (mFaceListener != null) {
                mFaceListener.onFaceDetection((Face[])msg.obj, mCamera);
            }
            return;

        case CAMERA_MSG_ERROR :
            Log.e(TAG, "Error " + msg.arg1);
            if (mErrorCallback != null) {
                mErrorCallback.onError(msg.arg1, mCamera);
            }
            return;

        case CAMERA_MSG_FOCUS_MOVE:
            if (mAutoFocusMoveCallback != null) {
                mAutoFocusMoveCallback.onAutoFocusMoving(msg.arg1 == 0 ? false : true, mCamera);
            }
            return;

        /* ### QC ADD-ONS: START */
        case CAMERA_MSG_STATS_DATA:
            int statsdata[] = new int[257];
            for(int i =0; i<257; i++ ) {
                statsdata[i] = byteToInt( (byte[])msg.obj, i*4);
            }
            if (mCameraDataCallback != null) {
                mCameraDataCallback.onCameraData(statsdata, mCamera);
            }
            return;

        case CAMERA_MSG_META_DATA:
            if (mCameraMetaDataCallback != null) {
                mCameraMetaDataCallback.onCameraMetaData((byte[])msg.obj, mCamera);
            }
            return;
        /* ### QC ADD-ONS: END */

        default:
            Log.e(TAG, "Unknown message type " + msg.what);
            return;
        }
    }
}
```
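EventHandler is essentially a switch from `msg.what` to an app-registered listener, and it skips listeners that are null. Below is a small C sketch of that dispatch idea. It does not reproduce `android.os.Handler`, and the message ids, the `evt_*` names and the callback struct are hypothetical, not the real `CAMERA_MSG_*` values.

```c
#include <stddef.h>

/* Hypothetical message ids; the real CAMERA_MSG_* constants differ. */
enum { EVT_MSG_SHUTTER = 1, EVT_MSG_FOCUS = 2, EVT_MSG_ERROR = 3 };

typedef struct { int what; int arg1; } evt_msg_t;

/* The app-registered listeners; any of them may be NULL. */
typedef struct {
    void (*on_shutter)(void *user);
    void (*on_auto_focus)(int success, void *user);
    void (*on_error)(int error, void *user);
    void *user;
} evt_callbacks_t;

/* Mirrors handleMessage(): pick the listener for msg->what and skip it when it
 * is not registered. Returns 1 if a listener ran, 0 otherwise. */
static int evt_handle_message(const evt_callbacks_t *cb, const evt_msg_t *msg) {
    switch (msg->what) {
    case EVT_MSG_SHUTTER:
        if (cb->on_shutter == NULL) return 0;
        cb->on_shutter(cb->user);
        return 1;
    case EVT_MSG_FOCUS:
        if (cb->on_auto_focus == NULL) return 0;
        cb->on_auto_focus(msg->arg1 != 0, cb->user);  /* arg1 != 0 means success */
        return 1;
    case EVT_MSG_ERROR:
        if (cb->on_error == NULL) return 0;
        cb->on_error(msg->arg1, cb->user);
        return 1;
    default:
        return 0;  /* corresponds to the "Unknown message type" branch */
    }
}

/* Example listeners that record what they received into an int[2]. */
static void evt_record_focus(int success, void *user) { ((int *)user)[0] = success ? 1 : -1; }
static void evt_record_error(int error, void *user) { ((int *)user)[1] = error; }
```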

2.3 Kernel layer: msm_open

The call my_obj->ctrl_fd = open(dev_name, O_RDWR | O_NONBLOCK) shown above enters the kernel here: @kernel/msm-4.14/drivers/media/platform/msm/camera_v2/msm.c

```c
static int msm_open(struct file *filep)
{
    int rc = -1;
    unsigned long flags;
    struct msm_video_device *pvdev = video_drvdata(filep);

    DEBUG("enter");
    if (WARN_ON(!pvdev))
        return rc;

    /* !!! only ONE open is allowed !!! */
    if (atomic_cmpxchg(&pvdev->opened, 0, 1))
        return -EBUSY;

    spin_lock_irqsave(&msm_pid_lock, flags);
    msm_pid = get_pid(task_pid(current));
    spin_unlock_irqrestore(&msm_pid_lock, flags);

    /* create event queue */
    rc = v4l2_fh_open(filep);
    if (rc < 0)
        return rc;

    spin_lock_irqsave(&msm_eventq_lock, flags);
    msm_eventq = filep->private_data;
    spin_unlock_irqrestore(&msm_eventq_lock, flags);

    /* register msm_v4l2_pm_qos_request */
    msm_pm_qos_add_request();
    return rc;
}
```
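`atomic_cmpxchg(&pvdev->opened, 0, 1)` lets exactly one caller flip the flag from 0 to 1. A second open() of the same node therefore fails with `-EBUSY` without taking a lock. Below is a user-space C11 sketch of the same guard. `demo_opened`, `demo_msm_open()` and `demo_msm_close()` are hypothetical. The assumption that the release path resets the flag is mine, since msm_close() is not shown in this article.

```c
#include <errno.h>
#include <stdatomic.h>

/* Stand-in for pvdev->opened in struct msm_video_device. */
static atomic_int demo_opened = 0;

/* Equivalent of `if (atomic_cmpxchg(&opened, 0, 1)) return -EBUSY;`:
 * only the caller that observes 0 and stores 1 wins. */
static int demo_msm_open(void) {
    int expected = 0;
    if (!atomic_compare_exchange_strong(&demo_opened, &expected, 1))
        return -EBUSY;
    return 0;
}

/* Assumed release path: clear the flag so the node can be opened again. */
static void demo_msm_close(void) {
    atomic_store(&demo_opened, 0);
}
```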

The rest of the kernel flow is not covered here.

This completes the whole camera open flow.

3 OpenSession flow analysis

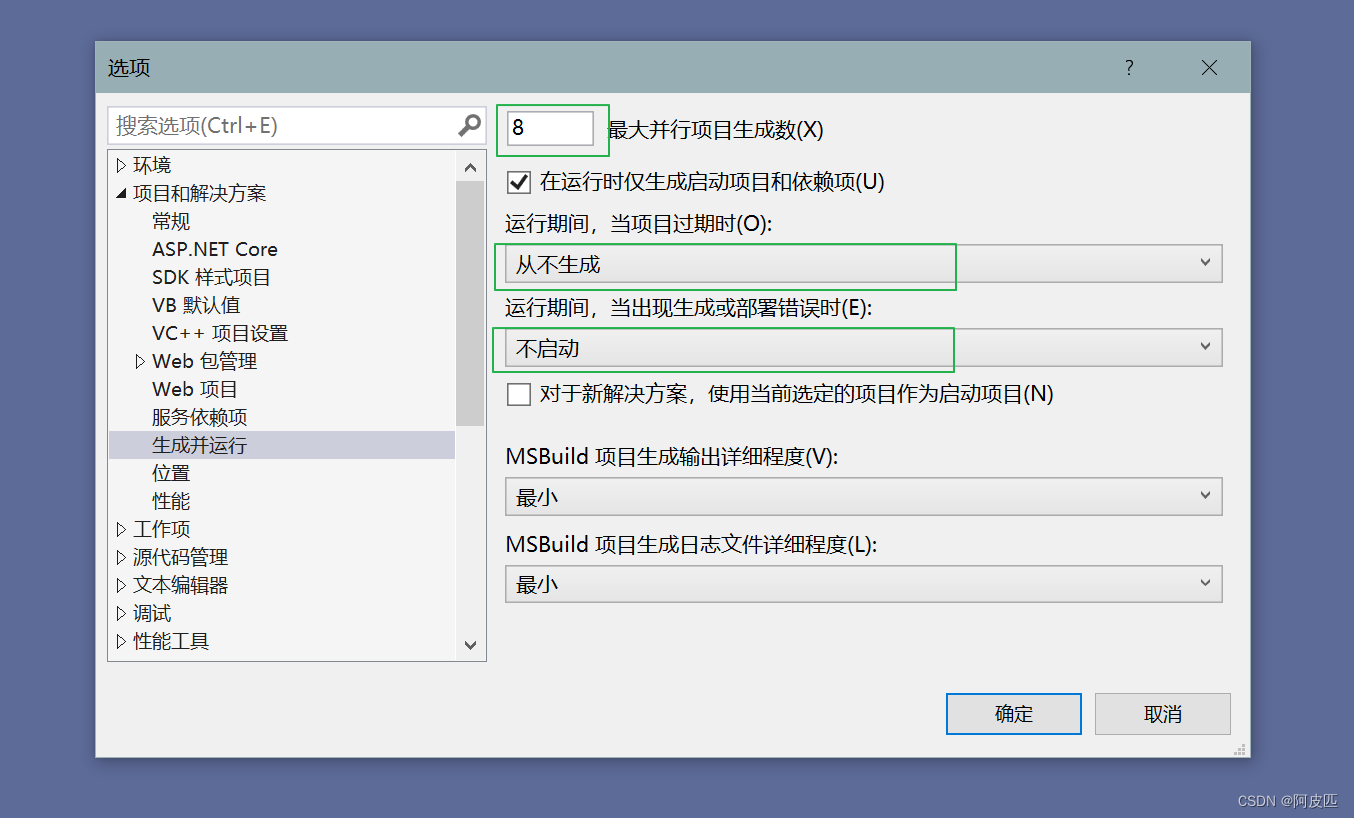

A session is started during open; the rough flow is as follows:

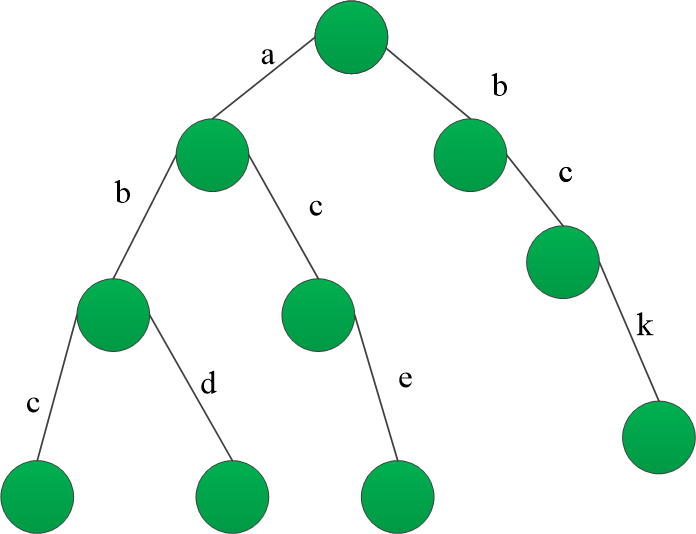

3.1 The mm-camera2 framework

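The mm-camera architecture has two versions; this article studies the newer one:

(1) The older version runs a daemon process, mm-qcamera-daemon (e.g. on the msm8909 platform). The newer architecture removes this daemon (e.g. on the msm8937 (sdm429) platform).

(2) In Android O, the CameraProvider service is started at boot. It moves the Camera HAL out of the cameraserver process into an independent process, android.hardware.camera.provider@2.4-service, which controls the HAL. The two processes communicate through HIDL.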

The mm-camera code lives under vendor/qcom/proprietary/mm-camera/mm-camera2.

That directory contains media-controller, server-tuning and server-imaging. The one we care about is media-controller, whose tree looks like this:

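```text
media-controller
|- mct: presumably the camera engine; contains the definitions and wrappers for the engine, pipeline, bus, module, stream, event, etc.
|- modules: the code split into individual modules; their rough functions are:
    |- sensor: the sensor driver module (src module)
        |- actuator_libs, actuators: basic actuator (lens motor) configuration and tuning parameters
        |- sensor_libs, chromatix: basic camera-module configuration and image tuning parameters; the two most important parts for a module
        |- eeprom_libs, eeprom: EEPROM configuration and parameters; rarely used nowadays
        |- strobe_flash, led_flash: strobe and LED flash drivers
    |- iface: ISP interface module
    |- isp: mainly ISP processing; internally contains many sub-modules (inter module)
    |- stats: statistics algorithm modules such as 3A, ASD, AFD, IS and GYRO (sink module)
    |- pproc: post-processing (inter module)
    |- imglib: mainly back-end image processing such as HDR and face detection (sink module)
```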

3.2 The media controller thread

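The MCT thread is the engine of the new camera architecture. It monitors the pipeline and, through it, drives the control and operation of a camera device. Below we look at how the mct controller thread is created.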

3.2.1 mm_camera_module_open_session

Once the device node has been opened, mm_camera_open() continues with open session:

@hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera.c

```c
int32_t mm_camera_open(mm_camera_obj_t *my_obj)
{
    ......
    const char *dev_name_value = NULL;
    dev_name_value = mm_camera_util_get_dev_name_by_num(my_obj->my_num,
            my_obj->my_hdl);
    snprintf(dev_name, sizeof(dev_name), "/dev/%s",
            dev_name_value);
    my_obj->ctrl_fd = open(dev_name, O_RDWR | O_NONBLOCK);
    cam_status_t cam_status;
    ......
    cam_status = mm_camera_module_open_session(my_obj->sessionid,
            mm_camera_module_event_handler);
    ......
}
```

@ hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_interface.c

```c
/*===========================================================================
 * FUNCTION   : mm_camera_module_open_session
 *
 * DESCRIPTION: wrapper function to call shim layer API to open session.
 *
 * PARAMETERS :
 *   @sessionid  : sessionID to open session
 *   @evt_cb     : Event callback function
 *
 * RETURN     : int32_t type of status
 *              0  -- success
 *              non-zero error code -- failure
 *==========================================================================*/
cam_status_t mm_camera_module_open_session(int sessionid,
        mm_camera_shim_event_handler_func evt_cb)
{
    cam_status_t rc = -1;
    if(g_cam_ctrl.cam_shim_ops.mm_camera_shim_open_session) {
        rc = g_cam_ctrl.cam_shim_ops.mm_camera_shim_open_session(
                sessionid, evt_cb);
    }
    return rc;
}
```

The function pointer g_cam_ctrl.cam_shim_ops.mm_camera_shim_open_session is declared through the following three pieces:

1 @hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_interface.c

```c
static mm_camera_ctrl_t g_cam_ctrl;
```

2 @hardware/qcom/camera/QCamera2/stack/mm-camera-interface/inc/mm_camera.h

```c
typedef struct {
    int8_t num_cam;
    mm_camera_shim_ops_t cam_shim_ops;
    int8_t num_cam_to_expose;
    char video_dev_name[MM_CAMERA_MAX_NUM_SENSORS][MM_CAMERA_DEV_NAME_LEN];
    mm_camera_obj_t *cam_obj[MM_CAMERA_MAX_NUM_SENSORS];
    struct camera_info info[MM_CAMERA_MAX_NUM_SENSORS];
    cam_sync_type_t cam_type[MM_CAMERA_MAX_NUM_SENSORS];
    cam_sync_mode_t cam_mode[MM_CAMERA_MAX_NUM_SENSORS];
    uint8_t is_yuv[MM_CAMERA_MAX_NUM_SENSORS]; // 1=CAM_SENSOR_YUV, 0=CAM_SENSOR_RAW
    uint32_t cam_index[MM_CAMERA_MAX_NUM_SENSORS]; //Actual cam index are stored in bits
} mm_camera_ctrl_t;
```

3 @hardware/qcom/camera/QCamera2/stack/common/mm_camera_shim.h

```c
typedef struct {
    cam_status_t (*mm_camera_shim_open_session) (int session,
            mm_camera_shim_event_handler_func evt_cb);
    int32_t (*mm_camera_shim_close_session)(int session);
    int32_t (*mm_camera_shim_send_cmd)(cam_shim_packet_t *event);
} mm_camera_shim_ops_t;
```

3.2.2 mct_shimlayer_process_module_init

The mm_camera_shim_open_session pointer above is set to mct_shimlayer_start_session in mct_shimlayer_process_module_init() @vendor/qcom/proprietary/mm-camera/mm-camera2/media-controller/mct_shim_layer/mct_shim_layer.c

```c
int mct_shimlayer_process_module_init(mm_camera_shim_ops_t
        *shim_ops_tbl)
{
    ...
    shim_ops_tbl->mm_camera_shim_open_session = mct_shimlayer_start_session;
    shim_ops_tbl->mm_camera_shim_close_session = mct_shimlayer_stop_session;
    shim_ops_tbl->mm_camera_shim_send_cmd = mct_shimlayer_process_event;
    ...
}
```
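The shim layer is an ops table. The server side fills in function pointers, and the client-side wrapper calls through them only if they are set, which is why mm_camera_module_open_session() returns -1 until registration has happened. The call that triggers registration happens elsewhere and is not shown here. The C sketch below uses a cut-down table with only the open and close entries; every name in it (`shim_ops_t`, `server_*`, `module_open_session`) is hypothetical.

```c
#include <stddef.h>

typedef int (*shim_event_cb)(int session, void *event);

/* Cut-down, hypothetical version of mm_camera_shim_ops_t. */
typedef struct {
    int (*open_session)(int session, shim_event_cb evt_cb);
    int (*close_session)(int session);
} shim_ops_t;

static shim_ops_t g_shim_ops;  /* zero-initialized: nothing registered yet */
static int g_started_session = -1;

/* Server side, playing mct_shimlayer_start_session / stop_session. */
static int server_start_session(int session, shim_event_cb evt_cb) {
    (void)evt_cb;
    g_started_session = session;
    return 0;
}

static int server_stop_session(int session) {
    if (session != g_started_session) return -1;
    g_started_session = -1;
    return 0;
}

/* Like mct_shimlayer_process_module_init(): the server fills the table. */
static void shim_module_init(shim_ops_t *tbl) {
    tbl->open_session = server_start_session;
    tbl->close_session = server_stop_session;
}

/* Like mm_camera_module_open_session(): -1 until the pointer is set. */
static int module_open_session(int session, shim_event_cb evt_cb) {
    int rc = -1;
    if (g_shim_ops.open_session) {
        rc = g_shim_ops.open_session(session, evt_cb);
    }
    return rc;
}
```

The indirection lets the interface library call into the media controller without linking against it directly.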

3.2.3 mct_shimlayer_start_session

@vendor/qcom/proprietary/mm-camera/mm-camera2/media-controller/mct_shim_layer/mct_shim_layer.c

1. Create the mct_controller

```c
cam_status_t mct_shimlayer_start_session(int session,
        mm_camera_shim_event_handler_func event_cb)
{
    int32_t enabled_savemem;
    char savemem[128];
    cam_status_t ret = CAM_STATUS_FAILED;
    char prop[PROPERTY_VALUE_MAX];
    int enable_memleak = 0;

    // Memory-leak tracking
#ifdef MEMLEAK_FLAG
    property_get("persist.vendor.camera.memleak.enable", prop, "0");
    enable_memleak = atoi(prop);
    if (enable_memleak) {
        CLOGH(CAM_MCT_MODULE, "Memory leak tracking enabled.");
        enable_memleak_trace(0);
    }
#endif

    pthread_mutex_lock(&session_mutex);
    property_get("vendor.camera.cameradaemon.SaveMemAtBoot", savemem, "0");
    enabled_savemem = atoi(savemem);
    if (enabled_savemem == 1) {
        if (mct_shimlayer_module_init() == FALSE) {
            pthread_mutex_unlock(&session_mutex);
            return CAM_STATUS_FAILED;
        }
    }

    // Create the mct_controller
    ret = mct_controller_new(modules, session, config_fd, event_cb);
    if (ret == CAM_STATUS_BUSY || ret == CAM_STATUS_FAILED) {
        pthread_mutex_unlock(&session_mutex);
        CLOGE(CAM_SHIM_LAYER,"Session creation for session =%d failed with err %d",
                session, ret);
        /*Signalling memleak thread to print if any memory leak present */
#ifdef MEMLEAK_FLAG
        if (enable_memleak) {
            CLOGH(CAM_MCT_MODULE, "Signal to print memory leak");
            if (pthread_mutex_trylock (&server_memleak_mut) == 0) {
                server_memleak_event = PRINT_LEAK_MEMORY;
                pthread_cond_signal(&server_memleak_con);
                pthread_mutex_unlock (&server_memleak_mut);
            }
        }
#endif
        return ret;
    }
    pthread_mutex_unlock(&session_mutex);
    return ret;
}
```

3.2.4 mct_controller_new

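1. Create the mct_pipeline
2. Start the session on the mct_pipeline

The pipeline functions used here are in @vendor/qcom/proprietary/mm-camera/mm-camera2/media-controller/mct/pipeline/mct_pipeline.c (mct_controller_new() itself is in mct/controller/mct_controller.c).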

```c
cam_status_t mct_controller_new(mct_list_t *mods,
        unsigned int session_idx, int serv_fd, void *event_cb)
{
    mct_controller_t *mct = NULL;
    mct = (mct_controller_t *)malloc(sizeof(mct_controller_t));
    memset(mct, 0, sizeof(mct_controller_t));

    // Create the mct_pipeline
    mct->pipeline = mct_pipeline_new(session_idx, mct);
    ...
    // Start the session on the mct_pipeline
    ret_type = mct_pipeline_start_session(mct->pipeline);
}
```

3.2.4.1 mct_pipeline_new

1. Create the mct_pipeline
2. Initialize the pipeline's fields

```c
mct_pipeline_t* mct_pipeline_new (unsigned int session_idx,
        mct_controller_t *pController)
{
    // Create the mct_pipeline
    mct_pipeline_t *pipeline;
    pipeline = malloc(sizeof(mct_pipeline_t));
    memset(pipeline, 0, sizeof(mct_pipeline_t));

    // Initialize the pipeline's fields
    ......
    return pipeline;
}
```

3.2.4.2 mct_pipeline_start_session

```c
cam_status_t mct_pipeline_start_session(mct_pipeline_t *pipeline)
{
    boolean rc;
    int ret;
    int rdi_standalone_streams = 0;
    struct timespec timeToWait;
    char prop[PROPERTY_VALUE_MAX];

    CLOGE(CAM_MCT_MODULE, "sundp_ mct_pipeline_start_session\n");
    if (!pipeline) {
        CLOGE(CAM_MCT_MODULE, "NULL pipeline ptr");
        return CAM_STATUS_FAILED;
    }

    property_get("persist.vendor.camera.mct.multirdi", prop, "0");
    rdi_standalone_streams = atoi (prop);
    CLOGE(CAM_MCT_MODULE, "sundp_ rdi_standalone_streams = %d\n",rdi_standalone_streams);

    ATRACE_CAMSCOPE_BEGIN(CAMSCOPE_MCT_START_SESSION);
    pthread_mutex_init(&pipeline->thread_data.mutex, NULL);
    pthread_condattr_init(&pipeline->thread_data.condattr);
    pthread_condattr_setclock(&pipeline->thread_data.condattr, CLOCK_MONOTONIC);
    pthread_cond_init(&pipeline->thread_data.cond_v, &pipeline->thread_data.condattr);
    pipeline->thread_data.started_num = 0;
    pipeline->thread_data.modules_num = 0;
    pipeline->thread_data.started_num_success = 0;

    // Count the modules (sensor, iface, etc.)
    rc = mct_list_traverse(pipeline->modules, mct_pipeline_get_module_num,
            pipeline);
    // Start each module
    rc &= mct_list_traverse(pipeline->modules, mct_pipeline_modules_start,
            pipeline);
    rc = mct_util_get_timeout(MCT_THREAD_TIMEOUT, &timeToWait);
    ......
}
```

3.2.4.3 mct_pipeline_modules_start

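mct_list_traverse() calls this function once per module. Each call creates one thread with pthread_create(), which gives six major threads:

- pthread_create(sensor)
- pthread_create(iface)
- pthread_create(isp)
- pthread_create(stats)
- pthread_create(pproc)
- pthread_create(imglib)

Each thread then starts the session on its own module.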

static boolean mct_pipeline_modules_start(void *data1, void *data2)
{
    int rc = 0;
    pthread_attr_t attr;
    char thread_name[20];
    mct_pipeline_t *pipeline = (mct_pipeline_t *)data2;
    mct_pipeline_thread_data_t *thread_data = &(pipeline->thread_data);

    // data1 is the module currently visited by mct_list_traverse
    thread_data->module = (mct_module_t *)data1;
    thread_data->session_id = pipeline->session;

    pthread_attr_init(&attr);
    pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_DETACHED);
    pthread_mutex_lock(&thread_data->mutex);
    CLOGE(CAM_MCT_MODULE, "sundp_ pthread_create\n");

    // Create one detached start thread for this module
    rc = pthread_create(&pipeline->thread_data.pid, &attr,
        &mct_pipeline_start_session_thread, (void *)thread_data);
    snprintf(thread_name, sizeof(thread_name), "CAM_start%s",
        MCT_MODULE_NAME(thread_data->module));
    CLOGE(CAM_MCT_MODULE, "sundp_ %s\n", thread_name);
    if (!rc) {
        pthread_setname_np(pipeline->thread_data.pid, thread_name);
        // Block until the new thread has picked up module/session_id,
        // because thread_data is reused for the next module
        pthread_cond_wait(&thread_data->cond_v, &thread_data->mutex);
    }
    pthread_mutex_unlock(&thread_data->mutex);

    return TRUE;
}
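The key detail in mct_pipeline_modules_start is that one thread_data block is reused for every module. The creating thread therefore must not return, letting the traversal overwrite thread_data->module, until the new thread has read its arguments. Below is a minimal sketch of that handoff in plain POSIX threads, using hypothetical names (handoff_t, module_thread, start_modules).

It differs from the original in two ways. First, it waits on an explicit copied flag in a loop, because pthread_cond_wait may wake spuriously; the original waits once without a predicate. Second, it creates joinable threads only so the result can be checked. The original uses detached threads and tracks completion through started_num instead.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_MODULES 8

/* Hypothetical shared argument block, modelled on thread_data. */
typedef struct {
    pthread_mutex_t mutex;
    pthread_cond_t  cond_v;
    int module_id;            /* overwritten before each pthread_create */
    int copied;               /* predicate: the new thread has read module_id */
    int seen[MAX_MODULES];    /* how many threads received each id */
} handoff_t;

static void handoff_init(handoff_t *h)
{
    pthread_mutex_init(&h->mutex, NULL);
    pthread_cond_init(&h->cond_v, NULL);
    h->module_id = -1;
    h->copied = 0;
    for (int i = 0; i < MAX_MODULES; i++)
        h->seen[i] = 0;
}

static void *module_thread(void *arg)
{
    handoff_t *h = arg;

    pthread_mutex_lock(&h->mutex);
    int id = h->module_id;           /* take a private copy first */
    h->copied = 1;
    h->seen[id]++;
    pthread_cond_signal(&h->cond_v); /* release the creating thread */
    pthread_mutex_unlock(&h->mutex);

    /* A module's start_session would run here, without the lock held. */
    return NULL;
}

/* Starts n threads one at a time; returns how many were created. */
static int start_modules(handoff_t *h, int n, pthread_t *tids)
{
    int created = 0;

    assert(n <= MAX_MODULES);
    for (int i = 0; i < n; i++) {
        pthread_mutex_lock(&h->mutex);
        h->module_id = i;
        h->copied = 0;
        if (pthread_create(&tids[created], NULL, module_thread, h) == 0) {
            created++;
            while (!h->copied)       /* predicate loop tolerates spurious wakeups */
                pthread_cond_wait(&h->cond_v, &h->mutex);
        }
        pthread_mutex_unlock(&h->mutex);
    }
    return created;
}
```

Every id ends up in exactly one thread. This is the property the original relies on when it reuses thread_data across the six modules.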

3.2.4.4 mct_pipeline_start_session_thread

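The thread body first releases the creating thread, then calls the module's start_session and updates the shared start counters: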

static void* mct_pipeline_start_session_thread(void *data)
{
    mct_pipeline_thread_data_t *thread_data = (mct_pipeline_thread_data_t*)data;
    // Copy the per-module arguments out of the shared thread_data
    // before signalling the creator
    mct_module_t *module = thread_data->module;
    unsigned int session_id = thread_data->session_id;
    boolean rc = FALSE;

    ATRACE_BEGIN_SNPRINTF(30, "Camera:MCTModStart:%s",
        MCT_MODULE_NAME(thread_data->module));
    CLOGI(CAM_MCT_MODULE, "sundp_ E %s", MCT_MODULE_NAME(thread_data->module));

    // Wake mct_pipeline_modules_start() so it can move on to the next module
    pthread_mutex_lock(&thread_data->mutex);
    pthread_cond_signal(&thread_data->cond_v);
    pthread_mutex_unlock(&thread_data->mutex);

    if (module->start_session) {
        CLOGI(CAM_MCT_MODULE, "sudp_ Calling start_session on Module %s",
            MCT_MODULE_NAME(module));
        rc = module->start_session(module, session_id);
        CLOGI(CAM_MCT_MODULE, "Module %s start_session rc = %d",
            MCT_MODULE_NAME(module), rc);
    }

    // Record completion; the thread that brings started_num up to
    // modules_num signals cond_v
    pthread_mutex_lock(&thread_data->mutex);
    thread_data->started_num++;
    if (rc == TRUE)
        thread_data->started_num_success++;
    CLOGI(CAM_MCT_MODULE, "started_num = %d, success = %d",
        thread_data->started_num, thread_data->started_num_success);
    if (thread_data->started_num == thread_data->modules_num)
        pthread_cond_signal(&thread_data->cond_v);
    pthread_mutex_unlock(&thread_data->mutex);

    ATRACE_END();
    CLOGI(CAM_MCT_MODULE, "sundp_ X %s", MCT_MODULE_NAME(module));

    return NULL;
}
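mct_pipeline_start_session_thread ends with a completion count. Each thread increments started_num, and also started_num_success on success. Only the thread that brings started_num up to modules_num signals cond_v.

The sketch below isolates that pattern. start_tally_t, tally_report, tally_wait_all_ok, job_t and fake_module are hypothetical names. Treating the session as successful only when every module succeeded is an assumption, since the excerpt does not show how started_num_success is consumed.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical stand-in for the counters in mct_pipeline_thread_data_t. */
typedef struct {
    pthread_mutex_t mutex;
    pthread_cond_t  cond_v;
    int modules_num;
    int started_num;
    int started_num_success;
} start_tally_t;

static void tally_init(start_tally_t *t, int modules_num)
{
    pthread_mutex_init(&t->mutex, NULL);
    pthread_cond_init(&t->cond_v, NULL);
    t->modules_num = modules_num;
    t->started_num = 0;
    t->started_num_success = 0;
}

/* Called by each worker after its start_session returns. */
static void tally_report(start_tally_t *t, int ok)
{
    pthread_mutex_lock(&t->mutex);
    t->started_num++;
    if (ok)
        t->started_num_success++;
    if (t->started_num == t->modules_num)
        pthread_cond_signal(&t->cond_v);   /* only the last reporter signals */
    pthread_mutex_unlock(&t->mutex);
}

/* Blocks until every module has reported; returns 1 only if all succeeded
 * (assumed success criterion). */
static int tally_wait_all_ok(start_tally_t *t)
{
    pthread_mutex_lock(&t->mutex);
    while (t->started_num < t->modules_num)
        pthread_cond_wait(&t->cond_v, &t->mutex);
    int all_ok = (t->started_num_success == t->modules_num);
    pthread_mutex_unlock(&t->mutex);
    return all_ok;
}

/* Hypothetical fake module: reports a fixed result. */
typedef struct {
    start_tally_t *t;
    int ok;
} job_t;

static void *fake_module(void *arg)
{
    job_t *j = arg;
    tally_report(j->t, j->ok);
    return NULL;
}
```

The waiter checks the counter under the mutex before blocking. A signal sent before the waiter starts waiting is therefore never lost: the waiter simply sees that started_num already equals modules_num.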

3.3 else

We won't go any further here. At this point the camera has been opened successfully. The call flow is roughly:

flowchart LR
    mm_camera_open --> open
    mm_camera_open --> mm_camera_module_open_session --> mct_shimlayer_start_session --> mct_controller_new
    mct_controller_new --> mct_pipeline_new
    mct_controller_new --> mct_pipeline_start_session
    mct_pipeline_start_session --> mct_pipeline_get_module_num
    mct_pipeline_start_session --> mct_pipeline_modules_start
    mct_pipeline_modules_start --> sensor --> start_session
    mct_pipeline_modules_start --> iface --> start_session
    mct_pipeline_modules_start --> isp --> start_session
    mct_pipeline_modules_start --> stats --> start_session
    mct_pipeline_modules_start --> pproc --> start_session
    mct_pipeline_modules_start --> imglib --> start_session
