🤯 14 new architectures

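In this release, we added a ton of new architectures: BLOOM, MPT, BeiT, CamemBERT, CodeLlama, GPT NeoX, GPT-J, HerBERT, mBART, mBART-50, OPT, ResNet, WavLM, and XLM. This brings the total number of supported architectures to 46! Here is some example code to help you get started: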

Text generation with MPT (models):

```javascript
import { pipeline } from '@xenova/transformers';

const generator = await pipeline('text-generation', 'Xenova/ipt-350m', {
  quantized: false, // use the unquantized model so the output matches the Python version
});

const output = await generator('La nostra azienda');
// { generated_text: "La nostra azienda è specializzata nella vendita di prodotti per l'igiene orale e per la salute." }
```

Other text-generation models: BLOOM, GPT-NeoX, CodeLlama, GPT-J, OPT.

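CamemBERT for masked language modelling, text classification, token classification, question answering, and feature extraction (models). For example: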

```javascript
import { pipeline } from '@xenova/transformers';

let pipe = await pipeline('token-classification', 'Xenova/camembert-ner-with-dates');
let output = await pipe("Je m'appelle jean-baptiste et j'habite à montréal depuis fevr 2012");
// [
//   { entity: 'I-PER', score: 0.9258053302764893, index: 5, word: 'jean' },
//   { entity: 'I-PER', score: 0.9048717617988586, index: 6, word: '-' },
//   { entity: 'I-PER', score: 0.9227054119110107, index: 7, word: 'ba' },
//   { entity: 'I-PER', score: 0.9385354518890381, index: 8, word: 'pt' },
//   { entity: 'I-PER', score: 0.9139659404754639, index: 9, word: 'iste' },
//   { entity: 'I-LOC', score: 0.9877734780311584, index: 15, word: 'montré' },
//   { entity: 'I-LOC', score: 0.9891639351844788, index: 16, word: 'al' },
//   { entity: 'I-DATE', score: 0.9858269691467285, index: 18, word: 'fe' },
//   { entity: 'I-DATE', score: 0.9780661463737488, index: 19, word: 'vr' },
//   { entity: 'I-DATE', score: 0.980688214302063, index: 20, word: '2012' }
// ]
```

WavLM for feature extraction (models). For example:

```javascript
import { AutoProcessor, AutoModel, read_audio } from '@xenova/transformers';

// Read and preprocess audio
const processor = await AutoProcessor.from_pretrained('Xenova/wavlm-base');
const audio = await read_audio('https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/jfk.wav', 16000);
const inputs = await processor(audio);

// Run model with inputs
const model = await AutoModel.from_pretrained('Xenova/wavlm-base');
const output = await model(inputs);
// {
//   last_hidden_state: Tensor {
//     dims: [ 1, 549, 768 ],
//     type: 'float32',
//     data: Float32Array(421632) [-0.349443256855011, -0.39341306686401367, 0.022836603224277496, ...],
//     size: 421632
//   }
// }
```

mBART and mBART-50 for multilingual translation (models). For example:

```javascript
import { pipeline } from '@xenova/transformers';

let translator = await pipeline('translation', 'Xenova/mbart-large-50-many-to-many-mmt');
let output = await translator('संयुक्त राष्ट्र के प्रमुख का कहना है कि सीरिया में कोई सैन्य समाधान नहीं है', {
  src_lang: 'hi_IN', // Hindi
  tgt_lang: 'fr_XX', // French
});
// [{ translation_text: "Le chef des Nations affirme qu 'il n 'y a military solution in Syria." }]
```

BeiT for image classification (models):

```javascript
import { pipeline } from '@xenova/transformers';

let url = 'https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/tiger.jpg';
let pipe = await pipeline('image-classification', 'Xenova/beit-base-patch16-224');
let output = await pipe(url);
// [{ label: 'tiger, Panthera tigris', score: 0.7168469429016113 }]
```

ResNet for image classification (models):

```javascript
import { pipeline } from '@xenova/transformers';

let url = 'https://huggingface.co/datasets/Xenova/transformers.js-docs/resolve/main/tiger.jpg';
let pipe = await pipeline('image-classification', 'Xenova/resnet-50');
let output = await pipe(url);
// [{ label: 'tiger, Panthera tigris', score: 0.7576608061790466 }]
```

😍 Over 150 newly converted models

To get started with these new architectures (and to expand coverage for other models), we're releasing over 150 new models on the Hugging Face Hub! See the full list here: https://huggingface.co/models?library=transformers.js

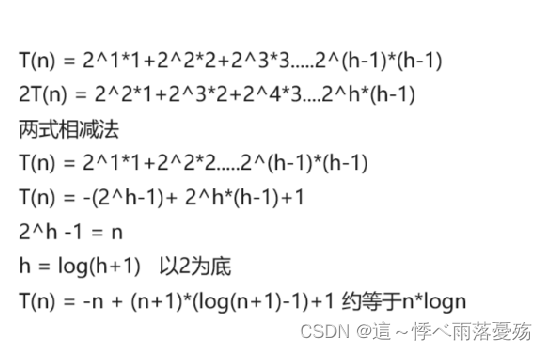

🏋️ Huge reductions in model size (up to -40%)

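Thanks to the latest updates in 🤗 Optimum, we were able to remove duplicate weights across a variety of models. In some cases, such as the whisper-tiny decoder, this reduced the file size by 40%! Here are some of the improvements we saw: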

- Whisper-tiny decoder: 50MB → 30MB (-40%)
- NLLB decoder: 732MB → 476MB (-35%)
- bloom: 819MB → 562MB (-31%)
- T5 decoder: 59MB → 42MB (-28%)
- distilbert-base: 91MB → 68MB (-25%)
- bart-base decoder: 207MB → 155MB (-25%)
- roberta-base: 165MB → 126MB (-24%)
- gpt2: 167MB → 127MB (-24%)
- bert-base: 134MB → 111MB (-17%)

And many more!
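Conceptually, removing duplicate weights means storing each identical tensor once and pointing every other name at that stored copy (tied input embeddings and output projections are a typical case). The sketch below illustrates the idea on plain `Float32Array`s and reports the relative reduction, (before - after) / before, the same quantity as the percentages listed above. It is an illustration only, not Optimum's implementation: the function name `dedupeWeights`, the name-to-array map, and the exact byte-for-byte comparison are assumptions made for this example.

```javascript
// Illustrative sketch only (NOT Optimum's actual implementation):
// keep one copy of each byte-identical weight tensor and alias the rest to it.

/**
 * @param {Record<string, Float32Array>} weights  hypothetical map: weight name -> data
 */
function dedupeWeights(weights) {
  const unique = {};       // canonical name -> data that is actually stored
  const aliases = {};      // duplicate name -> canonical name it now points to
  const seen = new Map();  // content key -> canonical name
  let bytesBefore = 0;
  let bytesAfter = 0;

  for (const [name, data] of Object.entries(weights)) {
    bytesBefore += data.byteLength;
    // Exact comparison: view the raw bytes and use them as the lookup key.
    const bytes = new Uint8Array(data.buffer, data.byteOffset, data.byteLength);
    const key = bytes.join(',');

    const canonical = seen.get(key);
    if (canonical === undefined) {
      // First time we see this content: store it.
      seen.set(key, name);
      unique[name] = data;
      bytesAfter += data.byteLength;
    } else {
      // Identical content already stored: record an alias instead of a copy.
      aliases[name] = canonical;
    }
  }

  const reductionPercent =
    bytesBefore === 0 ? 0 : (100 * (bytesBefore - bytesAfter)) / bytesBefore;
  return { unique, aliases, bytesBefore, bytesAfter, reductionPercent };
}

// Usage: a tiny "model" whose output projection duplicates its input embedding.
const embedding = new Float32Array([0.1, 0.2, 0.3, 0.4]);
const result = dedupeWeights({
  'embed_tokens.weight': embedding,
  'lm_head.weight': Float32Array.from(embedding), // separate buffer, same values
  'layer0.weight': new Float32Array([0.5, 0.6]),
});
console.log(result.aliases);                          // { 'lm_head.weight': 'embed_tokens.weight' }
console.log(result.bytesBefore, result.bytesAfter);   // 40 24
console.log(result.reductionPercent.toFixed(1));      // 40.0
```

Comparing full byte strings keeps the sketch obviously exact; a real tool would more likely hash large tensors first and only compare bytes on a hash match.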

Try out some of the smaller whisper models (for automatic speech recognition) here.

Other updates

Documentation updates covering the Transformers.js integration with LangChain JS.

Documentation page: https://js.langchain.com/docs/modules/data_connection/text_embedding/integrations/transformers

```javascript
import { HuggingFaceTransformersEmbeddings } from "langchain/embeddings/hf_transformers";

const model = new HuggingFaceTransformersEmbeddings({
  modelName: "Xenova/all-MiniLM-L6-v2",
});

/* Embed queries */
const res = await model.embedQuery(
  "What would be a good company name for a company that makes colorful socks?"
);
console.log({ res });

/* Embed documents */
const documentRes = await model.embedDocuments(["Hello world", "Bye bye"]);
console.log({ documentRes });
```

Refactored PreTrainedModel to significantly reduce the amount of code needed when adding new models.
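In the LangChain example above, `embedQuery` returns one embedding vector and `embedDocuments` returns one vector per document, both as plain arrays of numbers. A common next step is to rank the documents by cosine similarity to the query. The minimal sketch below assumes that array shape; the helper names `cosineSimilarity` and `rankBySimilarity` are hypothetical and are not part of LangChain or Transformers.js.

```javascript
// Hypothetical helpers (not part of LangChain or Transformers.js):
// rank document embeddings by cosine similarity to a query embedding.

/** Cosine similarity of two equal-length numeric vectors. */
function cosineSimilarity(a, b) {
  if (a.length !== b.length) {
    throw new Error(`Dimension mismatch: ${a.length} vs ${b.length}`);
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // treat zero vectors as unrelated
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

/** Return { index, score } entries sorted from most to least similar to the query. */
function rankBySimilarity(queryVec, docVecs) {
  return docVecs
    .map((vec, index) => ({ index, score: cosineSimilarity(queryVec, vec) }))
    .sort((x, y) => y.score - x.score);
}

// Usage with toy 3-dimensional vectors; with the LangChain example you would
// pass `res` (from embedQuery) and `documentRes` (from embedDocuments) instead.
const ranked = rankBySimilarity([1, 0, 0], [[0, 1, 0], [2, 0, 0], [1, 1, 0]]);
console.log(ranked.map((r) => r.index)); // [ 1, 2, 0 ]
```

Cosine similarity ignores vector length, so it compares only the direction of the embeddings; if the model already returns normalized vectors, a plain dot product gives the same ranking.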