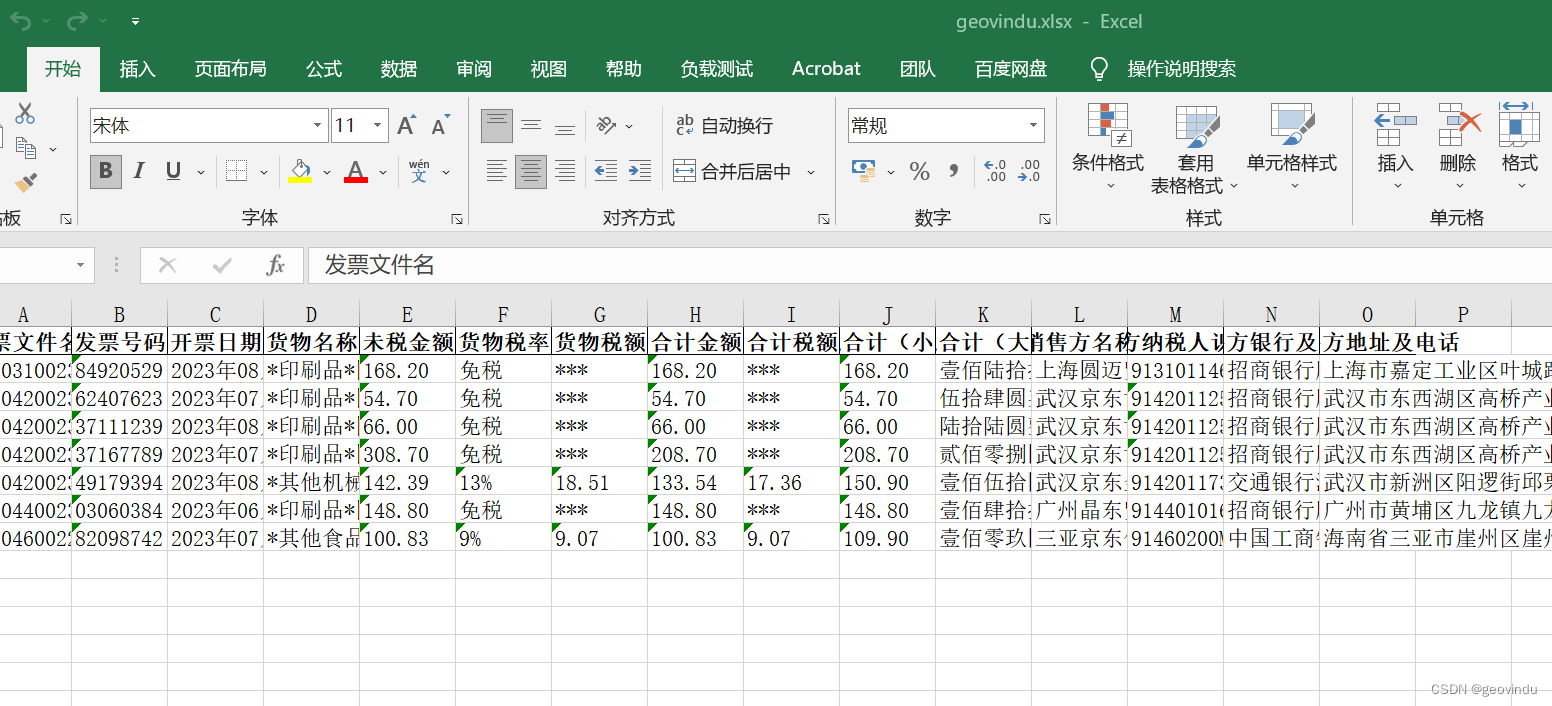

1. Code demonstration

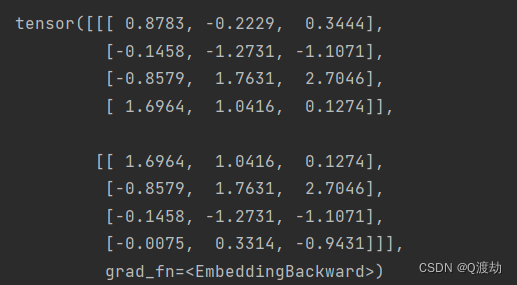

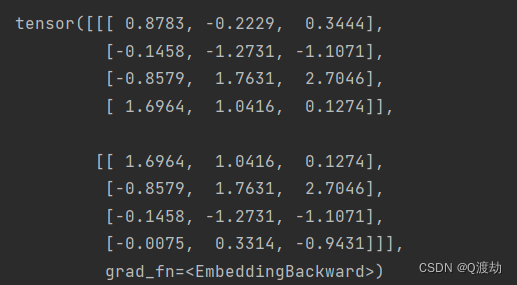

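import torch
import torch.nn as nn

# Lookup table with 10 entries (the vocabulary size), each a 3-dimensional vector
embedding = nn.Embedding(10, 3)
print(embedding)  # Embedding(10, 3)

# Batch of 2 sequences with 4 token indices each; every index must be less than 10
input = torch.LongTensor([[1, 2, 3, 4], [4, 3, 2, 9]])
print(embedding(input))  # tensor of shape (2, 4, 3)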
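To make the lookup above more concrete, here is a minimal sketch, assuming PyTorch is installed. The names `indices`, `out`, and `weight_rows` are illustrative and do not come from the original notes. `nn.Embedding(10, 3)` holds a 10 x 3 weight matrix, and calling the layer on a tensor of indices should return the matching rows of that matrix, so an input of shape (2, 4) gives an output of shape (2, 4, 3).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # only so that repeated runs print the same numbers

embedding = nn.Embedding(10, 3)

# Two sequences of four token indices; every index must be less than 10
indices = torch.tensor([[1, 2, 3, 4], [4, 3, 2, 9]])

out = embedding(indices)

# Plain row indexing into the 10 x 3 weight table, for comparison
weight_rows = embedding.weight[indices]

print(out.shape)                       # torch.Size([2, 4, 3])
print(torch.equal(out, weight_rows))   # True
```

Index 4 appears in both sequences (`indices[0, 3]` and `indices[1, 0]`), so those two output positions hold the same vector.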

2. Building an Embeddings class to implement the text embedding layer

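import math
import torch.nn as nn

# Build an Embeddings class to implement the text embedding layer
class Embeddings(nn.Module):
    def __init__(self, d_model, vocab):
        """
        :param d_model: dimension of the word embeddings
        :param vocab: size of the vocabulary
        """
        super(Embeddings, self).__init__()
        # lut is the lookup table that maps token indices to d_model-dimensional vectors
        self.lut = nn.Embedding(vocab, d_model)
        self.d_model = d_model

    def forward(self, x):
        """
        :param x: the Embedding layer is the first layer, so x is the tensor of token indices obtained by mapping the input text through the vocabulary
        :return: the embedded tensor scaled by sqrt(d_model), with shape x.shape + (d_model,)
        """
        return self.lut(x) * math.sqrt(self.d_model)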

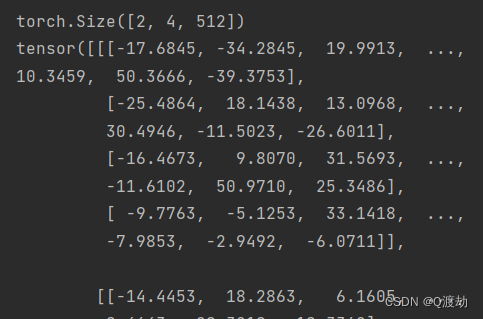

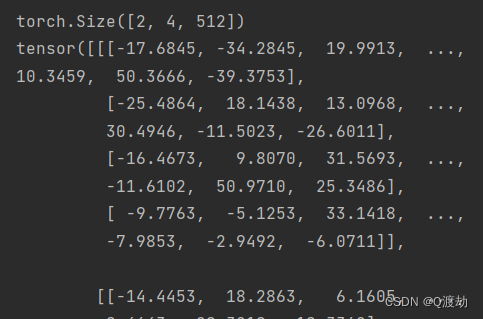

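import torch

# Two sequences of four token indices; each index must be less than the vocabulary size (1000).
# Variable is no longer needed: since PyTorch 0.4 a plain tensor can be passed directly.
x = torch.LongTensor([[100, 2, 42, 508], [491, 998, 1, 221]])
emb = Embeddings(512, 1000)  # d_model = 512, vocab = 1000
embr = emb(x)
print(embr.shape)  # torch.Size([2, 4, 512])
print(embr)
print(embr[0][0].shape)  # torch.Size([512])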
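As a quick check of what the `math.sqrt(self.d_model)` factor does, the following sketch compares the scaled output with the raw lookup from `lut`. It assumes PyTorch is installed and restates the `Embeddings` class so that it runs on its own. The names `scaled`, `raw`, and `ratio` are illustrative and not part of the original notes.

```python
import math

import torch
import torch.nn as nn


class Embeddings(nn.Module):
    """Same layer as above: an embedding lookup scaled by sqrt(d_model)."""

    def __init__(self, d_model, vocab):
        super().__init__()
        self.lut = nn.Embedding(vocab, d_model)
        self.d_model = d_model

    def forward(self, x):
        return self.lut(x) * math.sqrt(self.d_model)


emb = Embeddings(512, 1000)
x = torch.tensor([[100, 2, 42, 508], [491, 998, 1, 221]])

scaled = emb(x)    # output of the full layer
raw = emb.lut(x)   # unscaled lookup, for comparison

# Ratio of overall norms; it should be close to sqrt(512), about 22.63
ratio = (scaled.norm() / raw.norm()).item()

print(scaled.shape)            # torch.Size([2, 4, 512])
print(ratio, math.sqrt(512))
```

The scaling changes only the magnitude of each vector, not its direction, so every embedding is about 22.63 times larger than the raw row of the lookup table.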