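I've been playing with my Raspberry Pi again these past few days. First I put together a small IoT project, and now I'm trying to run some simple neural networks on it. This time it's a convolutional network that recognizes MNIST handwritten digits.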

The training code runs on the PC. A CPU is enough, and training is quick:

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
import numpy as np

# Set the random seed
torch.manual_seed(42)

# Define the data preprocessing
transform = transforms.Compose([
    transforms.ToTensor(),
    # transforms.Normalize((0.1307,), (0.3081,))
])

# Load the training dataset
train_dataset = datasets.MNIST('data', train=True, download=True, transform=transform)
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=64, shuffle=True)

# Build the convolutional neural network model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.pool = nn.MaxPool2d(2)
        # 28x28 input -> 24x24 after the 5x5 convolution -> 12x12 after 2x2 pooling
        self.fc = nn.Linear(10 * 12 * 12, 10)

    def forward(self, x):
        x = self.pool(torch.relu(self.conv1(x)))
        x = x.view(-1, 10 * 12 * 12)
        x = self.fc(x)
        return x

model = Net()

# Define the loss function and the optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# Training loop
def train(model, device, train_loader, optimizer, criterion, epochs):
    model.train()
    for epoch in range(epochs):
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()
            output = model(data)
            loss = criterion(output, target)
            loss.backward()
            optimizer.step()
            # Log progress every 100 batches
            if batch_idx % 100 == 0:
                print(f'Train Epoch: {epoch+1} [{batch_idx * len(data)}/{len(train_loader.dataset)} '
                      f'({100. * batch_idx / len(train_loader):.0f}%)]\tLoss: {loss.item():.6f}')

# Train on the GPU if one is available, otherwise on the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)

# Train the model
train(model, device, train_loader, optimizer, criterion, epochs=5)

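# Save the model parameters as NumPy arrays
model_state = model.state_dict()
numpy_model_state = {key: value.cpu().numpy() for key, value in model_state.items()}
np.savez('model.npz', **numpy_model_state)
print("Model saved as model.npz")

Next, you need to export some images from the dataset yourself. I saved mine in the mnist_pi folder, with the label after the "_" in each filename. The export is done on the PC, and the files are then copied over to the Raspberry Pi.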

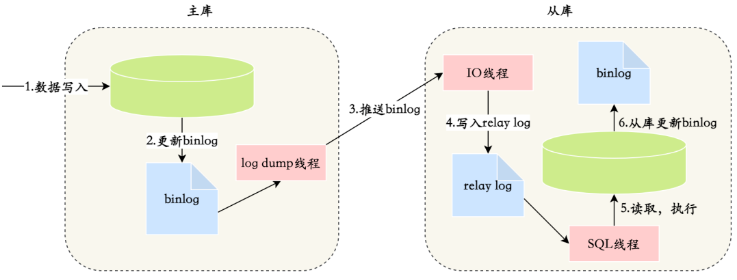
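The export step is not shown in the post, so here is a minimal sketch of one way it could look. The helper names `export_samples` and `label_from_filename`, the `<index>_<label>.png` naming pattern, and the blank stand-in images are all my assumptions; the only detail taken from the post is that the label follows the "_" and the folder is called mnist_pi.

```python
import os
import tempfile

import numpy as np
from PIL import Image


def export_samples(samples, out_dir, limit=20):
    """Save (PIL image, label) pairs as '<index>_<label>.png' and return the paths."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for idx, (img, label) in enumerate(samples):
        if idx >= limit:
            break
        path = os.path.join(out_dir, f"{idx}_{int(label)}.png")
        img.save(path)
        paths.append(path)
    return paths


def label_from_filename(path):
    """Recover the label from the part after the last underscore."""
    stem = os.path.splitext(os.path.basename(path))[0]
    return int(stem.rsplit("_", 1)[1])


# Stand-in data so the sketch runs without downloading MNIST:
# three blank 28x28 grayscale images with labels 7, 2 and 1.
fake = [(Image.fromarray(np.zeros((28, 28), dtype=np.uint8)), lbl) for lbl in (7, 2, 1)]
out_dir = os.path.join(tempfile.mkdtemp(), "mnist_pi")
saved = export_samples(fake, out_dir)
labels = [label_from_filename(p) for p in saved]
```

With the real data, passing `datasets.MNIST('data', train=False, download=True)` (no `transform`) in place of `fake` should work the same way, since the dataset then yields PIL images paired with integer labels.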

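The inference code on the Raspberry Pi has to rebuild the network by hand with NumPy: conv2d (the convolution layer) and maxpool2d (max pooling) must be implemented manually, and then the saved parameter arrays are loaded and applied with matrix multiplication and addition.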
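Because the training script writes `model.state_dict()` straight into `model.npz`, the archive keys should match the PyTorch parameter names (`conv1.weight`, `conv1.bias`, `fc.weight`, `fc.bias`). Below is a rough sketch of the loading step and the final fully connected layer. The helper name `linear` and the random stand-in file are my assumptions, used only so the snippet runs without the trained weights.

```python
import os
import tempfile

import numpy as np


def linear(x, weight, bias):
    """Fully connected layer; PyTorch stores weight as (out_features, in_features)."""
    return x @ weight.T + bias


# Stand-in for the trained file: random arrays with the same shapes as Net's parameters.
rng = np.random.default_rng(0)
path = os.path.join(tempfile.mkdtemp(), "model.npz")
np.savez(
    path,
    **{
        "conv1.weight": rng.standard_normal((10, 1, 5, 5)).astype(np.float32),
        "conv1.bias": rng.standard_normal(10).astype(np.float32),
        "fc.weight": rng.standard_normal((10, 1440)).astype(np.float32),
        "fc.bias": rng.standard_normal(10).astype(np.float32),
    },
)

# Load the archive and collect the shape of every stored array.
params = np.load(path)
shapes = {name: params[name].shape for name in params.files}

# A 28x28 input shrinks to 24x24 after the 5x5 convolution and to 12x12 after
# 2x2 pooling, so the flattened feature vector has 10 * 12 * 12 = 1440 entries.
features = rng.standard_normal((1, 10 * 12 * 12)).astype(np.float32)
logits = linear(features, params["fc.weight"], params["fc.bias"])
prediction = int(np.argmax(logits, axis=1)[0])
```

On the Pi, `path` would simply point at the copied `model.npz`, and `features` would come from the flattened output of the hand-written convolution and pooling steps.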


import numpy as np
import os
from PIL import Image

def conv2d(input, weight, bias, stride=1, padding=0):
    batch_size, in_channels, in_height, in_width = input.shape
    out_channels, in_channels, kernel_size, _ = weight.shape

    # Compute the size of the output feature map
    out_height = (in_height + 2 * padding - kernel_size) // stride + 1
    out_width = (in_width + 2 * padding - kernel_size) // stride + 1

    # Add zero padding around the height and width dimensions
    padded_input = np.pad(input, ((0, 0), (0, 0), (padding, padding), (padding, padding)), mode='constant')

    # Initialize the output feature map
    output = np.zeros((batch_size, out_channels, out_height, out_width))

    # Run the convolution
    for b in range(batch_size):
        for c_out in range(out_channels):
            for h_out in range(out_height):
                for w_out in range(out_width):
                    h_start = h_out * stride
                    h_end = h_start + kernel_size
                    w_start = w_out * stride
                    w_end = w_start + kernel_size

                    # Extract the input region under the kernel
                    input_region = padded_input[b, :, h_start:h_end, w_start:w_end]

                    # Multiply element-wise, sum over channels and the kernel window, add the bias
                    x = input_region * weight[c_out]
                    bia = bias[c_out]
                    conv_result = np.sum(x, axis=(0, 1, 2)) + bia

                    # Store the result in the output feature map
                    output[b, c_out, h_out, w_out] = conv_resu