A brief analysis of Go's map

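A map (hash table) combines the strengths of arrays and linked lists ("beauty and brains"). Its insert, delete, update and lookup operations all run in O(1) average time, so in many scenarios it replaces tree structures. Readers unfamiliar with hash-table basics may want to review them first.

Go supports map at the language level, unlike languages such as Java that provide it through an external library.

A map variable is declared as map[KeyType]ValueType, e.g. var mp map[string]int.

A map must be initialized before you write to it, for example with mp = make(map[string]int, cap), where cap is an optional size hint.

An uninitialized (nil) map can be read, but every read returns the zero value of ValueType.

Maps are not safe for concurrent use when any goroutine writes. Concurrent reads are safe as long as no goroutine is writing.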
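The small program below checks the nil-map behavior just described. It uses only the standard library. Reading from a nil map yields the zero value. Writing to one panics. The function names are illustrative.

```go
package main

import "fmt"

// readNil reads from a map that was declared but never initialized with make.
// Reading a nil map is allowed and yields the value type's zero value.
func readNil() (int, bool) {
	var mp map[string]int // nil map
	v, ok := mp["missing"]
	return v, ok
}

// writeNil writes to a nil map and returns the message of the recovered panic.
func writeNil() (msg string) {
	defer func() {
		if r := recover(); r != nil {
			msg = fmt.Sprint(r)
		}
	}()
	var mp map[string]int
	mp["k"] = 1 // panics: the map was never initialized
	return "no panic"
}

func main() {
	v, ok := readNil()
	fmt.Println(v, ok)      // 0 false
	fmt.Println(writeNil()) // assignment to entry in nil map

	mp := make(map[string]int, 8) // initialized; 8 is only a size hint
	mp["k"] = 1
	fmt.Println(len(mp)) // 1
}
```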

Like other map operations, map initialization is the joint work of the compiler and the runtime.

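Common bit operations

Shifts: shifting left by 1 bit multiplies a number by 2, and shifting right by 1 bit divides it by 2 (for non-negative integers, discarding the remainder).

B = 5

1 << B = 00000001 << 5 = 00100000 = 32

2^B - 1 equals 2^(B-1) + 2^(B-2) + … + 2^0, i.e. a value whose low B bits are all 1:

1 << B - 1 = 00100000 - 00000001 = 00011111 = 31

Bit clear (AND NOT): M &^= N clears in M every bit that is set in N

01010101 &^ 00001111 = 01010000

Bitwise XOR: M ^ N

01010101 ^ 10101010 = 11111111

11111111 ^ 10101010 = 01010101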
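The operations above appear throughout runtime/map.go, and the sketch below reproduces them in Go. It also shows the pattern mapassign uses with the hashWriting flag: set it with ^=, clear it with &^=. The helper names are illustrative. The hashWriting value is copied from the runtime constants listed later.

```go
package main

import "fmt"

const hashWriting = 4 // same value as the runtime's hashWriting flag

// bucketCount returns 1<<B, the number of buckets for a given B.
func bucketCount(B uint) uint { return 1 << B }

// lowMask returns 1<<B - 1; Go's << binds tighter than -, so no parentheses are needed.
func lowMask(B uint) uint { return 1<<B - 1 }

// clearBits returns m with every bit that is set in n cleared (m &^ n).
func clearBits(m, n uint8) uint8 { return m &^ n }

func main() {
	fmt.Println(bucketCount(5), lowMask(5))                 // 32 31
	fmt.Printf("%08b\n", clearBits(0b01010101, 0b00001111)) // 01010000
	fmt.Printf("%08b\n", uint8(0b01010101^0b10101010))      // 11111111

	// mapassign sets the writing flag with ^= and later clears it with &^=.
	var flags uint8
	flags ^= hashWriting
	fmt.Println(flags&hashWriting != 0) // true
	flags &^= hashWriting
	fmt.Println(flags&hashWriting != 0) // false
}
```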

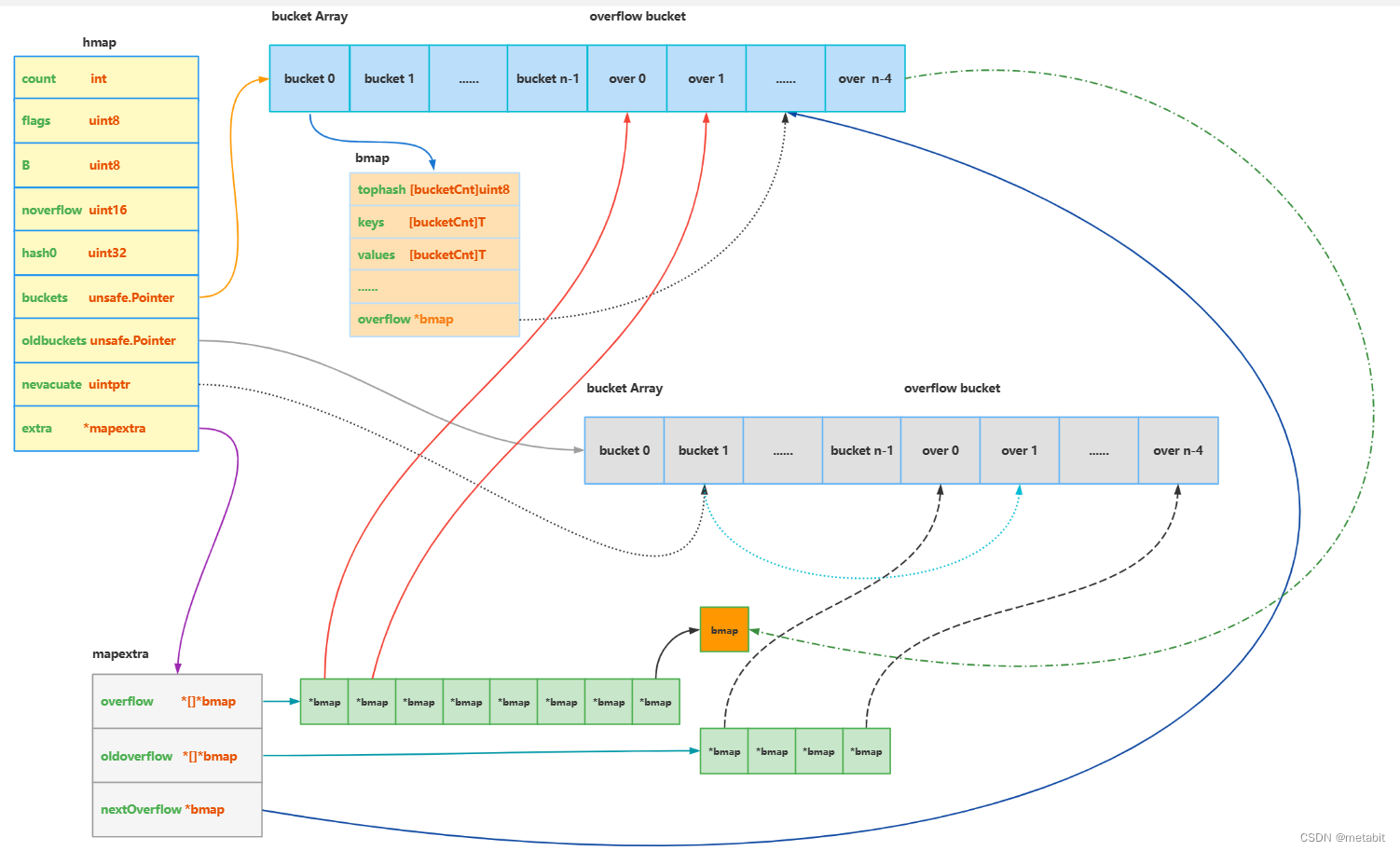

Overall structure of a Go map

The introduction in the Go map source code:

```go
// This file contains the implementation of Go's map type.
//
// A map is just a hash table. The data is arranged
// into an array of buckets. Each bucket contains up to
// 8 key/elem pairs. The low-order bits of the hash are
// used to select a bucket. Each bucket contains a few
// high-order bits of each hash to distinguish the entries
// within a single bucket.
//
// If more than 8 keys hash to a bucket, we chain on
// extra buckets.
//
// When the hashtable grows, we allocate a new array
// of buckets twice as big. Buckets are incrementally
// copied from the old bucket array to the new bucket array.
//
// Map iterators walk through the array of buckets and
// return the keys in walk order (bucket #, then overflow
// chain order, then bucket index). To maintain iteration
// semantics, we never move keys within their bucket (if
// we did, keys might be returned 0 or 2 times). When
// growing the table, iterators remain iterating through the
// old table and must check the new table if the bucket
// they are iterating through has been moved ("evacuated")
// to the new table.
// Picking loadFactor: too large and we have lots of overflow
// buckets, too small and we waste a lot of space. I wrote
// a simple program to check some stats for different loads:
// (64-bit, 8 byte keys and elems)
//  loadFactor    %overflow  bytes/entry     hitprobe    missprobe
//        4.00         2.13        20.77         3.00         4.00
//        4.50         4.05        17.30         3.25         4.50
//        5.00         6.85        14.77         3.50         5.00
//        5.50        10.55        12.94         3.75         5.50
//        6.00        15.27        11.67         4.00         6.00
//        6.50        20.90        10.79         4.25         6.50
//        7.00        27.14        10.15         4.50         7.00
//        7.50        34.03         9.73         4.75         7.50
//        8.00        41.10         9.40         5.00         8.00
//
// %overflow   = percentage of buckets which have an overflow bucket
// bytes/entry = overhead bytes used per key/elem pair
// hitprobe    = # of entries to check when looking up a present key
// missprobe   = # of entries to check when looking up an absent key
//
// Keep in mind this data is for maximally loaded tables, i.e. just
// before the table grows. Typical tables will be somewhat less loaded.
```

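Hash collision resolution

- Open addressing: linear probing, quadratic probing, double hashing, etc.

- Separate chaining (Go's map uses this approach to resolve hash collisions)

Load factor (overLoadFactor)

For a map, load factor = number of elements / number of buckets. Go's map uses a maximum load factor of 6.5.

Once the load factor would exceed this value, the map grows.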

Runtime structure of map

Unless otherwise noted, all code below comes from src/runtime/map.go.

```go
// A header for a Go map.
type hmap struct {
	// Note: the format of the hmap is also encoded in cmd/compile/internal/reflectdata/reflect.go.
	// Make sure this stays in sync with the compiler's definition.
	count      int // # live cells == size of map. Must be first (used by len() builtin)
	flags      uint8
	B          uint8          // log_2 of # of buckets (can hold up to loadFactor * 2^B items)
	noverflow  uint16         // approximate number of overflow buckets; see incrnoverflow for details
	hash0      uint32         // hash seed
	buckets    unsafe.Pointer // array of 2^B Buckets. may be nil if count==0.
	oldbuckets unsafe.Pointer // previous bucket array of half the size, non-nil only when growing
	nevacuate  uintptr        // progress counter for evacuation (buckets less than this have been evacuated)
	extra      *mapextra      // optional fields
}
```

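- count: the number of elements in the map; this is the value returned by the built-in len()

- flags: map state flags, e.g. being written or being iterated

- B: the base-2 exponent of the bucket count; the map has 1 << B buckets

- noverflow: the (approximate) number of overflow buckets

- hash0: the hash seed, mixed into every key's hash so that hash values are hard to predict, which protects the map against deliberately induced collisions

- buckets: pointer to the bucket array

- oldbuckets: pointer to the old bucket array (non-nil only while growing)

- nevacuate: evacuation progress, i.e. the index of the next old bucket to evacuate; every bucket below this value has already been evacuated

- extra: pointer to optional fields (mapextra), mainly overflow-bucket bookkeeping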

```go
type mapextra struct {
	// If both key and elem do not contain pointers and are inline, then we mark bucket
	// type as containing no pointers. This avoids scanning such maps.
	// However, bmap.overflow is a pointer. In order to keep overflow buckets
	// alive, we store pointers to all overflow buckets in hmap.extra.overflow and hmap.extra.oldoverflow.
	// overflow and oldoverflow are only used if key and elem do not contain pointers.
	// overflow contains overflow buckets for hmap.buckets.
	// oldoverflow contains overflow buckets for hmap.oldbuckets.
	// The indirection allows to store a pointer to the slice in hiter.
	overflow    *[]*bmap
	oldoverflow *[]*bmap

	// nextOverflow holds a pointer to a free overflow bucket.
	nextOverflow *bmap
}
```

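- overflow: pointer to the slice of overflow buckets belonging to hmap.buckets; used only when key and elem contain no pointers

- oldoverflow: pointer to the slice of overflow buckets belonging to hmap.oldbuckets; used only when key and elem contain no pointers

- nextOverflow: address of the next free (preallocated) overflow bucket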

```go
// A bucket for a Go map.
type bmap struct {
	// tophash generally contains the top byte of the hash value
	// for each key in this bucket. If tophash[0] < minTopHash,
	// tophash[0] is a bucket evacuation state instead.
	tophash [bucketCnt]uint8
	// Followed by bucketCnt keys and then bucketCnt elems.
	// NOTE: packing all the keys together and then all the elems together makes the
	// code a bit more complicated than alternating key/elem/key/elem/... but it allows
	// us to eliminate padding which would be needed for, e.g., map[int64]int8.
	// Followed by an overflow pointer.
}
```

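- tophash: stores the top 8 bits of each key's hash, which speeds up lookup, insert, update and delete

- key [bucketCnt]KeyType: the array of keys

- elem [bucketCnt]ElementType: the array of values

- …

- overflow *bmap: pointer to the overflow bucket

bmap is the bucket type of Go's map. In the source, bmap declares only a tophash field. At compile time, the compiler generates the full bucket type for each map type, adding the key and value arrays and the rest. The last field of a bucket is the pointer to its overflow bucket. A bucket can have several overflow buckets, chained together as a linked list.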

Constants used by map

```go
const (
	// Maximum number of key/elem pairs a bucket can hold.
	bucketCntBits = 3                  // bucketCnt is 1 shifted left by this many bits
	bucketCnt     = 1 << bucketCntBits // each bucket stores 8 key/elem pairs

	// Maximum average load of a bucket that triggers growth is 6.5.
	// Represent as loadFactorNum/loadFactorDen, to allow integer math.
	loadFactorNum = 13 // load factor numerator
	loadFactorDen = 2  // load factor denominator

	// Maximum key or elem size to keep inline (instead of mallocing per element).
	// Must fit in a uint8.
	// Fast versions cannot handle big elems - the cutoff size for
	// fast versions in cmd/compile/internal/gc/walk.go must be at most this elem.
	maxKeySize  = 128 // maximum key size kept inline
	maxElemSize = 128 // maximum value (elem) size kept inline

	// data offset should be the size of the bmap struct, but needs to be
	// aligned correctly. For amd64p32 this means 64-bit alignment
	// even though pointers are 32 bit.
	dataOffset = unsafe.Offsetof(struct { // offset of the first key from the start of a bucket
		b bmap
		v int64
	}{}.v)

	// Possible tophash values. We reserve a few possibilities for special marks.
	// Each bucket (including its overflow buckets, if any) will have either all or none of its
	// entries in the evacuated* states (except during the evacuate() method, which only happens
	// during map writes and thus no one else can observe the map during that time).
	emptyRest      = 0 // this cell is empty, and there are no more non-empty cells at higher indexes or overflows.
	emptyOne       = 1 // this cell is empty
	evacuatedX     = 2 // key/elem is valid. Entry has been evacuated to first half of larger table.
	evacuatedY     = 3 // same as above, but evacuated to second half of larger table.
	evacuatedEmpty = 4 // cell is empty, bucket is evacuated.
	minTopHash     = 5 // minimum tophash for a normal filled cell.
	// evacuatedX/Y/Empty are written into the old bucket's tophash array.
	// X: the entry moved to the new bucket with the same index as its old bucket.
	// Y: the entry moved to the new bucket at (old index + old bucket count).
	// A computed tophash below minTopHash is raised by minTopHash, so 0-4 stay
	// reserved for the state marks above.

	// flags
	iterator     = 1 // there may be an iterator using buckets
	oldIterator  = 2 // there may be an iterator using oldbuckets
	hashWriting  = 4 // a goroutine is writing to the map
	sameSizeGrow = 8 // the current map growth is to a new map of the same size

	// sentinel bucket ID for iterator checks
	noCheck = 1<<(8*goarch.PtrSize) - 1 // bucket ID meaning "no check needed"
)

const maxZero = 1024 // must match value in reflect/value.go:maxZero cmd/compile/internal/gc/walk.go:zeroValSize
var zeroVal [maxZero]byte // 1024 bytes of zeroed memory, returned as the zero value
```

isEmpty reports whether the cell represented by a tophash value is empty

```go
// isEmpty reports whether the given tophash array entry represents an empty bucket entry.
func isEmpty(x uint8) bool {
	return x <= emptyOne // emptyRest (0) or emptyOne (1) means the cell is empty
}
```

bucketShift returns 1 << b, i.e. the number of buckets, 2^b

```go
// bucketShift returns 1<<b, optimized for code generation.
func bucketShift(b uint8) uintptr {
	// Masking the shift amount allows overflow checks to be elided.
	return uintptr(1) << (b & (goarch.PtrSize*8 - 1))
}
```

bucketMask returns 1 << b - 1. ANDing a hash with this mask keeps its low b bits, which give the bucket index for that hash.

```go
// bucketMask returns 1<<b - 1, optimized for code generation.
func bucketMask(b uint8) uintptr {
	return bucketShift(b) - 1
}
```

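tophash takes the top 8 bits of the hash. Lookups compare it with each cell's tophash to skip non-matching cells quickly. If the value is below minTopHash, minTopHash is added to it, which keeps the small values reserved for cell state marks.

```go
// tophash calculates the tophash value for hash.
func tophash(hash uintptr) uint8 {
	top := uint8(hash >> (goarch.PtrSize*8 - 8))
	if top < minTopHash {
		top += minTopHash
	}
	return top
}
```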
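The sketch below shows how one hash is split between the two helpers. The low B bits pick the bucket, as in bucketMask. The top 8 bits become the tophash. It assumes a 64-bit platform (goarch.PtrSize == 8) and uses plain uint64 values instead of runtime types. The function names are illustrative.

```go
package main

import "fmt"

const minTopHash = 5 // same as the runtime constant

// bucketIndex keeps the low B bits of hash, like hash & bucketMask(B).
func bucketIndex(hash uint64, B uint8) uint64 {
	return hash & (uint64(1)<<B - 1)
}

// tophash mirrors the runtime tophash on a 64-bit platform: take the top
// 8 bits and move values below minTopHash past the reserved state marks.
func tophash(hash uint64) uint8 {
	top := uint8(hash >> 56)
	if top < minTopHash {
		top += minTopHash
	}
	return top
}

func main() {
	h := uint64(0xAB00_0000_0000_1234)
	fmt.Println(bucketIndex(h, 4))              // 4: low 4 bits of 0x...1234
	fmt.Printf("%#x\n", tophash(h))             // 0xab
	fmt.Println(tophash(0x0300_0000_0000_0000)) // 8: 3 is reserved, so 3 + 5
}
```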

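evacuated checks whether the given bucket has already been evacuated

```go
func evacuated(b *bmap) bool {
	h := b.tophash[0]
	return h > emptyOne && h < minTopHash // once evacuated, tophash[0] holds an evacuated* state (see evacuate), so checking it is enough
}
```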

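overflow returns b's overflow bucket pointer. The pointer is stored in the last goarch.PtrSize bytes of the bucket, at offset t.bucketsize - goarch.PtrSize.

```go
func (b *bmap) overflow(t *maptype) *bmap {
	return *(**bmap)(add(unsafe.Pointer(b), uintptr(t.bucketsize)-goarch.PtrSize))
}
```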

setoverflow sets b's overflow bucket, writing ovf into the bucket's overflow pointer field

```go
func (b *bmap) setoverflow(t *maptype, ovf *bmap) {
	*(**bmap)(add(unsafe.Pointer(b), uintptr(t.bucketsize)-goarch.PtrSize)) = ovf
}
```

keys returns a pointer to the first key, which starts dataOffset bytes into the bucket

```go
func (b *bmap) keys() unsafe.Pointer {
	return add(unsafe.Pointer(b), dataOffset)
}
```

incrnoverflow increments the overflow bucket count. If h.B < 16, it adds 1 directly. Otherwise it adds 1 with probability 1/(1<<(h.B-15)), so the count becomes approximate but still fits in a uint16.

```go
// incrnoverflow increments h.noverflow.
// noverflow counts the number of overflow buckets.
// This is used to trigger same-size map growth.
// See also tooManyOverflowBuckets.
// To keep hmap small, noverflow is a uint16.
// When there are few buckets, noverflow is an exact count.
// When there are many buckets, noverflow is an approximate count.
func (h *hmap) incrnoverflow() {
	// We trigger same-size map growth if there are
	// as many overflow buckets as buckets.
	// We need to be able to count to 1<<h.B.
	if h.B < 16 {
		h.noverflow++
		return
	}
	// Increment with probability 1/(1<<(h.B-15)).
	// When we reach 1<<15 - 1, we will have approximately
	// as many overflow buckets as buckets.
	mask := uint32(1)<<(h.B-15) - 1
	// Example: if h.B == 18, then mask == 7,
	// and fastrand & 7 == 0 with probability 1/8.
	if fastrand()&mask == 0 {
		h.noverflow++
	}
}
```

newoverflow obtains an overflow bucket for b. It takes a preallocated one if available and otherwise allocates a new one. If the bucket taken is the last preallocated overflow bucket, its overflow pointer (a non-nil sentinel) is reset to nil. h.extra.nextOverflow is also set to nil, meaning no preallocated overflow buckets remain.

```go
func (h *hmap) newoverflow(t *maptype, b *bmap) *bmap {
	var ovf *bmap
	if h.extra != nil && h.extra.nextOverflow != nil { // preallocated overflow buckets are available
		// We have preallocated overflow buckets available.
		// See makeBucketArray for more details.
		ovf = h.extra.nextOverflow
		if ovf.overflow(t) == nil {
			// A nil overflow pointer means this is not the last preallocated bucket:
			// makeBucketArray marks the last one by pointing it at the start of buckets.
			// We're not at the end of the preallocated overflow buckets. Bump the pointer.
			h.extra.nextOverflow = (*bmap)(add(unsafe.Pointer(ovf), uintptr(t.bucketsize))) // advance to the next free overflow bucket
		} else { // this is the last preallocated overflow bucket
			// This is the last preallocated overflow bucket.
			// Reset the overflow pointer on this bucket,
			// which was set to a non-nil sentinel value.
			ovf.setoverflow(t, nil)    // clear the sentinel
			h.extra.nextOverflow = nil // no preallocated overflow buckets remain
		}
	} else { // nothing preallocated
		ovf = (*bmap)(newobject(t.bucket)) // allocate a single new overflow bucket
	}
	h.incrnoverflow() // bump the overflow bucket count
	if t.bucket.ptrdata == 0 { // the bucket type contains no pointers
		h.createOverflow()                                 // make sure h.extra.overflow exists
		*h.extra.overflow = append(*h.extra.overflow, ovf) // record ovf so the GC keeps it alive
	}
	b.setoverflow(t, ovf) // chain ovf after b
	return ovf
}
```

createOverflow makes sure h.extra and h.extra.overflow exist, allocating whichever is nil

```go
func (h *hmap) createOverflow() {
	if h.extra == nil { // allocate if missing
		h.extra = new(mapextra)
	}
	if h.extra.overflow == nil {
		h.extra.overflow = new([]*bmap)
	}
}
```

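makemap64 and makemap_small handle special cases of map creation. makemap64 takes an int64 hint and forwards to makemap. makemap_small creates a small map whose hint is known at compile time to be at most bucketCnt.

```go
func makemap64(t *maptype, hint int64, h *hmap) *hmap {
	if int64(int(hint)) != hint { // the hint does not fit in an int (e.g. on 32-bit platforms)
		hint = 0
	}
	return makemap(t, int(hint), h)
}

// makemap_small implements Go map creation for make(map[k]v) and
// make(map[k]v, hint) when hint is known to be at most bucketCnt
// at compile time and the map needs to be allocated on the heap.
func makemap_small() *hmap { // no buckets yet; mapassign allocates them lazily
	h := new(hmap)
	h.hash0 = fastrand()
	return h
}
```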

makemap creates a map; it implements the built-in make(map[k]v, hint)

```go
// makemap implements Go map creation for make(map[k]v, hint).
// If the compiler has determined that the map or the first bucket
// can be created on the stack, h and/or bucket may be non-nil.
// If h != nil, the map can be created directly in h.
// If h.buckets != nil, bucket pointed to can be used as the first bucket.
func makemap(t *maptype, hint int, h *hmap) *hmap {
	mem, overflow := math.MulUintptr(uintptr(hint), t.bucket.size) // hint * bucket size: check for overflow or exceeding maxAlloc
	if overflow || mem > maxAlloc {
		hint = 0
	}

	// initialize Hmap
	if h == nil {
		h = new(hmap)
	}
	h.hash0 = fastrand() // initialize the hash seed; the random algorithm comes from wangyi_fudan's library

	// Find the size parameter B which will hold the requested # of elements.
	// For hint < 0 overLoadFactor returns false since hint < bucketCnt.
	B := uint8(0)
	for overLoadFactor(hint, B) { // smallest B whose buckets hold hint elements within the load factor
		B++
	}
	h.B = B

	// allocate initial hash table
	// if B == 0, the buckets field is allocated lazily later (in mapassign)
	// If hint is large zeroing this memory could take a while.
	if h.B != 0 {
		var nextOverflow *bmap
		h.buckets, nextOverflow = makeBucketArray(t, h.B, nil) // allocate buckets; also returns the first free preallocated overflow bucket
		if nextOverflow != nil { // record it in h.extra
			h.extra = new(mapextra)
			h.extra.nextOverflow = nextOverflow
		}
	}

	return h
}
```

overLoadFactor reports whether count elements placed in 1 << B buckets exceed the load factor, i.e. count > 8 and count > 13 * (2^B / 2)

```go
// overLoadFactor reports whether count items placed in 1<<B buckets is over loadFactor.
func overLoadFactor(count int, B uint8) bool {
	return count > bucketCnt && uintptr(count) > loadFactorNum*(bucketShift(B)/loadFactorDen)
}
```

makeBucketArray allocates the buckets and returns, along with them, the first free overflow bucket that follows them

```go
// makeBucketArray initializes a backing array for map buckets.
// 1<<b is the minimum number of buckets to allocate.
// dirtyalloc should either be nil or a bucket array previously
// allocated by makeBucketArray with the same t and b parameters.
// If dirtyalloc is nil a new backing array will be alloced and
// otherwise dirtyalloc will be cleared and reused as backing array.
func makeBucketArray(t *maptype, b uint8, dirtyalloc unsafe.Pointer) (buckets unsafe.Pointer, nextOverflow *bmap) {
	base := bucketShift(b)
	nbuckets := base
	// For small b, overflow buckets are unlikely.
	// Avoid the overhead of the calculation.
	if b >= 4 {
		// Add on the estimated number of overflow buckets
		// required to insert the median number of elements
		// used with this value of b.
		nbuckets += bucketShift(b - 4)
		sz := t.bucket.size * nbuckets
		up := roundupsize(sz) // round up to a malloc size class
		if up != sz {
			nbuckets = up / t.bucket.size // final total number of buckets
		}
	}

	if dirtyalloc == nil { // no memory to reuse
		buckets = newarray(t.bucket, int(nbuckets)) // allocate a fresh array
	} else { // reuse the existing memory
		// dirtyalloc was previously generated by
		// the above newarray(t.bucket, int(nbuckets))
		// but may not be empty.
		buckets = dirtyalloc
		size := t.bucket.size * nbuckets
		if t.bucket.ptrdata != 0 { // the bucket type contains pointers
			memclrHasPointers(buckets, size) // clear the memory
		} else {
			memclrNoHeapPointers(buckets, size)
		}
	}

	if base != nbuckets { // overflow buckets were preallocated
		// We preallocated some overflow buckets.
		// To keep the overhead of tracking these overflow buckets to a minimum,
		// we use the convention that if a preallocated overflow bucket's overflow
		// pointer is nil, then there are more available by bumping the pointer.
		// We need a safe non-nil pointer for the last overflow bucket; just use buckets.
		nextOverflow = (*bmap)(add(buckets, base*uintptr(t.bucketsize)))   // first free overflow bucket
		last := (*bmap)(add(buckets, (nbuckets-1)*uintptr(t.bucketsize))) // last preallocated overflow bucket
		last.setoverflow(t, (*bmap)(buckets))                             // non-nil sentinel pointing at the start of buckets: no more preallocated overflow buckets after this one
	}
	return buckets, nextOverflow
}
```

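- When b >= 4, an estimated 1 << (b - 4) overflow buckets are allocated together with the regular buckets

- Overflow buckets and regular buckets share one contiguous memory region

- Final total bucket count: t.bucket.size * (regular buckets + overflow buckets), rounded up to a malloc size class by roundupsize, then divided by t.bucket.size.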

mapaccess1 implements v := m[key], returning a pointer to the value stored for key

```go
// mapaccess1 returns a pointer to h[key]. Never returns nil, instead
// it will return a reference to the zero object for the elem type if
// the key is not in the map.
// NOTE: The returned pointer may keep the whole map live, so don't
// hold onto it for very long.
func mapaccess1(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {
	if raceenabled && h != nil {
		callerpc := getcallerpc()
		pc := abi.FuncPCABIInternal(mapaccess1)
		racereadpc(unsafe.Pointer(h), callerpc, pc)
		raceReadObjectPC(t.key, key, callerpc, pc)
	}
	if msanenabled && h != nil {
		msanread(key, t.key.size)
	}
	if asanenabled && h != nil {
		asanread(key, t.key.size)
	}
	if h == nil || h.count == 0 { // the map is nil or empty
		if t.hashMightPanic() {
			t.hasher(key, 0) // see issue 23734
		}
		return unsafe.Pointer(&zeroVal[0]) // return the zero value
	}
	if h.flags&hashWriting != 0 { // another goroutine is writing: fatal error, not a recoverable panic
		fatal("concurrent map read and map write")
	}
	hash := t.hasher(key, uintptr(h.hash0)) // hash the key with the seed; algorithms live in src/runtime/alg.go
	m := bucketMask(h.B)                    // bucket mask: for B = 10, m = 1<<10 - 1 = 0b1111111111 = 1023
	b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.bucketsize))) // hash&m picks the bucket; times t.bucketsize gives its offset from h.buckets
	if c := h.oldbuckets; c != nil { // the map is growing
		if !h.sameSizeGrow() { // doubling growth
			// There used to be half as many buckets; mask down one more power of two.
			m >>= 1 // mask for the old, half-size array
		}
		oldb := (*bmap)(add(c, (hash&m)*uintptr(t.bucketsize))) // address of the old bucket
		if !evacuated(oldb) { // not evacuated yet: search the old bucket instead
			b = oldb
		}
	}
	top := tophash(hash) // top 8 bits of the hash
bucketloop:
	for ; b != nil; b = b.overflow(t) { // walk the bucket and its overflow chain
		for i := uintptr(0); i < bucketCnt; i++ { // walk the 8 cells of the bucket
			if b.tophash[i] != top { // tophash mismatch: check whether the cell is emptyRest
				if b.tophash[i] == emptyRest { // no entries after cell i
					break bucketloop // stop searching
				}
				continue
			}
			k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize)) // address of the key in cell i
			if t.indirectkey() { // the key is stored indirectly, as a pointer
				k = *((*unsafe.Pointer)(k)) // dereference
			}
			if t.key.equal(key, k) { // compare keys (algorithms in src/runtime/alg.go)
				e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) // address of the value/elem
				if t.indirectelem() { // dereference an indirect elem
					e = *((*unsafe.Pointer)(e))
				}
				return e // pointer to the value
			}
		}
	}
	return unsafe.Pointer(&zeroVal[0]) // not found: start address of the zeroed region
}
```

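How map access works

- If hmap is nil or holds zero elements, a pointer to the zeroed region is returned as the zero value. In other words, an empty (even nil) map can be read, but the result is the "zero value"

- hash := t.hasher(key, uintptr(h.hash0)) computes the hash

- m := bucketMask(h.B) computes the mask for the bucket index

- hash&m gives the bucket index. Because the bucket count is a power of two, this equals hash % (m + 1), i.e. hash % 2^B

- b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.bucketsize))): start of the bucket array + offset = start address of the target bucket

- If hmap has old buckets and the growth is a doubling (not same-size) growth, m is shifted right by one bit to get the index in the old, half-size array

- If that old bucket has not been evacuated yet, the lookup searches it instead: b = oldb

- top := tophash(hash) gets the top 8 bits of the hash

- The search uses two nested loops. The outer loop walks the bucket and its overflow buckets, which form a linked list. The inner loop walks the 8 cells of a bucket and compares tophash values. If a cell's tophash is emptyRest, every later cell and overflow bucket is empty, so both loops stop. On a tophash match, the key's offset is computed, the stored key is compared with the given key, and if they are equal the elem's offset is computed and returned.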
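The two index computations in the list above can be checked in a few lines: masking with 2^B - 1 equals taking the remainder modulo 2^B, and during a doubling growth the old index uses one bit less. bucketIndexes is a hypothetical helper working on plain uintptr values.

```go
package main

import "fmt"

// bucketIndexes returns the bucket index in the new array (hash & m) and in the
// old, half-size array (hash & (m >> 1)), as mapaccess1 computes them.
func bucketIndexes(hash uintptr, B uint8) (newIdx, oldIdx uintptr) {
	m := uintptr(1)<<B - 1 // bucketMask(B)
	return hash & m, hash & (m >> 1)
}

func main() {
	hash := uintptr(0b1011_0110) // 182
	n, o := bucketIndexes(hash, 3)
	fmt.Println(n, o)             // 6 2
	fmt.Println(hash%8 == hash&7) // true: & (2^B - 1) equals % 2^B
}
```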

src/runtime/alg.go declares the hash functions (and related algorithms) for concrete types, for example:

```go
// in asm_*.s
func memhash(p unsafe.Pointer, h, s uintptr) uintptr
func memhash32(p unsafe.Pointer, h uintptr) uintptr
func memhash64(p unsafe.Pointer, h uintptr) uintptr
func strhash(p unsafe.Pointer, h uintptr) uintptr

func strhashFallback(a unsafe.Pointer, h uintptr) uintptr {
	x := (*stringStruct)(a)
	return memhashFallback(x.str, h, uintptr(x.len))
}
```

mapaccess2 implements v, ok := m[key]. It returns the value for key and whether key is present in the map. It works the same way as mapaccess1, except that it also returns a bool.

```go
func mapaccess2(t *maptype, h *hmap, key unsafe.Pointer) (unsafe.Pointer, bool) {
	if raceenabled && h != nil {
		callerpc := getcallerpc()
		pc := abi.FuncPCABIInternal(mapaccess2)
		racereadpc(unsafe.Pointer(h), callerpc, pc)
		raceReadObjectPC(t.key, key, callerpc, pc)
	}
	if msanenabled && h != nil {
		msanread(key, t.key.size)
	}
	if asanenabled && h != nil {
		asanread(key, t.key.size)
	}
	if h == nil || h.count == 0 {
		if t.hashMightPanic() {
			t.hasher(key, 0) // see issue 23734
		}
		return unsafe.Pointer(&zeroVal[0]), false
	}
	if h.flags&hashWriting != 0 {
		fatal("concurrent map read and map write")
	}
	hash := t.hasher(key, uintptr(h.hash0))
	m := bucketMask(h.B)
	b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.bucketsize)))
	if c := h.oldbuckets; c != nil {
		if !h.sameSizeGrow() {
			// There used to be half as many buckets; mask down one more power of two.
			m >>= 1
		}
		oldb := (*bmap)(add(c, (hash&m)*uintptr(t.bucketsize)))
		if !evacuated(oldb) {
			b = oldb
		}
	}
	top := tophash(hash)
bucketloop:
	for ; b != nil; b = b.overflow(t) {
		for i := uintptr(0); i < bucketCnt; i++ {
			if b.tophash[i] != top {
				if b.tophash[i] == emptyRest {
					break bucketloop
				}
				continue
			}
			k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))
			if t.indirectkey() {
				k = *((*unsafe.Pointer)(k))
			}
			if t.key.equal(key, k) {
				e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))
				if t.indirectelem() {
					e = *((*unsafe.Pointer)(e))
				}
				return e, true // present
			}
		}
	}
	return unsafe.Pointer(&zeroVal[0]), false // absent
}
```
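From user code, the difference between the two access functions shows up only in the comma-ok form. Note that the compiler may lower these expressions to specialized fast-path variants, such as mapaccess1_faststr for string keys, rather than to mapaccess1/mapaccess2 themselves. The example below, built around a hypothetical lookup helper, demonstrates only the language-level semantics.

```go
package main

import "fmt"

// lookup reads key with both access forms: v := m[key] and v, ok := m[key].
func lookup(m map[string]int, key string) (v1, v2 int, ok bool) {
	v1 = m[key]     // mapaccess1 family: zero value if absent
	v2, ok = m[key] // mapaccess2 family: also reports presence
	return
}

func main() {
	m := map[string]int{"a": 0, "b": 2}
	fmt.Println(lookup(m, "a")) // 0 0 true: present, the value happens to be zero
	fmt.Println(lookup(m, "z")) // 0 0 false: absent, zero value returned
}
```

The comma-ok form is the only way to tell a stored zero value apart from a missing key.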

mapaccessK returns both the key and the elem; it works the same way as above

```go
// returns both key and elem. Used by map iterator
func mapaccessK(t *maptype, h *hmap, key unsafe.Pointer) (unsafe.Pointer, unsafe.Pointer) {
	if h == nil || h.count == 0 {
		return nil, nil
	}
	hash := t.hasher(key, uintptr(h.hash0))
	m := bucketMask(h.B)
	b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.bucketsize)))
	if c := h.oldbuckets; c != nil {
		if !h.sameSizeGrow() {
			// There used to be half as many buckets; mask down one more power of two.
			m >>= 1
		}
		oldb := (*bmap)(add(c, (hash&m)*uintptr(t.bucketsize)))
		if !evacuated(oldb) {
			b = oldb
		}
	}
	top := tophash(hash)
bucketloop:
	for ; b != nil; b = b.overflow(t) {
		for i := uintptr(0); i < bucketCnt; i++ {
			if b.tophash[i] != top {
				if b.tophash[i] == emptyRest {
					break bucketloop
				}
				continue
			}
			k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))
			if t.indirectkey() {
				k = *((*unsafe.Pointer)(k))
			}
			if t.key.equal(key, k) {
				e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))
				if t.indirectelem() {
					e = *((*unsafe.Pointer)(e))
				}
				return k, e
			}
		}
	}
	return nil, nil
}
```

mapaccess1_fat and mapaccess2_fat wrap mapaccess1. The caller passes its own zero value, which is returned instead of zeroVal when the key is absent. The compiler uses these variants when the elem type is larger than maxZero bytes.

```go
func mapaccess1_fat(t *maptype, h *hmap, key, zero unsafe.Pointer) unsafe.Pointer {
	e := mapaccess1(t, h, key)
	if e == unsafe.Pointer(&zeroVal[0]) {
		return zero
	}
	return e
}

func mapaccess2_fat(t *maptype, h *hmap, key, zero unsafe.Pointer) (unsafe.Pointer, bool) {
	e := mapaccess1(t, h, key)
	if e == unsafe.Pointer(&zeroVal[0]) {
		return zero, false
	}
	return e, true
}
```

mapassign implements m[key] = value. It returns the address of the elem slot for key, and the compiled code then stores the value there.

```go
// Like mapaccess, but allocates a slot for the key if it is not present in the map.
func mapassign(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {
	if h == nil { // writing to a nil map panics
		panic(plainError("assignment to entry in nil map"))
	}
	if raceenabled {
		callerpc := getcallerpc()
		pc := abi.FuncPCABIInternal(mapassign)
		racewritepc(unsafe.Pointer(h), callerpc, pc)
		raceReadObjectPC(t.key, key, callerpc, pc)
	}
	if msanenabled {
		msanread(key, t.key.size)
	}
	if asanenabled {
		asanread(key, t.key.size)
	}
	if h.flags&hashWriting != 0 { // another goroutine is writing: fatal error
		fatal("concurrent map writes")
	}
	hash := t.hasher(key, uintptr(h.hash0)) // compute the hash

	// Set hashWriting after calling t.hasher, since t.hasher may panic,
	// in which case we have not actually done a write.
	h.flags ^= hashWriting // mark the map as being written

	if h.buckets == nil { // no buckets yet: allocate a single bucket
		h.buckets = newobject(t.bucket) // newarray(t.bucket, 1)
	}

again:
	bucket := hash & bucketMask(h.B) // bucket index
	if h.growing() { // the map is growing
		growWork(t, h, bucket) // evacuate the old bucket this key uses first (plus one more)
	}
	b := (*bmap)(add(h.buckets, bucket*uintptr(t.bucketsize))) // bucket address
	top := tophash(hash)                                        // tophash of the key

	var inserti *uint8         // where the tophash will be written (&b.tophash[i])
	var insertk unsafe.Pointer // where the key will be written
	var elem unsafe.Pointer    // where the elem will be written
bucketloop:
	for {
		for i := uintptr(0); i < bucketCnt; i++ {
			if b.tophash[i] != top {
				if isEmpty(b.tophash[i]) && inserti == nil { // first empty cell: remember it as the insert position
					inserti = &b.tophash[i]
					insertk = add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))
					elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))
				}
				if b.tophash[i] == emptyRest { // nothing after this cell, overflow buckets included: stop searching
					break bucketloop
				}
				continue // otherwise check the next cell
			}
			k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))
			if t.indirectkey() {
				k = *((*unsafe.Pointer)(k))
			}
			if !t.key.equal(key, k) { // same tophash but a different key: keep looking
				continue
			}
			// already have a mapping for key. Update it.
			if t.needkeyupdate() { // overwrite the stored key if the key type requires it
				typedmemmove(t.key, k, key)
			}
			elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) // address of the elem
			goto done // jump to the exit path
		}
		ovf := b.overflow(t) // this bucket is exhausted: continue in its overflow bucket
		if ovf == nil {      // end of the chain: a new overflow bucket or growth may be needed
			break
		}
		b = ovf // keep looking for the key or a free cell in the overflow bucket
	}

	// Did not find mapping for key. Allocate new cell & add entry.

	// If we hit the max load factor or we have too many overflow buckets,
	// and we're not already in the middle of growing, start growing.
	if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) { // not growing, and over the load factor or too many overflow buckets
		hashGrow(t, h) // start growing
		goto again     // Growing the table invalidates everything, so try again
	}

	if inserti == nil { // the bucket and all of its overflow buckets are full
		// The current bucket and all the overflow buckets connected to it are full, allocate a new one.
		newb := h.newoverflow(t, b) // allocate an overflow bucket and chain it after b
		inserti = &newb.tophash[0]  // recompute the tophash, key and elem addresses
		insertk = add(unsafe.Pointer(newb), dataOffset)
		elem = add(insertk, bucketCnt*uintptr(t.keysize))
	}

	// store new key/elem at insert position
	if t.indirectkey() { // indirect key: allocate storage and store its pointer in the cell
		kmem := newobject(t.key)
		*(*unsafe.Pointer)(insertk) = kmem
		insertk = kmem
	}
	if t.indirectelem() { // same for the elem
		vmem := newobject(t.elem)
		*(*unsafe.Pointer)(elem) = vmem
	}
	typedmemmove(t.key, insertk, key)
	*inserti = top // write the tophash
	h.count++      // one more element

done:
	if h.flags&hashWriting == 0 { // the writing flag was cleared by someone else: concurrent writes
		fatal("concurrent map writes")
	}
	h.flags &^= hashWriting // clear the writing flag
	if t.indirectelem() {   // indirect elem: dereference
		elem = *((*unsafe.Pointer)(elem))
	}
	return elem // the caller stores the value into the returned elem slot
}
```

mapdelete implements delete(m, key)

```go
func mapdelete(t *maptype, h *hmap, key unsafe.Pointer) {
	if raceenabled && h != nil {
		callerpc := getcallerpc()
		pc := abi.FuncPCABIInternal(mapdelete)
		racewritepc(unsafe.Pointer(h), callerpc, pc)
		raceReadObjectPC(t.key, key, callerpc, pc)
	}
	if msanenabled && h != nil {
		msanread(key, t.key.size)
	}
	if asanenabled && h != nil {
		asanread(key, t.key.size)
	}
	if h == nil || h.count == 0 {
		if t.hashMightPanic() {
			t.hasher(key, 0) // see issue 23734
		}
		return
	}
	if h.flags&hashWriting != 0 { // delete also sets the writing flag; if another goroutine is writing, fatal error
		fatal("concurrent map writes")
	}

	hash := t.hasher(key, uintptr(h.hash0)) // compute the hash

	// Set hashWriting after calling t.hasher, since t.hasher may panic,
	// in which case we have not actually done a write (delete).
	h.flags ^= hashWriting // set the writing flag

	bucket := hash & bucketMask(h.B) // bucket index
	if h.growing() {                 // the map is growing
		growWork(t, h, bucket) // evacuate the old bucket about to be touched first
	}
	b := (*bmap)(add(h.buckets, bucket*uintptr(t.bucketsize))) // bucket address
	bOrig := b                                                  // remember the head of the chain for the backward walk below
	top := tophash(hash)                                        // top 8 bits of the hash
search:
	for ; b != nil; b = b.overflow(t) { // outer loop: the bucket and its overflow chain
		for i := uintptr(0); i < bucketCnt; i++ { // inner loop: the 8 cells of a bucket
			if b.tophash[i] != top {
				if b.tophash[i] == emptyRest { // the key is not in the map
					break search
				}
				continue
			}
			// tophash matches
			k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize)) // address of the key
			k2 := k
			if t.indirectkey() { // dereference an indirect key
				k2 = *((*unsafe.Pointer)(k2))
			}
			if !t.key.equal(key, k2) { // same tophash but a different key
				continue
			}
			// Only clear key if there are pointers in it.
			if t.indirectkey() { // clear the key's memory
				*(*unsafe.Pointer)(k) = nil
			} else if t.key.ptrdata != 0 {
				memclrHasPointers(k, t.key.size)
			}
			e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize)) // address of the elem
			if t.indirectelem() { // clear the elem's memory
				*(*unsafe.Pointer)(e) = nil
			} else if t.elem.ptrdata != 0 {
				memclrHasPointers(e, t.elem.size)
			} else {
				memclrNoHeapPointers(e, t.elem.size)
			}
			b.tophash[i] = emptyOne // mark the cell empty
			// If the bucket now ends in a bunch of emptyOne states,
			// change those to emptyRest states.
			// It would be nice to make this a separate function, but
			// for loops are not currently inlineable.
			if i == bucketCnt-1 { // last cell of this bucket
				if b.overflow(t) != nil && b.overflow(t).tophash[0] != emptyRest {
					goto notLast // the next overflow bucket still has entries
				}
			} else {
				if b.tophash[i+1] != emptyRest { // the next cell is not emptyRest
					goto notLast
				}
			}
			for { // walk backwards, turning the trailing emptyOne cells into emptyRest
				b.tophash[i] = emptyRest // mark the current cell emptyRest
				if i == 0 {              // reached cell 0
					if b == bOrig { // back at the first bucket of the chain: done
						break // beginning of initial bucket, we're done.
					}
					// Find previous bucket, continue at its last entry.
					c := b
					for b = bOrig; b.overflow(t) != c; b = b.overflow(t) { // find the bucket before b
					}
					i = bucketCnt - 1 // continue from its last cell
				} else {
					i-- // move back one cell
				}
				if b.tophash[i] != emptyOne { // stop at the first cell that is not emptyOne
					break
				}
			}
		notLast:
			h.count-- // one fewer element
			// Reset the hash seed to make it more difficult for attackers to
			// repeatedly trigger hash collisions. See issue 25237.
			if h.count == 0 { // the map is now empty: pick a new hash seed
				h.hash0 = fastrand()
			}
			break search
		}
	}

	if h.flags&hashWriting == 0 { // detect concurrent writers
		fatal("concurrent map writes")
	}
	h.flags &^= hashWriting // clear the writing flag
}
```
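The emptyOne/emptyRest bookkeeping at the end of mapdelete is the subtle part. The sketch below models it on a single bucket with no overflow buckets, which is a simplifying assumption: the real code also follows the overflow chain backwards through bOrig. deleteSlot is a hypothetical name.

```go
package main

import "fmt"

const (
	bucketCnt = 8
	emptyRest = 0
	emptyOne  = 1
)

// deleteSlot mirrors the tophash updates in mapdelete for one bucket without
// overflow buckets: mark cell i emptyOne, and if nothing live follows it, walk
// backwards turning the trailing run of emptyOne cells into emptyRest.
func deleteSlot(tophash *[bucketCnt]uint8, i int) {
	tophash[i] = emptyOne
	if i < bucketCnt-1 && tophash[i+1] != emptyRest {
		return // a later cell may still be live: keep emptyOne (the notLast case)
	}
	for {
		tophash[i] = emptyRest
		if i == 0 {
			return
		}
		i--
		if tophash[i] != emptyOne {
			return
		}
	}
}

func main() {
	th := [bucketCnt]uint8{7, 1, 9, 0, 0, 0, 0, 0} // cell 1 was deleted earlier
	deleteSlot(&th, 2)
	fmt.Println(th) // [7 0 0 0 0 0 0 0]

	th = [bucketCnt]uint8{7, 8, 9, 10, 0, 0, 0, 0}
	deleteSlot(&th, 1)
	fmt.Println(th) // [7 1 9 10 0 0 0 0]
}
```

Keeping emptyRest accurate is what lets lookups and inserts stop scanning early instead of always walking the whole chain.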

mapclear deletes all keys from a map

```go
// mapclear deletes all keys from a map.
func mapclear(t *maptype, h *hmap) {
	if raceenabled && h != nil {
		callerpc := getcallerpc()
		pc := abi.FuncPCABIInternal(mapclear)
		racewritepc(unsafe.Pointer(h), callerpc, pc)
	}

	if h == nil || h.count == 0 {
		return
	}

	if h.flags&hashWriting != 0 {
		fatal("concurrent map writes")
	}

	h.flags ^= hashWriting // set the writing flag

	h.flags &^= sameSizeGrow // reset growth state and counters
	h.oldbuckets = nil
	h.nevacuate = 0
	h.noverflow = 0
	h.count = 0

	// Reset the hash seed to make it more difficult for attackers to
	// repeatedly trigger hash collisions. See issue 25237.
	h.hash0 = fastrand() // new hash seed

	// Keep the mapextra allocation but clear any extra information.
	if h.extra != nil { // clear the overflow bookkeeping
		*h.extra = mapextra{}
	}

	// makeBucketArray clears the memory pointed to by h.buckets
	// and recovers any overflow buckets by generating them
	// as if h.buckets was newly alloced.
	_, nextOverflow := makeBucketArray(t, h.B, h.buckets) // clear the buckets and regenerate nextOverflow
	if nextOverflow != nil {
		// If overflow buckets are created then h.extra
		// will have been allocated during initial bucket creation.
		h.extra.nextOverflow = nextOverflow
	}

	if h.flags&hashWriting == 0 {
		fatal("concurrent map writes")
	}
	h.flags &^= hashWriting // clear the writing flag
}
```

hashGrow 哈希扩容,触发桶的迁移

func hashGrow(t *maptype, h *hmap) {

// If we've hit the load factor, get bigger.

// Otherwise, there are too many overflow buckets,

// so keep the same number of buckets and "grow" laterally.

bigger := uint8(1)

if !overLoadFactor(h.count+1, h.B) { // 等量扩容

bigger = 0

h.flags |= sameSizeGrow

}

oldbuckets := h.buckets

newbuckets, nextOverflow := makeBucketArray(t, h.B+bigger, nil) //重新规划新桶和溢出桶

flags := h.flags &^ (iterator | oldIterator) // 新flag 是 清空 迭代器和旧迭代器标记 的值

if h.flags&iterator != 0 { // 正在被迭代

flags |= oldIterator // 加上旧迭代器的标记

}

// commit the grow (atomic wrt gc)

h.B += bigger // B += 1 or 0

h.flags = flags

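h.oldbuckets = oldbuckets // the current buckets become oldbuckets

h.buckets = newbuckets // the newly allocated array becomes buckets

h.nevacuate = 0 // reset evacuation progress: old bucket 0 is next

h.noverflow = 0 // reset the overflow bucket count

if h.extra != nil && h.extra.overflow != nil { // move the overflow bucket slice to the old generation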

// Promote current overflow buckets to the old generation.

if h.extra.oldoverflow != nil {

throw("oldoverflow is not nil")

}

h.extra.oldoverflow = h.extra.overflow

h.extra.overflow = nil

}

if nextOverflow != nil { // set nextOverflow, the pointer to the free preallocated overflow buckets

if h.extra == nil {

h.extra = new(mapextra)

}

h.extra.nextOverflow = nextOverflow

}

// the actual copying of the hash table data is done incrementally

// by growWork() and evacuate().

}
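hashGrow itself only chooses between doubling and a same-size grow. The sketch below reproduces that choice in isolation. It assumes the runtime's usual constants (8 slots per bucket, load factor 6.5 written as 13/2) and the overLoadFactor formula from the same file, which is not shown in this section. `growTarget` is a hypothetical helper. In the runtime, hashGrow is only reached from mapassign after a grow has already been judged necessary, because of either the load factor or too many overflow buckets.

```go
package main

import "fmt"

// Constants assumed to mirror the classic bucket-based runtime map:
// 8 slots per bucket, average load factor 6.5 = 13/2.
const (
	bucketCnt     = 8
	loadFactorNum = 13
	loadFactorDen = 2
)

// overLoadFactor reports whether count items in 1<<B buckets exceed
// the load factor, following the runtime's formula.
func overLoadFactor(count int, B uint8) bool {
	return count > bucketCnt && uintptr(count) > loadFactorNum*((uintptr(1)<<B)/loadFactorDen)
}

// growTarget mimics hashGrow's choice when a grow has been triggered while
// inserting the (count+1)-th element: double if over the load factor,
// otherwise keep B and do a same-size grow.
func growTarget(count int, B uint8) (newB uint8, sameSize bool) {
	if !overLoadFactor(count+1, B) {
		return B, true
	}
	return B + 1, false
}

func main() {
	fmt.Println(growTarget(8, 0))  // 1 false: the 9th key overflows a single bucket
	fmt.Println(growTarget(10, 3)) // 3 true: 11 keys in 8 buckets is under 6.5 per bucket
	fmt.Println(growTarget(52, 3)) // 4 false: 53 > 6.5*8 = 52
}
```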

tooManyOverflowBuckets: once the number of overflow buckets reaches the threshold, a same-size grow is triggered; the condition is noverflow >= 1 << min(B, 15)

// tooManyOverflowBuckets reports whether noverflow buckets is too many for a map with 1<<B buckets.

// Note that most of these overflow buckets must be in sparse use;

// if use was dense, then we'd have already triggered regular map growth.

func tooManyOverflowBuckets(noverflow uint16, B uint8) bool {

// If the threshold is too low, we do extraneous work.

// If the threshold is too high, maps that grow and shrink can hold on to lots of unused memory.

// "too many" means (approximately) as many overflow buckets as regular buckets.

// See incrnoverflow for more details.

if B > 15 {

B = 15

}

// The compiler doesn't see here that B < 16; mask B to generate shorter shift code.

return noverflow >= uint16(1)<<(B&15) // noverflow >= 1 << min(B, 15)

}
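To make the threshold concrete, the predicate can be copied out of the runtime and evaluated for a few values of B. `threshold` is a hypothetical helper added for illustration. For large B the runtime's noverflow counter is itself approximate (see incrnoverflow), so in practice the threshold is approximate too.

```go
package main

import "fmt"

// tooManyOverflowBuckets copies the runtime predicate: a same-size grow is
// due once the overflow-bucket count reaches 1<<min(B, 15).
func tooManyOverflowBuckets(noverflow uint16, B uint8) bool {
	if B > 15 {
		B = 15
	}
	return noverflow >= uint16(1)<<(B&15)
}

// threshold returns the smallest noverflow that triggers a same-size grow.
func threshold(B uint8) uint16 {
	if B > 15 {
		B = 15
	}
	return uint16(1) << B
}

func main() {
	for _, B := range []uint8{0, 4, 15, 20} {
		fmt.Printf("B=%d threshold=%d\n", B, threshold(B))
	}
	// B=0 threshold=1
	// B=4 threshold=16
	// B=15 threshold=32768
	// B=20 threshold=32768
}
```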

growing: whether the map is growing; checking whether oldbuckets is nil is enough

// growing reports whether h is growing. The growth may be to the same size or bigger.

func (h *hmap) growing() bool {

return h.oldbuckets != nil

}

sameSizeGrow: whether the current growth is a same-size grow

// sameSizeGrow reports whether the current growth is to a map of the same size.

func (h *hmap) sameSizeGrow() bool {

return h.flags&sameSizeGrow != 0

}

noldbuckets: the number of old buckets; half the new count for a doubling grow, equal to it for a same-size grow

// noldbuckets calculates the number of buckets prior to the current map growth.

func (h *hmap) noldbuckets() uintptr {

oldB := h.B

if !h.sameSizeGrow() {

oldB-- // exponent - 1, i.e. half as many buckets

}

return bucketShift(oldB) // 1 << oldB

}

oldbucketmask: the mask for old bucket indexes

// oldbucketmask provides a mask that can be applied to calculate n % noldbuckets().

func (h *hmap) oldbucketmask() uintptr {

return h.noldbuckets() - 1

}
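The mask relies on the bit operations introduced at the start of this article: for a power-of-two count n, hash & (n-1) equals hash % n. Here is a standalone sketch in which B and the same-size flag are passed in as plain parameters instead of being read from an hmap.

```go
package main

import "fmt"

// noldbuckets mirrors hmap.noldbuckets: during a doubling grow the old
// table has half as many buckets as the current one (exponent B-1);
// during a same-size grow it has the same number.
func noldbuckets(B uint8, sameSize bool) uintptr {
	oldB := B
	if !sameSize {
		oldB--
	}
	return uintptr(1) << oldB
}

// oldbucketmask is noldbuckets-1, so hash&mask == hash%noldbuckets.
func oldbucketmask(B uint8, sameSize bool) uintptr {
	return noldbuckets(B, sameSize) - 1
}

func main() {
	const hash uintptr = 37 // 0b100101
	B := uint8(5)           // 32 buckets after doubling from 16
	mask := oldbucketmask(B, false)
	fmt.Println(noldbuckets(B, false), mask)           // 16 15
	fmt.Println(hash&mask, hash%noldbuckets(B, false)) // 5 5
}
```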

growWork

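Data is migrated incrementally rather than all at once. The idea resembles copy-on-write (COW): old buckets are moved only when a write touches the map, so growWork is called from both insertion (mapassign) and deletion (mapdelete).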

func growWork(t *maptype, h *hmap, bucket uintptr) {

// make sure we evacuate the oldbucket corresponding

// to the bucket we're about to use

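evacuate(t, h, bucket&h.oldbucketmask()) // first evacuate the old bucket that feeds the bucket about to be used

// evacuate one more oldbucket to make progress on growing

if h.growing() { // then evacuate the bucket at h.nevacuate to advance progress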

evacuate(t, h, h.nevacuate)

}

}
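The incremental scheme is easier to see in a toy model. The sketch below is hypothetical: `toyGrow` tracks only which old buckets have been evacuated, ignores keys and overflow chains, and drops the 1024-step cap of advanceEvacuationMark. Each write evacuates the old bucket it needs plus the one at nevacuate, so in this model a grow of n old buckets finishes after at most n writes.

```go
package main

import "fmt"

// toyGrow models only the evacuation bookkeeping of an incremental grow.
type toyGrow struct {
	evacuatedOld []bool // one flag per old bucket; nil once the grow is done
	nevacuate    int    // first old bucket not yet known to be evacuated
}

func (g *toyGrow) growing() bool { return g.evacuatedOld != nil }

func (g *toyGrow) evacuate(oldbucket int) {
	g.evacuatedOld[oldbucket] = true
	if oldbucket == g.nevacuate {
		g.advance()
	}
}

// advance moves nevacuate past every evacuated bucket and drops the old
// table when all of them are done (like advanceEvacuationMark, minus the cap).
func (g *toyGrow) advance() {
	g.nevacuate++
	for g.nevacuate < len(g.evacuatedOld) && g.evacuatedOld[g.nevacuate] {
		g.nevacuate++
	}
	if g.nevacuate == len(g.evacuatedOld) {
		g.evacuatedOld = nil
	}
}

// growWork runs on each write to new bucket `bucket` while growing:
// evacuate the old bucket feeding it, then one more to make progress.
func (g *toyGrow) growWork(bucket int) {
	if !g.growing() {
		return
	}
	g.evacuate(bucket & (len(g.evacuatedOld) - 1))
	if g.growing() {
		g.evacuate(g.nevacuate)
	}
}

func main() {
	g := &toyGrow{evacuatedOld: make([]bool, 4)} // doubling 4 -> 8 buckets
	g.growWork(6)                                 // evacuates old 2, then old 0
	fmt.Println(g.growing(), g.nevacuate)         // true 1
	g.growWork(1)                                 // evacuates old 1, then old 3
	fmt.Println(g.growing())                      // false
}
```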

bucketEvacuated: reports whether an old bucket has been evacuated

func bucketEvacuated(t *maptype, h *hmap, bucket uintptr) bool {

b := (*bmap)(add(h.oldbuckets, bucket*uintptr(t.bucketsize)))

return evacuated(b)

}

evacuate: the bucket evacuation process

// evacDst is an evacuation destination.

type evacDst struct { // evacuation destination

b *bmap // current destination bucket

i int // key/elem index into b (next free slot in the destination bucket)

k unsafe.Pointer // pointer to current key storage

e unsafe.Pointer // pointer to current elem storage

}

func evacuated(b *bmap) bool { // reports whether b has been evacuated

h := b.tophash[0]

return h > emptyOne && h < minTopHash

}
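evacuated only inspects tophash[0] because the runtime reserves the smallest tophash values as state markers. Here is a sketch of those markers and of the tophash computation. It assumes the constants of the classic bucket-based map shown in this article and a 64-bit hash. The marker values are copied, and the helpers are simplified stand-ins, not the runtime's own functions.

```go
package main

import "fmt"

// tophash marker values of the classic bucket-based runtime map.
const (
	emptyRest      = 0 // this slot and all later slots/overflow buckets are empty
	emptyOne       = 1 // this slot is empty
	evacuatedX     = 2 // entry moved to the x (low) half of the new table
	evacuatedY     = 3 // entry moved to the y (high) half
	evacuatedEmpty = 4 // slot was empty and its bucket has been evacuated
	minTopHash     = 5 // smallest tophash of a real entry
)

// tophash takes the top 8 bits of a 64-bit hash and shifts values that
// would collide with the markers above into the valid range.
func tophash(hash uint64) uint8 {
	top := uint8(hash >> 56)
	if top < minTopHash {
		top += minTopHash
	}
	return top
}

// evacuated checks a bucket's first tophash, like the runtime helper.
func evacuated(tophash0 uint8) bool {
	return tophash0 > emptyOne && tophash0 < minTopHash
}

func main() {
	fmt.Println(tophash(0x03<<56), tophash(0xAB<<56))       // 8 171
	fmt.Println(evacuated(evacuatedY), evacuated(emptyOne)) // true false
	// evacuatedX and evacuatedY differ only in the low bit, so
	// evacuatedX + useY picks the marker for destination useY (0 or 1).
	fmt.Println(evacuatedX^1 == evacuatedY) // true
}
```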

func evacuate(t *maptype, h *hmap, oldbucket uintptr) {

b := (*bmap)(add(h.oldbuckets, oldbucket*uintptr(t.bucketsize))) // address of the old bucket to evacuate

newbit := h.noldbuckets() // number of old buckets

if !evacuated(b) { // not evacuated yet

// TODO: reuse overflow buckets instead of using new ones, if there

// is no iterator using the old buckets. (If !oldIterator.)

// xy contains the x and y (low and high) evacuation destinations.

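var xy [2]evacDst // a same-size grow uses only x (the low destination); a doubling grow uses both x and y (low and high), so the entries of one old bucket are split between two new buckets according to bit oldB of the hash: 0 -> x, 1 -> y. For NaN keys the low bit of tophash decides instead

x := &xy[0] // x is always used

x.b = (*bmap)(add(h.buckets, oldbucket*uintptr(t.bucketsize))) // x has the same index as the old bucket

x.k = add(unsafe.Pointer(x.b), dataOffset) // address of the first key

x.e = add(x.k, bucketCnt*uintptr(t.keysize)) // address of the first elem

if !h.sameSizeGrow() { // doubling grow: y is used too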

// Only calculate y pointers if we're growing bigger.

// Otherwise GC can see bad pointers.

y := &xy[1]

y.b = (*bmap)(add(h.buckets, (oldbucket+newbit)*uintptr(t.bucketsize))) // index = old bucket index + number of old buckets

y.k = add(unsafe.Pointer(y.b), dataOffset)

y.e = add(y.k, bucketCnt*uintptr(t.keysize))

}

for ; b != nil; b = b.overflow(t) { // outer loop: the bucket and its overflow chain

k := add(unsafe.Pointer(b), dataOffset) // address of the first key

e := add(k, bucketCnt*uintptr(t.keysize)) // address of the first elem

for i := 0; i < bucketCnt; i, k, e = i+1, add(k, uintptr(t.keysize)), add(e, uintptr(t.elemsize)) { // inner loop: the 8 slots of the bucket

top := b.tophash[i]

if isEmpty(top) {

b.tophash[i] = evacuatedEmpty // empty slot: mark it as an evacuated empty slot

continue

}

if top < minTopHash {

throw("bad map state")

}

k2 := k

if t.indirectkey() {

k2 = *((*unsafe.Pointer)(k2))

}

var useY uint8 // zero value 0 means destination x

if !h.sameSizeGrow() { // not same-size, i.e. doubling

// Compute hash to make our evacuation decision (whether we need

// to send this key/elem to bucket x or bucket y).

hash := t.hasher(k2, uintptr(h.hash0)) // recompute the full hash of the (dereferenced) key; the bucket only stores its top 8 bits

if h.flags&iterator != 0 && !t.reflexivekey() && !t.key.equal(k2, k2) { // NaN keys (key != key)

// If key != key (NaNs), then the hash could be (and probably

// will be) entirely different from the old hash. Moreover,

// it isn't reproducible. Reproducibility is required in the

// presence of iterators, as our evacuation decision must

// match whatever decision the iterator made.

// Fortunately, we have the freedom to send these keys either

// way. Also, tophash is meaningless for these kinds of keys.

// We let the low bit of tophash drive the evacuation decision.

// We recompute a new random tophash for the next level so

// these keys will get evenly distributed across all buckets

// after multiple grows.

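useY = top & 1 // use the low bit of the old tophash

top = tophash(hash) // new tophash from the fresh (random) hash

} else {

if hash&newbit != 0 { // bit oldB of the hash (newbit == number of old buckets) selects y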

useY = 1

}

}

}

if evacuatedX+1 != evacuatedY || evacuatedX^1 != evacuatedY {

throw("bad evacuatedN")

}

b.tophash[i] = evacuatedX + useY // evacuatedX + 1 == evacuatedY; record in the old slot where the entry went

dst := &xy[useY] // evacuation destination

if dst.i == bucketCnt { // destination bucket is full

dst.b = h.newoverflow(t, dst.b) // chain a new overflow bucket

dst.i = 0 // start writing at slot 0 of the overflow bucket

dst.k = add(unsafe.Pointer(dst.b), dataOffset) // first key address

dst.e = add(dst.k, bucketCnt*uintptr(t.keysize)) // first elem address

}

dst.b.tophash[dst.i&(bucketCnt-1)] = top // mask dst.i as an optimization, to avoid a bounds check; store the tophash

if t.indirectkey() { // key stored indirectly (as a pointer)

*(*unsafe.Pointer)(dst.k) = k2 // copy pointer

} else {

typedmemmove(t.key, dst.k, k) // copy the key value

}

if t.indirectelem() { // same for the elem

*(*unsafe.Pointer)(dst.e) = *(*unsafe.Pointer)(e)

} else {

typedmemmove(t.elem, dst.e, e)

}

dst.i++ // advance to the next destination slot

// These updates might push these pointers past the end of the

// key or elem arrays. That's ok, as we have the overflow pointer

// at the end of the bucket to protect against pointing past the

// end of the bucket.

dst.k = add(dst.k, uintptr(t.keysize)) // next key slot in dst

dst.e = add(dst.e, uintptr(t.elemsize)) // next elem slot in dst

}

}

// Unlink the overflow buckets & clear key/elem to help GC.

if h.flags&oldIterator == 0 && t.bucket.ptrdata != 0 { // evacuated: if no iterator uses the old buckets and they hold pointers, clear keys/elems to help the GC

b := add(h.oldbuckets, oldbucket*uintptr(t.bucketsize))

// Preserve b.tophash because the evacuation

// state is maintained there.

ptr := add(b, dataOffset)

n := uintptr(t.bucketsize) - dataOffset

memclrHasPointers(ptr, n)

}

}

if oldbucket == h.nevacuate { // this old bucket is the one at the evacuation mark

advanceEvacuationMark(h, t, newbit) // advance the evacuation progress

}

}
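The x/y decision comes down to one bit. When the table doubles from 1<<oldB buckets, a key's new index is either its old index (x) or its old index + newbit (y), and bit oldB of the hash chooses between them. The hypothetical `destination` helper below shows this for ordinary keys; NaN keys are left out. The new index always equals hash & (2*newbit - 1), which is the bucket the key would have landed in if the table had been built at the larger size from the start.

```go
package main

import "fmt"

// destination returns where evacuate would send a key with this hash when
// doubling from 1<<oldB buckets: x keeps the old index, y adds newbit
// (the old bucket count).
func destination(hash uintptr, oldB uint8) (oldbucket, newbucket uintptr, useY bool) {
	newbit := uintptr(1) << oldB
	oldbucket = hash & (newbit - 1)
	useY = hash&newbit != 0
	newbucket = oldbucket
	if useY {
		newbucket += newbit
	}
	return
}

func main() {
	for _, h := range []uintptr{0b0001, 0b0101, 0b1110} {
		ob, nb, y := destination(h, 2) // 4 old buckets -> 8 new buckets
		fmt.Printf("hash=%04b old=%d new=%d useY=%v\n", h, ob, nb, y)
	}
}
```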

advanceEvacuationMark: advances the evacuation progress and releases the old buckets once everything has been moved

func advanceEvacuationMark(h *hmap, t *maptype, newbit uintptr) {

h.nevacuate++ // progress + 1

// Experiments suggest that 1024 is overkill by at least an order of magnitude.

// Put it in there as a safeguard anyway, to ensure O(1) behavior.

stop := h.nevacuate + 1024

if stop > newbit {

stop = newbit

}

for h.nevacuate != stop && bucketEvacuated(t, h, h.nevacuate) {

h.nevacuate++

}

if h.nevacuate == newbit { // newbit == # of oldbuckets; evacuation finished

// Growing is all done. Free old main bucket array.

h.oldbuckets = nil // drop the old buckets

// Can discard old overflow buckets as well.

// If they are still referenced by an iterator,

// then the iterator holds a pointers to the slice.

if h.extra != nil {

h.extra.oldoverflow = nil // drop the old overflow buckets

}

h.flags &^= sameSizeGrow // clear the same-size-grow flag

}

}

hiter: the iterator struct

// A hash iteration structure.

// If you modify hiter, also change cmd/compile/internal/reflectdata/reflect.go

// and reflect/value.go to match the layout of this structure.

type hiter struct {

key unsafe.Pointer // Must be in first position. Write nil to indicate iteration end (see cmd/compile/internal/walk/range.go). // current key

elem unsafe.Pointer // Must be in second position (see cmd/compile/internal/walk/range.go). // current elem

t *maptype // map type

h *hmap // the hmap being iterated

buckets unsafe.Pointer // bucket ptr at hash_iter initialization time (snapshot of hmap.buckets)

bptr *bmap // current bucket

overflow *[]*bmap // keeps overflow buckets of hmap.buckets alive

oldoverflow *[]*bmap // keeps overflow buckets of hmap.oldbuckets alive

startBucket uintptr // bucket iteration started at (chosen at random)

offset uint8 // intra-bucket offset to start from during iteration (should be big enough to hold bucketCnt-1); chosen at random

wrapped bool // already wrapped around from end of bucket array to beginning

B uint8 // snapshot of hmap.B

i uint8 // index of the current slot within the bucket (a rather terse name)

bucket uintptr // current bucket; starts at the random startBucket

checkBucket uintptr // bucket to filter for; used while a grow is in progress

}

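mapiterinit: initializes the map iterator; it picks a random starting bucket and a random starting slot within buckets, so the iteration order is randomized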

// mapiterinit initializes the hiter struct used for ranging over maps.

// The hiter struct pointed to by 'it' is allocated on the stack

// by the compilers order pass or on the heap by reflect_mapiterinit.

// Both need to have zeroed hiter since the struct contains pointers.

func mapiterinit(t *maptype, h *hmap, it *hiter) {

if raceenabled && h != nil {

callerpc := getcallerpc()

racereadpc(unsafe.Pointer(h), callerpc, abi.FuncPCABIInternal(mapiterinit))

}

it.t = t

if h == nil || h.count == 0 {

return

}

if unsafe.Sizeof(hiter{})/goarch.PtrSize != 12 {

throw("hash_iter size incorrect") // see cmd/compile/internal/reflectdata/reflect.go

}

it.h = h

// grab snapshot of bucket state

it.B = h.B

it.buckets = h.buckets

if t.bucket.ptrdata == 0 {

// Allocate the current slice and remember pointers to both current and old.

// This preserves all relevant overflow buckets alive even if

// the table grows and/or overflow buckets are added to the table

// while we are iterating.

h.createOverflow()

it.overflow = h.extra.overflow

it.oldoverflow = h.extra.oldoverflow

}

// decide where to start

var r uintptr

if h.B > 31-bucketCntBits {

r = uintptr(fastrand64())

} else {

r = uintptr(fastrand())

}

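it.startBucket = r & bucketMask(h.B) // random starting bucket; iteration runs to the last bucket, then wraps around to 0, forming a loop

it.offset = uint8(r >> h.B & (bucketCnt - 1)) // random starting slot; slot indexes wrap around the same way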

// iterator state

it.bucket = it.startBucket

// Remember we have an iterator.

// Can run concurrently with another mapiterinit().

if old := h.flags; old&(iterator|oldIterator) != iterator|oldIterator { // may run concurrently with other readers, hence the atomic Or

atomic.Or8(&h.flags, iterator|oldIterator)

}

mapiternext(it)

}
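mapiterinit draws a single random number r: its low B bits pick startBucket and the next 3 bits pick offset. Below is a sketch of the resulting visiting order for a quiescent map with no growth and no overflow buckets. `visitOrder` and `slot` are hypothetical names. Every (bucket, slot) pair is visited exactly once; only the starting point is random.

```go
package main

import "fmt"

const bucketCnt = 8

type slot struct{ bucket, offi int }

// visitOrder lists the (bucket, slot) pairs in the order mapiternext would
// scan an idle map with 1<<B buckets, given the random value r.
func visitOrder(B uint8, r uintptr) []slot {
	nbuckets := 1 << B
	startBucket := int(r & uintptr(nbuckets-1))
	offset := int(r >> B & (bucketCnt - 1)) // same offset for every bucket
	var order []slot
	for n := 0; n < nbuckets; n++ {
		bucket := (startBucket + n) % nbuckets // wraps around at the end
		for i := 0; i < bucketCnt; i++ {
			order = append(order, slot{bucket, (i + offset) & (bucketCnt - 1)})
		}
	}
	return order
}

func main() {
	order := visitOrder(2, 0b11110) // startBucket = 0b10 = 2, offset = 0b111 = 7
	fmt.Println(order[:3])          // [{2 7} {2 0} {2 1}]
}
```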

mapiternext: simply moves the iterator to the next position, over and over

func mapiternext(it *hiter) {

h := it.h

if raceenabled {

callerpc := getcallerpc()

racereadpc(unsafe.Pointer(h), callerpc, abi.FuncPCABIInternal(mapiternext))

}

if h.flags&hashWriting != 0 {

fatal("concurrent map iteration and map write")

}

t := it.t

bucket := it.bucket

b := it.bptr

i := it.i

checkBucket := it.checkBucket

next:

if b == nil {

if bucket == it.startBucket && it.wrapped { // completed a full loop

// end of iteration

it.key = nil

it.elem = nil

return

}

if h.growing() && it.B == h.B { // the iterator was started during a grow that is still in progress

// Iterator was started in the middle of a grow, and the grow isn't done yet.

// If the bucket we're looking at hasn't been filled in yet (i.e. the old

// bucket hasn't been evacuated) then we need to iterate through the old

// bucket and only return the ones that will be migrated to this bucket.

oldbucket := bucket & it.h.oldbucketmask() // index of the corresponding old bucket

b = (*bmap)(add(h.oldbuckets, oldbucket*uintptr(t.bucketsize))) // address of that old bucket

if !evacuated(b) { // not evacuated yet: iterate the old bucket

checkBucket = bucket // and only return entries destined for bucket

} else { // already evacuated: iterate the new bucket

b = (*bmap)(add(it.buckets, bucket*uintptr(t.bucketsize)))

checkBucket = noCheck // no filtering needed

}

} else { // no grow in progress, or it started after the iterator: iterate the snapshot it.buckets

b = (*bmap)(add(it.buckets, bucket*uintptr(t.bucketsize)))

checkBucket = noCheck // no filtering needed

}

bucket++ // move on to the next bucket

if bucket == bucketShift(it.B) { // went past the last bucket

bucket = 0 // wrap around to bucket 0, closing the loop

it.wrapped = true // record that we wrapped from the end of the bucket array to the start

}

i = 0 // reset the slot index

}

for ; i < bucketCnt; i++ {

offi := (i + it.offset) & (bucketCnt - 1) // cycles through 0..7, so every slot is visited exactly once

if isEmpty(b.tophash[offi]) || b.tophash[offi] == evacuatedEmpty { // skip empty slots

// TODO: emptyRest is hard to use here, as we start iterating

// in the middle of a bucket. It's feasible, just tricky.

continue

}

k := add(unsafe.Pointer(b), dataOffset+uintptr(offi)*uintptr(t.keysize)) // key address

if t.indirectkey() {

k = *((*unsafe.Pointer)(k))

}

e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+uintptr(offi)*uintptr(t.elemsize)) // elem address

if checkBucket != noCheck && !h.sameSizeGrow() {

// Special case: iterator was started during a grow to a larger size

// and the grow is not done yet. We're working on a bucket whose

// oldbucket has not been evacuated yet. Or at least, it wasn't

// evacuated when we started the bucket. So we're iterating

// through the oldbucket, skipping any keys that will go

// to the other new bucket (each oldbucket expands to two

// buckets during a grow).

if t.reflexivekey() || t.key.equal(k, k) {

// If the item in the oldbucket is not destined for

// the current new bucket in the iteration, skip it.

hash := t.hasher(k, uintptr(h.hash0))

if hash&bucketMask(it.B) != checkBucket {

continue

}

} else {

// Hash isn't repeatable if k != k (NaNs). We need a

// repeatable and randomish choice of which direction

// to send NaNs during evacuation. We'll use the low

// bit of tophash to decide which way NaNs go.

// NOTE: this case is why we need two evacuate tophash

// values, evacuatedX and evacuatedY, that differ in

// their low bit.

if checkBucket>>(it.B-1) != uintptr(b.tophash[offi]&1) { // NaN keys: the low bit of tophash decides x or y

continue

}

}

}

if (b.tophash[offi] != evacuatedX && b.tophash[offi] != evacuatedY) ||

!(t.reflexivekey() || t.key.equal(k, k)) { // return this entry via it.key and it.elem

// This is the golden data, we can return it.

// OR

// key!=key, so the entry can't be deleted or updated, so we can just return it.

// That's lucky for us because when key!=key we can't look it up successfully.

it.key = k

if t.indirectelem() {

e = *((*unsafe.Pointer)(e))

}

it.elem = e

} else {

// The hash table has grown since the iterator was started.

// The golden data for this key is now somewhere else.

// Check the current hash table for the data.

// This code handles the case where the key

// has been deleted, updated, or deleted and reinserted.

// NOTE: we need to regrab the key as it has potentially been

// updated to an equal() but not identical key (e.g. +0.0 vs -0.0).

rk, re := mapaccessK(t, h, k) // look the key up again in the current table

if rk == nil {

continue // key has been deleted

}

it.key = rk

it.elem = re

}

it.bucket = bucket // save the iterator state: current bucket

if it.bptr != b { // avoid unnecessary write barrier; see issue 14921

it.bptr = b

}

it.i = i + 1 // next slot

it.checkBucket = checkBucket // save checkBucket

return

}

b = b.overflow(t) // move on to the overflow bucket

i = 0 // reset the slot index

goto next // jump back instead of recursing

}
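At the language level, these checks are what make deleting while ranging safe. Slots cleared by delete are skipped by the isEmpty test, and for entries already moved to the new table the mapaccessK lookup returns nil. The Go specification guarantees that an entry removed before it is reached will not be produced. Here is a small sketch; `visitWhileDeleting` is a hypothetical helper.

```go
package main

import "fmt"

// visitWhileDeleting fills a map with keys 0..n-1, ranges over it and, on
// the first iteration, deletes every other key. Entries removed before
// they are reached must not be produced, so the result is always 1.
func visitWhileDeleting(n int) int {
	m := make(map[int]bool, n)
	for i := 0; i < n; i++ {
		m[i] = true
	}
	visited := 0
	for k := range m {
		visited++
		for i := 0; i < n; i++ {
			if i != k {
				delete(m, i) // remove every entry that has not been reached yet
			}
		}
	}
	return visited
}

func main() {
	fmt.Println(visitWhileDeleting(1000)) // 1
}
```

Which key survives is random, because the iteration starts at a random bucket and slot. Only the count is deterministic.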

The reflect-related map operations are just thin wrappers around the functions above, so they are not covered here.

// Reflect stubs. Called from ../reflect/asm_*.s

//go:linkname reflect_makemap reflect.makemap

func reflect_makemap(t *maptype, cap int) *hmap {

// Check invariants and reflects math.

if t.key.equal == nil {

throw("runtime.reflect_makemap: unsupported map key type")

}

if t.key.size > maxKeySize && (!t.indirectkey() || t.keysize != uint8(goarch.PtrSize)) ||

t.key.size <= maxKeySize && (t.indirectkey() || t.keysize != uint8(t.key.size)) {

throw("key size wrong")

}

if t.elem.size > maxElemSize && (!t.indirectelem() || t.elemsize != uint8(goarch.PtrSize)) ||

t.elem.size <= maxElemSize && (t.indirectelem() || t.elemsize != uint8(t.elem.size)) {

throw("elem size wrong")

}

if t.key.align > bucketCnt {

throw("key align too big")

}

if t.elem.align > bucketCnt {

throw("elem align too big")

}

if t.key.size%uintptr(t.key.align) != 0 {

throw("key size not a multiple of key align")

}

if t.elem.size%uintptr(t.elem.align) != 0 {

throw("elem size not a multiple of elem align")

}

if bucketCnt < 8 {

throw("bucketsize too small for proper alignment")

}

if dataOffset%uintptr(t.key.align) != 0 {

throw("need padding in bucket (key)")

}

if dataOffset%uintptr(t.elem.align) != 0 {

throw("need padding in bucket (elem)")

}

return makemap(t, cap, nil)

}

//go:linkname reflect_mapaccess reflect.mapaccess

func reflect_mapaccess(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {

elem, ok := mapaccess2(t, h, key)

if !ok {

// reflect wants nil for a missing element

elem = nil

}

return elem

}

//go:linkname reflect_mapaccess_faststr reflect.mapaccess_faststr

func reflect_mapaccess_faststr(t *maptype, h *hmap, key string) unsafe.Pointer {

elem, ok := mapaccess2_faststr(t, h, key)

if !ok {

// reflect wants nil for a missing element

elem = nil

}

return elem

}

//go:linkname reflect_mapassign reflect.mapassign

func reflect_mapassign(t *maptype, h *hmap, key unsafe.Pointer, elem unsafe.Pointer) {

p := mapassign(t, h, key)

typedmemmove(t.elem, p, elem)

}

//go:linkname reflect_mapassign_faststr reflect.mapassign_faststr

func reflect_mapassign_faststr(t *maptype, h *hmap, key string, elem unsafe.Pointer) {

p := mapassign_faststr(t, h, key)

typedmemmove(t.elem, p, elem)

}

//go:linkname reflect_mapdelete reflect.mapdelete

func reflect_mapdelete(t *maptype, h *hmap, key unsafe.Pointer) {

mapdelete(t, h, key)

}

//go:linkname reflect_mapdelete_faststr reflect.mapdelete_faststr

func reflect_mapdelete_faststr(t *maptype, h *hmap, key string) {

mapdelete_faststr(t, h, key)

}

//go:linkname reflect_mapiterinit reflect.mapiterinit

func reflect_mapiterinit(t *maptype, h *hmap, it *hiter) {

mapiterinit(t, h, it)

}

//go:linkname reflect_mapiternext reflect.mapiternext

func reflect_mapiternext(it *hiter) {

mapiternext(it)

}

//go:linkname reflect_mapiterkey reflect.mapiterkey

func reflect_mapiterkey(it *hiter) unsafe.Pointer {

return it.key

}

//go:linkname reflect_mapiterelem reflect.mapiterelem

func reflect_mapiterelem(it *hiter) unsafe.Pointer {

return it.elem

}

//go:linkname reflect_maplen reflect.maplen

func reflect_maplen(h *hmap) int {

if h == nil {

return 0

}

if raceenabled {

callerpc := getcallerpc()

racereadpc(unsafe.Pointer(h), callerpc, abi.FuncPCABIInternal(reflect_maplen))

}

return h.count

}

//go:linkname reflectlite_maplen internal/reflectlite.maplen

func reflectlite_maplen(h *hmap) int {

if h == nil {

return 0

}

if raceenabled {

callerpc := getcallerpc()

racereadpc(unsafe.Pointer(h), callerpc, abi.FuncPCABIInternal(reflect_maplen))

}

return h.count

}
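On the reflect side, these stubs sit behind ordinary reflect.Value methods. As far as I can tell, MapIndex ends up in reflect_mapaccess(_faststr), SetMapIndex in reflect_mapassign or reflect_mapdelete (a zero Value means delete), MapRange in the iterator stubs, and Len in reflect_maplen. That mapping is my reading of the source. The public behaviour shown below is what the reflect package documents. `demo` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"reflect"
)

// demo exercises lookup, insert and delete through reflect.
func demo() (got int64, present bool, n int) {
	m := map[string]int{"a": 1}
	v := reflect.ValueOf(m)

	got = v.MapIndex(reflect.ValueOf("a")).Int()            // present key
	present = v.MapIndex(reflect.ValueOf("zz")).IsValid()   // missing key -> zero Value
	v.SetMapIndex(reflect.ValueOf("b"), reflect.ValueOf(2)) // insert
	v.SetMapIndex(reflect.ValueOf("a"), reflect.Value{})    // zero Value deletes "a"
	return got, present, v.Len()
}

func main() {
	fmt.Println(demo()) // 1 false 1
	for it := reflect.ValueOf(map[string]int{"x": 9}).MapRange(); it.Next(); {
		fmt.Println(it.Key(), it.Value()) // x 9
	}
}
```

The nil returned by reflect_mapaccess for a missing key is what surfaces as the invalid (zero) Value from MapIndex.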
