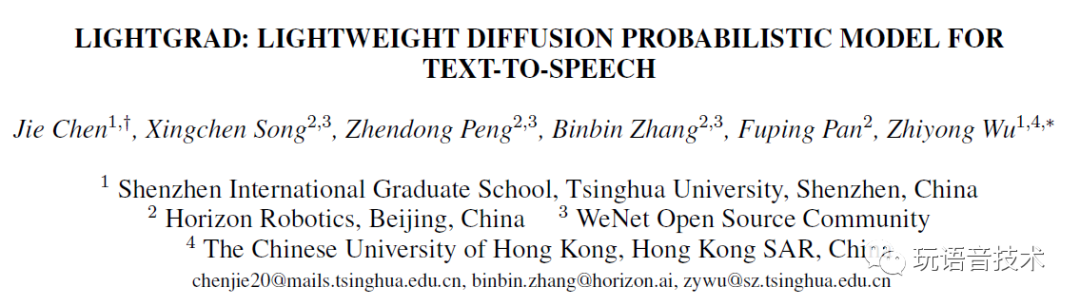

Paper:

https://arxiv.org/abs/2308.16569

Code:

https://github.com/thuhcsi/LightGrad

Datasets:

Training scripts are provided for BZNSYP and LJSpeech.

Two problems identified with Grad-TTS:

- Diffusion probabilistic models (DPMs) are not lightweight enough for resource-constrained devices.
- DPMs require many denoising steps at inference time, which increases latency.

提出解决方案:

-

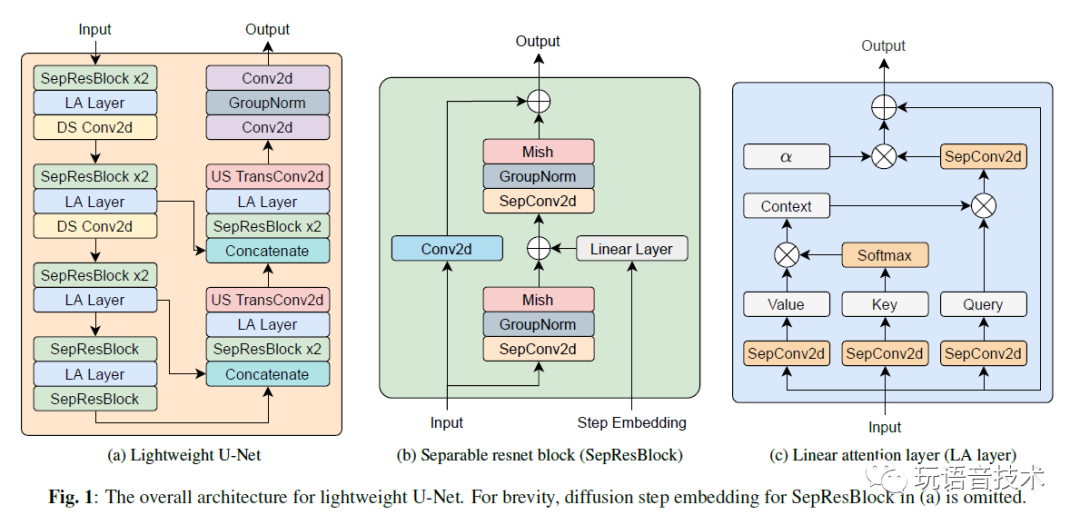

To reduce model parameters, regular convolution networks in diffusion decoder are substituted with depthwise separable convolutions.

-

To accelerate the inference procedure, we adopt a training-free fast sampling technique for DPMs (DPM-solver).

-

Streaming inference is also implemented in LightGrad to reduce latency further.
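To see why depthwise separable convolutions shrink the model, the parameter counts of the two layer types can be compared directly. The sketch below is only an illustration: the channel sizes and the 3x3 kernel are assumed values chosen for the example, not the actual LightGrad layer configuration.

```python
def conv2d_params(c_in, c_out, kernel_size, bias=True):
    """Parameter count of a regular 2D convolution with a square kernel."""
    return c_in * c_out * kernel_size * kernel_size + (c_out if bias else 0)


def depthwise_separable_conv2d_params(c_in, c_out, kernel_size, bias=True):
    """Parameter count of a depthwise conv followed by a 1x1 pointwise conv."""
    # Depthwise: one kernel_size x kernel_size filter per input channel.
    depthwise = c_in * kernel_size * kernel_size + (c_in if bias else 0)
    # Pointwise: a 1x1 convolution that mixes channels.
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


# Assumed, illustrative sizes (not taken from the paper).
regular = conv2d_params(64, 128, 3)
separable = depthwise_separable_conv2d_params(64, 128, 3)
print(regular, separable, round(separable / regular, 3))
```

For these assumed sizes, the separable layer needs roughly one eighth of the parameters of the regular layer. The overall reduction for a full model depends on how many of its layers are convolutions and on their shapes.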

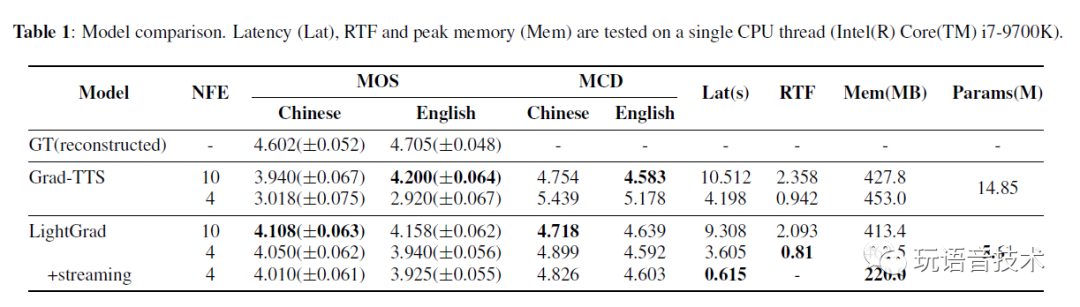

With 4 denoising steps, LightGrad achieves a 62.2% reduction in parameters and a 65.7% reduction in latency compared with Grad-TTS, while preserving comparable speech quality on both Mandarin Chinese and English.

LightGrad streaming scheme (based on a Samsung paper):

Paper:

https://arxiv.org/abs/2111.09052

具体实现:

-

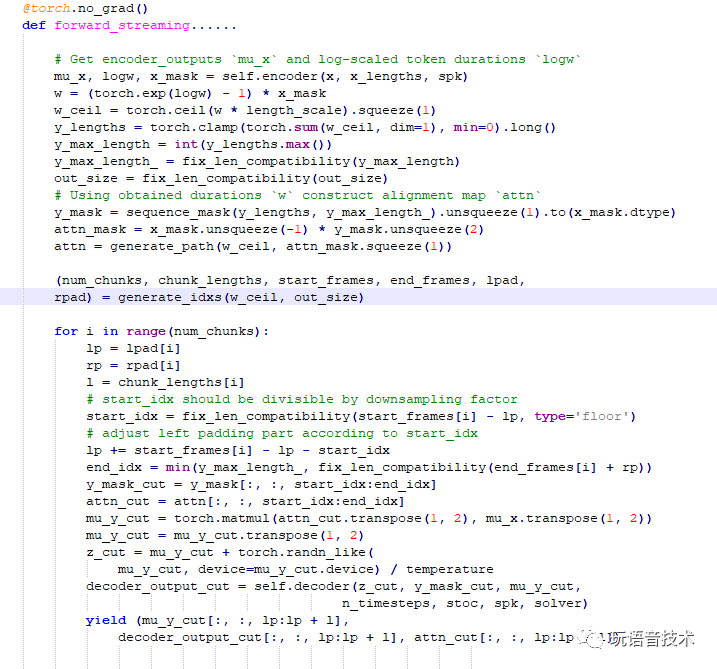

Decoder input is chopped into chunks at phoneme boundaries to cover several consecutive phonemes and the chunk lengths are limited to a predefined range.

-

To incorporate context information into decoder, last phoneme of the previous chunk and first phoneme of the following chunk are padded to the head and tail of the current chunk.

-

Then, the decoder generates mel-spectrogram for each padded chunk.

-

After this, mel-spectrogram frames corresponding to the padded phonemes are removed to reverse the changes to each chunk.
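The chunk, pad, decode, and trim steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the LightGrad implementation: `split_into_chunks`, `stream_decode`, and the `decoder` callable are hypothetical names, the decoder is assumed to return one output frame per input frame, and only an upper bound on chunk length (`max_frames`) is enforced rather than a full length range.

```python
from itertools import accumulate


def split_into_chunks(durations, max_frames):
    """Greedily group consecutive phonemes, cutting only at phoneme boundaries.

    durations[i] is the number of frames of phoneme i.
    Returns (start, end) phoneme index pairs, end exclusive.
    """
    chunks, start, frames = [], 0, 0
    for i, d in enumerate(durations):
        # Close the current chunk if this phoneme would push it past max_frames.
        if frames > 0 and frames + d > max_frames:
            chunks.append((start, i))
            start, frames = i, 0
        frames += d
    if start < len(durations):
        chunks.append((start, len(durations)))
    return chunks


def stream_decode(frames_in, durations, decoder, max_frames=40):
    """Yield decoded frames chunk by chunk, using one phoneme of context per side."""
    bounds = [0] + list(accumulate(durations))  # frame offset of each phoneme
    for start, end in split_into_chunks(durations, max_frames):
        left = max(start - 1, 0)                # last phoneme of the previous chunk
        right = min(end + 1, len(durations))    # first phoneme of the following chunk
        padded = frames_in[bounds[left]:bounds[right]]
        mel = decoder(padded)                   # hypothetical frame-to-frame decoder
        head = bounds[start] - bounds[left]     # number of context frames at the head
        length = bounds[end] - bounds[start]    # frames belonging to this chunk
        yield mel[head:head + length]           # drop frames of the padded phonemes


# Usage with a stand-in decoder that simply copies its input.
durations = [3, 5, 2, 4, 6, 1]
frames_in = list(range(sum(durations)))
identity_decoder = lambda chunk: list(chunk)
streamed = [frame
            for piece in stream_decode(frames_in, durations, identity_decoder, max_frames=8)
            for frame in piece]
```

Because each chunk is yielded as soon as it is decoded, a vocoder could start synthesizing audio before the rest of the utterance has been processed, which is where the latency reduction comes from.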
