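Table of Contents

- 16. Scoring in depth

- 16.1 Scoring: TF/IDF

- 16.2 Doc values

- 16.3 Query phase

- 16.4 Fetch phase

- 16.5 Search parameters summary

- 17. Getting started with aggregations

- 17.1 Aggregation examples

- 17.2 Buckets and metrics

- 17.3 TV sales example

- 18. Aggregations with the Java API

- 19. SQL in Elasticsearch 7

- 19.1 Quick start

- 19.2 Ways to run SQL

- 19.3 Output formats

- 19.4 Translating SQL to Query DSL

- 19.5 Combining SQL with Query DSL

- 19.6 Running SQL from Java

- 20. Learning Logstash

- 20.1 Basic configuration structure

- 20.2 Input plugins (input)

- 20.3 Filter plugins (filter)

- 20.4 Output plugins (output)

- 20.5 Complete example

- 21. Learning Kibana

- 21.1 Basic queries

- 21.2 Visualizations

- 21.3 Dashboards

- 21.4 Charting with sample data

- 21.5 Other features

- 22. Cluster deployment

- 23. Hands-on projects

- 23.1 Project 1: log analysis with ELK

- 23.2 Project 2: site search for Xuecheng Online (学成在线)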

16. Scoring in depth

16.1 Scoring: TF/IDF

16.1.1 The algorithm

The relevance score algorithm computes how closely the text stored in an index matches the search text.

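Elasticsearch scores relevance with the term frequency/inverse document frequency approach, TF/IDF for short. TF stands for term frequency and IDF stands for inverse document frequency. Since Elasticsearch 5.0, the default similarity has been BM25. BM25 refines TF/IDF and also normalizes for field length. The explain output in 16.1.2 shows the BM25 formulas.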

Term frequency

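Term frequency counts how many times each term of the search text appears in the field text. The more often a term occurs, the more relevant the document.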

Example: the search request is hello world

doc1 : hello you and me,and world is very good.

doc2 : hello,how are you

doc1 is more relevant: it contains both hello and world, while doc2 contains only hello.

Inverse document frequency

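Inverse document frequency looks at how many documents in the whole index contain each search term. The more documents contain a term, the less a match on that term contributes to relevance.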

Example: the search request is hello world

doc1 : hello ,today is very good

doc2 : hi world ,how are you

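The index holds 100 million documents. 1,000 of them contain hello and 100 contain world.

doc2 is more relevant: world is much rarer than hello, so a match on world carries more weight.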

Field-length norm

Field-length norm: the longer the field, the weaker the relevance of a match in it.

Example: the search request is hello world

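doc1 : {"title": "hello article", "content": "balabala... (10,000 words)"}

doc2 : {"title": "my article", "content": "balabala... (10,000 words), world"}

doc1 is more relevant. In doc1, hello matches the short title field. In doc2, world matches only inside a 10,000-word content field.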

16.1.2 How _score is computed

GET /book/_search?explain=true

{

"query": {

"match": {

"description": "java程序员"

}

}

}

Response:

{

"took" : 5,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 2,

"relation" : "eq"

},

"max_score" : 2.137549,

"hits" : [

{

"_shard" : "[book][0]",

"_node" : "MDA45-r6SUGJ0ZyqyhTINA",

"_index" : "book",

"_type" : "_doc",

"_id" : "3",

"_score" : 2.137549,

"_source" : {

"name" : "spring开发基础",

"description" : "spring 在java领域非常流行,java程序员都在用。",

"studymodel" : "201001",

"price" : 88.6,

"timestamp" : "2019-08-24 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"spring",

"java"

]

},

"_explanation" : {

"value" : 2.137549,

"description" : "sum of:",

"details" : [

{

"value" : 0.7936629,

"description" : "weight(description:java in 0) [PerFieldSimilarity], result of:",

"details" : [

{

"value" : 0.7936629,

"description" : "score(freq=2.0), product of:",

"details" : [

{

"value" : 2.2,

"description" : "boost",

"details" : [ ]

},

{

"value" : 0.47000363,

"description" : "idf, computed as log(1 + (N - n + 0.5) / (n + 0.5)) from:",

"details" : [

{

"value" : 2,

"description" : "n, number of documents containing term",

"details" : [ ]

},

{

"value" : 3,

"description" : "N, total number of documents with field",

"details" : [ ]

}

]

},

{

"value" : 0.7675597,

"description" : "tf, computed as freq / (freq + k1 * (1 - b + b * dl / avgdl)) from:",

"details" : [

{

"value" : 2.0,

"description" : "freq, occurrences of term within document",

"details" : [ ]

},

{

"value" : 1.2,

"description" : "k1, term saturation parameter",

"details" : [ ]

},

{

"value" : 0.75,

"description" : "b, length normalization parameter",

"details" : [ ]

},

{

"value" : 12.0,

"description" : "dl, length of field",

"details" : [ ]

},

{

"value" : 35.333332,

"description" : "avgdl, average length of field",

"details" : [ ]

}

]

}

]

}

]

},

{

"value" : 1.3438859,

"description" : "weight(description:程序员 in 0) [PerFieldSimilarity], result of:",

"details" : [

{

"value" : 1.3438859,

"description" : "score(freq=1.0), product of:",

"details" : [

{

"value" : 2.2,

"description" : "boost",

"details" : [ ]

},

{

"value" : 0.98082924,

"description" : "idf, computed as log(1 + (N - n + 0.5) / (n + 0.5)) from:",

"details" : [

{

"value" : 1,

"description" : "n, number of documents containing term",

"details" : [ ]

},

{

"value" : 3,

"description" : "N, total number of documents with field",

"details" : [ ]

}

]

},

{

"value" : 0.6227967,

"description" : "tf, computed as freq / (freq + k1 * (1 - b + b * dl / avgdl)) from:",

"details" : [

{

"value" : 1.0,

"description" : "freq, occurrences of term within document",

"details" : [ ]

},

{

"value" : 1.2,

"description" : "k1, term saturation parameter",

"details" : [ ]

},

{

"value" : 0.75,

"description" : "b, length normalization parameter",

"details" : [ ]

},

{

"value" : 12.0,

"description" : "dl, length of field",

"details" : [ ]

},

{

"value" : 35.333332,

"description" : "avgdl, average length of field",

"details" : [ ]

}

]

}

]

}

]

}

]

}

},

{

"_shard" : "[book][0]",

"_node" : "MDA45-r6SUGJ0ZyqyhTINA",

"_index" : "book",

"_type" : "_doc",

"_id" : "2",

"_score" : 0.57961315,

"_source" : {

"name" : "java编程思想",

"description" : "java语言是世界第一编程语言,在软件开发领域使用人数最多。",

"studymodel" : "201001",

"price" : 68.6,

"timestamp" : "2019-08-25 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"java",

"dev"

]

},

"_explanation" : {

"value" : 0.57961315,

"description" : "sum of:",

"details" : [

{

"value" : 0.57961315,

"description" : "weight(description:java in 0) [PerFieldSimilarity], result of:",

"details" : [

{

"value" : 0.57961315,

"description" : "score(freq=1.0), product of:",

"details" : [

{

"value" : 2.2,

"description" : "boost",

"details" : [ ]

},

{

"value" : 0.47000363,

"description" : "idf, computed as log(1 + (N - n + 0.5) / (n + 0.5)) from:",

"details" : [

{

"value" : 2,

"description" : "n, number of documents containing term",

"details" : [ ]

},

{

"value" : 3,

"description" : "N, total number of documents with field",

"details" : [ ]

}

]

},

{

"value" : 0.56055,

"description" : "tf, computed as freq / (freq + k1 * (1 - b + b * dl / avgdl)) from:",

"details" : [

{

"value" : 1.0,

"description" : "freq, occurrences of term within document",

"details" : [ ]

},

{

"value" : 1.2,

"description" : "k1, term saturation parameter",

"details" : [ ]

},

{

"value" : 0.75,

"description" : "b, length normalization parameter",

"details" : [ ]

},

{

"value" : 19.0,

"description" : "dl, length of field",

"details" : [ ]

},

{

"value" : 35.333332,

"description" : "avgdl, average length of field",

"details" : [ ]

}

]

}

]

}

]

}

]

}

}

]

}

}
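To see where these numbers come from, the sketch below recomputes the per-term values in the explain output above. It uses the formulas quoted in the output's `description` fields:

- `idf = log(1 + (N - n + 0.5) / (n + 0.5))`
- `tf = freq / (freq + k1 * (1 - b + b * dl / avgdl))`
- score = boost * idf * tf

This is an illustration, not Lucene's code, and it rests on a few assumptions:

- It uses `double` where Lucene uses `float`.
- It assumes the printed `boost` of 2.2 equals k1 + 1 with a query boost of 1, which is what these numbers show.
- The class name `Bm25Explain` is hypothetical.

```java
// Recomputes one term's BM25 contribution from the values printed by ?explain=true.
class Bm25Explain {
    static final double K1 = 1.2;  // "k1, term saturation parameter"
    static final double B = 0.75;  // "b, length normalization parameter"

    // idf = log(1 + (N - n + 0.5) / (n + 0.5)); Math.log is the natural logarithm
    static double idf(long n, long docCount) {
        return Math.log(1 + (docCount - n + 0.5) / (n + 0.5));
    }

    // tf = freq / (freq + k1 * (1 - b + b * dl / avgdl))
    static double tf(double freq, double dl, double avgdl) {
        return freq / (freq + K1 * (1 - B + B * dl / avgdl));
    }

    // score = boost * idf * tf, assuming boost = k1 + 1 (shown as 2.2, query boost 1)
    static double termScore(double freq, long n, long docCount, double dl, double avgdl) {
        return (K1 + 1) * idf(n, docCount) * tf(freq, dl, avgdl);
    }

    public static void main(String[] args) {
        // Document 3: "java" occurs twice, the second term once; dl = 12, avgdl = 35.333332
        double javaScore = termScore(2, 2, 3, 12, 35.333332);
        double secondTerm = termScore(1, 1, 3, 12, 35.333332);
        System.out.printf("java=%.7f second=%.7f _score=%.6f%n",
                javaScore, secondTerm, javaScore + secondTerm);
    }
}
```

For document 3 this gives about 0.7937 for java and 1.3439 for 程序员. Their sum is the 2.137549 reported as `_score`. Document 2 scores lower because java occurs only once there and its field is longer (dl = 19).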

16.1.3 Explaining how a single document was matched

GET /book/_explain/3

{

"query": {

"match": {

"description": "java程序员"

}

}

}

16.2 Doc values

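Searching relies on the inverted index. Sorting instead needs a forward index, which lists the value of each field for every document. This forward index is what doc values are.

At index time, Elasticsearch builds both structures:

- the inverted index, used for searching;
- the forward index (doc values), used for sorting, aggregations, filtering, and similar operations.

Doc values are stored on disk. If there is enough memory, the OS caches them in memory (the filesystem cache), so access stays fast. If memory is short, they are read from disk, which is slower.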

Inverted index

doc1: hello world you and me

doc2: hi, world, how are you

| term | doc1 | doc2 |
|---|---|---|
| hello | * | |
| world | * | * |
| you | * | * |
| and | * | |
| me | * | |
| hi | | * |
| how | | * |
| are | | * |

At search time, the query is analyzed into terms and each term is looked up:

hello you --> hello, you

hello --> doc1

you --> doc1,doc2
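The lookup above can be sketched with a toy inverted index in Java. This is a simplified model:

- Terms are produced by lowercasing and splitting on non-letters. This is an assumption, not Elasticsearch's standard analyzer.
- Posting lists are plain sets of document IDs, with no positions or frequencies.
- The class name `InvertedIndexDemo` is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeMap;
import java.util.TreeSet;

class InvertedIndexDemo {
    // Build term -> sorted set of doc ids (a toy posting list).
    static Map<String, SortedSet<String>> build(Map<String, String> docs) {
        Map<String, SortedSet<String>> index = new TreeMap<>();
        for (Map.Entry<String, String> e : docs.entrySet()) {
            for (String term : e.getValue().toLowerCase().split("[^a-z]+")) {
                if (term.isEmpty()) continue; // leading separators produce an empty token
                index.computeIfAbsent(term, k -> new TreeSet<>()).add(e.getKey());
            }
        }
        return index;
    }

    // Analyze the query the same way, then look up each term's posting list.
    static Map<String, SortedSet<String>> search(Map<String, SortedSet<String>> index, String query) {
        Map<String, SortedSet<String>> hits = new LinkedHashMap<>();
        for (String term : query.toLowerCase().split("[^a-z]+")) {
            if (term.isEmpty()) continue;
            hits.put(term, index.getOrDefault(term, new TreeSet<>()));
        }
        return hits;
    }

    public static void main(String[] args) {
        Map<String, String> docs = new LinkedHashMap<>();
        docs.put("doc1", "hello world you and me");
        docs.put("doc2", "hi, world, how are you");
        Map<String, SortedSet<String>> index = build(docs);
        System.out.println(index);
        System.out.println(search(index, "hello you")); // {hello=[doc1], you=[doc1, doc2]}
    }
}
```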

This works well for search, but sorting by a field is a problem. The inverted index maps terms to documents, not documents to their field values.

Forward index (doc values)

doc1: { "name": "jack", "age": 27 }

doc2: { "name": "tom", "age": 30 }

| document | name | age |
|---|---|---|
| doc1 | jack | 27 |
| doc2 | tom | 30 |

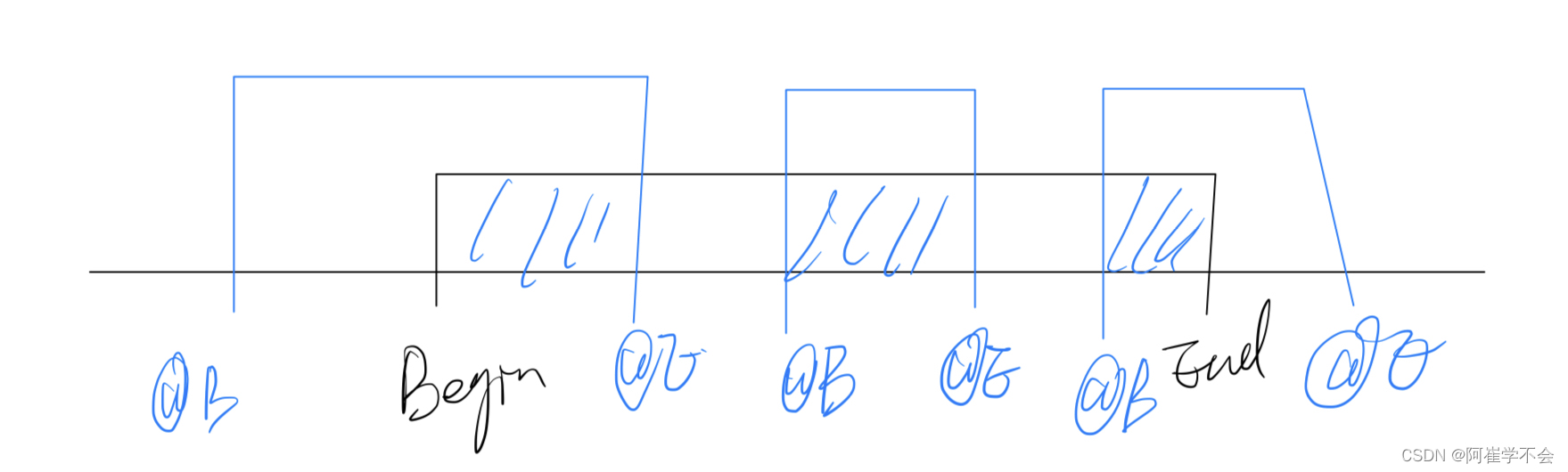
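For contrast, a forward index stores one value per document per field, laid out column by column, so sorting just reads the column. The sketch below is a toy model of that idea, with one array per field indexed by document ordinal. It is not how Lucene encodes doc values on disk, and `DocValuesDemo` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

class DocValuesDemo {
    // Returns doc ids ordered by a numeric column; column[i] is the value for docIds[i].
    static List<String> sortByColumn(String[] docIds, long[] column, boolean descending) {
        Integer[] ords = new Integer[docIds.length];
        for (int i = 0; i < ords.length; i++) ords[i] = i;
        Comparator<Integer> byValue = Comparator.comparingLong(i -> column[i]);
        Arrays.sort(ords, descending ? byValue.reversed() : byValue);
        List<String> sorted = new ArrayList<>();
        for (int ord : ords) sorted.add(docIds[ord]);
        return sorted;
    }

    public static void main(String[] args) {
        String[] docIds = {"doc1", "doc2"};
        long[] age = {27, 30}; // the "age" column of the forward index
        System.out.println(sortByColumn(docIds, age, true)); // [doc2, doc1]
    }
}
```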

16.3 Query phase

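1. Query phase

(1) The search request is sent to one node, which acts as the coordinating node. That node creates a priority queue of size from + size; with the defaults from = 0 and size = 10, this is 10.

(2) The coordinating node forwards the request to one copy (primary or replica) of every shard. Each shard runs the search locally and builds its own local priority queue of size from + size.

(3) Each shard returns its priority queue to the coordinating node. The queue holds only document IDs and sort values. The coordinating node merges these queues into a global priority queue.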
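The query-phase merge can be simulated in memory. The simulation shows why each shard returns from + size entries and how the coordinating node picks the requested page. It is a sketch under simplifying assumptions:

- Hits are (id, score) pairs with no ties.
- The fetch phase is omitted.
- `QueryPhaseDemo` and `Hit` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

class QueryPhaseDemo {
    static final class Hit {
        final String id;
        final double score;
        Hit(String id, double score) { this.id = id; this.score = score; }
        @Override public String toString() { return id + ":" + score; }
    }

    static final Comparator<Hit> BY_SCORE_DESC = Comparator.comparingDouble((Hit h) -> h.score).reversed();

    // Shard side: keep only the best (from + size) hits with a bounded min-heap.
    static List<Hit> shardTop(List<Hit> shardHits, int from, int size) {
        PriorityQueue<Hit> heap = new PriorityQueue<>(Comparator.comparingDouble((Hit h) -> h.score));
        for (Hit h : shardHits) {
            heap.offer(h);
            if (heap.size() > from + size) heap.poll(); // drop the lowest score seen so far
        }
        List<Hit> top = new ArrayList<>(heap);
        top.sort(BY_SCORE_DESC);
        return top;
    }

    // Coordinating node: merge the shard queues globally, then cut out [from, from + size).
    static List<Hit> coordinate(List<List<Hit>> shards, int from, int size) {
        PriorityQueue<Hit> global = new PriorityQueue<>(BY_SCORE_DESC);
        for (List<Hit> shard : shards) global.addAll(shardTop(shard, from, size));
        List<Hit> page = new ArrayList<>();
        for (int i = 0; i < from + size && !global.isEmpty(); i++) {
            Hit h = global.poll();
            if (i >= from) page.add(h);
        }
        return page;
    }

    public static void main(String[] args) {
        List<Hit> shard0 = Arrays.asList(new Hit("s0-a", 3.0), new Hit("s0-b", 1.0), new Hit("s0-c", 0.5));
        List<Hit> shard1 = Arrays.asList(new Hit("s1-a", 2.5), new Hit("s1-b", 2.0));
        // from = 1, size = 2: each shard returns up to 3 hits; the page is global hits #2 and #3
        System.out.println(coordinate(Arrays.asList(shard0, shard1), 1, 2)); // [s1-a:2.5, s1-b:2.0]
    }
}
```

The same arithmetic explains why deep paging is expensive. With from = 10000, every shard must return 10000 + size entries for the coordinating node to sort.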

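2. How replica shards increase search throughput

Each request is served by one copy (primary or replica) of every shard. If every shard has several replicas, concurrent search requests can be spread across those copies. More requests are then served in parallel.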

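16.4 Fetch phase

1. How the fetch phase works

(1) After building the global priority queue, the coordinating node sends multi-get (mget) requests to the shards holding the documents on the requested page.

(2) Each shard returns those documents to the coordinating node.

(3) The coordinating node assembles the documents and returns the result to the client.

2. A plain search without from and size returns the first 10 hits, sorted by _score.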

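16.5 Search parameters summary

1. preference

Determines which shard copies are used to execute the search.

_primary, _primary_first, _local, _only_node:xyz, _prefer_node:xyz, _shards:2,3

_primary and _primary_first were removed in Elasticsearch 7.0. Check the search API reference for the values your version accepts.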

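The bouncing results problem arises when two documents have the same value in the sort field. Different shard copies may order those two documents differently. Successive requests are sent round-robin to different copies (primary or replica). As a result, the user sees the order change on every page load. These jumping results are called bouncing results.

Solution: set preference to a custom string, such as the user ID. Every search by the same user then runs on the same shard copies, and the user no longer sees bouncing results.

GET /_search?preference=_shards:2,3

GET /_search?preference=user_123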

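2. timeout

As explained earlier, timeout bounds the query time. When it expires, the hits collected so far are returned, so a slow query does not run too long.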

GET /_search?timeout=10ms

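3. routing

Document routing: by default, documents are routed by _id. With routing=user_id, all data for the same user is stored on one shard. A search with the same routing value then only needs to query that shard.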

GET /_search?routing=user123
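The routing rule can be sketched as shard = hash(_routing) % number_of_primary_shards. This illustration makes two simplifications:

- `String.hashCode()` stands in for the Murmur3 hash that Elasticsearch actually uses.
- Refinements such as `index.number_of_routing_shards` are ignored.

As a result, the shard numbers it prints will not match a real cluster. `RoutingDemo` is a hypothetical name.

```java
import java.util.List;

class RoutingDemo {
    // shard = hash(_routing) % number_of_primary_shards; floorMod keeps the result non-negative
    static int shardFor(String routing, int primaryShards) {
        return Math.floorMod(routing.hashCode(), primaryShards);
    }

    public static void main(String[] args) {
        int primaries = 3;
        for (String docId : List.of("order-1", "order-2", "order-3")) {
            // all three documents use routing=user123, so they land on the same shard
            System.out.println(docId + " (routing=user123) -> shard " + shardFor("user123", primaries));
        }
        System.out.println("routing=user456 -> shard " + shardFor("user456", primaries));
    }
}
```

Because the target shard is derived from the number of primary shards, that number is fixed when the index is created.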

4. search_type

default: query_then_fetch

dfs_query_then_fetch: improves the accuracy of relevance scoring, because it first gathers term statistics from all shards before scoring

17. Getting started with aggregations

17.1 Aggregation examples

17.1.1 Requirement: count the products for each studymodel

SQL: select studymodel, count(*) from book group by studymodel

GET /book/_search

{

"size": 0,

"query": {

"match_all": {}

},

"aggs": {

"group_by_model": {

"terms": { "field": "studymodel" }

}

}

}

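17.1.2 Requirement: count the products for each tag

First set "fielddata": true on the tags field, because text fields cannot be aggregated on by default: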

PUT /book/_mapping/

{

"properties": {

"tags": {

"type": "text",

"fielddata": true

}

}

}

Query:

GET /book/_search

{

"size": 0,

"query": {

"match_all": {}

},

"aggs": {

"group_by_tags": {

"terms": { "field": "tags" }

}

}

}

17.1.3 Requirement: count the products for each tag, restricted by a search condition

GET /book/_search

{

"size": 0,

"query": {

"match": {

"description": "java程序员"

}

},

"aggs": {

"group_by_tags": {

"terms": { "field": "tags" }

}

}

}

17.1.4 Requirement: group first, then average each group: the average price of the products for each tag

GET /book/_search

{

"size": 0,

"aggs" : {

"group_by_tags" : {

"terms" : {

"field" : "tags"

},

"aggs" : {

"avg_price" : {

"avg" : { "field" : "price" }

}

}

}

}

}

17.1.5 Requirement: the average price for each tag, sorted by average price in descending order

GET /book/_search

{

"size": 0,

"aggs" : {

"group_by_tags" : {

"terms" : {

"field" : "tags",

"order": {

"avg_price": "desc"

}

},

"aggs" : {

"avg_price" : {

"avg" : { "field" : "price" }

}

}

}

}

}

17.1.6 Requirement: group by given price ranges, then by tag within each range, and finally compute the average price of each group

GET /book/_search

{

"size": 0,

"aggs": {

"group_by_price": {

"range": {

"field": "price",

"ranges": [

{

"from": 0,

"to": 40

},

{

"from": 40,

"to": 60

},

{

"from": 60,

"to": 80

}

]

},

"aggs": {

"group_by_tags": {

"terms": {

"field": "tags"

},

"aggs": {

"average_price": {

"avg": {

"field": "price"

}

}

}

}

}

}

}

}

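17.2 Buckets and metrics

17.2.1 bucket: a group of data

| city | name |
|---|---|
| Beijing | Zhang San |
| Beijing | Li Si |
| Tianjin | Wang Wu |
| Tianjin | Zhao Liu |
| Tianjin | Wang Mazi |

Grouping by city produces two buckets, a Beijing bucket and a Tianjin bucket:

Beijing bucket: 2 people, Zhang San and Li Si

Tianjin bucket: 3 people, Wang Wu, Zhao Liu and Wang Mazi

17.2.2 metric: a statistic computed on a bucket

A metric is an aggregation computed over the data in a bucket, such as the average, maximum, or minimum.

select count(*) from book group by studymodel

bucket: group by studymodel --> documents with the same studymodel are placed in the same bucket

metric: count(*) counts the documents in each studymodel bucket. Other metrics include avg(), sum(), max(), and min().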

17.3 TV sales example

Create the index and mapping

PUT /tvs

PUT /tvs/_mapping

{

"properties": {

"price": {

"type": "long"

},

"color": {

"type": "keyword"

},

"brand": {

"type": "keyword"

},

"sold_date": {

"type": "date"

}

}

}

Insert data

POST /tvs/_bulk

{ "index": {}}

{ "price" : 1000, "color" : "红色", "brand" : "长虹", "sold_date" : "2019-10-28" }

{ "index": {}}

{ "price" : 2000, "color" : "红色", "brand" : "长虹", "sold_date" : "2019-11-05" }

{ "index": {}}

{ "price" : 3000, "color" : "绿色", "brand" : "小米", "sold_date" : "2019-05-18" }

{ "index": {}}

{ "price" : 1500, "color" : "蓝色", "brand" : "TCL", "sold_date" : "2019-07-02" }

{ "index": {}}

{ "price" : 1200, "color" : "绿色", "brand" : "TCL", "sold_date" : "2019-08-19" }

{ "index": {}}

{ "price" : 2000, "color" : "红色", "brand" : "长虹", "sold_date" : "2019-11-05" }

{ "index": {}}

{ "price" : 8000, "color" : "红色", "brand" : "三星", "sold_date" : "2020-01-01" }

{ "index": {}}

{ "price" : 2500, "color" : "蓝色", "brand" : "小米", "sold_date" : "2020-02-12" }

Requirement 1: find which TV color sells the most

GET /tvs/_search

{

"size" : 0,

"aggs" : {

"popular_colors" : {

"terms" : {

"field" : "color"

}

}

}

}

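Query parameters:

- size: 0 returns only the aggregation results, not the matching documents

- aggs: fixed keyword that starts the aggregation definition

- popular_colors: a name of our choice; every aggregation must be named

- terms: groups documents by the values of a field

- field: the field whose values define the groups

Response: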

{

"took" : 18,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 8,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"popular_colors" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "红色",

"doc_count" : 4

},

{

"key" : "绿色",

"doc_count" : 2

},

{

"key" : "蓝色",

"doc_count" : 2

}

]

}

}

}

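Response fields:

- hits.hits: empty, because size is 0

- aggregations: the aggregation results

- popular_colors: the name we gave the aggregation

- buckets: the buckets created from the values of the chosen field

- key: the field value that the bucket stands for

- doc_count: the number of documents in the bucket, here the number of TVs sold in that color

By default, the buckets are sorted by doc_count in descending order.

Requirement 2: average TV price for each color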

GET /tvs/_search

{

"size" : 0,

"aggs": {

"colors": {

"terms": {

"field": "color"

},

"aggs": {

"avg_price": {

"avg": {

"field": "price"

}

}

}

}

}

}

Next to the bucket aggregation (terms), at the same JSON level, add a second aggs. Inside it, name a metric aggregation and use avg. It computes the average of the price field over the documents in each bucket.

Response:

{

"took" : 4,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 8,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"colors" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "红色",

"doc_count" : 4,

"avg_price" : {

"value" : 3250.0

}

},

{

"key" : "绿色",

"doc_count" : 2,

"avg_price" : {

"value" : 2100.0

}

},

{

"key" : "蓝色",

"doc_count" : 2,

"avg_price" : {

"value" : 2000.0

}

}

]

}

}

}

In each bucket, besides key and doc_count:

- avg_price: the name we gave the metric aggregation

- value: the result of the metric, i.e. the average price of the documents in the bucket

Equivalent SQL: select color, avg(price) from tvs group by color
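To check the response above, the same grouping can be computed in memory over the eight documents from the bulk request. A few assumptions apply:

- Values are transliterated to ASCII: 红色 = red, 绿色 = green, 蓝色 = blue, 长虹 = Changhong, 小米 = Xiaomi, 三星 = Samsung.
- Buckets are ordered by average price, descending, as `"order": {"avg_price": "desc"}` would order them.
- This is not how Elasticsearch executes aggregations.
- `ColorAvgDemo` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.DoubleSummaryStatistics;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

class ColorAvgDemo {
    // price,color,brand,sold_date for the eight documents of POST /tvs/_bulk (ASCII transliteration)
    static final String[] ROWS = {
        "1000,red,Changhong,2019-10-28", "2000,red,Changhong,2019-11-05",
        "3000,green,Xiaomi,2019-05-18",  "1500,blue,TCL,2019-07-02",
        "1200,green,TCL,2019-08-19",     "2000,red,Changhong,2019-11-05",
        "8000,red,Samsung,2020-01-01",   "2500,blue,Xiaomi,2020-02-12"
    };

    // terms(color) + avg(price): color -> {doc_count, avg_price}, ordered by avg_price descending
    static LinkedHashMap<String, double[]> avgPriceByColor() {
        Map<String, DoubleSummaryStatistics> stats = Arrays.stream(ROWS)
                .map(row -> row.split(","))
                .collect(Collectors.groupingBy(f -> f[1],
                        Collectors.summarizingDouble(f -> Double.parseDouble(f[0]))));
        LinkedHashMap<String, double[]> buckets = new LinkedHashMap<>();
        stats.entrySet().stream()
                .sorted((a, b) -> Double.compare(b.getValue().getAverage(), a.getValue().getAverage()))
                .forEach(e -> buckets.put(e.getKey(),
                        new double[] {e.getValue().getCount(), e.getValue().getAverage()}));
        return buckets;
    }

    public static void main(String[] args) {
        avgPriceByColor().forEach((color, v) ->
                System.out.println(color + " doc_count=" + (long) v[0] + " avg_price=" + v[1]));
    }
}
```

The averages match the response: red 3250.0 over 4 TVs, green 2100.0 over 2, and blue 2000.0 over 2.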

Requirement 3: drill down further

For each color, the average price, and within each color, the average price of each brand

GET /tvs/_search

{

"size": 0,

"aggs": {

"group_by_color": {

"terms": {

"field": "color"

},

"aggs": {

"color_avg_price": {

"avg": {

"field": "price"

}

},

"group_by_brand": {

"terms": {

"field": "brand"

},

"aggs": {

"brand_avg_price": {

"avg": {

"field": "price"

}

}

}

}

}

}

}

}

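Requirement 4: more metrics

For each color, compute the number of sales, the average price, the maximum price, the minimum price, and the total price

- count: every terms bucket automatically has a doc_count, which acts as the count

- avg: the avg aggregation computes the average

- max: the largest value of the given field within a bucket

- min: the smallest value of the given field within a bucket

- sum: the sum of the given field's values within a bucket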

GET /tvs/_search

{

"size" : 0,

"aggs": {

"colors": {

"terms": {

"field": "color"

},

"aggs": {

"avg_price": { "avg": { "field": "price" } },

"min_price" : { "min": { "field": "price"} },

"max_price" : { "max": { "field": "price"} },

"sum_price" : { "sum": { "field": "price" } }

}

}

}

}

Requirement 5: ranges with histogram

Split prices into ranges of 2000 and compute the total sales in each range

GET /tvs/_search

{

"size" : 0,

"aggs":{

"price":{

"histogram":{

"field": "price",

"interval": 2000

},

"aggs":{

"income": {

"sum": {

"field" : "price"

}

}

}

}

}

}

histogram: like terms, it is a bucket aggregation. It takes a field and creates one bucket for each fixed-width range of that field's values.

"histogram":{

"field": "price",

"interval": 2000

}

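interval: 2000 splits the prices into the ranges 0~2000, 2000~4000, 4000~6000, 6000~8000 and 8000~10000. Each range becomes one bucket, with the lower bound inclusive and the upper bound exclusive.

Once the buckets exist, any metric (avg, count, sum, max, min, and so on) can be computed for each of them.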
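A histogram bucket key can be sketched as floor(value / interval) * interval. The code below works as follows:

- It assigns the TV prices to 2000-wide buckets.
- It sums the price in each bucket, like the `income` sub-aggregation.
- It keeps the empty buckets between the lowest and highest key, as you get with min_doc_count 0.

This is an in-memory illustration; the class name `HistogramDemo` is hypothetical.

```java
import java.util.TreeMap;

class HistogramDemo {
    // key -> {doc_count, sum(price)}; key = floor(price / interval) * interval
    static TreeMap<Long, long[]> histogram(long[] prices, long interval) {
        TreeMap<Long, long[]> buckets = new TreeMap<>();
        for (long p : prices) {
            long key = Math.floorDiv(p, interval) * interval;
            long[] b = buckets.computeIfAbsent(key, k -> new long[2]);
            b[0]++;      // doc_count
            b[1] += p;   // income (sum of price)
        }
        // fill the gaps between the first and last key with empty buckets
        if (!buckets.isEmpty()) {
            for (long k = buckets.firstKey(); k < buckets.lastKey(); k += interval) {
                buckets.putIfAbsent(k, new long[2]);
            }
        }
        return buckets;
    }

    public static void main(String[] args) {
        long[] prices = {1000, 2000, 3000, 1500, 1200, 2000, 8000, 2500};
        histogram(prices, 2000).forEach((key, b) ->
                System.out.println(key + ": doc_count=" + b[0] + " income=" + b[1]));
    }
}
```

With the sample data, this yields the following buckets:

- 0: 3 documents, income 3700
- 2000: 4 documents, income 9500
- 4000 and 6000: empty
- 8000: 1 document, income 8000

The 3700 matches the `income` value quoted in the Java API section.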

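Requirement 6: aggregate by date

Count the number of sales per month

- date_histogram: creates buckets from a date field, one per date interval (here one month). Since Elasticsearch 7.2, the interval parameter of date_histogram is deprecated in favor of calendar_interval (or fixed_interval). The Java code in section 18 uses calendar_interval.

- min_doc_count: with 0, an interval that contains no documents at all (for example 2017-01-01~2017-01-31) is still returned as an empty bucket instead of being dropped

- extended_bounds (min, max): forces buckets to be generated across the whole range from the start date to the end date, even where there is no data. It extends the range rather than limiting it, and it only has a visible effect together with min_doc_count: 0.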

GET /tvs/_search

{

"size" : 0,

"aggs": {

"sales": {

"date_histogram": {

"field": "sold_date",

"interval": "month",

"format": "yyyy-MM-dd",

"min_doc_count" : 0,

"extended_bounds" : {

"min" : "2019-01-01",

"max" : "2020-12-31"

}

}

}

}

}

Requirement 7: sales per brand per quarter, and total sales per quarter

GET /tvs/_search

{

"size": 0,

"aggs": {

"group_by_sold_date": {

"date_histogram": {

"field": "sold_date",

"interval": "quarter",

"format": "yyyy-MM-dd",

"min_doc_count": 0,

"extended_bounds": {

"min": "2019-01-01",

"max": "2020-12-31"

}

},

"aggs": {

"group_by_brand": {

"terms": {

"field": "brand"

},

"aggs": {

"sum_price": {

"sum": {

"field": "price"

}

}

}

},

"total_sum_price": {

"sum": {

"field": "price"

}

}

}

}

}

}
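The nested request above can be mirrored in memory:

1. Compute each document's calendar-quarter key, which is the first day of its quarter.
2. Sum the price per brand within each quarter.
3. Add a per-quarter total.

This is a sketch over the bulk data, with these assumptions:

- Brand names are transliterated to ASCII: 长虹 = Changhong, 小米 = Xiaomi, 三星 = Samsung.
- The `_total` key and the class name `QuarterlySalesDemo` are hypothetical.
- Time zones are ignored.

```java
import java.time.LocalDate;
import java.util.TreeMap;

class QuarterlySalesDemo {
    // price,color,brand,sold_date from POST /tvs/_bulk (ASCII transliteration)
    static final String[] ROWS = {
        "1000,red,Changhong,2019-10-28", "2000,red,Changhong,2019-11-05",
        "3000,green,Xiaomi,2019-05-18",  "1500,blue,TCL,2019-07-02",
        "1200,green,TCL,2019-08-19",     "2000,red,Changhong,2019-11-05",
        "8000,red,Samsung,2020-01-01",   "2500,blue,Xiaomi,2020-02-12"
    };

    // Calendar-quarter bucket key: first day of the quarter (2019-11-05 -> 2019-10-01)
    static LocalDate quarterStart(LocalDate d) {
        int firstMonth = (d.getMonthValue() - 1) / 3 * 3 + 1;
        return LocalDate.of(d.getYear(), firstMonth, 1);
    }

    // quarter -> (brand -> sum(price)); "_total" plays the role of total_sum_price.
    // Quarters between min and max are pre-created, like min_doc_count 0 + extended_bounds.
    static TreeMap<LocalDate, TreeMap<String, Long>> salesByQuarter(LocalDate min, LocalDate max) {
        TreeMap<LocalDate, TreeMap<String, Long>> out = new TreeMap<>();
        for (LocalDate q = quarterStart(min); !q.isAfter(max); q = q.plusMonths(3)) {
            out.put(q, new TreeMap<>());
        }
        for (String row : ROWS) {
            String[] f = row.split(",");
            long price = Long.parseLong(f[0]);
            TreeMap<String, Long> brands =
                    out.computeIfAbsent(quarterStart(LocalDate.parse(f[3])), k -> new TreeMap<>());
            brands.merge(f[2], price, Long::sum);     // group_by_brand -> sum_price
            brands.merge("_total", price, Long::sum); // total_sum_price
        }
        return out;
    }

    public static void main(String[] args) {
        salesByQuarter(LocalDate.of(2019, 1, 1), LocalDate.of(2020, 12, 31))
                .forEach((quarter, sums) -> System.out.println(quarter + " " + sums));
    }
}
```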

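Requirement 8: search combined with aggregation: sales per color for one brand

Search and aggregation can be combined.

SQL: select color, count(*) from tvs where brand like '%小米%' group by color

Aggregation scope: every aggregation runs on the documents returned by the search, so the search results are the scope of the aggregation.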

GET /tvs/_search

{

"size": 0,

"query": {

"term": {

"brand": {

"value": "小米"

}

}

},

"aggs": {

"group_by_color": {

"terms": {

"field": "color"

}

}

}

}

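Requirement 9: global bucket: one brand's average price compared with the average price of all brands

Aggregation scope: an aggregation normally runs within the results of the query.

This request returns two results. The first is aggregated over the query results. The second, inside the global bucket, is aggregated over all documents regardless of the query.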

GET /tvs/_search

{

"size": 0,

"query": {

"term": {

"brand": {

"value": "小米"

}

}

},

"aggs": {

"single_brand_avg_price": {

"avg": {

"field": "price"

}

},

"all": {

"global": {},

"aggs": {

"all_brand_avg_price": {

"avg": {

"field": "price"

}

}

}

}

}

}
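The difference between the query-scoped average and the global average can be reproduced in memory. In this sketch, `brand == null` plays the role of the global bucket. Brand names are transliterated (小米 = Xiaomi), and `GlobalBucketDemo` is a hypothetical name.

```java
import java.util.Arrays;

class GlobalBucketDemo {
    // price,color,brand,sold_date from POST /tvs/_bulk (ASCII transliteration)
    static final String[] ROWS = {
        "1000,red,Changhong,2019-10-28", "2000,red,Changhong,2019-11-05",
        "3000,green,Xiaomi,2019-05-18",  "1500,blue,TCL,2019-07-02",
        "1200,green,TCL,2019-08-19",     "2000,red,Changhong,2019-11-05",
        "8000,red,Samsung,2020-01-01",   "2500,blue,Xiaomi,2020-02-12"
    };

    // avg(price) over the documents matching an optional brand term; null means "no query scope"
    static double avgPrice(String brand) {
        return Arrays.stream(ROWS)
                .map(row -> row.split(","))
                .filter(f -> brand == null || f[2].equals(brand))
                .mapToLong(f -> Long.parseLong(f[0]))
                .average()
                .orElse(Double.NaN);
    }

    public static void main(String[] args) {
        // single_brand_avg_price: scoped to the query (term brand = Xiaomi)
        System.out.println("single_brand_avg_price = " + avgPrice("Xiaomi"));
        // all_brand_avg_price: the global bucket ignores the query
        System.out.println("all_brand_avg_price = " + avgPrice(null));
    }
}
```

With the sample data, the Xiaomi average is 2750.0, that is (3000 + 2500) / 2. The average over all eight TVs is 2650.0, that is 21200 / 8.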

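Requirement 10: filter + aggregation: average price of TVs priced at 1200 or more

Just as a search can be combined with an aggregation, so can a filter. Note that gte means greater than or equal to: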

GET /tvs/_search

{

"size": 0,

"query": {

"constant_score": {

"filter": {

"range": {

"price": {

"gte": 1200

}

}

}

}

},

"aggs": {

"avg_price": {

"avg": {

"field": "price"

}

}

}

}

Requirement 11: bucket filter: a brand's average price over recent time windows (here the last 150, 140 and 130 days)

GET /tvs/_search

{

"size": 0,

"query": {

"term": {

"brand": {

"value": "小米"

}

}

},

"aggs": {

"recent_150d": {

"filter": {

"range": {

"sold_date": {

"gte": "now-150d"

}

}

},

"aggs": {

"recent_150d_avg_price": {

"avg": {

"field": "price"

}

}

}

},

"recent_140d": {

"filter": {

"range": {

"sold_date": {

"gte": "now-140d"

}

}

},

"aggs": {

"recent_140d_avg_price": {

"avg": {

"field": "price"

}

}

}

},

"recent_130d": {

"filter": {

"range": {

"sold_date": {

"gte": "now-130d"

}

}

},

"aggs": {

"recent_130d_avg_price": {

"avg": {

"field": "price"

}

}

}

}

}

}

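aggs.filter applies only to the aggregation it belongs to.

A filter placed in the query is global: it affects all the data, including every aggregation.

For example, suppose you want the average price of Changhong TVs over the last month, the last 3 months, and the last 6 months. Use one filter bucket per time window.

bucket filter: filters the data separately for the aggs under each bucket

Requirement 12: sorting: order the color buckets by average price (this request sorts in ascending order; use "desc" for descending)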

GET /tvs/_search

{

"size": 0,

"aggs": {

"group_by_color": {

"terms": {

"field": "color",

"order": {

"avg_price": "asc"

}

},

"aggs": {

"avg_price": {

"avg": {

"field": "price"

}

}

}

}

}

}

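Similar to SQL, a value computed in a sub-aggregation (here avg_price) can be referenced directly. In this case it is used to order the buckets.

Requirement 13: sorting: within each color, sort the brands by average price in descending order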

GET /tvs/_search

{

"size": 0,

"aggs": {

"group_by_color": {

"terms": {

"field": "color"

},

"aggs": {

"group_by_brand": {

"terms": {

"field": "brand",

"order": {

"avg_price": "desc"

}

},

"aggs": {

"avg_price": {

"avg": {

"field": "price"

}

}

}

}

}

}

}

}

18. Aggregations with the Java API

package com.itheima.es;

import org.elasticsearch.action.search.SearchRequest;

import org.elasticsearch.action.search.SearchResponse;

import org.elasticsearch.client.RequestOptions;

import org.elasticsearch.client.RestHighLevelClient;

import org.elasticsearch.index.query.QueryBuilders;

import org.elasticsearch.search.aggregations.Aggregation;

import org.elasticsearch.search.aggregations.AggregationBuilders;

import org.elasticsearch.search.aggregations.Aggregations;

import org.elasticsearch.search.aggregations.bucket.histogram.*;

import org.elasticsearch.search.aggregations.bucket.terms.Terms;

import org.elasticsearch.search.aggregations.bucket.terms.TermsAggregationBuilder;

import org.elasticsearch.search.aggregations.metrics.*;

import org.elasticsearch.search.builder.SearchSourceBuilder;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import java.io.IOException;

import java.util.List;

/**

* created by itheima.itcast

*/

@SpringBootTest

@RunWith(SpringRunner.class)

public class TestAggs {

@Autowired

RestHighLevelClient client;

//Requirement 1: group by color and count the TVs sold in each color

@Test

public void testAggs() throws IOException {

// GET /tvs/_search

// {

// "size": 0,

// "query": {"match_all": {}},

// "aggs": {

// "group_by_color": {

// "terms": {

// "field": "color"

// }

// }

// }

// }

//1 Build the request

SearchRequest searchRequest=new SearchRequest("tvs");

//Request body

SearchSourceBuilder searchSourceBuilder=new SearchSourceBuilder();

searchSourceBuilder.size(0);

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

TermsAggregationBuilder termsAggregationBuilder = AggregationBuilders.terms("group_by_color").field("color");

searchSourceBuilder.aggregation(termsAggregationBuilder);

//Attach the request body to the request

searchRequest.source(searchSourceBuilder);

//2 Execute

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

//3 Read the results

// "aggregations" : {

// "group_by_color" : {

// "doc_count_error_upper_bound" : 0,

// "sum_other_doc_count" : 0,

// "buckets" : [

// {

// "key" : "红色",

// "doc_count" : 4

// },

// {

// "key" : "绿色",

// "doc_count" : 2

// },

// {

// "key" : "蓝色",

// "doc_count" : 2

// }

// ]

// }

Aggregations aggregations = searchResponse.getAggregations();

Terms group_by_color = aggregations.get("group_by_color");

List<? extends Terms.Bucket> buckets = group_by_color.getBuckets();

for (Terms.Bucket bucket : buckets) {

String key = bucket.getKeyAsString();

System.out.println("key:"+key);

long docCount = bucket.getDocCount();

System.out.println("docCount:"+docCount);

System.out.println("=================================");

}

}

// Requirement 2: group by color; count the TVs sold and compute the average price for each color

@Test

public void testAggsAndAvg() throws IOException {

// GET /tvs/_search

// {

// "size": 0,

// "query": {"match_all": {}},

// "aggs": {

// "group_by_color": {

// "terms": {

// "field": "color"

// },

// "aggs": {

// "avg_price": {

// "avg": {

// "field": "price"

// }

// }

// }

// }

// }

// }

//1 Build the request

SearchRequest searchRequest=new SearchRequest("tvs");

//Request body

SearchSourceBuilder searchSourceBuilder=new SearchSourceBuilder();

searchSourceBuilder.size(0);

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

TermsAggregationBuilder termsAggregationBuilder = AggregationBuilders.terms("group_by_color").field("color");

//Add a sub-aggregation under the terms aggregation

AvgAggregationBuilder avgAggregationBuilder = AggregationBuilders.avg("avg_price").field("price");

termsAggregationBuilder.subAggregation(avgAggregationBuilder);

searchSourceBuilder.aggregation(termsAggregationBuilder);

//Attach the request body to the request

searchRequest.source(searchSourceBuilder);

//2 Execute

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

//3 Read the results

// {

// "key" : "红色",

// "doc_count" : 4,

// "avg_price" : {

// "value" : 3250.0

// }

// }

Aggregations aggregations = searchResponse.getAggregations();

Terms group_by_color = aggregations.get("group_by_color");

List<? extends Terms.Bucket> buckets = group_by_color.getBuckets();

for (Terms.Bucket bucket : buckets) {

String key = bucket.getKeyAsString();

System.out.println("key:"+key);

long docCount = bucket.getDocCount();

System.out.println("docCount:"+docCount);

Aggregations aggregations1 = bucket.getAggregations();

Avg avg_price = aggregations1.get("avg_price");

double value = avg_price.getValue();

System.out.println("value:"+value);

System.out.println("=================================");

}

}

// Requirement 3: group by color; count the TVs sold and compute the average, maximum, minimum and total price for each color

@Test

public void testAggsAndMore() throws IOException {

// GET /tvs/_search

// {

// "size" : 0,

// "aggs": {

// "group_by_color": {

// "terms": {

// "field": "color"

// },

// "aggs": {

// "avg_price": { "avg": { "field": "price" } },

// "min_price" : { "min": { "field": "price"} },

// "max_price" : { "max": { "field": "price"} },

// "sum_price" : { "sum": { "field": "price" } }

// }

// }

// }

// }

//1 Build the request

SearchRequest searchRequest=new SearchRequest("tvs");

//Request body

SearchSourceBuilder searchSourceBuilder=new SearchSourceBuilder();

searchSourceBuilder.size(0);

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

TermsAggregationBuilder termsAggregationBuilder = AggregationBuilders.terms("group_by_color").field("color");

//Add several sub-aggregations to termsAggregationBuilder

AvgAggregationBuilder avgAggregationBuilder = AggregationBuilders.avg("avg_price").field("price");

MinAggregationBuilder minAggregationBuilder = AggregationBuilders.min("min_price").field("price");

MaxAggregationBuilder maxAggregationBuilder = AggregationBuilders.max("max_price").field("price");

SumAggregationBuilder sumAggregationBuilder = AggregationBuilders.sum("sum_price").field("price");

termsAggregationBuilder.subAggregation(avgAggregationBuilder);

termsAggregationBuilder.subAggregation(minAggregationBuilder);

termsAggregationBuilder.subAggregation(maxAggregationBuilder);

termsAggregationBuilder.subAggregation(sumAggregationBuilder);

searchSourceBuilder.aggregation(termsAggregationBuilder);

//Attach the request body to the request

searchRequest.source(searchSourceBuilder);

//2 Execute

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

//3 Read the results

// {

// "key" : "红色",

// "doc_count" : 4,

// "max_price" : {

// "value" : 8000.0

// },

// "min_price" : {

// "value" : 1000.0

// },

// "avg_price" : {

// "value" : 3250.0

// },

// "sum_price" : {

// "value" : 13000.0

// }

// }

Aggregations aggregations = searchResponse.getAggregations();

Terms group_by_color = aggregations.get("group_by_color");

List<? extends Terms.Bucket> buckets = group_by_color.getBuckets();

for (Terms.Bucket bucket : buckets) {

String key = bucket.getKeyAsString();

System.out.println("key:"+key);

long docCount = bucket.getDocCount();

System.out.println("docCount:"+docCount);

Aggregations aggregations1 = bucket.getAggregations();

Max max_price = aggregations1.get("max_price");

double maxPriceValue = max_price.getValue();

System.out.println("maxPriceValue:"+maxPriceValue);

Min min_price = aggregations1.get("min_price");

double minPriceValue = min_price.getValue();

System.out.println("minPriceValue:"+minPriceValue);

Avg avg_price = aggregations1.get("avg_price");

double avgPriceValue = avg_price.getValue();

System.out.println("avgPriceValue:"+avgPriceValue);

Sum sum_price = aggregations1.get("sum_price");

double sumPriceValue = sum_price.getValue();

System.out.println("sumPriceValue:"+sumPriceValue);

System.out.println("=================================");

}

}

// Requirement 4: split prices into ranges of 2000 and compute the total sales in each range (histogram)

@Test

public void testAggsAndHistogram() throws IOException {

// GET /tvs/_search

// {

// "size" : 0,

// "aggs":{

// "by_histogram":{

// "histogram":{

// "field": "price",

// "interval": 2000

// },

// "aggs":{

// "income": {

// "sum": {

// "field" : "price"

// }

// }

// }

// }

// }

// }

//1 Build the request

SearchRequest searchRequest=new SearchRequest("tvs");

//Request body

SearchSourceBuilder searchSourceBuilder=new SearchSourceBuilder();

searchSourceBuilder.size(0);

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

HistogramAggregationBuilder histogramAggregationBuilder = AggregationBuilders.histogram("by_histogram").field("price").interval(2000);

SumAggregationBuilder sumAggregationBuilder = AggregationBuilders.sum("income").field("price");

histogramAggregationBuilder.subAggregation(sumAggregationBuilder);

searchSourceBuilder.aggregation(histogramAggregationBuilder);

//Attach the request body to the request

searchRequest.source(searchSourceBuilder);

//2 Execute

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

//3 Read the results

// {

// "key" : 0.0,

// "doc_count" : 3,

// "income" : {

// "value" : 3700.0

// }

// }

Aggregations aggregations = searchResponse.getAggregations();

Histogram group_by_color = aggregations.get("by_histogram");

List<? extends Histogram.Bucket> buckets = group_by_color.getBuckets();

for (Histogram.Bucket bucket : buckets) {

String keyAsString = bucket.getKeyAsString();

System.out.println("keyAsString:"+keyAsString);

long docCount = bucket.getDocCount();

System.out.println("docCount:"+docCount);

Aggregations aggregations1 = bucket.getAggregations();

Sum income = aggregations1.get("income");

double value = income.getValue();

System.out.println("value:"+value);

System.out.println("=================================");

}

}

// Requirement 5: total sales for each quarter

@Test

public void testAggsAndDateHistogram() throws IOException {

// GET /tvs/_search

// {

// "size" : 0,

// "aggs": {

// "sales": {

// "date_histogram": {

// "field": "sold_date",

// "interval": "quarter",

// "format": "yyyy-MM-dd",

// "min_doc_count" : 0,

// "extended_bounds" : {

// "min" : "2019-01-01",

// "max" : "2020-12-31"

// }

// },

// "aggs": {

// "income": {

// "sum": {

// "field": "price"

// }

// }

// }

// }

// }

// }

//1 Build the request

SearchRequest searchRequest=new SearchRequest("tvs");

//Request body

SearchSourceBuilder searchSourceBuilder=new SearchSourceBuilder();

searchSourceBuilder.size(0);

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

DateHistogramAggregationBuilder dateHistogramAggregationBuilder = AggregationBuilders.dateHistogram("date_histogram").field("sold_date").calendarInterval(DateHistogramInterval.QUARTER)

.format("yyyy-MM-dd").minDocCount(0).extendedBounds(new ExtendedBounds("2019-01-01", "2020-12-31"));

SumAggregationBuilder sumAggregationBuilder = AggregationBuilders.sum("income").field("price");

dateHistogramAggregationBuilder.subAggregation(sumAggregationBuilder);

searchSourceBuilder.aggregation(dateHistogramAggregationBuilder);

//Attach the request body to the request

searchRequest.source(searchSourceBuilder);

//2 Execute

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

//3 Read the results

// {

// "key_as_string" : "2019-01-01",

// "key" : 1546300800000,

// "doc_count" : 0,

// "income" : {

// "value" : 0.0

// }

// }

Aggregations aggregations = searchResponse.getAggregations();

ParsedDateHistogram date_histogram = aggregations.get("date_histogram");

List<? extends Histogram.Bucket> buckets = date_histogram.getBuckets();

for (Histogram.Bucket bucket : buckets) {

String keyAsString = bucket.getKeyAsString();

System.out.println("keyAsString:"+keyAsString);

long docCount = bucket.getDocCount();

System.out.println("docCount:"+docCount);

Aggregations aggregations1 = bucket.getAggregations();

Sum income = aggregations1.get("income");

double value = income.getValue();

System.out.println("value:"+value);

System.out.println("====================");

}

}

}

19. SQL in Elasticsearch 7

19.1 Quick start

POST /_sql?format=txt

{

"query": "SELECT * FROM tvs "

}

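19.2 Ways to run SQL

- HTTP requests to the _sql REST endpoint

- The command-line client: elasticsearch-sql-cli.bat

- Code, for example through the JDBC driver (see 19.6)

19.3 Output formats

The format URL parameter controls how results are displayed. Examples include format=txt (a plain-text table), csv, tsv, json, and yaml.

19.4 Translating SQL to Query DSL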

POST /_sql/translate

{

"query": "SELECT * FROM tvs "

}

Response:

{

"size" : 1000,

"_source" : false,

"stored_fields" : "_none_",

"docvalue_fields" : [

{

"field" : "brand"

},

{

"field" : "color"

},

{

"field" : "price"

},

{

"field" : "sold_date",

"format" : "epoch_millis"

}

],

"sort" : [

{

"_doc" : {

"order" : "asc"

}

}

]

}

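19.5 Combining SQL with Query DSL

The filter parameter adds a Query DSL filter that is applied together with the SQL query: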

POST /_sql?format=txt

{

"query": "SELECT * FROM tvs",

"filter": {

"range": {

"price": {

"gte" : 1200,

"lte" : 2000

}

}

}

}

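19.6 Running SQL from Java

1 Prerequisite: the cluster needs Platinum-level features, because the JDBC client is a paid feature

In Kibana, go to Management -> License Management and start the Platinum trial.

2 Add the dependency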

<dependency>

<groupId>org.elasticsearch.plugin</groupId>

<artifactId>x-pack-sql-jdbc</artifactId>

<version>7.3.0</version>

</dependency>

<repositories>

<repository>

<id>elastic.co</id>

<url>https://artifacts.elastic.co/maven</url>

</repository>

</repositories>

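3 Code

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class SqlJdbcDemo {

    public static void main(String[] args) {
        // 1 Create the connection, 2 create a statement, 3 execute the SQL;
        // try-with-resources closes all three automatically
        try (Connection connection = DriverManager.getConnection("jdbc:es://http://localhost:9200");
             Statement statement = connection.createStatement();
             ResultSet results = statement.executeQuery("select * from tvs")) {
            // 4 Read the results (JDBC column indexes start at 1)
            while (results.next()) {
                System.out.println(results.getString(1));
                System.out.println(results.getString(2));
                System.out.println(results.getString(3));
                System.out.println(results.getString(4));
                System.out.println("============================");
            }
        } catch (SQLException e) {
            e.printStackTrace();
        }
    }
}

Large enterprises can buy the Platinum license, which adds X-Pack features such as Machine Learning and advanced security.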

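20. Learning Logstash

20.1 Basic configuration structure

1 Introduction to Logstash

Logstash is a data extraction tool that moves data from one place to another, comparable to Sqoop in the Hadoop ecosystem. Download: https://www.elastic.co/cn/downloads/logstash

Much of Logstash's power and popularity comes from its rich set of filter plugins. Filters do more than filter. They can apply complex processing logic to the raw data passing through them, and can even add new events to the rest of the pipeline.

A Logstash configuration file consists of the three sections below. input and output are required. filter is optional; it is where filter plugins implement the various log-processing features.

2 Configuration file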

input {

# input plugins

}

filter {

# filter (matching) plugins

}

output {

# output plugins

}

3 Starting Logstash

logstash.bat -e "input{stdin{}} output{stdout{}}"

For easier maintenance, put the configuration in a file and start Logstash with it:

logstash.bat -f ../config/test1.conf

20.2 Input plugins

https://www.elastic.co/guide/en/logstash/current/input-plugins.html

1. Standard input (stdin)

input{

stdin{

}

}

output {

stdout{

codec=>rubydebug

}

}

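2. Reading files (file)

Logstash uses a Ruby gem called filewatch to watch files for changes, and records how far each watched file has been read (its byte offset) in a database file called sincedb. By default the sincedb files live under <path.data>/plugins/inputs/file with names like .sincedb_123456, where <path.data> is Logstash's data directory, LOGSTASH_HOME/data by default.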

input {

file {

path => ["/var/*/*"]

start_position => "beginning"

}

}

output {

stdout{

codec=>rubydebug

}

}

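By default (start_position => "end"), Logstash starts reading at the end of a file and picks up new lines as they are appended, much like tail -f. Setting start_position => "beginning", as in the example above, makes it read from the start of the file; this setting only applies to files for which sincedb has no recorded position yet.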
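The idea behind sincedb, remembering how far a file has been read and resuming from there, can be sketched in a few lines of Java. This is a simplified illustration with a hypothetical class OffsetTailer, not Logstash's filewatch code: the real sincedb also records identifiers such as the file's inode and device and copes with rotation and truncation, all of which this sketch ignores. Only complete lines are consumed; a trailing partial line is left for the next poll.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Simplified offset-based tailing, similar in spirit to the position kept in sincedb.
final class OffsetTailer {
    static final class Poll {
        final List<String> lines; // complete lines read in this poll
        final long nextOffset;    // byte offset to persist for the next poll

        Poll(List<String> lines, long nextOffset) {
            this.lines = lines;
            this.nextOffset = nextOffset;
        }
    }

    static Poll poll(Path file, long offset) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            long length = raf.length();
            if (offset >= length) {
                return new Poll(Collections.emptyList(), offset); // nothing new
            }
            byte[] buf = new byte[(int) (length - offset)];
            raf.seek(offset);
            raf.readFully(buf);
            // Consume only up to the last newline; a partial last line waits.
            int lastNewline = -1;
            for (int i = buf.length - 1; i >= 0; i--) {
                if (buf[i] == '\n') {
                    lastNewline = i;
                    break;
                }
            }
            if (lastNewline < 0) {
                return new Poll(Collections.emptyList(), offset);
            }
            String chunk = new String(buf, 0, lastNewline, StandardCharsets.UTF_8);
            return new Poll(Arrays.asList(chunk.split("\n", -1)), offset + lastNewline + 1);
        }
    }
}
```

Persisting nextOffset between runs is what lets a restarted reader skip lines it has already shipped.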

3. Reading data from TCP

input {

tcp {

port => "1234"

}

}

filter {

grok {

match => { "message" => "%{SYSLOGLINE}" }

}

}

output {

stdout{

codec=>rubydebug

}

}

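20.3 Filter plugins

https://www.elastic.co/guide/en/logstash/current/filter-plugins.html

20.3.1 Grok regex capture

grok is a very powerful Logstash filter plugin. It parses arbitrary text with regular expressions and turns unstructured log data into structured, easily queryable fields. It is currently the best way to parse unstructured log data in Logstash.

The grok syntax is:

%{SYNTAX:SEMANTIC}

SYNTAX is the name of a predefined pattern (such as IP or NUMBER) and SEMANTIC is the name of the field that receives the matched text.

For example, given this input: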

172.16.213.132 [07/Feb/2019:16:24:19 +0800] "GET / HTTP/1.1" 403 5039

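The pattern %{IP:clientip} yields: clientip: 172.16.213.132

%{HTTPDATE:timestamp} yields: timestamp: 07/Feb/2019:16:24:19 +0800

and %{QS:referrer} yields: referrer: "GET / HTTP/1.1" (QS matches a quoted string, so the quotes are included)

The following combined pattern captures everything in the input above: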

%{IP:clientip}\ \[%{HTTPDATE:timestamp}\]\ %{QS:referrer}\ %{NUMBER:response}\ %{NUMBER:bytes}

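This combined pattern splits the input into five parts, i.e. five fields (clientip, timestamp, referrer, response, bytes). Breaking input into separate fields like this makes later parsing and querying of the log data much easier, which is exactly why grok is used.

Example: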

input{

stdin{}

}

filter{

grok{

match => ["message","%{IP:clientip}\ \[%{HTTPDATE:timestamp}\]\ %{QS:referrer}\ %{NUMBER:response}\ %{NUMBER:bytes}"]

}

}

output{

stdout{

codec => "rubydebug"

}

}

Input:

172.16.213.132 [07/Feb/2019:16:24:19 +0800] "GET / HTTP/1.1" 403 5039
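To make the SYNTAX:SEMANTIC idea concrete, here is roughly what the combined pattern does, written as a java.util.regex pattern with one named group per SEMANTIC. This is a hand-simplified stand-in with a hypothetical class AccessLineParser: grok's real IP, HTTPDATE, QS and NUMBER definitions are considerably stricter than the sub-patterns used here.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified regex equivalent of the combined grok pattern (one named group per field).
final class AccessLineParser {
    private static final Pattern LINE = Pattern.compile(
            "(?<clientip>\\d{1,3}(?:\\.\\d{1,3}){3})"   // ~ %{IP:clientip} (IPv4 only)
            + " \\[(?<timestamp>[^\\]]+)\\]"             // ~ \[%{HTTPDATE:timestamp}\]
            + " (?<referrer>\"[^\"]*\")"                 // ~ %{QS:referrer}, quotes kept
            + " (?<response>\\d+(?:\\.\\d+)?)"           // ~ %{NUMBER:response}
            + " (?<bytes>\\d+(?:\\.\\d+)?)");            // ~ %{NUMBER:bytes}

    private static final List<String> FIELDS =
            List.of("clientip", "timestamp", "referrer", "response", "bytes");

    // Empty when the line does not match; grok would tag such an event with _grokparsefailure.
    static Optional<Map<String, String>> parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        Map<String, String> fields = new LinkedHashMap<>();
        for (String name : FIELDS) {
            fields.put(name, m.group(name));
        }
        return Optional.of(fields);
    }

    public static void main(String[] args) {
        String line = "172.16.213.132 [07/Feb/2019:16:24:19 +0800] \"GET / HTTP/1.1\" 403 5039";
        System.out.println(parse(line).orElseThrow());
    }
}
```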

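20.3.2 Date handling (date)

The date plugin is especially important for sorting events and backfilling old data. It converts a time field in the log record into a LogStash::Timestamp object and stores it in the @timestamp field, as briefly introduced earlier.

Below is an example date plugin configuration (only the filter section is shown):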

filter {

grok {

match => ["message", "%{HTTPDATE:timestamp}"]

}

date {

match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]

}

}
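The effect of this date filter can be reproduced with java.time: the captured text is parsed with the same pattern letters (for this particular pattern they mean the same thing in DateTimeFormatter), and the resulting instant is what ends up in @timestamp, which Logstash shows in UTC. A sketch with a hypothetical class HttpDateToTimestamp; Locale.ENGLISH is needed so that the month abbreviation "Feb" parses on non-English JVMs.

```java
import java.time.Instant;
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

final class HttpDateToTimestamp {
    // Same letters as the date filter pattern; ENGLISH so that "Feb" parses on any JVM locale.
    private static final DateTimeFormatter HTTPDATE =
            DateTimeFormatter.ofPattern("dd/MMM/yyyy:HH:mm:ss Z", Locale.ENGLISH);

    // Parse the captured text (including its +0800 offset) into an absolute instant.
    static Instant toTimestamp(String httpDate) {
        return OffsetDateTime.parse(httpDate, HTTPDATE).toInstant();
    }

    public static void main(String[] args) {
        // Instant.toString() prints UTC: 16:24:19 at +08:00 is 08:24:19Z
        System.out.println(toTimestamp("07/Feb/2019:16:24:19 +0800")); // prints 2019-02-07T08:24:19Z
    }
}
```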

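20.3.3 Modifying data (mutate)

(1) Regex replacement in a field (gsub)

gsub replaces the parts of a field's value that match a regular expression; it only works on string fields. An example of gsub in the mutate plugin (filter section only):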

filter {

mutate {

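gsub => ["field_name_1", "/" , "_"]

}

}

This example replaces every "/" character in field_name_1 with "_".

(2) Splitting a string into an array (split)

split breaks the string in a field into an array using the given separator. An example of split in the mutate plugin (filter section only):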

filter {

mutate {

split => ["field_name_2", "|"]

}

}

This example splits field_name_2 into an array at each "|".

(3) Renaming a field (rename)

rename renames a field. An example of rename in the mutate plugin (filter section only):

filter {

mutate {

rename => { "old_field" => "new_field" }

}

}

This example renames the field old_field to new_field.

(4) Removing a field (remove_field)

remove_field deletes a field. An example of remove_field in the mutate plugin (filter section only):

filter {

mutate {

remove_field => ["timestamp"]

}

}

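This example deletes the timestamp field.

(5) GeoIP lookup (geoip)

geoip is a separate filter plugin rather than a mutate option: it looks up the IP address stored in the source field and adds geographic information about it (such as country, city and coordinates) to the event.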

filter {

geoip {

source => "ip_field"

}

}

Combined example:

input {

stdin {}

}

filter {

grok {

match => { "message" => "%{IP:clientip}\ \[%{HTTPDATE:timestamp}\]\ %{QS:referrer}\ %{NUMBER:response}\ %{NUMBER:bytes}" }

remove_field => [ "message" ]

}

date {

match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]

}

mutate {

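# mutate always runs rename before convert, whatever order they are written in, so convert the renamed field

rename => { "response" => "response_new" }

convert => [ "response_new","float" ]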

gsub => ["referrer","\"",""]

split => ["clientip", "."]

}

}

output {

stdout {

codec => "rubydebug"

}

}
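According to the Logstash mutate documentation, the operations inside one mutate block run in a fixed order (among others: rename, then convert, then gsub, then split), not in the order they are written. The Java sketch below, a hypothetical class MutateOrderDemo that models only the four operations used in the combined example, shows the consequence: a convert aimed at the old name response finds nothing once the rename has run, while a convert on response_new works. Splitting operations into separate mutate blocks is the documented way to force a different order.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

// Simulates the combined example's mutate block in the documented fixed order.
final class MutateOrderDemo {
    static Map<String, Object> apply(Map<String, Object> input, String convertField) {
        Map<String, Object> event = new LinkedHashMap<>(input);
        // 1. rename => { "response" => "response_new" }
        if (event.containsKey("response")) {
            event.put("response_new", event.remove("response"));
        }
        // 2. convert => [ convertField, "float" ]; a missing field is simply skipped
        Object raw = event.get(convertField);
        if (raw instanceof String) {
            event.put(convertField, Double.parseDouble((String) raw));
        }
        // 3. gsub => ["referrer", "\"", ""]: strip the double quotes
        Object referrer = event.get("referrer");
        if (referrer instanceof String) {
            event.put("referrer", ((String) referrer).replace("\"", ""));
        }
        // 4. split => ["clientip", "."]: the separator is a literal dot
        Object ip = event.get("clientip");
        if (ip instanceof String) {
            event.put("clientip", Arrays.asList(((String) ip).split("\\.")));
        }
        return event;
    }
}
```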

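20.4 Output plugins

https://www.elastic.co/guide/en/logstash/current/output-plugins.html

output is the final stage of Logstash. An event can pass through several outputs, and once all of them have finished processing it, the event is done. Some commonly used outputs:

- file: writes log data to a file on disk.

- elasticsearch: sends log data to Elasticsearch, which stores it efficiently and makes it easy to query.

1. Writing to standard output (stdout)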

output {

stdout {

codec => rubydebug

}

}

2. Saving to a file (file)

output {

file {

path => "/data/log/%{+yyyy-MM-dd}/%{host}_%{+HH}.log"

}

}

3. Sending to Elasticsearch

output {

elasticsearch {

hosts => ["192.168.1.1:9200","172.16.213.77:9200"]

index => "logstash-%{+YYYY.MM.dd}"

}

}

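- hosts: an array of Elasticsearch node addresses with ports (the default port is 9200); several addresses can be listed.

- index: the name of the Elasticsearch index to write to; variables are allowed. Logstash supports the %{+YYYY.MM.dd} form: anything after a leading + is treated as a date format and filled in from the event's @timestamp. Splitting indices by day makes it easy to delete old data or search a given time range. Note that index names must not contain uppercase letters.

- manage_template: whether Logstash manages the index template automatically; setting it to false disables this. If you maintain your own template, set it to false.

- template_name: the name of the template in Elasticsearch.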
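The date part of an index name such as logstash-%{+YYYY.MM.dd} is formatted from each event's @timestamp, which is in UTC, so daily indices roll over at UTC midnight (08:00 Beijing time). A small java.time sketch of the same naming rule, with a hypothetical class DailyIndexName; it uses yyyy because in java.time the letters YYYY mean the week-based year, which differs from the calendar year around New Year.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

final class DailyIndexName {
    // yyyy = calendar year (in java.time, YYYY would be the week-based year).
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    // Mirrors index => "<prefix>-%{+YYYY.MM.dd}": the date is taken from @timestamp in UTC.
    static String indexFor(String prefix, Instant timestamp) {
        return prefix + "-" + DAY.format(timestamp);
    }
}
```

For example, an event logged at 01:30 Beijing time on 7 February is still 6 February in UTC, so it lands in the 2019.02.06 index.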

20.5 Complete example

input {

file {

path => ["D:/ES/logstash-7.3.0/nginx.log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{IP:clientip}\ \[%{HTTPDATE:timestamp}\]\ %{QS:referrer}\ %{NUMBER:response}\ %{NUMBER:bytes}" }

remove_field => [ "message" ]

}

date {

match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]

}

mutate {

# mutate always runs rename before convert, so convert must refer to the renamed field

rename => { "response" => "response_new" }

convert => [ "response_new","float" ]

gsub => ["referrer","\"",""]

remove_field => ["timestamp"]

split => ["clientip", "."]

}

}

output {

stdout {

codec => "rubydebug"

}

elasticsearch {

hosts => ["localhost:9200"]

index => "logstash-%{+YYYY.MM.dd}"

}

}

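21. Learning Kibana

21.1 Basic queries

- What it is: the data visualization tool of the ELK stack

- Download: https://www.elastic.co/cn/downloads/kibana

- Usage: create an index pattern, then search the data in Discover (using KQL or Lucene query syntax).

21.2 Visualize

Create charts.

21.3 Dashboard

Combine visualizations into a dashboard, e.g. for a large wall display.

21.4 Using sample data as a guide for building charts

Click "Add sample data" on the home page to load several sample datasets together with their ready-made visualizations.

21.5 Other features

Kibana also offers rich features such as monitoring, logs and APM.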

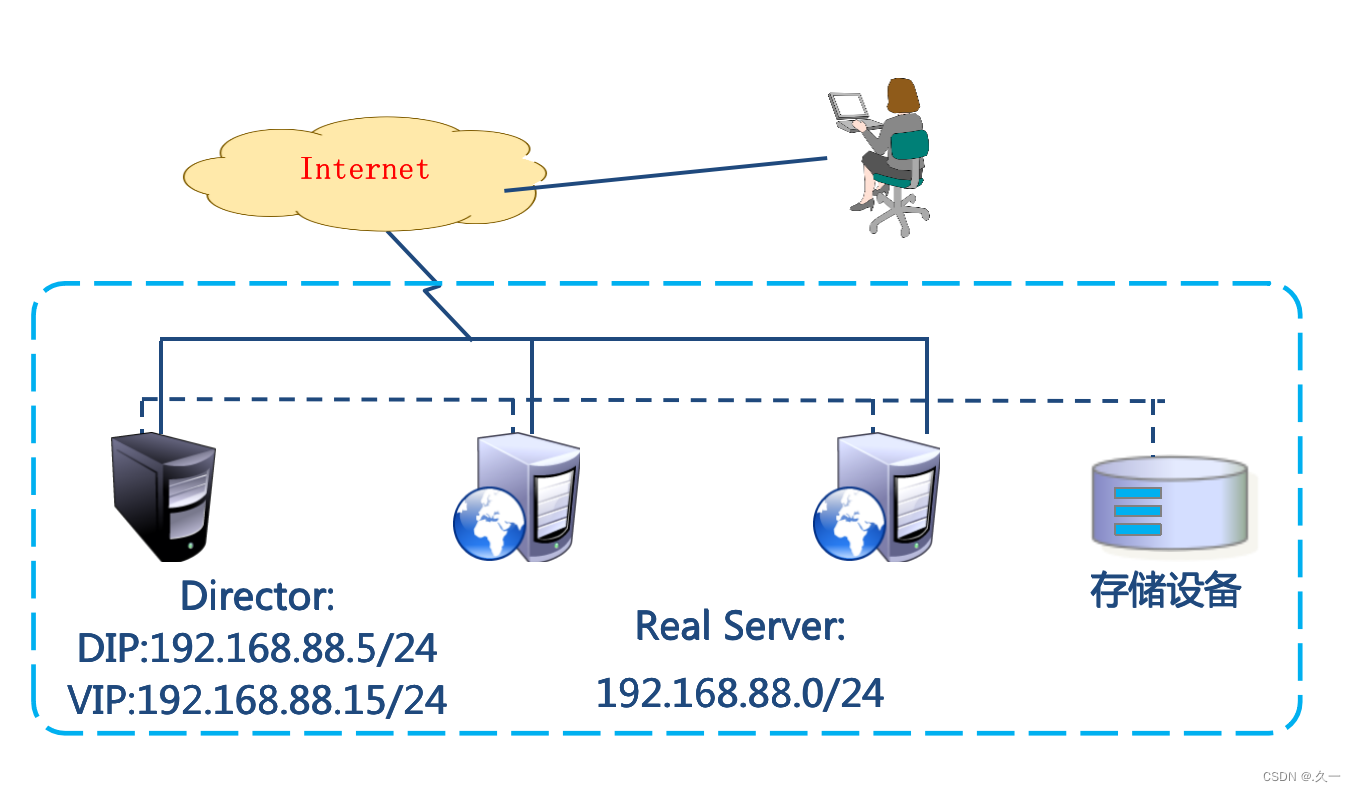

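22. Cluster deployment

The three node roles

Master node: manages the cluster and its indices, e.g. nodes joining, shard allocation, and creating and deleting indices.

Data node: holds data shards and performs indexing and search operations.

Client node: acts only as a request entry point and load balancer; it stores no data and simply distributes requests to other nodes.

A node's roles are configured with the following three settings:

node.master: #whether the node is master-eligible

node.data: #whether the node stores data (data node)

node.ingest: #whether the node can run ingest pipelines (pre-processing documents before indexing)

Four combinations of master and data:

master=true,data=true: both master-eligible and a data node

master=false,data=true: data node only

master=true,data=false: master-eligible only, stores no data

master=false,data=false: neither master-eligible nor a data node; if ingest is also false, it is a coordinating-only node, i.e. the client node described above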
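The role combinations can be summarised as a tiny decision function. This is only an illustrative sketch (hypothetical class NodeRoleSummary) of the 7.x-style node.master / node.data / node.ingest settings; every node can also coordinate requests, so a node with all three set to false is the pure coordinating (client) node.

```java
// Illustrative summary of node.master / node.data / node.ingest combinations.
final class NodeRoleSummary {
    static String describe(boolean master, boolean data, boolean ingest) {
        String base;
        if (master && data) {
            base = "master-eligible and data node";
        } else if (master) {
            base = "master-eligible only, stores no data";
        } else if (data) {
            base = "data node only";
        } else {
            // Neither master nor data: an ingest node, or a pure coordinating (client) node.
            return ingest ? "ingest node (also coordinates requests)" : "coordinating-only (client) node";
        }
        return ingest ? base + ", can run ingest pipelines" : base;
    }
}
```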

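23. Hands-on projects

23.1 Project 1: Log analysis with ELK

Requirement: centrally collect the logs of distributed services

- The business module writes log output continuously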

package com.itheima.es;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import java.util.Random;

/**

* created by itheima.itcast

*/

@SpringBootTest

@RunWith(SpringRunner.class)

public class TestLog {

private static final Logger LOGGER = LoggerFactory.getLogger(TestLog.class);

@Test

public void testLog(){

Random random = new Random();

while (true){

int userid = random.nextInt(10);

LOGGER.info("userId:{},send:{}",userid,"hello world.I am "+userid);

try {

Thread.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

- Logstash collects the logs into Elasticsearch

input {

file {

path => ["D:/logs/log-*.log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{DATA:datetime}\ \[%{DATA:thread}\]\ %{DATA:level}\ \ %{DATA:class} - %{GREEDYDATA:logger}" }

remove_field => [ "message" ]

}

date {

match => ["datetime", "yyyy-MM-dd HH:mm:ss.SSS"]

}

if "_grokparsefailure" in [tags] {

drop { }

}

}

output {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "logger-%{+YYYY.MM.dd}"

}

}
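Because DATA is .*? (lazy) and GREEDYDATA is .* in the built-in pattern list below, the grok pattern in this config translates almost directly into a java.util.regex pattern. The sketch (hypothetical class AppLogParser) assumes a log line such as 2019-10-15 10:20:30.123 [main] INFO  com.itheima.es.TestLog - userId:3,...; the actual logback layout is not shown here. Note the two literal spaces after the level: if the layout pads levels to five characters (an assumption, e.g. %-5level), ERROR and DEBUG lines have only one space there, so they fail to match and would be discarded by the _grokparsefailure drop rule. It is worth checking the pattern against real log lines.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class AppLogParser {
    // %{DATA:x} -> (?<x>.*?), %{GREEDYDATA:x} -> (?<x>.*); the two spaces after
    // the level are kept exactly as in the grok pattern.
    private static final Pattern LINE = Pattern.compile(
            "(?<datetime>.*?) \\[(?<thread>.*?)\\] (?<level>.*?)  (?<class>.*?) - (?<logger>.*)");

    private static final String[] FIELDS = {"datetime", "thread", "level", "class", "logger"};

    // Empty result = what grok reports as _grokparsefailure (dropped by the config).
    static Optional<Map<String, String>> parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        Map<String, String> event = new LinkedHashMap<>();
        for (String name : FIELDS) {
            event.put(name, m.group(name));
        }
        return Optional.of(event);
    }
}
```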

Built-in grok patterns

USERNAME [a-zA-Z0-9._-]+

USER %{USERNAME}

INT (?:[+-]?(?:[0-9]+))

BASE10NUM (?<![0-9.+-])(?>[+-]?(?:(?:[0-9]+(?:\.[0-9]+)?)|(?:\.[0-9]+)))

NUMBER (?:%{BASE10NUM})

BASE16NUM (?<![0-9A-Fa-f])(?:[+-]?(?:0x)?(?:[0-9A-Fa-f]+))

BASE16FLOAT \b(?<![0-9A-Fa-f.])(?:[+-]?(?:0x)?(?:(?:[0-9A-Fa-f]+(?:\.[0-9A-Fa-f]*)?)|(?:\.[0-9A-Fa-f]+)))\b

POSINT \b(?:[1-9][0-9]*)\b

NONNEGINT \b(?:[0-9]+)\b

WORD \b\w+\b

NOTSPACE \S+

SPACE \s*

DATA .*?

GREEDYDATA .*

QUOTEDSTRING (?>(?<!\\)(?>"(?>\\.|[^\\"]+)+"|""|(?>'(?>\\.|[^\\']+)+')|''|(?>`(?>\\.|[^\\`]+)+`)|``))

UUID [A-Fa-f0-9]{8}-(?:[A-Fa-f0-9]{4}-){3}[A-Fa-f0-9]{12}

# Networking

MAC (?:%{CISCOMAC}|%{WINDOWSMAC}|%{COMMONMAC})

CISCOMAC (?:(?:[A-Fa-f0-9]{4}\.){2}[A-Fa-f0-9]{4})

WINDOWSMAC (?:(?:[A-Fa-f0-9]{2}-){5}[A-Fa-f0-9]{2})

COMMONMAC (?:(?:[A-Fa-f0-9]{2}:){5}[A-Fa-f0-9]{2})

IPV6 ((([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4}|:))|(([0-9A-Fa-f]{1,4}:){6}(:[0-9A-Fa-f]{1,4}|((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){5}(((:[0-9A-Fa-f]{1,4}){1,2})|:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){4}(((:[0-9A-Fa-f]{1,4}){1,3})|((:[0-9A-Fa-f]{1,4})?:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){3}(((:[0-9A-Fa-f]{1,4}){1,4})|((:[0-9A-Fa-f]{1,4}){0,2}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){2}(((:[0-9A-Fa-f]{1,4}){1,5})|((:[0-9A-Fa-f]{1,4}){0,3}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){1}(((:[0-9A-Fa-f]{1,4}){1,6})|((:[0-9A-Fa-f]{1,4}){0,4}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(:(((:[0-9A-Fa-f]{1,4}){1,7})|((:[0-9A-Fa-f]{1,4}){0,5}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:)))(%.+)?

IPV4 (?<![0-9])(?:(?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2})[.](?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2})[.](?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2})[.](?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2}))(?![0-9])

IP (?:%{IPV6}|%{IPV4})

HOSTNAME \b(?:[0-9A-Za-z][0-9A-Za-z-]{0,62})(?:\.(?:[0-9A-Za-z][0-9A-Za-z-]{0,62}))*(\.?|\b)

HOST %{HOSTNAME}

IPORHOST (?:%{HOSTNAME}|%{IP})

HOSTPORT %{IPORHOST}:%{POSINT}

# paths

PATH (?:%{UNIXPATH}|%{WINPATH})

UNIXPATH (?>/(?>[\w_%!$@:.,-]+|\\.)*)+

TTY (?:/dev/(pts|tty([pq])?)(\w+)?/?(?:[0-9]+))

WINPATH (?>[A-Za-z]+:|\\)(?:\\[^\\?*]*)+

URIPROTO [A-Za-z]+(\+[A-Za-z+]+)?

URIHOST %{IPORHOST}(?::%{POSINT:port})?

# uripath comes loosely from RFC1738, but mostly from what Firefox

# doesn't turn into %XX

URIPATH (?:/[A-Za-z0-9$.+!*'(){},~:;=@#%_\-]*)+

#URIPARAM \?(?:[A-Za-z0-9]+(?:=(?:[^&]*))?(?:&(?:[A-Za-z0-9]+(?:=(?:[^&]*))?)?)*)?

URIPARAM \?[A-Za-z0-9$.+!*'|(){},~@#%&/=:;_?\-\[\]]*

URIPATHPARAM %{URIPATH}(?:%{URIPARAM})?

URI %{URIPROTO}://(?:%{USER}(?::[^@]*)?@)?(?:%{URIHOST})?(?:%{URIPATHPARAM})?

# Months: January, Feb, 3, 03, 12, December

MONTH \b(?:Jan(?:uary)?|Feb(?:ruary)?|Mar(?:ch)?|Apr(?:il)?|May|Jun(?:e)?|Jul(?:y)?|Aug(?:ust)?|Sep(?:tember)?|Oct(?:ober)?|Nov(?:ember)?|Dec(?:ember)?)\b

MONTHNUM (?:0?[1-9]|1[0-2])

MONTHNUM2 (?:0[1-9]|1[0-2])

MONTHDAY (?:(?:0[1-9])|(?:[12][0-9])|(?:3[01])|[1-9])

# Days: Monday, Tue, Thu, etc...

DAY (?:Mon(?:day)?|Tue(?:sday)?|Wed(?:nesday)?|Thu(?:rsday)?|Fri(?:day)?|Sat(?:urday)?|Sun(?:day)?)

# Years?

YEAR (?>\d\d){1,2}

HOUR (?:2[0123]|[01]?[0-9])

MINUTE (?:[0-5][0-9])

# '60' is a leap second in most time standards and thus is valid.

SECOND (?:(?:[0-5]?[0-9]|60)(?:[:.,][0-9]+)?)

TIME (?!<[0-9])%{HOUR}:%{MINUTE}(?::%{SECOND})(?![0-9])

# datestamp is YYYY/MM/DD-HH:MM:SS.UUUU (or something like it)

DATE_US %{MONTHNUM}[/-]%{MONTHDAY}[/-]%{YEAR}

DATE_EU %{MONTHDAY}[./-]%{MONTHNUM}[./-]%{YEAR}

ISO8601_TIMEZONE (?:Z|[+-]%{HOUR}(?::?%{MINUTE}))

ISO8601_SECOND (?:%{SECOND}|60)

TIMESTAMP_ISO8601 %{YEAR}-%{MONTHNUM}-%{MONTHDAY}[T ]%{HOUR}:?%{MINUTE}(?::?%{SECOND})?%{ISO8601_TIMEZONE}?

DATE %{DATE_US}|%{DATE_EU}

DATESTAMP %{DATE}[- ]%{TIME}

TZ (?:[PMCE][SD]T|UTC)

DATESTAMP_RFC822 %{DAY} %{MONTH} %{MONTHDAY} %{YEAR} %{TIME} %{TZ}

DATESTAMP_RFC2822 %{DAY}, %{MONTHDAY} %{MONTH} %{YEAR} %{TIME} %{ISO8601_TIMEZONE}

DATESTAMP_OTHER %{DAY} %{MONTH} %{MONTHDAY} %{TIME} %{TZ} %{YEAR}

DATESTAMP_EVENTLOG %{YEAR}%{MONTHNUM2}%{MONTHDAY}%{HOUR}%{MINUTE}%{SECOND}

# Syslog Dates: Month Day HH:MM:SS

SYSLOGTIMESTAMP %{MONTH} +%{MONTHDAY} %{TIME}

PROG (?:[\w._/%-]+)

SYSLOGPROG %{PROG:program}(?:\[%{POSINT:pid}\])?

SYSLOGHOST %{IPORHOST}

SYSLOGFACILITY <%{NONNEGINT:facility}.%{NONNEGINT:priority}>

HTTPDATE %{MONTHDAY}/%{MONTH}/%{YEAR}:%{TIME} %{INT}

# Shortcuts

QS %{QUOTEDSTRING}

# Log formats

SYSLOGBASE %{SYSLOGTIMESTAMP:timestamp} (?:%{SYSLOGFACILITY} )?%{SYSLOGHOST:logsource} %{SYSLOGPROG}:

COMMONAPACHELOG %{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

COMBINEDAPACHELOG %{COMMONAPACHELOG} %{QS:referrer} %{QS:agent}

# Log Levels

LOGLEVEL ([Aa]lert|ALERT|[Tt]race|TRACE|[Dd]ebug|DEBUG|[Nn]otice|NOTICE|[Ii]nfo|INFO|[Ww]arn?(?:ing)?|WARN?(?:ING)?|[Ee]rr?(?:or)?|ERR?(?:OR)?|[Cc]rit?(?:ical)?|CRIT?(?:ICAL)?|[Ff]atal|FATAL|[Ss]evere|SEVERE|EMERG(?:ENCY)?|[Ee]merg(?:ency)?)
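Many built-in patterns are defined in terms of others (USER is %{USERNAME}, NUMBER is %{BASE10NUM}, and so on). The toy expander below, a hypothetical class GrokExpander, illustrates the mechanism: %{NAME:field} becomes a named capture group, %{NAME} a non-capturing group, and definitions are expanded recursively. It is not the real grok engine: there is no cycle detection, no type suffix such as %{NUMBER:bytes:int}, and field names are limited to letters and digits because Java named groups do not allow underscores.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy grok-style expansion of %{NAME} / %{NAME:field} references into a Java regex.
final class GrokExpander {
    private static final Pattern REF = Pattern.compile("%\\{(\\w+)(?::([A-Za-z][A-Za-z0-9]*))?\\}");

    static String expand(String pattern, Map<String, String> library) {
        Matcher m = REF.matcher(pattern);
        StringBuilder out = new StringBuilder();
        int last = 0;
        while (m.find()) {
            String definition = library.get(m.group(1));
            if (definition == null) {
                throw new IllegalArgumentException("unknown pattern: " + m.group(1));
            }
            // Definitions may reference other patterns, so expand them first.
            String inner = expand(definition, library);
            out.append(pattern, last, m.start());
            if (m.group(2) != null) {
                out.append("(?<").append(m.group(2)).append('>').append(inner).append(')');
            } else {
                out.append("(?:").append(inner).append(')');
            }
            last = m.end();
        }
        out.append(pattern, last, pattern.length());
        return out.toString();
    }
}
```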

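Then write the Logstash configuration file, whose grok pattern can use any of the patterns above.

- Kibana displays the data

23.2 Project 2: Site search for Xuecheng Online (学成在线)

- Import the course_pub table into MySQL

- Create the index xc_course

- Create the mapping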

PUT /xc_course

{

"settings": {

"number_of_shards": 1,

"number_of_replicas": 0

},

"mappings": {

"properties": {

"description" : {

"analyzer" : "ik_max_word",

"search_analyzer": "ik_smart",

"type" : "text"

},

"grade" : {

"type" : "keyword"

},

"id" : {

"type" : "keyword"

},

"mt" : {

"type" : "keyword"

},

"name" : {

"analyzer" : "ik_max_word",

"search_analyzer": "ik_smart",

"type" : "text"

},

"users" : {

"index" : false,

"type" : "text"

},

"charge" : {

"type" : "keyword"

},

"valid" : {

"type" : "keyword"

},

"pic" : {

"index" : false,

"type" : "keyword"

},

"qq" : {

"index" : false,

"type" : "keyword"

},

"price" : {

"type" : "float"

},

"price_old" : {

"type" : "float"

},

"st" : {

"type" : "keyword"

},

"status" : {

"type" : "keyword"

},

"studymodel" : {

"type" : "keyword"

},

"teachmode" : {

"type" : "keyword"

},

"teachplan" : {

"analyzer" : "ik_max_word",

"search_analyzer": "ik_smart",

"type" : "text"

},

"expires" : {

"type" : "date",

"format": "yyyy-MM-dd HH:mm:ss"

},

"pub_time" : {

"type" : "date",

"format": "yyyy-MM-dd HH:mm:ss"

},

"start_time" : {

"type" : "date",

"format": "yyyy-MM-dd HH:mm:ss"

},

"end_time" : {

"type" : "date",

"format": "yyyy-MM-dd HH:mm:ss"

}

}

}

}

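- Create the template file for Logstash

Logstash reads data from MySQL and indexes it into ES, so a mapping template file must be prepared in advance for Logstash to use.

Create xc_course_template.json in Logstash's config directory with the following content: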

{

"mappings" : {

"doc" : {

"properties" : {

"charge" : {

"type" : "keyword"

},

"description" : {

"analyzer" : "ik_max_word",

"search_analyzer" : "ik_smart",

"type" : "text"

},

"end_time" : {

"format" : "yyyy-MM-dd HH:mm:ss",

"type" : "date"

},

"expires" : {

"format" : "yyyy-MM-dd HH:mm:ss",

"type" : "date"

},

"grade" : {

"type" : "keyword"

},

"id" : {

"type" : "keyword"

},

"mt" : {

"type" : "keyword"

},

"name" : {

"analyzer" : "ik_max_word",

"search_analyzer" : "ik_smart",

"type" : "text"

},

"pic" : {

"index" : false,

"type" : "keyword"

},

"price" : {

"type" : "float"

},

"price_old" : {

"type" : "float"

},

"pub_time" : {

"format" : "yyyy-MM-dd HH:mm:ss",

"type" : "date"

},

"qq" : {

"index" : false,

"type" : "keyword"

},

"st" : {

"type" : "keyword"

},

"start_time" : {

"format" : "yyyy-MM-dd HH:mm:ss",

"type" : "date"

},

"status" : {

"type" : "keyword"

},

"studymodel" : {

"type" : "keyword"

},

"teachmode" : {

"type" : "keyword"

},

"teachplan" : {

"analyzer" : "ik_max_word",

"search_analyzer" : "ik_smart",

"type" : "text"

},

"users" : {

"index" : false,

"type" : "text"

},

"valid" : {

"type" : "keyword"

}

}

}

},

"template" : "xc_course"

}

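- Configure Logstash with mysql.conf

1. The UTC time zone issue

ES works in UTC, which is 8 hours behind Beijing time (UTC+8), so when reading data, add 8 hours to the last update time: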

where timestamp > date_add(:sql_last_value,INTERVAL 8 HOUR)

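2. After each run, Logstash records the execution time in /config/logstash_metadata; the next run uses that time as the baseline for incrementally syncing new data into the index.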
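Put together, one incremental round amounts to: take the recorded last-run time, shift it by 8 hours and select the rows updated after it. A sketch with a hypothetical class IncrementalSyncSketch, assuming (as the text describes) that the recorded value is in UTC while the MySQL timestamp column holds Beijing local time. Asia/Shanghai has not used daylight saving time since 1991, so for current data the shift is always exactly 8 hours.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.util.List;
import java.util.stream.Collectors;

// Simulates one incremental sync round as described above.
final class IncrementalSyncSketch {
    private static final ZoneId BEIJING = ZoneId.of("Asia/Shanghai");

    // Same effect as date_add(:sql_last_value, INTERVAL 8 HOUR): UTC wall clock -> Beijing wall clock.
    static LocalDateTime utcToBeijing(LocalDateTime utc) {
        return utc.atOffset(ZoneOffset.UTC).atZoneSameInstant(BEIJING).toLocalDateTime();
    }

    // Rows strictly newer than the shifted last run, mirroring
    // "where timestamp > date_add(:sql_last_value, INTERVAL 8 HOUR)".
    static List<LocalDateTime> rowsToSync(List<LocalDateTime> rowTimestamps, LocalDateTime lastRunUtc) {
        LocalDateTime threshold = utcToBeijing(lastRunUtc);
        return rowTimestamps.stream()
                .filter(t -> t.isAfter(threshold))
                .collect(Collectors.toList());
    }
}
```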

- Start

.\logstash.bat -f ..\config\mysql.conf

- Backend code

7.1 Controller

@RestController

@RequestMapping("/search/course")

public class EsCourseController {

@Autowired

EsCourseService esCourseService;

@GetMapping(value="/list/{page}/{size}")

public QueryResponseResult<CoursePub> list(@PathVariable("page") int page, @PathVariable("size") int size, CourseSearchParam courseSearchParam) {

return esCourseService.list(page,size,courseSearchParam);

}

}

7.2 Service

@Service

public class EsCourseService {

@Value("${heima.course.source_field}")

private String source_field;

@Autowired

RestHighLevelClient restHighLevelClient;

// Course search

public QueryResponseResult<CoursePub> list(int page, int size, CourseSearchParam courseSearchParam) {

if (courseSearchParam == null) {

courseSearchParam = new CourseSearchParam();

}

// 1. Create the search request

SearchRequest searchRequest = new SearchRequest("xc_course");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

// Return only the configured source fields (comma-separated list in heima.course.source_field)

String[] source_field_array = source_field.split(",");

searchSourceBuilder.fetchSource(source_field_array, new String[]{});

// Create the bool query

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

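// Search conditions

// Full-text search by keyword across name, description and teachplan; matches in name get a boost of 10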

if (StringUtils.isNotEmpty(courseSearchParam.getKeyword())) {

MultiMatchQueryBuilder multiMatchQueryBuilder = QueryBuilders.multiMatchQuery(courseSearchParam.getKeyword(), "name", "description", "teachplan")

.minimumShouldMatch("70%")

.field("name", 10);

boolQueryBuilder.must(multiMatchQueryBuilder);

}

if (StringUtils.isNotEmpty(courseSearchParam.getMt())) {

// Filter by first-level category

boolQueryBuilder.filter(QueryBuilders.termQuery("mt", courseSearchParam.getMt()));

}

if (StringUtils.isNotEmpty(courseSearchParam.getSt())) {

// Filter by second-level category

boolQueryBuilder.filter(QueryBuilders.termQuery("st", courseSearchParam.getSt()));

}

if (StringUtils.isNotEmpty(courseSearchParam.getGrade())) {

// Filter by difficulty level

boolQueryBuilder.filter(QueryBuilders.termQuery("grade", courseSearchParam.getGrade()));

}

// Set the bool query on the search source

searchSourceBuilder.query(boolQueryBuilder);

// Pagination parameters (defaults: page 1, 12 per page)

if (page <= 0) {

page = 1;

}

if (size <= 0) {

size = 12;

}

// Index of the first record to return

int from = (page - 1) * size;

searchSourceBuilder.from(from);

searchSourceBuilder.size(size);

// Highlighting

HighlightBuilder highlightBuilder = new HighlightBuilder();

highlightBuilder.preTags("<font class='eslight'>");

highlightBuilder.postTags("</font>");

// Highlighted field

// e.g. <font class='eslight'>node</font> learning

highlightBuilder.fields().add(new HighlightBuilder.Field("name"));

searchSourceBuilder.highlighter(highlightBuilder);

searchRequest.source(searchSourceBuilder);

QueryResult<CoursePub> queryResult = new QueryResult<>();

List<CoursePub> list = new ArrayList<CoursePub>();

try {

// 2. Execute the search

SearchResponse searchResponse = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);

// 3. Process the response

SearchHits hits = searchResponse.getHits();

// Total number of matching documents (ES 7.x: getTotalHits().value; 6.x used hits.totalHits)

long totalHits = hits.getTotalHits().value;

queryResult.setTotal(totalHits);

SearchHit[] searchHits = hits.getHits();

for (SearchHit hit : searchHits) {

CoursePub coursePub = new CoursePub();

// Source document

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

// Read id

String id = (String) sourceAsMap.get("id");

coursePub.setId(id);

// Read name

String name = (String) sourceAsMap.get("name");

// Use the highlighted name if there is one

Map<String, HighlightField> highlightFields = hit.getHighlightFields();

if (highlightFields != null) {

HighlightField highlightFieldName = highlightFields.get("name");

if (highlightFieldName != null) {

Text[] fragments = highlightFieldName.fragments();

StringBuffer stringBuffer = new StringBuffer();

for (Text text : fragments) {

stringBuffer.append(text);

}

name = stringBuffer.toString();

}

}

coursePub.setName(name);

// Picture

String pic = (String) sourceAsMap.get("pic");

coursePub.setPic(pic);

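// Price

Double price = null;

Object priceValue = sourceAsMap.get("price");

// The parsed JSON value may be an Integer, Long or Double, so convert via Number instead of casting to Double

if (priceValue instanceof Number) {

price = ((Number) priceValue).doubleValue();

}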

coursePub.setPrice(price);

// Original price

Double price_old = null;

Object priceOldValue = sourceAsMap.get("price_old");

if (priceOldValue instanceof Number) {

price_old = ((Number) priceOldValue).doubleValue();

}

coursePub.setPrice_old(price_old);

// Add the coursePub to the result list

list.add(coursePub);

}

} catch (IOException e) {

e.printStackTrace();

}

queryResult.setList(list);

QueryResponseResult<CoursePub> queryResponseResult = new QueryResponseResult<CoursePub>(CommonCode.SUCCESS, queryResult);

return queryResponseResult;

}

}
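Two details of the service are easy to check in isolation: the paging arithmetic and the conversion of numeric source values. The hypothetical helpers below mirror them. toDouble reflects the fact that getSourceAsMap() may return a whole-number price as an Integer rather than a Double, in which case a direct (Double) cast would throw ClassCastException.

```java
// Hypothetical helpers mirroring the paging and number handling in EsCourseService.
final class SearchPaging {
    // page <= 0 -> 1, size <= 0 -> 12, from = (page - 1) * size
    static int from(int page, int size) {
        int p = page <= 0 ? 1 : page;
        int s = size <= 0 ? 12 : size;
        return (p - 1) * s;
    }

    // JSON numbers may come back as Integer, Long or Double; non-numbers give null.
    static Double toDouble(Object value) {
        return value instanceof Number ? ((Number) value).doubleValue() : null;
    }
}
```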