Neural Networks - Convolution Layers

- 官网

- 卷积数据公式

- 参数说明

- 卷积运算演示

- 输入输出channel

- 代码

- **注意点:**

- code

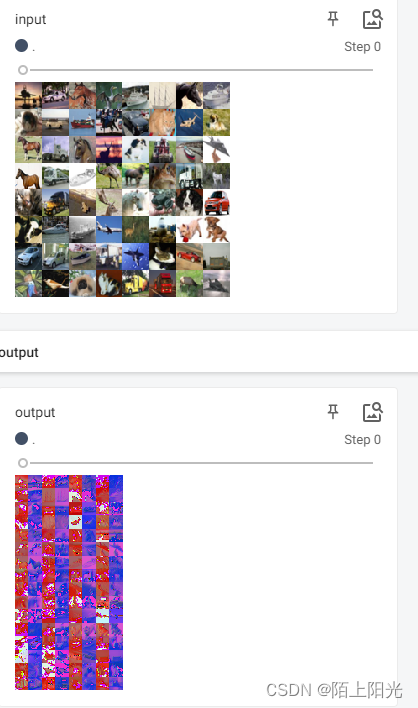

- 执行结果

Official documentation

https://pytorch.org/docs/stable/nn.html#convolution-layers

Image recognition usually uses 2D convolution, nn.Conv2d:

https://pytorch.org/docs/stable/generated/torch.nn.Conv2d.html#torch.nn.Conv2d

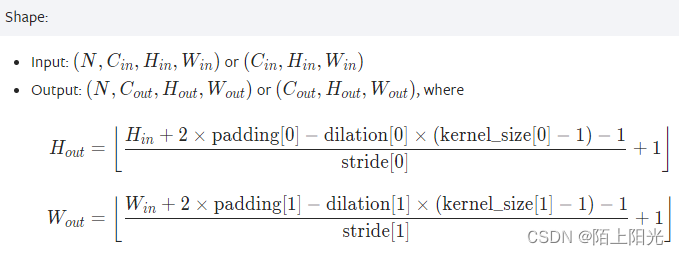

Convolution output-size formula

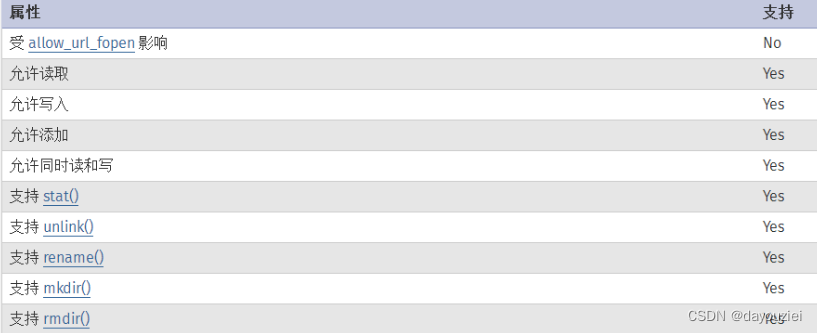
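The figure above presumably shows the shape formula from the Conv2d documentation linked earlier. For reference, with input shape (N, C_in, H_in, W_in) and output shape (N, C_out, H_out, W_out), that formula is:

$$
H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding}[0] - \text{dilation}[0] \times (\text{kernel\_size}[0] - 1) - 1}{\text{stride}[0]} + 1 \right\rfloor
$$

$$
W_{out} = \left\lfloor \frac{W_{in} + 2 \times \text{padding}[1] - \text{dilation}[1] \times (\text{kernel\_size}[1] - 1) - 1}{\text{stride}[1]} + 1 \right\rfloor
$$

With the settings used in this note's code (H_in = 32, kernel_size = 3, stride = 1, padding = 0, dilation = 1), this gives H_out = floor((32 + 0 - 1 × 2 - 1) / 1 + 1) = 30, which matches the 30×30 outputs in the results.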

Parameters

Parameters:

- in_channels (int) – Number of channels in the input image (e.g. 3 for an RGB image).

- out_channels (int) – Number of channels produced by the convolution.

- kernel_size (int or tuple) – Size of the convolving kernel.

- stride (int or tuple, optional) – Stride of the convolution. Default: 1. How many pixels the kernel moves at each step, horizontally and vertically.

- padding (int, tuple or str, optional) – Padding added to all four sides of the input. Default: 0. How many pixels of padding are added around the edges of the input image before convolving.

- padding_mode (str, optional) – 'zeros', 'reflect', 'replicate' or 'circular'. Default: 'zeros'. The values used for padding; with 'zeros' the border is filled with 0.

- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1. For example, dilation=2 leaves a one-pixel gap between neighbouring kernel elements. Rarely changed.

- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1. Rarely changed.

- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True.
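To see how stride, padding, and dilation change the output size, the shape formula can be wrapped in a small helper. This is a minimal sketch: `conv2d_output_size` is a hypothetical name, it handles one spatial dimension at a time, and it only supports integer padding (the string options such as 'same' are not modelled).

```python
def conv2d_output_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of nn.Conv2d along one spatial dimension.

    Follows the shape formula from the PyTorch Conv2d docs; integer padding only.
    """
    # a dilated kernel covers dilation * (k - 1) + 1 input pixels
    effective_kernel = dilation * (kernel_size - 1) + 1
    # Python's // is floor division, matching the floor in the formula
    return (size_in + 2 * padding - effective_kernel) // stride + 1


print(conv2d_output_size(32, 3))                        # 30 (this note's CIFAR-10 layer)
print(conv2d_output_size(5, 3, stride=2))               # 2
print(conv2d_output_size(5, 3, padding=1))              # 5 (padding keeps the size)
print(conv2d_output_size(32, 3, dilation=2))            # 28
print(conv2d_output_size(32, 5, stride=2, padding=2))   # 16
```

A 3×3 kernel with padding=1 and stride=1 keeps the spatial size unchanged, which is why that combination is common.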

Convolution arithmetic animations

https://github.com/vdumoulin/conv_arithmetic/blob/master/README.md

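Green grid: the output image.

Blue grid: the input image.

Dark-shaded area on the blue grid: the kernel (convolution kernel).

Dashed white cells: the padding.

The number of cells the dark-shaded area moves at each step (left/right or up/down) in the animation is the stride.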

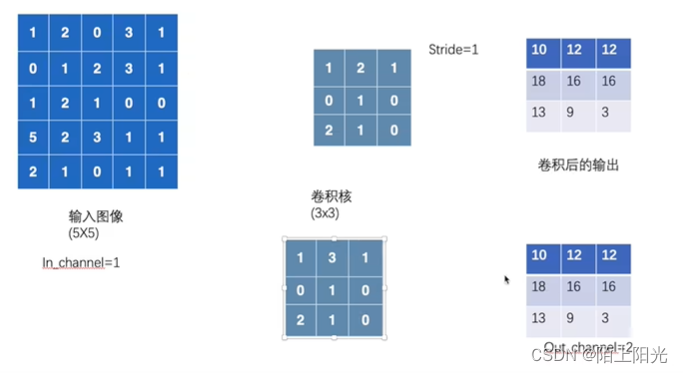
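To make the animation concrete, here is a minimal pure-Python sketch of what one kernel does on one channel. It assumes zero padding (padding_mode='zeros') and, like nn.Conv2d, slides the kernel without flipping it (cross-correlation) and ignores bias. The function name `conv2d_single` and the 5×5 input and 3×3 kernel are made up for illustration.

```python
def conv2d_single(inp, kernel, stride=1, padding=0):
    """Single-channel 2D convolution (cross-correlation) with zero padding, no bias."""
    h, w = len(inp), len(inp[0])
    # zero-pad the input on all four sides
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = inp[i][j]

    kh, kw = len(kernel), len(kernel[0])
    out_h = (h + 2 * padding - kh) // stride + 1
    out_w = (w + 2 * padding - kw) // stride + 1
    out = []
    for oi in range(out_h):
        row = []
        for oj in range(out_w):
            # top-left corner of the window moves by `stride` cells each step
            top, left = oi * stride, oj * stride
            total = 0
            for ki in range(kh):
                for kj in range(kw):
                    total += padded[top + ki][left + kj] * kernel[ki][kj]
            row.append(total)
        out.append(row)
    return out


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d_single(image, kernel))            # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d_single(image, kernel, stride=2))  # [[10, 12], [13, 3]]
padded_out = conv2d_single(image, kernel, padding=1)
print(len(padded_out), len(padded_out[0]))     # 5 5
```

With stride=2 the window skips every other position, so only the corner results of the stride-1 output remain; with padding=1 the output keeps the 5×5 input size.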

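Input and output channels

With out_channels=2 the layer holds two kernels: the input is convolved once with each of them, and the two resulting feature maps are stacked to form the 2-channel output.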

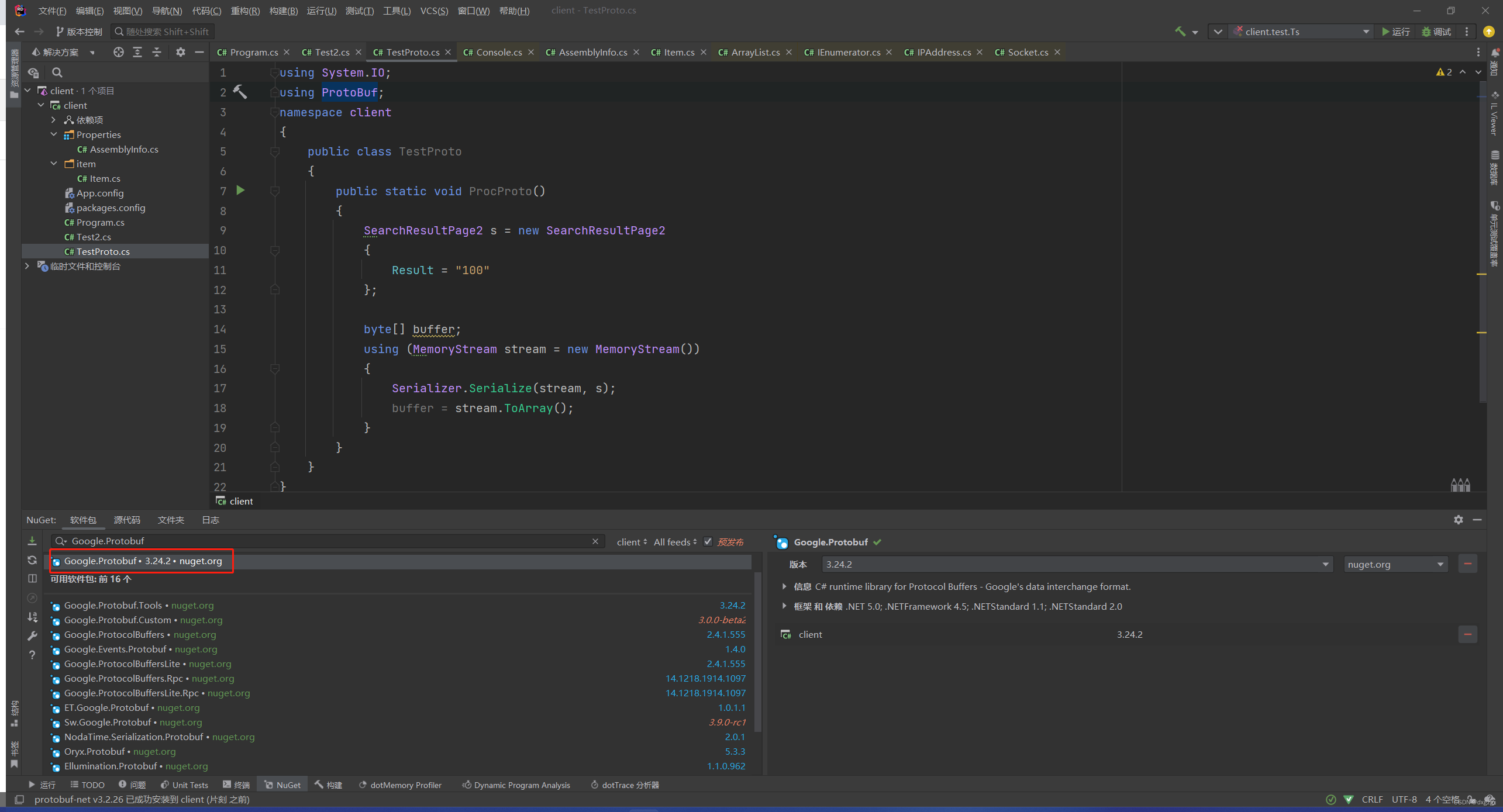
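The same idea with several input channels: in nn.Conv2d (with groups=1) the weight has shape (out_channels, in_channels, kH, kW), so each output channel owns one kernel per input channel, sums their responses, and adds its own bias. The sketch below is pure Python with stride 1 and no padding; the helper names `correlate` and `conv2d_multi` and all numbers are hypothetical.

```python
def correlate(channel, kernel):
    """Valid (no padding, stride 1) cross-correlation of one channel with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(channel) - kh + 1
    out_w = len(channel[0]) - kw + 1
    return [[sum(channel[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def conv2d_multi(inp, weight, bias):
    """inp: [C_in][H][W], weight: [C_out][C_in][kH][kW], bias: [C_out]."""
    outputs = []
    for o, kernels in enumerate(weight):  # one filter per output channel
        # the filter has one kernel per input channel; their responses are summed
        maps = [correlate(ch, k) for ch, k in zip(inp, kernels)]
        h, w = len(maps[0]), len(maps[0][0])
        outputs.append([[sum(m[i][j] for m in maps) + bias[o] for j in range(w)]
                        for i in range(h)])
    return outputs


inp = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],   # input channel 0
       [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]   # input channel 1
weight = [[[[1, 0], [0, 0]], [[0, 0], [0, 0]]],   # output channel 0: looks at channel 0 only
          [[[0, 0], [0, 0]], [[1, 1], [1, 1]]]]   # output channel 1: looks at channel 1 only
bias = [0, 1]

out = conv2d_multi(inp, weight, bias)
print(out)  # [[[1, 2], [4, 5]], [[5, 5], [5, 5]]]
```

Two input channels and two filters give a 2-channel output; adding a third filter to `weight` would add a third output channel without changing the input.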

Code

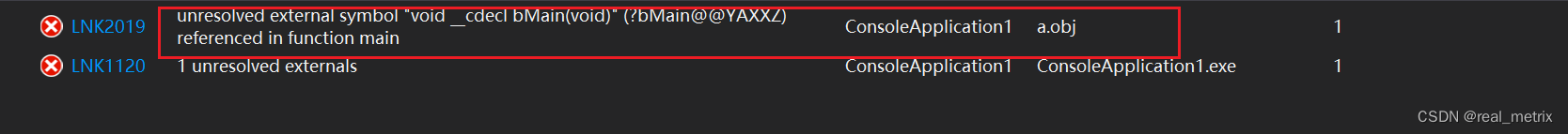

Notes:

- Leave the parentheses empty: if the IDE auto-fills self as an argument, remove it, so the call is:

super().__init__()

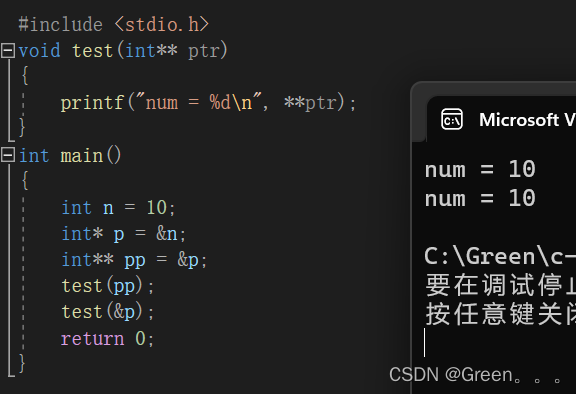
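Why the extra self is a problem, shown with plain Python classes as a stand-in for nn.Module (assuming the auto-filled form is super().__init__(self)): super() already binds the current instance, so passing self again hands the parent's __init__ one argument too many. The class names are hypothetical.

```python
class Base:
    def __init__(self):
        self.initialized = True


class Good(Base):
    def __init__(self):
        super().__init__()  # Python 3: super() already knows the class and instance


class Bad(Base):
    def __init__(self):
        super().__init__(self)  # self is passed twice -> TypeError


good = Good()
print(good.initialized)  # True

try:
    Bad()
    bad_raised = False
except TypeError as exc:
    bad_raised = True
    print("TypeError:", exc)
```

The older spelling super(NnConv2d, self).__init__() is equivalent to super().__init__() in Python 3; the short form is simply less to type.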

code

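import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# CIFAR-10 test split; ToTensor turns each image into a [3, 32, 32] tensor
test_set = torchvision.datasets.CIFAR10(root='./dataset', train=False, transform=torchvision.transforms.ToTensor(), download=True)

dataloader = DataLoader(test_set, batch_size=64, shuffle=False)


class NnConv2d(nn.Module):
    def __init__(self):
        super().__init__()
        # 3 input channels (RGB) -> 6 output channels; a 3x3 kernel shrinks 32x32 to 30x30
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


nnconvd = NnConv2d()
writer = SummaryWriter('./logs')
step = 0

for data in dataloader:
    imgs, targets = data
    output = nnconvd(imgs)
    print(imgs.shape)    # e.g. torch.Size([64, 3, 32, 32])
    print(output.shape)  # e.g. torch.Size([64, 6, 30, 30])
    # pass step so each batch appears as a separate step in TensorBoard
    writer.add_images("input", imgs, step)
    # 6-channel images cannot be shown as RGB, so split the 6 channels into
    # two 3-channel images each: [64, 6, 30, 30] -> [128, 3, 30, 30]
    output = output.reshape([-1, 3, 30, 30])
    writer.add_images("output", output, step)
    step += 1

writer.close()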

Output

......

torch.Size([64, 6, 30, 30])

torch.Size([64, 3, 32, 32])

torch.Size([64, 6, 30, 30])

torch.Size([64, 3, 32, 32])

torch.Size([64, 6, 30, 30])

torch.Size([64, 3, 32, 32])

torch.Size([64, 6, 30, 30])

torch.Size([64, 3, 32, 32])

torch.Size([64, 6, 30, 30])

torch.Size([64, 3, 32, 32])

torch.Size([64, 6, 30, 30])

torch.Size([16, 3, 32, 32])

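torch.Size([16, 6, 30, 30]) # The last batch has only 16 images because drop_last defaults to False: the leftover samples that do not fill a full batch of 64 are still returned as a smaller final batch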
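The size of that last batch follows from simple arithmetic: the CIFAR-10 test split (train=False) holds 10,000 images, and 10,000 = 156 × 64 + 16. A quick check in plain Python, which only mimics the DataLoader's batching with shuffle=False rather than calling it:

```python
dataset_size = 10000  # images in the CIFAR-10 test split
batch_size = 64

# drop_last=False (default): leftover samples form one smaller final batch
sizes = [min(batch_size, dataset_size - start)
         for start in range(0, dataset_size, batch_size)]
print(len(sizes), sizes[-1])  # 157 16

# drop_last=True would discard that incomplete batch
print(dataset_size // batch_size)  # 156
```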