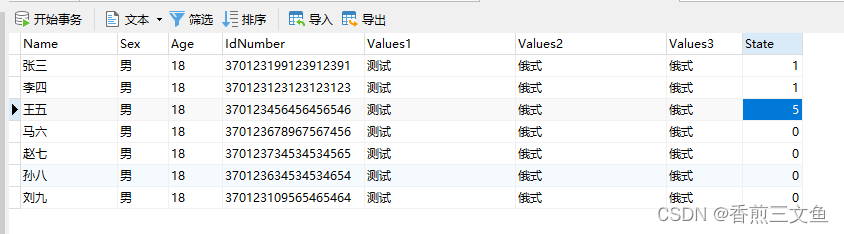

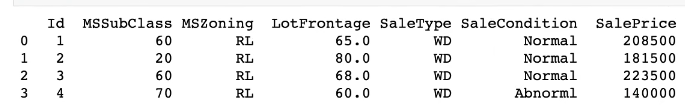

Kaggle房价数据集,前四个为房价特征,最后一个为标签(房价)。

一、下载数据集

import numpy as np

import pandas as pd

import torch

from torch import nn

from d2l import torch as d2l

import hashlib

import os

import tarfile

import zipfile

import requests

# 数据集下载

DATA_HUB = dict()

DATA_URL = 'http://d2l-data.s3-accelerate.amazonaws.com/'

def download(name, cache_dir=os.path.join('.', 'data')): # @save

"""下载一个DATA_HUB中的文件,返回本地文件名"""

assert name in DATA_HUB, f"{name} 不存在于 {DATA_HUB}"

url, sha1_hash = DATA_HUB[name]

os.makedirs(cache_dir, exist_ok=True)

fname = os.path.join(cache_dir, url.split('/')[-1])

if os.path.exists(fname):

sha1 = hashlib.sha1()

with open(fname, 'rb') as f:

while True:

data = f.read(1048576)

if not data:

break

sha1.update(data)

if sha1.hexdigest() == sha1_hash:

return fname # 命中缓存

print(f'正在从{url}下载{fname}...')

r = requests.get(url, stream=True, verify=True)

with open(fname, 'wb') as f:

f.write(r.content)

return fname

def download_extract(name, folder=None): # @save

"""下载并解压zip/tar文件"""

fname = download(name)

base_dir = os.path.dirname(fname)

data_dir, ext = os.path.splitext(fname)

if ext == '.zip':

fp = zipfile.ZipFile(fname, 'r')

elif ext in ('.tar', '.gz'):

fp = tarfile.open(fname, 'r')

else:

assert False, '只有zip/tar文件可以被解压缩'

fp.extractall(base_dir)

return os.path.join(base_dir, folder) if folder else data_dir

def download_all(): # @save

"""下载DATA_HUB中的所有文件"""

for name in DATA_HUB:

download(name)

DATA_HUB['kaggle_house_train'] = (

DATA_URL + 'kaggle_house_pred_train.csv',

'585e9cc93e70b39160e7921475f9bcd7d31219ce')

DATA_HUB['kaggle_house_test'] = (

DATA_URL + 'kaggle_house_pred_test.csv',

'fa19780a7b011d9b009e8bff8e99922a8ee2eb90')

train_data = pd.read_csv(download('kaggle_house_train'))

test_data = pd.read_csv(download('kaggle_house_test')) # 读表

查看数据集大小和部分样本:

print(train_data.shape)

print(test_data.shape)

print(train_data.iloc[0:4, [0, 1, 2, 3, -3, -2, -1]])(1460, 81)

(1459, 80)

Id MSSubClass MSZoning LotFrontage SaleType SaleCondition SalePrice

0 1 60 RL 65.0 WD Normal 208500

1 2 20 RL 80.0 WD Normal 181500

2 3 60 RL 68.0 WD Normal 223500

3 4 70 RL 60.0 WD Abnorml 140000

二、数据预处理

""" 数据预处理 """

all_features = pd.concat((train_data.iloc[:, 1:-1], test_data.iloc[:, 1:])) # 去掉id列

# 将所有缺失的值替换为相应特征的平均值。通过将特征重新缩放到零均值和单位方差来标准化数据

numeric_features = all_features.dtypes[all_features.dtypes != 'object'].index

all_features[numeric_features] = all_features[numeric_features].apply(

lambda x: (x - x.mean()) / (x.std())) # 标准化,将所有特征的均值变为0和方差变为1

all_features[numeric_features] = all_features[numeric_features].fillna(0) # 将缺失项设置为0

# “Dummy_na=True”将“na”(缺失值)视为有效的特征值,并为其创建指示符特征

all_features = pd.get_dummies(all_features, dummy_na=True) # 为离散值生成独热编码,并增加一列表示空缺值

# 从pandas格式中提取NumPy格式,并将其转换为张量表示

n_train = train_data.shape[0]

train_features = torch.tensor(all_features[:n_train].values, dtype=torch.float32)

test_features = torch.tensor(all_features[n_train:].values, dtype=torch.float32)

train_labels = torch.tensor(

train_data.SalePrice.values.reshape(-1, 1), dtype=torch.float32)

查看特征总数大小:

print(all_features.shape)(2919, 331)

可以看到经过数据预处理会将特征总数由79增加到331。

三、训练函数

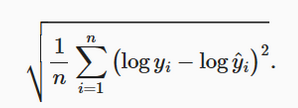

房价就像股票价格一样,我们关心的是相对误差,而不是绝对误差。比如说,农村的房价原本为12.5万,误差10万,和在市中心豪宅区的房价原本为420万,误差10万,显然使用绝对误差对结果评估的影响是不一样的,我们希望使用一种误差测量方法不受样本大小波动的影响,预测昂贵房屋和廉价房屋的误差能够同等影响预测结果,因此需要使用相对误差的测量方法,我们采用均方根损失来测量房价预测的相对误差。

""" 训练 """

loss = nn.MSELoss()

in_features = train_features.shape[1] # 输入特征总数为331

def get_net():

net = nn.Sequential(nn.Linear(in_features, 1))

return net

def log_rmse(net, features, labels):

# 为了在取对数时进一步稳定该值,将小于1的值设置为1

clipped_preds = torch.clamp(net(features), 1, float('inf'))

rmse = torch.sqrt(loss(torch.log(clipped_preds),

torch.log(labels)))

return rmse.item()均方根损失函数:

# 均方根损失

def log_rmse(net, features, labels):

# 为了在取对数时进一步稳定该值,将小于1的值设置为1

clipped_preds = torch.clamp(net(features), 1, float('inf'))

rmse = torch.sqrt(loss(torch.log(clipped_preds),

torch.log(labels)))

return rmse.item()训练函数:

训练函数使用Adam优化器。

# 训练函数

def train(net, train_features, train_labels, test_features, test_labels,

num_epochs, learning_rate, weight_decay, batch_size):

train_ls, test_ls = [], []

train_iter = d2l.load_array((train_features, train_labels), batch_size) # 加载训练数据

# 这里使用的是Adam优化算法

optimizer = torch.optim.Adam(net.parameters(),

lr = learning_rate,

weight_decay = weight_decay)

for epoch in range(num_epochs):

for X, y in train_iter:

optimizer.zero_grad()

l = loss(net(X), y)

l.backward()

optimizer.step()

train_ls.append(log_rmse(net, train_features, train_labels))

if test_labels is not None:

test_ls.append(log_rmse(net, test_features, test_labels))

return train_ls, test_ls

四、K折交叉验证(可选,炼丹步骤)

def get_k_fold_data(k, i, X, y):

assert k > 1

fold_size = X.shape[0] // k

X_train, y_train = None, None

for j in range(k):

idx = slice(j * fold_size, (j + 1) * fold_size)

X_part, y_part = X[idx, :], y[idx]

if j == i:

X_valid, y_valid = X_part, y_part

elif X_train is None:

X_train, y_train = X_part, y_part

else:

X_train = torch.cat([X_train, X_part], 0)

y_train = torch.cat([y_train, y_part], 0)

return X_train, y_train, X_valid, y_valid

当我们在K折交叉验证中训练K次后,返回训练和验证误差的平均值。

def k_fold(k, X_train, y_train, num_epochs, learning_rate, weight_decay,

batch_size):

train_l_sum, valid_l_sum = 0, 0 # 用于存储训练误差和验证误差的总和

for i in range(k):

data = get_k_fold_data(k, i, X_train, y_train)

net = get_net() #选择模型

train_ls, valid_ls = train(net, *data, num_epochs, learning_rate,

weight_decay, batch_size) # 训练模型

train_l_sum += train_ls[-1] # 将当前训练误差的最后一个值累加到train_l_sum变量中

valid_l_sum += valid_ls[-1]

if i == 0: # 第一次循环

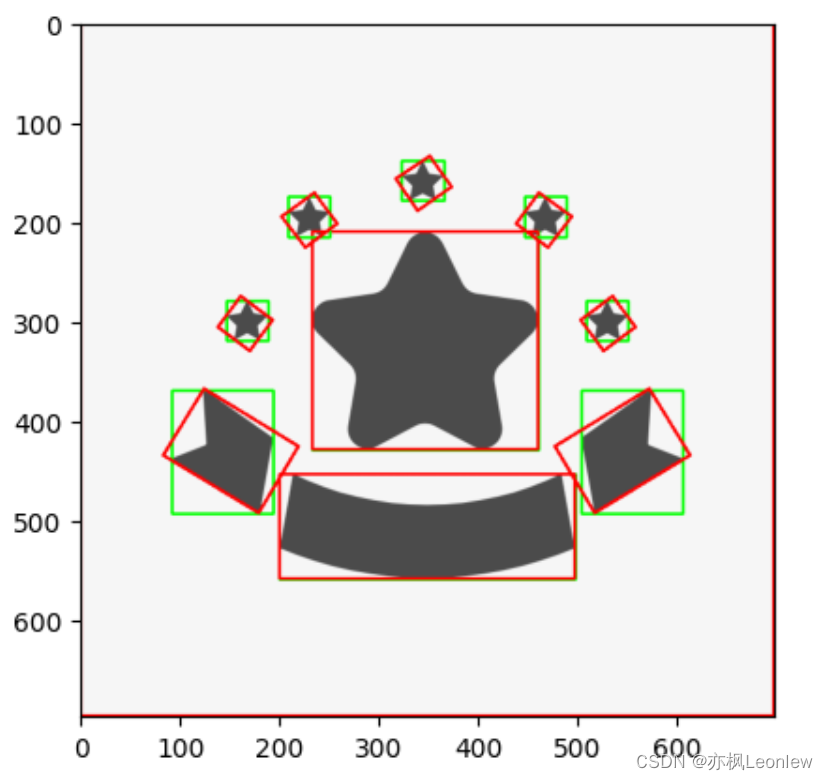

d2l.plot(list(range(1, num_epochs + 1)), [train_ls, valid_ls],

xlabel='epoch', ylabel='rmse', xlim=[1, num_epochs],

legend=['train', 'valid'], yscale='log')

print(f'折{i + 1},训练log rmse{float(train_ls[-1]):f}, '

f'验证log rmse{float(valid_ls[-1]):f}')

return train_l_sum / k, valid_l_sum / k

五、模型选择(可选,炼丹步骤)

不断的更换超参数,保留最优的超参数。

k, num_epochs, lr, weight_decay, batch_size = 5, 100, 5, 0, 64

train_l, valid_l = k_fold(k, train_features, train_labels, num_epochs, lr,

weight_decay, batch_size)

print(f'{k}-折验证: 平均训练log rmse: {float(train_l):f}, '

f'平均验证log rmse: {float(valid_l):f}')

六、训练

""" 训练与预测 """

def train_and_pred(train_features, test_features, train_labels, test_data,

num_epochs, lr, weight_decay, batch_size):

net = get_net()

train_ls, _ = train(net, train_features, train_labels, None, None,

num_epochs, lr, weight_decay, batch_size)

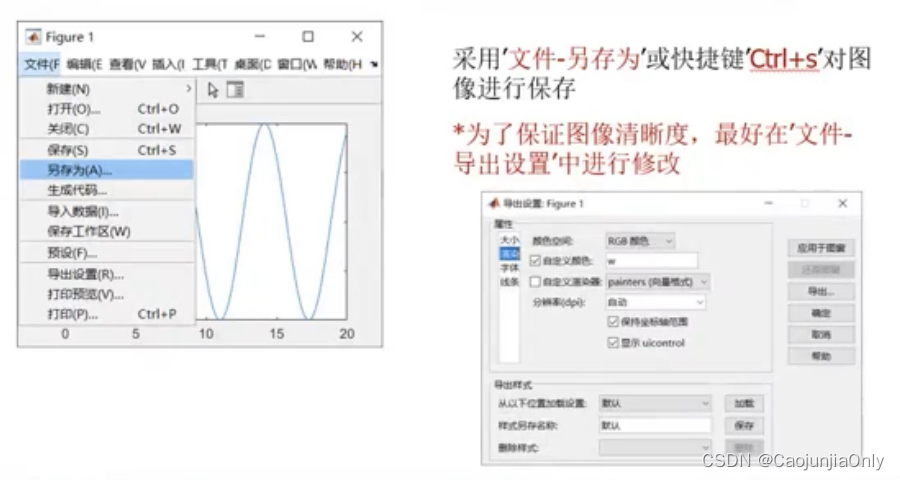

d2l.plot(np.arange(1, num_epochs + 1), [train_ls], xlabel='epoch',

ylabel='log rmse', xlim=[1, num_epochs], yscale='log')

print(f'训练log rmse:{float(train_ls[-1]):f}')

# 将网络应用于测试集。

preds = net(test_features).detach().numpy()

# 将其重新格式化以导出到Kaggle

test_data['SalePrice'] = pd.Series(preds.reshape(1, -1)[0])

submission = pd.concat([test_data['Id'], test_data['SalePrice']], axis=1)

submission.to_csv('submission.csv', index=False)

k, num_epochs, lr, weight_decay, batch_size = 5, 100, 5, 0, 64

train_and_pred(train_features, test_features, train_labels, test_data,

num_epochs, lr, weight_decay, batch_size)