Overview

Manifold Space vs Tangent Space

Jacobian w.r.t Error State

Jacobian w.r.t Error State vs True State

According to [1], Section 2.4:

The idea is that for an $x \in N$ the function $g(\delta) := f(x \boxplus \delta)$ behaves locally in $0$ like $f$ does in $x$. In particular $\|f(x)\|^2$ has a minimum in $x$ if and only if $\|g(\delta)\|^2$ has a minimum in $0$. Therefore finding a local optimum of $g$, $\delta = \arg\min_{\delta} \|g(\delta)\|^2$, implies $x \boxplus \delta = \arg\min_{\xi} \|f(\xi)\|^2$.

$$f(x \boxplus \delta) = f(x) + J_x \delta + \mathcal{O}\left(\|\delta\|^2\right)$$

where

$$J_x = \left. \frac{\partial f(x \boxplus \delta)}{\partial \delta} \right|_{\delta=0} \quad \longleftrightarrow \quad J = \left. \frac{\partial f(x)}{\partial x} \right|_{x}$$
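To make the linearization concrete, here is a minimal Gauss-Newton sketch on the unit circle. It is not taken from [1]: the names BoxPlus, Residual, and MinimizeOnCircle are hypothetical, and both the manifold (the unit circle, with ⊞ defined as a rotation by the angle δ) and the residual f(x) = x - target are assumptions chosen only to keep the example small. Each iteration solves for δ in the tangent space using the Jacobian w.r.t. δ at δ = 0 and then maps the step back onto the manifold with ⊞.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<double, 2>;

// Assumed boxplus on the unit circle: rotate x by the angle delta.
Vec2 BoxPlus(const Vec2& x, double delta) {
  const double c = std::cos(delta);
  const double s = std::sin(delta);
  return {c * x[0] - s * x[1], s * x[0] + c * x[1]};
}

// Assumed residual f(x) = x - target (a 2-vector).
Vec2 Residual(const Vec2& x, const Vec2& target) {
  return {x[0] - target[0], x[1] - target[1]};
}

// Gauss-Newton on g(delta) = f(x [+] delta), linearized at delta = 0.
Vec2 MinimizeOnCircle(Vec2 x, const Vec2& target, int iterations) {
  assert(iterations > 0);
  for (int i = 0; i < iterations; ++i) {
    const Vec2 f = Residual(x, target);
    // J = d f(x [+] delta) / d delta at delta = 0, a 2x1 matrix.
    const Vec2 J = {-x[1], x[0]};
    const double JtJ = J[0] * J[0] + J[1] * J[1];
    const double Jtf = J[0] * f[0] + J[1] * f[1];
    const double delta = -Jtf / JtJ;  // minimizer of ||f + J * delta||^2
    x = BoxPlus(x, delta);            // step back onto the manifold
  }
  return x;
}
```

Starting from (1, 0) with the target (0.6, 0.8), the iterate should approach the target while staying on the circle, because every update goes through BoxPlus rather than a plain vector addition.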

ESKF [2], Section 6.1.1: Jacobian computation

$$\mathbf{H} \triangleq \left.\frac{\partial h}{\partial \delta \mathbf{x}}\right|_{\mathbf{x}} = \left.\frac{\partial h}{\partial \mathbf{x}_t}\right|_{\mathbf{x}} \left.\frac{\partial \mathbf{x}_t}{\partial \delta \mathbf{x}}\right|_{\mathbf{x}} = \mathbf{H}_{\mathbf{x}} \mathbf{X}_{\delta \mathbf{x}}$$

- $\mathbf{x}_t$: true state

- $\mathbf{x}$: nominal state

- $\delta \mathbf{x}$: error state

lifting and retraction:

$$\mathbf{X}_{\delta \mathbf{x}} \triangleq \left.\frac{\partial \mathbf{x}_t}{\partial \delta \mathbf{x}}\right|_{\mathbf{x}} = \begin{bmatrix} \mathbf{I}_6 & 0 & 0 \\ 0 & \mathbf{Q}_{\delta \boldsymbol{\theta}} & 0 \\ 0 & 0 & \mathbf{I}_9 \end{bmatrix}$$

the quaternion term

$$\begin{aligned} \mathbf{Q}_{\delta \boldsymbol{\theta}} \triangleq \left.\frac{\partial(\mathbf{q} \otimes \delta \mathbf{q})}{\partial \delta \boldsymbol{\theta}}\right|_{\mathbf{q}} &= \left.\frac{\partial(\mathbf{q} \otimes \delta \mathbf{q})}{\partial \delta \mathbf{q}}\right|_{\mathbf{q}} \left.\frac{\partial \delta \mathbf{q}}{\partial \delta \boldsymbol{\theta}}\right|_{\delta \hat{\boldsymbol{\theta}}=0} \\ &= \left.\frac{\partial\left([\mathbf{q}]_L \delta \mathbf{q}\right)}{\partial \delta \mathbf{q}}\right|_{\mathbf{q}} \left.\frac{\partial \begin{bmatrix} 1 \\ \frac{1}{2} \delta \boldsymbol{\theta} \end{bmatrix}}{\partial \delta \boldsymbol{\theta}}\right|_{\delta \hat{\boldsymbol{\theta}}=0} \\ &= [\mathbf{q}]_L \, \frac{1}{2} \begin{bmatrix} 0 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \end{aligned}$$
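The closed form above can be checked numerically. The following is a small sketch under stated assumptions: quaternions are Hamilton quaternions stored in w, x, y, z order, $[\mathbf{q}]_L$ is the left-multiplication matrix of the Hamilton product, and the helper names Product, DeltaQ, QDeltaTheta, and NumericQDeltaTheta are hypothetical. QDeltaTheta writes out half of the last three columns of $[\mathbf{q}]_L$, and NumericQDeltaTheta differentiates $\mathbf{q} \otimes \delta \mathbf{q}$ by central differences for comparison.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Quat = std::array<double, 4>;  // Hamilton quaternion, order w, x, y, z
using Vec3 = std::array<double, 3>;
using Mat43 = std::array<std::array<double, 3>, 4>;

// Hamilton product p (x) q.
Quat Product(const Quat& p, const Quat& q) {
  return {p[0] * q[0] - p[1] * q[1] - p[2] * q[2] - p[3] * q[3],
          p[0] * q[1] + p[1] * q[0] + p[2] * q[3] - p[3] * q[2],
          p[0] * q[2] - p[1] * q[3] + p[2] * q[0] + p[3] * q[1],
          p[0] * q[3] + p[1] * q[2] - p[2] * q[1] + p[3] * q[0]};
}

// delta q for a rotation vector dtheta; reduces to [1, dtheta / 2] near zero.
Quat DeltaQ(const Vec3& dtheta) {
  const double n = std::sqrt(dtheta[0] * dtheta[0] + dtheta[1] * dtheta[1] +
                             dtheta[2] * dtheta[2]);
  if (n < 1e-12) return {1.0, 0.5 * dtheta[0], 0.5 * dtheta[1], 0.5 * dtheta[2]};
  const double k = std::sin(0.5 * n) / n;
  return {std::cos(0.5 * n), k * dtheta[0], k * dtheta[1], k * dtheta[2]};
}

// Analytic Q_{delta theta} = [q]_L * 0.5 * [0; I_3].
Mat43 QDeltaTheta(const Quat& q) {
  const double w = q[0], x = q[1], y = q[2], z = q[3];
  Mat43 Q = {{{-x, -y, -z}, {w, -z, y}, {z, w, -x}, {-y, x, w}}};
  for (auto& row : Q) {
    for (double& v : row) v *= 0.5;
  }
  return Q;
}

// Central finite difference of q (x) DeltaQ(dtheta) at dtheta = 0.
Mat43 NumericQDeltaTheta(const Quat& q, double h) {
  assert(h > 0.0);
  Mat43 Q{};
  for (int j = 0; j < 3; ++j) {
    Vec3 plus{}, minus{};
    plus[j] = h;
    minus[j] = -h;
    const Quat a = Product(q, DeltaQ(plus));
    const Quat b = Product(q, DeltaQ(minus));
    for (int i = 0; i < 4; ++i) Q[i][j] = (a[i] - b[i]) / (2.0 * h);
  }
  return Q;
}
```

For a unit quaternion and a step such as h = 1e-6, the two matrices should agree up to finite-difference error.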

Least Squares on a Manifold [3]

Local Parameterization in Ceres Solver [4] [5] [6] [7] [8]

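```cpp
class LocalParameterization {
 public:
  virtual ~LocalParameterization() = default;

  // x_plus_delta = x [+] delta (generalized addition on the manifold).
  virtual bool Plus(const double* x,
                    const double* delta,
                    double* x_plus_delta) const = 0;

  // Jacobian of Plus(x, delta) w.r.t. delta at delta = 0,
  // a row-major GlobalSize() x LocalSize() matrix.
  virtual bool ComputeJacobian(const double* x, double* jacobian) const = 0;

  // local_matrix = global_matrix * jacobian.
  virtual bool MultiplyByJacobian(const double* x,
                                  const int num_rows,
                                  const double* global_matrix,
                                  double* local_matrix) const;

  virtual int GlobalSize() const = 0;  // size of x (ambient space)
  virtual int LocalSize() const = 0;   // size of delta (tangent space)
};
```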

Plus

Retraction

$$\boxplus(x, \Delta) = x \operatorname{Exp}(\Delta)$$
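For unit quaternions, the retraction above can be sketched as a right multiplication by the exponential of a rotation vector. The function RightPlus below is hypothetical and only mirrors the argument list of Plus. It assumes w, x, y, z storage, Hamilton multiplication, and the half-angle convention Exp(Δ) = [cos(|Δ|/2), sin(|Δ|/2) Δ/|Δ|]. The parameterizations that ship with Ceres Solver may follow a different convention, so treat this as an illustration rather than the library's implementation.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical right-perturbation Plus: x_plus_delta = x (x) Exp(delta).
// x and x_plus_delta hold unit quaternions (w, x, y, z); delta is a 3-vector.
bool RightPlus(const double* x, const double* delta, double* x_plus_delta) {
  assert(x != nullptr && delta != nullptr && x_plus_delta != nullptr);
  const double n = std::sqrt(delta[0] * delta[0] + delta[1] * delta[1] +
                             delta[2] * delta[2]);
  // Exp(delta), with a first-order fallback for very small rotations.
  double e[4] = {1.0, 0.5 * delta[0], 0.5 * delta[1], 0.5 * delta[2]};
  if (n > 1e-12) {
    const double k = std::sin(0.5 * n) / n;
    e[0] = std::cos(0.5 * n);
    e[1] = k * delta[0];
    e[2] = k * delta[1];
    e[3] = k * delta[2];
  }
  // Hamilton product x (x) e, computed into locals so that the output
  // buffer may alias the input x.
  const double w = x[0] * e[0] - x[1] * e[1] - x[2] * e[2] - x[3] * e[3];
  const double i = x[0] * e[1] + x[1] * e[0] + x[2] * e[3] - x[3] * e[2];
  const double j = x[0] * e[2] - x[1] * e[3] + x[2] * e[0] + x[3] * e[1];
  const double k = x[0] * e[3] + x[1] * e[2] - x[2] * e[1] + x[3] * e[0];
  x_plus_delta[0] = w;
  x_plus_delta[1] = i;
  x_plus_delta[2] = j;
  x_plus_delta[3] = k;
  return true;
}
```

With a zero delta the function returns x unchanged, and for any delta the result stays on the unit sphere up to rounding, which is the property a plain vector addition x + Δ would not give.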

ComputeJacobian

global w.r.t local

$$J_{GL} = \frac{\partial x_G}{\partial x_L} = D_2 \boxplus(x, 0) = \left. \frac{\partial \boxplus(x, \Delta)}{\partial \Delta} \right|_{\Delta = 0}$$

See [9].

$r$ w.r.t. $x_L$

In ceres::CostFunction, we provide the derivative of the residuals with respect to the variable on the manifold:

$$J_{rG} = \frac{\partial r}{\partial x_G}$$

The derivative with respect to the variable in the tangent space then follows from the chain rule:

$$J_{rL} = \frac{\partial r}{\partial x_L} = \frac{\partial r}{\partial x_G} \cdot J_{GL}$$
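The chain rule above is a plain matrix product. As a minimal sketch, assuming all three matrices are stored as flat row-major arrays (the layout the interface above uses for its Jacobians), the hypothetical helper ToLocalJacobian below computes $J_{rL} = J_{rG} \cdot J_{GL}$. The function name and the std::vector interface are illustrative and are not part of Ceres Solver.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// J_rL (num_rows x local_size) = J_rG (num_rows x global_size)
//                              * J_GL (global_size x local_size).
// All matrices are flat row-major arrays.
std::vector<double> ToLocalJacobian(const std::vector<double>& J_rG,
                                    const std::vector<double>& J_GL,
                                    int num_rows, int global_size,
                                    int local_size) {
  assert(J_rG.size() == static_cast<std::size_t>(num_rows * global_size));
  assert(J_GL.size() == static_cast<std::size_t>(global_size * local_size));
  std::vector<double> J_rL(static_cast<std::size_t>(num_rows * local_size), 0.0);
  for (int r = 0; r < num_rows; ++r) {
    for (int k = 0; k < global_size; ++k) {
      for (int c = 0; c < local_size; ++c) {
        J_rL[r * local_size + c] +=
            J_rG[r * global_size + k] * J_GL[k * local_size + c];
      }
    }
  }
  return J_rL;
}
```

For a quaternion block, global_size is 4 and local_size is 3, so a cost function with one residual supplies a 1 x 4 row and the optimizer works with the resulting 1 x 3 row.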

Subclasses

- QuaternionParameterization

- EigenQuaternionParameterization

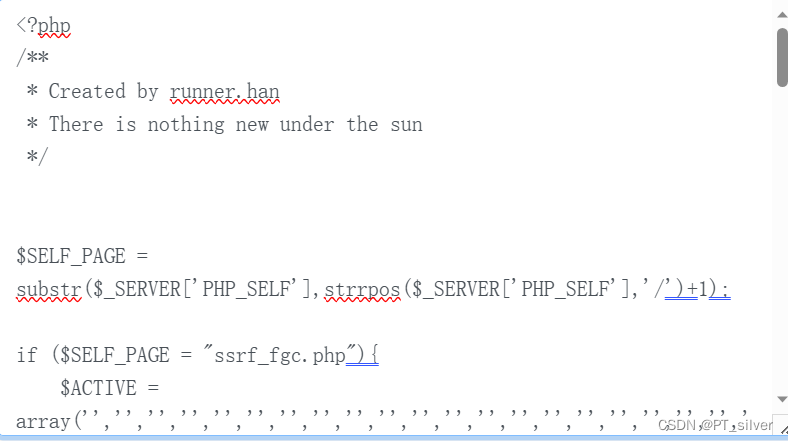

Custom QuaternionParameterization

See [7].

Summary

- The Plus and ComputeJacobian methods of QuaternionParameterization together determine whether the left-perturbation or the right-perturbation form is used

Quaternion in Eigen

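```cpp
Quaterniond q1(1, 2, 3, 4);            // constructor arguments: w, x, y, z
Quaterniond q2(Vector4d(1, 2, 3, 4));  // coefficient vector: x, y, z, w
Quaterniond q3(tmp_q);                 // raw array (double tmp_q[4]): x, y, z, w
q1.coeffs();                           // coefficients are returned as x, y, z, w
```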

Quaternion in Ceres Solver

- order: wxyz

- Quaternions in Ceres Solver are Hamilton quaternions and follow the Hamilton multiplication rule

- Matrices are stored in raw memory in row-major order
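Because the two libraries disagree on coefficient order, a conversion step is easy to get wrong. The sketch below uses plain arrays so that it does not depend on either library. The helper names WxyzToXyzw, XyzwToWxyz, HamiltonProduct, and CheckHamiltonConvention are hypothetical. The last function checks the defining property of the Hamilton convention, namely that i ⊗ j = k and j ⊗ i = -k.

```cpp
#include <array>
#include <cassert>

using Quat = std::array<double, 4>;

// Reorder a w, x, y, z array (the Ceres Solver order) into x, y, z, w
// (the order of Eigen's coeffs()), and back.
Quat WxyzToXyzw(const Quat& q) { return {q[1], q[2], q[3], q[0]}; }
Quat XyzwToWxyz(const Quat& q) { return {q[3], q[0], q[1], q[2]}; }

// Hamilton product of two quaternions given in w, x, y, z order.
Quat HamiltonProduct(const Quat& p, const Quat& q) {
  return {p[0] * q[0] - p[1] * q[1] - p[2] * q[2] - p[3] * q[3],
          p[0] * q[1] + p[1] * q[0] + p[2] * q[3] - p[3] * q[2],
          p[0] * q[2] - p[1] * q[3] + p[2] * q[0] + p[3] * q[1],
          p[0] * q[3] + p[1] * q[2] - p[2] * q[1] + p[3] * q[0]};
}

// Under the Hamilton convention, i (x) j = k and j (x) i = -k.
void CheckHamiltonConvention() {
  const Quat i = {0.0, 1.0, 0.0, 0.0};
  const Quat j = {0.0, 0.0, 1.0, 0.0};
  const Quat ij = HamiltonProduct(i, j);
  const Quat ji = HamiltonProduct(j, i);
  assert(ij[3] == 1.0);
  assert(ji[3] == -1.0);
}
```

When passing a quaternion between the two libraries, reordering the array once at the boundary avoids silently mixing the w component with a vector component.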

[1] A Framework for Sparse, Non-Linear Least Squares Problems on Manifolds

[2] Quaternion kinematics for the error-state Kalman filter, Joan Solà

[3] A Tutorial on Graph-Based SLAM

[4] http://ceres-solver.org/nnls_modeling.html#localparameterization

[5] On-Manifold Optimization Demo using Ceres Solver

[6] Matrix Manifold Local Parameterizations for Ceres Solver

[7] [ceres-solver] From QuaternionParameterization to LocalParameterization

[8] Notes on LocalParameterization subclasses: the QuaternionParameterization and EigenQuaternionParameterization classes

[9] Optimization libraries: ceres (2), a deep dive into ceres::Problem