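Contents

- Preface
- MNIST handwritten digit recognition
- Data preparation
- Data processing
- Defining the backbone network
- Using the loss function (revised)
- Training and prediction
- Running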

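Preface

This is one of the approaches I used while learning to implement MNIST handwritten digit recognition. I find its structure fairly clear; I will tidy up and publish the code for the other approaches later.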

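MNIST handwritten digit recognition

Data preparation

Data preparation is very important, but since MNIST is used here only to learn the workflow, there is no need to go deep: every dataset is handled differently anyway.

The MNIST dataset is simple: it consists of 28×28 grayscale images, so each image is stored as 784 grayscale values.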
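As a quick illustration of that layout, the sketch below flattens a 28×28 array into 784 values and restores it. The array is synthetic (random values standing in for a real digit), so only the shapes are meaningful here.

```python
import numpy as np

# Synthetic stand-in for one MNIST image: 28 x 28 grayscale values in [0, 1).
rng = np.random.default_rng(0)
image = rng.random((28, 28), dtype=np.float32)

# Flatten row by row into a 784-value vector, the per-image layout described above.
flat = image.reshape(-1)
print(flat.shape)  # (784,)

# Reshaping back recovers the original 2-D image exactly.
restored = flat.reshape(28, 28)
print(np.array_equal(image, restored))  # True
```

The same `reshape(28, 28)` call is what turns one stored row back into something that can be displayed as an image.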

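A script can download it directly:

"""
download_mnist.py
Download the data
"""
from pathlib import Path

import requests

DATA_PATH = Path("data")
PATH = DATA_PATH / "mnist"
PATH.mkdir(parents=True, exist_ok=True)  # create data/mnist if it does not exist

URL = "http://deeplearning.net/data/mnist/"
FILENAME = "mnist.pkl.gz"

# Download only if the file is not already present
if not (PATH / FILENAME).exists():
    response = requests.get(URL + FILENAME, timeout=60)
    response.raise_for_status()  # fail loudly instead of saving an error page
    (PATH / FILENAME).write_bytes(response.content)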

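However, the download often fails because of network problems, so you can also obtain the MNIST data in pkl format from another source.

mnist.pkl.gz on Baidu Netdisk

Link: https://pan.baidu.com/s/1nx2k5IPAnP1u6CkRR8NXfw?pwd=zbqy

Extraction code: zbqy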

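"""
path_setting.py
Set the path where the data is stored
"""
import pickle  # re-exported: show.py gets pickle and gzip through `from path_setting import *`
import gzip
from pathlib import Path

# The data file is expected at data/mnist/mnist.pkl.gz
DATA_PATH = Path("data")
PATH = DATA_PATH / "mnist"
FILENAME = "mnist.pkl.gz"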

If you want to look at the contents of the dataset, you can do it like this:

"""
show.py
Inspect the data
"""
import matplotlib.pyplot as plt

from path_setting import *  # also brings in pickle, gzip, PATH and FILENAME

# Load the data; the third split in the file (the test set) is not used here
with gzip.open((PATH / FILENAME).as_posix(), "rb") as f:
    ((x_train, y_train), (x_valid, y_valid), _) = pickle.load(f, encoding="latin-1")

# x_train: training data      y_train: training labels
# x_valid: validation data    y_valid: validation labels

print(x_train.shape)     # shape of x_train: (50000, 784)
print(x_valid.shape)     # shape of x_valid: (10000, 784)
print(y_train.shape)     # shape of y_train: (50000,)
print(x_train[0])        # the 784 grayscale values of sample 0 (the first sample)
print(y_train[0])        # label of sample 0: 5
print(x_train[0].shape)  # shape of a single image in x_train: (784,)

plt.imshow(x_train[0].reshape(28, 28), cmap="gray")  # reshape to 28 × 28 for display
plt.show()  # display the image

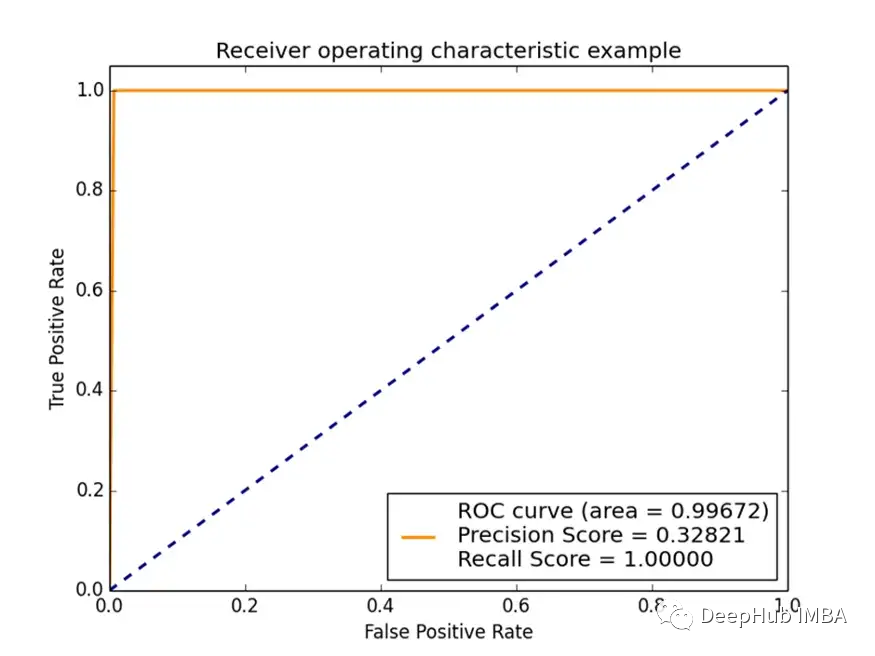

运行结果:

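Data processing

Once loaded, the data is still in NumPy arrays rather than tensors, so it has to be converted to tensors first.

torch also provides utilities for wrapping and batching the data:

- TensorDataset
- DataLoader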

"""
data_process.py
Data processing
"""
import gzip
import pickle

import torch
from torch.utils.data import DataLoader
from torch.utils.data import TensorDataset

from path_setting import PATH, FILENAME

bs = 64  # batch_size

with gzip.open((PATH / FILENAME).as_posix(), "rb") as f:
    ((x_train, y_train), (x_valid, y_valid), _) = pickle.load(f, encoding="latin-1")

# Convert the numpy arrays to tensors
x_train, y_train, x_valid, y_valid = map(
    torch.tensor, (x_train, y_train, x_valid, y_valid)
)

train_ds = TensorDataset(x_train, y_train)
# Reshuffle the training data every epoch and draw it in batches of bs samples
train_dl = DataLoader(train_ds, batch_size=bs, shuffle=True)

valid_ds = TensorDataset(x_valid, y_valid)
# No gradients are stored during validation, so a larger batch fits in memory
valid_dl = DataLoader(valid_ds, batch_size=bs * 2)
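To see what these two utilities do in isolation, here is a minimal sketch on toy data. The tensors `features` and `labels` are made up for the illustration and are not part of the MNIST pipeline.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy data: 10 samples with 784 features each, plus integer class labels.
features = torch.randn(10, 784)
labels = torch.arange(10)

dataset = TensorDataset(features, labels)  # pairs features[i] with labels[i]
loader = DataLoader(dataset, batch_size=4, shuffle=True)

print(len(dataset))  # 10
batch_sizes = [xb.shape[0] for xb, yb in loader]
print(batch_sizes)   # [4, 4, 2]: the last batch holds the remainder
```

Because the last batch can be smaller than the others, any per-batch statistic should be weighted by the batch size before averaging.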

Defining the backbone network

"""
backbone.py
Backbone neural network
"""
import torch.nn as nn
import torch.nn.functional as F


class FCnet(nn.Module):
    """input -> hidden1 -> hidden2 -> out"""

    def __init__(self):
        super().__init__()
        self.hidden1 = nn.Linear(784, 128)
        self.hidden2 = nn.Linear(128, 256)
        self.out = nn.Linear(256, 10)
        self.dropout = nn.Dropout(0.5)  # probability of zeroing each activation

    def forward(self, x):
        x = F.relu(self.hidden1(x))
        x = self.dropout(x)
        x = F.relu(self.hidden2(x))
        x = self.dropout(x)
        x = self.out(x)  # raw class scores (logits); no softmax here
        return x

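This is a fully connected network of the form input layer -> fully connected hidden layers -> output layer.

The basic code structure simply follows the template above:

The `__init__` function defines the layers of the network.

The `forward` function implements the forward pass and has to be written by hand.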
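The template can be tried out on a small scale as follows. `TinyNet` is a hypothetical two-layer network written only for this illustration (it is not the author's `FCnet`); the sketch checks the output shape and shows that dropout is switched off by `eval()`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyNet(nn.Module):
    """Hypothetical two-layer network, used only for this illustration."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(784, 32)
        self.out = nn.Linear(32, 10)
        self.dropout = nn.Dropout(0.5)

    def forward(self, x):
        x = self.dropout(F.relu(self.hidden(x)))
        return self.out(x)  # raw scores (logits), one per class


net = TinyNet()
batch = torch.randn(64, 784)

net.train()              # dropout is active in training mode
print(net(batch).shape)  # torch.Size([64, 10])

net.eval()               # dropout is disabled, so the output is deterministic
with torch.no_grad():
    first, second = net(batch), net(batch)
print(torch.equal(first, second))  # True
```

Calling `net(batch)` rather than `net.forward(batch)` is the usual convention, since it goes through `nn.Module.__call__`.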

Using the loss function (revised)

PyTorch offers two quick ways to use common loss functions:

- torch.nn.functional
- nn.Module

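A loss function usually takes two arguments, the predictions and the ground truth, for example:

import torch
import torch.nn.functional as F

loss_func = F.cross_entropy
predictions = torch.randn(4, 10)      # predictions: raw scores for 4 samples, 10 classes
targets = torch.tensor([3, 0, 9, 1])  # ground truth: one class index per sample
loss = loss_func(predictions, targets)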
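The two styles mentioned above can be compared directly. The sketch below, on made-up logits and targets, checks that the functional form and the module form give the same value, and that both match log-softmax followed by negative log-likelihood.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw scores for 4 samples, 10 classes
targets = torch.tensor([3, 0, 9, 1])  # class indices, not one-hot vectors

loss_functional = F.cross_entropy(logits, targets)    # functional form
loss_module = nn.CrossEntropyLoss()(logits, targets)  # module form

# Cross-entropy on logits is log-softmax followed by negative log-likelihood.
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)

print(torch.allclose(loss_functional, loss_module))  # True
print(torch.allclose(loss_functional, loss_manual))  # True
```

Since the loss applies log-softmax internally, the network's output layer should return raw scores rather than probabilities.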

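"""
loss_batch.py
Compute the loss and, when an optimizer is given, update the parameters
"""


def loss_batch(module, loss_func, data, label, opt=None):
    loss = loss_func(module(data), label)  # loss on the current batch

    if opt is not None:
        loss.backward()  # backpropagate: compute the gradient of every parameter
        opt.step()       # update the parameters using those gradients
        opt.zero_grad()  # reset the gradients so they do not accumulate

    # Return the batch size as well, so batch losses can be averaged with weights
    return loss.item(), len(data)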
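The backward / step / zero_grad sequence can be observed on a single batch. This is a minimal sketch under stated assumptions: the `nn.Linear` model, the random data and the learning rate of 0.1 are stand-ins chosen for the illustration, not values from the article.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim

torch.manual_seed(0)
model = nn.Linear(784, 10)  # hypothetical stand-in model
opt = optim.SGD(model.parameters(), lr=0.1)

data = torch.randn(8, 784)
label = torch.randint(0, 10, (8,))

before = model.weight.detach().clone()
loss = F.cross_entropy(model(data), label)
loss.backward()   # fills .grad on every parameter
grad_norm = model.weight.grad.norm().item()
opt.step()        # plain SGD: weight <- weight - lr * grad
opt.zero_grad()   # clear gradients before the next batch

print(grad_norm > 0)                      # True
print(torch.equal(before, model.weight))  # False: the weights moved
print(model.weight.grad)                  # None or all zeros, depending on the PyTorch version
```

Without the `zero_grad()` call, the gradients of the next batch would be added on top of the current ones.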

Training and prediction

"""
fit.py
Training and validation loop
"""
import numpy as np
import torch

from loss_batch import loss_batch


def fit(epochs, module, loss_func, opt, train_dl, valid_dl):
    for epoch in range(epochs):
        module.train()  # training mode: dropout enabled
        for train_data, train_label in train_dl:
            loss_batch(module, loss_func, train_data, train_label, opt)

        module.eval()  # evaluation mode: dropout disabled
        with torch.no_grad():
            losses, nums = zip(
                *[loss_batch(module, loss_func, valid_data, valid_label) for valid_data, valid_label in valid_dl]
            )
            # Mean validation loss, weighting each batch by its size
            val_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)
            print(f"epoch: {epoch}, validation loss: {val_loss}")

            correct = 0
            total = 0
            for vd, vl in valid_dl:
                # Shape (128, 10): the validation batch size is 64 * 2 = 128, and each
                # row holds the raw scores (logits) for the 10 classes, not probabilities
                outputs = module(vd)
                _, predicted = torch.max(outputs, 1)  # dim 1: highest-scoring class per sample
                total += vl.size(0)
                correct += (predicted == vl).sum().item()
            print(f"accuracy: {correct / total}")
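The two validation metrics are simple enough to check by hand. The numbers below are hypothetical, picked only to show why the batch losses are weighted by batch size and how the accuracy is derived from the per-class scores.

```python
import numpy as np

# Hypothetical per-batch mean losses and batch sizes; the last batch is smaller.
losses = [0.50, 0.40, 0.10]
nums = [128, 128, 16]

# Weight each batch mean by its size to recover the mean over all samples.
weighted = np.sum(np.multiply(losses, nums)) / np.sum(nums)
naive = np.mean(losses)  # treats the small batch as equally important

print(round(float(weighted), 4))  # 0.4294
print(round(float(naive), 4))     # 0.3333

# Accuracy: the predicted class is the index of the largest score in each row.
scores = np.array([[0.1, 2.0, -1.0], [1.5, 0.2, 0.3], [0.0, 0.1, 3.0]])
labels = np.array([1, 0, 1])
predicted = scores.argmax(axis=1)
accuracy = (predicted == labels).mean()

print(predicted.tolist())         # [1, 0, 2]
print(round(float(accuracy), 4))  # 0.6667
```

Taking the largest raw score is enough for prediction; softmax would not change which class ranks first.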

Running

"""
run.py
"""
import torch.nn.functional as F
from torch import optim

from backbone import FCnet
from data_process import train_dl, valid_dl
from fit import fit

net = FCnet()
loss_func = F.cross_entropy
opt = optim.SGD(net.parameters(), lr=0.001)

fit(50, net, loss_func, opt, train_dl, valid_dl)  # train for 50 epochs

Output: