什么是 Helm

每个成功的软件平台都有一个优秀的打包系统,比如Debian、Ubuntu 的 apt,RedHat、CentOS 的 yum。Helm 则是 Kubernetes上 的包管理器,方便我们更好的管理应用。

在没使用 helm 之前,向 kubernetes 部署应用,我们要依次部署 deployment、svc 等,步骤较繁琐。 况且随着很多项目微服务化,复杂的应用在容器中部署以及管理显得较为复杂,helm 通过打包的方式,支持发布的版本管理和控制, 很大程度上简化了 Kubernetes 应用的部署和管理。

Helm本质就是让K8s的应用管理(Deployment、Service等)可配置,可以通过类似于传递环境变量的方式能动态生成。通过动态生成K8s资源清单文件(deployment.yaml、service.yaml)。 然后调用 Kubectl 自动执行 K8s 资源部署。

Helm 是官方提供的类似于 YUM 的包管理器,是部署环境的流程封装。

Helm 有三个重要的概念

●Chart:Helm 的软件包,采用 TAR 格式。是创建一个应用的信息集合,包括各种 Kubernetes 对象的配置模板、参数定义、依赖关系、文档说明等。chart 是应用部署的自包含逻辑单元。 可以将 chart 想象成 apt、yum 中的软件安装包。

●Release:是 chart 的运行实例,代表了一个正在运行的应用。当 chart 被安装到 Kubernetes 集群,就生成一个 release。chart 能够多次安装到同一个集群,每次安装都是一个 release。

●Repository(仓库):Charts 仓库,用于集中存储和分发 Charts。Repository 本质上是一个 Web 服务器,该服务器保存了一系列的 Chart 软件包以供用户下载,并且提供了一个该 Repository 的 Chart 包的清单文件以供查询。Helm 可以同时管理多个不同的 Repository。

总结:Helm 安装 charts 到 Kubernetes 集群中,每次安装都会创建一个新的 release。你可以在 Helm 的 chart repositories 中寻找新的 chart。

Helm3 与 Helm2 的区别

Helm2 是 C/S 架构,主要分为客户端 helm 和服务端 Tiller。在 Helm 2 中,Tiller 是作为一个 Deployment 部署在 kube-system 命名空间中,很多情况下,我们会为 Tiller 准备一个 ServiceAccount ,这个 ServiceAccount 通常拥有集群的所有权限。

用户可以使用本地 Helm 命令,自由地连接到 Tiller 中并通过 Tiller 创建、修改、删除任意命名空间下的任意资源。

在 Helm 3 中,Tiller 被移除了。新的 Helm 客户端会像 kubectl 命令一样,读取本地的 kubeconfig 文件,使用我们在 kubeconfig 中预先定义好的权限来进行一系列操作。

Helm 的官方网站 https://helm.sh/

Helm 部署

1、安装 helm

下载二进制 Helm client 安装包

https://github.com/helm/helm/tags

tar -zxvf helm-v3.6.0-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm

helm version命令补全

source <(helm completion bash)2、使用 helm 安装 Chart

添加常用的 chart 仓库

helm repo add bitnami https://charts.bitnami.com/bitnami

helm repo add stable http://mirror.azure.cn/kubernetes/charts

helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

helm repo add incubator https://charts.helm.sh/incubator更新 charts 列表

helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aliyun" chart repository

...Successfully got an update from the "stable" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "incubator" chart repository

Update Complete. ⎈Happy Helming!⎈

helm repo list

NAME URL

bitnami https://charts.bitnami.com/bitnami

stable http://mirror.azure.cn/kubernetes/charts

aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

incubator https://charts.helm.sh/incubator

查看 stable 仓库可用的 charts 列表

helm search repo stable删除 incubator 仓库

helm repo remove incubator

"incubator" has been removed from your repositories

查看 chart 信息

helm show chart bitnami/nginx #查看指定 chart 的基本信息

annotations:

category: Infrastructure

images: |

- name: git

image: docker.io/bitnami/git:2.41.0-debian-11-r74

- name: nginx-exporter

image: docker.io/bitnami/nginx-exporter:0.11.0-debian-11-r322

- name: nginx

image: docker.io/bitnami/nginx:1.25.2-debian-11-r0

licenses: Apache-2.0

apiVersion: v2

appVersion: 1.25.2

dependencies:

- name: common

repository: oci://registry-1.docker.io/bitnamicharts

tags:

- bitnami-common

version: 2.x.x

description: NGINX Open Source is a web server that can be also used as a reverse

proxy, load balancer, and HTTP cache. Recommended for high-demanding sites due to

its ability to provide faster content.

home: https://bitnami.com

icon: https://bitnami.com/assets/stacks/nginx/img/nginx-stack-220x234.png

keywords:

- nginx

- http

- web

- www

- reverse proxy

maintainers:

- name: VMware, Inc.

url: https://github.com/bitnami/charts

name: nginx

sources:

- https://github.com/bitnami/charts/tree/main/bitnami/nginx

version: 15.1.4

helm show all bitnami/nginx #获取指定 chart 的所有信息安装 chart

helm install my-redis bitnami/redis [-n default] #指定 release 的名字为 my-redis,-n 指定部署到 k8s 的 namespace

helm install bitnami/redis --generate-name #不指定 release 的名字时,需使用 –generate-name 随机生成一个名字查看所有 release

helm ls

helm list查看指定的 release 状态

helm status my-redis

NAME: my-redis

LAST DEPLOYED: Mon Aug 21 16:58:01 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: redis

CHART VERSION: 17.15.6

APP VERSION: 7.2.0

** Please be patient while the chart is being deployed **

Redis® can be accessed on the following DNS names from within your cluster:

my-redis-master.default.svc.cluster.local for read/write operations (port 6379)

my-redis-replicas.default.svc.cluster.local for read-only operations (port 6379)

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace default my-redis -o jsonpath="{.data.redis-password}" | base64 -d)

To connect to your Redis® server:

1. Run a Redis® pod that you can use as a client:

kubectl run --namespace default redis-client --restart='Never' --env REDIS_PASSWORD=$REDIS_PASSWORD --image docker.io/bitnami/redis:7.2.0-debian-11-r3 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i redis-client \

--namespace default -- bash

2. Connect using the Redis® CLI:

REDISCLI_AUTH="$REDIS_PASSWORD" redis-cli -h my-redis-master

REDISCLI_AUTH="$REDIS_PASSWORD" redis-cli -h my-redis-replicas

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-redis-master 6379:6379 &

REDISCLI_AUTH="$REDIS_PASSWORD" redis-cli -h 127.0.0.1 -p 6379

[root@master01 ~]# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

my-redis default 1 2023-08-21 16:58:01.401566444 +0800 CST deployed redis-17.15.6 7.2.0

nginx-1692608048 default 1 2023-08-21 16:54:11.469723569 +0800 CST deployed nginx-15.1.4 1.25.2

[root@master01 ~]# helm list

NAME NAMESPACE REVISION UPDATED

my-redis default 1 2023-08-21 16:58:01.401566444 +0

nginx-1692608048 default 1 2023-08-21 16:54:11.469723569 +0

[root@master01 ~]# helm status my-redis

NAME: my-redis

LAST DEPLOYED: Mon Aug 21 16:58:01 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: redis

CHART VERSION: 17.15.6

APP VERSION: 7.2.0

** Please be patient while the chart is being deployed **

Redis® can be accessed on the following DNS names from within your cluster:

my-redis-master.default.svc.cluster.local for read/write operations (port 6379)

my-redis-replicas.default.svc.cluster.local for read-only operations (port 6379)

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace default my-redis -o jsonpath="{.data.redis-password}" | base64 -d)

To connect to your Redis® server:

1. Run a Redis® pod that you can use as a client:

kubectl run --namespace default redis-client --restart='Never' --env REDIS_PASSWORD=$REDIS_PASSWORD --image docker.io/bitnami/redis:7.2.0-debian-11-r3 --command -- sleep infinity

Use the following command to attach to the pod:

kubectl exec --tty -i redis-client \

--namespace default -- bash

2. Connect using the Redis® CLI:

REDISCLI_AUTH="$REDIS_PASSWORD" redis-cli -h my-redis-master

REDISCLI_AUTH="$REDIS_PASSWORD" redis-cli -h my-redis-replicas

To connect to your database from outside the cluster execute the following commands:

kubectl port-forward --namespace default svc/my-redis-master 6379:6379 &

REDISCLI_AUTH="$REDIS_PASSWORD" redis-cli -h 127.0.0.1 -p 6379

删除指定的 release

helm uninstall my-redis

release "my-redis" uninstalled

Helm 自定义模板

charts 除了可以在 repo 中下载,还可以自己自定义,创建完成后通过 helm 部署到 k8s。

拉取 chart

mkdir /opt/helm

cd /opt/helmhelm pull bitnami/nginx

ls

nginx-15.1.4.tgz

tar xf nginx-15.1.4.tgz

yum install -y tree

tree ngixn

nginx

├── Chart.lock

├── charts

│ └── common

│ ├── Chart.yaml

│ ├── README.md

│ ├── templates

│ │ ├── _affinities.tpl

│ │ ├── _capabilities.tpl

│ │ ├── _errors.tpl

│ │ ├── _images.tpl

│ │ ├── _ingress.tpl

│ │ ├── _labels.tpl

│ │ ├── _names.tpl

│ │ ├── _secrets.tpl

│ │ ├── _storage.tpl

│ │ ├── _tplvalues.tpl

│ │ ├── _utils.tpl

│ │ ├── validations

│ │ │ ├── _cassandra.tpl

│ │ │ ├── _mariadb.tpl

│ │ │ ├── _mongodb.tpl

│ │ │ ├── _mysql.tpl

│ │ │ ├── _postgresql.tpl

│ │ │ ├── _redis.tpl

│ │ │ └── _validations.tpl

│ │ └── _warnings.tpl

│ └── values.yaml

├── Chart.yaml

├── README.md

├── templates

│ ├── deployment.yaml

│ ├── extra-list.yaml

│ ├── health-ingress.yaml

│ ├── _helpers.tpl

│ ├── hpa.yaml

│ ├── ingress.yaml

│ ├── NOTES.txt

│ ├── pdb.yaml

│ ├── prometheusrules.yaml

│ ├── server-block-configmap.yaml

│ ├── serviceaccount.yaml

│ ├── servicemonitor.yaml

│ ├── svc.yaml

│ └── tls-secrets.yaml

├── values.schema.json

└── values.yaml

5 directories, 41 files

可以看到,一个 chart 包就是一个文件夹的集合,文件夹名称就是 chart 包的名称。

chart 是包含至少两项内容的helm软件包

(1)软件包自描述文件 Chart.yaml,这个文件必须有 name 和 version(chart版本) 的定义

(2)一个或多个模板,其中包含 Kubernetes 清单文件:

●NOTES.txt:chart 的“帮助文本”,在用户运行 helm install 时显示给用户

●deployment.yaml:创建 deployment 的资源清单文件

●service.yaml:为 deployment 创建 service 的资源清单文件

●ingress.yaml: 创建 ingress 对象的资源清单文件

●_helpers.tpl:放置模板助手的地方,可以在整个 chart 中重复使用

创建自定义的 chart

helm create nginx

tree nginx

nginx

├── charts

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── hpa.yaml

│ ├── ingress.yaml

│ ├── NOTES.txt

│ ├── serviceaccount.yaml

│ ├── service.yaml

│ └── tests

│ └── test-connection.yaml

└── values.yamlcat nginx/templates/deployment.yaml

#在 templates 目录下 yaml 文件模板中的变量(go template语法)的值默认是在 nginx/values.yaml 中定义的,只需要修改 nginx/values.yaml 的内容,也就完成了 templates 目录下 yaml 文件的配置。

比如在 deployment.yaml 中定义的容器镜像:

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"cat nginx/values.yaml | grep repository

repository: nginx

#以上变量值是在 create chart 的时候就自动生成的默认值,你可以根据实际情况进行修改。修改 chart

vim nginx/Chart.yaml

apiVersion: v2

name: nginx #chart名字

description: A Helm chart for Kubernetes

type: application #chart类型,application或library

version: 0.1.0 #chart版本

appVersion: 1.16.0 #application部署版本vim nginx/values.yaml

replicaCount: 1

image:

repository: nginx

pullPolicy: IfNotPresent

tag: "latest" #设置镜像标签

imagePullSecrets: []

nameOverride: ""

fullnameOverride: ""

serviceAccount:

create: true

annotations: {}

name: ""

podAnnotations: {}

podSecurityContext: {}

# fsGroup: 2000

securityContext: {}

# capabilities:

# drop:

# - ALL

# readOnlyRootFilesystem: true

# runAsNonRoot: true

# runAsUser: 1000

service:

type: ClusterIP

port: 80

ingress:

enabled: true #开启 ingress

className: ""

annotations: {}

# kubernetes.io/ingress.class: nginx

# kubernetes.io/tls-acme: "true"

hosts:

- host: www.kgc.com #指定ingress域名

paths:

- path: /

pathType: Prefix #指定ingress路径类型

tls: []

# - secretName: chart-example-tls

# hosts:

# - chart-example.local

resources:

limits:

cpu: 100m

memory: 128Mi

requests:

cpu: 100m

memory: 128Mi

autoscaling:

enabled: false

minReplicas: 1

maxReplicas: 100

targetCPUUtilizationPercentage: 80

# targetMemoryUtilizationPercentage: 80

nodeSelector: {}

tolerations: []

affinity: {}打包 chart

helm lint nginx #检查依赖和模版配置是否正确

helm package nginx #打包 chart,会在当前目录下生成压缩包 nginx-0.1.0.tgz部署 chart

helm install nginx ./nginx --dry-run --debug #使用 --dry-run 参数验证 Chart 的配置,并不执行安装

helm install nginx ./nginx -n default #部署 chart,release 版本默认为 1

或者

helm install nginx ./nginx-0.1.0.tgz可根据不同的配置来 install,默认是 values.yaml

helm install nginx ./nginx -f ./nginx/values-prod.yamlhelm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx-1 default 3 2023-08-21 18:46:10.991059082 +0800 CST deployednginx-0.1.0 1.16.0kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/load-generator 1/1 Running 0 25h

pod/myapp 1/1 Running 1 25h

pod/myapp2 1/1 Running 0 25h

pod/nginx-1-b57dc6896-ljgv8 1/1 Running 0 45m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d3h

service/nginx-1 ClusterIP 10.96.154.69 <none> 80/TCP 45m

service/php-apache ClusterIP 10.96.55.210 <none> 80/TCP 25h

部署 ingress

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/mandatory.yaml

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

kubectl apply -f mandatory.yaml

kubectl apply -f service-nodeport.yamlvim deploy-1.3.0.yaml

apiVersion: v1

kind: Namespace

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

automountServiceAccountToken: true

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resourceNames:

- ingress-controller-leader

resources:

- configmaps

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- coordination.k8s.io

resourceNames:

- ingress-controller-leader

resources:

- leases

verbs:

- get

- update

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- create

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- create

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: v1

data:

allow-snippet-annotations: "true"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-controller

namespace: ingress-nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

externalTrafficPolicy: Cluster

ports:

- appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

nodePort: 30080

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

nodePort: 30443

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: NodePort

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

spec:

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-controller-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.0@sha256:d1707ca76d3b044ab8a28277a2466a02100ee9f58a86af1535a3edf9323ea1b5

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 101

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1@sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1@sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: nginx

annotations:

ingressclass.kubernetes.io/is-default-class: "true"

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.0

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

vim service-nodeport.yaml

apiVersion: v1

kind: Service

metadata:

name: ingress-nginx

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

type: NodePort

ports:

- name: http

port: 80

targetPort: 80

protocol: TCP

- name: https

port: 443

targetPort: 443

protocol: TCP

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

kubectl get pod,svc -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-k54lt 0/1 Completed 0 47h

pod/ingress-nginx-admission-patch-4zh89 0/1 Completed 0 47h

pod/ingress-nginx-controller-clcr7 1/1 Running 6 47h

pod/ingress-nginx-controller-kpmh7 1/1 Running 0 47h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx NodePort 10.96.241.250 <none> 80:31673/TCP,443:31232/TCP 7s

service/ingress-nginx-controller LoadBalancer 10.96.81.204 <pending> 80:30916/TCP,443:32643/TCP 47h

service/ingress-nginx-controller-admission ClusterIP 10.96.79.213 <none> 443/TCP 47h

vim ingress-app.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx-app-ingress

spec:

ingressClassName: "nginx"

rules:

- host: www.ggl.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: ingress-nginx

port:

number: 80vim deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: ingress-nginx-deployment

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

replicas: 1

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

containers:

- name: ingress-nginx

image: nginx:latest

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-app-ingress nginx www.ggl.com 80 13s

vim /etc/hosts

.....

192.168.10.30 node02 www.ggl.comcurl http://www.ggl.com:31998

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>修改为 NodePort 访问后,升级

vim nginx/values.yaml

service:

type: NodePort

port: 80

nodePort: 30080

ingress:

enabled: false

vim nginx/templates/service.yaml

apiVersion: v1

kind: Service

metadata:

name: {{ include "nginx.fullname" . }}

labels:

{{- include "nginx.labels" . | nindent 4 }}

spec:

type: {{ .Values.service.type }}

ports:

- port: {{ .Values.service.port }}

targetPort: http

protocol: TCP

name: http

nodePort: {{ .Values.service.nodePort }} #指定 nodePort

selector:

{{- include "nginx.selectorLabels" . | nindent 4 }}升级 release,release 版本加 1

helm upgrade nginx nginx kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d2h

nginx-1 NodePort 10.96.154.69 <none> 80:30080/TCP 9m17s

php-apache ClusterIP 10.96.55.210 <none> 80/TCP 24h

curl 192.168.10.10:30080

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

回滚

根据 release 版本回滚

helm history nginx-1 #查看 release 版本历史

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Mon Aug 21 18:32:39 2023 superseded nginx-0.1.0 1.16.0 Install complete

2 Mon Aug 21 18:41:35 2023 deployed nginx-0.1.0 1.16.0 Upgrade complete

helm rollback nginx-1 1 #回滚 release 到版本1

Rollback was a success! Happy Helming!helm history nginx-1 #nginx release 已经回滚到版本 1

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Mon Aug 21 18:32:39 2023 superseded nginx-0.1.0 1.16.0 Install complete

2 Mon Aug 21 18:41:35 2023 superseded nginx-0.1.0 1.16.0 Upgrade complete

3 Mon Aug 21 18:46:10 2023 deployed nginx-0.1.0 1.16.0 Rollback to 1

#通常情况下,在配置好 templates 目录下的 kubernetes 清单文件后,后续维护一般只需要修改 Chart.yaml 和 values.yaml 即可。

//在命令行使用 --set 指定参数来部署(install,upgrade)release

#注:此参数值会覆盖掉在 values.yaml 中的值,如需了解其它的预定义变量参数,可查看 helm 官方文档。

helm upgrade nginx nginx --set image.tag='1.15'Helm 仓库

helm 可以使用 harbor 作为本地仓库,将自定义的 chart 推送至 harbor 仓库。

安装 harbor

#上传 harbor-offline-installer-v1.9.1.tgz 和 docker-compose 文件到 /opt 目录

cd /opt

cp docker-compose /usr/local/bin/

chmod +x /usr/local/bin/docker-compose

tar zxf harbor-offline-installer-v1.10.18.tgz

cd harbor/vim harbor.yml

hostname: 192.168.10.10

harbor_admin_password: Harbor12345 #admin用户初始密码

data_volume: /data #数据存储路径,自动创建

chart:

absolute_url: enabled #在chart中启用绝对url

log:

level: info

local:

rotate_count: 50

rotate_size: 200M

location: /var/log/harbor #日志路径

#安装带有 Clair service 和 chart 仓库服务的 Harbor

./install.sh --with-clair --with-chartmuseum安装 push 插件

在线安装

helm plugin install https://github.com/chartmuseum/helm-push离线安装

wget https://github.com/chartmuseum/helm-push/releases/download/v0.8.1/helm-push_0.8.1_linux_amd64.tar.gzmkdir ~/.local/share/helm/plugins/helm-push

tar -zxvf helm-push_0.8.1_linux_amd64.tar.gz -C ~/.local/share/helm/plugins/helm-pushhelm repo ls

NAME URL

bitnami https://charts.bitnami.com/bitnami

stable http://mirror.azure.cn/kubernetes/charts

aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

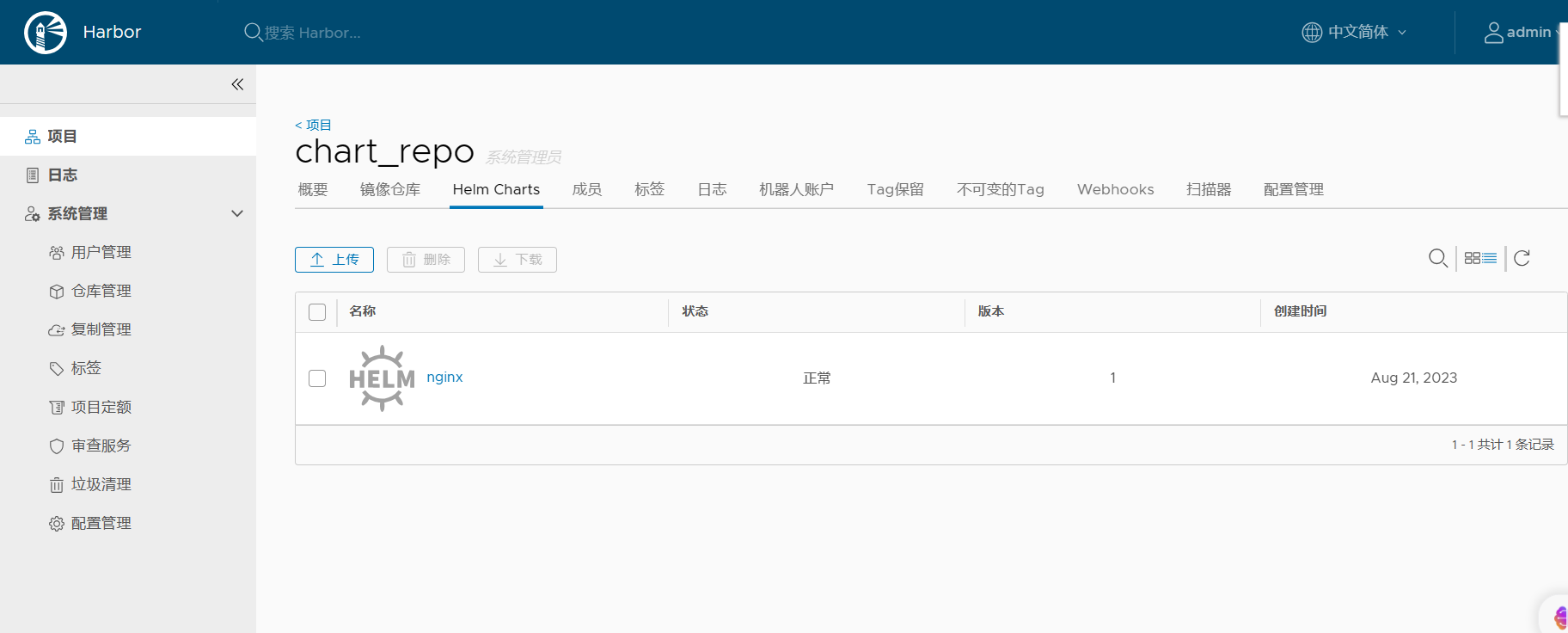

登录 Harbor WEB UI 界面,创建一个新项目

浏览器访问:http://192.168.10.10 ,默认的管理员用户名和密码是 admin/Harbor12345

点击 “+新建项目” 按钮

填写项目名称为 “chart_repo”,访问级别勾选 “公开”,点击 “确定” 按钮,创建新项目

添加仓库

helm repo add harbor http://192.168.10.10:85/chartrepo/chart_repo --username=admin --password=Harbor12345注:这里的 repo 的地址是<Harbor URL>/chartrepo/<项目名称>,Harbor 中每个项目是分开的 repo。如果不提供项目名称, 则默认使用 library 这个项目。

推送 chart 到 harbor 中

cd /opt/helm

helm push nginx harbor

Pushing nginx-0.1.0.tgz to harbor...

Done.