This is an original article; please cite the original source when reposting.

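I. Project Introduction

With the arrival of the AI era, many technologies have advanced at an unprecedented pace, and people have become more aware of how powerful online virtual technology can be.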

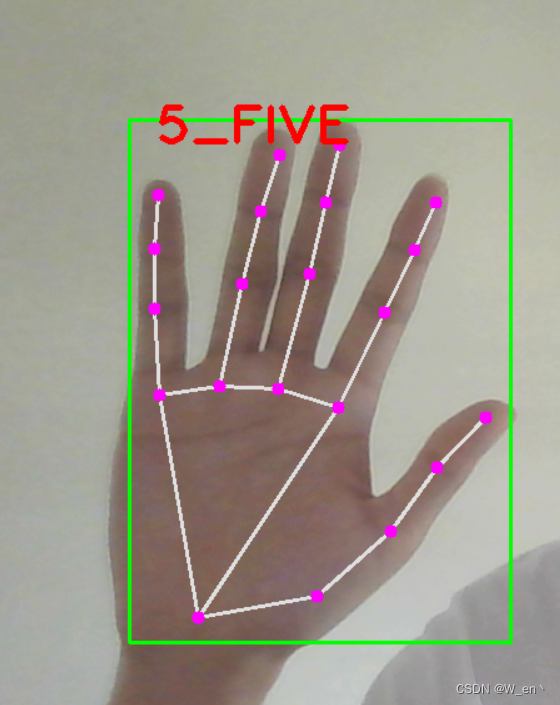

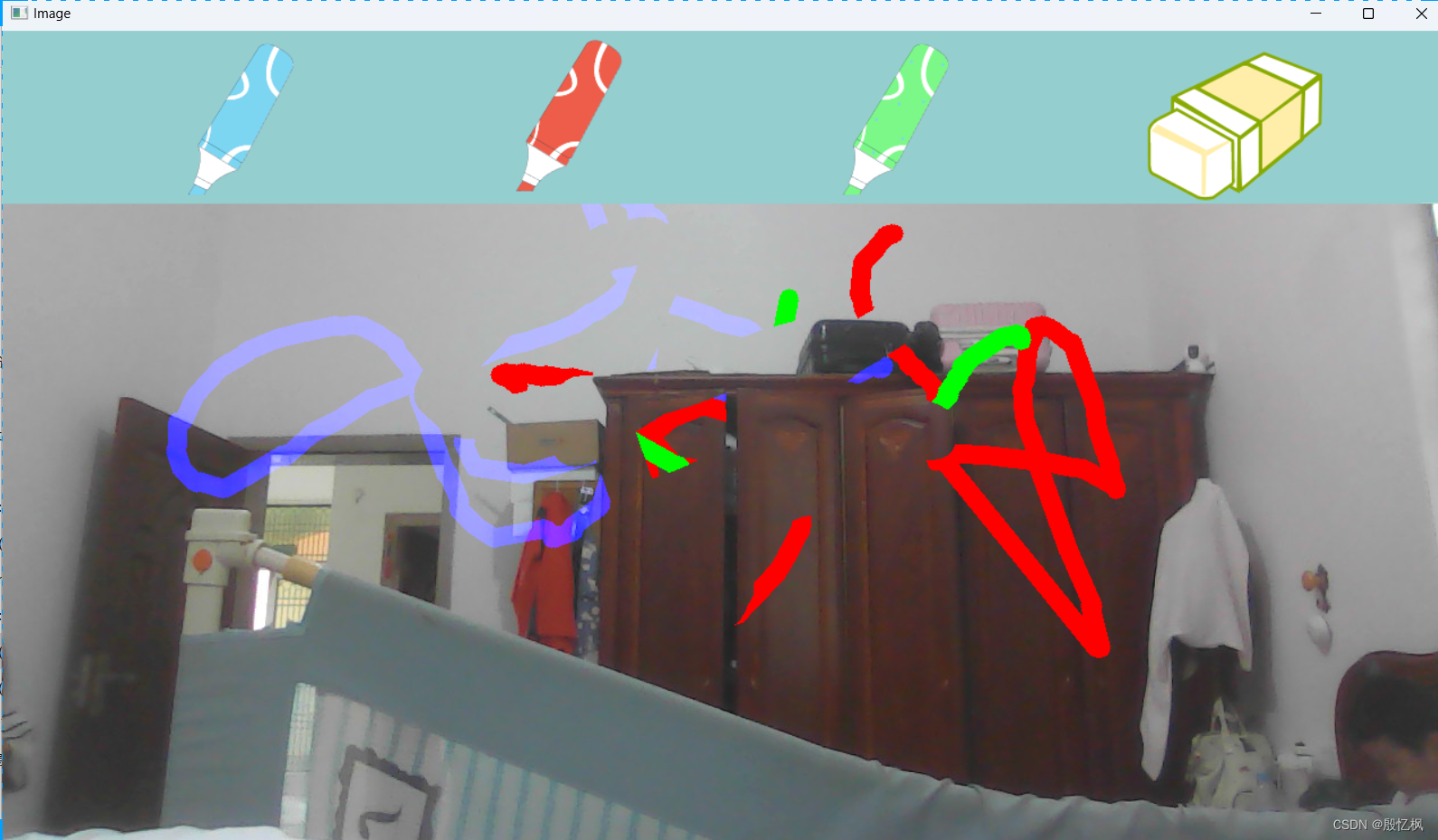

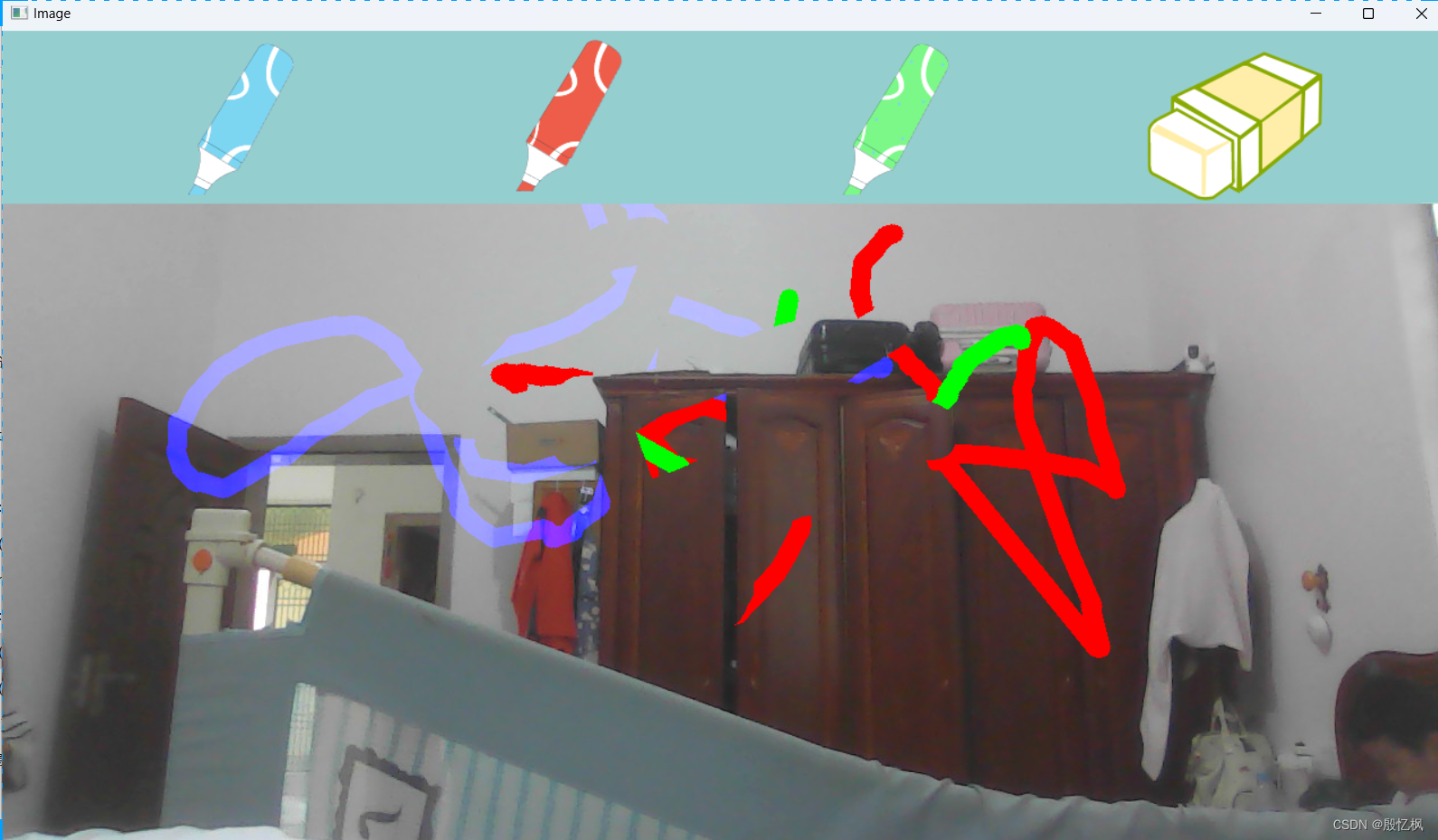

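mediapipe detects the keypoints of the hand. The index fingertip acts as the brush, and the middle finger is checked to decide whether the program is in drawing mode, which makes it possible to draw in the air.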
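MediaPipe Hands reports each of its 21 keypoints in coordinates normalized to the frame size, so they have to be scaled before they can be used for drawing. The sketch below shows that conversion on its own; the helper name `to_pixel` is an assumption made for illustration, while the landmark indices 8 (index fingertip) and 12 (middle fingertip) are the ones used by the code later in this article.

```python
INDEX_TIP = 8    # landmark id of the index fingertip
MIDDLE_TIP = 12  # landmark id of the middle fingertip


def to_pixel(x_norm, y_norm, width, height):
    """Scale a normalized landmark (0..1 on both axes) to integer pixel coordinates."""
    return int(x_norm * width), int(y_norm * height)


# A landmark in the horizontal center, a quarter of the way down a 1280x720 frame.
print(to_pixel(0.5, 0.25, 1280, 720))
```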

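Selection uses two fingers: with the index and middle fingers both raised, moving the index fingertip over the toolbar at the top of the frame picks a color or the eraser. HandTrackingModule.py also provides findDistance for measuring the gap between two fingertips, although the painter script listed here does not call it.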
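As a rough sketch of the selection step, the function below maps a fingertip position to a toolbar slot. It assumes a 1280-pixel-wide frame, a 153-pixel-high toolbar and four equal slots, matching the numbers in the code later in this article. The names `pick_tool` and `TOOLS` and the slot labels are hypothetical, and the sketch uses half-open ranges, so a fingertip exactly on a slot boundary still selects something.

```python
TOOLBAR_HEIGHT = 153  # height of the toolbar image in pixels
FRAME_WIDTH = 1280    # width of the camera frame in pixels
TOOLS = ["brush 1", "brush 2", "brush 3", "eraser"]  # hypothetical labels


def pick_tool(x, y):
    """Return the index of the toolbar slot under (x, y), or None outside the toolbar."""
    if not (0 <= y < TOOLBAR_HEIGHT and 0 <= x < FRAME_WIDTH):
        return None
    slot_width = FRAME_WIDTH // len(TOOLS)  # 320 pixels per slot
    return x // slot_width


slot = pick_tool(1000, 10)
print(slot, TOOLS[slot])
```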

II. Running the Project

1. Environment

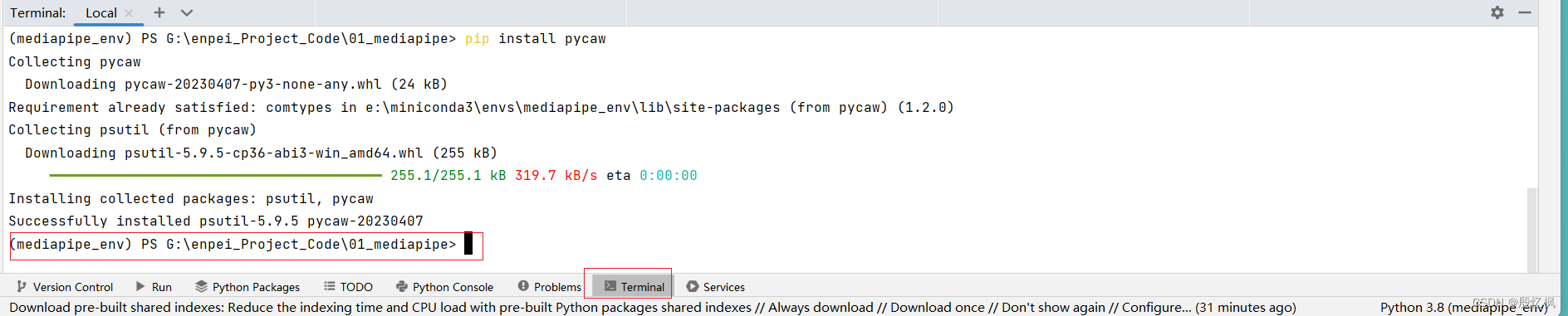

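The environment is the one built in the previous article, mediapipe_env.

Open a terminal and enter the following command to activate the environment:

    conda activate mediapipe

If your environment was created under a different name, such as mediapipe_env, activate that name instead.

This time PyCharm is used. Install it yourself; the Community edition is enough.

Create a directory, import the project into PyCharm, and then configure the environment.

Click Add New Interpreter.

Select the python.exe of the environment.

For the terminal, be sure to choose powershell.exe.

Once this is set up, you can work in the terminal inside PyCharm.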
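Before running the project, it can help to confirm that the selected interpreter actually sees the required packages. The sketch below is one way to do that using only the standard library; the helper name `missing_packages` is an assumption, and the package list simply mirrors the imports used by the two source files.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be found by the current interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# cv2 is provided by opencv-python; mediapipe and numpy are imported by the project.
missing = missing_packages(["cv2", "mediapipe", "numpy"])
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages were found.")
```

If anything is reported as missing, the interpreter chosen in PyCharm is probably not the conda environment that was activated above.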

2. Run

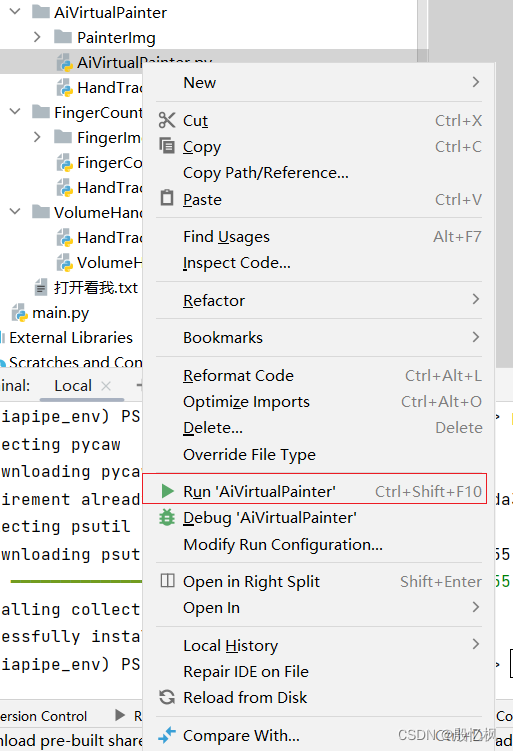

Import the code, then right-click and run it.

3. Result

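III. Code

The principles and the development process are not covered here. Anyone who wants to learn will look them up regardless, so please study them on your own. The project is not difficult and the code is fairly simple, so here is the source directly.

There are two files, AiVirtualPainter.py and HandTrackingModule.py.

AiVirtualPainter.py implements the painting feature, and HandTrackingModule.py implements hand keypoint detection.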
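The painter overlays the strokes on the live frame with a threshold mask followed by a bitwise AND and a bitwise OR. The sketch below replays that logic for a single BGR pixel in plain Python so the effect is easier to follow. The function names are assumptions, and the grayscale weights are the standard BGR-to-gray coefficients, rounded here more simply than OpenCV does internally.

```python
def to_gray(bgr):
    """Approximate the BGR-to-gray conversion for one pixel."""
    b, g, r = bgr
    return int(round(0.114 * b + 0.587 * g + 0.299 * r))


def composite_pixel(frame_px, canvas_px, threshold=50):
    """Combine one camera pixel with one canvas pixel as the mask/AND/OR steps do."""
    # Inverse binary threshold: bright canvas pixels give mask 0, dark ones give 255.
    mask = 0 if to_gray(canvas_px) > threshold else 255
    # AND clears the camera pixel under a bright stroke, OR then paints the stroke color.
    return tuple((f & mask) | c for f, c in zip(frame_px, canvas_px))


print(composite_pixel((10, 20, 30), (0, 0, 255)))  # red stroke covers the camera pixel
print(composite_pixel((10, 20, 30), (0, 0, 0)))    # empty canvas keeps the camera pixel
```

Note that a stroke color whose gray value is at or below the threshold is not masked out, so it is OR-ed with the camera pixel instead of replacing it.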

AiVirtualPainter.py

    import os

    import cv2
    import numpy as np

    import HandTrackingModule as htm

    # Load the toolbar images; sorting keeps their order stable across platforms.
    folderPath = "PainterImg"
    mylist = sorted(os.listdir(folderPath))
    overlayList = []
    for imPath in mylist:
        image = cv2.imread(os.path.join(folderPath, imPath))
        overlayList.append(image)
    header = overlayList[0]  # each toolbar image is expected to be 1280x153 pixels
    color = [255, 0, 0]

    brushThickness = 15
    eraserThickness = 40

    # Index 0 is the laptop's built-in camera; use 1 or another index for an external camera.
    cap = cv2.VideoCapture(0)
    cap.set(3, 1280)  # frame width
    cap.set(4, 720)   # frame height
    # cap.set(3, 800)
    # cap.set(4, 500)

    detector = htm.handDetector()
    xp, yp = 0, 0  # previous brush position; (0, 0) means no stroke in progress
    imgCanvas = np.zeros((720, 1280, 3), np.uint8)  # blank canvas that stores the strokes
    # imgCanvas = np.zeros((500, 800, 3), np.uint8)

    while True:
        # 1. Read a frame
        success, img = cap.read()
        if not success:
            break
        img = cv2.flip(img, 1)  # mirror horizontally

        # 2. Find hand landmarks
        img = detector.findHands(img)
        lmList = detector.findPosition(img, draw=True)

        if len(lmList) != 0:
            x1, y1 = lmList[8][1:]   # index fingertip
            x2, y2 = lmList[12][1:]  # middle fingertip

            # 3. Check which fingers are up
            fingers = detector.fingersUp()

            # 4. Selection mode: index and middle fingers are both up
            if fingers[1] and fingers[2]:
                xp, yp = 0, 0  # end the current stroke so the next one starts fresh
                if y1 < 153:
                    if 0 < x1 < 320:
                        header = overlayList[0]
                        color = [50, 128, 250]
                    elif 320 < x1 < 640:
                        header = overlayList[1]
                        color = [0, 0, 255]
                    elif 640 < x1 < 960:
                        header = overlayList[2]
                        color = [0, 255, 0]
                    elif 960 < x1 < 1280:
                        header = overlayList[3]
                        color = [0, 0, 0]  # black acts as the eraser
                img[0:153, 0:1280] = header

            # 5. Drawing mode: only the index finger is up
            if fingers[1] and not fingers[2]:
                cv2.circle(img, (x1, y1), 15, color, cv2.FILLED)
                print("Drawing Mode")
                if xp == 0 and yp == 0:
                    xp, yp = x1, y1

                # The eraser uses a thicker line than the brush.
                thickness = eraserThickness if color == [0, 0, 0] else brushThickness
                cv2.line(img, (xp, yp), (x1, y1), color, thickness)        # immediate feedback on the frame
                cv2.line(imgCanvas, (xp, yp), (x1, y1), color, thickness)  # persistent stroke on the canvas
                xp, yp = x1, y1

            # Clear the canvas when all fingers are up
            # if all(x >= 1 for x in fingers):
            #     imgCanvas = np.zeros((720, 1280, 3), np.uint8)

        # Overlay the strokes on the live frame
        imgGray = cv2.cvtColor(imgCanvas, cv2.COLOR_BGR2GRAY)
        _, imgInv = cv2.threshold(imgGray, 50, 255, cv2.THRESH_BINARY_INV)
        imgInv = cv2.cvtColor(imgInv, cv2.COLOR_GRAY2BGR)
        img = cv2.bitwise_and(img, imgInv)
        img = cv2.bitwise_or(img, imgCanvas)

        img[0:153, 0:1280] = header  # draw the toolbar on top
        cv2.imshow("Image", img)
        # cv2.imshow("Canvas", imgCanvas)
        # cv2.imshow("Inv", imgInv)
        cv2.waitKey(1)

    cap.release()
    cv2.destroyAllWindows()

HandTrackingModule.py

    import math
    import time

    import cv2
    import mediapipe as mp


    class handDetector():
        def __init__(self, mode=False, maxHands=2, detectionCon=0.8, trackCon=0.8):
            self.mode = mode
            self.maxHands = maxHands
            self.detectionCon = detectionCon
            self.trackCon = trackCon

            self.mpHands = mp.solutions.hands
            # Keyword arguments avoid breaking when the positional order of Hands() changes
            # between mediapipe versions.
            self.hands = self.mpHands.Hands(
                static_image_mode=self.mode,
                max_num_hands=self.maxHands,
                min_detection_confidence=self.detectionCon,
                min_tracking_confidence=self.trackCon,
            )
            self.mpDraw = mp.solutions.drawing_utils
            self.tipIds = [4, 8, 12, 16, 20]  # fingertip landmark ids, thumb to little finger

        def findHands(self, img, draw=True):
            imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            self.results = self.hands.process(imgRGB)

            print(self.results.multi_handedness)  # print the left/right hand labels of the result

            if self.results.multi_hand_landmarks:
                for handLms in self.results.multi_hand_landmarks:
                    if draw:
                        self.mpDraw.draw_landmarks(img, handLms, self.mpHands.HAND_CONNECTIONS)
            return img

        def findPosition(self, img, draw=True):
            # Must be called after findHands(), which stores self.results.
            self.lmList = []
            if self.results.multi_hand_landmarks:
                h, w, c = img.shape
                for handLms in self.results.multi_hand_landmarks:
                    for id, lm in enumerate(handLms.landmark):
                        # Landmarks are normalized; scale them to pixel coordinates.
                        cx, cy = int(lm.x * w), int(lm.y * h)
                        # print(id, cx, cy)
                        self.lmList.append([id, cx, cy])
                        if draw:
                            cv2.circle(img, (cx, cy), 12, (255, 0, 255), cv2.FILLED)
            return self.lmList

        def fingersUp(self):
            fingers = []
            # Thumb: compares x coordinates, so the result depends on which hand is shown
            # and on whether the frame has been mirrored.
            if self.lmList[self.tipIds[0]][1] > self.lmList[self.tipIds[0] - 1][1]:
                fingers.append(1)
            else:
                fingers.append(0)

            # Other fingers: up when the tip is above the joint two landmarks below it.
            for id in range(1, 5):
                if self.lmList[self.tipIds[id]][2] < self.lmList[self.tipIds[id] - 2][2]:
                    fingers.append(1)
                else:
                    fingers.append(0)

            # totalFingers = fingers.count(1)
            return fingers

        def findDistance(self, p1, p2, img, draw=True, r=15, t=3):
            x1, y1 = self.lmList[p1][1:]
            x2, y2 = self.lmList[p2][1:]
            cx, cy = (x1 + x2) // 2, (y1 + y2) // 2

            if draw:
                cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), t)
                cv2.circle(img, (x1, y1), r, (255, 0, 255), cv2.FILLED)
                cv2.circle(img, (x2, y2), r, (255, 0, 255), cv2.FILLED)
                cv2.circle(img, (cx, cy), r, (0, 0, 255), cv2.FILLED)
            length = math.hypot(x2 - x1, y2 - y1)

            return length, img, [x1, y1, x2, y2, cx, cy]


    def main():
        pTime = 0
        cTime = 0
        cap = cv2.VideoCapture(0)
        detector = handDetector()
        while True:
            success, img = cap.read()
            if not success:
                break
            img = detector.findHands(img)        # detect the hand and draw its skeleton
            lmList = detector.findPosition(img)  # get the list of landmark coordinates
            if len(lmList) != 0:
                print(lmList[4])

            cTime = time.time()
            fps = 1 / (cTime - pTime)
            pTime = cTime

            cv2.putText(img, 'fps:' + str(int(fps)), (10, 70), cv2.FONT_HERSHEY_PLAIN, 3, (255, 0, 255), 3)
            cv2.imshow('Image', img)
            cv2.waitKey(1)


    if __name__ == "__main__":
        main()
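To see how the finger-up test decides between selection and drawing without a camera, the sketch below runs the same tip-versus-joint comparison on a synthetic landmark list. Everything here is illustrative: `make_hand`, `fingers_up` and `mode` are hypothetical helpers, the coordinates are made up, and the thumb is left out, so index 0 of the result is the index finger rather than the thumb.

```python
TIP_IDS = [8, 12, 16, 20]  # index, middle, ring and little fingertips


def make_hand(raised):
    """Build 21 fake [id, x, y] landmarks; fingertips listed in `raised` point upward."""
    landmarks = [[i, 0, 400] for i in range(21)]
    for tip in TIP_IDS:
        landmarks[tip - 2][2] = 300                       # joint two landmarks below the tip
        landmarks[tip][2] = 100 if tip in raised else 350  # smaller y means higher in the image
    return landmarks


def fingers_up(lm_list):
    """A finger counts as up when its tip is above the joint two landmarks below it."""
    return [1 if lm_list[tip][2] < lm_list[tip - 2][2] else 0 for tip in TIP_IDS]


def mode(fingers):
    """Map the finger states to the painter's modes."""
    index_up, middle_up = fingers[0], fingers[1]
    if index_up and middle_up:
        return "select"
    if index_up:
        return "draw"
    return "idle"


print(mode(fingers_up(make_hand({8}))))      # only the index finger is raised
print(mode(fingers_up(make_hand({8, 12}))))  # index and middle fingers are raised
```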

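There are many versions of this source code online. If anything is unclear, feel free to contact the author so we can exchange ideas.

Learning never ends. AI is the direction of the future, so improve a little every day and keep learning for life.

If there is any infringement, or if you need the complete code, please contact the author promptly.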