Fast SAM C++ Inference Deployment with TensorRT

Instructions for obtaining the core source code are at the end of the article.

晓理紫

0 XX: start with a single picture, and the rest…

1 Why deploy with TensorRT

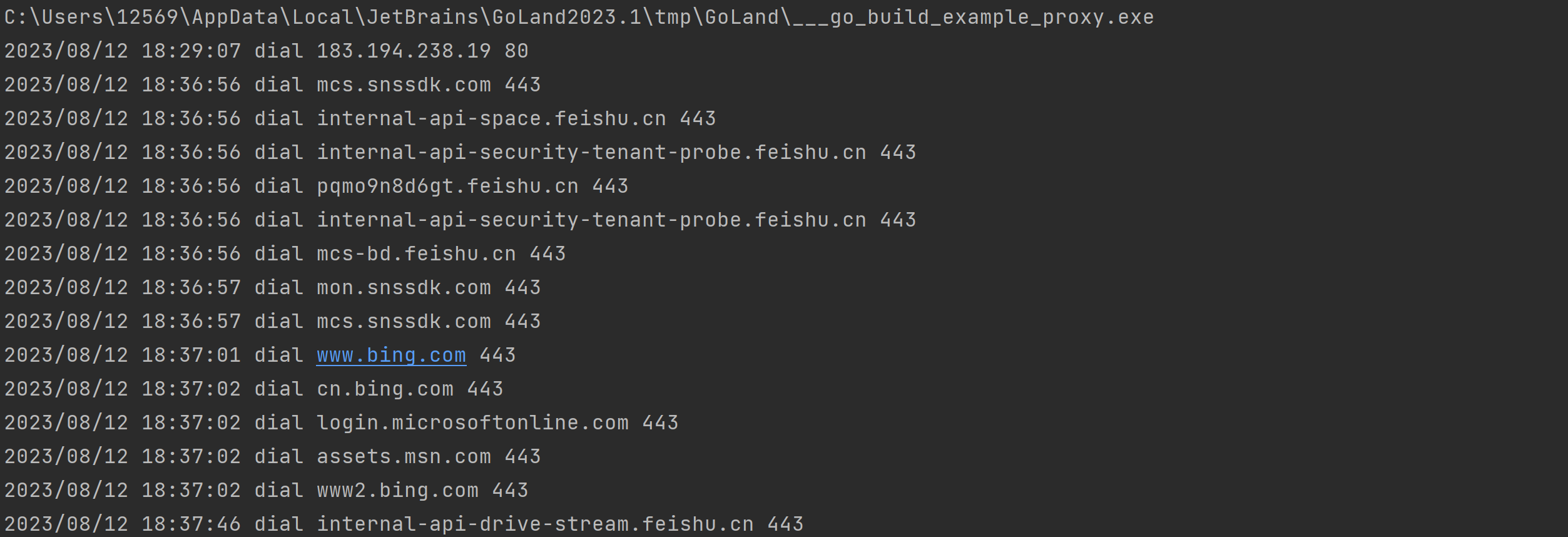

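The main reason is that inference on the GPU runs faster. It is worth comparing the result against ONNX Runtime inference.

Comparison video

2 TensorRT deployment

2.1 Environment and prerequisites

- The TensorRT environment must be configured

This requires a GPU, with the driver, CUDA, and TensorRT installed.

- The original weights must be converted to a TensorRT engine

2.2 Converting the model to a TensorRT engine

There are several ways to convert a model to a TensorRT engine. This article first converts the .pt model to an ONNX model and then converts the ONNX model with the trtexec tool. Assuming the ONNX model already exists, the conversion command is: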

trtexec --onnx=fastsam.onnx --saveEngine=fastsam.engine

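Note: run trtexec -h to see the help, which lists options such as --fp16 and --int8 for building FP16 or INT8 engines.

2.3 Core deployment code

Once the model is converted, what remains is deployment and inference. The most important and most difficult part is parsing the output data. Model loading follows a fairly standard procedure, although my version here is not necessarily the standard one.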
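The first part of model loading is simply reading the serialized engine file into memory. As a minimal sketch of that step, the helper below reads a whole binary file into a `std::vector<char>`, so the buffer is released automatically. The name `read_binary_file` is hypothetical and is not part of the article's code or of TensorRT.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: read an entire binary file (for example a serialized
// engine) into memory. Throws std::runtime_error on any I/O failure.
std::vector<char> read_binary_file(const std::string &path) {
  assert(!path.empty());
  // Open at the end so tellg() reports the file size.
  std::ifstream file(path, std::ios::binary | std::ios::ate);
  if (!file) {
    throw std::runtime_error("cannot open file: " + path);
  }
  const std::streamoff size = file.tellg();
  if (size < 0) {
    throw std::runtime_error("cannot determine size of: " + path);
  }
  file.seekg(0, std::ios::beg);
  std::vector<char> data(static_cast<std::size_t>(size));
  if (size > 0 &&
      !file.read(data.data(), static_cast<std::streamsize>(size))) {
    throw std::runtime_error("cannot read file: " + path);
  }
  return data;
}
```

With such a helper, `data.data()` and `data.size()` could be passed to `deserializeCudaEngine` in place of a manually allocated buffer.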

- Core code for loading the model and initializing

std::ifstream file(engine_file_path, std::ios::binary);

assert(file.good());

file.seekg(0, std::ios::end);

auto size = file.tellg();

std::ostringstream fmt;

file.seekg(0, std::ios::beg);

char *trtModelStream = new char[size];

assert(trtModelStream);

file.read(trtModelStream, size);

file.close();

initLibNvInferPlugins(&this->gLogger, "");

this->runtime = nvinfer1::createInferRuntime(this->gLogger);

assert(this->runtime != nullptr);

this->engine = this->runtime->deserializeCudaEngine(trtModelStream, size);

assert(this->engine != nullptr);

delete[] trtModelStream; // the deserialized engine no longer needs the host copy

this->context = this->engine->createExecutionContext();

assert(this->context != nullptr);

cudaStreamCreate(&this->stream);

const nvinfer1::Dims input_dims =

this->engine->getBindingDimensions(this->engine->getBindingIndex(INPUT));

this->in_size = get_size_by_dims(input_dims);

CHECK(cudaMalloc(&this->buffs[0], this->in_size * sizeof(float)));

this->context->setBindingDimensions(0, input_dims);

const int32_t output0_idx = this->engine->getBindingIndex(OUTPUT0);

const nvinfer1::Dims output0_dims =

this->context->getBindingDimensions(output0_idx);

this->out_sizes[output0_idx - NUM_INPUT].first =

get_size_by_dims(output0_dims);

this->out_sizes[output0_idx - NUM_INPUT].second =

DataTypeToSize(this->engine->getBindingDataType(output0_idx));

const int32_t output1_idx = this->engine->getBindingIndex(OUTPUT1);

const nvinfer1::Dims output1_dims =

this->context->getBindingDimensions(output1_idx);

this->out_sizes[output1_idx - NUM_INPUT].first =

get_size_by_dims(output1_dims);

this->out_sizes[output1_idx - NUM_INPUT].second =

DataTypeToSize(this->engine->getBindingDataType(output1_idx));

const int32_t Reshape_1252_idx = this->engine->getBindingIndex(Reshape_1252);

const nvinfer1::Dims Reshape_1252_dims =

this->context->getBindingDimensions(Reshape_1252_idx);

this->out_sizes[Reshape_1252_idx - NUM_INPUT].first =

get_size_by_dims(Reshape_1252_dims);

this->out_sizes[Reshape_1252_idx - NUM_INPUT].second =

DataTypeToSize(this->engine->getBindingDataType(Reshape_1252_idx));

const int32_t Reshape_1271_idx = this->engine->getBindingIndex(Reshape_1271);

const nvinfer1::Dims Reshape_1271_dims =

this->context->getBindingDimensions(Reshape_1271_idx);

this->out_sizes[Reshape_1271_idx - NUM_INPUT].first =

get_size_by_dims(Reshape_1271_dims);

this->out_sizes[Reshape_1271_idx - NUM_INPUT].second =

DataTypeToSize(this->engine->getBindingDataType(Reshape_1271_idx));

const int32_t Concat_1213_idx = this->engine->getBindingIndex(Concat_1213);

const nvinfer1::Dims Concat_1213_dims =

this->context->getBindingDimensions(Concat_1213_idx);

this->out_sizes[Concat_1213_idx - NUM_INPUT].first =

get_size_by_dims(Concat_1213_dims);

this->out_sizes[Concat_1213_idx - NUM_INPUT].second =

DataTypeToSize(this->engine->getBindingDataType(Concat_1213_idx));

const int32_t OUTPUT1167_idx = this->engine->getBindingIndex(OUTPUT1167);

const nvinfer1::Dims OUTPUT1167_dims =

this->context->getBindingDimensions(OUTPUT1167_idx);

this->out_sizes[OUTPUT1167_idx - NUM_INPUT].first =

get_size_by_dims(OUTPUT1167_dims);

this->out_sizes[OUTPUT1167_idx - NUM_INPUT].second =

DataTypeToSize(this->engine->getBindingDataType(OUTPUT1167_idx));

for (int i = 0; i < NUM_OUTPUT; i++) {

const int osize = this->out_sizes[i].first * out_sizes[i].second;

CHECK(cudaHostAlloc(&this->outputs[i], osize, 0));

CHECK(cudaMalloc(&this->buffs[NUM_INPUT + i], osize));

}

if (warmup) {

// Zero-filled dummy input, allocated once and released automatically

std::vector<float> tmp(this->in_size, 0.0f);

const size_t isize = this->in_size * sizeof(float);

for (int i = 0; i < 10; i++) {

CHECK(cudaMemcpyAsync(this->buffs[0], tmp.data(), isize, cudaMemcpyHostToDevice,

this->stream));

this->xiaoliziinfer();

}

}
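The initialization code relies on two helpers, `get_size_by_dims` and `DataTypeToSize`, whose bodies are not shown. A plausible reading is that the first returns the number of elements in a tensor and the second the size of one element in bytes, so that their product is the buffer size passed to `cudaMalloc`. The sketch below illustrates that arithmetic under this assumption. `SketchDims`, `SketchDataType`, `element_count`, `element_size`, and `buffer_bytes` are hypothetical stand-ins so that the example compiles without TensorRT.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-in for a TensorRT dimensions struct (assumption: up to 8 dimensions).
struct SketchDims {
  std::int32_t nbDims;
  std::int32_t d[8];
};

// Stand-in for the element types a binding may report.
enum class SketchDataType { kFLOAT, kHALF, kINT8, kINT32 };

// Number of elements = product of all dimensions.
std::size_t element_count(const SketchDims &dims) {
  assert(dims.nbDims >= 0 && dims.nbDims <= 8);
  std::size_t count = 1;
  for (std::int32_t i = 0; i < dims.nbDims; ++i) {
    assert(dims.d[i] >= 0);  // a negative value would be an unresolved dynamic dim
    count *= static_cast<std::size_t>(dims.d[i]);
  }
  return count;
}

// Size of one element in bytes.
std::size_t element_size(SketchDataType type) {
  switch (type) {
    case SketchDataType::kFLOAT: return 4;
    case SketchDataType::kHALF:  return 2;
    case SketchDataType::kINT8:  return 1;
    case SketchDataType::kINT32: return 4;
  }
  return 0;
}

// Bytes needed for a device or host buffer holding the whole tensor.
std::size_t buffer_bytes(const SketchDims &dims, SketchDataType type) {
  return element_count(dims) * element_size(type);
}
```

For example, assuming a 1 x 3 x 640 x 640 float input, the element count is 1,228,800 and the buffer needs 4,915,200 bytes.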

Once the model is loaded, data can be fed in for inference.

- Feed in data and run inference

float height = (float)image.rows;

float width = (float)image.cols;

float r = std::min(INPUT_H / height, INPUT_W / width);

int padw = (int)std::round(width * r);

int padh = (int)std::round(height * r);

if ((int)width != padw || (int)height != padh) {

cv::resize(image, tmp, cv::Size(padw, padh));

} else {

tmp = image.clone();

}

float _dw = INPUT_W - padw;

float _dh = INPUT_H - padh;

_dw /= 2.0f;

_dh /= 2.0f;

int top = int(std::round(_dh - 0.1f));

int bottom = int(std::round(_dh + 0.1f));

int left = int(std::round(_dw - 0.1f));

int right = int(std::round(_dw + 0.1f));

cv::copyMakeBorder(tmp, tmp, top, bottom, left, right, cv::BORDER_CONSTANT,

PAD_COLOR);

cv::dnn::blobFromImage(tmp, tmp, 1 / 255.f, cv::Size(), cv::Scalar(0, 0, 0),

true, false, CV_32F);

CHECK(cudaMemcpyAsync(this->buffs[0], tmp.ptr<float>(),

this->in_size * sizeof(float), cudaMemcpyHostToDevice,

this->stream));

this->context->enqueueV2(buffs.data(), this->stream, nullptr);

for (int i = 0; i < NUM_OUTPUT; i++) {

const int osize = this->out_sizes[i].first * out_sizes[i].second;

CHECK(cudaMemcpyAsync(this->outputs[i], this->buffs[NUM_INPUT + i], osize,

cudaMemcpyDeviceToHost, this->stream));

}

cudaStreamSynchronize(this->stream);
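The preprocessing above is a letterbox resize: the image is scaled by a single factor so that it fits inside the network input, and the remainder is padded evenly on both sides. The geometry can be isolated from OpenCV as in the following sketch, which mirrors the arithmetic of the code above. `LetterboxParams` and `compute_letterbox` are hypothetical names introduced only for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Result of the letterbox computation: scale factor, resized size, and borders.
struct LetterboxParams {
  float scale;
  int new_w, new_h;
  int top, bottom, left, right;
};

// Compute how an img_w x img_h image is scaled and padded to dst_w x dst_h
// while keeping its aspect ratio.
LetterboxParams compute_letterbox(int img_w, int img_h, int dst_w, int dst_h) {
  assert(img_w > 0 && img_h > 0 && dst_w > 0 && dst_h > 0);
  LetterboxParams p{};
  // Use the smaller ratio so that both sides fit into the target.
  p.scale = std::min(dst_h / static_cast<float>(img_h),
                     dst_w / static_cast<float>(img_w));
  p.new_w = static_cast<int>(std::round(img_w * p.scale));
  p.new_h = static_cast<int>(std::round(img_h * p.scale));
  // Split the leftover space in half; the +/- 0.1 offsets decide which side
  // gets the extra pixel when the leftover is odd.
  const float dw = (dst_w - p.new_w) / 2.0f;
  const float dh = (dst_h - p.new_h) / 2.0f;
  p.top = static_cast<int>(std::round(dh - 0.1f));
  p.bottom = static_cast<int>(std::round(dh + 0.1f));
  p.left = static_cast<int>(std::round(dw - 0.1f));
  p.right = static_cast<int>(std::round(dw + 0.1f));
  return p;
}
```

For a 1280 x 720 image and a 640 x 640 input, this gives a scale of 0.5, a resized image of 640 x 360, and borders of 140 pixels at the top and bottom. The same scale and offsets are what one would use to map predicted boxes back to the original image.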

After inference, the outputs can be retrieved and parsed.

- Retrieve the data

cv::Mat matData(37, OUTPUT0w, CV_32F, pdata);

matVec.push_back(matData);

float *pdata1 = nullptr;

pdata1 = static_cast<float *>(this->outputs[2]);

if (pdata1 == nullptr) {

return;

}

cv::Mat matData1(105, OUTPUT1w * OUTPUT1w, CV_32F, pdata1);

matVec.push_back(matData1);

float *pdata2 = nullptr;

pdata2 = static_cast<float *>(this->outputs[3]);

if (pdata2 == nullptr) {

return;

}

cv::Mat matData2(105, Reshape_1252w * Reshape_1252w, CV_32F, pdata2);

matVec.push_back(matData2);

float *pdata3 = nullptr;

pdata3 = static_cast<float *>(this->outputs[4]);

if (pdata3 == nullptr) {

return;

}

cv::Mat matData3(105, Reshape_1271w * Reshape_1271w, CV_32F, pdata3);

matVec.push_back(matData3);

float *pdata4 = nullptr;

pdata4 = static_cast<float *>(this->outputs[1]);

if (pdata4 == nullptr) {

return;

}

cv::Mat matData4(Concat_1213w, 32, CV_32F, pdata4);

matVec.push_back(matData4);

float *pdata5 = nullptr;

pdata5 = static_cast<float *>(this->outputs[0]);

if (pdata5 == nullptr) {

return;

}

cv::Mat matData5(32, OUTPUT1167w * OUTPUT1167w, CV_32F, pdata5);

matVec.push_back(matData5);
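The first output is wrapped as a 37 x N matrix, whereas the parsing step slices columns 0 to 4, 4 to 5, and 5 onward of each row. This suggests that the matrix is transposed in between, so that every row holds one candidate (4 box values, 1 score, and 32 mask coefficients). That step is not shown in the article, so the following is only a sketch under that assumption. The function name `transpose_row_major` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Transpose a row-major rows x cols buffer into a row-major cols x rows buffer.
// With rows = 37 and cols = N, each output row would hold one candidate.
std::vector<float> transpose_row_major(const float *src, std::size_t rows,
                                       std::size_t cols) {
  assert(src != nullptr);
  std::vector<float> dst(rows * cols);
  for (std::size_t r = 0; r < rows; ++r) {
    for (std::size_t c = 0; c < cols; ++c) {
      // Element (r, c) of the source becomes element (c, r) of the result.
      dst[c * rows + r] = src[r * cols + c];
    }
  }
  return dst;
}
```

With OpenCV, `cv::transpose` on the wrapped matrix would achieve the same layout change.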

- Parse the data

First, split the data and run NMS to obtain the box, label, and mask information.

cv::Mat box;

cv::Mat cls;

cv::Mat mask;

box = temData.colRange(0, 4).clone();

cls = temData.colRange(4, 5).clone();

mask = temData.colRange(5, temData.cols).clone();

cv::Mat j = cv::Mat::zeros(cls.size(), CV_32F);

cv::Mat dst;

cv::hconcat(box, cls, dst); // dst=[A B]

cv::hconcat(dst, j, dst);

cv::hconcat(dst, mask, dst);

std::vector<float> scores;

std::vector<cv::Rect> boxes;

pxvec = dst.ptr<float>(0);

for (int i = 0; i < dst.rows; i++) {

pxvec = dst.ptr<float>(i);

boxes.push_back(cv::Rect(pxvec[0], pxvec[1], pxvec[2], pxvec[3]));

scores.push_back(pxvec[4]);

}

std::vector<int> indices;

xiaoliziNMSBoxes(boxes, scores, conf_thres, iou_thres, indices);

cv::Mat reMat;

for (int i = 0; i < indices.size() && i < max_det; i++) {

int index = indices[i];

reMat.push_back(dst.rowRange(index, index + 1).clone());

}

box = reMat.colRange(0, 6).clone();

xiaolizixywh2xyxy(box);

mask = reMat.colRange(6, reMat.cols).clone();
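The helpers `xiaoliziNMSBoxes` and `xiaolizixywh2xyxy` are called above without their bodies. As a rough illustration of what such helpers usually do, the sketch below implements greedy non-maximum suppression with an IoU threshold and a conversion from center-based (x, y, w, h) boxes to corner format. It assumes YOLO-style center coordinates, and the names `Box`, `xywh_to_xyxy`, `iou`, and `nms` are hypothetical. The author's implementation may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Box {
  float x1, y1, x2, y2;  // top-left and bottom-right corners
};

// Convert (center x, center y, width, height) to corner format.
Box xywh_to_xyxy(float cx, float cy, float w, float h) {
  return {cx - w / 2.0f, cy - h / 2.0f, cx + w / 2.0f, cy + h / 2.0f};
}

// Intersection over union of two corner-format boxes.
float iou(const Box &a, const Box &b) {
  const float iw = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
  const float ih = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
  const float inter = iw * ih;
  const float uni = (a.x2 - a.x1) * (a.y2 - a.y1) +
                    (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
  return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: drop low scores, visit the rest from highest to lowest score,
// and keep a box only if it does not overlap an already kept box too much.
std::vector<int> nms(const std::vector<Box> &boxes,
                     const std::vector<float> &scores, float conf_thres,
                     float iou_thres) {
  assert(boxes.size() == scores.size());
  std::vector<int> order;
  for (std::size_t i = 0; i < scores.size(); ++i) {
    if (scores[i] > conf_thres) order.push_back(static_cast<int>(i));
  }
  std::stable_sort(order.begin(), order.end(),
                   [&](int a, int b) { return scores[a] > scores[b]; });
  std::vector<int> keep;
  for (int idx : order) {
    bool suppressed = false;
    for (int k : keep) {
      if (iou(boxes[idx], boxes[k]) > iou_thres) {
        suppressed = true;
        break;
      }
    }
    if (!suppressed) keep.push_back(idx);
  }
  return keep;
}
```

The returned indices play the same role as `indices` in the code above: they select the rows that survive suppression.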

Next, obtain the mask data.

for (int i = 0; i < bboxes.rows; i++) {

pxvec = bboxes.ptr<float>(i);

cv::Mat dest, mask;

cv::exp(-maskChannels[i], dest);

dest = 1.0 / (1.0 + dest);

dest = dest(roi);

cv::resize(dest, mask, frmae.size(), cv::INTER_LINEAR);

cv::Rect roi(pxvec[0], pxvec[1], pxvec[2] - pxvec[0], pxvec[3] - pxvec[1]);

cv::Mat temmask = mask(roi);

cv::Mat boxMask = cv::Mat(frmae.size(), mask.type(), cv::Scalar(0.0));

float rx = std::max(pxvec[0], 0.0f);

float ry = std::max(pxvec[1], 0.0f);

for (int y = ry, my = 0; my < temmask.rows; y++, my++) {

float *ptemmask = temmask.ptr<float>(my);

float *pboxmask = boxMask.ptr<float>(y);

for (int x = rx, mx = 0; mx < temmask.cols; x++, mx++) {

pboxmask[x] = ptemmask[mx] > 0.5 ? 1.0 : 0.0;

}

}

vremat.push_back(boxMask);

}
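The loop above applies a sigmoid to each mask channel and thresholds it at 0.5, but the step that produces `maskChannels` is not shown. Given the 32 mask coefficients per detection and the 32-row prototype matrix retrieved earlier, a reasonable guess is that each mask is a linear combination of the prototypes, as is common in YOLO-style segmentation heads. The sketch below illustrates that computation under this assumption. `decode_mask` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Assumed decoding: logit[p] = sum_k coeffs[k] * protos[k * num_pixels + p],
// followed by a sigmoid and a threshold. protos is row-major, K x num_pixels.
std::vector<unsigned char> decode_mask(const std::vector<float> &coeffs,
                                       const std::vector<float> &protos,
                                       std::size_t num_pixels,
                                       float threshold = 0.5f) {
  assert(protos.size() == coeffs.size() * num_pixels);
  std::vector<unsigned char> mask(num_pixels, 0);
  for (std::size_t p = 0; p < num_pixels; ++p) {
    float logit = 0.0f;
    for (std::size_t k = 0; k < coeffs.size(); ++k) {
      logit += coeffs[k] * protos[k * num_pixels + p];
    }
    const float prob = 1.0f / (1.0f + std::exp(-logit));  // sigmoid
    mask[p] = prob > threshold ? 1 : 0;
  }
  return mask;
}
```

In the article's setting the result would still be at prototype resolution, so it would then be cropped, resized to the frame size, and restricted to the detection box, as the loop above does.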

Finally, draw the results.

cv::Mat bbox = vremat[0];

float *pxvec = bbox.ptr<float>(0);

for (int i = 0; i < bbox.rows; i++) {

pxvec = bbox.ptr<float>(i);

cv::rectangle(image, cv::Point(pxvec[0], pxvec[1]),

cv::Point(int(pxvec[2]), int(pxvec[3])),

cv::Scalar(0, 0, 255), 2);

}

for (int i = 1; i < vremat.size(); i++) {

cv::Mat mask = vremat[i];

int indx = (rand() % (80 - 0)) + 0;

for (int y = 0; y < mask.rows; y++) {

const float *mp = mask.ptr<float>(y);

uchar *p = image.ptr<uchar>(y);

for (int x = 0; x < mask.cols; x++) {

if (mp[x] == 1.0) {

p[0] = cv::saturate_cast<uchar>(p[0] * 0.5 + COLORS[indx][0] * 0.5);

p[1] = cv::saturate_cast<uchar>(p[1] * 0.5 + COLORS[indx][1] * 0.5);

p[2] = cv::saturate_cast<uchar>(p[2] * 0.5 + COLORS[indx][2] * 0.5);

}

p += 3;

}

}

}
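The overlay step is a per-pixel alpha blend: wherever the mask is set, the pixel becomes an equal mix of the original color and the mask color. A minimal sketch of the same idea on a plain interleaved 8-bit buffer, without OpenCV, could look as follows. `blend_mask` and its parameters are hypothetical, and the rounding here may differ slightly from `cv::saturate_cast`.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Blend a solid color into an interleaved 3-channel 8-bit image wherever the
// mask is nonzero. alpha = 0.5 reproduces the 50/50 mix used above.
void blend_mask(std::vector<std::uint8_t> &image,
                const std::vector<std::uint8_t> &mask,
                const std::array<std::uint8_t, 3> &color, float alpha) {
  assert(image.size() == mask.size() * 3);
  assert(alpha >= 0.0f && alpha <= 1.0f);
  for (std::size_t i = 0; i < mask.size(); ++i) {
    if (mask[i] == 0) continue;  // leave pixels outside the mask untouched
    for (std::size_t c = 0; c < 3; ++c) {
      const float v = image[3 * i + c] * (1.0f - alpha) + color[c] * alpha;
      // Clamp to the valid 8-bit range before converting back.
      image[3 * i + c] = static_cast<std::uint8_t>(
          std::min(255.0f, std::max(0.0f, std::round(v))));
    }
  }
}
```

For instance, blending the color (200, 0, 50) at alpha 0.5 into a pixel (100, 100, 100) yields (150, 50, 75).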

3 Core code

Scan the QR code, follow the account, and reply fastsamtrt to get the core code.

晓理紫 loves learning, recording, and sharing!