Install flannel

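The master node shows NotReady because no network (CNI) plugin has been installed yet, so the pod network between the nodes and the master is not working. The most popular Kubernetes network plugins are Flannel, Calico, Canal and Weave; this guide uses flannel.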

flannel download link (Baidu Netdisk): https://pan.baidu.com/s/1fLJKhBtcONFCln6_u3xMPQ?pwd=1mf2

Extraction code: 1mf2

On all hosts:

Upload kube-flannel.yml to the master, upload flannel_v0.12.0-amd64.tar to every host, and load the image:

```
[root@k8s-master ~]# docker load < flannel_v0.12.0-amd64.tar
256a7af3acb1: Loading layer 5.844MB/5.844MB
d572e5d9d39b: Loading layer 10.37MB/10.37MB
57c10be5852f: Loading layer 2.249MB/2.249MB
7412f8eefb77: Loading layer 35.26MB/35.26MB
05116c9ff7bf: Loading layer 5.12kB/5.12kB
Loaded image: quay.io/coreos/flannel:v0.12.0-amd64
```

On all hosts, upload and extract cni-plugins-linux-amd64-v0.8.6.tgz, then copy the flannel binary into /opt/cni/bin/:

```
[root@k8s-master ~]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz
[root@k8s-master ~]# cp flannel /opt/cni/bin/
```

On the master:

```
[root@k8s-master ~]# kubectl apply -f kube-flannel.yml
podsecuritypolicy.policy/psp.flannel.unprivileged created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole
clusterrole.rbac.authorization.k8s.io/flannel created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds-amd64 created
daemonset.apps/kube-flannel-ds-arm64 created
daemonset.apps/kube-flannel-ds-arm created
daemonset.apps/kube-flannel-ds-ppc64le created
daemonset.apps/kube-flannel-ds-s390x created
```

```
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS   ROLES                  AGE   VERSION
k8s-master   Ready    control-plane,master   19h   v1.20.0
k8s-node1    Ready    <none>                 19h   v1.20.0
k8s-node2    Ready    <none>                 19h   v1.20.0
```

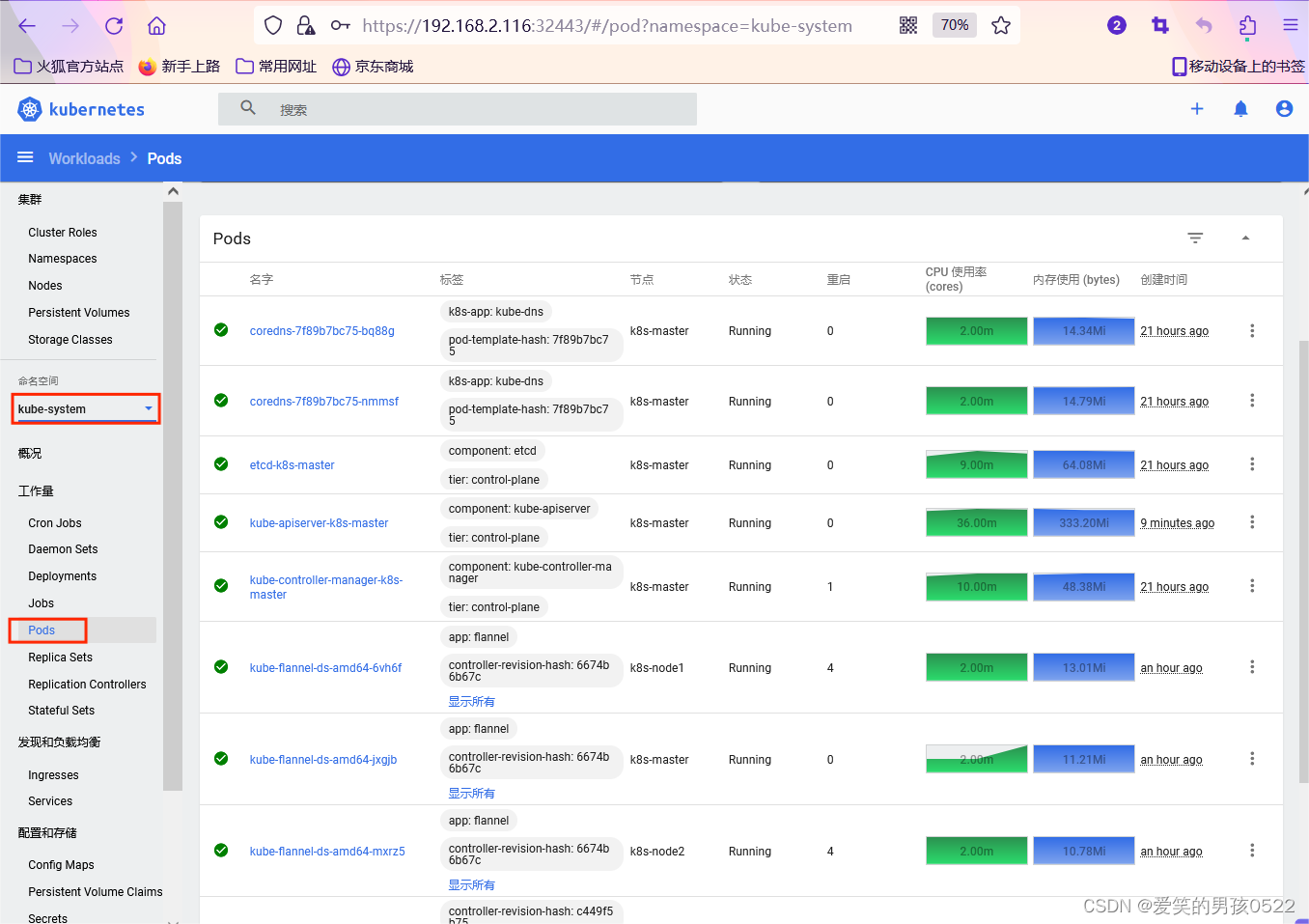
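Checking the STATUS column by eye is fine for three nodes. For a scripted check, here is a minimal Python sketch that parses the plain-text output of kubectl get nodes (assumed to have been captured as a string) and lists the nodes that are not Ready. The function names are hypothetical; for anything more than a quick check, kubectl get nodes -o json gives structured output that is more robust to parse.

```python
def parse_kubectl_table(text):
    """Split whitespace-aligned kubectl output into one dict per row, keyed by header."""
    lines = [line.split() for line in text.strip().splitlines() if line.strip()]
    header, rows = lines[0], lines[1:]
    # Assumes no column value contains spaces, which holds for `kubectl get nodes`.
    return [dict(zip(header, row)) for row in rows]


def not_ready_nodes(get_nodes_output):
    """Return names of nodes whose STATUS is not Ready (suffixes like ',SchedulingDisabled' ignored)."""
    result = []
    for row in parse_kubectl_table(get_nodes_output):
        # A cordoned node shows "Ready,SchedulingDisabled", which is still Ready.
        if row["STATUS"].split(",")[0] != "Ready":
            result.append(row["NAME"])
    return result


NODES_SAMPLE = """\
NAME         STATUS   ROLES                  AGE   VERSION
k8s-master   Ready    control-plane,master   19h   v1.20.0
k8s-node1    Ready    <none>                 19h   v1.20.0
k8s-node2    Ready    <none>                 19h   v1.20.0
"""

if __name__ == "__main__":
    print(not_ready_nodes(NODES_SAMPLE))
```

Before flannel is applied, the same function would return the names of the nodes still reporting NotReady.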

```
[root@k8s-master ~]# kubectl get pods -n kube-system
NAME                                 READY   STATUS    RESTARTS   AGE
coredns-7f89b7bc75-bq88g             1/1     Running   0          19h
coredns-7f89b7bc75-nmmsf             1/1     Running   0          19h
etcd-k8s-master                      1/1     Running   0          19h
kube-apiserver-k8s-master            1/1     Running   0          19h
kube-controller-manager-k8s-master   1/1     Running   0          19h
kube-flannel-ds-amd64-6vh6f          1/1     Running   4          36m
kube-flannel-ds-amd64-jxgjb          1/1     Running   0          36m
kube-flannel-ds-amd64-mxrz5          1/1     Running   4          36m
kube-proxy-94cl4                     1/1     Running   0          19h
kube-proxy-ncwg6                     1/1     Running   0          19h
kube-proxy-nvnr6                     1/1     Running   0          19h
kube-scheduler-k8s-master            1/1     Running   0          19h
```

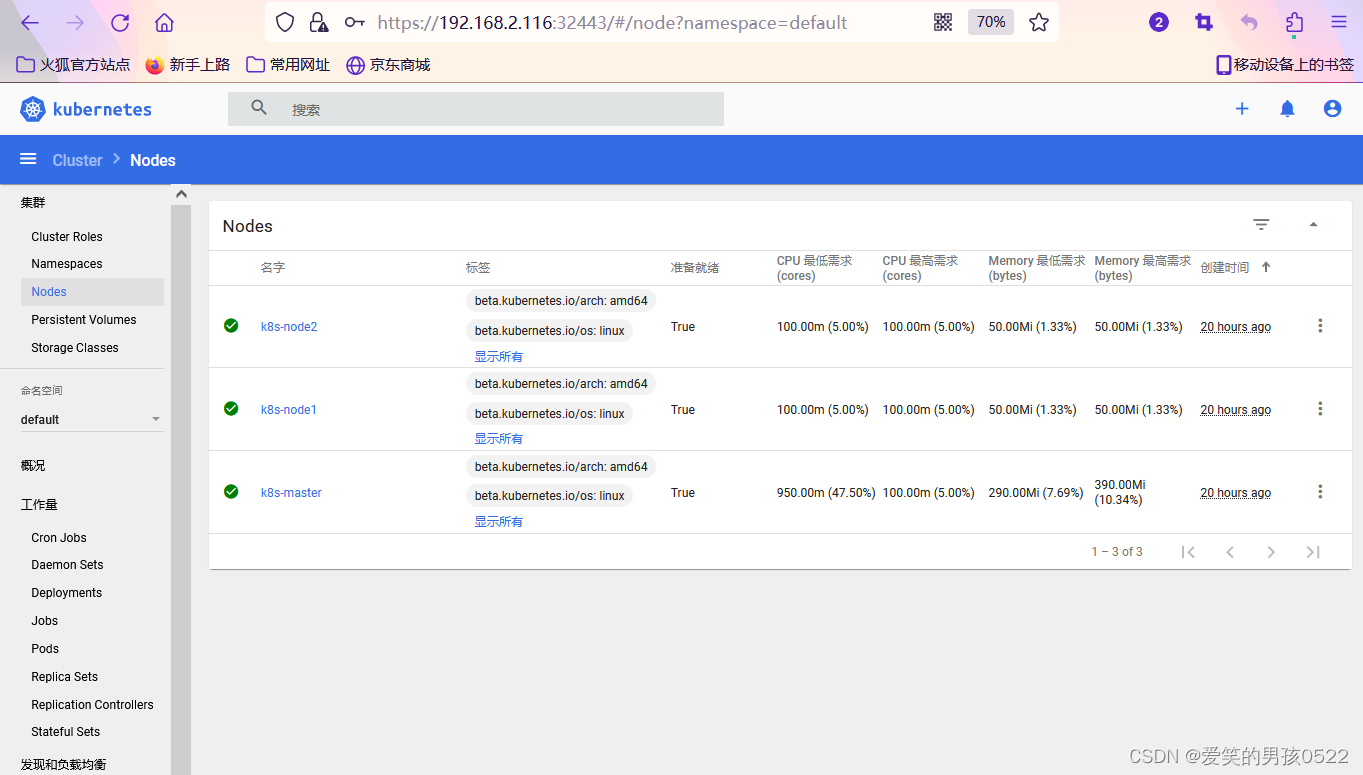

All nodes are now in the Ready state.

3. Install the Dashboard UI

3.1 Deploy the Dashboard

Dashboard GitHub repository: https://github.com/kubernetes/dashboard

The repository provides ready-made deployment manifests, so we can simply download one and deploy it:

```
[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml
--2023-08-10 15:46:20--  https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.108.133, ...
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.
Unable to establish SSL connection.
```

In this environment the download failed with an SSL error; a copy of recommended.yaml is available from the download link in section 3.2.

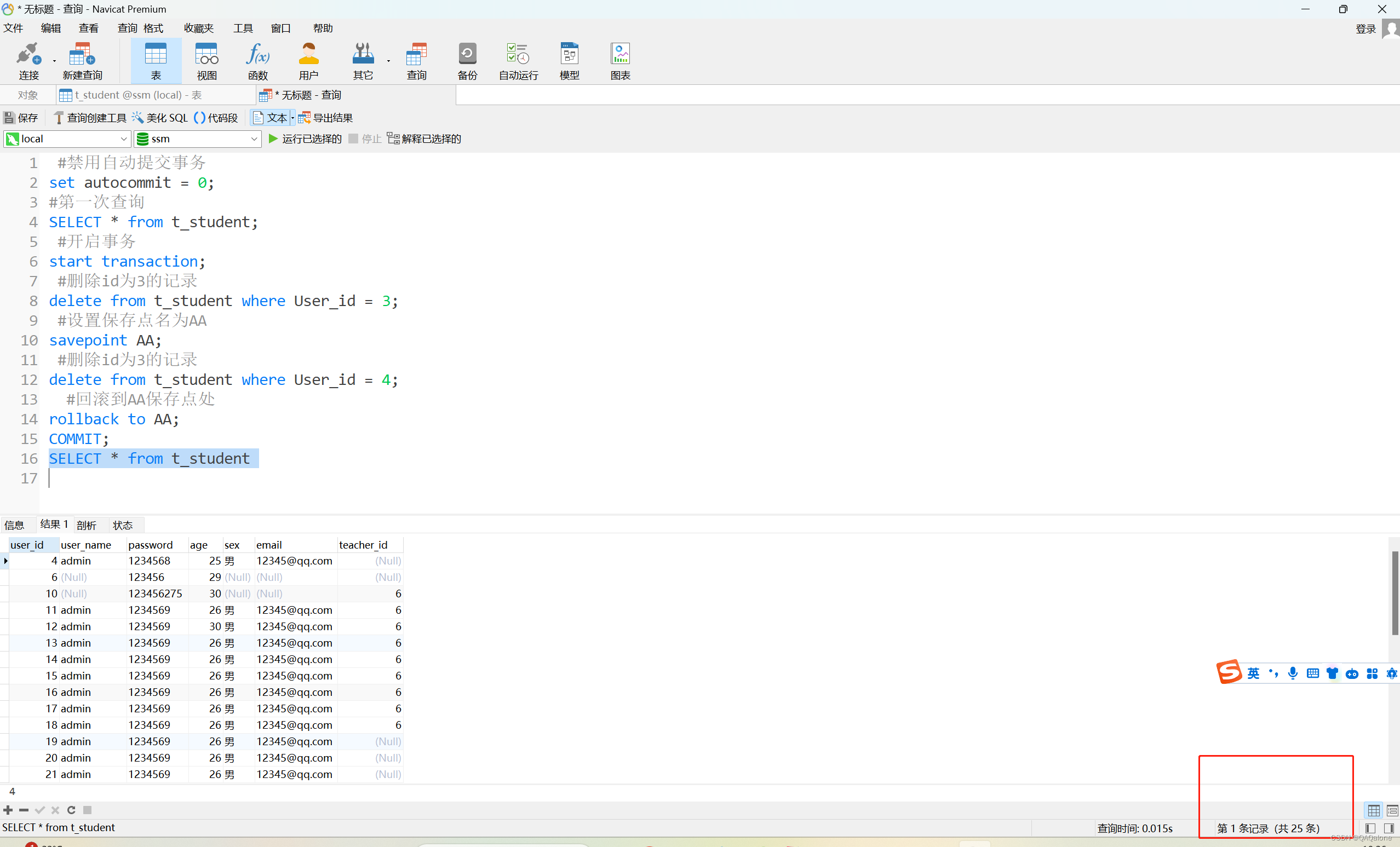

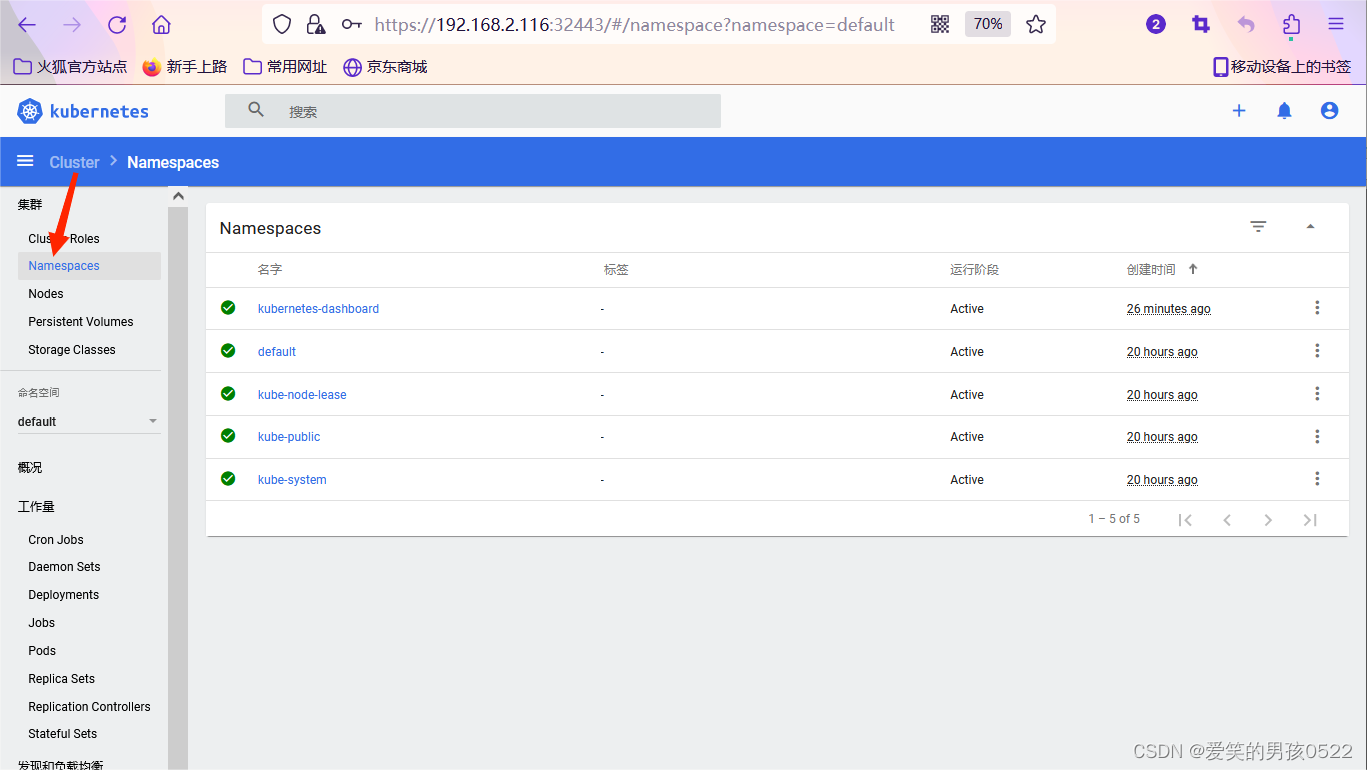

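By default, this manifest creates a separate namespace named kubernetes-dashboard and deploys the Dashboard into it. The Dashboard images come from the official Docker Hub repositories, so there is no need to change the image addresses; they can be pulled directly.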

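3.2 Expose the Dashboard port

recommended.yaml download link (Baidu Netdisk): https://pan.baidu.com/s/1OYeC84YNY2_HZ1699n8Eug?pwd=7fhp

Extraction code: 7fhp

By default, the Dashboard does not expose an access port outside the cluster. To keep things simple, expose it through a NodePort by modifying the Service definition in recommended.yaml. First, pull the two images the manifest uses: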

```
[root@k8s-master ~]# docker pull kubernetesui/dashboard:v2.0.0
v2.0.0: Pulling from kubernetesui/dashboard
2a43ce254c7f: Pull complete
Digest: sha256:06868692fb9a7f2ede1a06de1b7b32afabc40ec739c1181d83b5ed3eb147ec6e
Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0
docker.io/kubernetesui/dashboard:v2.0.0

[root@k8s-master ~]# docker pull kubernetesui/metrics-scraper:v1.0.4
v1.0.4: Pulling from kubernetesui/metrics-scraper
07008dc53a3e: Pull complete
1f8ea7f93b39: Pull complete
04d0e0aeff30: Pull complete
Digest: sha256:555981a24f184420f3be0c79d4efb6c948a85cfce84034f85a563f4151a81cbf
Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.4
docker.io/kubernetesui/metrics-scraper:v1.0.4
```

Then edit the Service section of the manifest:

```
[root@k8s-master ~]# vim recommended.yaml
```

```yaml
---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort          # expose the Service on a port of every node
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32443     # fixed node port used to reach the Dashboard
  selector:
    k8s-app: kubernetes-dashboard

---
```
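A nodePort must fall within the API server's NodePort range, which defaults to 30000-32767 (kube-apiserver's --service-node-port-range); 32443 qualifies. The small Python sketch below checks a port against that default range and builds the Dashboard URL. The helper name dashboard_url is hypothetical, and the sketch assumes the cluster has not overridden the default range. It uses https because the Service forwards to the Dashboard's HTTPS port 8443.

```python
import ipaddress

# Default value of kube-apiserver's --service-node-port-range.
# Assumption: this cluster has not overridden it.
NODE_PORT_RANGE = range(30000, 32767 + 1)


def dashboard_url(node_ip, node_port):
    """Build the Dashboard URL for a NodePort Service, validating both inputs."""
    ipaddress.ip_address(node_ip)  # raises ValueError for a malformed IP
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(
            f"nodePort {node_port} is outside the default range "
            f"{NODE_PORT_RANGE.start}-{NODE_PORT_RANGE.stop - 1}"
        )
    # targetPort 8443 serves TLS, so the browser must use https://
    return f"https://{node_ip}:{node_port}"


if __name__ == "__main__":
    print(dashboard_url("192.168.2.116", 32443))
```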

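Also make sure the image references in the manifest match the images pulled above:

```yaml
# kubernetes-dashboard container
image: kubernetesui/dashboard:v2.0.0
# dashboard-metrics-scraper container
image: kubernetesui/metrics-scraper:v1.0.4
```

3.3 Permission configuration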

Grant the Dashboard super-administrator privileges by binding its service account to the built-in cluster-admin ClusterRole:

```
[root@k8s-master ~]# vim recommended.yaml
```

```yaml
---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin     # built-in role with full access to the cluster
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---
```

```
[root@k8s-master ~]# kubectl apply -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
```

```
[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-7b59f7d4df-fp8qx   1/1     Running   0          67s
kubernetes-dashboard-74d688b6bc-qkwrn        1/1     Running   0          67s
```

```
[root@k8s-master ~]# kubectl get pods -A -o wide
NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
kube-system            coredns-7f89b7bc75-bq88g                     1/1     Running   0          20h   10.244.0.2      k8s-master   <none>           <none>
kube-system            coredns-7f89b7bc75-nmmsf                     1/1     Running   0          20h   10.244.0.3      k8s-master   <none>           <none>
kube-system            etcd-k8s-master                              1/1     Running   0          20h   192.168.2.116   k8s-master   <none>           <none>
kube-system            kube-apiserver-k8s-master                    1/1     Running   0          20h   192.168.2.116   k8s-master   <none>           <none>
kube-system            kube-controller-manager-k8s-master           1/1     Running   0          20h   192.168.2.116   k8s-master   <none>           <none>
kube-system            kube-flannel-ds-amd64-6vh6f                  1/1     Running   4          58m   192.168.2.117   k8s-node1    <none>           <none>
kube-system            kube-flannel-ds-amd64-jxgjb                  1/1     Running   0          58m   192.168.2.116   k8s-master   <none>           <none>
kube-system            kube-flannel-ds-amd64-mxrz5                  1/1     Running   4          58m   192.168.2.118   k8s-node2    <none>           <none>
kube-system            kube-proxy-94cl4                             1/1     Running   0          19h   192.168.2.117   k8s-node1    <none>           <none>
kube-system            kube-proxy-ncwg6                             1/1     Running   0          20h   192.168.2.116   k8s-master   <none>           <none>
kube-system            kube-proxy-nvnr6                             1/1     Running   0          19h   192.168.2.118   k8s-node2    <none>           <none>
kube-system            kube-scheduler-k8s-master                    1/1     Running   0          20h   192.168.2.116   k8s-master   <none>           <none>
kubernetes-dashboard   dashboard-metrics-scraper-7b59f7d4df-fp8qx   1/1     Running   0          77s   10.244.2.2      k8s-node2    <none>           <none>
kubernetes-dashboard   kubernetes-dashboard-74d688b6bc-qkwrn        1/1     Running   0          77s   10.244.1.2      k8s-node1    <none>           <none>
```
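In the output above, the Pods that show 192.168.2.x addresses (etcd, the control-plane components, kube-proxy and the flannel DaemonSet) use the host's network, while the remaining Pods received addresses from flannel's pod network, and each node's Pods share one subnet: 10.244.0.x on k8s-master, 10.244.1.x on k8s-node1, 10.244.2.x on k8s-node2. The Python sketch below makes that mapping explicit. It assumes flannel's defaults from kube-flannel.yml, a 10.244.0.0/16 cluster network with one /24 lease per node; check the net-conf.json section of your own kube-flannel.yml if you changed it. The function name node_subnet is hypothetical.

```python
import ipaddress

# Assumption: flannel defaults, i.e. a 10.244.0.0/16 cluster network
# with a /24 subnet leased to each node.
CLUSTER_NET = ipaddress.ip_network("10.244.0.0/16")
SUBNET_PREFIX = 24


def node_subnet(pod_ip):
    """Return the per-node /24 that a flannel-assigned pod IP belongs to."""
    ip = ipaddress.ip_address(pod_ip)
    if ip not in CLUSTER_NET:
        raise ValueError(f"{pod_ip} is not a pod IP in {CLUSTER_NET}")
    # strict=False zeroes the host bits, e.g. 10.244.2.2/24 -> 10.244.2.0/24
    return ipaddress.ip_network(f"{ip}/{SUBNET_PREFIX}", strict=False)


if __name__ == "__main__":
    pods = {
        "coredns-7f89b7bc75-bq88g": ("10.244.0.2", "k8s-master"),
        "kubernetes-dashboard-74d688b6bc-qkwrn": ("10.244.1.2", "k8s-node1"),
        "dashboard-metrics-scraper-7b59f7d4df-fp8qx": ("10.244.2.2", "k8s-node2"),
    }
    for name, (ip, node) in pods.items():
        print(f"{node:<11} {node_subnet(ip)}  {name}")
```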

3.4 Configure the access token

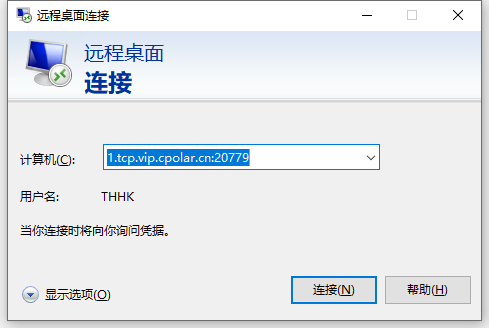

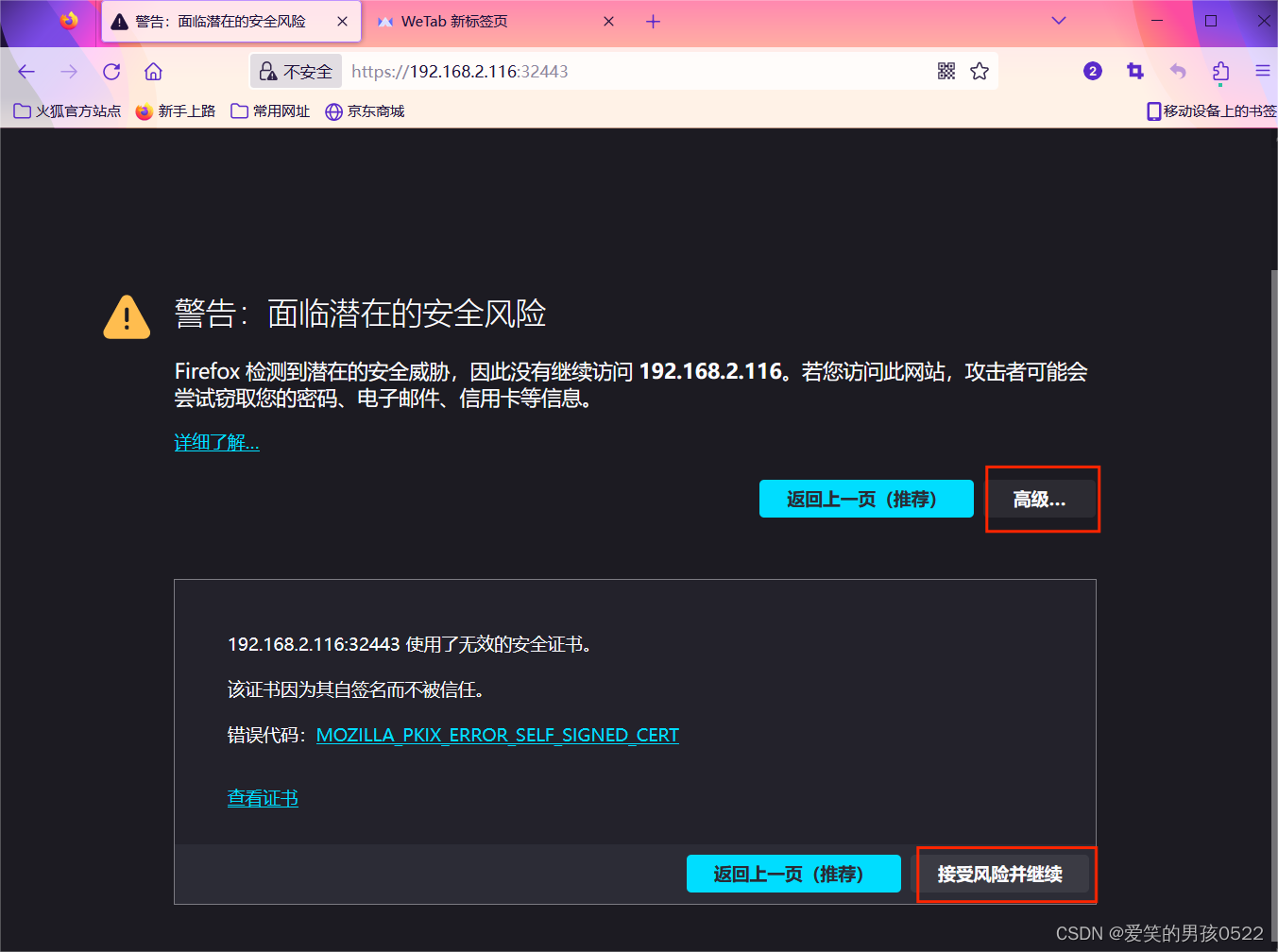

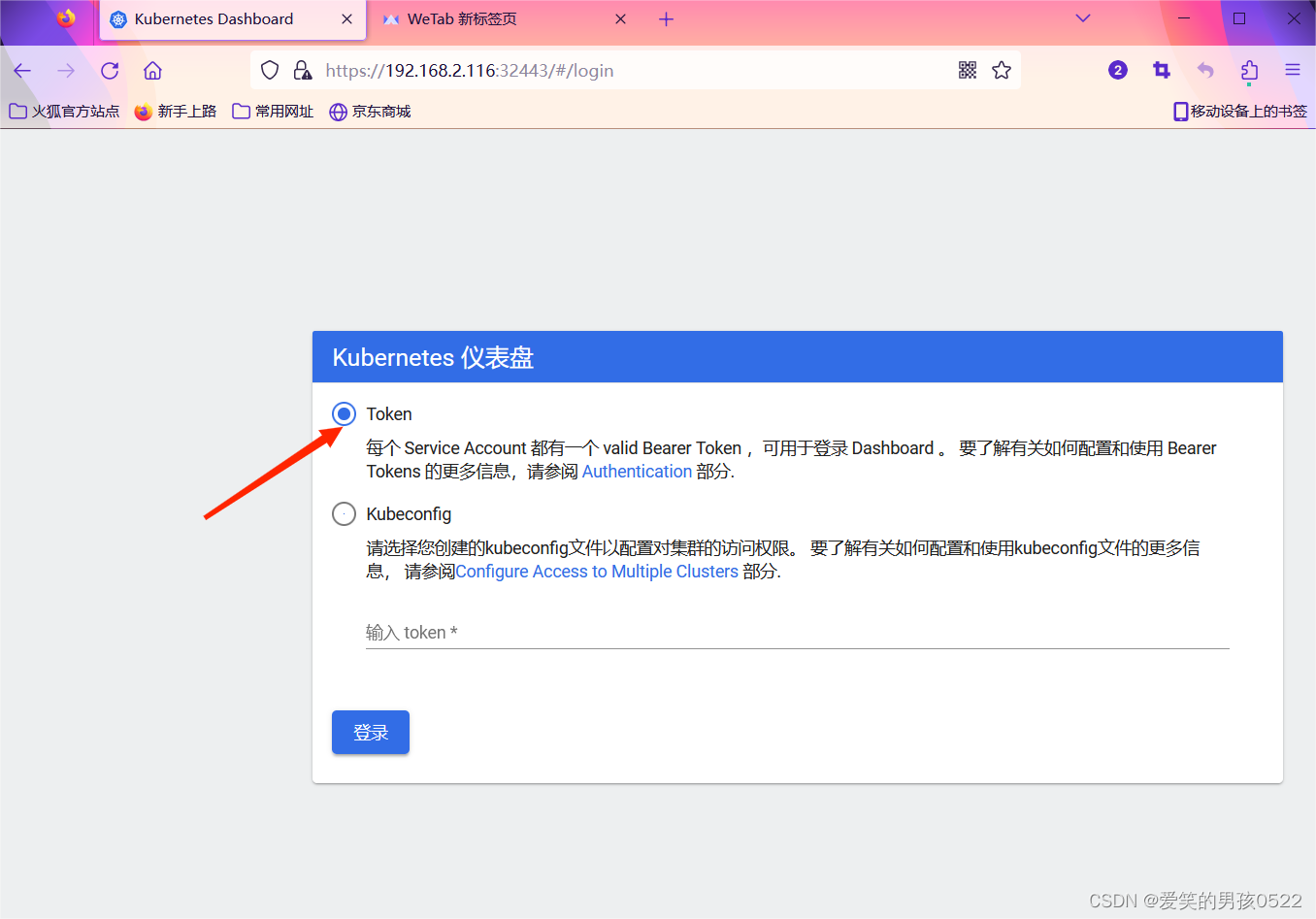

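Open https://192.168.2.116:32443 in Google Chrome to test access (for a NodePort Service, the IP address of any node works).

As the screenshots above show, the login page asks for a kubeconfig file or a token. The Dashboard installation has in fact already created a service account named kubernetes-dashboard and generated a token for it. Retrieve that service account's token with the following command: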

```
[root@k8s-master ~]# kubectl describe secret -n kubernetes-dashboard $(kubectl get secret -n kubernetes-dashboard | grep kubernetes-dashboard-token | awk '{print $1}') | grep token | awk '{print $2}'
kubernetes-dashboard-token-tnv6k
kubernetes.io/service-account-token
eyJhbGciOiJSUzI1NiIsImtpZCI6InB0RVQwT3JvN3AxTXNhZnFmRTduNng3NGgzcEgtN0dpU1Y3QkNnVmlET2cifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi10bnY2ayIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjEzOTEzOWZlLTZiNDktNDQ3ZC05MjdmLWIwOGI1MjVmZDVjMSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.PQ_0QNs19ei_WlGK73NWJwG1YamGEQz3Gez6BlwAX4uiH_ZrVVS0G4ReWRQCLfqwFQmWlaNhuFIggfJAldxDzQ9aUtKrxbGcgdl4PQ28sT-0FpZSAS8qYtZOcM_Dv96Gp39Erm-PcPMk3vZD_TBn118l9-eAkEfgohUMBVD4cUMgL1olwgZHnlFbtrvpf_gwzF-oeGuRp8VeMXOYtB7J5_D8RSaoLpGQ-rfRq9mG0TqJ5laF_8Sb6WFsnjSb8i0_WXZ1pnXEh3_UiEgd78KlSH6wNoi4jB5B1k61KZlQ0ol9owDUDAAS-qznG8ER41KvMGs6CWt5queOjyQCK3XwQA
```

Because grep token also matches the secret's Name and Type lines, the command prints three values; the last one, the long string starting with eyJ, is the token to paste into the login page.
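The token is a JSON Web Token (JWT): three base64url-encoded segments separated by dots. Decoding the middle segment shows which service account the token belongs to, which is a quick way to confirm that the right secret was copied. Below is a minimal Python sketch with hypothetical function names; it only decodes the claims and does not verify the signature.

```python
import base64
import json


def decode_jwt_payload(token):
    """Decode the claims (middle segment) of a JWT without verifying its signature."""
    _header, payload, _signature = token.strip().split(".")
    # JWT segments are base64url without '=' padding; restore it before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def service_account_of(token):
    """Return the 'sub' claim, of the form system:serviceaccount:<namespace>:<name>."""
    return decode_jwt_payload(token)["sub"]
```

For the token printed above, service_account_of should return system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard, i.e. the service account that was bound to cluster-admin in section 3.3.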

4. Install metrics-server

4.1 Pull the image on the worker nodes

Heapster has been superseded by metrics-server.

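Run the following on each worker node (k8s-node1 is shown). The image is pulled from a third-party copy on Docker Hub and then retagged with the k8s.gcr.io name that components.yaml references, so that with imagePullPolicy: IfNotPresent the kubelet uses the local image instead of pulling from k8s.gcr.io:

```
[root@k8s-node1 ~]# docker pull bluersw/metrics-server-amd64:v0.3.6
v0.3.6: Pulling from bluersw/metrics-server-amd64
e8d8785a314f: Pull complete
b2f4b24bed0d: Pull complete
Digest: sha256:c9c4e95068b51d6b33a9dccc61875df07dc650abbf4ac1a19d58b4628f89288b
Status: Downloaded newer image for bluersw/metrics-server-amd64:v0.3.6
docker.io/bluersw/metrics-server-amd64:v0.3.6

[root@k8s-node1 ~]# docker tag bluersw/metrics-server-amd64:v0.3.6 k8s.gcr.io/metrics-server-amd64:v0.3.6
```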

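4.2 Modify the kube-apiserver startup parameters

Add the following option to the kube-apiserver command. kube-apiserver runs as a static Pod, so the kubelet restarts it automatically once the manifest is saved.

```
[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml
```

```yaml
    - --enable-aggregator-routing=true   # route aggregated API requests to endpoint IPs instead of the Service cluster IP
```

4.3 Deploy on the master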

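```
[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml
--2023-08-10 16:43:31--  https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml
Resolving github.com (github.com)... 20.205.243.166
Connecting to github.com (github.com)|20.205.243.166|:443... connected.
Unable to establish SSL connection.
```

The download failed here as well, so use the copy of components.yaml from the link below and modify the installation manifest:

components.yaml download link (Baidu Netdisk): https://pan.baidu.com/s/1REsXCZIoy4rak3mFQDOgBg?pwd=m6xz

Extraction code: m6xz

In the metrics-server Deployment, set the image and container arguments as follows: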

```
[root@k8s-master ~]# vim components.yaml
```

```yaml
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: k8s.gcr.io/metrics-server-amd64:v0.3.6   # the image retagged in 4.1
        imagePullPolicy: IfNotPresent                    # use the local image when present
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          - --kubelet-preferred-address-types=InternalIP  # reach kubelets via the node InternalIP
          - --kubelet-insecure-tls                        # skip verification of kubelet serving certificates
```

```
[root@k8s-master ~]# kubectl create -f components.yaml
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
```

Wait 1 to 2 minutes, then check the result:

```
[root@k8s-master ~]# kubectl top nodes
NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master   102m         5%     1432Mi          39%
k8s-node1    34m          1%     978Mi           26%
k8s-node2    32m          1%     900Mi           24%
```
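kubectl top reports CPU in millicores (m, thousandths of a core) and memory in mebibytes (Mi). To aggregate these figures, for example total usage across the cluster, a minimal Python sketch that parses the text output could look like the following. The function names are hypothetical, and the sketch assumes the unit suffixes seen above (m for CPU; Ki, Mi or Gi for memory).

```python
def cpu_millicores(quantity):
    """'102m' -> 102; a bare number such as '2' means whole cores -> 2000."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


def memory_mebibytes(quantity):
    """'1432Mi' -> 1432.0; Ki and Gi suffixes are converted to Mi as well."""
    factors = {"Ki": 1 / 1024, "Mi": 1, "Gi": 1024}
    suffix = quantity[-2:]
    if suffix not in factors:
        raise ValueError(f"unsupported memory unit in {quantity!r}")
    return float(quantity[:-2]) * factors[suffix]


def parse_top_nodes(text):
    """Parse `kubectl top nodes` output into one dict per node."""
    rows = [line.split() for line in text.strip().splitlines() if line.strip()]
    nodes = []
    for name, cpu, cpu_pct, mem, mem_pct in rows[1:]:  # skip the header row
        nodes.append({
            "name": name,
            "cpu_m": cpu_millicores(cpu),
            "cpu_pct": int(cpu_pct.rstrip("%")),
            "mem_mi": memory_mebibytes(mem),
            "mem_pct": int(mem_pct.rstrip("%")),
        })
    return nodes


TOP_SAMPLE = """\
NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master   102m         5%     1432Mi          39%
k8s-node1    34m          1%     978Mi           26%
k8s-node2    32m          1%     900Mi           24%
"""

if __name__ == "__main__":
    nodes = parse_top_nodes(TOP_SAMPLE)
    print("total CPU:", sum(n["cpu_m"] for n in nodes), "m")
    print("total memory:", sum(n["mem_mi"] for n in nodes), "Mi")
```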

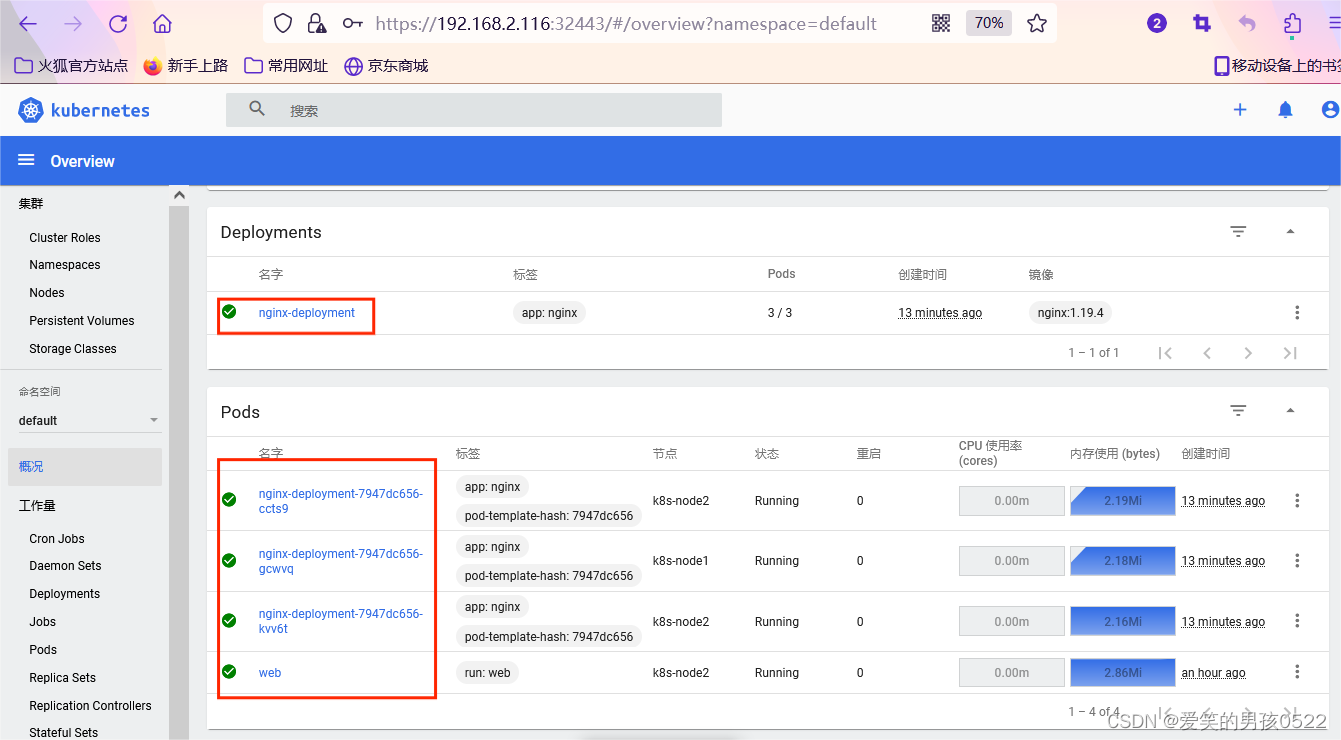

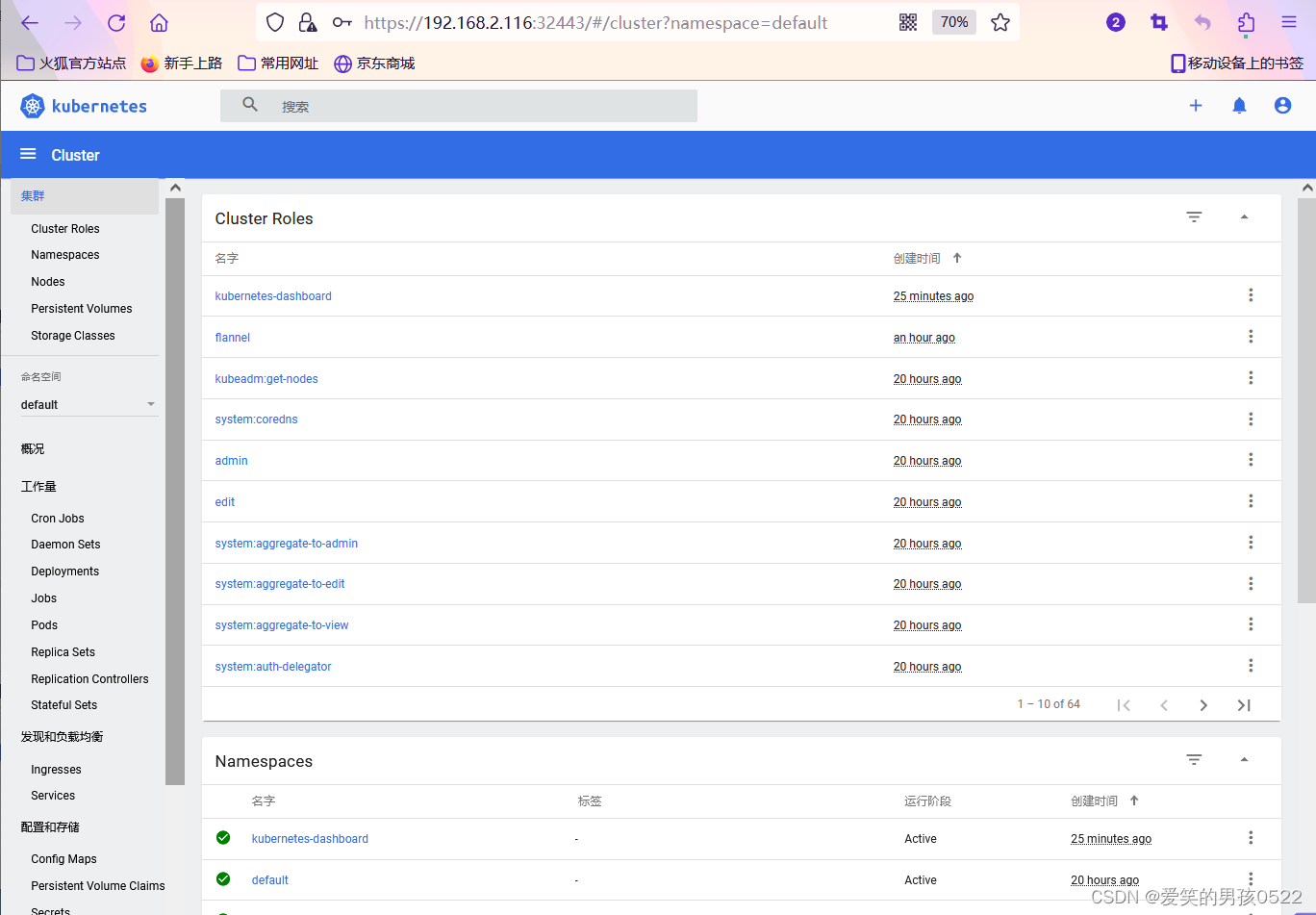

Back in the Dashboard, CPU and memory usage are now displayed.

This completes the installation of the Kubernetes cluster.

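5. Application deployment test

Next, deploy a simple Nginx web service whose container listens on port 80.

Kubernetes supports two ways of creating resources:

(1) Create the resource directly with a kubectl command, specifying its attributes as command-line arguments. This approach is simple and intuitive, and suits temporary tests or experiments.

```
[root@k8s-master ~]# kubectl run web --image=nginx --replicas=2
```

Note that since kubectl v1.18, kubectl run creates a single Pod rather than a Deployment, so --replicas=2 does not produce two replicas; the result is the single web Pod that appears in the Pod listings below.

(2) Create the resource from a configuration file with kubectl create/apply. The configuration file describes the application and the desired state it should reach.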

nginx-deployment.yaml download link (Baidu Netdisk): https://pan.baidu.com/s/1ZRnXausXPPr84k1sbvp2ww?pwd=4e8o

Extraction code: 4e8o

```
[root@k8s-master ~]# kubectl create -f nginx-deployment.yaml
```

Creating the Nginx service from a Deployment YAML

Create the Deployment:

```
[root@k8s-master ~]# cat nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19.4
        ports:
        - containerPort: 80
```
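One rule in this manifest is easy to miss: for an apps/v1 Deployment, spec.selector must match the labels in spec.template.metadata.labels, otherwise the API server rejects the object. The Python sketch below builds the same Deployment as a dict and checks that rule before printing it as JSON (kubectl create -f and kubectl apply -f accept JSON manifests as well as YAML). The function names are hypothetical, and the check covers only matchLabels, not matchExpressions.

```python
import json


def nginx_deployment(replicas=3, image="nginx:1.19.4"):
    """Return the nginx-deployment manifest above as a plain dict."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx-deployment", "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": "nginx",
                        "image": image,
                        "ports": [{"containerPort": 80}],
                    }]
                },
            },
        },
    }


def selector_matches_template(deployment):
    """True if every matchLabels pair also appears in the Pod template labels."""
    selector = deployment["spec"]["selector"].get("matchLabels", {})
    pod_labels = deployment["spec"]["template"]["metadata"].get("labels", {})
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    manifest = nginx_deployment()
    if not selector_matches_template(manifest):
        raise SystemExit("selector does not match template labels")
    print(json.dumps(manifest, indent=2))
```

If the script were saved as, say, gen_deploy.py (a hypothetical name), python3 gen_deploy.py > nginx-deployment.json followed by kubectl apply -f nginx-deployment.json would create the same Deployment as the YAML file.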

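Explanation of the Deployment configuration file:

```yaml
apiVersion: apps/v1        # apiVersion: the API version of this configuration format
kind: Deployment           # kind: the type of resource to create, here a Deployment
metadata:                  # metadata: the resource's metadata; name is required
  name: nginx-deployment
  labels:
    app: nginx
spec:                      # spec: the specification of this Deployment
  replicas: 3              # replicas: number of Pod replicas (default 1)
  selector:
    matchLabels:
      app: nginx
  template:                # template: the Pod template, the most important part of the configuration
    metadata:              # Pod metadata: define at least one label; keys and values are arbitrary,
      labels:              # but they must match spec.selector.matchLabels
        app: nginx
    spec:                  # Pod spec: properties of each container in the Pod; name and image are required
      containers:
      - name: nginx
        image: nginx:1.19.4
        ports:
        - containerPort: 80
```

Create the nginx-deployment application: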

```
[root@k8s-master ~]# kubectl create -f nginx-deployment.yaml
deployment.apps/nginx-deployment created
```

View the Deployment details:

```
[root@k8s-master ~]# kubectl get deployment
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   3/3     3            3           5m57s
```

Check the Pod status. Right after creation the Pods are still being created while the nginx image is pulled; by the time of the listing below, all three have reached Running. The web Pod is the one created earlier with kubectl run.

```
[root@k8s-master ~]# kubectl get pod
NAME                               READY   STATUS    RESTARTS   AGE
nginx-deployment-7947dc656-ccts9   1/1     Running   0          6m32s
nginx-deployment-7947dc656-gcwvq   1/1     Running   0          6m32s
nginx-deployment-7947dc656-kvv6t   1/1     Running   0          6m32s
web                                1/1     Running   0          73m
```

Show the detailed status of a specific Pod:

```
[root@k8s-master ~]# kubectl describe pod nginx-deployment-7947dc656-ccts9
Name:         nginx-deployment-7947dc656-ccts9
Namespace:    default
Priority:     0
Node:         k8s-node2/192.168.2.118
Start Time:   Thu, 10 Aug 2023 18:02:46 +0800
Labels:       app=nginx
              pod-template-hash=7947dc656
Annotations:  <none>
Status:       Running
IP:           10.244.2.4
IPs:
  IP:           10.244.2.4
Controlled By:  ReplicaSet/nginx-deployment-7947dc656
Containers:
  nginx:
    Container ID:   docker://7043ecf3c52d43fe4bc11f27b0c614aadd10534c565bd320be87fd8feac90647
    Image:          nginx:1.19.4
    Image ID:       docker-pullable://nginx@sha256:c3a1592d2b6d275bef4087573355827b200b00ffc2d9849890a4f3aa2128c4ae
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Thu, 10 Aug 2023 18:07:49 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-s7c9z (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-s7c9z:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-s7c9z
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  7m43s  default-scheduler  Successfully assigned default/nginx-deployment-7947dc656-ccts9 to k8s-node2
  Normal  Pulling    7m42s  kubelet            Pulling image "nginx:1.19.4"
  Normal  Pulled     2m41s  kubelet            Successfully pulled image "nginx:1.19.4" in 5m1.644567511s
  Normal  Created    2m41s  kubelet            Created container nginx
  Normal  Started    2m40s  kubelet            Started container nginx
```

```
[root@k8s-master ~]# kubectl get pod -o wide    # created successfully; status is Running
NAME                               READY   STATUS    RESTARTS   AGE    IP           NODE        NOMINATED NODE   READINESS GATES
nginx-deployment-7947dc656-ccts9   1/1     Running   0          9m3s   10.244.2.4   k8s-node2   <none>           <none>
nginx-deployment-7947dc656-gcwvq   1/1     Running   0          9m3s   10.244.1.4   k8s-node1   <none>           <none>
nginx-deployment-7947dc656-kvv6t   1/1     Running   0          9m3s   10.244.2.5   k8s-node2   <none>           <none>
web                                1/1     Running   0          75m    10.244.2.3   k8s-node2   <none>           <none>
```

To delete these resources, run kubectl delete deployment nginx-deployment or kubectl delete -f nginx-deployment.yaml.

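Test access to a Pod

10.244.2.3 is the IP address of the web Pod created with kubectl run. That Pod uses the nginx image without a tag, which is why the response below reports Server: nginx/1.21.5 rather than 1.19.4. Pod IPs are reachable from the cluster nodes through the flannel network: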

```
[root@k8s-master ~]# curl --head http://10.244.2.3
HTTP/1.1 200 OK
Server: nginx/1.21.5
Date: Thu, 10 Aug 2023 10:12:48 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 28 Dec 2021 15:28:38 GMT
Connection: keep-alive
ETag: "61cb2d26-267"
Accept-Ranges: bytes

[root@k8s-master ~]# elinks --dump http://10.244.2.3
   Welcome to nginx!

   If you see this page, the nginx web server is successfully installed and
   working. Further configuration is required.

   For online documentation and support please refer to [1]nginx.org.
   Commercial support is available at [2]nginx.com.

   Thank you for using nginx.

References

   Visible links
   1. http://nginx.org/
   2. http://nginx.com/
```
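The curl --head check can also be scripted, for example to probe every Pod IP in one go. Below is a minimal sketch using Python's standard http.client; head_status and the script name head_check.py are hypothetical, and like curl above, the script must run on a cluster node (or anywhere else that can route to the 10.244.0.0/16 pod network).

```python
import http.client


def head_status(host, port=80, path="/", timeout=5.0):
    """Send an HTTP HEAD request and return (status code, Server header)."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Server")
    finally:
        conn.close()


if __name__ == "__main__":
    import sys

    # Usage: python3 head_check.py 10.244.2.3 10.244.1.4 ...
    for ip in sys.argv[1:]:
        try:
            print(ip, *head_status(ip))
        except OSError as exc:  # connection refused, timeout, no route, ...
            print(ip, "unreachable:", exc)
```

Against 10.244.2.3 this would be expected to report status 200 and the same Server header that curl showed; an unreachable IP usually points to a flannel or routing problem on that node.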