Contents

- Development of Edge Detection

- Multi-scale learning structure

- Loss Function

- Network Architecture

📄:Holistically-Nested Edge Detection

🔗:https://openaccess.thecvf.com/content_iccv_2015/html/Xie_Holistically-Nested_Edge_Detection_ICCV_2015_paper.html

💻:https://github.com/sniklaus/pytorch-hed/blob/master/run.py

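Holistically-Nested Edge Detection (HED) is an ICCV 2015 paper that addresses two problems: (1) image-to-image edge detection, and (2) learning from fused multi-scale features. It reports an F-score of 0.782 on BSD500 and 0.746 on NYU Depth, and predicting one image takes about 0.4 s.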

Development of Edge Detection

Edge detection methods fall roughly into the following groups:

- Early pioneering methods: Sobel detector, zero-crossing, Canny detector

- Information-theoretic methods: Statistical Edges, Pb, gPb

- Learning-based methods: BEL, Multi-scale, Sketch Tokens, Structured Edges

- Deep-learning-based methods: DeepContour, DeepEdge, CSCNN

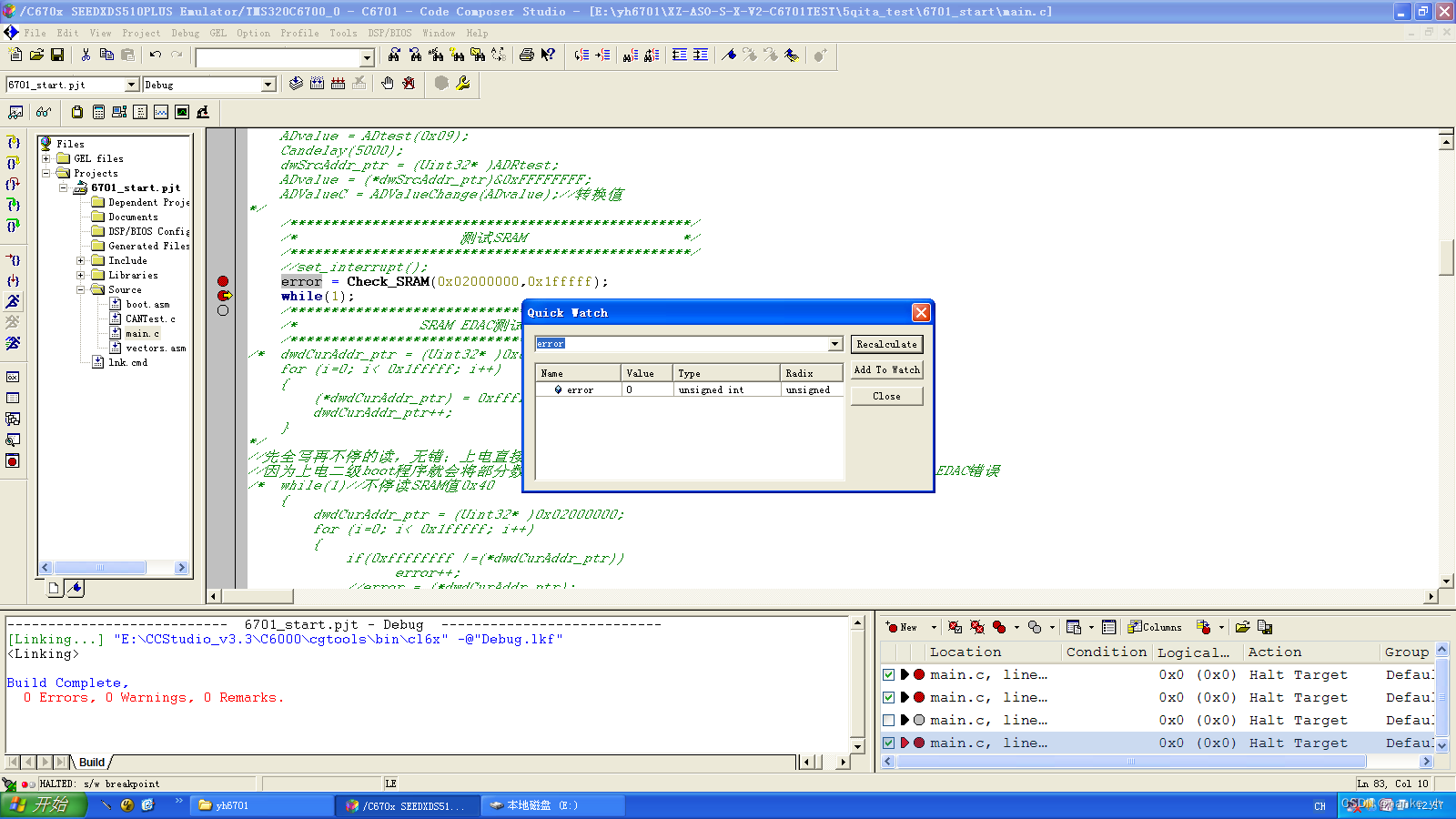

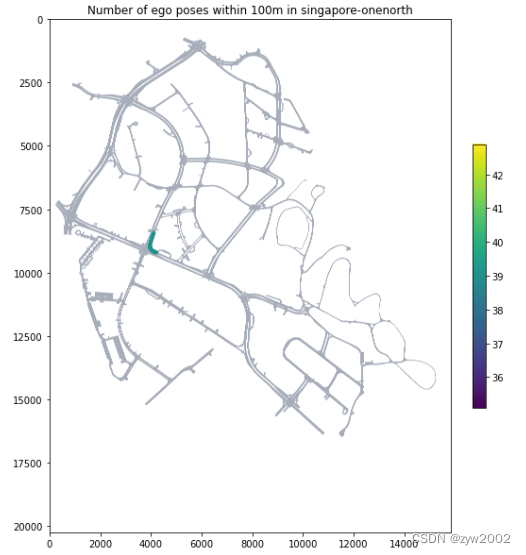

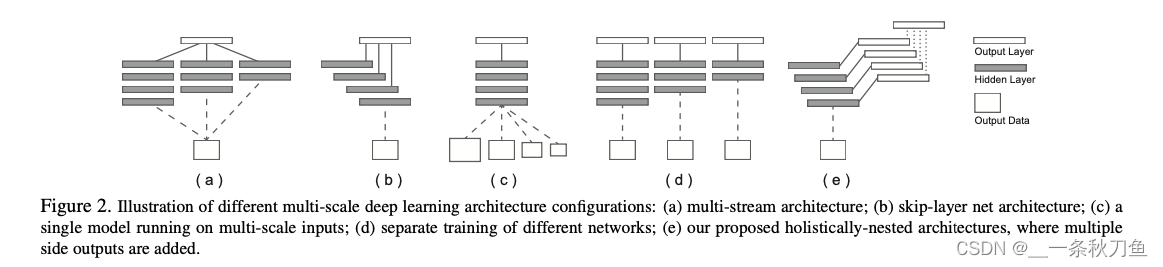

Multi-scale learning structure

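Here, (e) is the holistically-nested network proposed in this paper. In contrast to (e), the four earlier designs (a)-(d) are all redundant in representation and computation.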

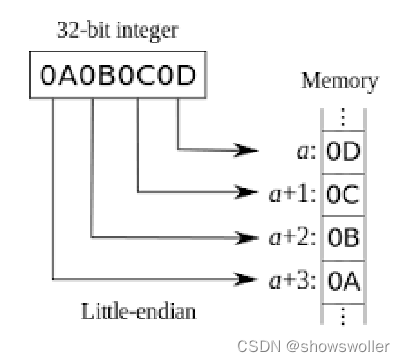

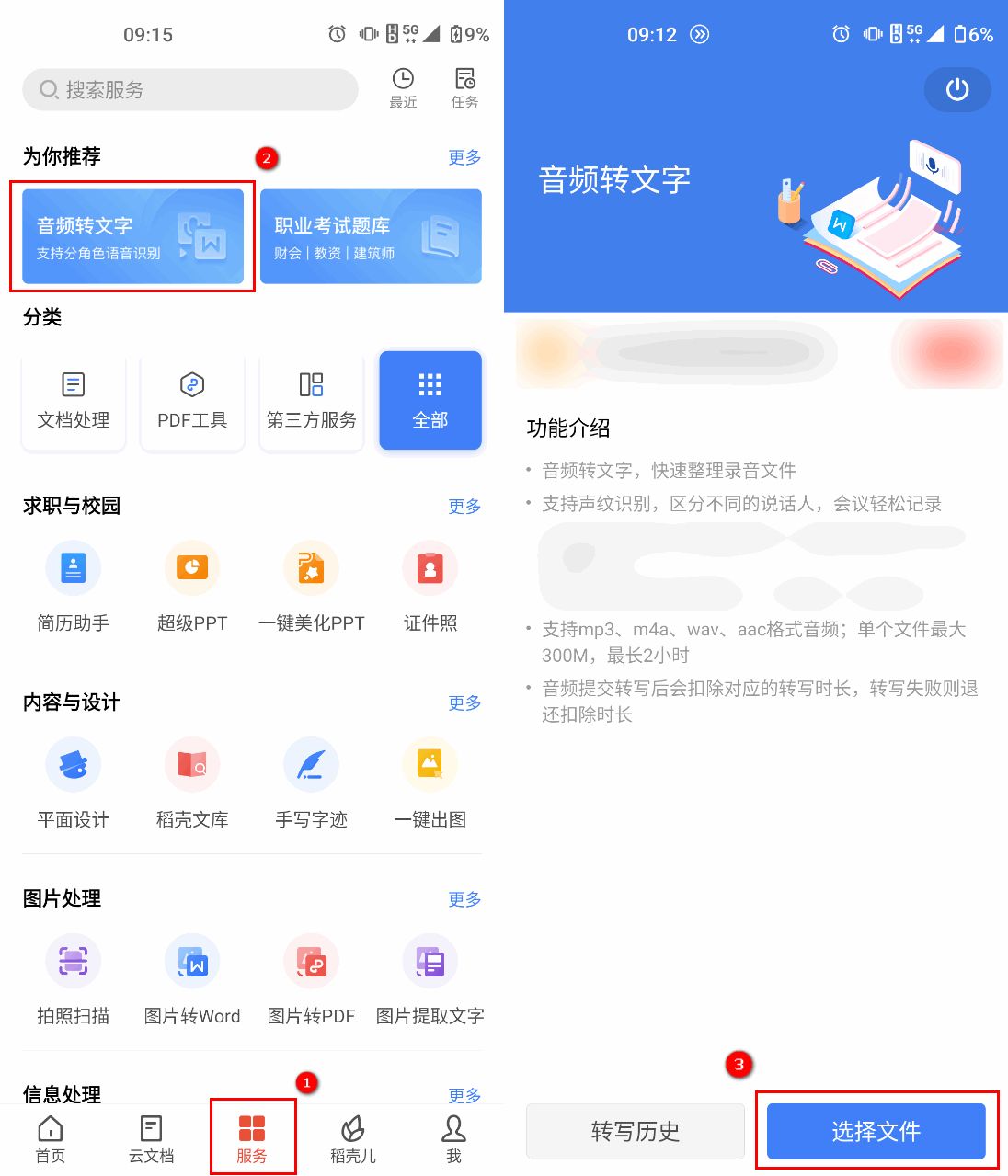

Loss Function

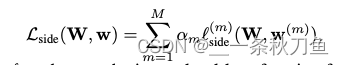

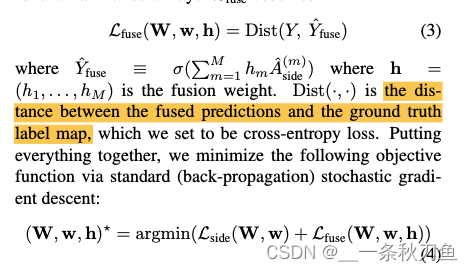

The loss function has two parts: one for the side outputs and one for the fusion of the multi-layer features.

- Side

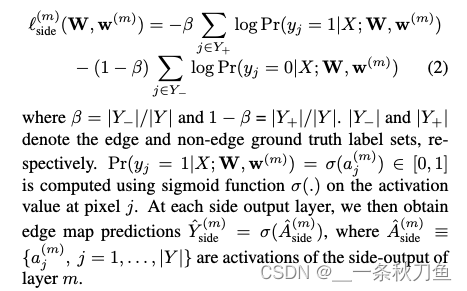

Here, $M$ is the number of side-output layers. Edge detection labels are heavily imbalanced, since the vast majority of pixels are non-edge, so a class-balanced cross-entropy loss function is used.
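A minimal sketch of this loss for a single side output, written from the paper's definition rather than taken from the linked code. In the paper's notation, with $Y_+$ and $Y_-$ the edge and non-edge pixel sets, the per-layer loss is

$$\ell_{side}^{(m)}(W, w^{(m)}) = -\beta \sum_{j \in Y_+} \log \Pr(y_j = 1 \mid X; W, w^{(m)}) - (1-\beta) \sum_{j \in Y_-} \log \Pr(y_j = 0 \mid X; W, w^{(m)}), \qquad \beta = |Y_-| / |Y|.$$

The function name `class_balanced_bce`, the flattened-list inputs, and the `eps` guard are assumptions made for illustration.

```python
import math

def class_balanced_bce(probs, labels, eps=1e-12):
    """Class-balanced cross-entropy for one side output.

    probs  : flat sequence of predicted edge probabilities in (0, 1)
    labels : flat sequence of ground-truth labels, 1 = edge, 0 = non-edge
    eps    : small constant guarding log(0) (an assumption, not from the paper)
    """
    n_total = len(labels)
    n_edge = sum(labels)
    # beta is the fraction of non-edge pixels, |Y-| / |Y|
    beta = (n_total - n_edge) / n_total
    loss = 0.0
    for p, y in zip(probs, labels):
        if y == 1:
            loss -= beta * math.log(p + eps)                # edge term, weight beta
        else:
            loss -= (1.0 - beta) * math.log(1.0 - p + eps)  # non-edge term, weight 1 - beta
    return loss, beta

# Toy example: 1 edge pixel among 10, all predictions at 0.5
labels = [1] + [0] * 9
probs = [0.5] * 10
loss, beta = class_balanced_bce(probs, labels)
```

In the toy example the single edge pixel and the nine non-edge pixels contribute the same total to the loss, which is the purpose of the weighting.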

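Because non-edge pixels dominate, $\beta$ (the fraction of non-edge pixels) is large and $1-\beta$ is small, so the larger weight goes to the edge class.

- Fuse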

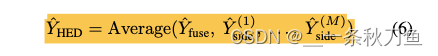

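The final prediction is the average of the side outputs and the fusion-layer output: $\hat{Y}_{HED} = \mathrm{Average}(\hat{Y}_{fuse}, \hat{Y}_{side}^{(1)}, \dots, \hat{Y}_{side}^{(M)})$.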
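The fusion and averaging steps can be sketched as follows. This is a dependency-free illustration under stated assumptions: the side-output activations are taken to be already upsampled to the input resolution and flattened into lists, the fused map is a sigmoid of their weighted sum as described in the paper, and `hed_predict` and its arguments are hypothetical names rather than anything from the linked repository.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hed_predict(side_logits, fusion_weights):
    """Average the side-output probability maps with the fused map.

    side_logits    : M flattened activation maps of equal length
    fusion_weights : M scalars h_m, one per side output
    """
    n_pixels = len(side_logits[0])
    # Each side output becomes a probability map on its own.
    side_probs = [[sigmoid(a) for a in logits] for logits in side_logits]
    # The fusion layer takes a weighted sum of the activations, then a sigmoid.
    fused = [
        sigmoid(sum(h * logits[j] for h, logits in zip(fusion_weights, side_logits)))
        for j in range(n_pixels)
    ]
    maps = side_probs + [fused]
    # Final prediction: per-pixel mean over the M side maps and the fused map.
    return [sum(m[j] for m in maps) / len(maps) for j in range(n_pixels)]

# Five side outputs over two pixels, fusion weights at 1/5 each
side_logits = [[0.0, 2.0] for _ in range(5)]
pred = hed_predict(side_logits, [1 / 5] * 5)
```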

Network Architecture

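Compared with VGG, the fifth pooling layer and the fully connected layers after it are removed, for two reasons:

- The prediction maps are obtained by interpolation; a side output taken after the fifth pooling layer would be too fuzzy to utilize once interpolated.

- The fully connected layers consume a large amount of computation.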
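To put numbers on the interpolation argument, the sketch below computes the stride and receptive field at the last convolution of each stage. It assumes the standard VGG-16 layout (stages of 2, 2, 3, 3, 3 convolutions with 3x3 kernels, each stage followed by 2x2 max-pooling with stride 2); `side_output_geometry` is a hypothetical helper name.

```python
# Number of 3x3 convolutions in each VGG-16 stage (assumed layout).
VGG16_STAGES = [2, 2, 3, 3, 3]

def side_output_geometry(stages=VGG16_STAGES):
    """Return ([(stride, receptive_field), ...] per stage, (stride, rf) after the last pool)."""
    stride, rf = 1, 1
    geometry = []
    for n_convs in stages:
        for _ in range(n_convs):
            rf += (3 - 1) * stride   # a 3x3 conv widens the field by 2 * current stride
        geometry.append((stride, rf))
        rf += (2 - 1) * stride       # 2x2 pooling widens the field by 1 * current stride
        stride *= 2                  # and halves the spatial resolution
    return geometry, (stride, rf)

geometry, after_pool5 = side_output_geometry()
for stage, (stride, rf) in enumerate(geometry, start=1):
    print(f"stage {stage}: stride {stride}, receptive field {rf}")
print(f"after pool5: stride {after_pool5[0]}, receptive field {after_pool5[1]}")
```

Under these assumptions the five side outputs sit at strides 1, 2, 4, 8 and 16, while anything taken after the fifth pooling would sit at stride 32 and need 32x interpolation to reach the input resolution.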

The paper also compares "fusion-output without deep supervision" and "fusion-output with deep supervision": the former trains with $L_{fuse}$ only, the latter with both $L_{side}$ and $L_{fuse}$. Without deep supervision, many critical edges disappear from the side outputs, and the network as a whole tends to output only the edges of large structures.

Some hyper-parameters:
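The two settings differ only in which terms enter the training objective. A minimal sketch, assuming the per-layer side losses and the fusion loss are already available as scalars; `hed_objective` and its argument names are hypothetical.

```python
def hed_objective(side_losses, fuse_loss, side_weights, deep_supervision=True):
    """Combine the loss terms for one training step.

    side_losses  : per-layer side-output losses, one scalar per side output
    fuse_loss    : loss of the fused prediction
    side_weights : per-layer weights for the side losses
    """
    if not deep_supervision:
        # "Without deep supervision": only the fused output is supervised.
        return fuse_loss
    # "With deep supervision": weighted side losses plus the fusion loss.
    weighted_side = sum(a * l for a, l in zip(side_weights, side_losses))
    return weighted_side + fuse_loss

with_ds = hed_objective([1.0, 2.0], 0.5, [1.0, 1.0])
without_ds = hed_objective([1.0, 2.0], 0.5, [1.0, 1.0], deep_supervision=False)
```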

- mini-batch size 10

- learning rate 1e-6

- momentum 0.9

- loss-weight for each side-output layer 0.1

- initialization of fusion layer weights 1/5

- weight decay 0.0002

- number of training iterations 10,000, divide learning rate by 10 after 5,000
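The learning-rate schedule in the last item can be sketched as a step function. This assumes iterations are counted from 0 and that the reduced rate applies from iteration 5,000 onward; the constant and function names are illustrative.

```python
BASE_LR = 1e-6      # learning rate from the list above
STEP_ITER = 5_000   # iteration at which the rate is divided by 10
MAX_ITER = 10_000   # total number of training iterations

def learning_rate(iteration):
    """Step schedule: base rate before STEP_ITER, base rate / 10 afterwards."""
    return BASE_LR if iteration < STEP_ITER else BASE_LR / 10

# Rates at the start, just before the step, at the step, and at the end
schedule = [learning_rate(i) for i in (0, STEP_ITER - 1, STEP_ITER, MAX_ITER - 1)]
```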