YOLACT

1. Abstract

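Principle:

- Generate a set of prototype masks.

- Their number (nm) is configurable: it equals the number of kernels in the last convolution of the protonet.

- Predict a set of mask coefficients for each instance; combining the prototypes with them yields that instance's mask (the output mask).

- The mask coefficients are output by the head; for the n detections kept after NMS they form an (n, nm) matrix.

- prototype masks @ coefficients → instance masks → crop + upsample → output masks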
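The combination step in the list above can be written as one formula. Notation follows the YOLACT paper: P is the h×w×k prototype tensor (k plays the role of nm above), treated as an (h·w)×k matrix; C is the n×k coefficient matrix for the n detections kept after NMS; σ is the element-wise sigmoid. Each detection gets one h×w mask:

$$
M = \sigma\!\left(P\,C^{\top}\right), \qquad
M_{ijn} = \sigma\!\left(\sum_{t=1}^{k} P_{ijt}\, C_{nt}\right), \qquad
M \in \mathbb{R}^{h \times w \times n}
$$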

Advantages:

1. It does not rely on (re)pooling, so it can produce high-quality masks (no quantization; discussed later).

2. It is fast and stable.

2. Introduction

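Problems:

- Speed: two-stage methods must perform localization and classification before producing masks, which is too slow; the goal is a real-time segmentation network built on SSD- and YOLO-style detectors.

- P1 Why two-stage segmentation is slow (Appendix)

- One-stage methods such as FCIS already existed at the time, but they still could not run in real time.

- P2 FCIS (Appendix)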

Idea:

1. Prototype masks + mask coefficients

Effects:

- the network learns how to localize instance masks on its own

  → The network localizes instance masks by itself.

- Then producing a full-image instance segmentation from these two components is simple: for each instance, linearly combine the prototypes using the corresponding predicted coefficients and then crop with a predicted bounding box.

  → For each instance, linearly combining the prototype masks with its mask coefficients and cropping to the predicted box is enough to produce the full-image mask.

- the number of prototype masks is independent of the number of categories

  → The number of prototypes does not depend on the number of classes.

-

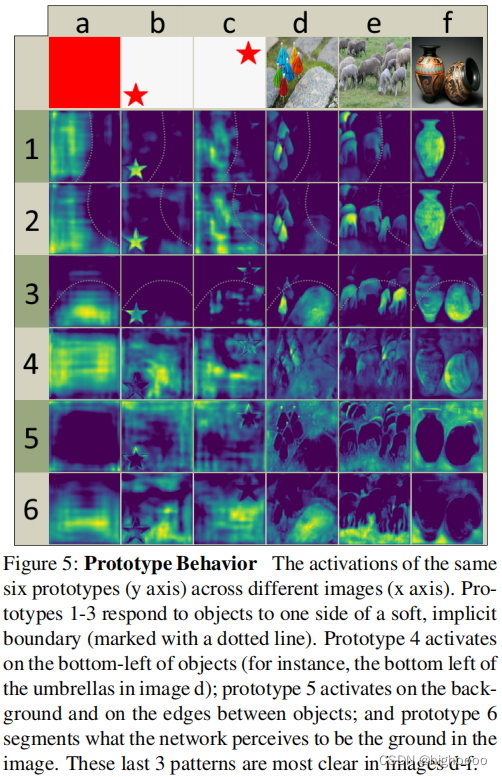

YOLACT learns a distributed representation in which each instance is segmented with a

combination of prototypes that are shared across categories. This distributed representation leads to interesting emergent behavior in the prototype space: some prototypes spatially partition the image, some localize instances, some detect instance contours, some encode position-sensitive directional maps (similar to those obtained by hard-coding a position sensitive module in FCIS [24]), and most do a combination of these tasks (see Figure 5).

原型掩码存在的意义:一部分学习空间特征,一部分学习位置特征,一部分学习轮廓特征,一部分学习编码特征

- in fact, the entire mask branch takes only ∼5 ms to evaluate.

  → The whole mask branch (prototype masks @ coefficients) takes only about 5 ms.

- since the masks use the full extent of the image space without any loss of quality from repooling, our masks for large objects are significantly higher quality than those of other methods

  → No repooling, so none of the feature loss it causes (interpolation/quantization).

- In contrast, our assembly step is much more lightweight (only a linear combination) and can be implemented as one GPU-accelerated matrix-matrix multiplication, making our approach very fast.

  → Assembly is a single matrix multiplication, so it is easy to accelerate on a GPU.

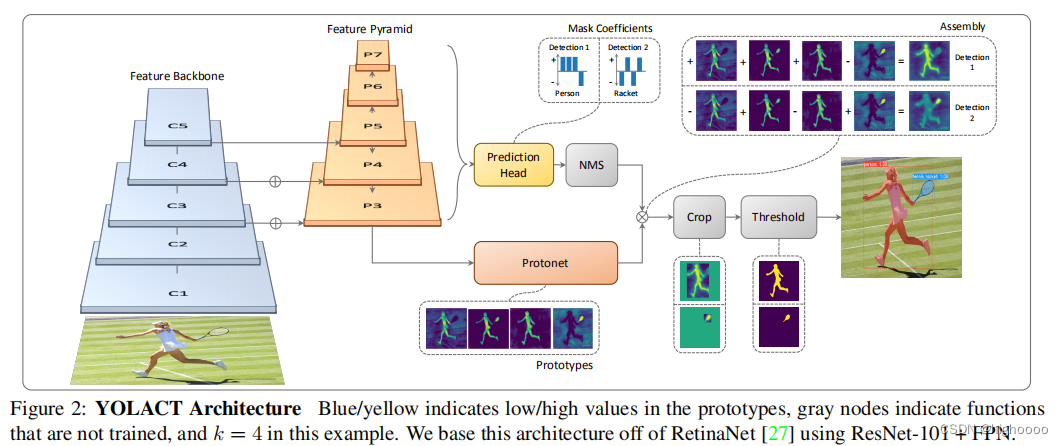

3. YOLACT

-

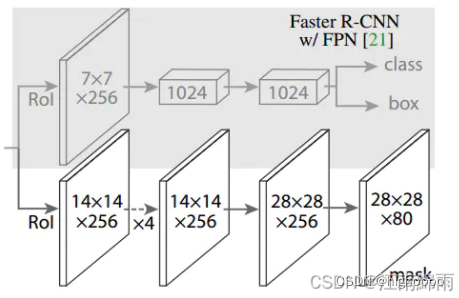

模型:两个并行分支:1. 由FCN生成原型掩码 2.目标检测头中加入掩码系数分支

-

后处理:对于在NMS中存活下来的每个实例,线性组合这两个分支结构来为该实例构造一个掩码。(每个实例都是唯一的,并不依赖于任何输入的类别/位置等信息,可以理解为每个卷积核都是为了提取自己想要的特征,比如提取竖线和横线的卷积核更新参数过程,为了达到神经网络替代人工设计编码器的效果)

-

模型这样设计的目的:在由二阶段变为一阶段的过程中,仍然能保证空间一致性

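Why output the class and box together with the mask coefficients, rather than the coefficients alone?

When only the mask coefficients are output, the model is thought to lose the instances' spatial information. fc-mask, the variant without class and box output alongside, reaches an AP of 20.7, about 9 points lower than predicting them together.

**What is spatial coherence?**

In image segmentation, a pixel and the pixels in its neighborhood are likely to share the same class; this property is called spatial coherence.

From the paper: conv layers naturally produce spatially coherent masks, while fc layers do not (they are good at producing semantic vectors instead). YOLACT therefore generates the prototypes with conv layers and predicts the mask coefficients alongside cls and box in the fc-style detection head, which preserves spatial coherence.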

3.1. Prototype Generation

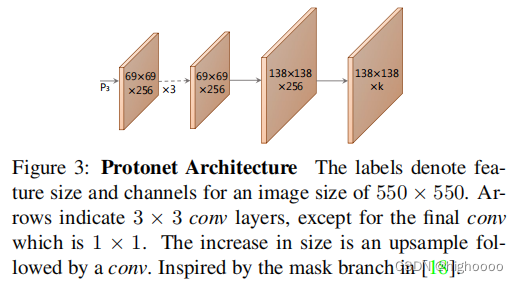

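Protonet: the number of kernels in the final 1x1 convolution sets the number of prototype masks.

Taking protonet from deeper backbone features produces more robust masks, and higher-resolution prototypes yield both higher-quality masks and better performance on smaller objects. We therefore use FPN [26], because its largest feature layer (P3 in our case; see Figure 2) is the deepest. We then upsample it to one fourth of the input image dimensions to improve performance on small objects.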
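A quick arithmetic check of where "one fourth of the input" lands for the default 550 × 550 input. This is a sketch under stated assumptions, not taken from these notes: a torchvision-style ResNet stem (7×7 stride-2 conv, then 3×3 stride-2 max-pool), a stride-2 3×3 conv at the start of the C3 stage, and a protonet that upsamples P3 by 2×. The helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a conv or pooling layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def prototype_resolution(image_size: int = 550, upsample: int = 2) -> dict:
    """Track the spatial size from the input to the prototypes (assumed layer layout)."""
    c1 = conv_out(image_size, kernel=7, stride=2, padding=3)  # assumed 7x7/2 stem conv
    c2 = conv_out(c1, kernel=3, stride=2, padding=1)          # assumed 3x3/2 max-pool, stride 4
    p3 = conv_out(c2, kernel=3, stride=2, padding=1)          # C3 and FPN P3, stride 8
    proto = p3 * upsample                                     # assumed 2x upsample in protonet
    return {"C1": c1, "C2": c2, "P3": p3, "prototypes": proto}

sizes = prototype_resolution()
print(sizes)                           # {'C1': 275, 'C2': 138, 'P3': 69, 'prototypes': 138}
print("quarter of input:", 550 / 4)    # quarter of input: 137.5
```

Under these assumptions the prototypes come out at 138 × 138, i.e. the 137.5 target rounded up by the padding in the strided layers.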

3.2. Mask Coefficients

For mask coefficient prediction, we simply add a third branch in parallel that predicts k mask coefficients, one corresponding to each prototype. Thus, instead of producing 4 + c coefficients per anchor, we produce 4 + c + k. Then for nonlinearity, we find it important to be able to subtract out prototypes from the final mask. Thus, we apply tanh to the k mask coefficients, which produces more stable outputs over no nonlinearity. The relevance of this design choice is apparent in Figure 2, as neither mask would be constructable without allowing for subtraction.

- The head outputs 4 + c + k values per anchor (box, class confidences, mask coefficients).

- tanh is applied to the k coefficients so that they can be negative, which lets prototypes be subtracted when forming the final mask.
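A toy illustration of why negative coefficients matter. The prototype values and raw head outputs below are made up, and the sigmoid-on-coefficients variant is a hypothetical non-negative alternative used only for contrast (the paper's comparison is against no nonlinearity). With tanh, the "left half" prototype can be subtracted from the "whole region" prototype to isolate the right half; with non-negative coefficients and non-negative prototypes, the left pixels always get at least the logit of the right pixels, so that mask cannot be formed.

```python
import math

def assemble(prototypes, coeffs):
    """Linearly combine prototypes (flat lists of pixel values), then apply a sigmoid."""
    n_pixels = len(prototypes[0])
    logits = [sum(c * p[i] for c, p in zip(coeffs, prototypes)) for i in range(n_pixels)]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

def binarize(mask, threshold=0.5):
    return [int(v > threshold) for v in mask]

# Toy 1-D "image" with 6 pixels; values are illustrative only.
proto_whole = [4, 4, 4, 4, 4, 4]   # fires on the whole region
proto_left = [8, 8, 8, 0, 0, 0]    # fires on the left half only
protos = [proto_whole, proto_left]

raw = [1.0, -1.0]                  # hypothetical raw head outputs for one anchor

# tanh: coefficients lie in (-1, 1), so proto_left can be subtracted.
coeff_tanh = [math.tanh(r) for r in raw]
binary_tanh = binarize(assemble(protos, coeff_tanh))

# Hypothetical non-negative alternative: sigmoid on the coefficients, no subtraction.
coeff_sigmoid = [1.0 / (1.0 + math.exp(-r)) for r in raw]
binary_sigmoid = binarize(assemble(protos, coeff_sigmoid))

print(binary_tanh)     # [0, 0, 0, 1, 1, 1]
print(binary_sigmoid)  # [1, 1, 1, 1, 1, 1]
```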

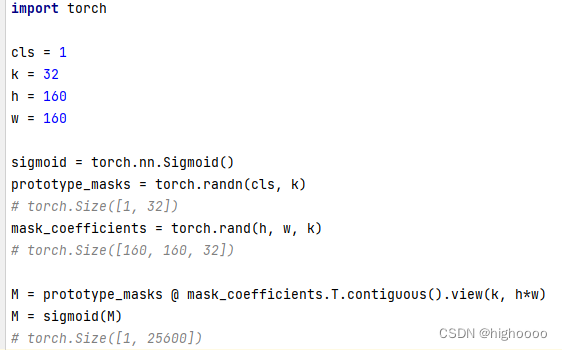

3.3. Mask Assembly

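M = σ(P Cᵀ): treat the h×w×k prototype tensor P as an (h·w)×k matrix, multiply by the transpose of the n×k coefficient matrix C (one row per detection kept after NMS), and reshape back to h×w×n, i.e. `sigmoid(P.view(h*w, k) @ C.T).view(h, w, n)`.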
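A minimal plain-Python sketch of the assembly step, assuming prototypes are stored flattened (row-major) as an (h·w)×k matrix P and coefficients as an n×k matrix C; the helper names are hypothetical. In a tensor library the whole step is a single matrix product followed by a sigmoid, which is what makes it cheap on a GPU.

```python
import math

def matmul(a, b):
    """Plain-Python product of an (m x t) matrix a and a (t x n) matrix b."""
    return [[sum(a[i][s] * b[s][j] for s in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def assemble_masks(P, C, h, w):
    """P: (h*w) x k prototypes, pixels flattened row-major; C: n x k coefficients.
    Returns n masks of shape h x w, computed as sigmoid(P @ C^T)."""
    logits = matmul(P, transpose(C))  # (h*w) x n: one column per instance
    masks = []
    for j in range(len(C)):
        flat = [1.0 / (1.0 + math.exp(-logits[p][j])) for p in range(h * w)]
        masks.append([flat[r * w:(r + 1) * w] for r in range(h)])
    return masks

# 2 x 2 image, k = 2: prototype 0 covers the top row, prototype 1 the bottom row.
P = [[1, 0], [1, 0],   # top-left, top-right
     [0, 1], [0, 1]]   # bottom-left, bottom-right
C = [[5, -5],          # instance 0: keep prototype 0, subtract prototype 1
     [-5, 5]]          # instance 1: the opposite
masks = assemble_masks(P, C, h=2, w=2)
binary = [[[int(v > 0.5) for v in row] for row in m] for m in masks]
print(binary)  # [[[1, 1], [0, 0]], [[0, 0], [1, 1]]]
```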

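Loss: L_mask = BCE(M, M_gt), the pixel-wise binary cross-entropy between the assembled masks M and the ground-truth masks M_gt.

Crop: at inference the final mask is cropped with the predicted bounding box; during training it is cropped with the ground-truth box, and L_mask is divided by the ground-truth box area to preserve small objects in the prototypes.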
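A minimal sketch of the training-time crop and the area-normalized mask loss for one instance. It assumes integer pixel boxes (x1, y1, x2, y2) with exclusive right/bottom edges; the function names are hypothetical. At inference the same `crop` would be applied with the predicted box instead of the ground-truth box.

```python
import math

def crop(mask, box):
    """Zero out pixels outside box = (x1, y1, x2, y2); integer pixel coords, x2/y2 exclusive."""
    x1, y1, x2, y2 = box
    return [[v if (x1 <= x < x2 and y1 <= y < y2) else 0.0 for x, v in enumerate(row)]
            for y, row in enumerate(mask)]

def bce(p, t, eps=1e-7):
    """Binary cross-entropy for one pixel, clamping p away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))

def mask_loss(pred, gt, gt_box):
    """Pixel-wise BCE of the GT-box-cropped prediction, divided by the GT box area."""
    x1, y1, x2, y2 = gt_box
    cropped = crop(pred, gt_box)
    total = sum(bce(p, t) for prow, trow in zip(cropped, gt) for p, t in zip(prow, trow))
    return total / ((x2 - x1) * (y2 - y1))

# 4 x 4 example: the GT instance fills the 2 x 2 box (1, 1, 3, 3).
gt_box = (1, 1, 3, 3)
gt = [[1.0 if 1 <= x < 3 and 1 <= y < 3 else 0.0 for x in range(4)] for y in range(4)]
pred = [[0.9] * 4 for _ in range(4)]  # assembled mask before cropping
print(round(mask_loss(pred, gt, gt_box), 4))  # 0.1054
```

Dividing by the box area turns the sum into a per-pixel average inside the box, so a small object contributes as much as a large one instead of being drowned out by pixel count.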

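3.4. Emergent Behavior (an unexpected bonus?)

Conclusions

- Each prototype can learn many features; prototypes may overlap in what they learn, but they are not copies of one another.

- The mask coefficients combine, weight, and allocate the features learned by the prototypes to produce the final mask.

- Changing k does not have a large effect on the model's performance.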

4. Backbone Detector

We use ResNet-101 with FPN as our default feature backbone and a base image size of 550 × 550.

5. Other Improvements

Fast NMS
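Fast NMS is only named here. A minimal single-class sketch of the idea as the YOLACT paper describes it: sort detections by score, build the upper-triangular IoU matrix, take each column's maximum, and drop every box whose largest IoU with any higher-scoring box exceeds the threshold. Unlike greedy NMS, an already-suppressed box can still suppress others, which is what lets the step run as matrix operations. The paper batches this over classes; this plain-Python version uses hypothetical function names and includes greedy NMS only for comparison.

```python
def iou(a, b):
    """IoU of two boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def fast_nms(boxes, scores, iou_threshold=0.5):
    """Single-class Fast NMS: returns kept indices in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    n = len(order)
    # Upper triangle only: entry (i, j), i < j, compares box j with higher-scoring box i.
    iou_mat = [[iou(boxes[order[i]], boxes[order[j]]) if i < j else 0.0 for j in range(n)]
               for i in range(n)]
    # Column-wise max: each box's largest overlap with any higher-scoring box.
    max_iou = [max(iou_mat[i][j] for i in range(n)) for j in range(n)]
    return [order[j] for j in range(n) if max_iou[j] <= iou_threshold]

def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Standard sequential NMS, for comparison."""
    keep = []
    for i in sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True):
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

# A overlaps B heavily, B overlaps C heavily, A and C overlap less.
boxes = [(0, 0, 10, 10), (2, 0, 12, 10), (4, 0, 14, 10)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # [0, 2]
print(fast_nms(boxes, scores))    # [0]: the removed box B still suppresses C
```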

Semantic Segmentation Loss

P (Appendix)

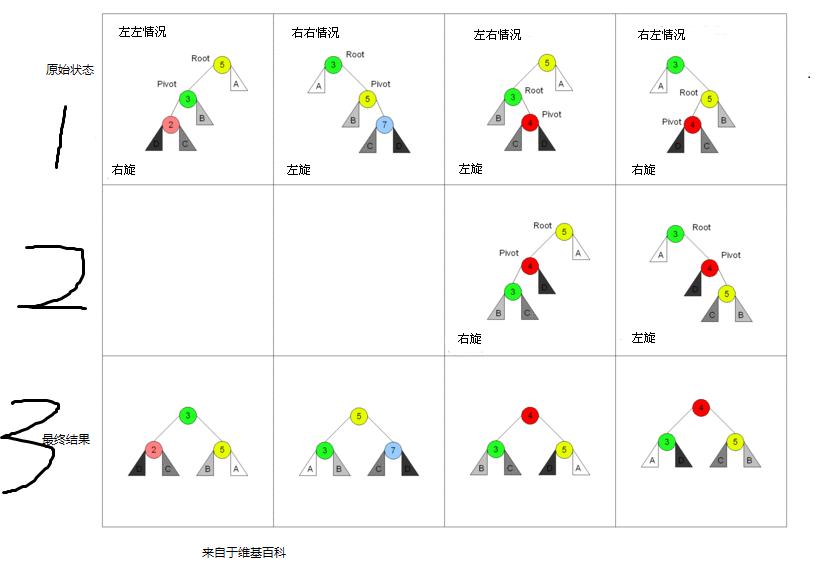

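P1 Why two-stage segmentation is slow

Problem:

A two-stage method must generate feature maps and region proposals before the fully connected layers and softmax, and those fully connected layers need a fixed input shape, so the method must rely on repooling, which is hard to accelerate.

Solution:

Repooling encodes feature maps of different shapes into a single fixed-shape output for the fully connected layers:

- RoIPooling (quantizes twice)

- RoIAlign (bilinear interpolation)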
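A simplified sketch of the core difference between the two, at a single sampling point: RoIPool snaps the projected coordinate to an integer cell, while RoIAlign keeps the float coordinate and interpolates bilinearly. The stride of 16 and the toy feature map are assumptions for illustration; the real ops also split the RoI into bins (RoIPool quantizes those bin edges too, then max-pools; RoIAlign averages several bilinear samples per bin).

```python
import math

def bilinear(feat, y, x):
    """RoIAlign-style sampling: bilinear interpolation of a 2-D map (list of rows) at float (y, x)."""
    y0, x0 = math.floor(y), math.floor(x)
    y1, x1 = min(y0 + 1, len(feat) - 1), min(x0 + 1, len(feat[0]) - 1)
    dy, dx = y - y0, x - x0
    top = feat[y0][x0] * (1 - dx) + feat[y0][x1] * dx
    bottom = feat[y1][x0] * (1 - dx) + feat[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def quantized(feat, y, x):
    """RoIPool-style lookup: round the float coordinate to an integer cell first."""
    return feat[round(y)][round(x)]

stride = 16                                                # assumed feature stride
feat = [[10 * r + c for c in range(4)] for r in range(4)]  # toy map: value = 10*row + col
y, x = 20.8 / stride, 43.2 / stride                        # RoI corner (image px) -> (1.3, 2.7)

# The toy map is linear, so its true value at (1.3, 2.7) is 10*1.3 + 2.7 = 15.7.
print(round(bilinear(feat, y, x), 6))  # 15.7
print(quantized(feat, y, x))           # 13
```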

P2 FCIS

One-stage methods that perform these steps in parallel like FCIS do exist, but they require significant amounts of post-processing after localization, and thus are still far from real-time.
