Contents

- Flink installation
- Job deployment
  - 1. Test code
  - 2. Packaging plugin
  - 3. Packaging
  - 4. Testing

Flink installation

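1. Download and extract

```shell
# Download in the foreground (no -b) so the archive is complete before tar runs
wget https://archive.apache.org/dist/flink/flink-1.13.6/flink-1.13.6-bin-scala_2.12.tgz
tar -zxf flink-1.13.6-bin-scala_2.12.tgz
mv flink-1.13.6 /opt/module/flink
```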

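2. Environment variables

Edit the profile script:

```shell
vim /etc/profile.d/my_env.sh
```

Add the following line. It lets Flink pick up the Hadoop/YARN jars:

```shell
export HADOOP_CLASSPATH=`hadoop classpath`
```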

3. Distribute the environment variables (using the custom script `~/bin/source.sh`)

```shell
source ~/bin/source.sh
```
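You can optionally confirm that `HADOOP_CLASSPATH` actually reaches JVM processes started from the new shell with a tiny Java check. This is a minimal sketch and not part of the original setup. The class name `HadoopClasspathCheck` is hypothetical. It only reads the variable and counts its entries; it does not verify that the listed Hadoop jars exist.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

public class HadoopClasspathCheck {

    // Splits a classpath string on the platform separator (':' on Linux),
    // trimming each entry and dropping empty ones
    static List<String> entries(String classpath) {
        List<String> result = new ArrayList<>();
        if (classpath == null) {
            return result;
        }
        for (String part : classpath.split(File.pathSeparator)) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                result.add(trimmed);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> parts = entries(System.getenv("HADOOP_CLASSPATH"));
        if (parts.isEmpty()) {
            System.out.println("HADOOP_CLASSPATH is not set for this process; source the profile (or open a new login shell) and try again.");
        } else {
            System.out.println("HADOOP_CLASSPATH has " + parts.size() + " entries, first: " + parts.get(0));
        }
    }
}
```

Run it with `javac HadoopClasspathCheck.java && java HadoopClasspathCheck` in a fresh login shell on each node that will submit Flink jobs.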

4. When submitting in Per-Job-Cluster mode, the following error is reported:

```text
Exception in thread "Thread-5" java.lang.IllegalStateException: Trying to access closed classloader.
Please check if you store classloaders directly or indirectly in static fields.
If the stacktrace suggests that the leak occurs in a third party library and cannot be fixed immediately,
you can disable this check with the configuration 'classloader.check-leaked-classloader'.
```

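To fix this, edit the configuration file:

```shell
vim /opt/module/flink/conf/flink-conf.yaml
```

Add the following line to the file. This resolves the error above:

```yaml
classloader.check-leaked-classloader: false
```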
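Flink 1.13 does not read `flink-conf.yaml` with a full YAML parser. Its `GlobalConfiguration` treats the file as flat `key: value` lines, with `#` starting a comment. The sketch below mimics that simplified parsing so you can confirm the new key is picked up. It is an illustration under that assumption, not Flink's own code, and `FlinkConfCheck` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class FlinkConfCheck {

    // Mimics the flat "key: value" format: '#' starts a comment,
    // ": " separates key and value, malformed or empty entries are skipped
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> conf = new LinkedHashMap<>();
        for (String line : lines) {
            String content = line.split("#", 2)[0].trim();
            if (content.isEmpty()) {
                continue;
            }
            String[] kv = content.split(": ", 2);
            if (kv.length < 2) {
                continue; // no ": " separator, so the line is ignored
            }
            String key = kv[0].trim();
            String value = kv[1].trim();
            if (!key.isEmpty() && !value.isEmpty()) {
                conf.put(key, value); // later entries override earlier ones
            }
        }
        return conf;
    }

    public static void main(String[] args) throws IOException {
        // Default path from this article; pass another path as the first argument if needed
        String path = args.length > 0 ? args[0] : "/opt/module/flink/conf/flink-conf.yaml";
        Map<String, String> conf = parse(Files.readAllLines(Paths.get(path), StandardCharsets.UTF_8));
        String value = conf.get("classloader.check-leaked-classloader");
        System.out.println("classloader.check-leaked-classloader = " + value);
        if (!"false".equals(value)) {
            System.out.println("The leak check is still enabled (its default is true).");
        }
    }
}
```

One mistake this catches is writing the key without a space after the colon (for example `classloader.check-leaked-classloader:false`). This style of parser skips such a line, so the check stays enabled.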

5. Download the flink-sql-connector-kafka and fastjson 1.2.83 jars into Flink's `lib` directory (the links can be found on Maven Central):

```shell
cd /opt/module/flink/lib
wget https://repo1.maven.org/maven2/org/apache/flink/flink-sql-connector-kafka_2.12/1.13.6/flink-sql-connector-kafka_2.12-1.13.6.jar
wget https://repo1.maven.org/maven2/com/alibaba/fastjson/1.2.83/fastjson-1.2.83.jar
```
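The download links follow the standard Maven repository layout:

- the groupId, with dots turned into slashes
- the artifactId
- the version
- `artifactId-version.jar`

The small sketch below builds such URLs and reproduces the two links above, which helps when switching to other connector versions. The class name `MavenCentralUrl` is hypothetical. It assumes a plain jar artifact with no classifier.

```java
public class MavenCentralUrl {

    // Base URL used by the wget commands in this article
    static final String REPO = "https://repo1.maven.org/maven2";

    // <repo>/<groupId with '.' -> '/'>/<artifactId>/<version>/<artifactId>-<version>.jar
    static String jarUrl(String groupId, String artifactId, String version) {
        return REPO + "/" + groupId.replace('.', '/') + "/" + artifactId + "/" + version
                + "/" + artifactId + "-" + version + ".jar";
    }

    public static void main(String[] args) {
        System.out.println(jarUrl("org.apache.flink", "flink-sql-connector-kafka_2.12", "1.13.6"));
        System.out.println(jarUrl("com.alibaba", "fastjson", "1.2.83"));
    }
}
```

Only the groupId has its dots replaced. Artifact IDs such as `flink-sql-connector-kafka_2.12` keep theirs.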

Job deployment

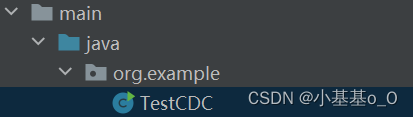

1. Test code

```java
package org.example;

import com.alibaba.fastjson.JSONObject;
import com.ververica.cdc.connectors.mysql.source.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer;

public class TestCDC {
    public static void main(String[] args) throws Exception {
        // TODO 1 Create the stream execution environment and set the parallelism
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment().setParallelism(1);

        // TODO 2 Create the Flink MySQL CDC source
        MySqlSource<String> mySqlSource = MySqlSource.<String>builder()
                .hostname("hadoop107")
                .port(3306)
                .username("root")
                .password("your_password") // placeholder: replace with the real MySQL password
                .databaseList("db1") // database(s) to capture
                .tableList("db1.t") // table(s) to capture; the database prefix cannot be omitted
                .deserializer(new JsonDebeziumDeserializationSchema()) // deserialize each SourceRecord into a JSON string
                .startupOptions(StartupOptions.initial()) // startup mode: take an initial snapshot of the captured tables, then keep reading the latest binlog
                .build();

        // TODO 3 Read the data and write it to Kafka
        env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "sourceName")
                // Parse the Debezium JSON and keep only its "source" object
                // (metadata such as database, table and binlog position; the row data itself is under "after")
                .map(JSONObject::parseObject).map(j -> j.getJSONObject("source").toString())
                .addSink(new FlinkKafkaProducer<>("hadoop105:9092", "topic01", new SimpleStringSchema()));

        // TODO 4 Execute the job
        env.execute();
    }
}
```

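2. Packaging plugin

Jars that already exist on the server do not need to be packaged. Mark them with `<scope>provided</scope>`.

flink-connector-mysql-cdc and flink-table-api-java-bridge are not on the server, so they must be packaged into the jar.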

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>1.13.6</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_2.12</artifactId>
        <version>1.13.6</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_2.12</artifactId>
        <version>1.13.6</version>
        <scope>provided</scope>
    </dependency>
    <!-- FlinkSQL -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-table-planner-blink_2.12</artifactId>
        <version>1.13.6</version>
        <scope>provided</scope>
    </dependency>
    <!-- FlinkCDC -->
    <dependency>
        <groupId>com.ververica</groupId>
        <artifactId>flink-connector-mysql-cdc</artifactId>
        <version>2.1.0</version>
    </dependency>
    <!-- flink-table dependency; fixes the following error:
         Caused by: java.lang.ClassNotFoundException:
         org.apache.flink.connector.base.source.reader.RecordEmitter -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-table-api-java-bridge_2.12</artifactId>
        <version>1.13.6</version>
    </dependency>
    <!-- JSON processing -->
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.83</version>
        <scope>provided</scope>
    </dependency>
    <!-- Flink_Kafka -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka_2.12</artifactId>
        <version>1.13.6</version>
        <scope>provided</scope>
    </dependency>
</dependencies>

<!-- Packaging plugin -->
<build>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>3.0.0</version>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```

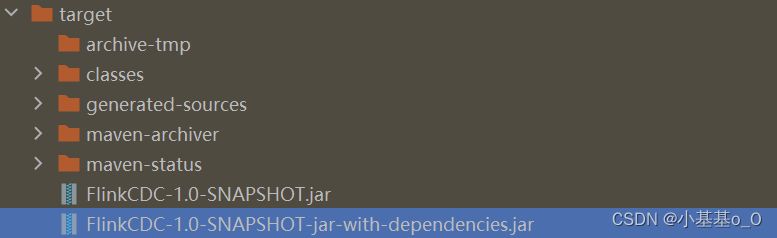

3. Packaging

Run the Maven `package` phase, then upload the resulting jar-with-dependencies jar to the server.

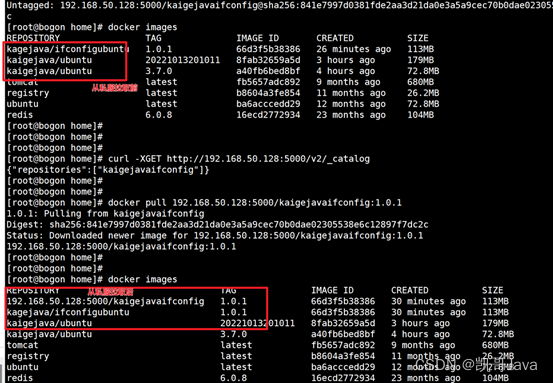
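Before submitting, it can be worth checking that the expected classes were actually bundled into the fat jar. This is especially useful for `ClassNotFoundException` issues like the `RecordEmitter` one mentioned in the pom comments. Below is a minimal sketch using only `java.util.jar.JarFile`. `JarEntryCheck` is a hypothetical name, and the default jar file name and class list are taken from this article as examples only.

```java
import java.io.IOException;
import java.util.jar.JarFile;

public class JarEntryCheck {

    // Converts a fully qualified class name to its path inside a jar, e.g. a.b.C -> a/b/C.class
    static String entryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // Returns true if the jar contains the .class file for the given class
    static boolean containsClass(String jarPath, String className) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getEntry(entryName(className)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        String jarPath = args.length > 0 ? args[0] : "FlinkCDC-1.0-SNAPSHOT-jar-with-dependencies.jar";
        String[] classes = {
            "org.example.TestCDC",
            "com.ververica.cdc.connectors.mysql.source.MySqlSource",
            "org.apache.flink.connector.base.source.reader.RecordEmitter"
        };
        for (String c : classes) {
            System.out.println((containsClass(jarPath, c) ? "found   " : "MISSING ") + c);
        }
    }
}
```

A `MISSING` line does not necessarily mean the job will fail. The class may instead be provided by a jar in `/opt/module/flink/lib`.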

4. Testing

Submit the job:

```shell
/opt/module/flink/bin/flink run \
-t yarn-per-job \
-nm a2 \
-ys 1 \
-yjm 1024 \
-ytm 1024 \
-c org.example.TestCDC \
FlinkCDC-1.0-SNAPSHOT-jar-with-dependencies.jar
```

Then consume the topic to check that records arrive:

```shell
kafka-console-consumer.sh --bootstrap-server hadoop105:9092 --topic topic01
```